Drone CI in AWS Cloud for DevExtreme

In this article, I’ll talk about how I set up continuous integration in Amazon AWS for the DevExtreme repository.

For several months now, we have been developing DevExtreme in an open repository on GitHub. From the very beginning, our continuous integration was built on Docker so that it would not depend on a particular CI platform (Travis, Shippable, or anything else). Still, when the repository went public, we didn't try to be original and simply used the well-known Travis CI to run the tests. Only a small part of our automated tests runs on GitHub (the first line of defense, so to speak), and Travis's support for the fork-and-pull-request workflow was sufficient.

Over time, colleagues began to complain about the pull request queue (but put up with it). The realization that it was time to do something came at the end of October, when Travis lost its connection to Docker Hub for two days just as we were preparing the beta release of DevExtreme 17.2.

Having received the go-ahead to experiment in the corporate AWS account, I decided to give the Drone project a second chance. Why a second one? Because we had already tried it while preparing to go public on GitHub. Back then our repository was private, Drone was even rawer than it is today, and we ran it on makeshift temporary infrastructure: old workstations left over after a hardware upgrade (our IT department promised to collect them, but was in no hurry).

As a result, we managed to build an elastic CI infrastructure on spot instances, available at the nice address https://devextreme-ci.devexpress.com/DevExpress/DevExtreme.

I have published the resulting work, and now I want to share the experience gained along the way.

A few words about Drone

Drone itself has been described well on Habr. It is a continuous integration system written in Go, with Docker underneath, Docker on top, and Docker driving Docker. In episode 570 of the Radio-T podcast, Umputun (a well-known Docker enthusiast) said of Drone: "Simple as a railroad. Its simplicity borders on the proverbial kind that is worse than theft."

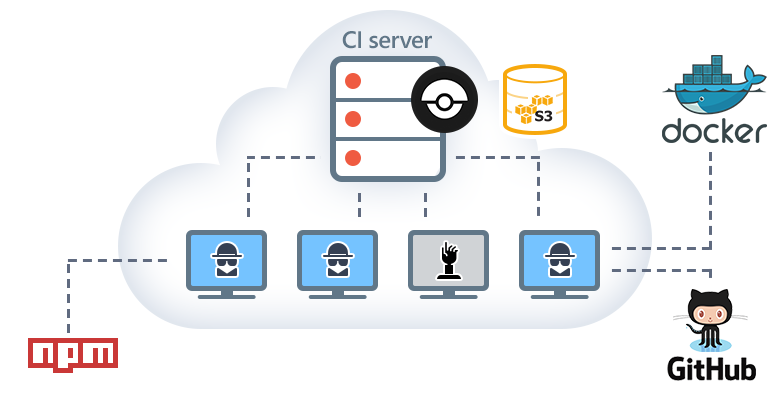

Drone's architecture is typical of a CI platform:

- There is a server. It serves the web interface, listens for webhooks, and manages the job queue.

- You can run any number of agents; they find the server on their own, and the builds actually run on them.

- Docker is everywhere. This has both pros and cons: for example, if you hit a pitfall at the junction of Drone and Docker, you are guaranteed a fun time.
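To make the pull model concrete, here is a toy, in-process Python model of it: the "server" only holds a job queue, and each "agent" keeps asking for the next job until none are left. This is an illustration under our own assumptions, not Drone's actual protocol (real agents talk to the server over the network); `run_toy_ci` and the agent names are hypothetical.

```python
import queue
import threading


def run_toy_ci(builds, n_agents):
    """Toy model: a server-side job queue and agents that pull work from it."""
    jobs = queue.Queue()  # the "server" only holds the queue
    for build in builds:
        jobs.put(build)

    results = []
    lock = threading.Lock()

    def agent(name):
        # Each agent pulls jobs until the queue is empty, then exits.
        while True:
            try:
                build = jobs.get_nowait()
            except queue.Empty:
                return
            with lock:
                results.append((name, build))  # "run" the build

    agents = [
        threading.Thread(target=agent, args=(f"agent-{i}",))
        for i in range(n_agents)
    ]
    for t in agents:
        t.start()
    for t in agents:
        t.join()
    return results
```

Because agents pull work, adding capacity just means starting more agents; the server does not need to know about them in advance.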

Inside AWS

It all started with a small t2.micro instance running the Drone server.

For the agents, I created an Auto Scaling group (ASG from here on). I wanted agents to run only when there is work for them, which is especially attractive now that EC2 has recently introduced per-second billing.
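A quick worked comparison shows why short-lived agents pay off. The sketch below assumes per-second billing with the 60-second minimum that applies to Linux instances, versus the older model that rounds every run up to whole hours; the hourly price is a hypothetical parameter, not a quoted EC2 rate.

```python
import math


def billed_cost(runtime_s, hourly_price, per_second=True):
    """Cost of one instance run, in the same currency as hourly_price.

    per_second=True models per-second billing with a 60-second minimum;
    per_second=False models billing rounded up to whole hours.
    """
    if per_second:
        billed_s = max(runtime_s, 60)
    else:
        billed_s = math.ceil(runtime_s / 3600) * 3600
    return hourly_price * billed_s / 3600
```

For example, a ten-minute build on an instance at a hypothetical $0.20/hour costs about $0.033 with per-second billing, versus a full $0.20 with hourly rounding.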

Scaler

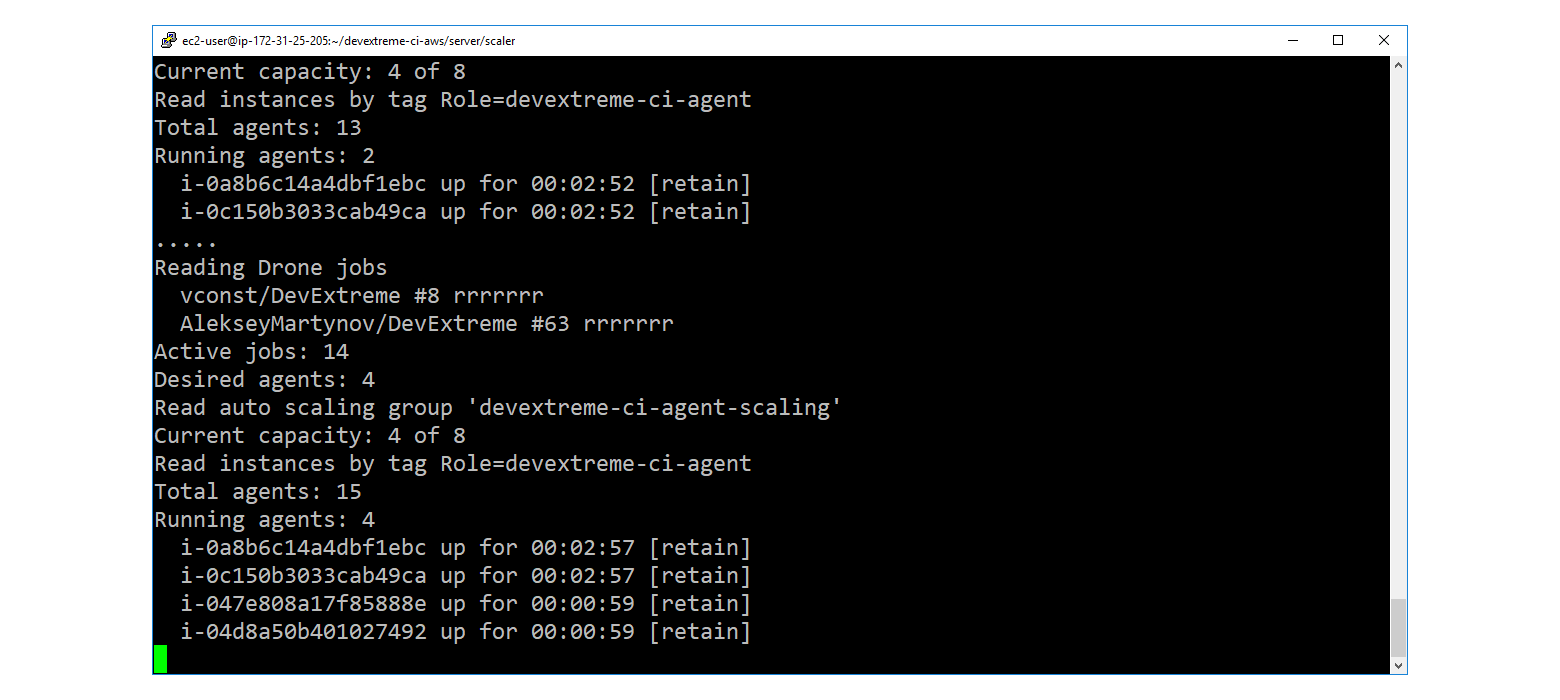

Things got interesting when the question of how to manage ASG capacity came up. Standard policies of the "add an instance when CPU load goes up" kind don't fit here. You need to track the Drone queue, add agents when the queue grows, and gently shut them down once it drains.

For this, I wrote a utility code-named scaler, on .NET Core (I can't resist C# when I get the chance).

Scaler implements the following simple cycle:

- Read the queue through the Drone REST API.

- If the number of running plus pending jobs exceeds the number of running agents multiplied by the "jobs per agent" density, increase the capacity through the AWS API (up to a set limit).

- If, on the contrary, there are more agents than needed, send a graceful shutdown request to the oldest ones.
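The decision step of that loop can be sketched in Python as follows. This is a hypothetical reconstruction from the description above, not the actual C# scaler: `plan_scaling`, `Agent`, `Plan`, and `max_agents` are our own names, "agent" here means one agent instance in the ASG, and reading the Drone queue and calling AWS are left out.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Agent:
    instance_id: str
    launch_time: float  # seconds since the epoch


@dataclass
class Plan:
    desired: int  # new ASG desired capacity
    retire: list = field(default_factory=list)  # instance IDs to stop gracefully


def plan_scaling(running, pending, agents, jobs_per_agent, max_agents):
    """One iteration of a scaler-style loop (hypothetical reconstruction)."""
    demand = running + pending
    needed = math.ceil(demand / jobs_per_agent)
    if demand > len(agents) * jobs_per_agent:
        # Queue is growing: raise capacity up to the limit, never lower it here.
        return Plan(desired=max(len(agents), min(needed, max_agents)))
    # Surplus agents: retire the oldest ones first.
    surplus = len(agents) - needed
    oldest_first = sorted(agents, key=lambda a: a.launch_time)
    retire = [a.instance_id for a in oldest_first[:surplus]]
    return Plan(desired=len(agents) - len(retire), retire=retire)
```

For example, with three agents, a density of 4, and 5 jobs in total, the plan keeps two agents and retires the oldest one.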

The main catch is that you can't simply reduce the capacity. If you do, agent instances may be terminated while they are still busy, which leads to unpleasant consequences, up to and including so-called zombie builds.

That is why the ASG launches instances with Protect From Scale In enabled, and capacity is never decreased explicitly. As soon as an agent becomes a candidate for removal, scaler detaches its instance from the group and sends it a sequence of commands via SSM. The SIGINT signal tells the agent that it should stop taking new jobs, wait for the current ones to finish, and then exit. In this way the agent steps aside, quietly finishes its work, and shuts down. The instance is detached with the decrement-desired-capacity flag set, so the ASG is immediately ready to add new agents if the queue starts to fill up again.
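A sketch of this scale-in step, assuming boto3-style clients for Auto Scaling and SSM are passed in (for example, `boto3.client("autoscaling")` and `boto3.client("ssm")`). `detach_instances` with `ShouldDecrementDesiredCapacity=True` and `send_command` with the `AWS-RunShellScript` document are real AWS APIs; the function name, the container name, and the shell commands are hypothetical, since the article links to its actual command sequence rather than quoting it.

```python
def retire_agent(autoscaling, ssm, group_name, instance_id, stop_commands):
    """Detach an agent instance from the ASG, then ask it to stop gracefully.

    `autoscaling` and `ssm` are boto3-style clients (or test doubles).
    Returns the SSM command ID.
    """
    # Detaching removes the instance from the group without terminating it;
    # decrementing desired capacity in the same call stops the group from
    # launching a replacement.
    autoscaling.detach_instances(
        InstanceIds=[instance_id],
        AutoScalingGroupName=group_name,
        ShouldDecrementDesiredCapacity=True,
    )
    # Run the shutdown commands on the instance through SSM Run Command.
    response = ssm.send_command(
        InstanceIds=[instance_id],
        DocumentName="AWS-RunShellScript",
        Parameters={"commands": stop_commands},
    )
    return response["Command"]["CommandId"]


# Hypothetical command sequence: SIGINT tells the agent container to stop
# taking jobs and exit after the current ones; then the host powers off.
STOP_COMMANDS = [
    "docker kill --signal=SIGINT drone-agent",
    "docker wait drone-agent",
    "shutdown -h now",
]
```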

Besides managing ASG capacity, scaler is loaded with extra checks and workarounds:

- Terminates agents that are in the Stopped state.

- Shuts down agents that have, for some reason, been alive suspiciously long.

- Shuts down agents whose reachability check has been failing for a long time.

- Watches for zombie builds on the Drone side and kills them (the most fun part!).

- Lets agents run for at least a few minutes, so that the corresponding Spot Requests have time to reach the "fulfilled" state. During the break-in period, it turned out that the ASG sometimes tries to cancel a Spot Request whose instance is already running but whose status has not yet been updated.
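These housekeeping checks could be sketched as a pure function that decides what to do with each agent instance. The thresholds and the `AgentState` fields are hypothetical (the article does not give its values), and zombie-build detection is left out because it depends on Drone internals. Stopped instances are terminated right away; every other rule first waits out a minimum age, which is how the sketch models the Spot Request grace period.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; the article does not state its actual values.
MIN_AGE_S = 5 * 60           # grace period so Spot Requests can reach "fulfilled"
MAX_AGE_S = 6 * 3600         # "suspiciously long" lifetime
MAX_UNREACHABLE_S = 10 * 60  # how long a failing reachability check is tolerated


@dataclass
class AgentState:
    instance_id: str
    state: str            # EC2 state name, e.g. "running" or "stopped"
    age_s: float          # seconds since launch
    unreachable_s: float  # seconds the reachability check has been failing (0 if passing)


def housekeeping_action(agent: AgentState) -> Optional[str]:
    """Return "terminate", "retire" (graceful shutdown) or None."""
    if agent.state == "stopped":
        return "terminate"
    if agent.age_s < MIN_AGE_S:
        return None  # too young to touch
    if agent.unreachable_s > MAX_UNREACHABLE_S:
        return "terminate"  # unreachable, so it cannot be asked to stop gracefully
    if agent.age_s > MAX_AGE_S:
        return "retire"
    return None
```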

Instances: On-Demand vs. Spot

At first, the agents ran on regular (on-demand) c4.xlarge instances, with up to 4 agents on each (one agent per core).

Once the system was more or less up and running, I experimented with a configuration that had the "Request Spot Instances" option checked. It turned out to work well: spot instances were always available, and I have never seen an instance reclaimed (with the maximum spot price set equal to the on-demand price).

We'll keep an eye on their behavior and may add automatic switching between a spot ASG and an on-demand ASG.

Cache

Continuous integration involves repeatedly downloading the same Docker images and npm/NuGet packages. To reduce external traffic and soften the impact of image and package registry outages, I implemented caching in S3.

Drone has a ready-made drone-s3-cache plugin, but I didn't use it because it lacked some of the settings I needed. To save and restore the node_modules and dotnet_packages directories, we use a simple drone-cache.sh script.
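drone-cache.sh itself isn't reproduced in the article, so the following is only a Python sketch of what such a script has to do: derive a per-fork cache key, pack a directory into a tar.gz archive on save, and unpack it on restore. The key layout, function names, and bucket URL form are assumptions; `upload` relies on the bucket accepting anonymous writes from inside the VPC, as described in this article.

```python
import os
import tarfile
import urllib.request

# Assumed virtual-hosted-style URL of the cache bucket.
BUCKET_URL = "https://devextreme-ci-cache.s3.amazonaws.com"


def cache_key(fork, directory):
    """Hypothetical key layout: one archive per fork and cached directory."""
    name = os.path.basename(os.path.normpath(directory))
    return f"{fork}/{name}.tar.gz"


def pack(directory, archive_path):
    """Save: archive the directory (e.g. node_modules) as tar.gz."""
    name = os.path.basename(os.path.normpath(directory))
    with tarfile.open(archive_path, "w:gz") as tar:
        tar.add(directory, arcname=name)


def unpack(archive_path, dest):
    """Restore: extract the archive into dest."""
    with tarfile.open(archive_path, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            tar.extractall(dest, filter="data")  # safer extraction where supported
        else:
            tar.extractall(dest)


def upload(archive_path, key):
    """Anonymous PUT; only works if the bucket policy allows it."""
    with open(archive_path, "rb") as f:
        body = f.read()
    req = urllib.request.Request(f"{BUCKET_URL}/{key}", data=body, method="PUT")
    with urllib.request.urlopen(req) as resp:
        return resp.status
```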

The S3 bucket "devextreme-ci-cache" is configured so that it can be written to anonymously from within the VPC. This is convenient because there is no need to deal with secret keys. However, with this configuration the archives are also publicly readable. For a package cache this is not a problem, but I didn't manage to write a policy that restricts reads to the VPC without taking access away from authenticated users. Similar situations are discussed on Stack Overflow here and here. If you have ideas or experience with this kind of setup, let me know in the comments!
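For reference, the write half of such a setup could look like the bucket policy below, generated here with Python. This is a sketch under assumptions, not the article's actual policy: the VPC ID is hypothetical, the agents are assumed to reach S3 through a VPC endpoint (the `aws:SourceVpc` condition key is only set on such requests), and the read restriction discussed above is deliberately not attempted.

```python
import json


def cache_bucket_policy(bucket, vpc_id):
    """Bucket policy allowing anonymous uploads only from the given VPC.

    aws:SourceVpc is only present on requests that reach S3 through a
    VPC endpoint, so the agents are assumed to use one.
    """
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AnonymousWriteFromVpc",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Condition": {"StringEquals": {"aws:SourceVpc": vpc_id}},
            }
        ],
    }
    return json.dumps(policy, indent=2)
```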

Cache archives are kept separately for each fork. Expired objects are deleted from S3 automatically by an Expiration lifecycle rule.
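An Expiration rule of this kind can be expressed as the `LifecycleConfiguration` structure accepted by boto3's `s3.put_bucket_lifecycle_configuration`. The sketch below builds it; the retention period is a hypothetical parameter, since the article does not state the value it uses.

```python
def expiration_lifecycle(days, prefix=""):
    """LifecycleConfiguration that deletes objects `days` after creation."""
    return {
        "Rules": [
            {
                "ID": f"expire-after-{days}-days",
                "Filter": {"Prefix": prefix},  # empty prefix: the whole bucket
                "Status": "Enabled",
                "Expiration": {"Days": days},
            }
        ]
    }
```

It would be applied with something like `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="devextreme-ci-cache", LifecycleConfiguration=expiration_lifecycle(14))`. If the cache script re-uploads an archive on every save, active forks keep refreshing their objects, and only archives of inactive forks age out.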

To cache Docker images, a registry runs next to the Drone server in pull-through cache mode with S3 blob storage (configuration). The registry is reachable only from within the agents' security group, and the agents themselves are configured to use it as a mirror. Inactive images and layers are automatically removed from the cache after 7 days.
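As an illustration, here is a Python sketch that builds the two configuration files involved: the registry's config.yml (JSON is valid YAML, so `json.dumps` output can be saved as is) and each agent's /etc/docker/daemon.json. The keys `storage.s3`, `proxy.remoteurl`, and `registry-mirrors` are standard options of the open-source registry and the Docker daemon; the bucket name, region, and mirror address are hypothetical, and the S3 driver is assumed to pick up credentials from the instance role because no keys are given.

```python
import json


def registry_config(bucket, region):
    """config.yml for a pull-through cache of Docker Hub backed by S3."""
    return {
        "version": "0.1",
        # No access keys: assumed to use the instance's IAM role.
        "storage": {"s3": {"region": region, "bucket": bucket}},
        "proxy": {"remoteurl": "https://registry-1.docker.io"},
        "http": {"addr": ":5000"},
    }


def daemon_config(mirror_url):
    """/etc/docker/daemon.json on each agent: pull Docker Hub images via the mirror."""
    return {"registry-mirrors": [mirror_url]}


if __name__ == "__main__":
    print(json.dumps(registry_config("example-registry-cache", "eu-west-1"), indent=2))
    print(json.dumps(daemon_config("http://10.0.0.10:5000"), indent=2))
```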

HTTPS

Once it was clear that the system was viable, I put the Drone server behind nginx. The website team kindly allocated us the subdomain "devextreme-ci.devexpress.com" and pointed it at the Drone server's Elastic IP. For this subdomain, I configured HTTPS via Let's Encrypt with automatic certificate renewal.

Interim results

Drone in the AWS cloud has been serving the DevExtreme repository on GitHub for almost four weeks now. The team is happy. Asked "So, how's Drone?", they mostly answer "It's fire! Fast, not like the Mustache" (note: the Mustache = Travis). Of course, unlike Travis CI, AWS resources are not free, and we have yet to find out how much our new CI costs, but [TODO: think of something to write here to justify this whole fun undertaking].

But seriously, work really has become more pleasant. When we are in a rush and pull requests pour in, we no longer sit around idly (believe me) waiting for the CI queue. At the same time, the cost of AWS resources depends directly on how heavily we use them. During idle periods (for example, the New Year holidays), only a single t2.micro instance is running, while the agents are shut down and the caches are cleaned up. In short, I'd like to believe that we are using cloud technologies to our advantage.

Have you tried Drone? What did you think of it? Have you deployed it or other platforms for continuous integration in the cloud? Tell us in the comments!


Source: https://habr.com/ru/post/342776/
