A chatbot that is “just like Siri, only cooler”, built on a naive Bayes classifier

Hello! It is no secret that interest in artificial intelligence has surged around the world lately. This trend has not passed me by either.


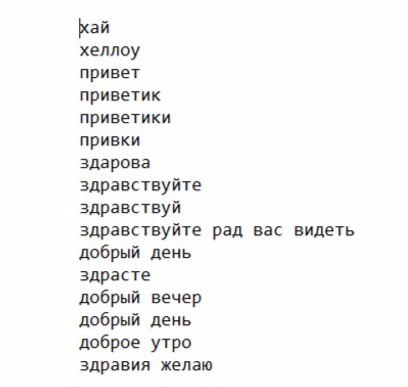

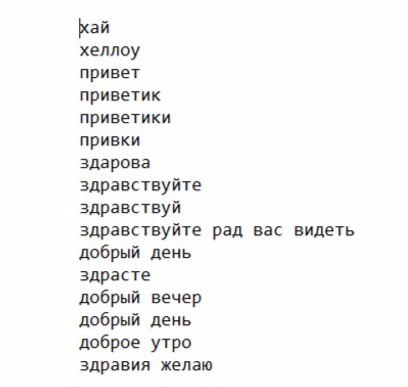


Prehistory

It all started when I watched what seemed, at first glance, a typical American comedy on a plane: “Why Him?” (2016). One of the main characters had a voice assistant in his house that immodestly described itself as "like Siri, only better." The bot in the movie could not only talk back cheekily to the guests, sometimes swearing, but also control the whole house and its grounds, from the central heating to the toilet flush. After watching the movie, I got the idea of building something similar and started writing code.

Figure 1 - A frame from the same film. Voice assistant on the ceiling.


Start of development

The first stage was easy: I hooked up the Google Speech API for speech recognition and synthesis. The text returned by the Speech API was run through hand-written regular expression patterns, and a match determined the intent of the person talking to the chatbot. Based on the intent found by the regexp, one phrase was picked at random from the corresponding list of answers. If the sentence did not match any pattern, the bot fell back to pre-prepared general phrases such as “I like to think that I am not just a computer”.
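The regexp stage described above can be sketched roughly as follows. This is a minimal illustration, not the author's code: the class name `RegexIntentBot`, the two intents, their patterns, and the reply lists are all assumptions made up for the example.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;
import java.util.regex.Pattern;

// Hypothetical regex-based intent matcher; intents, patterns and replies are illustrative only.
class RegexIntentBot {
    // Insertion-ordered so that patterns are tried in a predictable order.
    private static final Map<String, Pattern> PATTERNS = new LinkedHashMap<>();
    private static final Map<String, List<String>> REPLIES = Map.of(
            "hello", List.of("Hi!", "Hello there!"),
            "weather", List.of("Let me check the forecast.", "Looks fine outside."));
    // General phrases used when no pattern matches.
    private static final List<String> FALLBACK =
            List.of("I like to think that I am not just a computer.");

    static {
        PATTERNS.put("hello", Pattern.compile("\\b(hello|hi|hey)\\b", Pattern.CASE_INSENSITIVE));
        PATTERNS.put("weather", Pattern.compile("\\b(weather|forecast)\\b", Pattern.CASE_INSENSITIVE));
    }

    // Returns the first intent whose pattern occurs in the text, or null if none matches.
    static String detectIntent(String text) {
        for (Map.Entry<String, Pattern> entry : PATTERNS.entrySet()) {
            if (entry.getValue().matcher(text).find()) {
                return entry.getKey();
            }
        }
        return null;
    }

    // Picks a random reply for the detected intent, or a fallback phrase.
    static String reply(String text, Random random) {
        String intent = detectIntent(text);
        List<String> options = (intent == null) ? FALLBACK : REPLIES.get(intent);
        return options.get(random.nextInt(options.size()));
    }

    public static void main(String[] args) {
        Random random = new Random();
        System.out.println(reply("Hello, bot!", random));
        System.out.println(reply("What is the weather outside?", random));
        System.out.println(reply("Tell me a secret", random));
    }
}
```

Even in this toy version, every new intent needs its own hand-tuned pattern, which is the maintenance cost the article goes on to describe.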

Writing a set of regular expressions by hand for each intent is obviously laborious, so I went looking for alternatives and came across the so-called “naive Bayes classifier”. It is called naive because it assumes that the words in the analyzed text are independent of each other. Despite this assumption, the classifier shows good results, which are discussed below.

Writing the classifier

You cannot just shove a raw string into the classifier. The input string is first processed as follows:

Figure 2 - Input Text Processing Scheme

I will explain each stage in more detail. Tokenization is simple: it is just splitting the text into words. Next, the so-called stop words are removed from the resulting tokens (the array of words). The final stage, stemming, is the hardest: it derives the stem of a given word, and the stem is not always the same as the word's root. I used the Porter stemmer for Russian (link below).
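The three stages can be sketched as a small pipeline. This is only an illustration under stated assumptions: the class name `TextPreprocessor`, the tiny English stop-word list, and `TOY_STEMMER` are hypothetical. In particular, `TOY_STEMMER` is a crude suffix stripper standing in for a real Porter stemmer, which would be plugged in through the same `UnaryOperator<String>` parameter.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Set;
import java.util.function.UnaryOperator;

// Hypothetical preprocessing pipeline: tokenize -> drop stop words -> stem.
class TextPreprocessor {
    // Tiny illustrative stop-word list; a real one would be much longer.
    private static final Set<String> STOP_WORDS = Set.of("a", "the", "is", "to", "you", "are");

    // Toy stand-in for a real stemmer: strips a few common English suffixes.
    static final UnaryOperator<String> TOY_STEMMER = word -> {
        for (String suffix : List.of("ing", "ed", "s")) {
            if (word.length() > suffix.length() + 2 && word.endsWith(suffix)) {
                return word.substring(0, word.length() - suffix.length());
            }
        }
        return word;
    };

    static List<String> preprocess(String text, UnaryOperator<String> stemmer) {
        List<String> result = new ArrayList<>();
        // Tokenization: lower-case, then split on anything that is not a letter or digit.
        for (String token : text.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{Nd}]+")) {
            if (token.isEmpty() || STOP_WORDS.contains(token)) {
                continue; // skip empty fragments and stop words
            }
            result.add(stemmer.apply(token)); // stemming
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(preprocess("What is the weather outside?", TOY_STEMMER));
        // prints [what, weather, outside]
    }
}
```

Passing the stemmer in as a function keeps the pipeline independent of the language being processed, so the same skeleton would work with a Russian stemmer and stop-word list.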

Let's move on to the math. The formula it all starts with is:

$$P(c \mid d) = \frac{P(c)\,P(d \mid c)}{P(d)}$$

Here:

- $P(c \mid d)$ is the probability that intent $c$ applies to the given input string $d$, in other words, to the phrase the person said to us.

- $P(c)$ is the probability of the intent, defined as the ratio of the number of documents belonging to the intent to the total number of documents in the training set.

- $P(d)$ is the probability of the document. It is the same for every intent, so we drop it.

- $P(d \mid c)$ is the probability of the document given the intent. It is written as follows:

$$P(d \mid c) = \prod_{i=1}^{n} P(w_i \mid c)$$

where $w_i$ is the $i$-th token (word) in the document and $n$ is the number of tokens.

Written out in more detail:

$$P(w_i \mid c) = \frac{\operatorname{count}(w_i, c) + \alpha}{N_c + \alpha\,|V|}$$

where:

- $\operatorname{count}(w_i, c)$ is how many times the token was assigned to this intent

- $\alpha$ is the smoothing parameter, which prevents zero probabilities

- $N_c$ is the number of words related to the intent in the training data

- $|V|$ is the number of unique words in the training data
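As a rough sketch, the smoothed token probability and the resulting score for one intent could be computed like this. The class and method names are hypothetical, and summing logarithms instead of multiplying probabilities is a choice made here to avoid numeric underflow, not something the article states.

```java
// Hypothetical helper showing additive smoothing and a log-space intent score.
class SmoothedLikelihood {
    // (token count in intent + alpha) / (words in intent + alpha * vocabulary size)
    static double tokenLikelihood(int tokenCountInIntent, int wordsInIntent,
                                  int vocabularySize, double alpha) {
        return (tokenCountInIntent + alpha) / (wordsInIntent + alpha * vocabularySize);
    }

    // log(prior) + sum of log token likelihoods. The document probability is
    // omitted because it is the same for every intent.
    static double logScore(double prior, int[] tokenCountsInIntent, int wordsInIntent,
                           int vocabularySize, double alpha) {
        double score = Math.log(prior);
        for (int count : tokenCountsInIntent) {
            score += Math.log(tokenLikelihood(count, wordsInIntent, vocabularySize, alpha));
        }
        return score;
    }

    public static void main(String[] args) {
        // Made-up numbers: an intent with 10 training words, a vocabulary of 50 words,
        // and a two-token document where one token was seen once and one never.
        double alpha = 0.5;
        System.out.println(tokenLikelihood(1, 10, 50, alpha)); // token seen once
        System.out.println(tokenLikelihood(0, 10, 50, alpha)); // unseen token, still non-zero
        System.out.println(logScore(0.5, new int[] {1, 0}, 10, 50, alpha));
    }
}
```

Because the logarithm is monotonic, comparing log scores across intents gives the same winner as comparing the products themselves.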

For training, I created several text files with self-explanatory names: “hello”, “howareyou”, “whatareyoudoing”, “weather”, and so on. As an example, here are the contents of the hello file:

Figure 3 - Example of the contents of the text file “hello.txt”
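To give an idea of what training involves, here is a minimal sketch of collecting the counts that such a classifier needs. It is not the author's implementation: the class name `IntentTrainer` and its fields are hypothetical, phrases are passed in memory rather than read from files, and each phrase is assumed to count as one training document for its intent.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

// Hypothetical trainer that gathers the statistics used by a naive Bayes classifier.
class IntentTrainer {
    final Map<String, Integer> docCount = new HashMap<>();                // documents per intent
    final Map<String, Map<String, Integer>> tokenCount = new HashMap<>(); // token counts per intent
    final Map<String, Integer> wordsPerIntent = new HashMap<>();          // total words per intent
    final Set<String> vocabulary = new HashSet<>();                       // unique words overall
    int totalDocs = 0;

    // Registers one training phrase for the given intent.
    void addDocument(String intent, String phrase) {
        totalDocs++;
        docCount.merge(intent, 1, Integer::sum);
        Map<String, Integer> counts = tokenCount.computeIfAbsent(intent, k -> new HashMap<>());
        // Whitespace split only; real input would go through full preprocessing first.
        for (String token : phrase.toLowerCase(Locale.ROOT).split("\\s+")) {
            if (token.isEmpty()) {
                continue;
            }
            counts.merge(token, 1, Integer::sum);
            wordsPerIntent.merge(intent, 1, Integer::sum);
            vocabulary.add(token);
        }
    }

    // Prior of an intent: its share of all training documents.
    double prior(String intent) {
        return docCount.getOrDefault(intent, 0) / (double) totalDocs;
    }

    public static void main(String[] args) {
        IntentTrainer trainer = new IntentTrainer();
        // Made-up training phrases, for illustration only.
        trainer.addDocument("hello", "hello");
        trainer.addDocument("hello", "glad to see you");
        trainer.addDocument("weather", "what is the weather");
        System.out.println(trainer.prior("hello"));    // 2 of 3 documents
        System.out.println(trainer.vocabulary.size()); // prints 9
    }
}
```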

I will not describe the training process in detail, because all the Java code is available on GitHub. I will only give a diagram of how the classifier is used:

Figure 4 - Classifier operation scheme

Once the model is trained, we move on to classification. Since the training data defines several intents, we get several resulting probabilities, one per intent.

So which one do we choose? The maximum!
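Choosing the maximum can be sketched in a few lines. The class name `ArgMaxIntent` and the scores in `main` are made up for illustration; the scores could equally be probabilities or their logarithms, since both rank the intents the same way.

```java
import java.util.Map;

// Hypothetical helper that selects the intent with the highest score.
class ArgMaxIntent {
    // Returns the key with the largest value; throws if there are no intents.
    static String best(Map<String, Double> scores) {
        return scores.entrySet().stream()
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey)
                .orElseThrow(() -> new IllegalArgumentException("no intents to choose from"));
    }

    public static void main(String[] args) {
        // Illustrative log scores, not results from the article.
        Map<String, Double> logScores = Map.of("hello", -4.2, "weather", -9.7, "bye", -11.3);
        System.out.println(best(logScores)); // prints hello
    }
}
```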

And now the most interesting part, the classification results:

| No. | Input string | Predicted intent | Correct? |
| --- | --- | --- | --- |
| 1 | Hello how are you doing? | howareyou | Yes |
| 2 | Glad to greet you, friend | whatdoyoulike | No |
| 3 | How was yesterday | howareyou | Yes |
| 4 | What is the weather outside? | weather | Yes |
| 5 | What weather is promised for tomorrow? | whatdoyoulike | No |
| 6 | I'm sorry, I need to go out | whatdoyoulike | No |
| 7 | Have a good day | bye | Yes |
| 8 | Let's get acquainted? | name | Yes |
| 9 | Hello | hello | Yes |
| 10 | Glad to welcome you | hello | Yes |

The first results were a little disappointing, but I noticed suspicious patterns in them:

- Phrases 2 and 10 differ by one word but give different results.

- Every incorrectly identified intent was classified as whatdoyoulike.

This problem was solved by reducing the smoothing parameter α from 0.5 to 0.1, after which the following results were obtained:

| No. | Input string | Predicted intent | Correct? |
| --- | --- | --- | --- |
| 1 | Hello how are you doing? | howareyou | Yes |
| 2 | Glad to greet you, friend | hello | Yes |
| 3 | How was yesterday | howareyou | Yes |
| 4 | What is the weather outside? | weather | Yes |
| 5 | What weather is promised for tomorrow? | weather | Yes |
| 6 | I'm sorry, I need to go out | bye | Yes |
| 7 | Have a good day | bye | Yes |
| 8 | Let's get acquainted? | name | Yes |
| 9 | Hello | hello | Yes |
| 10 | Glad to welcome you | hello | Yes |
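One possible reading of why a smaller smoothing parameter helps, offered as an illustration rather than a verified diagnosis of these results: assuming additive smoothing in its usual form, $P(w \mid c) = (\operatorname{count}(w, c) + \alpha) / (N_c + \alpha\,|V|)$, compare a token seen once in an intent with a token never seen in that same intent. The denominators cancel, so the ratio depends only on $\alpha$:

$$\frac{P(w_{\text{seen once}} \mid c)}{P(w_{\text{unseen}} \mid c)} = \frac{1 + \alpha}{\alpha}, \qquad \alpha = 0.5 \Rightarrow 3, \qquad \alpha = 0.1 \Rightarrow 11$$

With the smaller value, a word that actually occurs in an intent's training data counts for noticeably more than an unseen word, so real matches have a stronger pull on the final score.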

I consider these results a success. Given my previous experience with regular expressions, I can say that the naive Bayes classifier is a much more convenient and general solution, especially when it comes to scaling up the training data.

The next step in this project will be to develop a module for recognizing named entities in text (Named Entity Recognition) and to improve the existing capabilities.

Thank you for your attention. To be continued!

Literature

Wikipedia

Stop words

Porter stemmer

Source: https://habr.com/ru/post/342728/
