One for all: how to break the vicious circle of difficulties in developing a boxed product

We develop software for video surveillance systems with video analytics functions, and it is a boxed product: the same product, in the same form, is delivered to many users at once, and all of them use it as is.

Does it matter whether we build a universal product for many users or develop it individually for a specific customer? When it comes to video analytics, it is of fundamental importance.

Let's figure it out

Take two companies, N and M. Company N develops a custom software product, and company M a boxed one. Company N builds its product to order, for use at one particular site, and makes everything work under the conditions for which the product is being developed. Company M, which makes a boxed product, must design it to achieve the target parameters (accuracy, for example) for a wide variety of users in a wide variety of conditions.

For boxed video analytics software, two things are true:

1. The conditions of use vary widely;

2. A new user cannot tune and re-tune the algorithm every time.

Accordingly, its development has to satisfy two requirements:

1. The algorithm must work automatically, that is, without a person who can "tweak" something and adjust it for a particular site.

2. Conditions can differ greatly, and under all of them the product must achieve its target values, for example for accuracy.
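Requirement 1 can be made concrete with a common pattern for removing a hand-tuned constant: derive the constant from the scene itself. The sketch below is an illustration and not the authors' algorithm. It estimates the noise in a frame difference with the median absolute deviation (MAD) and sets a motion threshold proportional to that estimate. It assumes 8-bit grayscale frames stored as lists of rows and roughly Gaussian sensor noise. The names `adaptive_threshold`, `motion_mask`, `k` and `floor` are hypothetical.

```python
import statistics

# For Gaussian noise, standard deviation ≈ 1.4826 * MAD.
MAD_TO_SIGMA = 1.4826


def frame_diffs(prev, curr):
    """Signed per-pixel differences between two equally sized frames."""
    return [c - p for row_p, row_c in zip(prev, curr) for p, c in zip(row_p, row_c)]


def adaptive_threshold(prev, curr, k=4.0, floor=1.0):
    """Motion threshold that scales with the noise estimated from the frames.

    The median is robust as long as real motion covers less than half
    of the pixels, so moving objects barely affect the noise estimate.
    """
    d = frame_diffs(prev, curr)
    med = statistics.median(d)
    mad = statistics.median(abs(x - med) for x in d)
    # `floor` keeps a noise-free scene from flagging every tiny change.
    return max(floor, k * MAD_TO_SIGMA * mad)


def motion_mask(prev, curr, k=4.0, floor=1.0):
    """Boolean mask of pixels whose change exceeds the adaptive threshold."""
    t = adaptive_threshold(prev, curr, k, floor)
    return [[abs(c - p) > t for p, c in zip(row_p, row_c)]
            for row_p, row_c in zip(prev, curr)]
```

The same code gives a higher threshold for a grainy night camera than for a clean daytime one, and nobody on site has to adjust it. Real detectors use far more elaborate background models; this shows only the principle.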

As for shooting conditions and video analytics, the range of possible parameters is very wide: sharpness, contrast, color saturation, optical noise level, the level, structure, and spatio-temporal distribution of noise motion, camera angle, color rendering parameters, background (scene) complexity, and so on.
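Some of these parameters can be measured directly from a frame, which turns each clip into a point in a space of conditions. The sketch below computes three common proxies: mean brightness, RMS contrast (the standard deviation of intensities), and sharpness as the variance of a 4-neighbour Laplacian. It assumes 8-bit grayscale frames stored as lists of rows. `describe_frame` and the choice of proxies are illustrative assumptions, not the measures the product actually uses.

```python
import statistics


def describe_frame(img):
    """Return simple condition descriptors for an 8-bit grayscale frame.

    Requires a frame of at least 3x3 pixels (the Laplacian needs an interior).
    """
    pixels = [v for row in img for v in row]
    h, w = len(img), len(img[0])
    # 4-neighbour Laplacian on interior pixels: large responses at fine detail.
    lap = [img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
           for y in range(1, h - 1) for x in range(1, w - 1)]
    return {
        "brightness": statistics.mean(pixels) / 255.0,  # 0 = black, 1 = white
        "contrast": statistics.pstdev(pixels) / 255.0,  # RMS contrast
        "sharpness": statistics.pvariance(lap),         # low for blurred frames
    }
```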

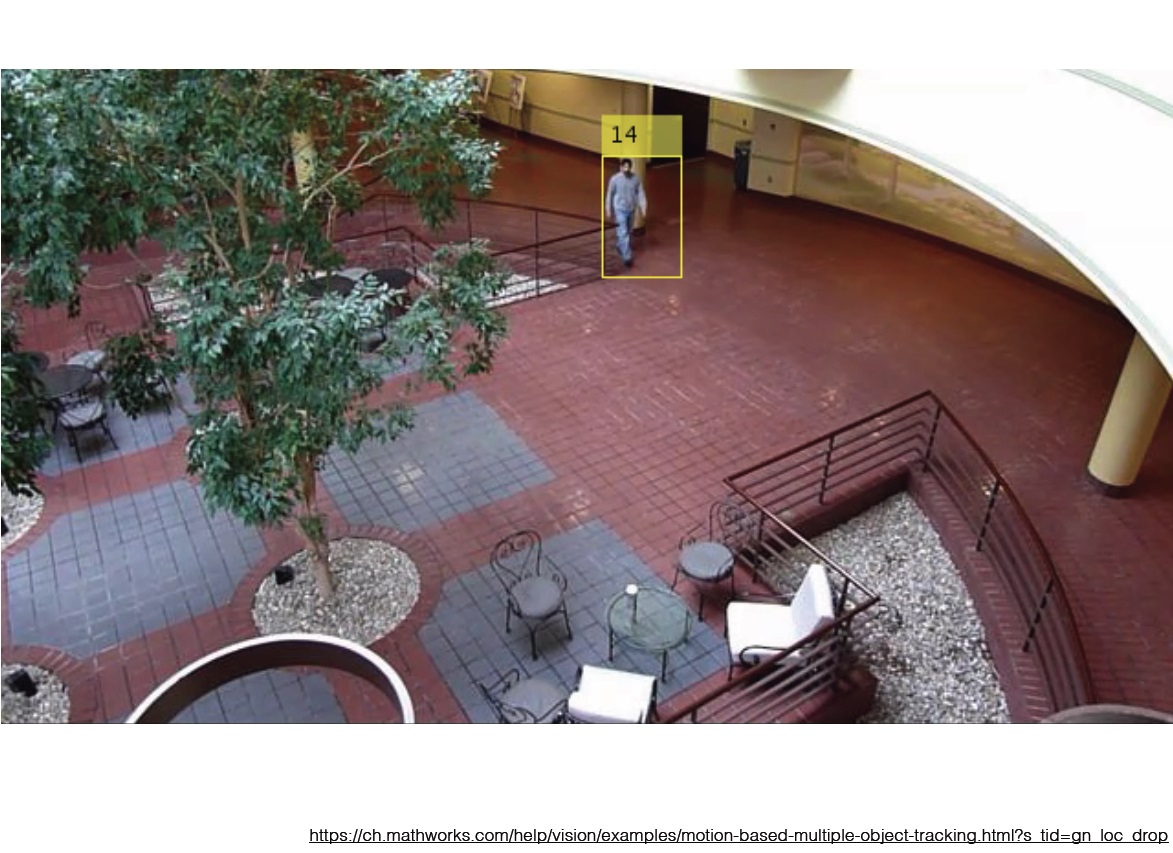

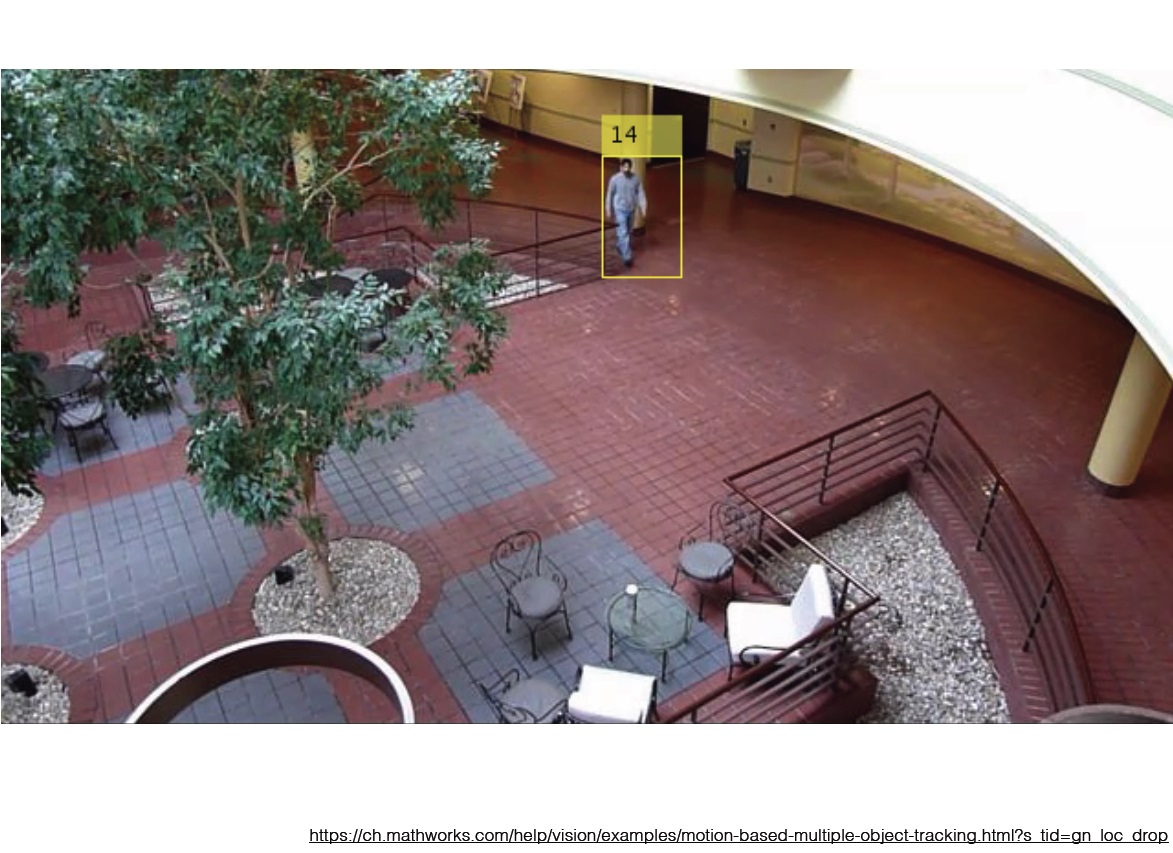

In a scientific paper you will see, for example, illustrations like these for moving-object detection:

Take a look! This is something sterile: here are two pictures, and here is the moving object. Of course, in such a situation we will detect everything perfectly.

But these are unreal, ideal conditions.

When the same scientific papers use more realistic illustrations, this is what they look like:

This is a frame from a camera, but the conditions are still very good.

And what is it like in reality?

But what do we actually face? Our algorithms have to work in conditions like these:

and like these

and even like these

This is the reality of video analytics in a boxed product: the conditions are completely different from site to site, and they may also change over time. Nevertheless, every algorithm must work well under all of them and reach its target accuracy.

Therefore, developing a boxed product calls for a special, non-trivial approach.

And that's not all

Our kind of development has one more very difficult problem.

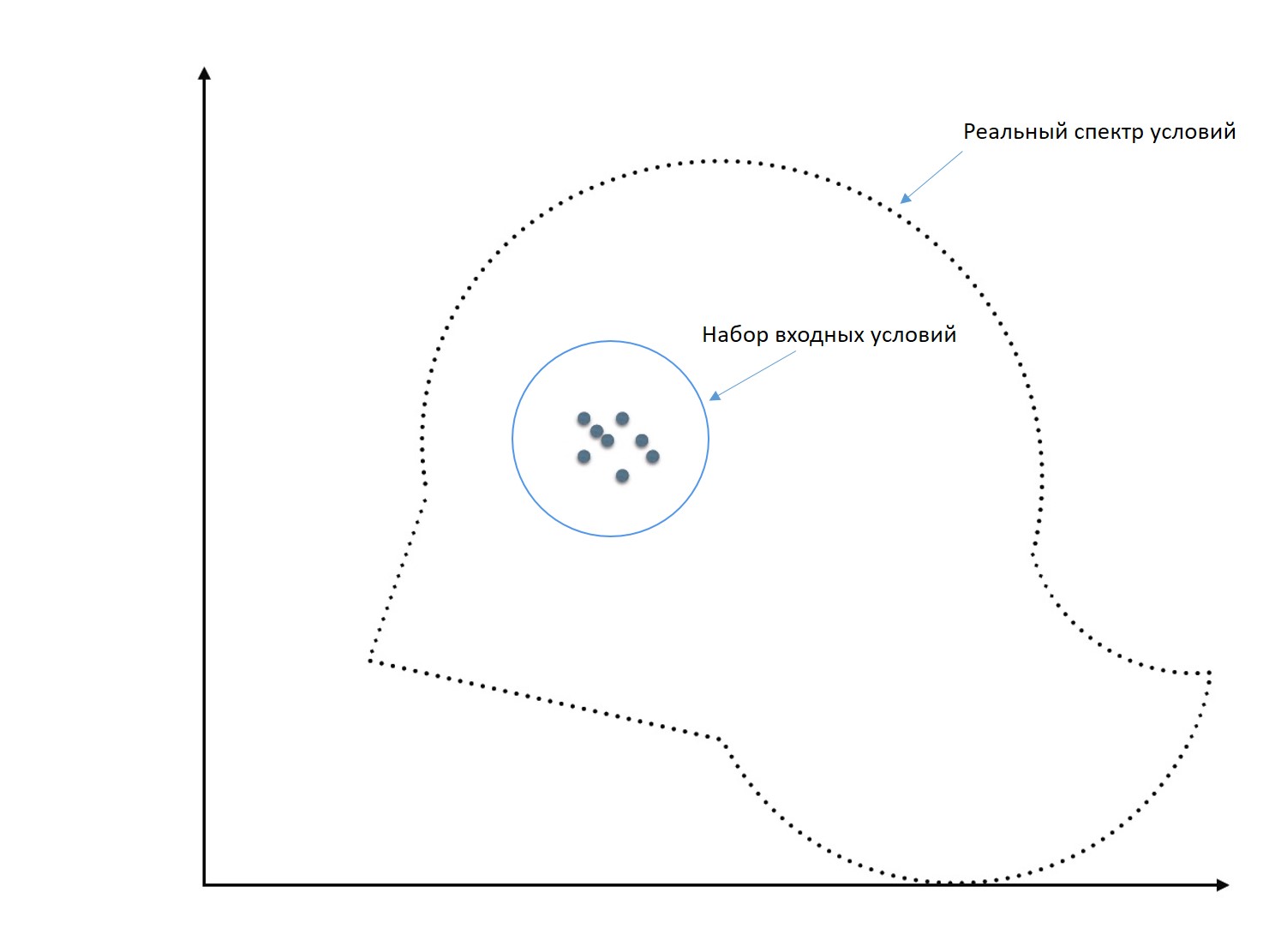

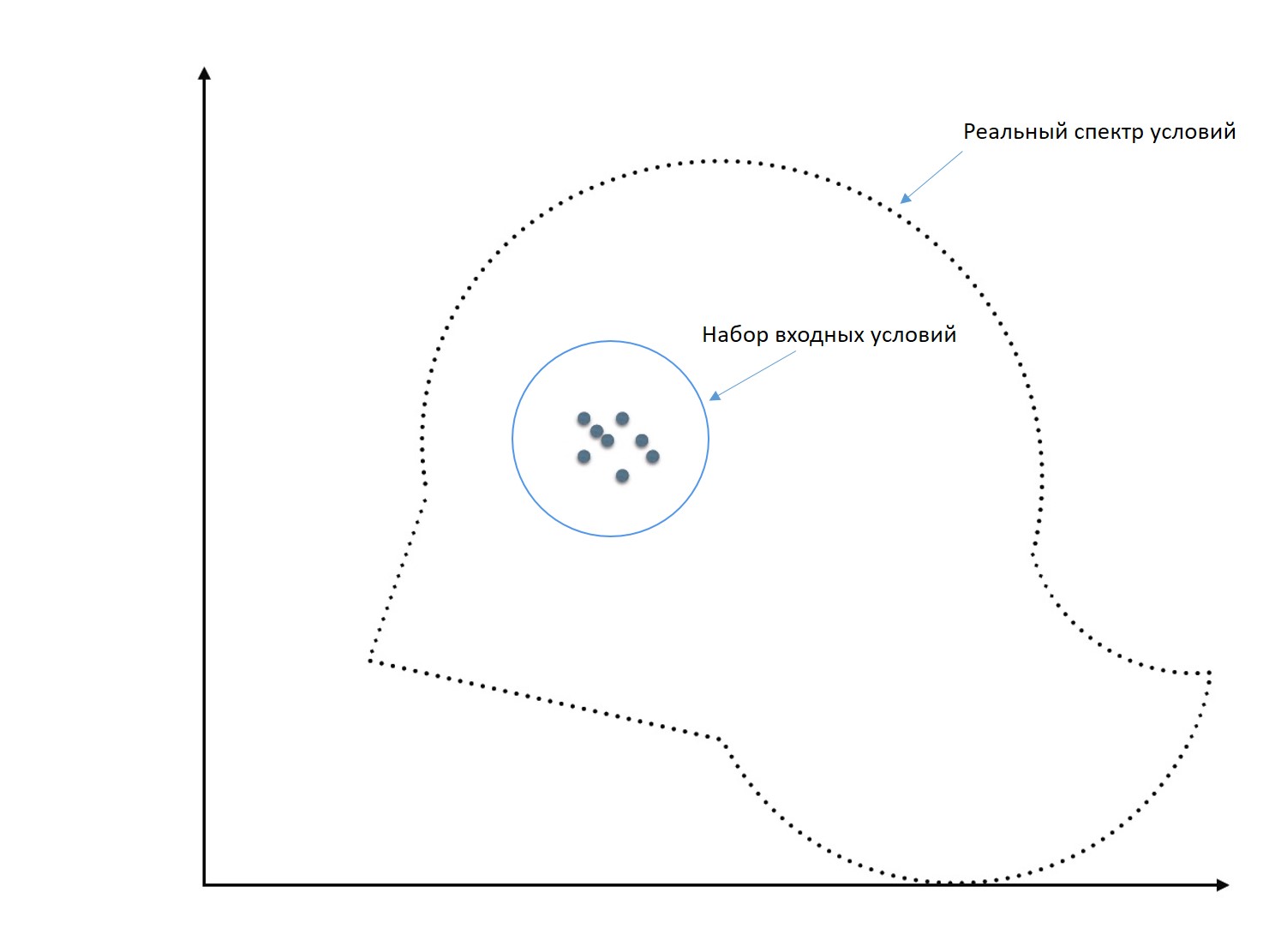

When an algorithm is developed, a sample of frames or videos is assembled for it: the clips on which its performance is tracked. Developers try to prepare very diverse videos, with very different shooting conditions and parameters. In practice, however, these videos end up in a fairly narrow region of input conditions.

When an algorithm that works fine on this sample is then applied under completely different conditions, it may work very poorly or not at all, because the whole development process was tuned to that narrow range of input conditions.
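One cheap safeguard follows from this. Record the range of conditions that the development sample actually spans, and flag any deployment scene that falls outside it. The sketch below uses a per-parameter min/max envelope over descriptor dictionaries (brightness, contrast and the like). The function names, the `margin` parameter and the example values are hypothetical.

```python
def envelope(samples):
    """Per-parameter (min, max) over the development sample's descriptors."""
    keys = samples[0].keys()
    return {k: (min(s[k] for s in samples), max(s[k] for s in samples)) for k in keys}


def out_of_envelope(point, env, margin=0.0):
    """Names of the parameters of `point` that fall outside the envelope.

    `margin` widens each range by a fraction of its width.
    """
    outside = []
    for k, (lo, hi) in env.items():
        pad = margin * (hi - lo)
        if point[k] < lo - pad or point[k] > hi + pad:
            outside.append(k)
    return outside
```

A box test like this is crude. A scene can lie inside every per-parameter range and still sit in an empty corner of the multidimensional space, which is the harder problem described next.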

And this is a major problem.

An even bigger problem is that this “condition space” is not actually two-dimensional, as in the graph above, but multidimensional, and every parameter can vary over a wide range. Since we are dealing with a boxed product, its algorithms must work correctly and accurately across that entire range.

So we end up with a huge multidimensional space of conditions and parameters, and our task when developing an algorithm is to distribute the sample points more or less evenly across this space without missing any region.
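One simple way to check how evenly a sample fills the space is to cut each normalized parameter into a few bins and count which grid cells contain at least one clip. The sketch below assumes that each clip is already described by a tuple of parameter values with known bounds. The grid approach and all names are illustrative.

```python
from itertools import product


def cell_of(point, bounds, bins):
    """Index of the grid cell that contains `point`."""
    idx = []
    for value, (lo, hi) in zip(point, bounds):
        t = (value - lo) / (hi - lo)                        # normalize to [0, 1]
        idx.append(min(bins - 1, max(0, int(t * bins))))    # clamp edge values
    return tuple(idx)


def coverage(points, bounds, bins):
    """Fraction of occupied cells and the list of empty ones."""
    occupied = {cell_of(p, bounds, bins) for p in points}
    empty = [c for c in product(range(bins), repeat=len(bounds)) if c not in occupied]
    return len(occupied) / bins ** len(bounds), empty
```

The grid also shows why the task is so hard. With only 5 bins per parameter and 8 parameters there are 5**8 = 390,625 cells, far more than the number of clips anyone is likely to collect. In practice, only the most important parameters or pairs of parameters can be checked this way.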

How do we do that?

There is no universal method. The only recommendation here is to go out and collect real videos from real cameras at real sites, and to try to make them cover the parameter space as fully as possible.
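Sometimes the pool of candidate recordings is larger than what can be annotated. In that case, greedy farthest-point selection is one way to favour coverage over volume. It repeatedly picks the clip whose conditions are farthest from everything chosen so far, so near-duplicates of the same scene are skipped. This is a standard technique swapped in for illustration, not a method the authors describe. It assumes each clip is represented by a tuple of normalized descriptors.

```python
import math


def farthest_point_selection(candidates, n):
    """Greedily choose up to n clip names that spread out in condition space.

    candidates: dict mapping clip name -> tuple of normalized descriptors.
    """
    names = list(candidates)
    if not names or n <= 0:
        return []
    first = names[0]
    selected = [first]
    # Distance from every candidate to its nearest already-selected clip.
    nearest = {k: math.dist(candidates[k], candidates[first]) for k in names}
    while len(selected) < min(n, len(names)):
        nxt = max((k for k in names if k not in selected), key=lambda k: nearest[k])
        selected.append(nxt)
        for k in names:
            nearest[k] = min(nearest[k], math.dist(candidates[k], candidates[nxt]))
    return selected
```

Starting from the first clip is an arbitrary choice. The selection also sees only the descriptors that were measured, so any blind spot in the descriptors remains a blind spot in the sample.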

Even then, the algorithm will inevitably end up fitted to the specific conditions of these sample clips, that is, overfitted to them.

The way to avoid such overfitting is to test and measure accuracy on a different set of videos: develop on some, test on others. This improves the odds: if the algorithm is tested on videos other than the ones it was developed on, it cannot have been fitted to them.
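One practical detail matters when splitting. Clips from the same camera or site share most of their conditions, so a random per-clip split quietly puts near-copies of the development data into the test set. The minimal sketch below assumes each clip is tagged with a hypothetical `site_id`. It assigns whole sites to one side using a stable hash, so the split stays the same between runs.

```python
import hashlib


def split_of(site_id, test_fraction=0.3):
    """Deterministically assign a whole site to 'dev' or 'test'."""
    digest = hashlib.sha256(site_id.encode("utf-8")).digest()
    # Keep 53 bits so the division is exact and the result stays in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return "test" if bucket < test_fraction else "dev"


def split_clips(clips, test_fraction=0.3):
    """clips: iterable of (clip_id, site_id). Returns (dev_ids, test_ids)."""
    dev, test = [], []
    for clip_id, site_id in clips:
        target = test if split_of(site_id, test_fraction) == "test" else dev
        target.append(clip_id)
    return dev, test
```

With only a handful of sites, the actual test share can differ noticeably from `test_fraction`. That is the price of keeping every site on one side of the split.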

But that's not all :) It often happens that you develop on one set, test on another, and find that the algorithm does not work, or does not work well enough. You start investigating, figure out why, change something, and get good results on the test videos. But now there is a new overfitting... to the test videos.

How do we break out of this endless cycle of overfitting?

The first way: keep testing on new videos.

It is very effective, but very time-consuming. Believe me, collecting development and test videos from real sites is very hard (not least because these are video security systems). And since an algorithm may be tested several times a day, you would need to stock up on an unrealistic amount of video.

This approach increases the chances of success and reduces the risk of overfitting, but it is very resource-intensive.
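The bookkeeping behind this first way can be made explicit. Keep a pool of collected clips and let every evaluation round consume only clips that no previous round has seen. The sketch below is a hypothetical helper, not part of any tool the authors describe. Its main point is that the pool runs dry, which is exactly the cost described above.

```python
import random


class FreshTestPool:
    """Hands out test clips so that no clip is ever used in two rounds."""

    def __init__(self, clip_ids, seed=0):
        self._unused = list(clip_ids)
        random.Random(seed).shuffle(self._unused)  # reproducible order
        self.used = []

    def remaining(self):
        return len(self._unused)

    def draw(self, n):
        """Return n never-before-used clips, or fail if too few are left."""
        if n > len(self._unused):
            raise RuntimeError(
                f"only {len(self._unused)} unseen clips left; collect more video")
        batch, self._unused = self._unused[:n], self._unused[n:]
        self.used.extend(batch)
        return batch
```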

The second way: make sure developers do not see why the algorithm fails during testing.

The developers write the algorithm, and an outside person tests it on new videos and simply tells them whether the target accuracy has been reached and whether something needs improving. The developers must not see these videos, so that they cannot fit the algorithm to them.

But then the developers have to refine the algorithm without understanding why something is not working...

Neither way is realistic in its pure form, so each time a compromise has to be found. Whoever manages the development must keep this balance properly: on the one hand, not making life so hard that every test run requires collecting a new set of test clips; on the other, minimizing the risk of overfitting. Or: not showing developers the videos used for testing, while still letting them know what goes wrong, on what, and why.
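Here is one possible compromise between the two ways, sketched under assumptions. An independent tester runs the evaluation and gives developers only aggregated results: overall pass/fail plus accuracy per condition tag. Per-clip results and clip identities are never shared. Small groups are withheld so that a single clip's outcome cannot be read off its group's score. The tags, the `min_group` threshold and the per-clip correct/incorrect outcome are simplifications assumed for illustration.

```python
from collections import defaultdict


def blind_report(results, target, min_group=5):
    """Summarize results without revealing which clips were used.

    results: list of (clip_id, condition_tag, correct) tuples.
    Returns overall pass/fail and per-tag accuracy; tags with fewer than
    `min_group` clips are reported as None.
    """
    by_tag = defaultdict(list)
    for _clip_id, tag, correct in results:
        by_tag[tag].append(correct)
    overall = sum(correct for _, _, correct in results) / len(results)
    per_condition = {
        tag: round(sum(oks) / len(oks), 3) if len(oks) >= min_group else None
        for tag, oks in by_tag.items()
    }
    return {"passed": overall >= target, "per_condition": per_condition}
```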

And this is the reality in which we develop.

But that's not all.

Development also involves the concept of degradation (regression): when a developer improves something in an algorithm, something else gets worse at the same time. This is a normal, real phenomenon. Therefore, every change to the algorithm must be tested for degradation of other parameters.

This takes an enormous amount of effort. You can do it manually and spend a great deal of time and effort, or you can automate the process. But automation brings back the overfitting problem: when you automate, you fit everything to a finite set of videos. “Automated” means that everything is annotated: this video is labeled with these conditions and parameters, that one with others.
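With an annotated test set, the degradation check can be automated per condition. Compute the accuracy for every condition tag, then compare a candidate build against the baseline. The minimal sketch below assumes that each test clip carries a set of tags (a clip can be both "night" and "rain") and a single correct/incorrect outcome. The names and the `tolerance` value are hypothetical.

```python
from collections import defaultdict


def accuracy_by_tag(results):
    """results: iterable of (tags, correct). Accuracy per condition tag."""
    hits, counts = defaultdict(int), defaultdict(int)
    for tags, correct in results:
        for tag in tags:
            counts[tag] += 1
            hits[tag] += int(correct)
    return {tag: hits[tag] / counts[tag] for tag in counts}


def find_regressions(baseline, candidate, tolerance=0.01):
    """Tags where the candidate is worse than the baseline by more than `tolerance`.

    A tag missing from the candidate run also counts as a regression.
    """
    regressions = {}
    for tag, base_acc in baseline.items():
        new_acc = candidate.get(tag)
        if new_acc is None or new_acc < base_acc - tolerance:
            regressions[tag] = (base_acc, new_acc)
    return regressions
```

An overall improvement can hide a drop under one condition, and comparing per tag catches exactly that. The check only ever sees the annotated set, however, so it inherits that set's blind spots.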

So again, a balance has to be found when testing for degradation...

Constrain the user instead of yourself?

All this looks like a vicious circle: either achieve high accuracy under any conditions but spend an unrealistic amount of time and effort on it, or give up the product's universality but develop it faster and more easily.

There seems to be one simple way out of this situation (looking ahead: it is not simple, and it is not a way out :)).

When the range of parameters is wide, you can state clearly to the user the conditions under which the algorithm is meant to work. Every video analytics program, module, or algorithm has recommended operating conditions under which it delivers the stated accuracy.
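Recommended operating conditions are easiest to enforce when they are written down as measurable limits and checked automatically at installation. The limits below are invented for illustration only and are not the product's real requirements. Something like background complexity has no row, because there is no agreed number to put in it.

```python
# Hypothetical limits for illustration only: (min, max), None = unbounded.
RECOMMENDED = {
    "contrast": (0.15, None),
    "noise_sigma": (None, 8.0),
    "target_height_px": (40, None),
}


def check_conditions(measured, spec=RECOMMENDED):
    """List human-readable violations of the recommended conditions."""
    violations = []
    for name, (lo, hi) in spec.items():
        if name not in measured:
            violations.append(f"{name}: not measured")
            continue
        value = measured[name]
        if lo is not None and value < lo:
            violations.append(f"{name}={value} below {lo}")
        if hi is not None and value > hi:
            violations.append(f"{name}={value} above {hi}")
    return violations
```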

However, with this approach you need to keep the following in mind:

1. You can tell users that they must ensure certain parameters, but in reality it is often simply impossible to meet all of them. And a boxed product has to work in real conditions...

2. The main snag, though, is that even when users are ready to meet all the recommended conditions, those conditions are often hard to formalize.

Contrast, for example, can be described (although the contrast of the whole image may have one value and the contrast in the specific area you are analyzing another). But how do you formalize, say, background complexity? How do you tell users which conditions they must meet for things that are so hard to quantify?
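Both points can be illustrated in a few lines. The sketch below computes RMS contrast for the whole frame and for a region of interest. It also computes a very crude stand-in for background complexity: the share of pixels with a strong local gradient. The region format, the threshold of 30 and the edge-density proxy are assumptions for illustration. Edge density is not a formal definition of background complexity, and that gap is exactly the difficulty described here.

```python
import statistics


def rms_contrast(img, roi=None):
    """RMS contrast in [0, 1] of an 8-bit grayscale frame or of a region.

    roi = (top, left, bottom, right) with exclusive bottom/right; None = whole frame.
    """
    top, left, bottom, right = roi if roi else (0, 0, len(img), len(img[0]))
    pixels = [img[y][x] for y in range(top, bottom) for x in range(left, right)]
    return statistics.pstdev(pixels) / 255.0


def edge_density(img, thresh=30):
    """Share of pixels whose forward-difference gradient exceeds `thresh`."""
    h, w = len(img), len(img[0])
    edges = 0
    for y in range(h - 1):
        for x in range(w - 1):
            grad = abs(img[y][x + 1] - img[y][x]) + abs(img[y + 1][x] - img[y][x])
            if grad > thresh:
                edges += 1
    return edges / ((h - 1) * (w - 1))
```

On a frame whose left half is black and whose right half is white, the whole image has high contrast, while an analysis zone in the left half has none. A single "contrast" figure in a requirements sheet can therefore be misleading.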

"The devil is not as black as he is painted..."

No matter how difficult and hopeless these situations may seem, this is the reality in which both we and other companies work and develop, and quite successfully. One has to understand and accept that developing a boxed video analytics product that must work in real conditions adds several orders of magnitude of complexity.

However these complex and contradictory problems end up being solved, development and testing must be done in close cooperation with the user and under the actual conditions in which they use the product.

Source: https://habr.com/ru/post/342592/
