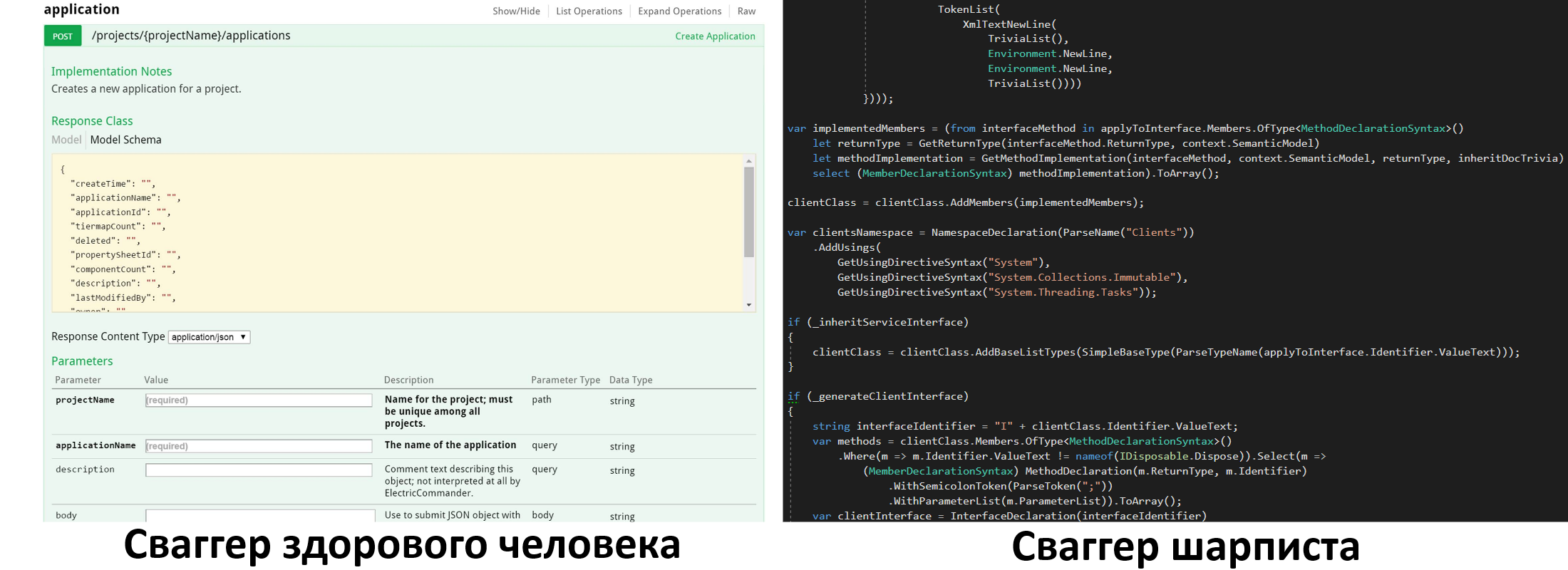

How to write your own Swagger and not regret it

Once, a colleague of mine picked up a backlog task: “we want to organize interaction with our internal REST API so that any change to the contract immediately causes a compilation error”. What could be easier? I thought. However, living with the resulting cactus cost us many hours of poring over documentation. We went from the usual over-engineering concepts (“add more interfaces, maximize indirection, and spice it all up with DI”) to moving to .NET Core, hand-generating intermediate language (IL), and learning the new C# compiler. Personally, I discovered plenty of interesting things, both in the runtime and in the structure of the compiler itself. Some Habr readers probably know all this already; for others it may be useful food for thought.

Act One: Copy-paste

Since this was a routine kind of task, and my colleague was not inclined to think long about the obvious, the result appeared rather quickly. The REST service was ours, built on WCF, so a shared assembly, MyProj.Abstractions, was introduced and the service interfaces were moved into it. In it we had to write classes that implemented the service interface, proxied requests to the service, and deserialized the results. The idea was simple: for each service we write a client that implements the same interface, so as soon as anyone changes a method in the service, we get a compilation error. We also assumed that whoever changes a function's arguments will make sure they are serialized correctly. It looked like this:

```csharp
public class FooClient : BaseClient<IFooService>
{
    private static readonly Uri _baseSubUri; // initializer elided in the original

    public FooClient() : base(BaseUri, _baseSubUri, LogManager.GetCurrentClassLogger()) { }

    // forgetting this attribute is what kept breaking things (see quiz #1 below)
    [MethodImpl(MethodImplOptions.NoInlining)]
    public Task<Foo> GetFoo(int a, DateTime b, double c)
    {
        return GetFoo<Foo>(
            // query string arguments
            new Dictionary<string, object>
            {
                { "a", a },
                { "b", b.ToString(SerializationConstant.DateTimeFormat) }
            },
            // request body arguments
            new Dictionary<string, object>
            {
                { "c", c.ToString(SerializationConstant.FloatFormat) }
            });
    }
}
```

Here BaseClient<TService> is a thin wrapper over HttpClient that determines which method we are trying to call (GetFoo in this case), computes its URL, sends the request, receives the response, deserializes the result (if necessary), and returns it.

So, to add a client you had to:

- Inherit from BaseClient<TService>
- Implement all the methods
- Fill in dictionaries for all the arguments everywhere, trying not to make a mistake

In principle it wasn't difficult, and it even worked. But after writing the 20th method for the 30th class, all of them exactly alike, and watching people constantly forget to add NoInlining, which made everything break (Little quiz #1: why do you think that is?), I asked myself: “is there a more humane way to do this?” However, the code had already been merged into master, and from above I was told “go ship features and don't waste time on nonsense”. Still, I didn't like the idea of spending 3 hours a day writing wrappers. Not to mention the pile of attributes, the fact that people periodically forgot to keep serialization in sync with their changes, and all the rest of that pain. So, having held out until the next weekend and set myself the goal of somehow improving the situation, I sketched an alternative solution over a couple of days.

Act Two: Reflection

The idea here was even simpler: what stops us from doing exactly the same thing, only generated dynamically instead of written by hand? Every method does the same job: take the input arguments, split them into two dictionaries (one for the queryString arguments, the rest for the request body), and call a standard HttpClient with these parameters. As a bonus, all the problems with SerializationConstant went away, because it was now used in only one place, this handler, so it only had to be done right once to always get the correct result. After a not very long session with the documentation and StackOverflow, the MVP was ready.
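To make the routing rule concrete, here is a minimal sketch of the same “generate the client instead of writing it” idea using System.Reflection.DispatchProxy (built into .NET Core; available as a NuGet package on older targets). This is a different technique from the TypeBuilder-based generation the article uses, shown only for illustration: an argument whose name appears in the URI template goes to the query string, everything else goes to the body. IGreetingService, OperationTemplateAttribute and RecordingProxy are hypothetical names, and the proxy records the split instead of sending an HTTP request.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical stand-in for WCF's UriTemplate on an operation contract.
[AttributeUsage(AttributeTargets.Method)]
public sealed class OperationTemplateAttribute : Attribute
{
    public OperationTemplateAttribute(string template) => Template = template;
    public string Template { get; }
}

// Hypothetical service contract.
public interface IGreetingService
{
    [OperationTemplate("hello/{name}")]
    string GetHello(string name, int times);
}

// DispatchProxy routes every interface call to Invoke; this sketch only records
// how the arguments would be split instead of performing an HTTP request.
public class RecordingProxy : DispatchProxy
{
    public Dictionary<string, object> LastQuery { get; private set; }
    public Dictionary<string, object> LastBody { get; private set; }

    protected override object Invoke(MethodInfo targetMethod, object[] args)
    {
        var template = targetMethod.GetCustomAttribute<OperationTemplateAttribute>()?.Template ?? string.Empty;
        var query = new Dictionary<string, object>();
        var body = new Dictionary<string, object>();
        var parameters = targetMethod.GetParameters();
        for (var i = 0; i < parameters.Length; i++)
        {
            // Named in the template -> query string, otherwise -> request body.
            var target = template.Contains("{" + parameters[i].Name + "}") ? query : body;
            target.Add(parameters[i].Name, args[i]);
        }
        LastQuery = query;
        LastBody = body;
        // A real client would serialize both dictionaries, call HttpClient and deserialize the response.
        return $"{targetMethod.Name}: {query.Count} uri arg(s), {body.Count} body arg(s)";
    }
}
```

With this in place, DispatchProxy.Create<IGreetingService, RecordingProxy>().GetHello("world", 3) puts name into the query dictionary and times into the body dictionary, without any hand-written client class.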

Now, to use the service, you simply:

- Create the interface:

```csharp
public interface ISampleClient : ISampleService, IDisposable { }
```

- Write a small wrapper (purely for convenience):

```csharp
public static ISampleClient New(Uri baseUri, TimeSpan? timeout = null)
{
    return BaseUriClient<ISampleClient>.New(baseUri, Constant.ServiceSampleUri, timeout);
}
```

- Use it:

```csharp
[Fact]
public async Task TestHelloAsync()
{
    var manager = new ServiceManager();
    manager.RunAll(BaseAddress);
    try
    {
        using (var client = SampleClient.New(BaseAddress))
        {
            var hello = await client.GetHello();
            Assert.Equal("Hello", hello); // xUnit expects (expected, actual)
        }
    }
    finally
    {
        manager.CloseAll(); // stop the services even if the assertion fails
    }
}
```

In this test, of course, a real WCF service is spun up and a real request is made, so strictly speaking it is not a unit test. But we all learn from our mistakes: nowadays I mock dependencies and do everything differently, but back then I didn't know how.

Everything is very simple and, of course, requires no special magic such as inheriting special classes or hanging attributes. Parameter and method names are picked up automatically. In short, beautiful. Moreover, step 2 can be skipped if you don't mind passing the constant string with the service name every time.

How does it work? Actually, with a fair amount of black magic. Here is the main piece responsible for generating the proxy methods:

```csharp
private static void ImplementMethod(TypeBuilder tb, MethodInfo interfaceMethod)
{
    var wcfOperationDescriptor = ReflectionHelper.GetUriTemplate(interfaceMethod);
    // parameters[0] stands for the instance; the rest mirror the interface method's arguments
    var parameters = GetLamdaParameters(interfaceMethod);

    var newDict = Expression.New(typeof(Dictionary<string, object>));
    var uriDict = Expression.Variable(newDict.Type);  // queryString arguments
    var bodyDict = Expression.Variable(newDict.Type); // request body arguments
    var wcfRequest = Expression.Variable(typeof(IWcfRequest));
    var dictionaryAdd = newDict.Type.GetMethod("Add");

    var body = new List<Expression>(parameters.Length)
    {
        // uriDict = new Dictionary<string, object>(); bodyDict = new Dictionary<string, object>();
        Expression.Assign(uriDict, newDict),
        Expression.Assign(bodyDict, newDict)
    };

    for (int i = 1; i < parameters.Length; i++)
    {
        // an argument mentioned in the UriTemplate goes to the query string, the rest to the body
        var dictToAdd = wcfOperationDescriptor.UriTemplate.Contains("{" + parameters[i].Name + "}")
            ? uriDict
            : bodyDict;
        // dictToAdd.Add("argName", (object)argValue);
        body.Add(Expression.Call(dictToAdd, dictionaryAdd,
            Expression.Constant(parameters[i].Name, typeof(string)),
            Expression.Convert(parameters[i], typeof(object))));
    }

    // the concrete class implementing IWcfRequest (see quiz #2 below)
    var wcfRequestType = ReflectionHelper.GetPropertyInterfaceImplementation<IWcfRequest>();
    var wcfProps = wcfRequestType.GetProperties();
    // new WcfRequest { Descriptor = ..., QueryStringParameters = uriDict, BodyPrameters = bodyDict }
    var memberInit = Expression.MemberInit(Expression.New(wcfRequestType),
        Expression.Bind(Array.Find(wcfProps, info => info.Name == "Descriptor"),
            GetCreateDesriptorExpression(wcfOperationDescriptor)),
        Expression.Bind(Array.Find(wcfProps, info => info.Name == "QueryStringParameters"),
            Expression.Convert(uriDict, typeof(IReadOnlyDictionary<string, object>))),
        Expression.Bind(Array.Find(wcfProps, info => info.Name == "BodyPrameters"),
            Expression.Convert(bodyDict, typeof(IReadOnlyDictionary<string, object>))));
    body.Add(Expression.Assign(wcfRequest, Expression.Convert(memberInit, wcfRequest.Type)));

    // pick the processor method to call (GetResult or Execute)
    var requestMethod = GetRequestMethod(interfaceMethod);
    // this.Processor.<requestMethod>(wcfRequest)
    body.Add(Expression.Call(Expression.Field(parameters[0], "Processor"), requestMethod, wcfRequest));

    var bodyExpression = Expression.Lambda(
        Expression.Block(new[] { uriDict, bodyDict, wcfRequest }, body.ToArray()),
        parameters);
    // compile the lambda straight into a method of the type being built
    // (the helper below already marks it public virtual)
    var implementation = bodyExpression.CompileToInstanceMethod(tb, interfaceMethod.Name);
    // map the generated method onto the interface method
    tb.DefineMethodOverride(implementation, interfaceMethod);
}
```

Note the line with ReflectionHelper.GetPropertyInterfaceImplementation<IWcfRequest>(). Why do you think it was needed (Little quiz #2)? Is it reflection for the sake of reflection, because it's more fun to write code that generates what you want than to simply write it?

The main point here is that we use Expression to build the method body, which puts every argument either into the body or into the queryString, and then, with the CompileToInstanceMethod extension, compile it not into a delegate but directly into a class method. This isn't very difficult, although it took several dozen iterations before the correct working version crystallized:

```csharp
internal static class XLambdaExpression
{
    public static MethodInfo CompileToInstanceMethod(this LambdaExpression expression, TypeBuilder tb, string methodName)
    {
        // the lambda's first parameter is the instance; the public method takes only the rest
        var paramTypes = expression.Parameters.Select(x => x.Type).ToArray();
        var proxyParamTypes = new Type[paramTypes.Length - 1];
        Array.Copy(paramTypes, 1, proxyParamTypes, 0, proxyParamTypes.Length);

        // public virtual instance method that will implement the interface method
        var proxy = tb.DefineMethod(methodName, MethodAttributes.Public | MethodAttributes.Virtual,
            expression.ReturnType, proxyParamTypes);
        // private static method that receives the compiled lambda body, with "this" passed explicitly
        var method = tb.DefineMethod($"<{proxy.Name}>__Implementation",
            MethodAttributes.Private | MethodAttributes.Static, proxy.ReturnType, paramTypes);
        expression.CompileToMethod(method);

        // the instance method just forwards this and all its arguments to the static one
        proxy.GetILGenerator().EmitCallWithParams(method, paramTypes.Length);
        return proxy;
    }
}
```

The saddest part is that this relatively readable version had to be abandoned after moving to .NET Core, because the CompileToMethod API was removed there. You can still compile an expression into an anonymous delegate, but not into a class method, and that is exactly what we needed. So in the Core version all of this is replaced with good old ILGenerator. My usual trick in such cases is to write the C# code, disassemble it with ildasm, see how it works, and figure out which places need adjusting to cover the general case. If you try to write IL from scratch, in 99% of cases you will get “Common Language Runtime detected an invalid program” :). The downside is that the final code is much harder to understand than the relatively readable expressions.
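To give a feel for what “good old ILGenerator” involves, here is a minimal sketch (not the author's code) that implements an interface method on a TypeBuilder with raw IL on .NET Core, doing by hand what CompileToMethod used to do. IGreeter, GreeterEmitter and GeneratedGreeter are made-up names for the example; the real generator emits the dictionary-filling logic shown above instead of a string concatenation.

```csharp
using System;
using System.Reflection;
using System.Reflection.Emit;

// Hypothetical interface to implement at runtime.
public interface IGreeter
{
    string Greet(string name);
}

public static class GreeterEmitter
{
    public static IGreeter Create()
    {
        // A run-only dynamic assembly: the generated type lives here, not in the calling assembly.
        var assembly = AssemblyBuilder.DefineDynamicAssembly(
            new AssemblyName("GreeterDynamic"), AssemblyBuilderAccess.Run);
        var module = assembly.DefineDynamicModule("GreeterDynamic");
        var tb = module.DefineType("GeneratedGreeter",
            TypeAttributes.Public | TypeAttributes.Class | TypeAttributes.Sealed);
        tb.AddInterfaceImplementation(typeof(IGreeter));

        var interfaceMethod = typeof(IGreeter).GetMethod(nameof(IGreeter.Greet));
        var method = tb.DefineMethod(interfaceMethod.Name,
            MethodAttributes.Public | MethodAttributes.Virtual | MethodAttributes.Final |
            MethodAttributes.HideBySig | MethodAttributes.NewSlot,
            typeof(string), new[] { typeof(string) });

        // Body: return string.Concat("Hello, ", name);
        var il = method.GetILGenerator();
        il.Emit(OpCodes.Ldstr, "Hello, ");
        il.Emit(OpCodes.Ldarg_1); // argument 0 is "this", argument 1 is name
        il.Emit(OpCodes.Call, typeof(string).GetMethod("Concat", new[] { typeof(string), typeof(string) }));
        il.Emit(OpCodes.Ret);

        // Bind the emitted method to the interface method, as ImplementMethod does.
        tb.DefineMethodOverride(method, interfaceMethod);

        // No constructor was defined, so TypeBuilder supplies a public parameterless one.
        var type = tb.CreateTypeInfo().AsType();
        return (IGreeter)Activator.CreateInstance(type);
    }
}
```

GreeterEmitter.Create().Greet("Habr") returns "Hello, Habr". Dropping the Ldarg_1 line, for instance, leaves the stack unbalanced, and the runtime rejects the method with an InvalidProgramException on the first call, which is exactly the “invalid program” experience mentioned above.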

The removal of this API from .NET Core is discussed here (we are interested in the first item on the list), although the request looks pretty dead. But things are not that bad, because an even better solution was found!

Act Three: Under the compiler's hood

After rewriting and debugging the whole thing for the hundredth time, I wondered: why can't all this be done at compile time? Yes, thanks to caching of the generated types, the overhead of using the clients is negligible. We only pay for the Activator.CreateInstance call, which is a trifle compared to a whole HTTP request, especially since the clients can be used as singletons: they hold no state other than the service URL. But we still have some serious limitations here:

- We can't look at the generated code and review it. In principle this isn't necessary, since the code is primitive, but until I had written the final working version, I had to guess a lot about why things didn't work as intended. In short: debugging dynamically generated assemblies is still “fun”.

- The client must always have exactly the same interface as the server. When is that inconvenient? For example, when the server has a synchronous API, but on the client it has to be asynchronous, because it's an HTTP request. So you either have to block the thread and wait for the response, or make all the server methods asynchronous, forcing the service to stick Task.FromResult everywhere, even where it has no use for it.

- It's always nice to get rid of reflection at runtime
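The second limitation is easy to see in a tiny example. Assuming a hypothetical ICalcService contract shared by the server and the generated client, keeping it asynchronous for the sake of HTTP forces the server to wrap a purely synchronous computation in Task.FromResult:

```csharp
using System.Threading.Tasks;

// Hypothetical contract shared by the server and the generated client.
// The client talks over HTTP, so the contract has to be asynchronous...
public interface ICalcService
{
    Task<int> Add(int a, int b);
}

// ...which forces the server, whose work is synchronous,
// to wrap every result in an already-completed Task.
public class CalcService : ICalcService
{
    public Task<int> Add(int a, int b) => Task.FromResult(a + b);
}
```

With compile-time generation, the client interface no longer has to be the server interface itself, so the server could keep a plain int Add(int a, int b).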

Right at that time I was hearing a lot of interesting things about Roslyn, the new modular compiler from Microsoft, which lets you dig deep into the compilation process. Initially I really hoped that, as in LLVM, you could simply write a middleware pass for the desired transformation. After reading the documentation, though, it seemed that Roslyn couldn't do full-fledged code generation out of the box. You get either rewriting that requires extra gestures from the user (this is how the project that rewrites LINQ queries into loops works, and for obvious reasons it isn't very convenient), or an analyzer in the style of “you forgot a comma here, let me insert it for you”. Then I came across an interesting feature request on this topic in the language's GitHub repository (here), but two problems quickly came to light: first, this feature is a long way from release, and second, I was told fairly quickly that in its current form it wouldn't help me. It wasn't all bad, though, because in the comments someone gave me a link to an interesting project that seemed to do exactly what I needed.

After tinkering for a few days and getting the hang of the base project, I realized it works! And it works exactly as it should. Just magic. Unlike writing your own compiler on top of the usual one, here we write an ordinary NuGet package that you simply plug into the solution, and it does its dirty work during the build; in our case, it generates the client code for the service. Full integration with Visual Studio, nothing extra to do: beautiful. True, syntax highlighting won't work right after the package is first installed, but after a rebuild and reopening the solution you get both highlighting and IntelliSense! Not everything works, though: for example, I couldn't figure out how to make Visual Studio display the extended documentation inherited from an interface via <inheritdoc />; for some reason it just won't do it. Oh well, the main thing is done: the classes are generated and they work, the generated output can always be inspected and corrected, and it installs in one click via NuGet. Everything we wanted.

For the user, it looks like this:

Just write the interface, add a couple of attributes, build, and you can use the generated class. No PostSharp needed! (Just kidding.)

So how does it all work?

Act Four: Finale

Initially I wasn't going to dig deep, since there was already a ready-made library that fully met my requirements; all that remained was to write the generator and make a package. However, reality turned out to be harsher. I kept catching errors, some caused by my incorrect use of the provided API, others by bugs or gaps in the library itself, and the inevitable reckoning caught up with me. I had to dig in and contribute fixes so that everything ended up working as in the picture above.

Virtually all the magic, in fact, lies in the new .NET Core toolchain:

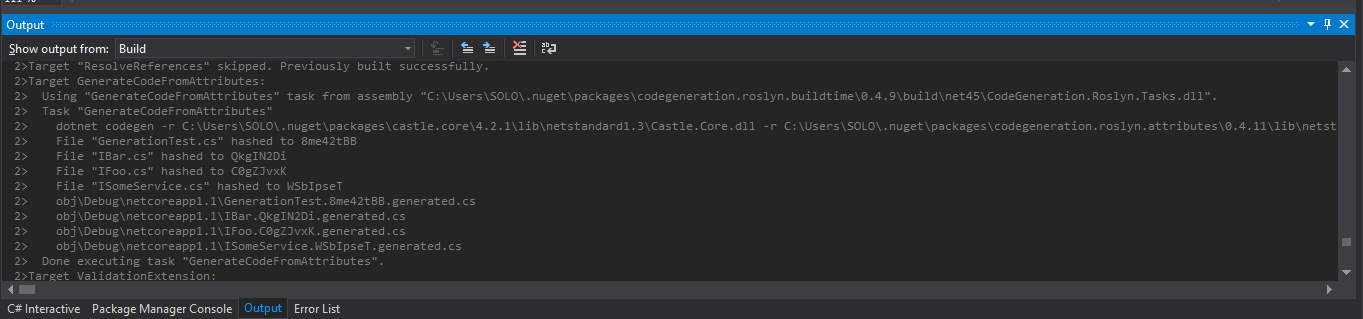

```xml
<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <PackageType>DotnetCliTool</PackageType>
    <OutputType>Exe</OutputType>
    <TargetFramework>netcoreapp1.0</TargetFramework>
    <AssemblyName>dotnet-codegen</AssemblyName>
  </PropertyGroup>
</Project>
```

In essence, this is a way to plug middleware into the project build: once the tool is referenced, the build knows what dotnet-codegen is and can call it. When building the project, you can see something like this:

What happens when you click Build (or even just save the file!):

- The GenerateCodeFromAttributes task from the CodeGeneration.Roslyn.Tasks assembly, which inherits Microsoft.Build.Utilities.ToolTask, launches all this machinery during the project build. We actually saw this task at work in the output window above.
- A text file, CodeGeneration.Roslyn.InputAssemblies.txt, is written with the full path to the assembly currently being built.
- CodeGeneration.Roslyn.Tool is called; it receives the list of files to analyze, the input assemblies, and so on, in short everything it needs to work.
- From there it's simple: we find all implementations of the ICodeGenerator interface in the project and call their only method, GenerateAsync, which generates the code for us.
- The compiler automatically picks up the newly generated files from the obj directory and adds them to the resulting assembly.
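The discover-and-invoke part of these steps can be modelled with a few dozen lines of plain reflection. The sketch below is a toy and not the CodeGeneration.Roslyn API: ISimpleCodeGenerator, GenerateClientAttribute, IPingService and the string output are hypothetical simplifications (the real generators work with Roslyn syntax nodes and run at build time), but the shape mirrors the description above: find the generators, find the annotated types, and ask each generator for code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;
using System.Threading.Tasks;

// Hypothetical, simplified stand-in for a code generator: returns C# source as a string.
public interface ISimpleCodeGenerator
{
    Task<string> GenerateAsync(Type annotatedType);
}

// Hypothetical marker attribute that requests a generated client.
[AttributeUsage(AttributeTargets.Interface)]
public sealed class GenerateClientAttribute : Attribute { }

[GenerateClient]
public interface IPingService { }

public sealed class ClientStubGenerator : ISimpleCodeGenerator
{
    public Task<string> GenerateAsync(Type annotatedType)
    {
        // IPingService -> PingServiceClient
        var name = annotatedType.Name.Substring(1) + "Client";
        return Task.FromResult($"public partial class {name} : {annotatedType.Name} {{ }}");
    }
}

public static class GenerationPipeline
{
    public static async Task<List<string>> RunAsync(Assembly assembly)
    {
        // 1. Discover every concrete generator in the assembly.
        var generators = assembly.GetTypes()
            .Where(t => typeof(ISimpleCodeGenerator).IsAssignableFrom(t) && !t.IsInterface && !t.IsAbstract)
            .Select(t => (ISimpleCodeGenerator)Activator.CreateInstance(t))
            .ToList();

        // 2. Discover every type that asks for generation.
        var targets = assembly.GetTypes()
            .Where(t => t.GetCustomAttribute<GenerateClientAttribute>() != null);

        // 3. Let each generator produce code for each target.
        var output = new List<string>();
        foreach (var target in targets)
            foreach (var generator in generators)
                output.Add(await generator.GenerateAsync(target));
        return output;
    }
}
```

In the real pipeline the produced sources are written to the obj directory and compiled into the same assembly; here they are simply returned as strings.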

As a result, the current version of this library lets you put an attribute on a class and write literally 100 lines of generator code that, based on that attribute, generate everything you need. There is one restriction: you can't generate classes into another assembly, so the generated classes are always added to the assembly being compiled, but in principle you can live with that.

Bonus act: Debriefing

When I wrote this library, I expected it to be useful to someone, but then became somewhat disappointed, because Swagger does the same job, only cross-platform and with a user-friendly interface. Still, in my case you can simply change a type, save the file, and immediately get a compilation error, which is what it was all started for:

And last but not least, I had a lot of fun implementing the whole thing and, it seems to me, got to know the language and the compiler better. So I decided to write an article: maybe the world doesn't need yet another Swagger, but if you need code generation, you despise T4 or it doesn't suit you, and reflection is not an option, then this is a great tool. It just does its job, integrates nicely into the existing pipeline, and ships as a plain NuGet package. Visual Studio highlighting included! (But only after the first generation and reopening the solution.)

I'll say right away that I haven't tried this with non-Core projects, i.e. with the full .NET Framework, so there may be some difficulties there. But given that this package's targets include portable-net45+win8+wpa81, portable-net4+win8+wpa81 and even net20, there shouldn't be any serious problems. And if you don't like something, be it unnecessary dependencies or plain NIH, you can always write your own, more kosher implementation, since there is very little code. Another caveat is debugging: I never figured out how to debug all this, so the code was written blindly. The author of the original CodeGeneration.Roslyn library clearly knows how (just look at the structure of the project), but I ended up doing without it.

And now I can say with a clear conscience: I absolutely don't regret writing yet another Swagger.

References:

- The base class of the very first version, with hand-written client classes

- My first, reflection-based version of the generation. Unfortunately, I deleted the original non-Core version, although the first version of the repository gives an idea of what it looked like.

- The current version of code generation with Roslyn. A very compact project that shows the power of both the approach in general and this particular library.

- The base code generation project that everything relies on

All my projects are MIT-licensed: fork them, study them, break them, whatever you want, I have no objections :)

From the start, all this was planned as a fully working project that grew out of real requirements, so it can be used in production, at least after some minor polishing.

And, of course, the answers to the quiz questions:

- MethodImplOptions.NoInlining is needed to determine the name of the method being called. Since most of the methods are very simple, many literally one-liners, the JIT compiler likes to inline them. The JIT typically inlines methods whose IL body is smaller than 32 bytes (there are many more conditions, but we won't dwell on them; here they were all met). So you could observe a funny bug: methods with many arguments were called successfully, while methods with few arguments threw an error at runtime, because the search reached the very top of the call stack without finding the right method:

```csharp
MethodBase method = null;
for (var i = 0; i < MAX_STACKFRAME_NESTING; i++)
{
    var tempMethod = new StackFrame(i).GetMethod();
    if (tempMethod == null)
        break; // walked past the top of the call stack
    // the first frame declared by a TService implementation is the client method being called
    if (typeof(TService).IsAssignableFrom(tempMethod.DeclaringType))
    {
        method = tempMethod;
        break;
    }
}
```

- The point is that when I wrote the reflection-based version, I didn't really consider that we add classes not to the current RemoteClient.Core assembly, but to a dynamically created one. And this is very important. After testing all the functionality and becoming confident that everything worked, I noticed that my WcfRequest class was public. “Disorder,” I thought, “the implementation should be hidden, and only the interface should be visible.” So I made it internal. And everything broke. It's easy enough to see why: we generate A.DynamicallyGenerated.dll, which tries to instantiate an internal class from the parent assembly A.dll and naturally fails with an access error. Not to mention that we end up with an unpleasant cyclic dependency between the assemblies.
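The stack-walking trick from the first answer can be reproduced in isolation. This is a reconstruction under assumptions, not the article's BaseClient: StackWalkingClient, FindServiceMethodName and MaxStackFrameNesting are hypothetical names. Besides NoInlining, it stops when StackFrame.GetMethod() returns null past the top of the stack, and it keeps the base-class call out of tail position, since an opportunistic tail call by the JIT can remove the caller's frame just as inlining does.

```csharp
using System;
using System.Diagnostics;
using System.Runtime.CompilerServices;

// Hypothetical service contract.
public interface IFooService
{
    string GetFoo(int a);
}

public abstract class StackWalkingClient<TService>
{
    private const int MaxStackFrameNesting = 16; // hypothetical search depth

    [MethodImpl(MethodImplOptions.NoInlining)]
    protected string FindServiceMethodName()
    {
        for (var i = 0; i < MaxStackFrameNesting; i++)
        {
            var method = new StackFrame(i).GetMethod();
            if (method == null)
                break; // walked past the top of the stack
            // The first frame whose declaring type implements TService is the client method.
            if (method.DeclaringType != null && typeof(TService).IsAssignableFrom(method.DeclaringType))
                return method.Name;
        }
        throw new InvalidOperationException("No " + typeof(TService).Name + " method found on the stack");
    }
}

public class FooClient : StackWalkingClient<IFooService>, IFooService
{
    // Without NoInlining the JIT may fold this short method into its caller,
    // its frame disappears, and the search above fails.
    [MethodImpl(MethodImplOptions.NoInlining)]
    public string GetFoo(int a)
    {
        // Using the result afterwards keeps the call out of tail position.
        return FindServiceMethodName() + "?a=" + a;
    }
}
```

Here new FooClient().GetFoo(1) finds its own frame and returns "GetFoo?a=1"; a real client would use the name to look up the operation's URI template instead.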

Source: https://habr.com/ru/post/342566/
