How to break your reinvented wheel on your own kludges when testing your distribution

Setting the scene

Imagine for a minute that you are developing a hardware and software appliance. It is built on its own distribution, consists of multiple servers, carries a lot of logic, and ultimately has to be rolled out onto very real hardware. If you let something nasty through, users will not pat you on the head. Three perennial questions pop up: what to do, how to live with it, and who is to blame?

What follows is the story of how we got to stable releases and what it took to get there. To keep the article short, I will not cover unit testing, manual testing, or all the stages of rolling out to production.

First there was an MVP

It is difficult to do everything at once and do it correctly, especially when the end goal is not precisely known. The deployment process at the MVP stage looked like this: there was none.

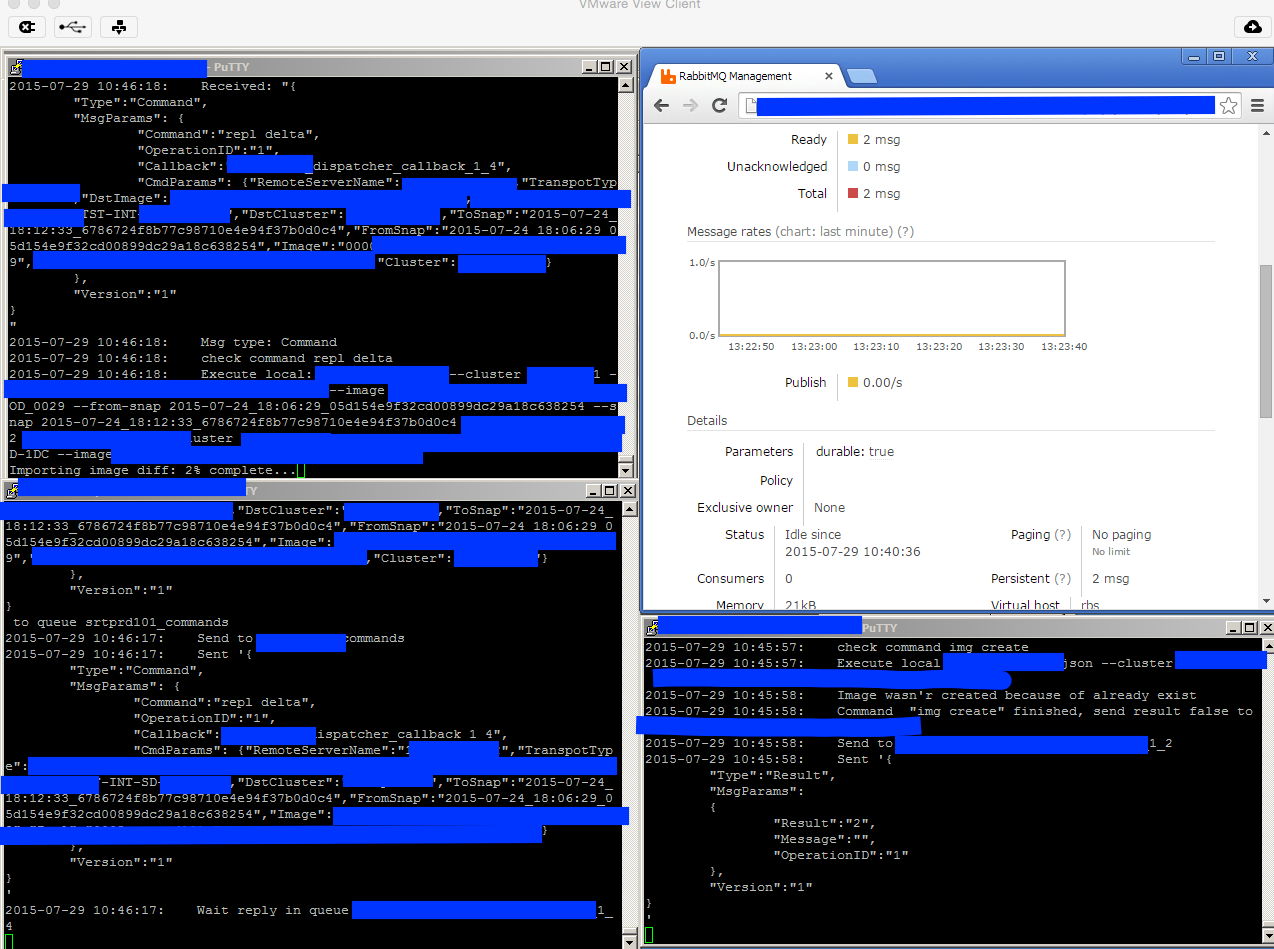

```shell
# Simplified: build on the developer's machine, then copy and install by hand.
# a, b and c stand in for the target hosts.
make dist
for i in a b c; do
    scp ./result.tar.gz "$i":~/
    ssh "$i" "tar -zxvf result.tar.gz"
    ssh "$i" "make -C ~/result install"
done
```

The script is, of course, simplified to the extreme to make one point: there was no CI. Builds were assembled on a developer's machine and pushed to the test environment for demos, on trust alone. At this stage, the secret knowledge of how to set up the servers lived in the developers' heads and, to a small extent, in the documentation.

The problem: deploying requires secret knowledge.

Slap it together and push it to staging

Historically, TeamCity had been used on many of our projects, and GitLab CI was not yet an option at the time. So TeamCity was chosen as the basis for CI on this project.

We created a virtual machine once, by hand, and ran the "tests" inside it:

```shell
make install && ./libs/run_all_tests.sh
make dist
make srpm
rpmbuild -ba SPECS/xxx-base.spec
make publish
```

The tests boiled down to the following:

- install a set of utilities in an environment prepared semi-manually in advance

- check that they work

- if everything is OK, publish the RPM

- go to staging and roll out the new version, again semi-manually
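The gate in this list (publish only if every earlier step succeeded) can be sketched in a few lines of shell. This is a minimal illustration, not the project's actual TeamCity configuration; `run_steps` is a hypothetical helper introduced here.

```shell
# run_steps: run each argument as a shell command, in order.
# Stops at the first failing step and returns its exit code, so later
# steps (such as publishing) never run after a failed test.
# Hypothetical helper, for illustration only.
run_steps() {
    for step in "$@"; do
        echo ">>> $step"
        sh -c "$step" || {
            rc=$?
            echo "step failed (exit $rc): $step" >&2
            return "$rc"
        }
    done
    return 0
}
```

With the commands from the build script above, a call could look like `run_steps "make install" "./libs/run_all_tests.sh" "make dist" "make srpm" "rpmbuild -ba SPECS/xxx-base.spec" "make publish"`.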

Things got better:

- master now contained something that had actually been checked

- we knew that it worked in at least one environment

- we caught the silly mistakes

But can you feel the pain?

- dependency problems (only some of the packages were rebuilt)

- every developer set up their development environment as best they could

- tests ran in some poorly defined environment

- building the distribution, setting up the installation, and running the tests were three separate, unconnected things

Making the world a little better

This setup lived for a while, but we are engineers precisely so that we can solve problems and make the world a better place.

- The dependencies of the entire distribution were collected in a meta package.

- A virtual machine template was created with Vagrant.

- The Bash installation scripts were rewritten in Ansible.

- A library for integration testing was written, to verify that the system works correctly as a whole.

- Some of the scripts were covered with Serverspec tests.
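Integration checks against a freshly deployed multi-server system usually have to wait until services come up. The article does not show the testing library, so the following is only a sketch of one building block such a library might contain; `wait_until` and `RETRY_DELAY` are hypothetical names.

```shell
# wait_until ATTEMPTS CMD [ARGS...]
# Runs CMD up to ATTEMPTS times, sleeping RETRY_DELAY seconds (default 1)
# between tries. Returns 0 as soon as CMD succeeds, 1 if it never does.
# Hypothetical helper, not taken from the project's library.
wait_until() {
    attempts=$1
    shift
    while [ "$attempts" -gt 0 ]; do
        if "$@"; then
            return 0
        fi
        attempts=$((attempts - 1))
        sleep "${RETRY_DELAY:-1}"
    done
    return 1
}
```

A test could then call something like `wait_until 30 ssh a "systemctl is-active --quiet some-service"` before making assertions, where the host `a` and the service name are placeholders.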

This allowed us to:

- make the development and testing environments identical

- keep the deployment code together with the application code

- bring new developers into the process faster

This setup lived for a very long time, because within a reasonable time (30-60 minutes per build) it caught many errors before they reached manual testing. What left a bad aftertaste was that whenever the kernel was updated or a package was rolled back, everything went wrong, and somewhere a puppy got sad.

It's getting hot

Along the way, various problems came up, each of which could fill a separate article:

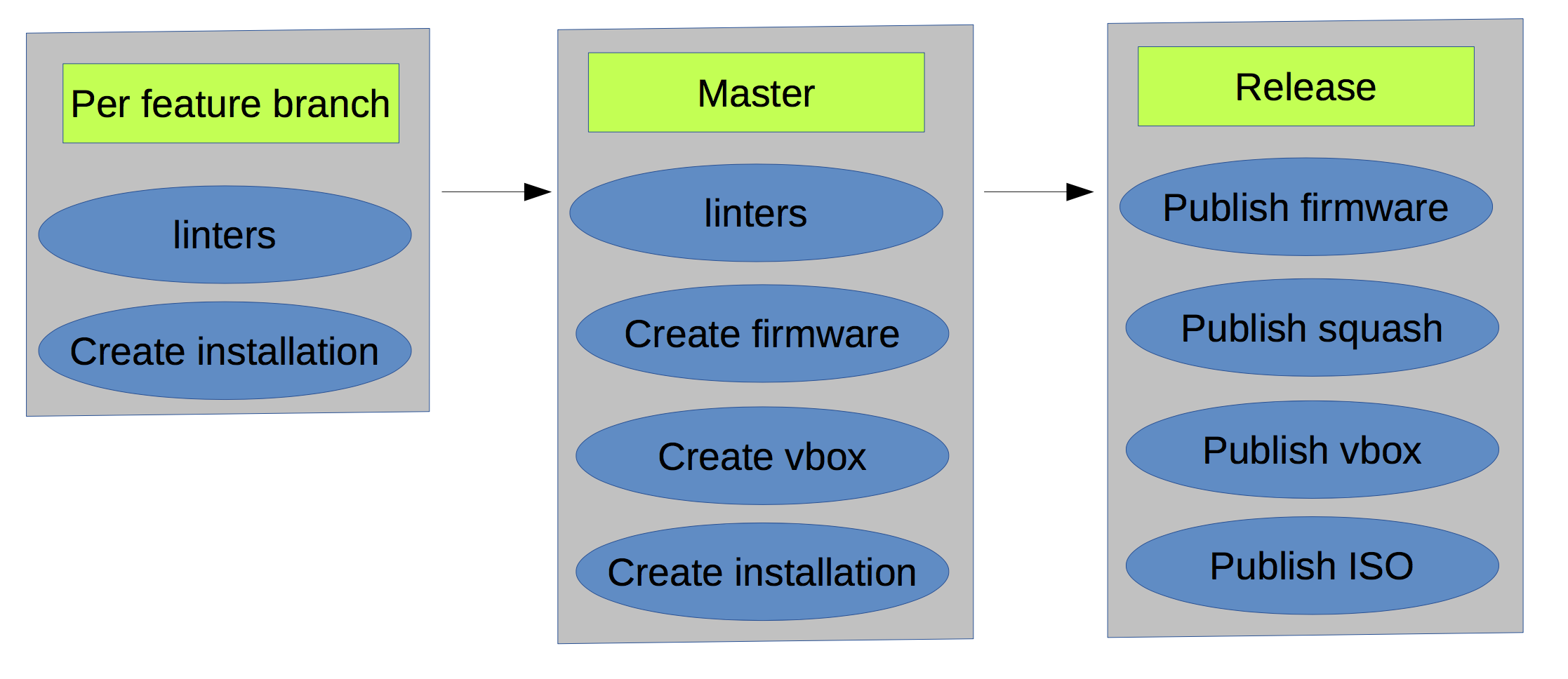

- Over time, integration test runs started to drag, because the virtual machine template lagged behind the current package versions. For a couple of months we rebuilt it semi-manually. In the end we arranged it so that on every release:

  - a vmdk is built automatically

  - the vmdk is attached to a virtual machine

  - the resulting VM is packaged and uploaded to S3 (by the way, does anyone know how to make Vagrant get along with S3?)

- The build status was not visible when approving a merge request, so we moved to GitLab CI. It cost a little blood: we had to give up triggering some builds by a regular expression on the tag. Otherwise we are happy.

- Once a week there was the routine of cutting a release, so we automated it:

  - increment the release version

  - generate release notes from the closed tasks

  - update the changelog

  - create merge requests

  - create a new milestone

- To speed up builds, some of the steps were moved into Docker: linters, notifications, the documentation build, some of the tests, and so on.
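The first step of the weekly release routine, the version increment, is easy to script. The article does not say which versioning scheme the project uses, so this sketch assumes a plain `MAJOR.MINOR.PATCH` number without leading zeros; `bump_version` is a hypothetical helper.

```shell
# bump_version VERSION PART
# VERSION is MAJOR.MINOR.PATCH (an assumed scheme), PART is one of
# major, minor or patch. Prints the incremented version; lower-order
# parts are reset to 0.
bump_version() {
    major=${1%%.*}
    rest=${1#*.}
    minor=${rest%%.*}
    patch=${rest#*.}
    case "$2" in
        major) major=$((major + 1)); minor=0; patch=0 ;;
        minor) minor=$((minor + 1)); patch=0 ;;
        patch) patch=$((patch + 1)) ;;
        *) echo "usage: bump_version X.Y.Z major|minor|patch" >&2; return 2 ;;
    esac
    echo "$major.$minor.$patch"
}
```

For example, `bump_version 1.4.9 minor` prints `1.5.0`. The result can then feed the later steps, such as the changelog update and the merge request.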

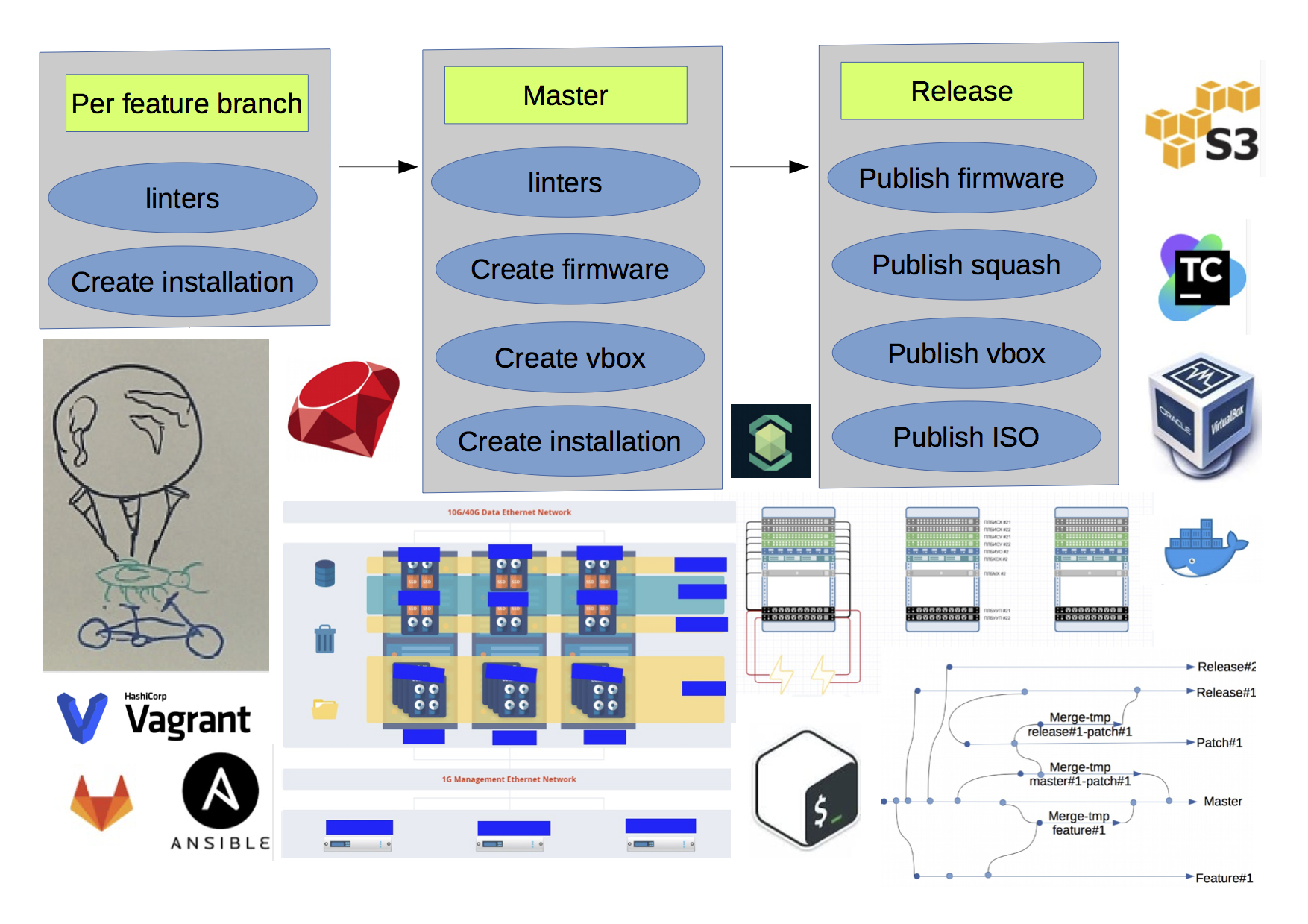

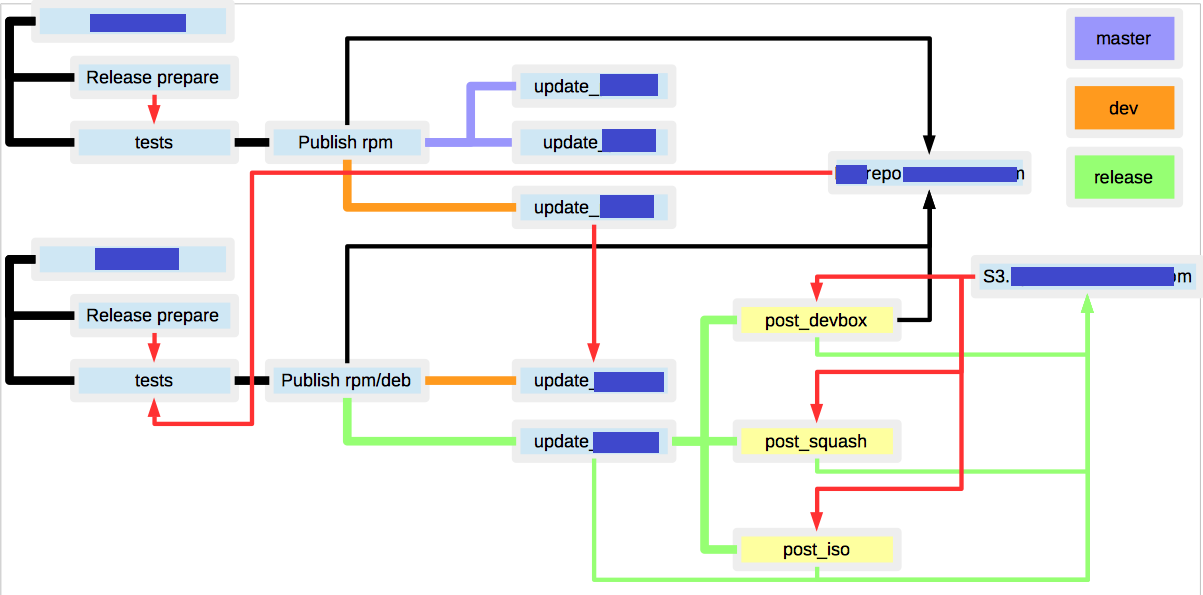

Somewhat simplified, the final setup turned out like this (the non-obvious links between builds are marked in red):

- many RPM / DEB repositories for development packages

- S3 storage for artifacts (firmware, squash, iso, VM templates)

- if the distribution build is run twice from the same branch, the results may differ, because dependencies between packages are not pinned and the state of the repositories may have changed in between

- many non-obvious links between builds
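Because the dependencies are not pinned, two builds of the same branch can silently pull different package versions. One way to at least make that drift visible is to save a manifest of installed package versions with every build and compare manifests between builds. This is a minimal sketch, assuming RPM-based build hosts; the function names and manifest files are hypothetical and not part of the setup described here.

```shell
# write_manifest FILE: save a sorted list of installed packages (RPM hosts).
write_manifest() {
    rpm -qa --qf '%{NAME} %{VERSION}-%{RELEASE}.%{ARCH}\n' | sort > "$1"
}

# manifest_drift OLD NEW: print the lines that differ between two sorted
# manifests. Returns 0 when they are identical, 1 when the package set
# changed between the two builds.
manifest_drift() {
    drift=$(comm -3 "$1" "$2")
    if [ -n "$drift" ]; then
        echo "$drift"
        return 1
    fi
    return 0
}
```

A build job could call `write_manifest` after installing its dependencies and fail, or just warn, when `manifest_drift` reports a difference from the previous build of the same branch.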

This allowed us to:

- ship a private release once a week

- increase development speed by reducing the number of conflicts and running the tests more often

Conclusion

It is hard to call the result ideal, but on the other hand I have not come across any ready-made solutions for problems of this kind. The main takeaways from this opus:

- a journey of a thousand li begins with the first step (c)

- if something hurts, reduce the pain.

UPD: Russian version


Source: https://habr.com/ru/post/342216/
