Frontend distribution via CDN

Modern web technologies evolve rapidly. A couple of years ago it was perfectly normal to render the DOM structure on the server (for example, with PHP) for each client request and return a fully formed page. Today more and more sites completely separate the frontend (Angular, React, Vue.js, ...) from the backend (a set of API endpoints): almost all content is generated in the browser by scripts, and the server only returns data on request. Server-side rendering (SSR) deserves a mention here, but it is outside the scope of this article.

Developers and website owners have always faced the same hard task: deliver content as quickly as possible to as many users as possible. One of the best solutions is to serve static files through a CDN (Content Delivery Network). When pages are rendered dynamically on the server, only a small set of objects can go to the CDN: stylesheets, scripts and images. A frontend written in Angular (React, Vue.js, ...), however, is entirely static, including the index page. This raises the question: why not serve the entire frontend through a CDN?

This article describes how to set up a complete solution for developing, versioning, automatically building and delivering a static site with Gitlab CI, Amazon S3 and Amazon CloudFront. It also covers related pieces: git, HTTPS, domain mail, DNS hosting and a backend server.


Caution: many screenshots ahead.

A few words about this choice of tools

I spent a lot of time choosing services against several criteria. The first is price: ideally free, or at least inexpensive. The second is reliability. It also helps if the service takes on most of the work, so there is nothing to install and configure yourself. For example, why run your own git server plus a separate third-party CI (Continuous Integration) service when Gitlab provides both out of the box, along with unlimited private repositories, organizations and collaborators? Why host domain mail on your own server when Yandex can provide it? As for the CDN, I simply did not find anything cheaper than Amazon CloudFront. Amazon S3 storage is also inexpensive (and why keep lots of files on a VDS, where storage is expensive?).

1. DNS hosting and Yandex mail

First, we connect the domain to Yandex mail. The main reason is domain mail. To obtain the Amazon SSL certificate we must confirm domain ownership, and that requires receiving an email at webmaster@yourdomain.com.

1.1. Register or log in to Yandex.

1.2. Go to Yandex Mail for Domain and add your domain (the "Connect domain" menu item).

Note that Yandex now also offers "Mail for Domain 2.0", called Yandex.Connect. I cannot decide whether the Yandex.Connect interface is underdeveloped and too simple or overcomplicated, but either way it lacks functionality I need. In particular, I could not add several domains to Yandex.Connect at once and still edit each domain's DNS records separately. For me it was easier to add the domain to Mail for Domain first and then transfer it to Yandex.Connect, and that is what we will do here.

1.3. Confirm domain ownership.

The easiest way is to delegate the domain to Yandex. To do this, set the following NS servers for your domain in the registrar's control panel:

dns1.yandex.net

dns2.yandex.net

Attention! Perform this step only after the domain has been connected to Mail for Domain (step 1.2).

1.4. Wait up to 72 hours (depending on your luck).

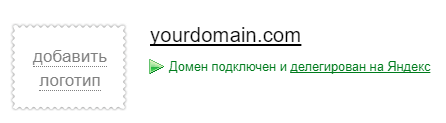

Domain ownership should be verified automatically. After that, the "My domains" list on the Mail for Domain page should show a green message saying that "the domain is connected and delegated to Yandex".

Now we can connect mail for the domain. There is no need to configure MX records, because the domain is delegated to Yandex's NS servers.

At this point we already have domain mail with room for up to 1000 mailboxes and correct mail-related DNS records.

1.5. Create the first mailbox, johndoe@yourdomain.com.

1.6. In the top menu, click the "Migrate to Connect" link.

Transfer the domain to Yandex.Connect and specify the name of your organization. After that, the https://connect.yandex.ru/portal/home page becomes available.

1.7. Go to "Admin", where various settings are available.

The item that matters most to us is "DNS Management"; we will return to it later. For now, we need to add a mailbox alias, webmaster@yourdomain.com. This address will receive Amazon's email asking us to confirm domain ownership.

1.8. Go to the "Organizational structure" menu item and select the only user so far. This user was created automatically together with the first mailbox (step 1.5).

1.9. Click the ellipsis in the upper right corner of the user card and select "Manage aliases" from the drop-down menu.

In the window that appears, click "Add new".

Enter "webmaster" and click "Add".

We now have the mailbox alias webmaster@yourdomain.com and can receive email on the domain.

2. Amazon Web Services Account

To follow the steps in this guide, you need an AWS root user account.

If you do not have an account yet, you are in luck: you can use the AWS Free Tier. To get it, go through the registration procedure. During registration you will be asked for credit card details, and $1 will be charged to the card (and not refunded!) to verify it. You will also need to provide a phone number, which will receive an automated call from the US.

Overall, registration is quite simple and, in my opinion, does not need a detailed description.

3. Amazon Security Credentials

To exchange data with AWS services automatically, we will use the AWS CLI (AWS Command Line Interface) console utility. It authenticates with a pair of keys: an Access key ID and a Secret access key. Let's create them.

3.1. Go to the AWS console.

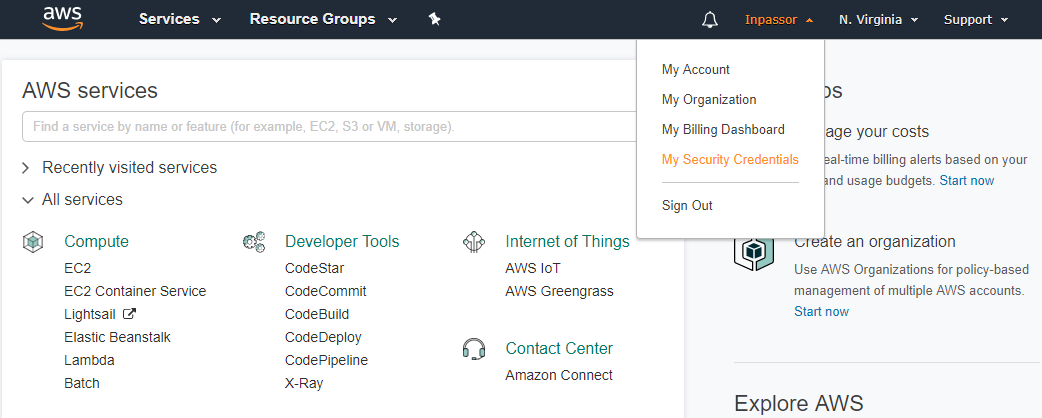

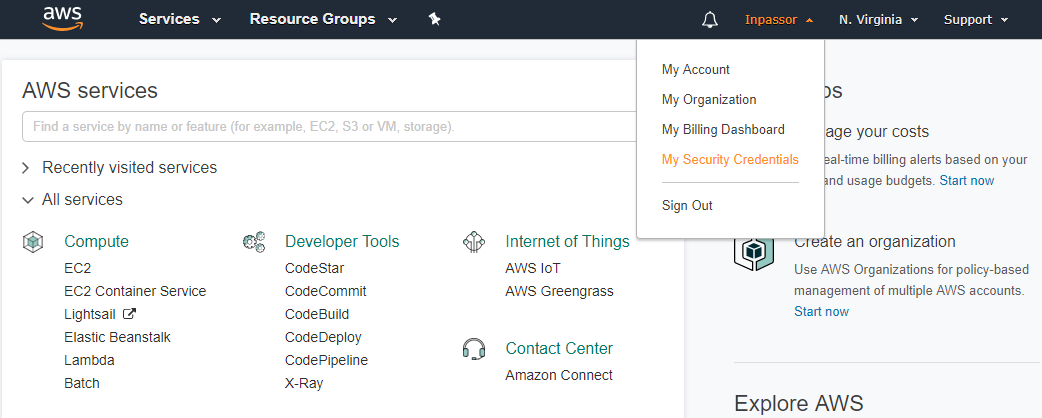

3.2. In the top menu on the right, click your login and select "My Security Credentials" from the drop-down menu.

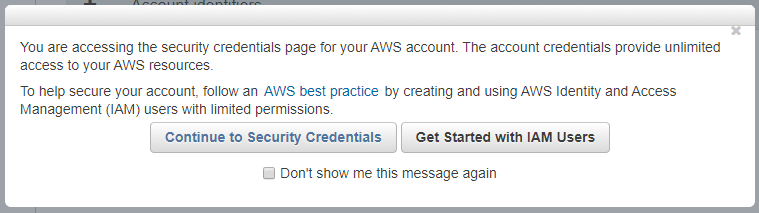

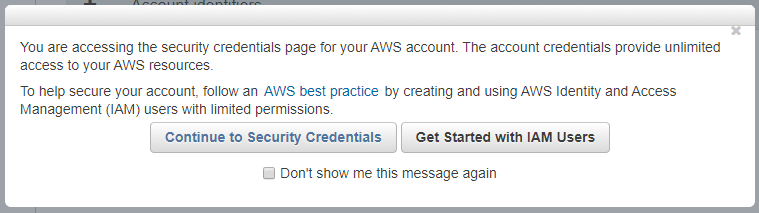

3.3. A warning window may appear here.

You can ignore it and click the "Continue to Security Credentials" button.

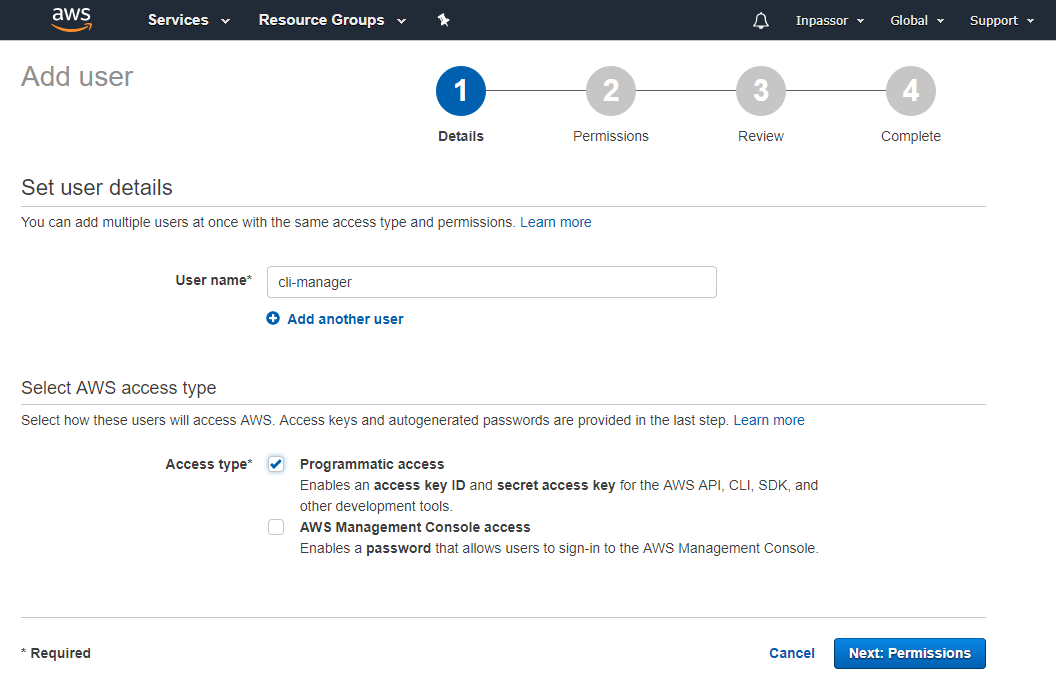

3.4. In the menu on the left, select the item "Users". Click the button "Add user".

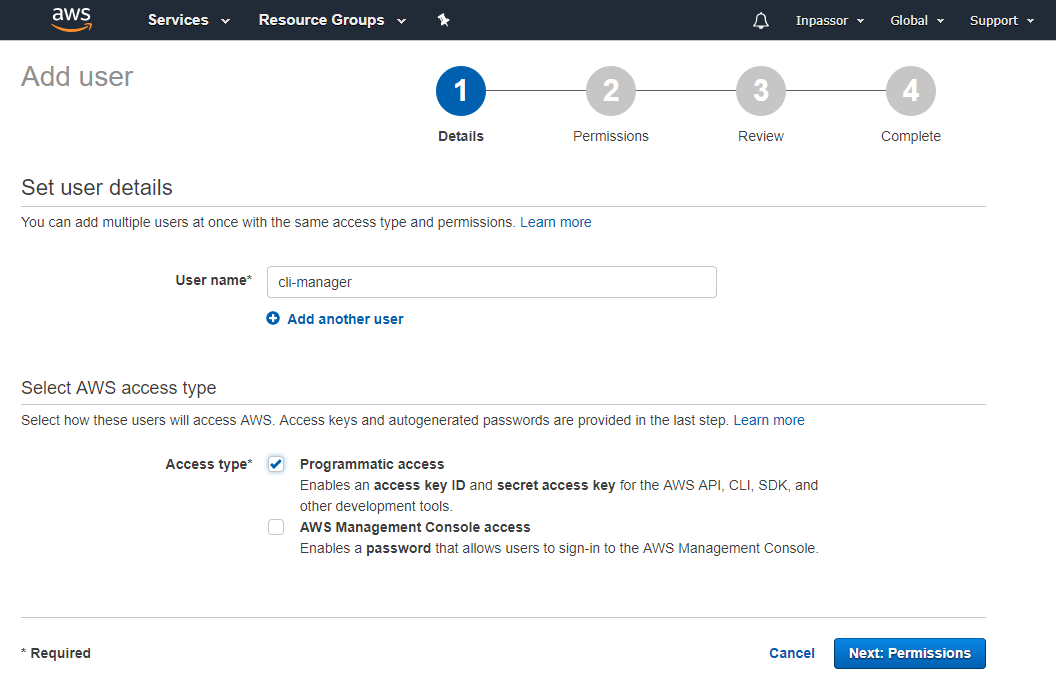

3.5. In the field "User name" we write the user name. For example, "cli-manager".

In the item “Access type” put a tick “Programmatic access”.

Click the button "Next: Permissions".

3.6. In the next paragraph, select “Attach existing policies directly”. Select the checkbox "AdministratorAccess".

Click the "Next: Review" button.

3.7. In the next paragraph, click the button "Create user".

3.8. In the last paragraph, we will see the newly created user and his data.

Attention! Immediately write down the Access key ID and Secret access key (you can see it by clicking “show”) and / or download the .csv file with user data (by clicking the Download .csv button). You will never see the secret access key anywhere else.

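4. Amazon SSL Certificate

Now we need to get an SSL certificate for our domain. Request it in the US East (N. Virginia) region, because CloudFront only accepts ACM certificates issued in that region.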

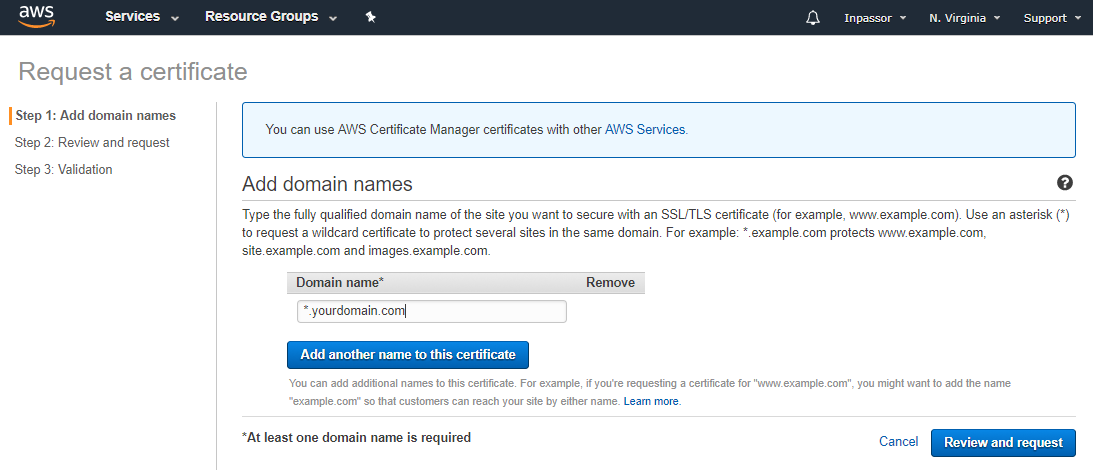

4.1. In the AWS console, select Services from the top menu.

4.2. In the service search, type "Certificate manager".

4.3. Click the button “Request a certificate”.

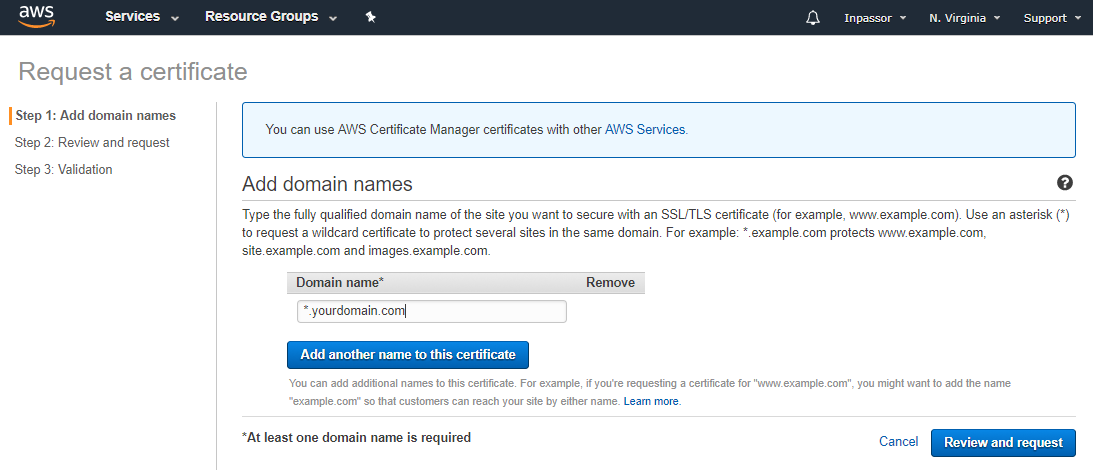

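4.4. In the "Domain name" field, enter "*.yourdomain.com".

Note the asterisk and the dot before the domain name. They request a wildcard certificate for all first-level subdomains (www.yourdomain.com, static.yourdomain.com and so on). A wildcard does not cover the bare yourdomain.com. That is fine here, because the root domain will point to our backend server, which gets its own Let's Encrypt certificate. Click "Review and request".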
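The coverage rule can be shown with a small Python function that follows the usual TLS convention: the asterisk may only be the whole leftmost label, and it matches exactly one label. This is a simplified sketch, not how ACM or browsers are implemented. wildcard_covers is a hypothetical helper that ignores partial-label wildcards and internationalized names.

```python
def wildcard_covers(pattern: str, hostname: str) -> bool:
    """Return True if a certificate name (possibly '*.example.com') covers hostname.

    Simplified rule: '*' may only be the entire leftmost label and matches
    exactly one non-empty label; comparison is case-insensitive.
    """
    pattern_labels = pattern.lower().rstrip(".").split(".")
    host_labels = hostname.lower().rstrip(".").split(".")
    # A wildcard never spans several labels, so the label counts must agree.
    if len(pattern_labels) != len(host_labels):
        return False
    if pattern_labels[0] == "*":
        # The leftmost label can be anything non-empty; the rest must be identical.
        return host_labels[0] != "" and pattern_labels[1:] == host_labels[1:]
    return pattern_labels == host_labels


cert = "*.yourdomain.com"
for host in ["www.yourdomain.com", "static.yourdomain.com",
             "yourdomain.com", "a.b.yourdomain.com"]:
    print(host, wildcard_covers(cert, host))
```

Only the first two hosts print True. The bare domain and the two-level subdomain are not covered.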

4.5. On the next screen, click "Confirm and request".

4.6. On the next screen, click "Continue".

Here we see the certificate we just requested, with the status "Pending validation".

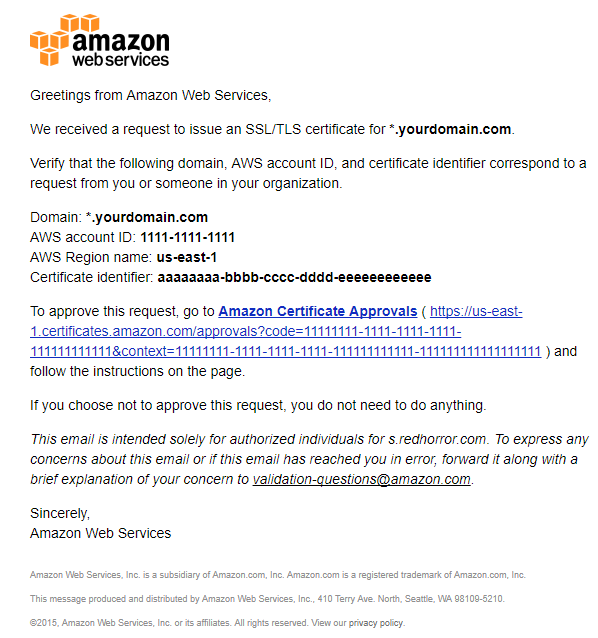

4.7. Now go to Yandex mail.

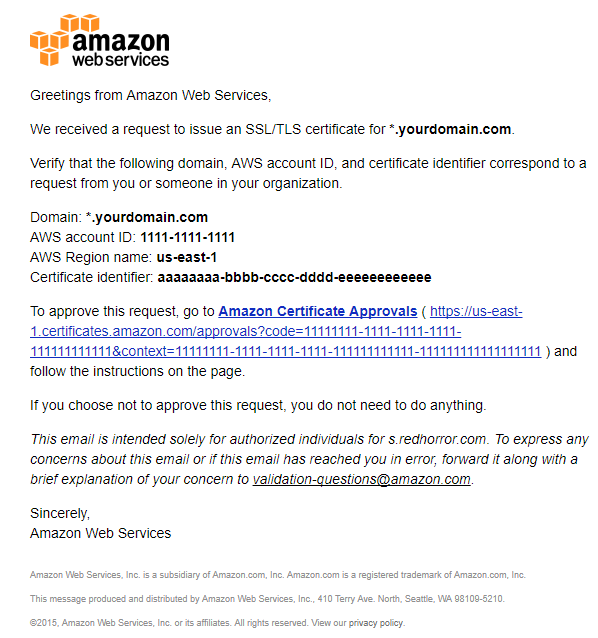

Log in to the domain mailbox you created in step 1.5 (johndoe@yourdomain.com). You should have received an email from Amazon asking you to confirm domain ownership.

4.8. Follow the link in the email.

A new browser tab opens with a domain ownership confirmation page.

Click "I Approve".

The certificate should now pass validation.

Back in Certificate Manager, the certificate status is now "Issued".

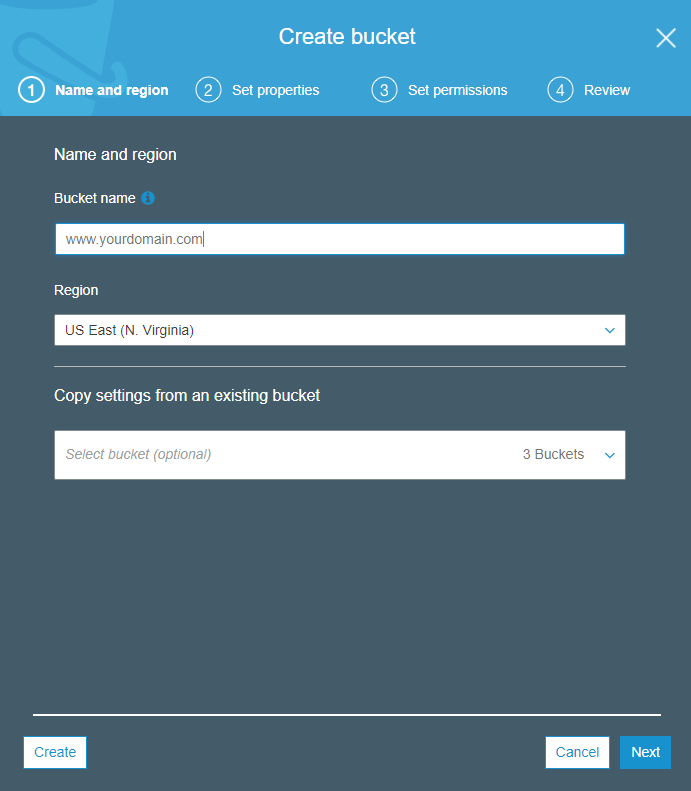

5. Amazon S3 Bucket

Create an Amazon S3 bucket to store the static frontend files.

5.1. In the AWS console, select Services from the top menu.

5.2. In the service search, type "S3".

5.3. Click the "Create bucket" button.

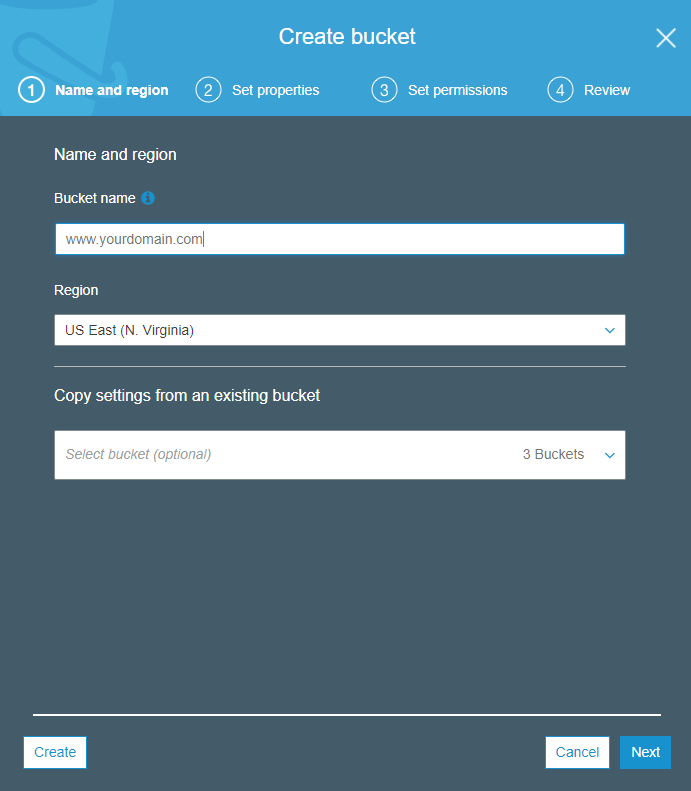

In the "Bucket name" field, enter the host name (including www). It must match your domain name exactly, for example www.yourdomain.com.
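Because the bucket name doubles as a host name, it also has to satisfy the S3 bucket naming rules. The Python sketch below checks a subset of those rules together with the match against the host name: length, allowed characters, no adjacent dots, and not formatted as an IP address. check_website_bucket is a hypothetical helper, and the full rule list in the S3 documentation is longer.

```python
from __future__ import annotations

import ipaddress
import re

# Subset of the S3 bucket naming rules: 3-63 characters; lowercase letters,
# digits, dots and hyphens; must start and end with a letter or digit.
_BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def check_website_bucket(bucket: str, hostname: str) -> list[str]:
    """Return a list of problems; an empty list means the name looks usable."""
    problems = []
    # For S3 static website hosting, the bucket name must equal the host name.
    if bucket != hostname.lower():
        problems.append("bucket name must equal the host name")
    if not _BUCKET_RE.match(bucket):
        problems.append("invalid characters or length")
    if ".." in bucket:
        problems.append("adjacent dots are not allowed")
    try:
        ipaddress.ip_address(bucket)
    except ValueError:
        pass  # not an IP address, as required
    else:
        problems.append("must not be formatted as an IP address")
    return problems


print(check_website_bucket("www.yourdomain.com", "www.yourdomain.com"))  # prints []
```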

In the "Region" field, select "US East (N. Virginia)". There are three reasons. First, this avoids possible problems with redirects to the wrong objects (see the documentation article). Second, it is the most popular region and has the lowest prices. Third, the bucket's region hardly matters to us: the CDN distributes the content, and clients never access the S3 files directly.

Click "Next" several times, leaving all fields at their defaults.

A new bucket named www.yourdomain.com appears in your list of buckets.

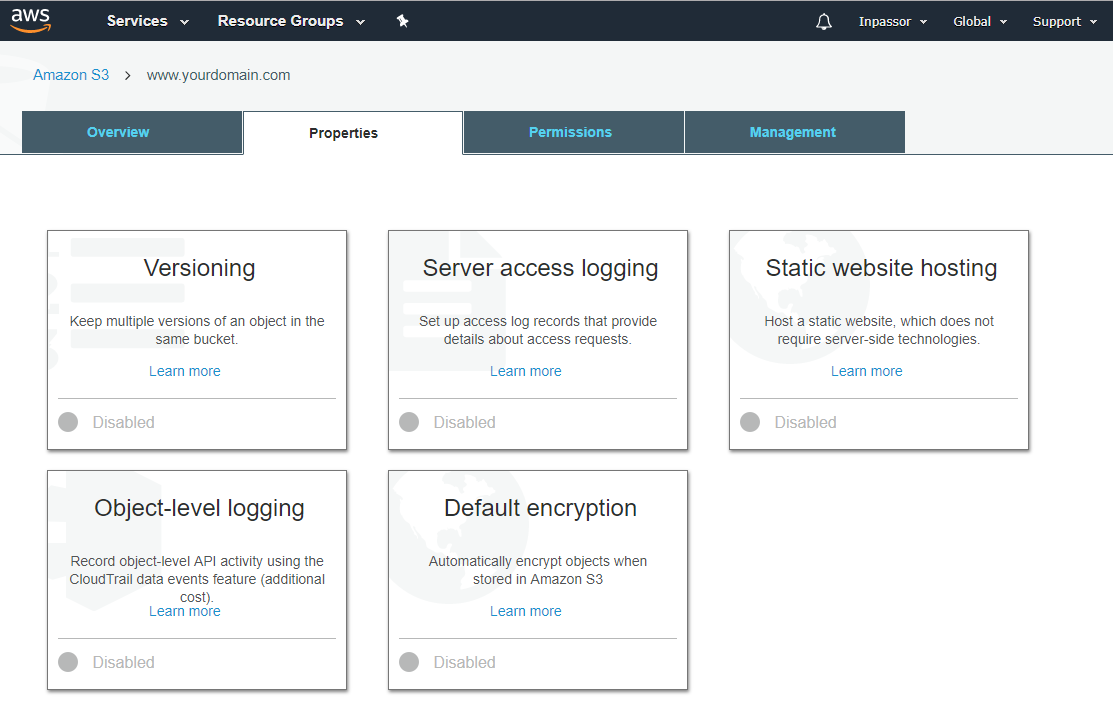

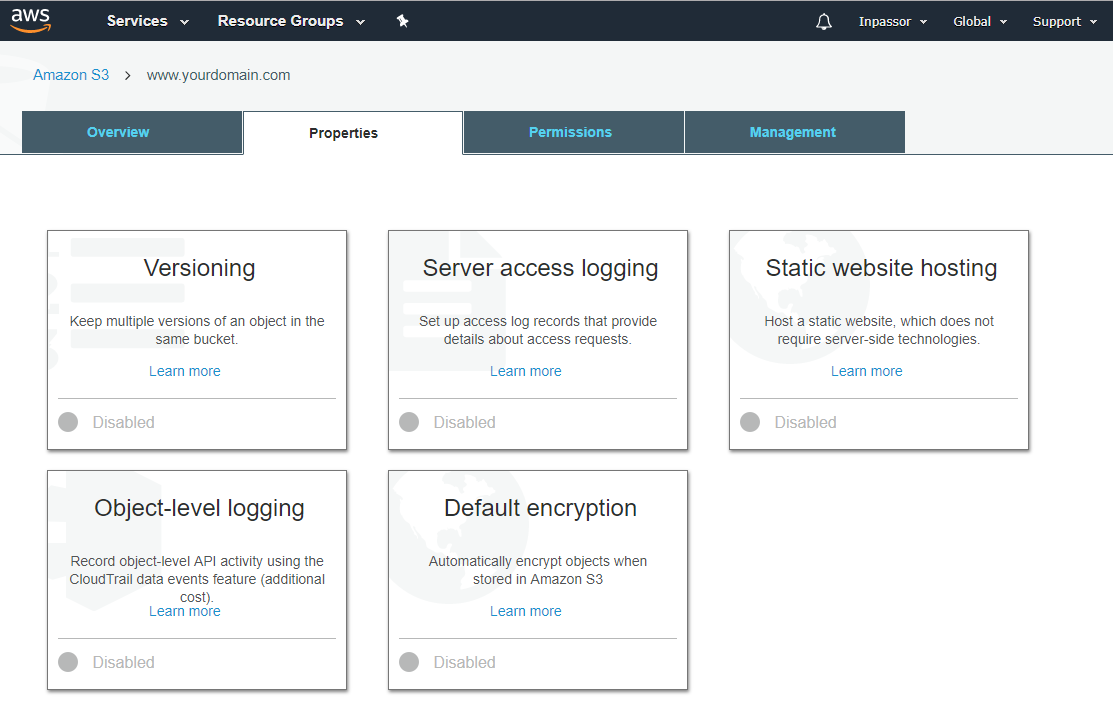

5.4. Edit the bucket properties.

Click the empty space next to your bucket's name. A panel opens on the right side of the window.

5.5. Select "Properties".

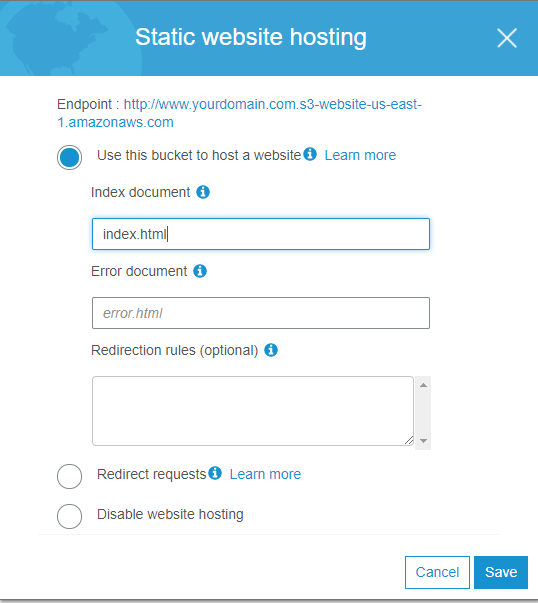

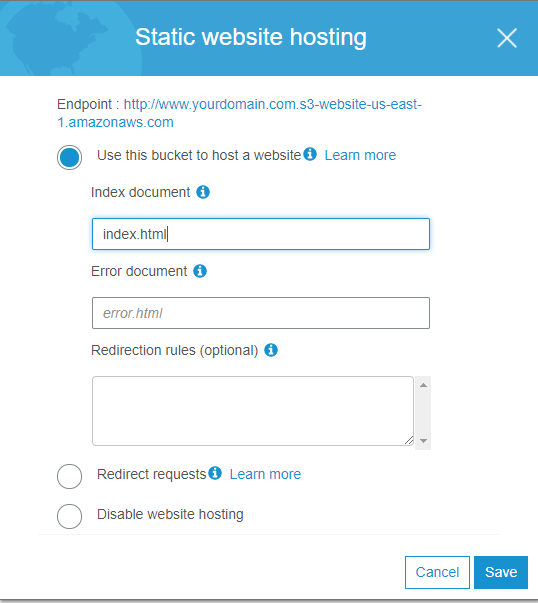

5.6. Click "Static website hosting".

Copy the "Endpoint" URL (shown at the top of the window) right away. You will need it for later settings.

Select the first option, "Use this bucket to host a website".

In the "Index document" field, enter "index.html".

Click "Save".

5.7. Select the "Permissions" tab.

Here we need to add http://www.yourdomain.com.s3-website-us-east-1.amazonaws.com and https://*.yourdomain.com to the list of origins allowed to make cross-origin requests.

Click "CORS configuration".

We will not go into the XML format here; for details, see the documentation. We just need to paste the following into the text field:

Replace the values of the AllowedOrigin tags with your own. The first address is the Static website endpoint you copied in step 5.6.
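For illustration, here is a Python sketch that generates such a document with the standard library. The element names follow the S3 CORS XML format. The allowed methods (GET and HEAD), the "*" header rule and the 3000-second MaxAgeSeconds are assumptions, not necessarily the values the author used. build_cors_xml is a hypothetical helper.

```python
import xml.etree.ElementTree as ET
from typing import Iterable


def build_cors_xml(origins: Iterable[str], methods: Iterable[str] = ("GET", "HEAD"),
                   max_age: int = 3000) -> str:
    """Build an S3 CORS configuration with a single rule for the given origins."""
    root = ET.Element("CORSConfiguration")
    rule = ET.SubElement(root, "CORSRule")
    for origin in origins:
        ET.SubElement(rule, "AllowedOrigin").text = origin
    for method in methods:
        ET.SubElement(rule, "AllowedMethod").text = method
    # How long (in seconds) browsers may cache the preflight response.
    ET.SubElement(rule, "MaxAgeSeconds").text = str(max_age)
    # Allow any request header in preflight requests.
    ET.SubElement(rule, "AllowedHeader").text = "*"
    return ET.tostring(root, encoding="unicode")


print(build_cors_xml([
    "http://www.yourdomain.com.s3-website-us-east-1.amazonaws.com",
    "https://*.yourdomain.com",
]))
```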

Click the "Save" button.

6. Amazon CloudFront

Now we need to distribute the files from the new bucket through the CDN.

6.1. In the AWS console, select Services from the top menu.

6.2. In the service search, type "CloudFront".

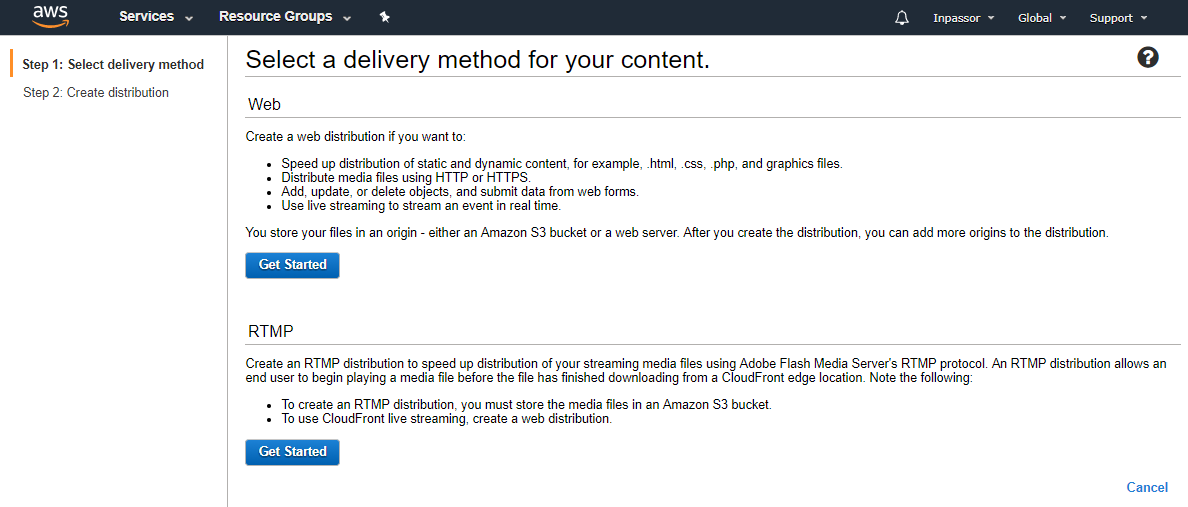

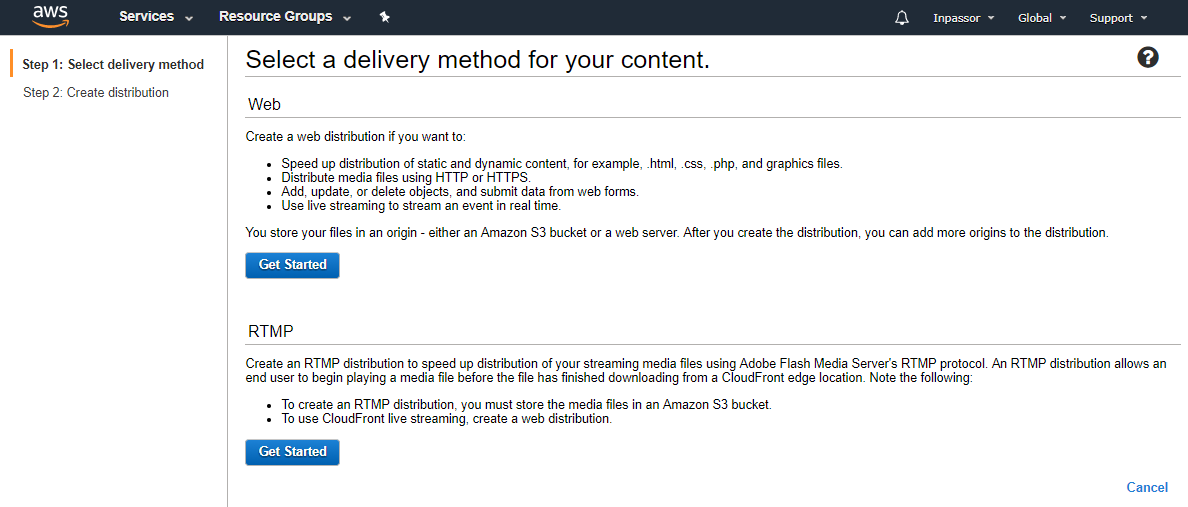

6.3. Click "Create Distribution".

Select the "Web" delivery method and click its "Get Started" button.

A very large form opens (I will not include a screenshot of it).

We will go through only the fields we need and leave the rest at their defaults. All of these settings can be changed later anyway.

Origin Settings

Origin Domain Name: careful here! Do not pick the "www.yourdomain.com.s3.amazonaws.com" domain suggested in this field's drop-down. Instead, enter the Static website endpoint you copied in step 5.6, without the "http://" prefix. Unlike the plain S3 endpoint, the website endpoint serves index documents and handles redirects.

Default Cache Behavior Settings

Viewer Protocol Policy: select "Redirect HTTP to HTTPS".

Allowed HTTP Methods: select "GET, HEAD, OPTIONS".

Cached HTTP Methods: check "OPTIONS".

Cache Based on Selected Request Headers: select "Whitelist". In the "Whitelist Headers" list that appears, select "Origin" and click "Add".

Object Caching: select "Customize".

Minimum TTL: enter "300".

Compress Objects Automatically: select "Yes".
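To see what the Minimum TTL of 300 does, here is a rough Python model of how CloudFront picks a cache lifetime when Object Caching is set to "Customize". It assumes the console defaults of 86400 seconds for Default TTL and 31536000 seconds for Maximum TTL, since both were left untouched above. It ignores special cases such as Expires headers and no-cache/private directives. effective_ttl is a hypothetical helper, not an AWS API.

```python
from typing import Optional


def effective_ttl(max_age: Optional[int], min_ttl: int = 300,
                  default_ttl: int = 86400, max_ttl: int = 31536000) -> int:
    """Approximate how long (seconds) CloudFront keeps an object in its cache.

    max_age is the origin's Cache-Control max-age, or None if the origin sent none.
    """
    if max_age is None:
        # No caching header from the origin: the Default TTL applies.
        return default_ttl
    # Otherwise the origin's value is clamped into [min_ttl, max_ttl].
    return max(min_ttl, min(max_age, max_ttl))


print(effective_ttl(0))  # prints 300
```

Even a file the origin marks with max-age=0 may stay in the edge cache for five minutes. That is one reason to refresh the distribution after every deploy.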

Distribution Settings

Alternate Domain Names (CNAMEs): enter "www.yourdomain.com" and "static.yourdomain.com" in the text box, one per line. static.yourdomain.com serves exactly the same content as www.yourdomain.com. We will use it to load static files, which reduces the number of requests to the main domain.

SSL Certificate: select "Custom SSL Certificate", then choose the previously issued "*.yourdomain.com" certificate from the drop-down list below.

Default Root Object: enter "index.html" (without a leading slash).

Click "Create Distribution".

The distribution is created and appears in the list with the status "In Progress". After a while (usually up to 10 minutes) the status changes to "Enabled".

Copy the distribution's ID and Domain Name right away; we will need them later.

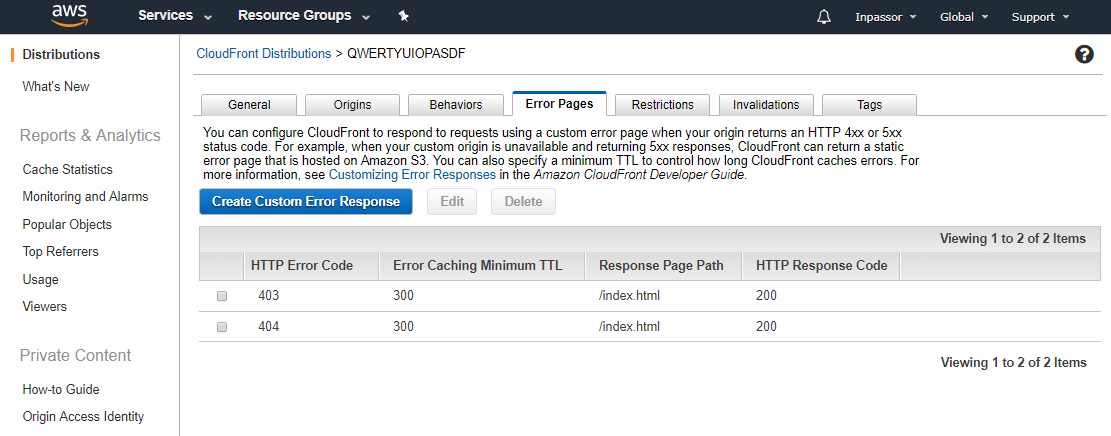

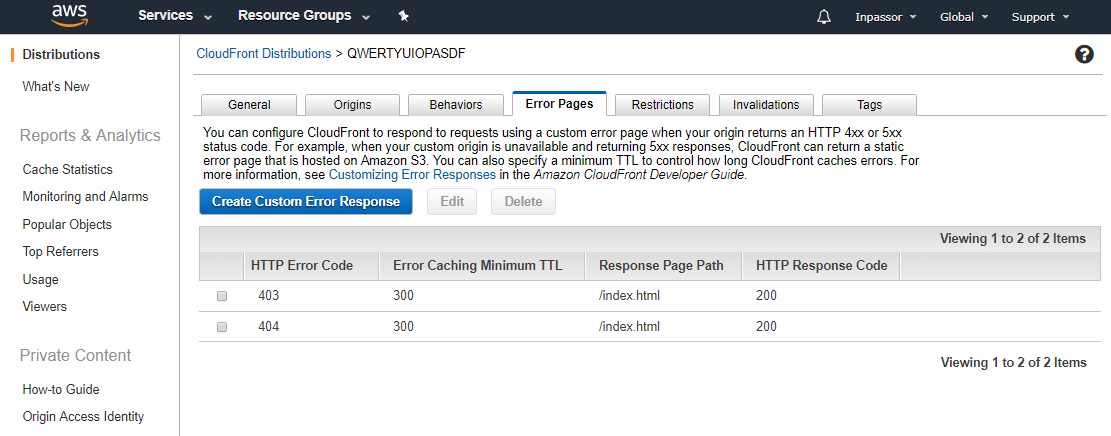

6.4. Set up error pages.

Click the distribution ID in the list and go to the "Error Pages" tab.

Click the "Create Custom Error Response" button.

In the "HTTP Error Code" select "403: Forbidden".

“Customize Error Response” - select “Yes”.

In the "Response Page Path" enter "/index.html".

In the "HTTP Response Code" select "200: OK".

Repeat the same steps for error 404.

This way, requests for paths that are missing or forbidden in the bucket are answered with index.html and a 200 status. The Angular Router then handles them in the browser.
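The error-page mechanism can be modeled in a few lines of Python. This is a simplified sketch, not CloudFront's actual logic. cloudfront_response and its parameters are hypothetical, and the bucket is represented as a set of keys. The origin status for a missing key is a parameter because, depending on bucket permissions, S3 may report a missing object as 403 rather than 404. That is why both codes are configured above.

```python
from typing import Dict, Optional, Set, Tuple

# Hypothetical model of the "Error Pages" settings from step 6.4:
# origin status code -> (Response Page Path, HTTP Response Code)
ERROR_PAGES: Dict[int, Tuple[str, int]] = {
    403: ("/index.html", 200),
    404: ("/index.html", 200),
}


def cloudfront_response(path: str, objects: Set[str],
                        error_pages: Dict[int, Tuple[str, int]] = ERROR_PAGES,
                        missing_status: int = 404) -> Tuple[int, Optional[str]]:
    """Return (status code, served object key) for a viewer request to path."""
    # "/" maps to the Default Root Object; other paths map to bucket keys.
    key = path.lstrip("/") or "index.html"
    if key in objects:
        return 200, key
    # The origin reports the object as missing (404, or 403 without list access).
    if missing_status in error_pages:
        page_path, code = error_pages[missing_status]
        return code, page_path.lstrip("/")
    return missing_status, None


bucket = {"index.html", "main.js"}
print(cloudfront_response("/about/team", bucket))  # prints (200, 'index.html')
```

A deep link such as /about/team therefore loads the application shell, and the client-side router renders the right view.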

7. Backend server

It's time to set up a backend server.

Even if your site is completely static and makes no API calls to a server, a VDS will not hurt. The root domain record must be of type A (and/or AAAA if you have IPv6), so it has to point to an IP address. A CNAME is not allowed at the root of a zone, where it would conflict with the zone's SOA and NS records. In my opinion, the easiest and cheapest way to get a permanent IP address on the Internet is to rent a VDS. That IP address can also host other services: an API, databases, a real-time messaging service and anything else. Some DNS hosting providers offer an ALIAS record instead of an A record, which accepts a domain name rather than an IP address. For example, with Amazon Route 53 you can point the root record at another S3 bucket that redirects to your CloudFront distribution.

In any case, the choice is yours. I prefer renting a VDS, especially since prices are now quite affordable. For example, Ayhor Hosting offers a VDS (1 CPU / 512 MB RAM / 10 GB HDD) for only 1080 rubles per year. This is the cheapest plan, and it is quite enough to start with, although I recommend a VDS with an SSD.

Next, I will describe how to configure a VDS (or a full server) with root access running Debian 9.

I use several VDSs of the same type and no control panels such as ISPManager, so I automated server setup with a simple bash script. Let's do the same and create a few files. Be careful! Line endings in these files must be Unix-style (LF), not Windows-style (CRLF).

nginx.conf:

This is the main nginx configuration file. It mainly enables gzip compression and includes the configs located in the /etc/nginx/sites-enabled directory.

ssl.conf:

An nginx configuration template for SSL. It includes the certificate issued by Let's Encrypt and sets SSL parameters that should earn an A+ rating in the Qualys SSL Server Test. Note the {{DOMAIN}} substrings: they are intentional, and the setup script will replace them with your domain.

site.conf:

An nginx configuration template for the root domain. It includes the SSL configuration and exposes the directory where Let's Encrypt places its domain validation files. All requests to http://yourdomain.com or https://yourdomain.com are redirected to https://www.yourdomain.com.

api.conf:

An nginx configuration template for the api.yourdomain.com subdomain. It also includes the SSL configuration and exposes the directory for Let's Encrypt domain validation files. All requests to http://api.yourdomain.com are redirected to https://api.yourdomain.com. PHP 7.1-FPM is connected here.

setup.sh:

A bash script that configures the server automatically. Several variables are declared at the beginning of the file:

DOMAIN="yourdomain.com" # your domain (without the leading www)

API_DOMAIN="api.$DOMAIN" # subdomain for the API backend (here api.yourdomain.com)

SUPERUSER="inpassor" # name of the superuser (a user with root privileges)

SUPERUSER_PASSWORD="12341234" # superuser password

USER="johndoe" # regular user name

USER_EMAIL="johndoe@yourdomain.com" # user email, used to register with Let's Encrypt

USER_PASSWORD="12341234" # user password

Of course, you need to set your own values.
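The core of the templating step is a plain string substitution. setup.sh does this in bash; the following Python sketch only illustrates the idea. render_template and render_file are hypothetical helpers, and the example template line is made up in the style of ssl.conf.

```python
from pathlib import Path

PLACEHOLDER = "{{DOMAIN}}"


def render_template(template_text: str, domain: str) -> str:
    """Return the template with every {{DOMAIN}} placeholder replaced by domain."""
    return template_text.replace(PLACEHOLDER, domain)


def render_file(template: Path, target: Path, domain: str) -> None:
    """Render one template file (e.g. ssl.conf) into target with Unix (LF) line endings."""
    # read_text uses universal newlines, so CRLF in the template is read as LF.
    text = template.read_text(encoding="utf-8")
    # newline="\n" disables newline translation, so LF is written on every OS.
    with open(target, "w", encoding="utf-8", newline="\n") as out:
        out.write(render_template(text, domain))


# Hypothetical template line in the style of ssl.conf:
line = "ssl_certificate /etc/letsencrypt/live/{{DOMAIN}}/fullchain.pem;"
print(render_template(line, "yourdomain.com"))
```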

The script then performs the following actions in sequence:

That's all; the server setup script is ready.

Now log in to the VDS over SSH (by its IP address) as root, copy the *.conf and setup.sh files to it, make setup.sh executable (chmod 700 setup.sh) and run it.

Once the script finishes, the VDS is ready for operation.

We have installed nginx, which listens for requests to http://yourdomain.com, https://yourdomain.com, http://api.yourdomain.com and https://api.yourdomain.com. HTTP requests are redirected to HTTPS, and requests to yourdomain.com are redirected to https://www.yourdomain.com. The API is served at api.yourdomain.com. It is empty for now; to make it respond to requests, create an index.php file in the /var/www/api.yourdomain.com/ directory.
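The redirect rules just described can be summarized as a small Python function. This is a model of the intended behavior, not the nginx configuration itself. redirect_target is hypothetical, and preserving the request path and query string is an assumption (it is the common nginx practice with $request_uri).

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

DOMAIN = "yourdomain.com"  # placeholder, same meaning as DOMAIN in setup.sh


def redirect_target(url: str, domain: str = DOMAIN) -> Optional[str]:
    """Return the URL nginx on the VDS would redirect to, or None if served directly."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    path = parts.path or "/"
    if host == domain:
        # Root domain (HTTP or HTTPS): send visitors to the www site over HTTPS.
        return urlunsplit(("https", "www." + domain, path, parts.query, ""))
    if host == "api." + domain and parts.scheme == "http":
        # API over plain HTTP: upgrade to HTTPS on the same host.
        return urlunsplit(("https", host, path, parts.query, ""))
    return None  # e.g. https://api.yourdomain.com is served by PHP-FPM


print(redirect_target("http://yourdomain.com/about"))  # prints https://www.yourdomain.com/about
```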

However, none of this is reachable yet, because we have not configured the necessary DNS records.

8. DNS records

Now that the CloudFront distribution and the backend server are set up, we are ready for the final DNS configuration.

8.1. Return to Yandex.Connect.

8.2. Go to "Admin".

8.3. Select the "DNS Management" menu item.

We need to add four new DNS records.

These records send requests for yourdomain.com (without www) and api.yourdomain.com to our VDS, and requests for www.yourdomain.com and static.yourdomain.com to the CloudFront distribution.
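The four records described above can be written down as data and checked against two basic CNAME rules. In this Python sketch the IP address (from the 203.0.113.0/24 documentation range) and the CloudFront domain name are placeholders. Using A records for the VDS hosts and CNAME records pointing at the distribution's Domain Name (copied in step 6.3) is an assumption. Record and check_records are hypothetical names.

```python
from dataclasses import dataclass
from typing import List

VDS_IP = "203.0.113.10"  # placeholder VDS address (documentation range)
CLOUDFRONT_DOMAIN = "d111111abcdef8.cloudfront.net"  # placeholder distribution Domain Name


@dataclass(frozen=True)
class Record:
    name: str   # host relative to the zone; "@" is the root (yourdomain.com)
    rtype: str  # record type, "A" or "CNAME" here
    value: str


RECORDS = [
    Record("@", "A", VDS_IP),
    Record("api", "A", VDS_IP),
    Record("www", "CNAME", CLOUDFRONT_DOMAIN),
    Record("static", "CNAME", CLOUDFRONT_DOMAIN),
]


def check_records(records: List[Record]) -> List[str]:
    """Report violations of two basic CNAME rules."""
    problems = []
    names = [r.name for r in records]
    for r in records:
        if r.rtype == "CNAME" and r.name == "@":
            problems.append("the root (@) cannot be a CNAME")
        elif r.rtype == "CNAME" and names.count(r.name) > 1:
            problems.append(f"{r.name}: a CNAME cannot coexist with other records")
    return problems


print(check_records(RECORDS))  # prints []
```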

9. Git repository on Gitlab

Next, we need to create a private git repository to store and version the source files of our Angular project.

9.1. Register or log in to Gitlab.

9.2. Click "New project".

9.3. Choose the project path and name.

Set "Visibility Level" to "Private" and click "Create project".

9.4. Add your public SSH key in the settings.

If you do not have a key yet, you need to create one. How to create a private/public key pair on Windows is described, for example, here.

In the top menu, click your avatar and select "Settings" from the drop-down menu.

In the menu on the left, select "SSH Keys".

Paste the contents of the public key file into the "Key" field. On Windows, it is usually located at C:\Users\YourUsername\.ssh\id_rsa.pub.

In the "Title" field, enter any name for the key.

9.5. Clone the repository to your local computer.

For this, git (Git for Windows on Windows) must be installed on your computer.

On the command line, go to the local folder with your projects, for example C:\Projects.

Run the command:

git clone git@gitlab.com:YourLogin/my-awesome-project.git MyAwesomeProject

The repository address "git@gitlab.com:YourLogin/my-awesome-project.git" is shown on the project's main page on the Gitlab website. "MyAwesomeProject" is the name of the local project folder, which will be created automatically.

We have just cloned an empty repository to the local computer, and we now have a project folder in which we can start building the project.

10. Gitlab CI

Next, we configure Gitlab CI to build the project automatically, sync the built files with the Amazon S3 bucket and update the CloudFront distribution.
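Assume the CI job uses aws s3 sync for the upload and a CloudFront invalidation for the update (the job definition itself comes in step 11.8). Then the deployment boils down to two AWS CLI calls. The Python sketch below builds them. deploy_commands and deploy are hypothetical helpers, and dry_run defaults to True, so nothing is executed unless you ask for it.

```python
import os
import subprocess
from typing import List, Mapping


def deploy_commands(bucket: str, distribution_id: str,
                    build_dir: str = "dist") -> List[List[str]]:
    """Return the AWS CLI calls that publish build_dir and refresh the CDN."""
    return [
        # Upload new and changed files; --delete removes objects no longer in build_dir.
        ["aws", "s3", "sync", build_dir, f"s3://{bucket}", "--delete"],
        # Invalidate every cached path so viewers get the new index.html.
        ["aws", "cloudfront", "create-invalidation",
         "--distribution-id", distribution_id, "--paths", "/*"],
    ]


def deploy(bucket: str, env: Mapping[str, str] = os.environ,
           dry_run: bool = True) -> List[List[str]]:
    """Run (or, by default, only return) the deployment commands."""
    commands = deploy_commands(bucket, env["AWS_DISTRIBUTION_ID"])
    if not dry_run:
        for cmd in commands:
            # The AWS CLI reads AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY from the
            # process environment, where Gitlab CI places the secret variables.
            subprocess.run(cmd, check=True)
    return commands


for cmd in deploy("www.yourdomain.com", env={"AWS_DISTRIBUTION_ID": "EXAMPLEID"}):
    print(" ".join(cmd))
```

Passing the arguments as a list means no shell is involved, so "/*" reaches the AWS CLI literally instead of being expanded as a glob.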

10.1. Go to your project at https://gitlab.com.

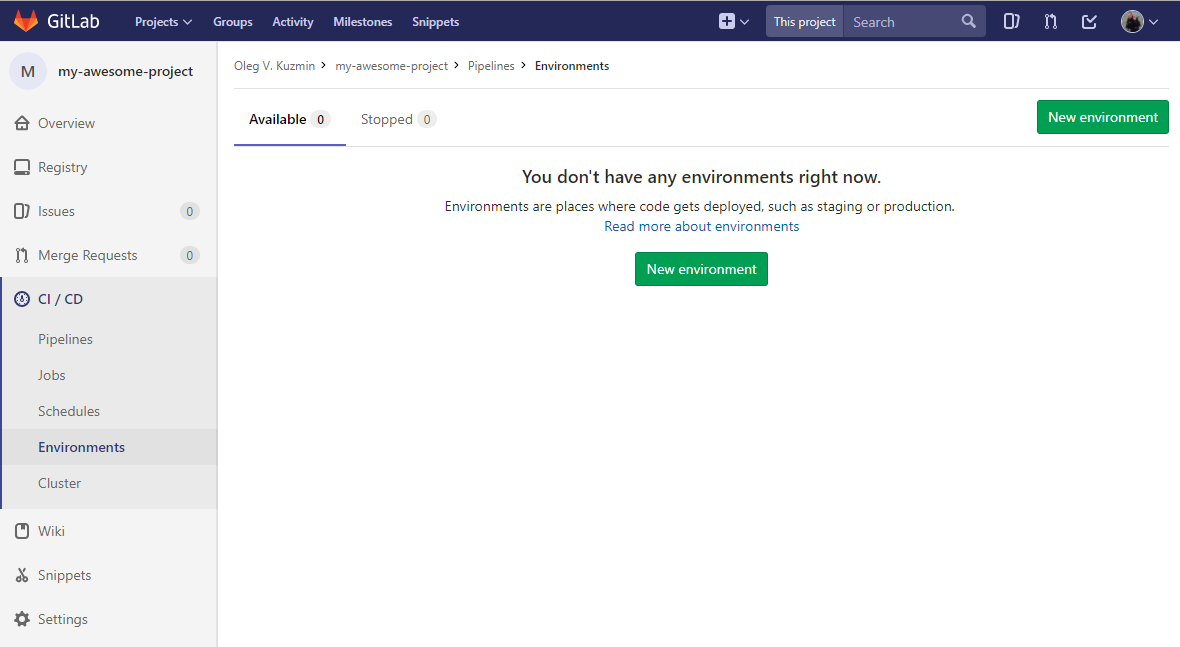

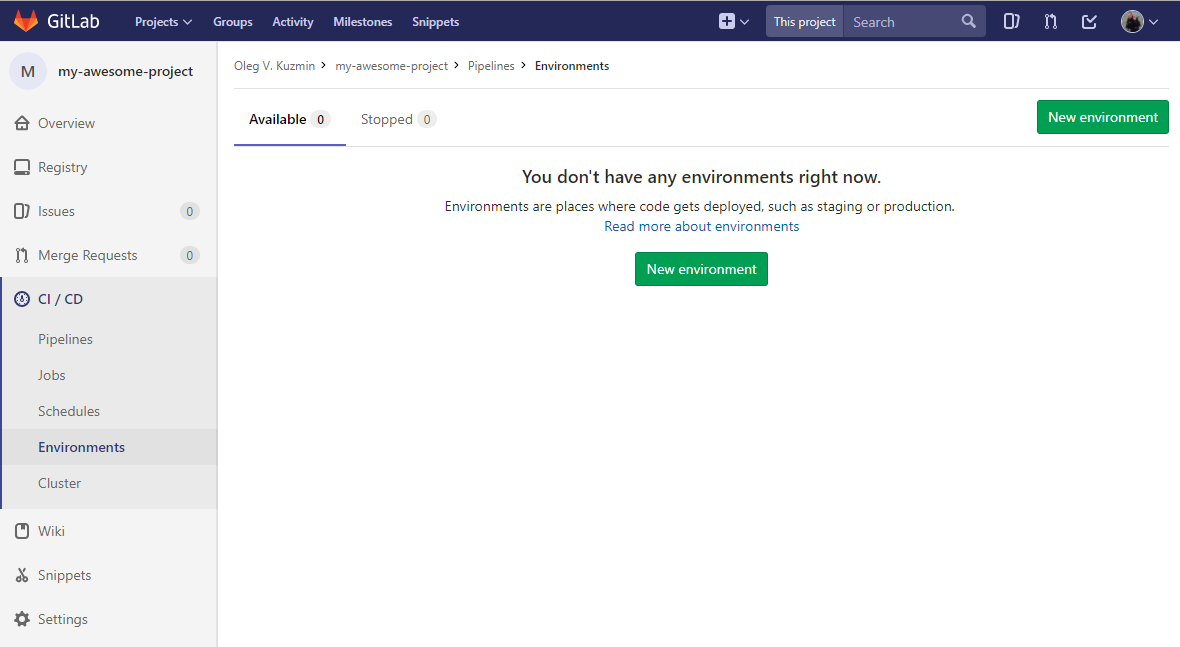

10.2. In the menu on the left, select "CI / CD" > "Environments".

Click "New environment".

10.3. In the "Name" field, enter "prod".

In the "External URL" field, enter your domain, including https:// and www, for example https://www.yourdomain.com. Click "Save".

10.4. In the menu on the left, select "Settings" > "CI / CD".

10.5. Next to "Secret variables", click "Expand".

In the "Key" field, enter "AWS_ACCESS_KEY_ID".

In the "Value" field, enter your Access key ID, obtained in step 3.8.

Leave the asterisk in the "Environment scope" field.

Click "Add new variable".

Add another variable, "AWS_SECRET_ACCESS_KEY".

Repeat the steps, this time entering your Secret access key, obtained in step 3.8, in the "Value" field.

Add one more variable, "AWS_DISTRIBUTION_ID".

Repeat the steps, this time entering your distribution ID, obtained in step 6.3, in the "Value" field.

This time, enter "prod" in the "Environment scope" field.

The result should look like the screenshot:

This completes the environment and CI variable settings; everything else goes directly into the project's .gitlab-ci.yml file.
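Because AWS_DISTRIBUTION_ID is scoped to the prod environment, a job running outside that environment will not receive it. A deploy script can check its variables up front and stop with a clear message instead of an obscure AWS CLI error. A minimal Python sketch of that check, where missing_variables is a hypothetical helper:

```python
import os
from typing import List, Mapping, Sequence

# The three secret variables configured in step 10.5.
REQUIRED_VARIABLES = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_DISTRIBUTION_ID")


def missing_variables(env: Mapping[str, str] = os.environ,
                      required: Sequence[str] = REQUIRED_VARIABLES) -> List[str]:
    """Return the names of required variables that are unset or empty, in order."""
    return [name for name in required if not env.get(name)]


# Simulates a job outside the "prod" scope, where AWS_DISTRIBUTION_ID is not injected.
print(missing_variables({"AWS_ACCESS_KEY_ID": "x", "AWS_SECRET_ACCESS_KEY": "y"}))
# prints ['AWS_DISTRIBUTION_ID']
```

A script would call missing_variables() before any AWS call and abort if the returned list is not empty.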

11. Angular project

It is time to create our Angular project.

11.1. On the command line, go to the project directory, where we cloned the empty repository (see step 9.5).

In our example, this is C:\Projects\MyAwesomeProject.

Run the following commands:

Replace yourdomain.com with the name of your project (it does not have to match the domain name).

We have installed Angular CLI globally, and the project directory now contains the files to start working with.

11.2. Create a .gitignore file:

11.3. Edit the .angular-cli.json file.

In the "apps" section, find the "assets" key and change its value to:

This way, all files and directories located in src/assets end up in the root of our build.

In "styles", replace "styles.scss" with "styles/styles.scss".

11.4. Create the src/styles directory and move the src/styles.scss file there.

Create a src/styles/_variables.scss file with the following contents:

At the beginning of the src/styles/styles.scss file, insert the line:

Later, the build script will update the $static-url variable in the src/styles/_variables.scss file.

This lets us specify paths to images and fonts in styles through this variable.
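The build script's update of $static-url can be pictured as a targeted text replacement. The Python sketch below assumes _variables.scss declares the variable on one line as a quoted string, for example $static-url: "";, without flags such as !default. set_static_url is a hypothetical helper, and the real script may do this differently (for instance, with sed).

```python
import re

# One-line declaration such as: $static-url: "https://static.yourdomain.com";
_STATIC_URL_RE = re.compile(r"^(\$static-url\s*:\s*)(['\"])(.*?)\2(\s*;)", re.MULTILINE)


def set_static_url(scss: str, url: str) -> str:
    """Return scss with the $static-url value replaced by url, keeping its quotes."""
    # A function replacement avoids backslash escapes in url being interpreted.
    new_scss, count = _STATIC_URL_RE.subn(
        lambda m: m.group(1) + m.group(2) + url + m.group(2) + m.group(4), scss)
    if count == 0:
        raise ValueError("no $static-url declaration found")
    return new_scss


print(set_static_url('$static-url: "";\n', "https://static.yourdomain.com"), end="")
# prints $static-url: "https://static.yourdomain.com";
```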

11.5. Add a src/assets/favicon.ico file. What is a site without an icon?

11.6. Create a src/assets/robots.txt file:

11.7. Create a src/assets/sitemap.xml file:

11.8. Create a .gitlab-ci.yml file:

Let's see what we have here.

The script uses the Docker image "node:8.9".

A single build stage, "deploy", is declared.

The "node_modules" directory is cached.

Before the build script runs, @angular/cli is installed globally, and then all project dependencies are installed.

Next, the python-dev and unzip packages are installed, and the AWS CLI is downloaded, unpacked and installed.

A single job named "deploy_prod" is declared. It runs in the "prod" environment, at the "deploy" stage, and only on the "master" branch.

The job produces artifacts: the entire contents of the "dist" directory (the result of the project build).

The job runs as follows:

11.9. Run the following command in the project directory:

That's it! If you have completed all the steps in this guide correctly, all that remains is to wait for Gitlab to finish the job. The starter site will then be live at https://www.yourdomain.com.

Congratulations!

So we have just set up a convenient working environment for building an Angular site, with automatic builds and delivery via CDN. We have also set up a backend server, SSL certificates, DNS records and domain mail.

What did I not cover in this article, and what else could be done?


What we get by the end of this walkthrough:

- A starter Angular site.

- Version control (git).

- Automatic build and publication of the frontend to an Amazon S3 bucket.

- Frontend distribution via CDN (Amazon CloudFront).

- A free SSL certificate from Amazon with automatic renewal (so the site works over HTTPS).

- Free domain mail from Yandex and a convenient interface for managing DNS records (and more).

- A bash script that configures the backend server automatically.

- A backend server running Debian 9 (nginx + PHP 7.1-FPM).

- A free SSL certificate from Let's Encrypt with automatic renewal (so the root domain and backend services work over HTTPS).

What we need for this:

- A domain name with access to the registrar's control panel.

- A Gitlab account.

- An AWS (Amazon Web Services) account.

- A Yandex account.

- A VDS (Virtual Dedicated Server) running Debian 9.

A few words about this choice of tools

I spent a lot of time looking for suitable services based on a number of criteria. Firstly, this is the price - I would like the service to be either free or not very expensive. Secondly - reliability. It is also good if the service takes on most of the responsibilities, eliminating the need to install and configure something. For example, why set up your own git server, use some kind of third-party CI (Continuous Integration) service, if Gitlab provides all these services out of the box, as well as an unlimited number of private repositories, organizations, and coworkers? Why set up domain mail on your own server, if you can provide it to Yandex? About the CDN - I just did not find anything cheaper than Amazon CloudFront. Amazon S3 file storage is also not expensive (and why store a lot of files on VDS, if it is expensive?).

So let's go!

1. DNS hosting and Yandex mail

To begin, we will tie your domain to Yandex mail. We take this step primarily to configure domain mail. We will need to confirm domain ownership in order to receive the Amazon Amazon certificate, and for this we will need to receive an email at webmaster@yourdomain.com.

1.1. We are registered / logged in in Yandex .

1.2. Go to the domain mail Yandex and add your domain (menu item "Connect domain").

Here I want to immediately note that Yandex has got “traffic rules 2.0” or “Yandex.Connect”. However, I can’t decide if the Yandex.Connect interface is

1.3. We confirm ownership of the domain.

The easiest way for us is to delegate a domain to Yandex. To do this, install the following NS servers for your domain in the registrar's control panel:

dns1.yandex.net

dns2.yandex.net

Attention! This step should be performed strictly after the domain is connected to the mail for the domain (clause 1.2).

1.4. We are waiting up to 72 hours (as lucky).

Verification of domain ownership should pass automatically. After that, on the page of the traffic rules in the list of "My domains" you should see a green message indicating that "the domain is connected and delegated to Yandex".

Now we can connect mail for the domain. You do not need to configure MX records for a domain, because we delegated the domain to the NS NS servers.

At this stage, we have already received domain mail with the ability to add up to 1000 mailboxes and with the correct DNS mail records.

1.5. We create the first mailbox johndoe@yourdomain.com.

1.6. In the top menu, click on the link "Migrate to Connect."

We carry out the transfer of the domain in Yandex.Connect, specify the name of the organization. After that, the https://connect.yandex.ru/portal/home page becomes available.

1.7. Go to the "Admin". Here we have various settings available.

The most interesting thing for us is the “DNS Management” menu item. We return to it later. Now we need to add a mailbox alias called webmaster@yourdomain.com. We will need this box to receive a letter requesting confirmation of domain ownership from Amazon.

1.8. Go to the menu item "Organizational structure" and select the only (so far) user that was automatically created when the first mailbox was added (item 1.5).

1.9. Click the ellipsis in the upper right corner of the user card, in the drop-down menu, select the item “Manage aliases”.

In the window that appears, click the button "Add new".

Enter the "webmaster" and click the "Add" button.

Now we have an alias of the mailbox webmaster@yourdomain.com and we are ready to accept letters to the domain mail.

2. Amazon Web Services Account

To follow the steps in this guide, you need an AWS root user account.

If you do not have an account yet, then you are incredibly lucky and you can use the free AWS level. For this you need to go through the registration procedure . During the registration process, they will ask you for the credit card details, from where they will withdraw (and will not return!) $ 1 to verify solvency. You will also need to specify your phone number, which will be called by a robot from America.

In general, the registration process is quite simple and in my opinion does not require a detailed description.

3. Amazon Security Credentials

To automatically exchange data with AWS services, we will use the aws cli console utility (AWS Command Line Interface). The utility is authorized using the Access key ID and Secret access key pairs. Create them.

3.1. Go to the AWS console.

3.2. In the top menu on the right, click on your login. In the drop-down menu, select the item "My Security Credentials".

3.3. A warning window may appear here.

You can ignore it and click the "Continue to Security Credentials" button.

3.4. In the menu on the left, select the item "Users". Click the button "Add user".

3.5. In the field "User name" we write the user name. For example, "cli-manager".

In the item “Access type” put a tick “Programmatic access”.

Click the button "Next: Permissions".

3.6. In the next paragraph, select “Attach existing policies directly”. Select the checkbox "AdministratorAccess".

Click the "Next: Review" button.

3.7. In the next paragraph, click the button "Create user".

3.8. In the last paragraph, we will see the newly created user and his data.

Attention! Immediately write down the Access key ID and Secret access key (you can see it by clicking “show”) and / or download the .csv file with user data (by clicking the Download .csv button). You will never see the secret access key anywhere else.

4. Amazon SSL Certificate

Now we need to get an SSL certificate for our domain.

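4.1. In the AWS console, select Services from the top menu. Make sure the region selected in the top right corner is "US East (N. Virginia)": CloudFront can only use Certificate Manager certificates issued in that region.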

4.2. In the search for services, type "Certificate manager".

4.3. Click the button “Request a certificate”.

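4.4. In the "Domain name" field, enter "*.yourdomain.com".

Note the asterisk and the dot before the domain name. This gives us a wildcard certificate that covers every first-level subdomain (www.yourdomain.com, static.yourdomain.com and so on). It does not cover the bare yourdomain.com, but we do not need that here: the root domain will be served by our own server with a Let's Encrypt certificate. Click the "Review and request" button.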
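The rule behind the asterisk can be sketched as a small bash function. It illustrates the usual wildcard-certificate convention, in which "*" replaces exactly one leftmost label. It is not an AWS tool, and cert_covers_host is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: does a certificate name such as "*.yourdomain.com" cover a host?
# A leading "*." stands for exactly one DNS label, so the wildcard covers
# www.yourdomain.com but neither yourdomain.com nor a.b.yourdomain.com.
# cert_covers_host is a hypothetical helper for illustration only.
cert_covers_host() {
  local cert_name="${1,,}" host="${2,,}"   # DNS names are case-insensitive
  if [[ "$cert_name" != '*.'* ]]; then
    [[ "$host" == "$cert_name" ]]          # plain name: exact match only
    return
  fi
  local suffix="${cert_name#\*}"           # "*.yourdomain.com" -> ".yourdomain.com"
  [[ "$host" == *"$suffix" ]] || return 1  # host must end with the suffix
  local label="${host%"$suffix"}"          # the part that "*" stands for
  [[ -n "$label" && "$label" != *.* ]]     # exactly one non-empty label
}
```

So *.yourdomain.com is enough for www.yourdomain.com and static.yourdomain.com, which are the only names CloudFront will serve.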

4.5. In the next step, click the "Confirm and request" button.

4.6. In the next step, click the "Continue" button.

Here we will see the certificate just requested and its status: “Pending validation”.

4.7. Now open your Yandex mail.

Log in to the domain mailbox you created in step 1.5 of this guide (johndoe@yourdomain.com). You should have received an email from Amazon asking you to confirm ownership of the domain.

4.8. Follow the link in the email.

A new browser tab opens with a domain ownership confirmation page.

Click the "I Approve" button.

The certificate should now be validated successfully.

Returning to the Certificate manager we will see our certificate and its status: “Issued”.

5. Amazon S3 Bucket

Create an Amazon S3 bucket in which static frontend files will be stored.

5.1. In the AWS console, select Services from the top menu.

5.2. In the search for services we type "S3".

5.3. Click the "Create bucket" button.

In the “Bucket name” field, enter the host name (including www). It must exactly match your domain name. For example: www.yourdomain.com.

In the "Region" field, select "US East (N. Virginia)". First, this helps avoid possible problems with redirects to the wrong objects (see the documentation article). Second, it is the most popular region, and its prices are among the lowest. Third, the bucket's region does not really matter to us, because the CDN will distribute the content and clients will not access S3 files directly.

Click "Next" several times, leaving all the fields as they are.

A new bucket named www.yourdomain.com appears in your list of buckets.

5.4. Edit the bucket properties.

Click the empty area next to your bucket's name. A panel will open on the right side of the window.

5.5. Select the item "Properties".

5.6. Click on "Static website hosting".

Immediately copy the "Endpoint" URL (listed at the top of the window). You will need it for further settings.

Select the first item “Use this bucket to host a website”.

In the field "Index document" we write "index.html".

Click the "Save" button.
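The Endpoint shown here follows a fixed pattern, and two addresses later in this guide are derived from it: the CORS origin in step 5.7 and the CloudFront Origin Domain Name in step 6.3. Below is a sketch of both derivations. It assumes the bucket lives in us-east-1, because other regions may use a slightly different endpoint form, so trust the value the console shows. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: derive the addresses used in steps 5.7 and 6.3 from the bucket name.
# Assumes us-east-1, whose website endpoints look like
# <bucket>.s3-website-us-east-1.amazonaws.com.
# Both function names are hypothetical.
website_endpoint_url() {
  local bucket="$1"
  printf 'http://%s.s3-website-us-east-1.amazonaws.com\n' "$bucket"
}

# CloudFront's "Origin Domain Name" takes the same host without "http://".
cloudfront_origin_domain() {
  local url
  url="$(website_endpoint_url "$1")"
  printf '%s\n' "${url#http://}"
}
```

The website endpoint is used instead of the REST endpoint (www.yourdomain.com.s3.amazonaws.com) because only the website endpoint applies the "Index document" setting configured above.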

5.7. Select the "Permissions" tab.

Here we need to add http://www.yourdomain.com.s3-website-us-east-1.amazonaws.com and https://*.yourdomain.com to the list of origins allowed to make cross-origin requests.

Press the "CORS configuration" button.

We will not go into the details of the XML format here. For a closer look, see the documentation. We just need to paste the following into the text field:

<?xml version="1.0" encoding="UTF-8"?>
<CORSConfiguration xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
    <CORSRule>
        <AllowedOrigin>http://www.yourdomain.com.s3-website-us-east-1.amazonaws.com</AllowedOrigin>
        <AllowedMethod>GET</AllowedMethod>
        <AllowedMethod>HEAD</AllowedMethod>
        <AllowedHeader>Content-*</AllowedHeader>
        <AllowedHeader>Host</AllowedHeader>
        <AllowedHeader>Origin</AllowedHeader>
    </CORSRule>
    <CORSRule>
        <AllowedOrigin>https://*.yourdomain.com</AllowedOrigin>
        <AllowedMethod>GET</AllowedMethod>
        <AllowedMethod>HEAD</AllowedMethod>
        <AllowedHeader>Content-*</AllowedHeader>
        <AllowedHeader>Host</AllowedHeader>
        <AllowedHeader>Origin</AllowedHeader>
    </CORSRule>
</CORSConfiguration>

Replace the AllowedOrigin values with your own. The first address is the Static website endpoint you copied in step 5.6 of this guide.

Click the "Save" button.
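To check which pages will be allowed to make cross-origin requests, we can approximate in bash how AllowedOrigin values are matched against a request's Origin header. This is a sketch under the assumption that S3 treats the single "*" it allows in AllowedOrigin as "any run of characters". cors_origin_allowed is a hypothetical helper, not part of the AWS tooling.

```bash
#!/usr/bin/env bash
# Sketch: approximate CORS origin matching against a list of AllowedOrigin
# values. Assumption: "*" behaves like a shell glob (any run of characters).
# cors_origin_allowed is a hypothetical helper, not an AWS API.
# Usage: cors_origin_allowed <origin> <allowed-origin>...
cors_origin_allowed() {
  local origin="$1"; shift
  local allowed
  for allowed in "$@"; do
    # Leaving $allowed unquoted on the right of == makes "*" a wildcard.
    if [[ "$origin" == $allowed ]]; then
      return 0
    fi
  done
  return 1
}
```

With the two rules above, https://www.yourdomain.com and https://static.yourdomain.com are allowed, while plain http://www.yourdomain.com is not, since the scheme is part of the origin.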

6. Amazon CloudFront Distribution

Now we need to distribute the files from the newly created bucket through the CDN.

6.1. In the AWS console, select Services from the top menu.

6.2. In the search for services, we type "CloudFront".

6.3. Click the button "Create Distribution".

Select the delivery method "Web", click the corresponding button "Get Started".

A very large form will open (I won't include a screenshot of it).

Let's go through the fields we need and leave the rest as they are. In any case, we can change all of these settings later.

Origin Settings

Origin Domain Name: be careful here! Do not pick the "www.yourdomain.com.s3.amazonaws.com" domain offered in the drop-down for this field. Instead, enter the Static website endpoint you copied in step 5.6 of this guide, without "http://" at the beginning.

Default Cache Behavior Settings

Viewer Protocol Policy : select the item "Redirect HTTP to HTTPS".

Allowed HTTP Methods : select "GET, HEAD, OPTIONS".

Cached HTTP Methods: check "OPTIONS".

Cache Based on Selected Request Headers: select "Whitelist". In the "Whitelist Headers" list that appears, select "Origin" and click the "Add" button.

Object Caching : Select the item "Customize".

Minimum TTL : write the value "300".

Compress Objects Automatically : select “Yes”.

Distribution Settings

Alternate Domain Names (CNAMEs): in the text box, enter "www.yourdomain.com" and "static.yourdomain.com", one per line. static.yourdomain.com will give access to exactly the same content as www.yourdomain.com. We will use it to load static files, which reduces the number of requests to the main domain.

SSL Certificate: select "Custom SSL Certificate". In the drop-down list below, select the previously obtained SSL certificate "*.yourdomain.com".

Default Root Object : enter “index.html” (without a slash at the beginning).

Click the button "Create Distribution".

The distribution is created. It appears in the list with the status "In Progress". After a while (usually up to 10 minutes), its status changes to "Enabled".

Immediately copy the ID and Domain Name of our distribution, we will need them for further settings.

6.4. Set up error pages.

Click on the distribution ID in the list. Go to the tab "Error Pages".

Click the "Create Custom Error Response" button.

In the "HTTP Error Code" select "403: Forbidden".

“Customize Error Response” - select “Yes”.

In the "Response Page Path" enter "/index.html".

In the "HTTP Response Code" select "200: OK".

Repeat the same steps for error 404.

This way, requests for paths that do not exist or are forbidden are answered with index.html, and the URL is then handled by the Angular Router.
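The effect of these two custom error responses can be imitated locally against the dist directory, which helps when reasoning about what the browser receives for a deep link. Below is a sketch that assumes the request path is already URL-decoded. resolve_request is a hypothetical helper that only prints which file would be served. After the rewrite, the status is always 200.

```bash
#!/usr/bin/env bash
# Sketch: mimic the custom error responses from step 6.4 locally.
# Prints the path of the file that would be returned for a request path.
# resolve_request is a hypothetical helper for illustration only.
# Usage: resolve_request <dist-dir> <request-path>
resolve_request() {
  local root="$1" path="${2%%\?*}"   # drop any query string
  if [[ "$path" == "/" ]]; then
    path="/index.html"               # "Default Root Object" from step 6.3
  fi
  if [[ -f "$root$path" ]]; then
    printf '%s\n' "$path"            # existing object: served as is
  else
    printf '%s\n' "/index.html"      # 403/404 rewritten to /index.html, 200 OK
  fi
}
```

For example, /users/42 is not an object in the bucket, so the visitor receives index.html, and the Angular Router then renders the matching route.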

7. Backend server

It's time to set up a backend server.

Even if your site is completely static and makes no API calls to a server, a VDS will not hurt. The record for the root domain has to be of type A (and/or AAAA if you have IPv6), that is, it must point to an IP address, because DNS does not allow a CNAME record at the zone apex. In my opinion, the easiest and cheapest way to get a permanent IP address on the Internet is to rent a VDS. Besides, we can host various services at this IP address: an API, databases, a real-time messaging service, and anything else. Some DNS hosting providers let you use an ALIAS record instead of an A record, in which you can enter a domain name rather than an IP address. For example, with Amazon Route 53 you can set up the root domain record as an alias to another S3 bucket that redirects to your CloudFront distribution.

In any case, the choice is yours. I prefer to rent a VDS, especially since prices are quite affordable nowadays. For example, Ayhor Hosting offers a VDS (1 CPU / 512 MB RAM / 10 GB HDD) for only 1080 rubles per year. This is the cheapest plan, and it is quite enough to start with, although I recommend choosing a VDS with an SSD.

Next, I will describe how to configure a VDS (or a dedicated server) with root access running Debian 9.

In my work I use several VDS of the same type and do not use any control panels such as ISPManager, so I automated the server setup with a simple bash script. Let's do the same and create a few files. Be careful! Line endings in these files must be Unix-style (LF), not Windows-style (CRLF).
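If you edit these files on Windows, it is easy to end up with CRLF line endings, which break bash scripts in particular. Below is a minimal sketch for detecting and stripping carriage returns before copying the files to the server. has_crlf and to_lf are hypothetical helper names. The dos2unix utility does the same job if it is installed.

```bash
#!/usr/bin/env bash
# Sketch: detect and remove Windows (CRLF) line endings.
# has_crlf and to_lf are hypothetical helper names.

# Succeeds if the file contains at least one carriage return.
has_crlf() {
  grep -q $'\r' "$1"
}

# Strips the carriage return at the end of every line, in place.
# $'...' passes a literal CR to sed, so this does not depend on sed escapes.
to_lf() {
  sed -i $'s/\r$//' "$1"
}
```

For example: for f in *.conf setup.sh; do has_crlf "$f" && to_lf "$f"; done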

nginx.conf:

user www-data;
worker_processes 1;

error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
    use epoll;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;

    sendfile on;
    tcp_nopush on;
    client_header_timeout 30;
    client_body_timeout 30;
    reset_timedout_connection on;
    keepalive_timeout 30;
    client_max_body_size 32m;
    client_body_buffer_size 128k;
    server_tokens off;

    gzip on;
    gzip_vary on;
    gzip_disable "msie6";
    gzip_proxied any;
    gzip_min_length 1024;
    gzip_comp_level 5;
    gzip_types
        application/atom+xml application/javascript application/json
        application/ld+json application/manifest+json application/octet-stream
        application/rss+xml application/vnd.geo+json application/vnd.ms-fontobject
        application/x-font-ttf application/x-web-app-manifest+json
        application/xhtml+xml application/xml font/opentype image/bmp
        image/svg+xml image/x-icon text/cache-manifest text/css text/plain
        text/vcard text/vnd.rim.location.xloc text/vtt text/x-component
        text/x-cross-domain-policy;

    expires max;

    include /etc/nginx/conf.d/*.conf;
    include /etc/nginx/sites-enabled/*;
}

This is the main nginx configuration file. The main things we do here are enable gzip compression and include the site configs, which will be located in the /etc/nginx/sites-enabled directory.

ssl.conf:

ssi on;
ssl_certificate "/etc/letsencrypt/live/{{DOMAIN}}/fullchain.pem";
ssl_certificate_key "/etc/letsencrypt/live/{{DOMAIN}}/privkey.pem";
ssl_trusted_certificate "/etc/letsencrypt/live/{{DOMAIN}}/chain.pem";
ssl_ciphers AES256+EECDH:AES256+EDH;
ssl_prefer_server_ciphers on;
ssl_protocols TLSv1.2;
ssl_ecdh_curve secp384r1;
ssl_dhparam /etc/nginx/dhparam.pem;
ssl_stapling on;
ssl_stapling_verify on;
ssl_session_timeout 24h;
ssl_session_cache shared:SSL:24m;
ssl_buffer_size 1400;

Nginx configuration template for SSL. Here we include the certificate issued by Let's Encrypt and set the SSL parameters that should give us an A+ rating in the Qualys SSL Server Test. HTTPS itself is enabled by the ssl parameter of the listen 443 directives in the site templates. The deprecated "ssl on;" directive is not used here, because it would also switch the plain-HTTP listen 80 socket of site.conf to HTTPS. Note the {{DOMAIN}} substrings: they are intentional, and the setup script will replace them with your domain.
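The substitution itself is a single sed call. Below is a sketch of it as a function that also refuses to produce a config with an unreplaced placeholder. render_template is a hypothetical name, and the check simply looks for any leftover "{{".

```bash
#!/usr/bin/env bash
# Sketch: the {{DOMAIN}} substitution that setup.sh performs, plus a check
# that no placeholder is left behind. render_template is hypothetical.
# Usage: render_template <template> <domain> <output>
render_template() {
  local template="$1" domain="$2" output="$3"
  # "@" is the sed delimiter, as in setup.sh, so "/" needs no escaping.
  sed "s@{{DOMAIN}}@${domain}@g" "$template" > "$output"
  if grep -qF '{{' "$output"; then
    echo "unreplaced placeholder in $output" >&2
    return 1
  fi
}
```

After rendering, running nginx -t on the server validates the resulting configuration before nginx is restarted.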

site.conf:

server {
    server_name {{DOMAIN}};
    listen 80;
    listen 443 ssl http2;
    # "error_log off" would write to a file named "off"; discard errors instead
    error_log /dev/null crit;
    access_log off;
    add_header X-Content-Type-Options nosniff;
    add_header X-XSS-Protection "1; mode=block";
    add_header Strict-Transport-Security "max-age=31536000;";
    add_header Cache-Control public;
    include /etc/nginx/ssl.conf;

    location /.well-known/acme-challenge/ {
        alias /var/www/.well-known/acme-challenge/;
    }

    location / {
        return 301 https://www.$host:443$request_uri;
    }
}

Nginx configuration template for the root domain. Here we include the SSL configuration and expose the directory in which Let's Encrypt places its domain validation files. All other requests to http://yourdomain.com or https://yourdomain.com are redirected to https://www.yourdomain.com.

api.conf:

server {
    server_name {{DOMAIN}};
    listen 80;
    # "error_log off" would write to a file named "off"; discard errors instead
    error_log /dev/null crit;
    access_log off;
    add_header X-Content-Type-Options nosniff;
    add_header X-XSS-Protection "1; mode=block";

    location /.well-known/acme-challenge/ {
        alias /var/www/.well-known/acme-challenge/;
    }

    location / {
        return 301 https://$host:443$request_uri;
    }
}

server {
    server_name {{DOMAIN}};
    listen 443 ssl http2;
    access_log /var/log/nginx/{{DOMAIN}}.access.log;
    error_log /var/log/nginx/{{DOMAIN}}.error.log;
    add_header X-Frame-Options DENY;
    add_header X-Content-Type-Options nosniff;
    add_header X-XSS-Protection "1; mode=block";
    add_header Strict-Transport-Security "max-age=31536000;";
    add_header Cache-Control public;
    add_header 'Access-Control-Allow-Origin' "$http_origin";
    add_header 'Access-Control-Allow-Credentials' 'true';
    add_header 'Access-Control-Allow-Methods' 'GET, HEAD, OPTIONS, POST, PUT, DELETE, PATCH';
    add_header 'Access-Control-Allow-Headers' 'Accept,Accept-Encoding,Accept-Language,Authorization,Cache-Control,Content-Length,Content-Type,Origin,If-Modified-Since,User-Agent,X-Requested-With';
    add_header 'Access-Control-Expose-Headers' 'X-Powered-By';
    set $root_path /var/www/{{DOMAIN}};
    root $root_path;
    disable_symlinks if_not_owner from=$root_path;
    charset utf-8;
    index index.php;
    autoindex off;
    include /etc/nginx/ssl.conf;

    if ($request_method ~* ^(OPTIONS|HEAD)$) {
        return 204;
    }

    location / {
        try_files $uri $uri/ /index.php?$query_string;
    }

    location ~ \.php$ {
        try_files $uri =404;
        fastcgi_pass unix:/var/run/php/php7.1-fpm.sock;
        fastcgi_index index.php;
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    }
}

Nginx configuration template for the api.yourdomain.com subdomain. Here we also include the SSL configuration and expose the directory in which Let's Encrypt places its domain validation files. All HTTP requests to api.yourdomain.com are redirected to https://api.yourdomain.com. PHP requests are passed to PHP 7.1-FPM.

setup.sh:

#!/usr/bin/env bash

time_start=$(date +%s)

########################
# EDIT THESE VARIABLES:
########################
DOMAIN=yourdomain.com
API_DOMAIN="api.$DOMAIN"
SUPERUSER="inpassor"
SUPERUSER_PASSWORD="12341234"
USER="johndoe"
USER_EMAIL="johndoe@yourdomain.com"
USER_PASSWORD="12341234"
########################

apt update
apt install -y dialog apt-utils
apt install -y sed wget gnupg nano htop curl zip unzip apt-transport-https lsb-release ca-certificates debian-archive-keyring certbot

# add users
adduser --quiet --disabled-password --gecos "" "$SUPERUSER"
echo "$SUPERUSER:$SUPERUSER_PASSWORD" | chpasswd
adduser --quiet --disabled-password --gecos "" "$USER"
echo "$USER:$USER_PASSWORD" | chpasswd
# give the superuser UID/GID 0 (assumes it was created as the first user, 1000:1000)
sed -i "s/$SUPERUSER:x:1000:1000/$SUPERUSER:x:0:0/" /etc/passwd

# add external repositories and GPG keys
echo "deb http://nginx.org/packages/debian/ stretch nginx" > /etc/apt/sources.list.d/nginx.list
wget --quiet -O - https://nginx.org/packages/keys/nginx_signing.key | apt-key add -
echo "deb https://packages.sury.org/php/ stretch main" > /etc/apt/sources.list.d/php.list
wget --quiet -O - https://packages.sury.org/php/apt.gpg | apt-key add -
apt update
apt upgrade -y
apt install -y nginx php7.1-cli php7.1-fpm php7.1-mbstring php7.1-curl php7.1-xml

# nginx setup
mkdir /var/www
mkdir "/var/www/$API_DOMAIN"
chown -R "$USER":www-data /var/www
rm /etc/nginx/conf.d/default.conf
cp nginx.conf /etc/nginx/nginx.conf
echo "" > /etc/nginx/ssl.conf
mkdir /etc/nginx/sites-available
mkdir /etc/nginx/sites-enabled
sed "s@{{DOMAIN}}@$DOMAIN@g" site.conf > "/etc/nginx/sites-available/$DOMAIN.conf"
ln -s "/etc/nginx/sites-available/$DOMAIN.conf" /etc/nginx/sites-enabled
sed "s@{{DOMAIN}}@$API_DOMAIN@g" api.conf > "/etc/nginx/sites-available/$API_DOMAIN.conf"
ln -s "/etc/nginx/sites-available/$API_DOMAIN.conf" /etc/nginx/sites-enabled

# ssl setup
service nginx restart
certbot register --agree-tos --email "$USER_EMAIL"
certbot certonly --webroot -w /var/www -d "$DOMAIN" -d "$API_DOMAIN" --rsa-key-size 4096
openssl dhparam -out /etc/nginx/dhparam.pem 4096
sed "s@{{DOMAIN}}@$DOMAIN@g" ssl.conf > /etc/nginx/ssl.conf
service nginx restart
chown -R nginx:"$USER" /var/log/nginx
chmod 664 /var/log/nginx/*

time_end=$(date +%s)
echo Execution time: $((time_end-time_start)) sec.

Bash script to configure the server automatically. Several variables are declared at the beginning of the file:

DOMAIN=yourdomain.com # your domain (without www at the beginning)
API_DOMAIN="api.$DOMAIN" # subdomain for the API backend (here api.yourdomain.com)
SUPERUSER="inpassor" # name of an additional user with root privileges
SUPERUSER_PASSWORD="12341234" # that user's password
USER="johndoe" # name of a regular user
USER_EMAIL="johndoe@yourdomain.com" # user email, used to register with Let's Encrypt
USER_PASSWORD="12341234" # the regular user's password

Of course, you need to reassign them.

Then the following actions are performed sequentially:

- The necessary packages are installed.

- External repositories and GPG keys are added to get more recent nginx and PHP packages.

- The nginx, php7.1-cli, php7.1-fpm, php7.1-mbstring, php7.1-curl and php7.1-xml packages are installed.

- nginx is configured.

- A Let's Encrypt SSL certificate is requested. Note that the dhparam.pem file is also generated at this step. This takes a very long time, so you can save the generated file and copy it to other servers later.
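Saving and reusing dhparam.pem can be wrapped in a small function. Below is a sketch that assumes you keep a copy of the file somewhere on the server. The cache path is your choice. ensure_dhparam is a hypothetical helper that runs the same openssl dhparam command as setup.sh only when no saved copy exists.

```bash
#!/usr/bin/env bash
# Sketch: reuse a saved dhparam.pem instead of regenerating it.
# ensure_dhparam is a hypothetical helper; the cache location is up to you.
# Usage: ensure_dhparam <target> <cached-copy>
ensure_dhparam() {
  local target="$1" cache="$2"
  if [[ -s "$cache" ]]; then
    cp "$cache" "$target"                # reuse the saved parameters
  else
    openssl dhparam -out "$target" 4096  # same command as in setup.sh
    cp "$target" "$cache"                # keep a copy for the next server
  fi
}
```

In setup.sh, the openssl dhparam line could then become ensure_dhparam /etc/nginx/dhparam.pem /root/dhparam.pem, where /root/dhparam.pem is only an example location.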

That's it: the server setup script is ready.

Now we can log in to our VDS over SSH (by its IP address) as root, copy the *.conf and setup.sh files, make setup.sh executable (chmod 700 setup.sh) and run it. Keep in mind that Let's Encrypt validates the domains over HTTP, so certbot can only succeed once the A records for yourdomain.com and api.yourdomain.com (step 8) point to this VDS.

Once the script has finished, the VDS is ready for use.

We now have nginx installed and listening for requests to http://yourdomain.com, https://yourdomain.com, http://api.yourdomain.com and https://api.yourdomain.com. HTTP requests are redirected to HTTPS, and requests to yourdomain.com are redirected to https://www.yourdomain.com. The API lives at https://api.yourdomain.com. For now it is empty. For it to respond to our requests, we need to create an index.php file in the /var/www/api.yourdomain.com/ directory.
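A placeholder index.php is enough to see the API answer. Below is a sketch that writes one from bash. The JSON it returns is purely illustrative, and create_api_stub is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: create a placeholder index.php that answers with a small JSON
# document. create_api_stub is a hypothetical helper; the response body is
# only an example.
# Usage: create_api_stub <api-root-directory>
create_api_stub() {
  local dir="$1"
  mkdir -p "$dir"
  # The quoted 'EOF' stops the shell from touching the PHP code below.
  cat > "$dir/index.php" <<'EOF'
<?php
header('Content-Type: application/json; charset=utf-8');
echo json_encode(['status' => 'ok', 'time' => time()]);
EOF
}
```

For example, run create_api_stub /var/www/api.yourdomain.com as johndoe. Once the DNS records are in place, curl https://api.yourdomain.com/ should return the JSON document.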

However, none of this is reachable yet, because we have not configured the DNS records.

8. DNS settings

Now that the CloudFront distribution and the backend server are set up, we are ready for the final step: the DNS records.

8.1. Return to Yandex.Connect.

8.2. Go to "Admin".

8.3. Select the menu item "DNS Management".

We need to add four new DNS records.

- Type A record: in the “Host” field, enter “@”, in the “Record Value” field - the IP address of our VDS, in the “TTL” field - “3600”.

- Record A: “Host” - “api”, “Record value” - VDS IP address, “TTL” - “3600”.

- CNAME record: “Host” - “www”, “Record value” - Domain Name of the CloudFront distribution (see step 6.3 of this guide), “TTL” - “3600”.

- CNAME record: “Host” - “static”, “Record value” - Domain Name of CloudFront distribution, “TTL” - “3600”.

We have just pointed yourdomain.com (without www) and api.yourdomain.com to our VDS, and www.yourdomain.com and static.yourdomain.com to the CloudFront distribution.
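For reference, here are the same four records in standard zone-file notation. The sketch below prints them from the VDS address and the CloudFront domain name, which is useful for double-checking what you entered in the DNS panel. print_dns_records is hypothetical. The trailing dot is zone-file syntax that the web form may not need, and the values in the usage line are placeholders (203.0.113.10 is a documentation address).

```bash
#!/usr/bin/env bash
# Sketch: print the DNS records from step 8.3 in zone-file notation.
# print_dns_records is a hypothetical helper for illustration only.
# Usage: print_dns_records <vds-ip> <cloudfront-domain-name>
print_dns_records() {
  local vds_ip="$1" cf_domain="$2" ttl=3600
  printf '%s %s IN A %s\n'      '@'      "$ttl" "$vds_ip"
  printf '%s %s IN A %s\n'      'api'    "$ttl" "$vds_ip"
  printf '%s %s IN CNAME %s.\n' 'www'    "$ttl" "$cf_domain"
  printf '%s %s IN CNAME %s.\n' 'static' "$ttl" "$cf_domain"
}
```

For example: print_dns_records 203.0.113.10 d111111abcdef8.cloudfront.net. Once the records have propagated, dig +short www.yourdomain.com should start with the CloudFront domain name, and dig +short yourdomain.com should return the VDS address.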

9. Gitlab repository

Next we need to create a private git repository to store and version the source files of our project on Angular.

9.1. Register or log in to Gitlab.

9.2. Press the "New project" button.

9.3. Next, choose the project path and name.

Set “Visibility Level” to “Private” and press the “Create project” button.

9.4. Add your public SSH key in the settings.

If you do not have a key yet, you need to create one. How to create a private/public key pair on Windows is described, for example, here.

In the top menu, click on your avatar, in the drop-down menu, select the item "Settings".

In the menu on the left, select the item "SSH Keys".

Paste the contents of the public key file into the “Key” field. On Windows, it is usually located at C:\Users\YourUsername\.ssh\id_rsa.pub.

In the “Title” field, enter a name for the key (any name will do).

9.5. Clone the repository to your local computer.

To do this, we must have git / git for Windows installed on our computer.

On the command line, go to the local folder with your projects, for example, C:\Projects.

Run the command:

git clone git@gitlab.com:YourLogin/my-awesome-project.git MyAwesomeProject

The repository address “git@gitlab.com:YourLogin/my-awesome-project.git” can be found on the project's main page on the Gitlab website. “MyAwesomeProject” is the name of the local project folder, which will be created automatically.

We have just cloned an empty repository to our local computer, and we now have a project folder in which we can start building our project.

10. Gitlab CI

We set up Gitlab CI to automatically build a project, synchronize the collected files with the Amazon S3 bucket and update the CloudFront distribution.

10.1. We go to our project on the site https://gitlab.com .

10.2. In the menu on the left, select "CI / CD" - "Environments".

Press the "New environment" button.

10.3. In the "Name" field, enter "prod".

In the “External URL” field, enter your domain, including https:// and www at the beginning. For example: https://www.yourdomain.com. Click the "Save" button.

10.4. In the menu on the left, select “Settings” - “CI / CD”.

10.5. Next to “Secret variables”, click the “Expand” button.

In the “Key” field, enter “AWS_ACCESS_KEY_ID”.

In the "Value" field, enter your Access key ID obtained in step 3.8 of this guide.

In the field «Environment scope» leave an asterisk.

Click the button “Add new variable”.

Add another variable “AWS_SECRET_ACCESS_KEY”.

Repeat the same actions and enter your Secret access key, obtained in step 3.8 of this guide, in the “Value” field.

And one more variable: “AWS_DISTRIBUTION_ID”.

Repeat the same actions and enter your Distribution ID, obtained in step 6.3 of this guide, in the “Value” field.

This time, enter “prod” in the “Environment scope” field.

It should look like the screenshot:

We have finished setting up the environment and the CI variables. Everything else will be written directly in our project, in the .gitlab-ci.yml file.
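Because AWS_DISTRIBUTION_ID is scoped to the prod environment, a job running in any other environment simply will not see it. Below is a sketch of a guard that makes such a job fail with a clear message instead of a confusing aws error. require_vars is a hypothetical helper, and it relies on bash indirect expansion.

```bash
#!/usr/bin/env bash
# Sketch: fail early when required CI variables are missing or empty.
# require_vars is a hypothetical helper for illustration only.
# Usage: require_vars NAME...
require_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is indirect expansion: the value of the variable named $name.
    if [[ -z "${!name:-}" ]]; then
      echo "CI variable $name is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

If this function were available to a job (for example, in a script the job sources), it could run require_vars AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_DISTRIBUTION_ID before any aws command.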

11. Starter site on Angular

It is time to create our Angular project.

11.1. On the command line, go to our project directory, where we cloned the empty repository (see step 9.5 of this guide).

In our example, this is C:\Projects\MyAwesomeProject.

Run the following commands:

npm i -g @angular/cli
ng new yourdomain.com --style=scss --skip-git=true --directory=.

Replace yourdomain.com with the name of your project (it does not have to match the domain name).

We have installed the Angular CLI globally, and the project directory now contains the files we can start working with.

11.2. Create a .gitignore file:

/.idea
/dist
/out-tsc
/node_modules
/e2e/*.js
/e2e/*.map
npm-debug.log
package-lock.json

11.3. Edit the .angular-cli.json file.

In the “apps” section, find the “assets” key and change its value to:

[
  {
    "glob": "**/*",
    "input": "./assets/",
    "output": "./"
  }
]

This way, all files and directories located in src/assets will end up in the root of our build.

In “styles”, replace “styles.scss” with “styles/styles.scss”.

11.4. Create the src/styles directory and move the src/styles.scss file into it.

Create a src/styles/_variables.scss file with the following contents:

$static-url: 'https://static.yourdomain.com';

At the beginning of the src/styles/styles.scss file, insert the line:

@import 'variables';

Later, the build script will update the $static-url variable in the src/styles/_variables.scss file.

This way, we can reference image and font paths in our styles through this variable.
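The update of $static-url is a single sed substitution. Below is a sketch of it as a function. set_static_url is a hypothetical name, GNU sed is assumed for -i, and the \$ escaping keeps the shell from expanding $static as an (empty) shell variable inside the double quotes.

```bash
#!/usr/bin/env bash
# Sketch: point $static-url in a SCSS variables file at another host.
# set_static_url is a hypothetical helper; GNU sed is assumed for -i.
# Usage: set_static_url <scss-file> <url>
set_static_url() {
  local file="$1" url="$2"
  # "\$" reaches sed as a literal "$", which a BRE treats as an ordinary
  # character when it is not at the end of the pattern.
  sed -i "s@\$static-url:.*;@\$static-url: '${url}';@" "$file"
}
```

For example, set_static_url src/styles/_variables.scss https://static.example.org points the styles at another static host. A rule such as background-image: url('#{$static-url}/images/logo.png') (the image path is hypothetical) would then follow it automatically.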

11.5. Add the file src/assets/favicon.ico. What's a site without an icon?

11.6. Create the file src/assets/robots.txt:

User-agent: *
Host: {{SERVER_URL}}
Sitemap: {{SERVER_URL}}/sitemap.xml

11.7. Create the file src/assets/sitemap.xml:

<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
    <url>
        <loc>{{SERVER_URL}}</loc>
    </url>
</urlset>

11.8. Create a .gitlab-ci.yml file:

image: node:8.9

stages:
  - deploy

cache:
  paths:
    - node_modules/

before_script:
  - npm install --unsafe-perm --silent --global @angular/cli
  - npm install --unsafe-perm --silent
  - apt update
  - apt install -y python-dev unzip
  - curl "https://s3.amazonaws.com/aws-cli/awscli-bundle.zip" -o "awscli-bundle.zip"
  - unzip awscli-bundle.zip
  - ./awscli-bundle/install -i /usr/local/aws -b /usr/local/bin/aws

deploy_prod:
  stage: deploy
  only:
    - master
  environment:
    name: prod
  artifacts:
    paths:
      - dist
  script:
    - DEPLOY_SERVER="${CI_ENVIRONMENT_URL/https:\/\/www./}"
    - STATIC_URL="https://static.$DEPLOY_SERVER"
    - sed -i "s@\$static-url:.*;@\$static-url:'$STATIC_URL';@g" src/styles/_variables.scss
    - ng build --prod --aot --build-optimizer --no-progress --extract-licenses=false
    - sed -i -e "s@\" href=\"@\" href=\"$STATIC_URL\/@g; s@href=\"styles@href=\"$STATIC_URL\/styles@g; s@src=\"@src=\"$STATIC_URL\/@g" dist/index.html
    - SED_PATTERN="s@{{STATIC_URL}}@$STATIC_URL@g; s@{{SERVER_URL}}@$CI_ENVIRONMENT_URL@g"
    - sed -i -e "$SED_PATTERN" dist/robots.txt
    - sed -i -e "$SED_PATTERN" dist/sitemap.xml
    - aws configure set aws_access_key_id $AWS_ACCESS_KEY_ID
    - aws configure set aws_secret_access_key $AWS_SECRET_ACCESS_KEY
    - aws s3 rm s3://www.$DEPLOY_SERVER/ --recursive --exclude "*" --include "*.css" --include "*.js" --include "*.json" --include "*.html" --include "*.xml" --include "*.txt"
    - aws s3 sync dist/ s3://www.$DEPLOY_SERVER/ --no-progress --delete --size-only --acl public-read
    - aws cloudfront create-invalidation --distribution-id $AWS_DISTRIBUTION_ID --paths "/*.html" "/*.xml" "/*.txt" "/*.json"

Let's see what we have here.

The "node:8.9" Docker image is used to run the job.

A single stage, “deploy”, is declared.

The "node_modules" directory is cached.

Before the job script runs, @angular/cli is installed globally, and then all project dependencies are installed.

Next, the python-dev and unzip packages are installed, and the aws cli utility is downloaded, unpacked and installed.

A single job named “deploy_prod” is declared. It runs in the “prod” environment, at the “deploy” stage, and only on the “master” branch.

The job produces artifacts: the entire contents of the “dist” directory (the result of the project build).

The job performs the following steps:

- The $DEPLOY_SERVER variable is set to the value of $CI_ENVIRONMENT_URL (the “External URL” from step 10.3 of this guide) with “https://www.” removed from the beginning (yourdomain.com).

- The $STATIC_URL variable is set to https://static.$DEPLOY_SERVER (https://static.yourdomain.com).

- The value of the "$static-url" variable in src/styles/_variables.scss is replaced with $STATIC_URL (https://static.yourdomain.com).

- The Angular project is built (production environment, AOT, build optimizer, no progress output, no license extraction).

- In index.html, the paths to the icon, styles and scripts are prefixed with $STATIC_URL.

- In the robots.txt and sitemap.xml files, the substring "{{SERVER_URL}}" is replaced with the value of $CI_ENVIRONMENT_URL (https://www.yourdomain.com), and the substring "{{STATIC_URL}}" with the value of $STATIC_URL (https://static.yourdomain.com).

- The access key ID and secret access key are configured so that the aws cli can connect to AWS.

- All *.css, *.js, *.json, *.html, *.xml and *.txt files are recursively deleted from the S3 bucket, so that they are always uploaded again even though the next step compares files by size only.

- The “dist” directory is synchronized with the S3 bucket (without progress output, deleting files from the bucket that are missing from “dist”, comparing files by size only, and making the files publicly readable).

- An invalidation (see the documentation) is created for the CloudFront distribution, covering the *.html, *.xml, *.txt and *.json files in the root folder.
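The text transformations of the deploy_prod job can be tried locally before pushing. The sketch below wraps them in bash functions. The function names are hypothetical, and the patterns follow the ones used in .gitlab-ci.yml.

```bash
#!/usr/bin/env bash
# Sketch: the text transformations of the deploy_prod job as local functions.
# Function names are hypothetical; the patterns follow .gitlab-ci.yml.

# "https://www.yourdomain.com" -> "yourdomain.com" (bash pattern substitution)
deploy_server() {
  local url="$1"
  printf '%s\n' "${url/https:\/\/www./}"
}

# "yourdomain.com" -> "https://static.yourdomain.com"
static_url() {
  printf 'https://static.%s\n' "$1"
}

# Prefix asset URLs in index.html (read from stdin) with the static URL.
# <base href="/"> is left alone because it is not preceded by '" '.
rewrite_asset_urls() {
  local static="$1"
  sed -e "s@\" href=\"@\" href=\"${static}/@g" \
      -e "s@href=\"styles@href=\"${static}/styles@g" \
      -e "s@src=\"@src=\"${static}/@g"
}

# Fill the {{STATIC_URL}} and {{SERVER_URL}} placeholders (robots.txt, sitemap.xml).
fill_placeholders() {
  local server="$1" static="$2"
  sed -e "s@{{STATIC_URL}}@${static}@g; s@{{SERVER_URL}}@${server}@g"
}
```

Piping a freshly built dist/index.html through rewrite_asset_urls https://static.yourdomain.com shows exactly which URLs will point to the static host.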

11.9. Run the following command in the project directory:

git add .
git commit -m "first commit"
git push

That's it! If we have completed all the steps in this guide correctly, all that remains is to wait for Gitlab to finish the job, and we will see our starter site at https://www.yourdomain.com.

Congratulations!

12. Conclusion

So, we have just deployed a convenient working environment for creating a site on Angular, set up an automatic build of the project and its delivery via CDN. We also set up a backend server, SSL certificates, DNS records and we have domain mail.

What did I leave out of this article, and what else can be done?

- If we need an API backend, we have to write it (in our example, in PHP). Most likely, the bash server setup script will also need to be extended, for example, to install a database.

- , robots.txt Host, S3 CloudFront.

- Gitlab CI — «prod». , , .

- Gitlab CI .

- , , Angular. ?

Source: https://habr.com/ru/post/342152/
