Create a backup of a large remote database using YII2

Recently, I had the opportunity to execute one small and interesting order. Its essence was to copy the database (its approximate size is almost 800Mb) to the test server and then perform a number of changes in the table structures.

It would seem that there is nothing easier to take, we perform a request of the form on a remote server:

mysqldump -uLogin -pPassword db_name > db_name.sql and then pick up the file, but in my case it turned out to be a database server without the ability to download a file from there, so I had to figure out and write my export mechanism from this remote database using YII2 tools, which I was not very familiar with at that time.

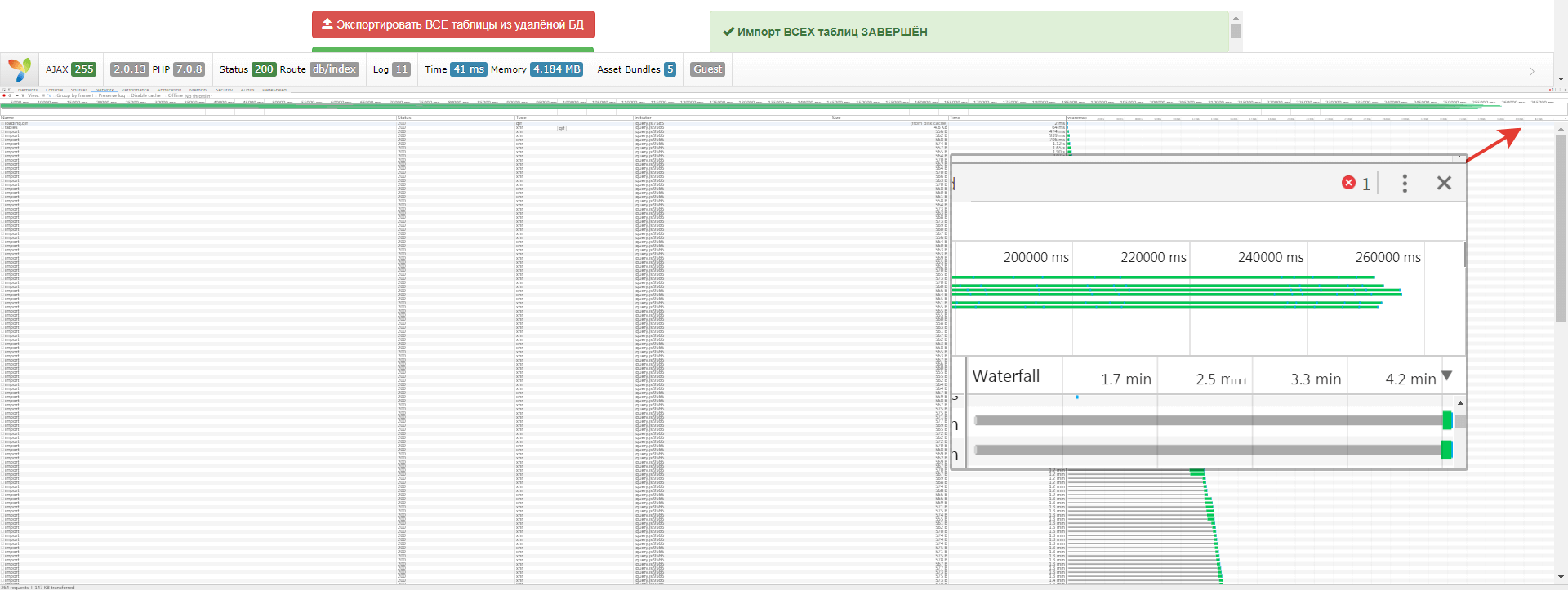

To begin with, I implemented a simple interface, described in views / db / index.php and presented in Figure 1.

Figure 1. - Appearance

For convenience, all actions were divided into 3 parts:

- Export DB;

- Import DB;

- Applying changes;

- Delete backup files.

On the right is a block for displaying information about the progress of operations.

Next, the jQuery scripts in the web / js / common.js file were described .

To determine the need for detailed logging of master data to the console, the constant DEBUG = false was globally defined.

The variables dbExportAll, dbImportAll have also been defined globally, in order to accurately determine the completion of the import and export process.

For convenience, the constants URL_TABLES, URL_EXPORT, URL_IMPORT, URL_REMOVE, URL_MIGRATE were initialized. From the values described in the file views / db / index.php: 59

For service purposes, the following JS functions were described:

- isTrue - checks whether all elements of the dbExportAll arrays, dbImportAll are true;

- startDB - precedes the start of processing button presses, and blocks all further actions on the page;

- finishFailDB - called if the action cannot be performed;

- finishSuccessDB - called in case of successful execution of all actions after clicking on our buttons;

- count is an analogue of the PHP function of the same name;

- logMess - output an array to the log;

- logMessStr - displays a string in the log.

Export

Finally, we come to the point why we started everything, to function,

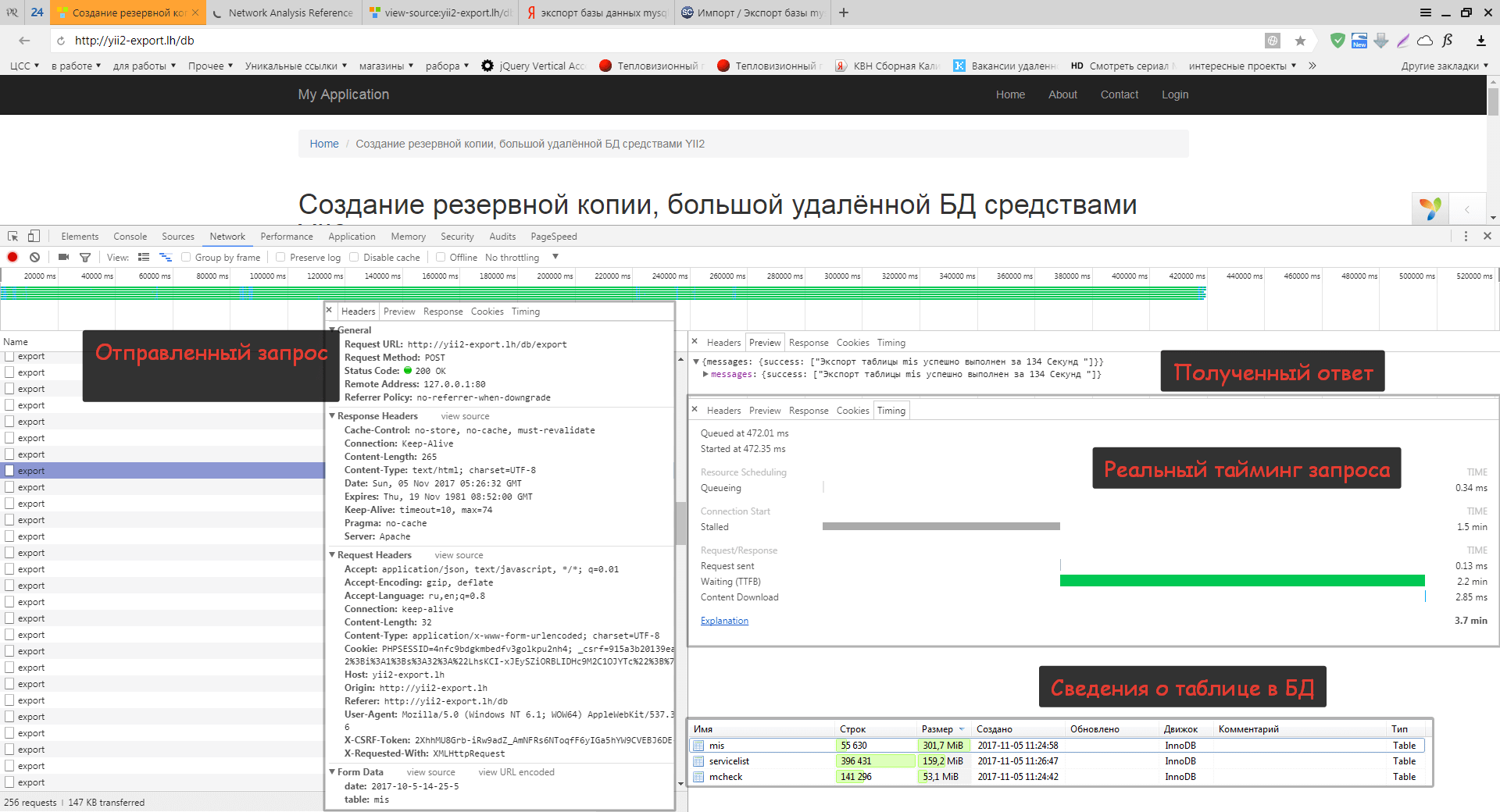

$('body').on('click', '#dbExportAll', function () {}); At the beginning of the work, we send a request to get a list of all the tables in the remote database and fill in the dbExportAll array with the received data. Next, we go through the entire list of tables and send requests to create backups of each table separately, so that the requests are not too long in time. All received requests are processed in

')

DbController()->actionExport() and

DbWrap::export($table, $date) .An example of the duration of requests is shown in Figure 2. From Figure 2 it can be seen that the export of such a large table requires at least 134 seconds (2.2 minutes), and this is without taking into account the time that the request was in queue for execution.

Figure 2. - Information about exporting the mis table

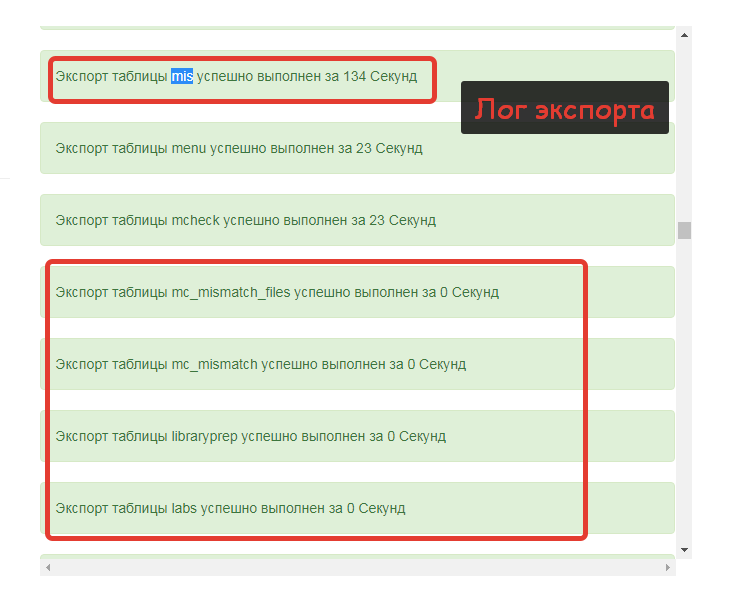

But from the log (Figure 3) it can be seen that the export of most tables takes very little time, less than a second.

Figure 3. - Export Log

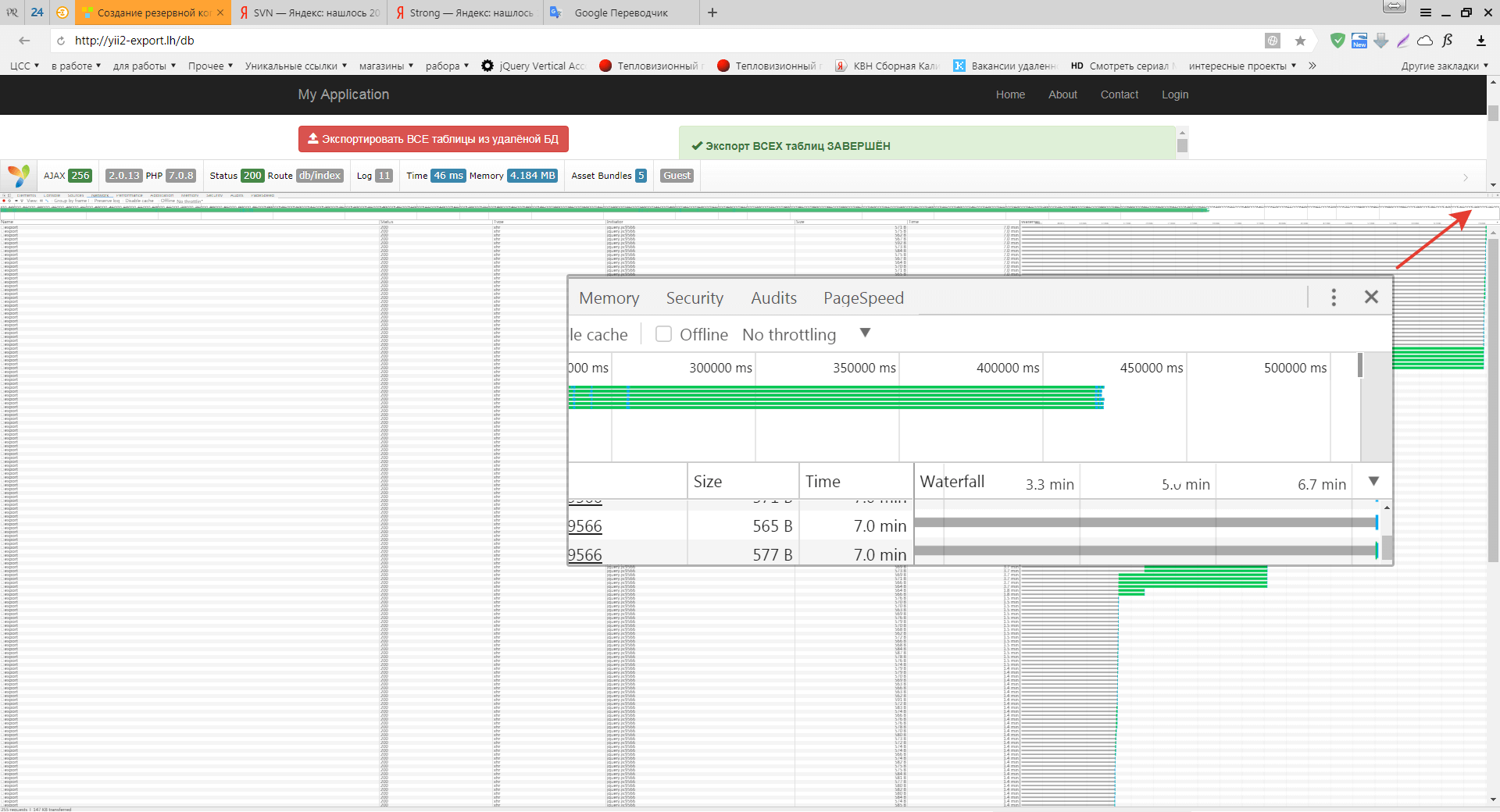

And from Figure 4 it can be seen that 7 minutes is enough for exporting all the tables.

Figure 4. - Export timing

Import

Before importing, you must perform an export if you have not previously exported or backup files have been deleted.

Import work also begins with the development of the JS script described in the function

$('body').on('click', '#dbImportAll', function () {}); Similar to export, a list of all tables is produced and the dbImportAll variable is initialized. Next, the tables are individually sent to import for which they are responsible

DbController()->actionImport() and

DbWrap::importAll($table) The logic of the script in this place is simple, we find the latest file and execute a command like “mysql -uroot -pPass db <file.sql” (if the password is empty, then send the command without specifying a password).

From Figure 5 it can be seen that it takes 5 minutes to complete the import.

Figure 5. - Import Timing

Apply changes

Applying changes starts with a JS function.

$('body').on('click', '#dbMigrate', function () {...}); in which we send a request to the DbController controller and its actionMigrate method, which in turn calls DbWrap :: migrate ($ mess). The migrate function describes a transaction within which a series of table change requests are sent.

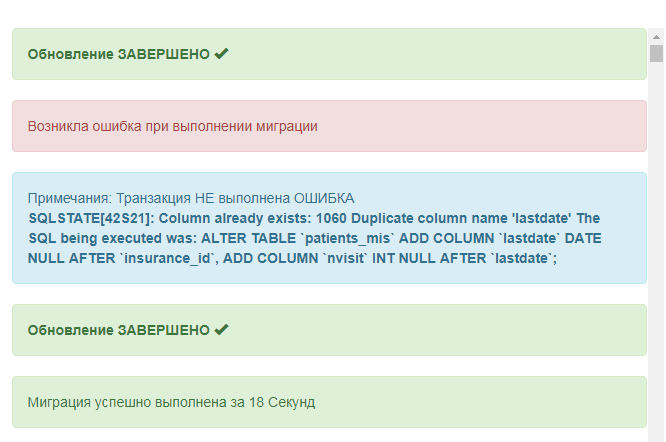

After practicing the application of changes, a corresponding message is displayed with a detailed description in case of an error (in our case, the error is related to the fact that the changes have already been applied before), or with the elapsed time to complete (see Figure 6).

Figure 6 - Migration

Deletion

And removal begins with working off the JS function.

$('body').on('click', '# dbRemove, function () {}); in which we send a request to the DbController controller and its actionRemove method, which in turn calls

DbWrap::remove() The m remove function describes the definition of the operating system family on which the PHP script is running and executes the rm –rf or RD / S / q commands depending on the OS.

Result

As a result, we were able to quickly apply the changes on the test server in 3 clicks, or lift the base in case of its fall. In addition, we have a stock for other long requests, the main principle of which is

Divide and rule

In fairness it should be noted that all measurements were made on a local server running on PHP7 with a relatively powerful processor and 1.5Gb of RAM. Therefore, the performance of this script is likely to be lower on weak servers and hosting.

I remembered in time, for those who want to get acquainted with the source in details, the sources are placed on Bitbucket

Literature:

- http://sitear.ru/material/mysql-backups

- http://www.yiiframework.com/doc-2.0/yii-db-connection.html

- http://php.net/manual/ru/function.fopen.php

- http://php.net/manual/ru/function.fwrite.php

- http://php.net/manual/ru/function.shell-exec.php

- https://dev.mysql.com/doc/refman/5.7/en/sql-mode.html

- http://sc-blog.ru/import-export-bazy-mysql-consol/

Source: https://habr.com/ru/post/341840/

All Articles