Looking ahead: how will storage infrastructure change?

One of the key trends in IT is that the information side of a system is growing in importance faster than the computational side. You do not need to cite authoritative analyst studies to check this claim; your own experience is enough. Companies have long understood that information is not just a tool that supports their day-to-day operations but an increasingly important asset, one that to a large extent drives both the company's market capitalization and its successful development in the future. Digital transformation, which affects every level of modern society from the individual user to the state, rests on the principle of "data-driven activity".

But as this global trend has played out in recent years, some fundamental difficulties have become apparent. The issue is not only the accelerating growth in the volume of stored information; modern technologies cope with that task quite successfully.

One problem, of course, is the rising cost of maintaining and expanding physical storage infrastructure. Customers naturally want to reduce or at least optimize these expenses, but in the end this is a problem that can be solved by purely financial means.

However, there are other, more serious problems. The first is that the speed of data exchange between the computing resources themselves (servers) and the storage devices has recently stopped keeping pace. Against the background of rapid growth in processor power and storage capacity, this exchange speed has become the bottleneck that limits performance gains in IT systems. The second pressing problem is that the variety of information sources and repositories keeps growing. This means that the computing infrastructure needs not only faster interaction between data processing units (processors) and storage devices, but also the flexibility to adapt to the specific hardware in use and the tasks being solved.

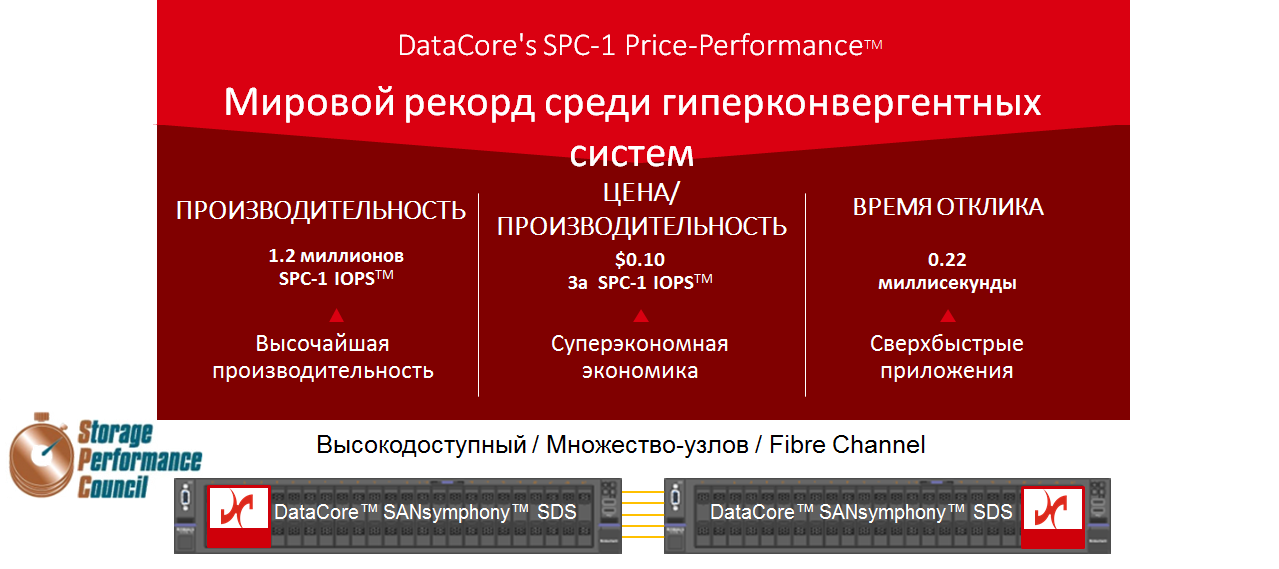

Modern architectures and data management technologies based on the concept of software-defined IT make it possible not only to reduce costs but also to use server systems more efficiently than an approach built around traditional single-vendor storage systems. This approach is used in DataCore Software solutions, which make it possible in practice to build hyperconverged virtual storage area networks (SAN) with record price/performance figures.

DataCore Software products create an intermediate software layer for data exchange that runs on standard-architecture servers, including virtual machines. Separating the control layer from the actual data stores makes it possible to manage heterogeneous storage media, including legacy ones, from one place. This greatly increases flexibility when expanding storage capacity, which can be done simply by purchasing individual disk drives.
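The idea of separating a control layer from heterogeneous data stores can be illustrated with a small sketch. This is not DataCore's API or implementation; the `Device` and `StoragePool` classes, their fields, and the simple first-fit placement policy are all hypothetical, chosen only to show how callers can work with one pool while devices of different types are added underneath it.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """Hypothetical physical device of any type (SSD, HDD, legacy array)."""
    name: str
    kind: str
    capacity_gb: int
    used_gb: int = 0

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.used_gb


@dataclass
class StoragePool:
    """Hypothetical control layer: callers see one pool, not single devices."""
    devices: list = field(default_factory=list)

    def add_device(self, device: Device) -> None:
        # Growing the pool is just registering one more device.
        self.devices.append(device)

    @property
    def free_gb(self) -> int:
        return sum(d.free_gb for d in self.devices)

    def allocate(self, size_gb: int) -> dict:
        """Reserve size_gb across devices (first-fit); return GB per device."""
        if size_gb > self.free_gb:
            raise ValueError("not enough free capacity in the pool")
        placement = {}
        remaining = size_gb
        for d in self.devices:
            if remaining == 0:
                break
            take = min(d.free_gb, remaining)
            if take:
                d.used_gb += take
                placement[d.name] = take
                remaining -= take
        return placement


pool = StoragePool()
pool.add_device(Device("ssd0", "ssd", 100))
pool.add_device(Device("legacy-array", "hdd", 500))
placement = pool.allocate(150)                # spans two different devices
pool.add_device(Device("hdd1", "hdd", 1000))  # capacity grows by one drive
```

In this sketch the caller asks the pool for 150 GB and never chooses a device; a real product would apply far richer placement, caching, and tiering policies than first-fit.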

One of the company's flagship products today is SANsymphony-V. This software removes many I/O bottlenecks and increases the number of virtual machines and workloads that can be consolidated on a single server in hyperconverged platforms (built on virtualization systems from different vendors: VMware, Microsoft, etc.). It runs on commercially available x86 servers and covers the entire infrastructure regardless of the type of storage (solid-state drives, hard drives, or cloud storage), providing automation, improved performance, and efficient resource allocation.

In September of this year, the company introduced MaxParallel for SQL Server, its first database optimization product, designed for ultra-fast transaction processing and real-time analytics. It eliminates resource contention in multi-core systems, which significantly improves the performance of Online Transaction Processing (OLTP) and Online Analytical Processing (OLAP) applications and ensures fast query processing during peak periods. Using it requires neither additional hardware nor changes to the application code.

Source: https://habr.com/ru/post/341782/
