Pediatric Bone Age Challenge. Deep learning and many, many bones

A competition to determine bone age. A participant's notes

On October 6, a very interesting competition organized by American radiologists from the Radiological Society of North America (RSNA) and the Radiology Informatics Committee (RIC) appeared on Volodya Iglovikov's radar, and he put out a call in the ODS.ai community.

The aim of the competition was to create an automatic system for determining bone age from X-rays of the hand. Bone age is used in pediatrics for a comprehensive assessment of children's physical development, and its deviation from chronological age helps to identify disorders in various body systems. I don't need much persuading when it comes to medical projects, but this competition had started in August, and joining it 8 days before the end looked like an adventure. Even to begin preprocessing the images, hand masks were required. Volodya made them in a few days, in excellent quality, and shared them with the others. How he coped so quickly with this difficult task, which included manual annotation, is a mystery, and he may write about it himself. With masks, the idea no longer looked hopeless. I decided to participate and eventually managed to carry out almost everything I had planned.


Task

Bone age is a conditional age that corresponds to the level of bone development in children and adolescents. The skeleton forms in several stages. In pediatrics, bone age is compared with chronological age, which makes it possible to notice endocrine and metabolic disorders in time.

Two methods are mainly used to determine bone age: Greulich and Pyle (GP) and Tanner, Whitehouse and Healy (TW), both developed in the second half of the 20th century. Both are based on an X-ray of the hand and wrist. Because the hand and wrist contain many growth zones and ossification centers, these radiographs make it possible to trace the appearance of the epiphyses (the end sections of the tubular bones), the stages of their development, and the fusion of the epiphyses with the metaphyses into a bony union.

The GP method is based on comparing radiographs with a reference atlas. The TW2 method consists of evaluating 20 specific bones, most of which belong to the wrist or to the joint zone of the metacarpal bones and proximal phalanges. The classical methods for determining bone age take a considerable amount of specialists' time and depend heavily on subjective assessments, which adversely affects diagnosis and subsequent therapy.

The first, and so far the best, automatic system for determining bone age is the commercial BoneXpert system, approved for use in Europe. BoneXpert uses the Active Appearance Model (AAM) computer vision algorithm to reconstruct the contours of 13 bones of the hand, then determines bone age by the GP or TW method from their shape, texture and intensity. The accuracy of this system is 0.65 years. Serious limitations of BoneXpert include its sensitivity to image quality and the fact that it does not use the bones of the wrist, which are especially important for determining the bone age of young children. A recent publication, “Fully Automated Deep Learning System for Bone Age Assessment”, described a machine learning system that showed an accuracy of 0.82–0.93 years using only radiographs.

The task of our competition was to create an automatic system that determines bone age from the X-ray alone.

Data

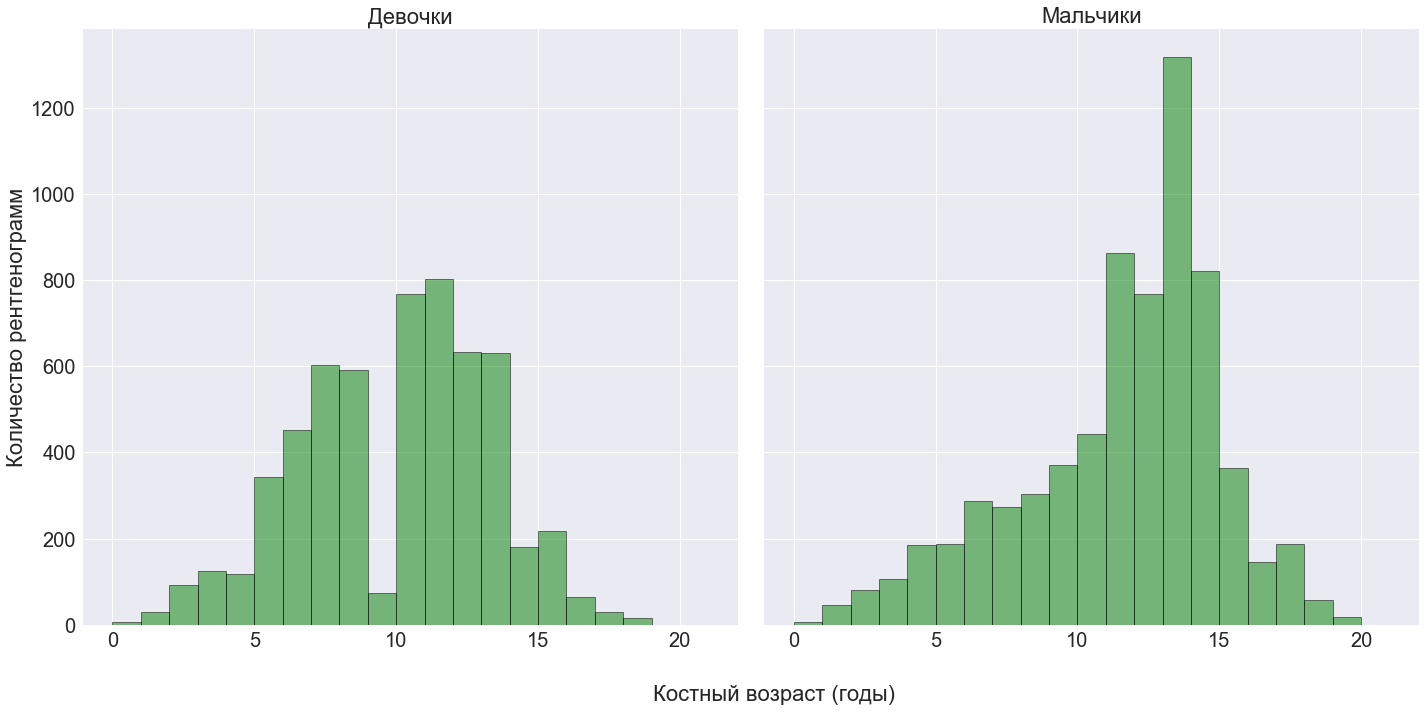

The organizers provided 12,611 training, 1,425 validation and 200 test images collected from three laboratories: Stanford University, the University of Colorado and the University of California. The training set contains 5,778 girls and 6,833 boys, aged from 1 to 228 months. The test set contains equal numbers of boys and girls.

The images were taken on different equipment at different times and vary widely in scale, hand size, orientation and contrast; many also contain labels, frames and other debris. They are monochrome PNG files with a typical size of 2044x1514 pixels. Gender was given for every image, and for the training images also the bone age in months, obtained by expert assessment.

Preprocessing

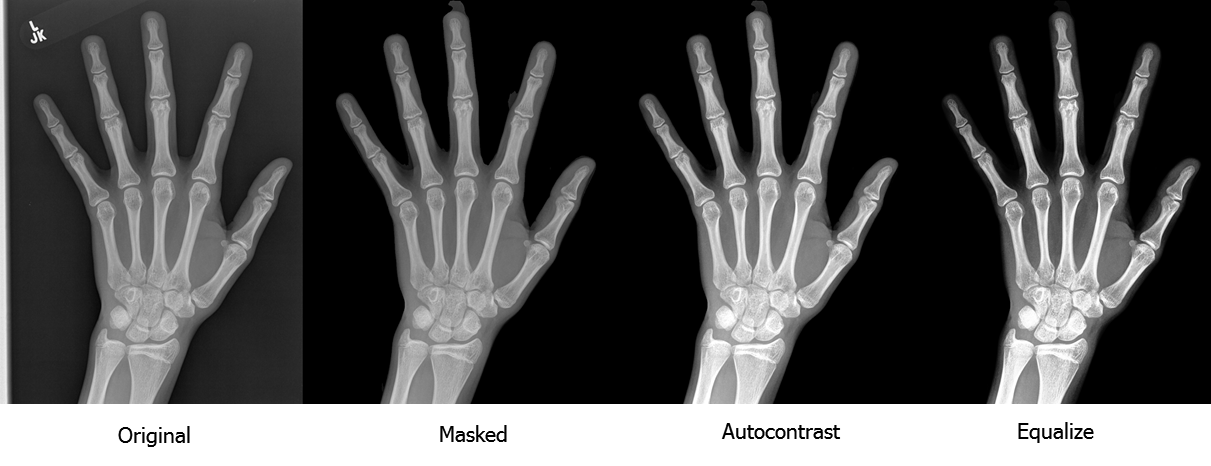

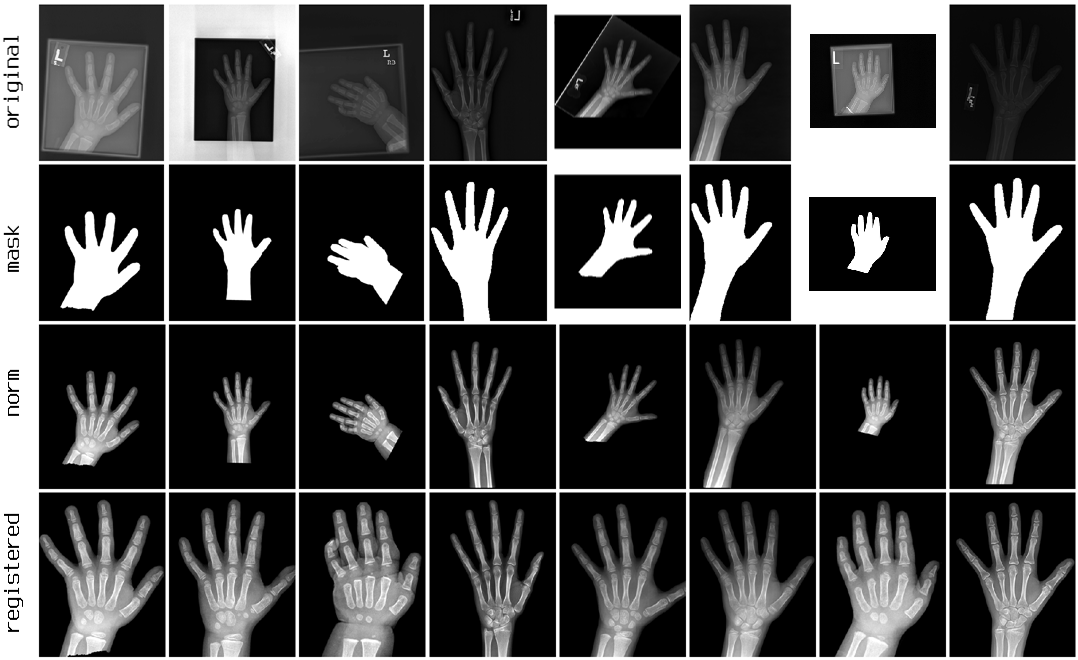

To normalize the contrast and remove debris, the background had to be zeroed, which was done using V. Iglovikov's masks. Two options were then considered: autocontrast and equalize from PIL.ImageOps. Histogram equalization was unsuitable because it overexposed light areas, so autocontrast was used.
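The two preprocessing steps can be illustrated with a small sketch. It is written in plain Python on nested lists so that it runs without dependencies. The function names `zero_background` and `autocontrast` are hypothetical, and `autocontrast` only mimics the idea of `PIL.ImageOps.autocontrast` with no cutoff (a linear stretch that maps the darkest value to 0 and the brightest to 255), not its exact implementation.

```python
def zero_background(pixels, mask):
    """Keep pixels where the hand mask is non-zero, set everything else to 0."""
    return [
        [p if m else 0 for p, m in zip(pixel_row, mask_row)]
        for pixel_row, mask_row in zip(pixels, mask)
    ]


def autocontrast(pixels):
    """Linearly stretch 8-bit intensities so that min -> 0 and max -> 255."""
    flat = [p for row in pixels for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in pixels]
    return [[(p - lo) * 255 // (hi - lo) for p in row] for row in pixels]


# Toy 2x2 "X-ray": the bright top-left pixel is debris outside the hand mask.
image = [[200, 50], [100, 150]]
hand_mask = [[0, 1], [1, 1]]

cleaned = autocontrast(zero_background(image, hand_mask))
print(cleaned)  # [[0, 85], [170, 255]]
```

In the toy example the debris pixel (200) no longer influences the stretch, because it is zeroed before the intensity range is measured.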

One could have stopped at this preprocessing and trained the model on images of different scales and orientations. It might even have worked. But the original methods focus on several compact zones: the metacarpal bones (Metacarpal), the proximal phalanges (Proximal Phalanx) and the wrist (Carpal). It was very tempting to train several different models on small areas at high resolution, but to localize these zones correctly, all the hands first had to be brought to the same size and position, i.e. registered. Thus the remaining task came down to two models:

- for registration of images

- to determine the age of the specified zones

Registration of images. Model number 1

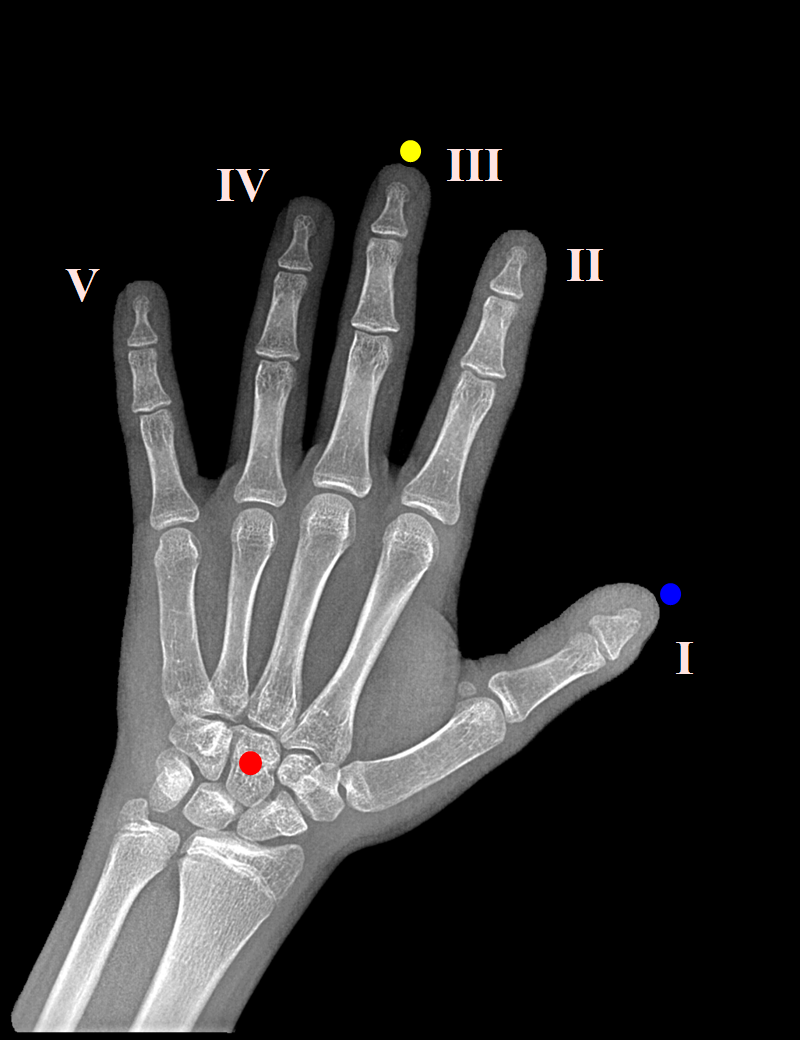

The key points were the tip of the middle finger, the center of the capitate bone in the wrist (Capitate) and the tip of the thumb. I labeled 800 images by hand. For some reason it turned out to be difficult to find working software for such a simple task as marking points, but in the end I found VGG Image Annotator (VIA), which suited perfectly. The images were brought to a size of 2080x1600 and downscaled 16-fold during training.

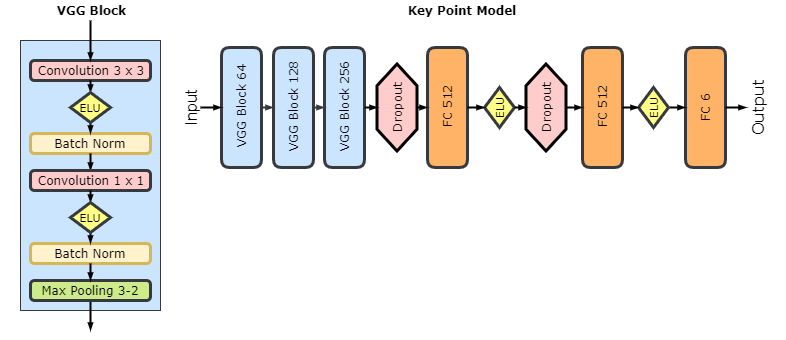

To determine the coordinates (x, y) of the key points, I used a VGG-like regression model with blocks of 64-128-256, two fully connected layers of 512 neurons, 6 outputs (3 x 2) and the MSE loss function. Each VGG block consists of two convolutional layers with 3x3 and 1x1 filters, batch normalization, ELU activation and MaxPooling 3-2. I usually use the SGD optimizer, but here Adam performed well, and time was running out. Why VGG and not ResNet or Inception? I don't know the reason, but in my tasks VGG often works a little better, and there was no time to select an architecture all over again. The model was trained with augmentation: rotation, shift and zoom. The localization error turned out to be about 3%, which is clearly not the limit, and after the contest I wanted to try other ways of registering medical images.

Once the key points are found, it remains to compute the affine transformation (scaling, rotation, shift and, in some cases, mirroring) that places the tip of the middle finger and the capitate bone on the same vertical line, 100 pixels from the top and 480 pixels from the bottom respectively, with the thumb on the right.
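The registration step can be sketched as follows. This is a minimal illustration, not the code used in the competition: the function names are hypothetical, the 2080x1600 size is assumed to be height x width, and the target vertical line is assumed to be the horizontal center of the image. Two point pairs fix the scale, rotation and shift; the thumb only decides whether a mirror flip is needed.

```python
def registration_matrix(tip, capitate, thumb,
                        height=2080, width=1600, top=100, bottom=480):
    """Return a 2x3 affine matrix [[a, b, tx], [c, d, ty]] for (x, y) points."""
    p_tip, p_cap, p_thumb = complex(*tip), complex(*capitate), complex(*thumb)
    cx = width / 2                           # assumed target vertical line
    q_tip = complex(cx, top)                 # finger tip: 100 px from the top
    q_cap = complex(cx, height - bottom)     # capitate: 480 px from the bottom

    # A rotation plus uniform scaling is multiplication by a complex number s.
    s = (q_cap - q_tip) / (p_cap - p_tip)
    t = q_tip - s * p_tip                    # shift that pins the finger tip
    matrix = [[s.real, -s.imag, t.real],
              [s.imag, s.real, t.imag]]

    # If the thumb lands left of the vertical line, mirror about x = cx.
    if (s * p_thumb + t).real < cx:
        a, b, tx = matrix[0]
        matrix[0] = [-a, -b, 2 * cx - tx]
    return matrix


def apply_affine(matrix, point):
    """Apply the 2x3 matrix to a single (x, y) point."""
    x, y = point
    (a, b, tx), (c, d, ty) = matrix
    return (a * x + b * y + tx, c * x + d * y + ty)


# Toy key points (x, y) of a hand whose thumb points to the left.
m = registration_matrix(tip=(800, 300), capitate=(800, 1300), thumb=(300, 900))
new_tip = apply_affine(m, (800, 300))
new_capitate = apply_affine(m, (800, 1300))
new_thumb = apply_affine(m, (300, 900))
```

The resulting matrix has the 2x3 layout that common image libraries accept for warping, so it could be passed to such a routine to resample the whole image.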

Main model

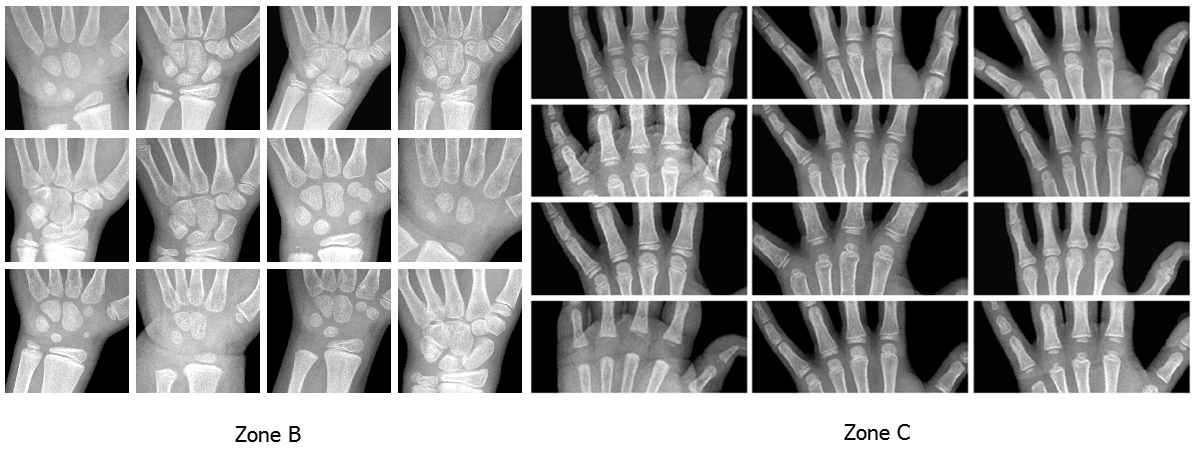

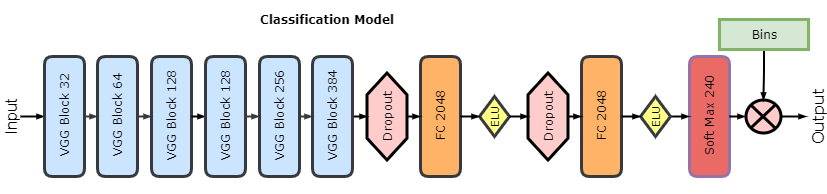

As you might guess, this is also a VGG regression model, but it works on large images and is deeper: blocks of 32-64-128-128-256-384, two fully connected layers of 2048 neurons and one output. The competition metric was Mean Absolute Distance, so the model accordingly used Mean Absolute Error (MAE) as its loss function. The input images are 2080x1600, but for this model I wrote a generator that takes a crop of a given size from a given zone, with augmentation and scaling. Three zones were selected:

A) the whole hand, crop 2000x1500, scale 1:4

B) wrist, crop 750x750, scale 1:2

C) metacarpals and proximal phalanges, crop 600x1400, scale 1:3
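To make the zone geometry concrete, here is a small arithmetic sketch. It assumes that the crop sizes are given as height x width, that a scale of 1:N means downscaling by a factor of N, and that fractional sizes are rounded; the dictionary layout and function names are hypothetical.

```python
# Crop size (height, width) in pixels and downscale factor for each zone.
ZONES = {
    "A": {"name": "whole hand", "crop": (2000, 1500), "scale": 4},
    "B": {"name": "wrist", "crop": (750, 750), "scale": 2},
    "C": {"name": "metacarpals and proximal phalanges",
          "crop": (600, 1400), "scale": 3},
}


def network_input_size(zone):
    """Size of the crop after downscaling, i.e. what the network sees."""
    height, width = zone["crop"]
    return (round(height / zone["scale"]), round(width / zone["scale"]))


def crop_area(zone):
    """Area of the crop in original-resolution pixels."""
    height, width = zone["crop"]
    return height * width


for key, zone in ZONES.items():
    ratio = crop_area(ZONES["A"]) / crop_area(zone)
    print(key, zone["name"], network_input_size(zone), f"A is {ratio:.1f}x larger")
```

Under these assumptions, zone A covers about 5.3 times the area of zone B and about 3.6 times the area of zone C, while the downscaled inputs end up at comparable sizes (500x375, 375x375 and 200x467).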

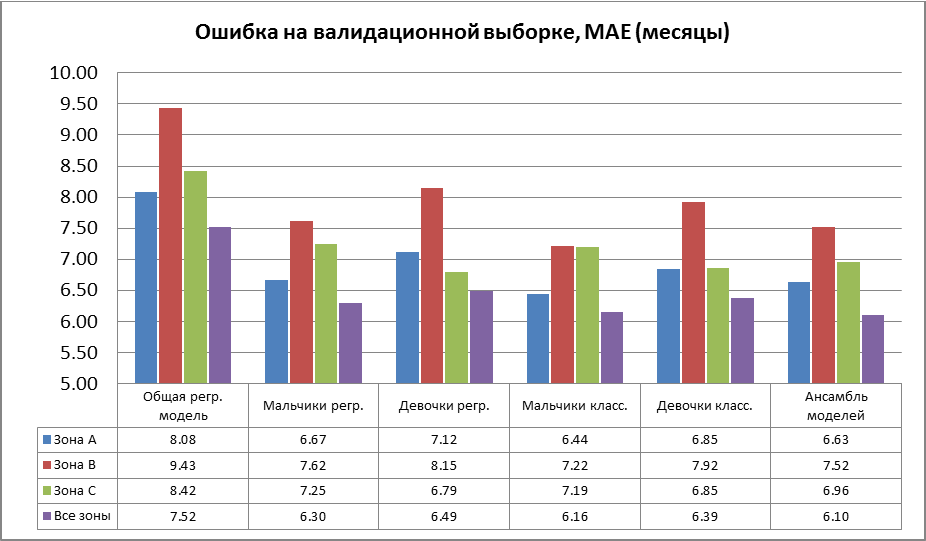

So three models were trained on different zones. There were 2 days left until the end of the competition, so I did not run a full cross-validation; the models were trained on 11,600 images and validated on 1,000. Thanks to augmentation and dropout, convergence was very smooth, with no signs of overfitting.

It turned out that the model trained on the wrist (zone B) is almost as good as the model for the whole hand (A), whose area is 5.3 times larger. The model on the metacarpal bones (C), on which I had not pinned much hope, showed an unexpected result. It turned out to be better than (B) and in some cases slightly better than (A), although the TW2 method does not give the metacarpal zone the greatest weight and its area is 3.6 times smaller than that of (A).

Toward the end of the competition, I remembered that the standard methods determine bone age with gender taken into account. Fine-tuning the models separately for boys and girls gave a significant improvement, about 1.4 months, and instead of three models there were now six.

After the official deadline, a misunderstanding helped me implement an idea I had not gotten to in time: classification instead of regression. The organizers set the end of the competition at “midnight October 15”. We at ODS.ai thought this meant the midnight between the 15th and the 16th, but on October 14 we learned that only a few hours were left, and we barely managed to submit our solutions. As you would expect with such a beautiful deadline, not everyone was so lucky. Several contestants were caught out and complained, the competition was extended by a day, and I got an extra day.

The classification was not entirely traditional. The classes were ages in months, but the output of the network was not the class probabilities but the expected age over all classes. I considered different quantization schemes, and the simplest one, 1 month per class for a total of 240 classes, performed best. In the penultimate layer I placed a softmax with 240 outputs, and the prediction was its dot product with the age vector (0, ..., 239, normalized to [-1, 1]). I trained the model with the same labels and the same loss function (MAE) as in the regression case.

Individually, the classification models only slightly outperformed the regression models, but they entered the linear ensemble with larger weights and markedly improved the overall result.
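The expectation head described above can be sketched in plain Python. This is an illustration under assumptions, not the original network code: the function names are hypothetical, and the conversion back to months assumes that the labels were normalized to [-1, 1] in the same way as the age vector.

```python
import math

N_CLASSES = 240  # one class per month of age

# Age vector 0..239 normalized to [-1, 1].
AGES = [2 * k / (N_CLASSES - 1) - 1 for k in range(N_CLASSES)]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_age(logits):
    """Dot product of class probabilities with the normalized age vector."""
    return sum(p * a for p, a in zip(softmax(logits), AGES))


def to_months(normalized_age):
    """Map a value from [-1, 1] back to the 0..239 month scale."""
    return (normalized_age + 1) * (N_CLASSES - 1) / 2


# A network that is undecided between all classes predicts the middle age.
uniform_months = to_months(expected_age([0.0] * N_CLASSES))

# A network that is confident about class 120 predicts about 120 months.
confident = [0.0] * N_CLASSES
confident[120] = 100.0
confident_months = to_months(expected_age(confident))
```

Because the output is a single number, it can be trained with the same MAE loss as a plain regression output, while the softmax lets the network spread its belief over neighboring months.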
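A linear ensemble of this kind is just a weighted sum of the per-model predictions. The sketch below is hypothetical: the text does not say how the weights were chosen, so a simple grid search over one weight on held-out data is assumed here, and all numbers are toy values.

```python
def blend(predictions, weights):
    """Weighted sum of per-model predictions (one list of ages per model)."""
    return [
        sum(w * p for w, p in zip(weights, per_image))
        for per_image in zip(*predictions)
    ]


def mae(predicted, target):
    """Mean absolute error between two equally long lists."""
    return sum(abs(p - t) for p, t in zip(predicted, target)) / len(target)


def best_two_model_weight(pred_a, pred_b, target, steps=20):
    """Grid-search the weight w of model A (model B gets 1 - w) by MAE."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(
        candidates,
        key=lambda w: mae(blend([pred_a, pred_b], [w, 1 - w]), target),
    )


# Toy validation data in months (not competition results).
regression_pred = [10.0, 20.0]
classification_pred = [14.0, 24.0]
true_age = [13.0, 23.0]

w_regression = best_two_model_weight(regression_pred, classification_pred, true_age)
ensemble = blend([regression_pred, classification_pred],
                 [w_regression, 1 - w_regression])
```

In this toy case the search gives the regression model a weight of 0.25 and the classification model 0.75, which reproduces the true ages exactly.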

Conclusion

The competition turned out to be wonderful, and the fastest-paced of all those I have taken part in. I am grateful to the organizers for a very interesting topic, to V. Iglovikov for drawing me into this and helping with the masks, and to A. Stromnov, A. Shvets, Yu. Kashnitsky and P. Nesterov for comments and corrections to the text.

I managed to do almost everything I had planned, except for boosting on features from the last layers. But that would be unlikely to improve the result significantly: it seems the limit set by the ambiguity of the image annotations has been reached, as is often the case in medical ML.

I hope that our results will benefit medicine. The bone age accuracy shown by ODS.ai participants on the test sample (A. Rakhlin - 4.97 months / 13th place, V. Iglovikov - 5.41 / 18, A. Shvets - 5.50 / 19) is significantly better than that of BoneXpert and “Fully Automated Deep Learning System for Bone Age Assessment”, with their 0.65 years (about 7.8 months) and 0.82–0.93 years (about 9.8–11.2 months) respectively. We look forward to the winners' solutions, which will be presented next month at the annual RSNA conference, to which we are also invited.

Update December 15th.

An article has been published on bioRxiv and arXiv. It adds a description of the segmentation and various statistics broken down by the chronological stages of skeletal development.

Source: https://habr.com/ru/post/341408/
