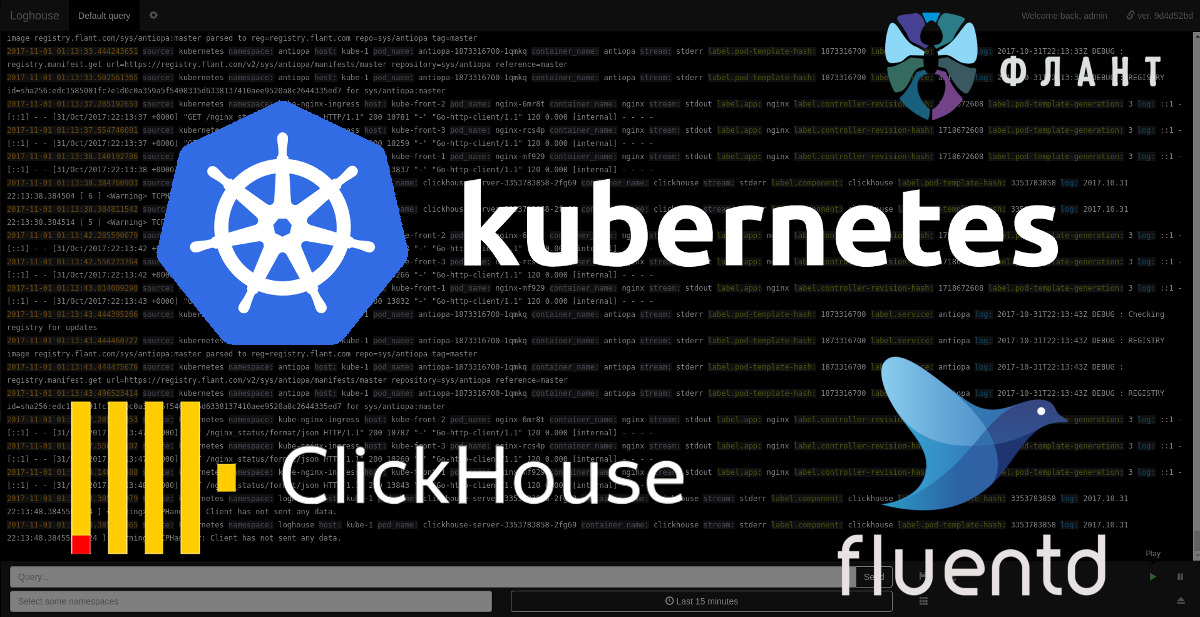

Introducing loghouse - an open source system for working with logs in Kubernetes

Serving many Kubernetes installations in projects of various scales, we ran into the problem of collecting and viewing logs from all containers in a cluster. After examining the existing solutions, we concluded that we needed a new one: economical in its use of resources and disk space, and offering a convenient interface for viewing logs in real time, with filtering by whatever criteria are needed.

This is how the loghouse project was born, and I am pleased to introduce its alpha version to DevOps engineers and system administrators who are familiar with these problems.


loghouse is built on ClickHouse, the excellent open source column-oriented database, for which special thanks go to our colleagues at Yandex. As you can easily guess, this is where the new project's name comes from. ClickHouse's orientation toward Big Data, together with its optimizations for performance and data storage, is exactly what the Kubernetes log collector we needed depends on.

Another important component of the current loghouse implementation is fluentd, an open source project hosted by the CNCF. It offers good performance (10 thousand records per second using 300 MB of memory) and handles collecting and processing logs and sending them to ClickHouse.

Finally, since the solution is focused on Kubernetes, it relies on Kubernetes' own mechanisms to integrate the various loghouse components into a single system that can be deployed in a cluster easily and quickly.

Features

- Efficient collection and storage of logs in Kubernetes. The efficiency of fluentd was covered above; as for ClickHouse, here are examples of the disk space taken by logs: 3.7 million records take 1.2 GB, 300 million take 13 GB, and 5.35 billion take 54 GB.

- JSON log support.

- A simple query language for selecting records whose keys match specific values or regular expressions, with multiple conditions combined via AND / OR.

- Ability to filter records by additional container metadata obtained from the Kubernetes API (namespace, labels, etc.).

- Simple deployment in Kubernetes using ready-made Dockerfile and Helm charts.
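The article does not spell out the query grammar, so the following is only a minimal sketch of how "key matches value or regex, joined by AND / OR" can be evaluated. The syntax (`key = value`, `key =~ regex`), the rule that AND binds tighter than OR, and the function names `parse_query` and `matches` are all assumptions for illustration, not loghouse's actual language or code.

```python
import re
from typing import Dict, List, Tuple

Condition = Tuple[str, str, str]  # (key, operator, value)


def parse_query(query: str) -> List[List[Condition]]:
    """Split a query into OR-groups, each a list of AND-ed conditions.

    Hypothetical syntax: `key = value` (exact match) and `key =~ regex`
    (regular-expression search), joined by AND / OR. Without parentheses,
    AND is assumed to bind tighter than OR. Values may not contain spaces.
    """
    groups: List[List[Condition]] = [[]]
    tokens = query.split()
    i = 0
    while i < len(tokens):
        if tokens[i] == "OR":
            groups.append([])  # start a new alternative
            i += 1
        elif tokens[i] == "AND":
            i += 1  # AND just continues the current group
        else:
            if i + 2 >= len(tokens):
                raise ValueError(f"incomplete condition at token {i}")
            key, op, value = tokens[i:i + 3]
            if op not in ("=", "=~"):
                raise ValueError(f"unsupported operator: {op}")
            groups[-1].append((key, op, value))
            i += 3
    return groups


def matches(record: Dict[str, str], groups: List[List[Condition]]) -> bool:
    """True if every condition of at least one OR-group holds for the record."""
    def check(cond: Condition) -> bool:
        key, op, value = cond
        field = record.get(key)
        if field is None:
            return False  # a missing key never matches
        if op == "=":
            return field == value
        return re.search(value, field) is not None

    return any(all(check(c) for c in group) for group in groups)
```

For example, `parse_query("level = error AND namespace =~ ^prod OR app = api")` selects error records from namespaces starting with `prod`, plus every record from the `api` app. Evaluating the query as an OR of AND-groups keeps the evaluator trivial while parentheses are not supported.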

How does it work?

The project's documentation (in Russian) explains the essence:

A pod with fluentd is installed on each node of the Kubernetes cluster to collect logs. Technically, this is done with a DaemonSet that has tolerations for all possible taints and is therefore scheduled on every node of the cluster. The log directories of the host system are mounted into the fluentd pods of this DaemonSet, where fluentd is “watching” them. For all Docker container logs, the kubernetes_metadata filter is applied, which collects additional information about the containers from the Kubernetes API. The data is then transformed by the record_modifier filter. After transformation, the records reach the fluentd output plugin, which calls the clickhouse-client console utility located in the fluentd container to write the data to ClickHouse.
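To make the data flow above more concrete, here is a minimal Python sketch of what happens to a single Docker log line: container coordinates are taken from the kubelet's `/var/log/containers/<pod>_<namespace>_<container>-<container_id>.log` file name, metadata is attached (a plain dict stands in for the Kubernetes API lookup done by kubernetes_metadata), the record is flattened (the record_modifier step), and rows are serialized in ClickHouse's JSONEachRow format. The column names (`label_names`, `json_names`, etc.) and the `logs` table are hypothetical; the real loghouse schema and plugin code may differ.

```python
import json
import re
from typing import Dict, Iterable, List, Optional, Tuple

# Kubelet names container log symlinks as
# /var/log/containers/<pod>_<namespace>_<container>-<container_id>.log
LOG_NAME_RE = re.compile(
    r"^(?P<pod>[^_]+)_(?P<namespace>[^_]+)_(?P<container>.+)"
    r"-(?P<container_id>[0-9a-f]{64})\.log$"
)


def kv_arrays(d: Dict[str, object]) -> Tuple[List[str], List[str]]:
    """Flatten a dict into parallel, key-sorted arrays of names and string values."""
    keys = sorted(d)
    return keys, [str(d[k]) for k in keys]


def to_row(path: str, line: str,
           labels: Dict[Tuple[str, str], Dict[str, str]]) -> Optional[dict]:
    """Turn one Docker json-file log line into a flat row (hypothetical columns)."""
    m = LOG_NAME_RE.match(path.rsplit("/", 1)[-1])
    if m is None:
        return None  # not a container log file
    entry = json.loads(line)  # {"log": ..., "stream": ..., "time": ...}
    message = entry["log"].rstrip("\n")
    row = {
        "timestamp": entry["time"],
        "namespace": m["namespace"],
        "pod": m["pod"],
        "container": m["container"],
        "stream": entry["stream"],
        "message": message,
    }
    # `labels` stands in for the metadata fetched from the Kubernetes API.
    row["label_names"], row["label_values"] = kv_arrays(
        labels.get((m["namespace"], m["pod"]), {}))
    # JSON log support: keep the parsed fields when the message is a JSON object.
    try:
        parsed = json.loads(message)
    except ValueError:
        parsed = None
    row["json_names"], row["json_values"] = kv_arrays(
        parsed if isinstance(parsed, dict) else {})
    return row


def to_json_each_row(rows: Iterable[dict]) -> str:
    """Serialize rows in ClickHouse's JSONEachRow format (one object per line)."""
    return "".join(json.dumps(row, separators=(",", ":")) + "\n" for row in rows)
```

Output of `to_json_each_row` could then be piped into something like `clickhouse-client --query "INSERT INTO logs FORMAT JSONEachRow"`, which mirrors the output plugin calling clickhouse-client. Storing labels and JSON fields as parallel name/value arrays is one common way to fit arbitrary keys into fixed ClickHouse columns.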

An important architectural note: at the moment, only writing to a single ClickHouse instance is supported. It runs as a Deployment, which by default is scheduled on an arbitrary K8s node; you can pin it to a specific node using nodeSelector and tolerations. In the future, we plan to support other installation options (with a ClickHouse instance on each node of the cluster, and as a ClickHouse cluster).
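Both placement decisions above (the fluentd DaemonSet tolerating every taint, and pinning ClickHouse with nodeSelector and tolerations) come down to the same scheduling rules. The sketch below is a simplified model of those rules, not the real scheduler: it only checks that the nodeSelector labels are present on the node and that every `NoSchedule`/`NoExecute` taint is tolerated, ignoring affinity, resources and `PreferNoSchedule` preferences. The label `loghouse-clickhouse` and taint `dedicated=logs` in the usage note are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Taint:
    key: str
    value: str = ""
    effect: str = "NoSchedule"  # NoSchedule, PreferNoSchedule or NoExecute


@dataclass
class Toleration:
    key: str = ""             # empty key with operator Exists matches every taint
    operator: str = "Equal"   # "Equal" or "Exists"
    value: str = ""
    effect: str = ""          # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Kubernetes-style matching of a single toleration against a single taint."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key == "" or tol.key == taint.key
    return tol.key == taint.key and tol.value == taint.value


def can_schedule(node_labels: Dict[str, str], node_taints: List[Taint],
                 node_selector: Dict[str, str],
                 tolerations: List[Toleration]) -> bool:
    """Simplified check: nodeSelector must match and hard taints must be tolerated."""
    if any(node_labels.get(k) != v for k, v in node_selector.items()):
        return False
    hard = [t for t in node_taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)
```

A single `Toleration(operator="Exists")` is what "tolerations for all possible taints" amounts to, so such a pod fits even on tainted master nodes. For ClickHouse, a nodeSelector such as `{"loghouse-clickhouse": "true"}` plus a toleration for a `dedicated=logs` taint would keep the Deployment on one dedicated node.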

Web interface

The user-facing part of loghouse, which we call loghouse-dashboard, consists of two components:

- frontend: nginx with basic authentication (used to distinguish user rights);

- backend: a Ruby application for viewing logs from ClickHouse.
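The article only says that nginx basic authentication is used to distinguish user rights. One plausible way a backend could use it is sketched below: nginx has already checked the password, the backend reads the user name from the forwarded `Authorization: Basic ...` header and shows only the namespaces that user may see. The header forwarding, the `permissions` mapping and the `"*"` wildcard are assumptions for illustration; the real loghouse-dashboard is a Ruby application and may work differently.

```python
import base64
import binascii
from typing import Dict, List, Optional, Set


def user_from_basic_auth(header: Optional[str]) -> Optional[str]:
    """Extract the user name from an `Authorization: Basic ...` header value.

    The password is not verified here: nginx is assumed to have done that.
    """
    if not header or not header.startswith("Basic "):
        return None
    try:
        decoded = base64.b64decode(header[len("Basic "):], validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None
    user, sep, _password = decoded.partition(":")
    return user if sep else None


def visible_records(header: Optional[str], records: List[Dict[str, str]],
                    permissions: Dict[str, Set[str]]) -> List[Dict[str, str]]:
    """Keep only records from namespaces the user may see ("*" means all)."""
    user = user_from_basic_auth(header)
    if user is None:
        return []
    allowed = permissions.get(user, set())
    return [r for r in records if "*" in allowed or r["namespace"] in allowed]
```

Denying everything for unknown users (an empty permission set) is the safer default: a missing entry in the hypothetical permissions map hides logs instead of exposing them.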

The interface is designed in the style of Papertrail:

A short animation of the interface in action can be viewed here (3 MB GIF).

Available features include selecting a time period (an explicit “from” and “to”, or the last hour, day, etc.), endless scrolling of records, saving arbitrary queries, restricting users' access to specified Kubernetes namespaces, and exporting the results of the current query to CSV.
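Two of these features are easy to illustrate in isolation: resolving a period (either a preset like “last hour” or an explicit from/to range) and exporting query results to CSV. The sketch below is a hypothetical Python model of that logic; the preset names, function names and column choice are assumptions, not the dashboard's actual code.

```python
import csv
import io
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical names for the "last hour, day, etc." shortcuts.
PRESETS = {
    "last_hour": timedelta(hours=1),
    "last_day": timedelta(days=1),
    "last_week": timedelta(weeks=1),
}


def resolve_period(preset: Optional[str] = None,
                   start: Optional[datetime] = None,
                   end: Optional[datetime] = None,
                   now: Optional[datetime] = None) -> Tuple[datetime, datetime]:
    """Return (start, end) for either a preset or an explicit from/to range."""
    if preset is not None:
        if now is None:
            now = datetime.now(timezone.utc)
        return now - PRESETS[preset], now
    if start is None or end is None or start > end:
        raise ValueError("an explicit period needs start <= end")
    return start, end


def export_csv(records: Iterable[Dict[str, str]], columns: List[str]) -> str:
    """Write the selected columns of the query results as CSV text."""
    buf = io.StringIO()
    # extrasaction="ignore" drops fields that are not among the chosen columns
    writer = csv.DictWriter(buf, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()
```

Relying on the standard `csv` module matters here because log messages routinely contain commas and quotes, which it escapes correctly.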

Installation and use

Its official status is alpha, but we have been using loghouse in production ourselves for two months now. To install loghouse you will need Helm; in the simplest case:

```
# helm repo add loghouse https://flant.github.io/loghouse/charts/
# helm fetch loghouse/loghouse --untar
# vim loghouse/values.yaml
# helm install -n loghouse loghouse
```

(See the documentation for details.)

After installation, the web interface will be available at the address specified in values.yaml (loghouse_host), protected by basic authentication according to the auth parameter from the same values.yaml.

Development plan

Among the planned improvements in the loghouse:

- additional installation options: ClickHouse instances on each node, ClickHouse cluster;

- support for parentheses in the query language;

- exporting data to other formats (JSON, TSV) and in compressed form;

- uploading log archives to S3;

- migrating the web interface frontend to AngularJS;

- migrating the web interface backend to Go;

- console interface;

- ...

A more detailed plan will appear soon as issues in the project's GitHub repository.

Conclusion

The loghouse source code is published on GitHub under the free Apache License 2.0. As with dapp, we invite DevOps engineers and Open Source enthusiasts to take part in the project, especially since it is still very young and therefore doubly needs a fresh look from the outside. Ask questions (you can do so right here in the comments), point out problems, and suggest improvements. Thanks for your attention!

PS

Read also in our blog:

- “Officially presenting dapp, a DevOps utility for maintaining CI/CD”;

- “Building Docker images for CI/CD quickly and conveniently with dapp (review and video)”;

- “Our experience with Kubernetes in small projects” (video of the talk, which includes an introduction to how Kubernetes works internally).

Source: https://habr.com/ru/post/341386/
