Google Analytics API for a marketer on a practical example

Hello! Based on the implementation of one of the tasks for analyzing traffic sources, I decided to write an article-instruction for marketers. This is the case when marketers cannot do without the Google Analytics API. The article is written for the benefit of web developers, so that marketers are not distracted by "any garbage."

We get acquainted with technology on a practical example. Go!

Task

There are about 150,000 users who are registered on the site. It is necessary to understand from which sources 1500 users who bought the product in October originally came.

To attract leads, a freemium model is used, the sales cycle can be up to 1 year.

')

From the advanced settings, at the stage of integration of Google Analytics, we connected the UserID and duplicated its value in Custom Dimension 1 (Scope: User) so that you can interact with the UserID in the reports.

Decision

Algorithm

To determine the first source you need:

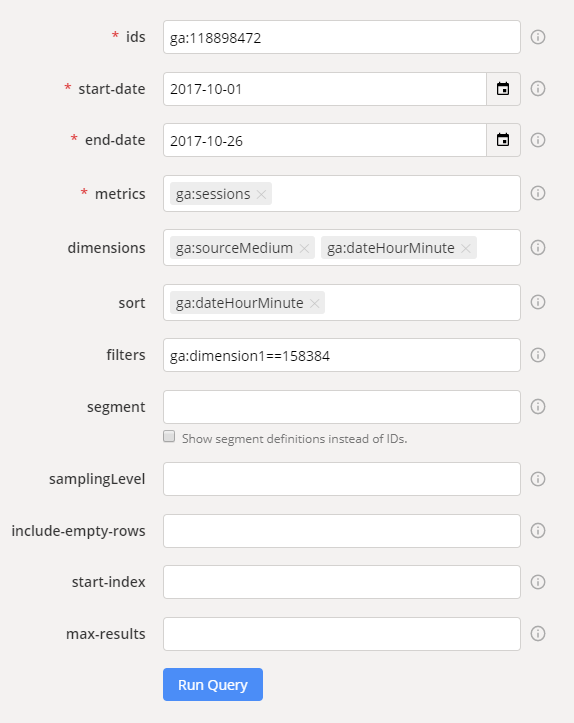

- Open Query Explorer service.

- Sign in, select Account, Property and View.

- To make a report:

- end-date : user registration date + 5 days (with a reserve just in case)

- start-date : user registration date -25 days (with a margin, the user could make interactions with the site before registration)

- metrics : ga: sessions

- dimensions :

ga: sourceMedium - source / channel

ga: dateHourMinute - time, includes the date, hour, and minute. - sort : ga: dateHourMinute - so that the very first session is displayed in the first line.

- filters : ga: dimension1 == 158384 - user ID.

- It should be something like this:

- Run the query by clicking on Run Query .

Result

After the execution of the request, a table should appear with the results:

Those. we see that in a given period before registration, the first session was from the source of yandex / organic.

For one user done. But how to assemble for 1500, automation?

Automation

Under the table with the results of the query, there are 2 text fields:

Direct link to this Report and API Query URI .

We are interested in the second field with the tick “ Include current access_token ... ”.

The content of the field is like this:

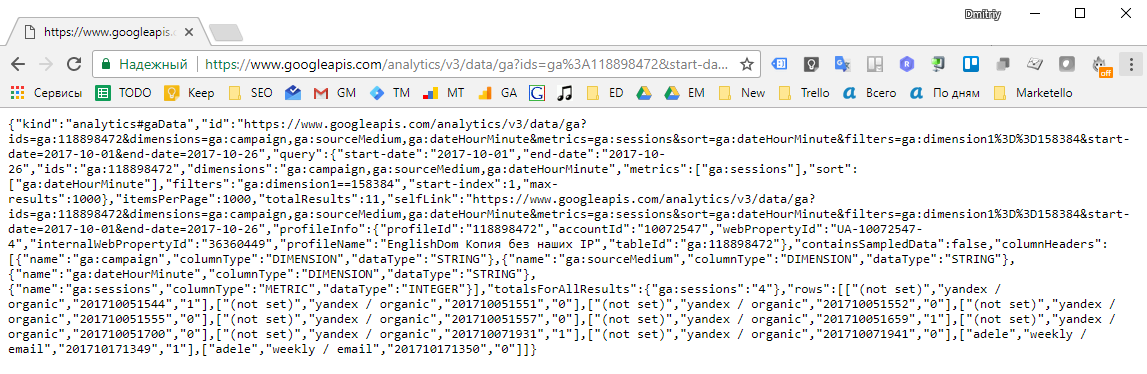

https://www.googleapis.com/analytics/v3/data/ga?ids=ga%3A118898472&start-date=2017-10-01&end-date=2017-10-26&metrics=ga%3Asessions&dimensions=ga%3AsourceMedium%2Cga%3AdateHourMinute&sort=ga%3AdateHourMinute&filters=ga%3Adimension1%3D%3D158384&access_token=ya29.Gl30BMgGpR89kexsBJS8VMIWimIEghKVHubx9iQH7RljCyQNLjX2LLBQ9AyCCRW9K0TjfJEvwe6qY3SIRKbkm8idMZjdygbN647O7JUgXqcGyDt5b63Y2FjDbeQabfA If we open the link in the browser, we will see the result of executing the request in JSON format:

Those. Substituting in the query url data dimension1, start-date, end-date - we can get this information for each of the users.

User data file

I made a regular txt file that contains lines of the form (UserID start-date end-date):

123456 2017-10-01 2017-10-26 123457 2017-10-02 2017-10-27 You can prepare start-date and end-date easily - via Excel or Google Spreadsheets:

start-date:

= date (year (B2), month (B2), day (B2) -25)

end-date:

= date (year (B2), month (B2), day (B2) +5)

Where B2 is the cell with the date of user registration.

Making a script

I chose Python as my language, simply because I am currently studying it.

Algorithm of the script:

- Open the file with information about users.

- Each of the lines of the file is divided by “tabulation”.

- We substitute the separated data from the string into the Query String, which we obtained when working with the Query Explorer.

- We get information on this link.

- Convert the response text to JSON so that you can interact with it.

- We make backup answers for each of the users, which will be saved in users /% userID% .txt

- Let's try to cycle through the “rows” section.

- If it works, write to the UserID screen and Source / Medium.

- If it does not work out, we write just the UserID.

import json import requests with open('users_regs.txt') as f: for line in f: value = line.split("\t") source = 'https://www.googleapis.com/analytics/v3/data/ga?ids=ga%3A118898472&start-date=' + value[1] +'&end-date=' + value[2].strip('\n') +'&metrics=ga%3Asessions&dimensions=ga%3AsourceMedium%2Cga%3AdateHourMinute&sort=ga%3AdateHourMinute&filters=ga%3Adimension1%3D%3D' + value[0] +'&access_token=ya29.Gl3wBOWAecjWj4GgW0Gj920Sx2SBtVBkCHZjOsPNu6MWnN1XnsNwwzVzPVBcdVwDf_7lWJd0mege38pP1PSNvc9aBA7wbndUn-h6vqS5bbbEhSOKHp4cjQvVSQiN5R4' r = requests.get(source) l = json.loads(r.text) with open('users/' + value[0]+'.txt', 'w') as outfile: json.dump(l, outfile) try: for row in l["rows"]: print(value[0] + " " + row[0]) break except: print(value[0]) metrics = ga% 3Asessions & dimensions = ga% 3AsourceMedium% 2Cga% 3AdateHourMinute & sort = ga% 3AdateHourMinute & filters = ga% 3Adimension1% 3D% 3D' + value [ import json import requests with open('users_regs.txt') as f: for line in f: value = line.split("\t") source = 'https://www.googleapis.com/analytics/v3/data/ga?ids=ga%3A118898472&start-date=' + value[1] +'&end-date=' + value[2].strip('\n') +'&metrics=ga%3Asessions&dimensions=ga%3AsourceMedium%2Cga%3AdateHourMinute&sort=ga%3AdateHourMinute&filters=ga%3Adimension1%3D%3D' + value[0] +'&access_token=ya29.Gl3wBOWAecjWj4GgW0Gj920Sx2SBtVBkCHZjOsPNu6MWnN1XnsNwwzVzPVBcdVwDf_7lWJd0mege38pP1PSNvc9aBA7wbndUn-h6vqS5bbbEhSOKHp4cjQvVSQiN5R4' r = requests.get(source) l = json.loads(r.text) with open('users/' + value[0]+'.txt', 'w') as outfile: json.dump(l, outfile) try: for row in l["rows"]: print(value[0] + " " + row[0]) break except: print(value[0]) h6vqS5bbbEhSOKHp4cjQvVSQiN5R4' import json import requests with open('users_regs.txt') as f: for line in f: value = line.split("\t") source = 'https://www.googleapis.com/analytics/v3/data/ga?ids=ga%3A118898472&start-date=' + value[1] +'&end-date=' + value[2].strip('\n') +'&metrics=ga%3Asessions&dimensions=ga%3AsourceMedium%2Cga%3AdateHourMinute&sort=ga%3AdateHourMinute&filters=ga%3Adimension1%3D%3D' + value[0] +'&access_token=ya29.Gl3wBOWAecjWj4GgW0Gj920Sx2SBtVBkCHZjOsPNu6MWnN1XnsNwwzVzPVBcdVwDf_7lWJd0mege38pP1PSNvc9aBA7wbndUn-h6vqS5bbbEhSOKHp4cjQvVSQiN5R4' r = requests.get(source) l = json.loads(r.text) with open('users/' + value[0]+'.txt', 'w') as outfile: json.dump(l, outfile) try: for row in l["rows"]: print(value[0] + " " + row[0]) break except: print(value[0]) The script file called ga.py.

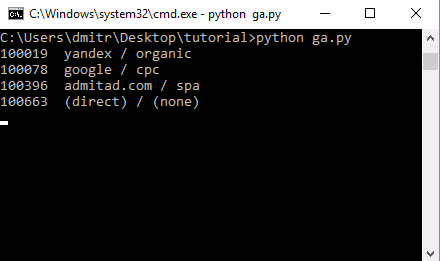

Run the script via the command line, enjoy the result:

The results of the script execution will be displayed in the terminal, you can simply copy them for further processing.

The script is presented for example, you can independently save the results into a single file or database.

If you will work with a large amount of data - once per hour you will have to generate a new token (open the Query Explorer again and click Run Query) and substitute it in the Query String in your script.

Successes!

Reader Bonuses

Online Courses

We give free access to three months of learning English through our online courses. To do this, simply follow the link until December 31, 2017.

Individually by Skype

We will be glad to see you at the individual lessons of the course “English for IT-specialists”.

Take a free introductory lesson and get a comprehensive feedback on your level of knowledge, then choose a teacher and a training program for yourself to your liking!

Source: https://habr.com/ru/post/341282/

All Articles