How to create multiple VMFS datastores on a single disk device

In some situations you may need to create multiple datastores on a single disk device. That is what happened in our case.

One of our vSphere ESXi 5.5 servers has an Adaptec ASR72405 RAID adapter with 24 5 TB hard drives (model ST5000NM0024) connected to it. We decided to build a RAID 60 array on 22 disks and configure the remaining 2 disks as hot spares. This gave us the disk space we needed at the highest streaming read/write speed we could obtain: around 3.5 gigabytes per second for writing and 3.3 gigabytes per second for reading. To stress the point, for our task it was precisely the maximum streaming read/write speed that mattered.

However, the total capacity of the resulting volume (which is also the capacity our task requires) is 81.826 terabytes, which exceeds the 64 TB maximum supported by ESXi. (This limit still applies in version 6.5, the latest at the time of writing.) Creating two logical devices (RAID arrays) of 10 disks each was not an option. It cut the maximum access speed almost in half and significantly reduced the total storage size, both because two arrays need twice as many parity disks as one and because more disks end up as hot spares: each RAID 60 array requires an even number of disks, so 4 disks would be forced into the hot-spare role.

Initially we tried to create two logical drives (volumes), each using half of every hard disk. Fault-tolerance tests, however, revealed an unpleasant feature (I would even call it a bug) in the Adaptec firmware logic in our configuration: when rebuilding the RAID array, the controller attached half of one hot-spare disk to one logical drive and half of another hot-spare disk to the other logical drive. Then, when the failed disk was replaced with a working one, the controller could not finish the copy-back process and hung at 99% for more than a week; we did not wait any longer. Adaptec provides no tools to find the cause of this hang or to restore the logical drives manually. (Naturally, we used the latest available firmware and RAID management software.)

When we contacted Adaptec technical support, we were advised to use whole hard drives in logical drives rather than parts of them. Literally: “If you’re not completely complete, you’re not happy with your system.” For the reasons described above, this solution did not suit us.

Thus, we needed to split a single RAID volume of almost 82 terabytes into two volumes of approximately 41 terabytes each at the partition level, using the operating system, which in our case is vSphere ESXi. However, the GUI does not support this out of the box. Through the GUI, ESXi lets you create only one datastore on each connected drive; even when free space remains on the disk, the GUI will not let you create another. Below you will find instructions on how to do this using the CLI.
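As a quick check of the sizes involved, here is a minimal sketch that converts a sector count into terabytes using integer shell arithmetic. It takes the total sector count that `partedUtil getptbl` reports for this device in the steps below, and it assumes 512-byte sectors and binary terabytes (2^40 bytes); the function and variable names are made up for illustration.

```shell
# Sketch only: sector counts to TiB, integer arithmetic (no floating point needed).
SECTOR_BYTES=512          # assumption: 512-byte logical sectors
TIB=1099511627776         # 2^40 bytes
VMFS_MAX_TIB=64           # the 64 TB limit quoted in the article, read as TiB

# Print the size of $1 sectors in TiB with three decimals.
sectors_to_tib() {
    milli=$(( $1 * SECTOR_BYTES * 1000 / TIB ))
    printf '%d.%03d\n' $(( milli / 1000 )) $(( milli % 1000 ))
}

# Succeed if a volume of $1 sectors is within the 64 TiB limit.
fits_vmfs_limit() {
    [ $(( $1 * SECTOR_BYTES )) -le $(( VMFS_MAX_TIB * TIB )) ]
}

total=175720329216        # total sectors reported by partedUtil getptbl
echo "whole device: $(sectors_to_tib "$total") TiB"
echo "one half:     $(sectors_to_tib $(( total / 2 ))) TiB"
if fits_vmfs_limit "$total"; then
    echo "whole device fits in one datastore"
else
    echo "whole device exceeds the limit"
fi
```

Under these assumptions the whole device comes out at the 81.826 figure quoted above, and each half stays well under the limit.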

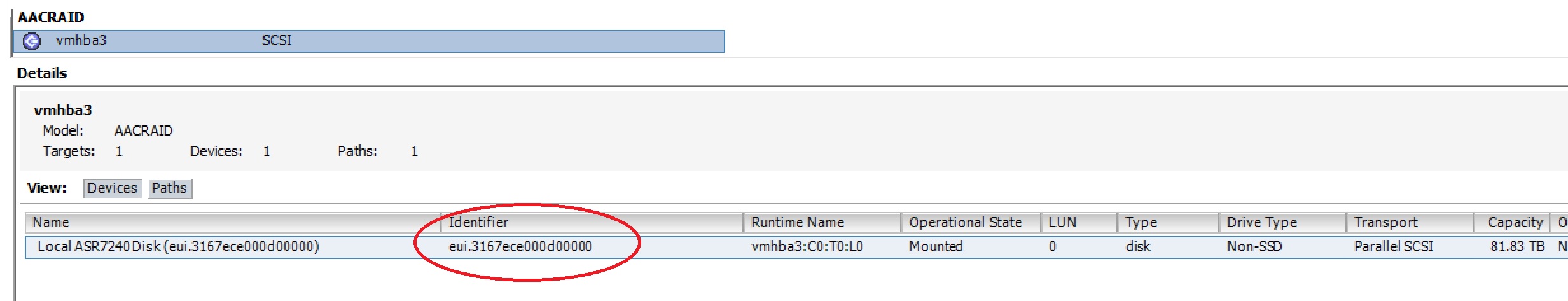

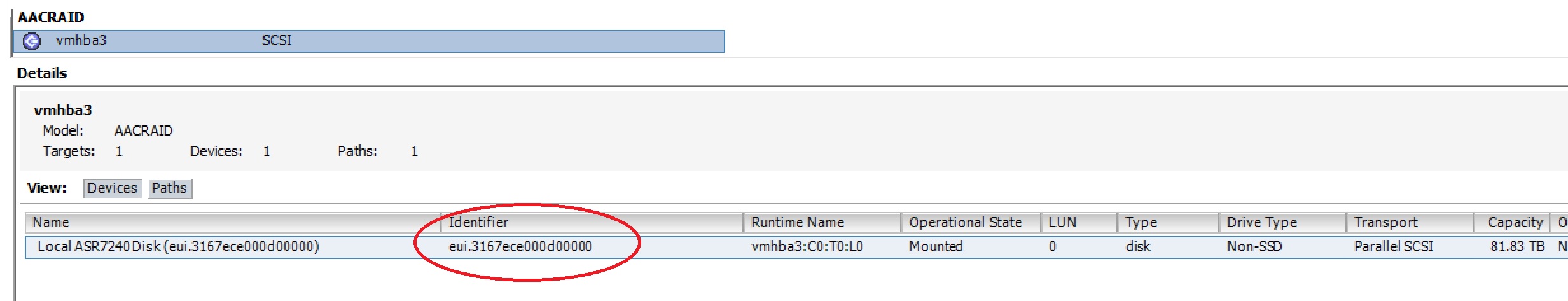

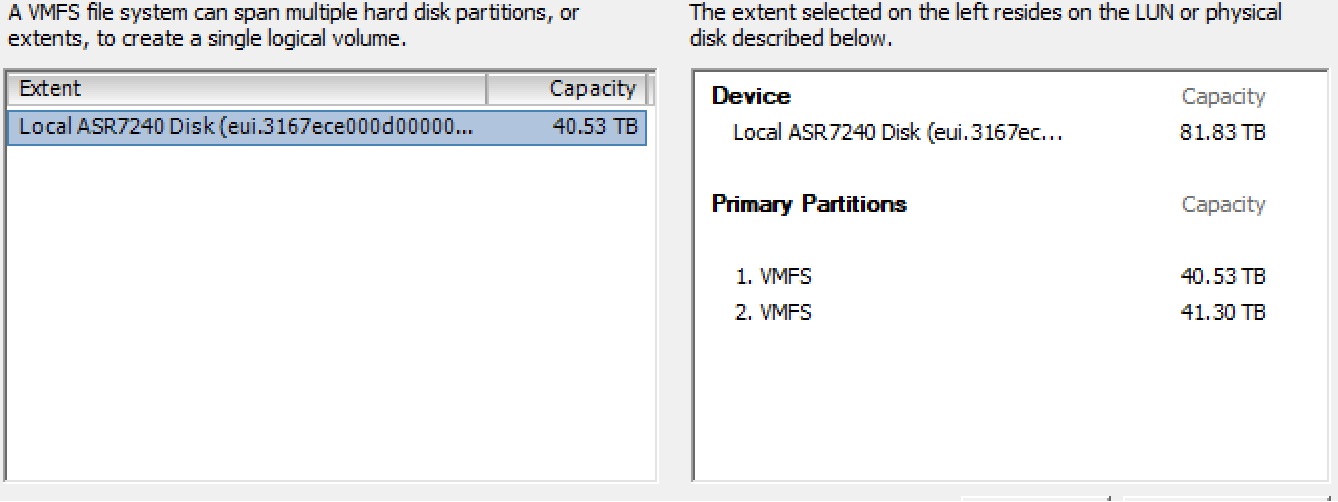

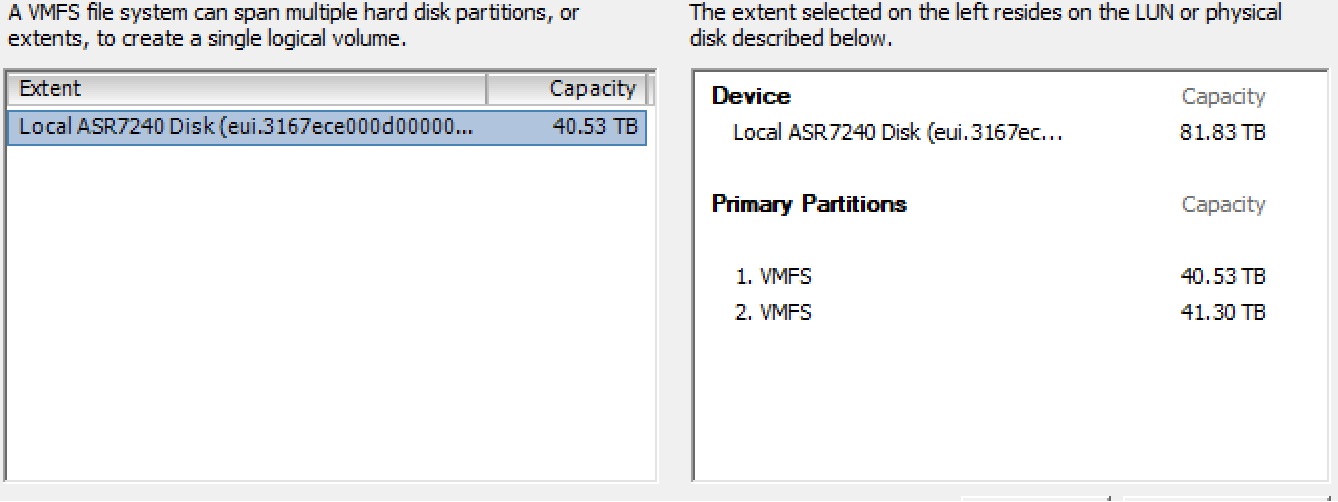

First of all, enable SSH access to your ESXi host. After that, find out the exact name of your RAID device. You can look it up in the GUI, for example.

That is, for all further operations our disk will be called `/vmfs/devices/disks/eui.3167ece000d00000`.

Before partitioning the disk, check the current state of the partition table and make sure that the disk has no existing partitions:

```
~ # partedUtil getptbl /vmfs/devices/disks/eui.3167ece000d00000
gpt
10938084 255 63 175720329216
```

Next, check the last usable sector on the disk:

```
~ # partedUtil getUsableSectors /vmfs/devices/disks/eui.3167ece000d00000
34 175720329182
```

Now you can create the required partitions:

```
~ # partedUtil setptbl "/vmfs/devices/disks/eui.3167ece000d00000" gpt "1 2048 87031810047 AA31E02A400F11DB9590000C2911D1B8 0" "2 87031810048 175720329182 AA31E02A400F11DB9590000C2911D1B8 0"
```

The first partition runs from sector 2048 to sector 87031810047, the second from sector 87031810048 to sector 175720329182. In your case these values will most likely be different. The identifier AA31E02A400F11DB9590000C2911D1B8 must be copied unchanged; it marks the partition as VMFS. The 0 after the identifier is the partition attribute and means that the partition is not bootable.
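Since the sector values differ from disk to disk, it can help to compute them instead of typing them by hand. The following is a rough sketch, not the author's procedure: `split_point` and `setptbl_cmd` are hypothetical helper names, the 2048-sector alignment is an assumption taken from the start sector used above, and the script only prints the `partedUtil setptbl` command so that it can be reviewed before it is run.

```shell
# Sketch only: build (but do not run) a two-partition setptbl command.
VMFS_GUID=AA31E02A400F11DB9590000C2911D1B8   # VMFS partition type, from the article

# Start sector of partition 2: midpoint of [first, last], rounded down to a multiple of align.
split_point() {
    first=$1; last=$2; align=$3
    mid=$(( (first + last + 1) / 2 ))
    echo $(( mid / align * align ))
}

# Print the setptbl command for device $1, first sector $2, split sector $3, last sector $4.
setptbl_cmd() {
    dev=$1; first=$2; split=$3; last=$4
    printf 'partedUtil setptbl "%s" gpt "1 %s %s %s 0" "2 %s %s %s 0"\n' \
        "$dev" "$first" "$(( split - 1 ))" "$VMFS_GUID" "$split" "$last" "$VMFS_GUID"
}

DEV=/vmfs/devices/disks/eui.3167ece000d00000

# The split used in the article:
setptbl_cmd "$DEV" 2048 87031810048 175720329182

# An alternative split at the aligned midpoint of the usable range:
setptbl_cmd "$DEV" 2048 "$(split_point 2048 175720329182 2048)" 175720329182
```

The first call reproduces the command shown above; the second places the boundary at the aligned midpoint, which makes the two partitions nearly equal in size. The last usable sector should always come from `partedUtil getUsableSectors` on your own device.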

If all values are correct, you will get the following response:

```
gpt
0 0 0 0
1 2048 87031810047 AA31E02A400F11DB9590000C2911D1B8 0
2 87031810048 175720329182 AA31E02A400F11DB9590000C2911D1B8 0
```

You can also check the resulting partition table:

```
~ # partedUtil getptbl /vmfs/devices/disks/eui.3167ece000d00000
gpt
10938084 255 63 175720329216
1 2048 87031810047 AA31E02A400F11DB9590000C2911D1B8 vmfs 0
2 87031810048 175720329182 AA31E02A400F11DB9590000C2911D1B8 vmfs 0
```

This means that we have successfully created two partitions, named eui.3167ece000d00000:1 and eui.3167ece000d00000:2.
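Before creating file systems, the partition table can also be checked mechanically. This is a minimal sketch with a hypothetical helper, `check_ptbl`, that reads `partedUtil getptbl` output from standard input and confirms that every partition lies inside the usable sector range and that no partition overlaps the one before it. It assumes the two header lines shown above and partitions listed in ascending order.

```shell
# Sketch only: sanity-check getptbl output read from stdin.
# $1 = first usable sector, $2 = last usable sector (from partedUtil getUsableSectors)
check_ptbl() {
    first_usable=$1; last_usable=$2
    prev_end=0; line_no=0; count=0
    while read -r num start end guid type attr; do
        line_no=$(( line_no + 1 ))
        # Line 1 is the label (gpt), line 2 the disk geometry; skip both.
        if [ "$line_no" -le 2 ]; then continue; fi
        if [ "$start" -gt "$end" ]; then
            echo "partition $num ends before it starts"; return 1
        fi
        if [ "$start" -lt "$first_usable" ] || [ "$end" -gt "$last_usable" ]; then
            echo "partition $num lies outside the usable sectors"; return 1
        fi
        if [ "$start" -le "$prev_end" ]; then
            echo "partition $num overlaps the previous one"; return 1
        fi
        prev_end=$end
        count=$(( count + 1 ))
    done
    echo "ok: $count partitions"
}

# Checked here against the output from the article:
check_ptbl 34 175720329182 <<'EOF'
gpt
10938084 255 63 175720329216
1 2048 87031810047 AA31E02A400F11DB9590000C2911D1B8 vmfs 0
2 87031810048 175720329182 AA31E02A400F11DB9590000C2911D1B8 vmfs 0
EOF
```

On a live host the same function could be fed directly, for example `partedUtil getptbl "$DEV" | check_ptbl 34 175720329182`, with the two bounds taken from `partedUtil getUsableSectors`.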

All that remains is to create the datastores.

This is done with the commands

```
~ # /sbin/vmkfstools -C vmfs5 -b 1m -S Data1 /vmfs/devices/disks/eui.3167ece000d00000:1
```

and

```
~ # /sbin/vmkfstools -C vmfs5 -b 1m -S Data2 /vmfs/devices/disks/eui.3167ece000d00000:2
```

If everything is done correctly, after each command you will see something like:

```
create fs deviceName:'/vmfs/devices/disks/eui.3167ece000d00000:1', fsShortName:'vmfs5', fsName:'Data1'
deviceFullPath:/dev/disks/eui.3167ece000d00000:1 deviceFile:eui.3167ece000d00000:1
Checking if remote hosts are using this device as a valid file system. This may take a few seconds...
Creating vmfs5 file system on "eui.3167ece000d00000:1" with blockSize 1048576 and volume label "Data1".
Successfully created new volume: 59e14137-1e03a524-12db-002590826ec4
```

After a few seconds, both datastores will be available in the GUI.

More information on the use of these commands can be found here and here.
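The two `vmkfstools` invocations differ only in the label and the partition number, so with more partitions they could be generated in a loop. A small sketch follows, with a hypothetical `mkfs_cmds` helper and the `Data<N>` label scheme carried over from the commands above; it prints the commands rather than running them.

```shell
# Sketch only: print one vmkfstools command per partition number.
# $1 = device path, remaining arguments = partition numbers
mkfs_cmds() {
    dev=$1; shift
    for n in "$@"; do
        printf '/sbin/vmkfstools -C vmfs5 -b 1m -S Data%s %s:%s\n' "$n" "$dev" "$n"
    done
}

mkfs_cmds /vmfs/devices/disks/eui.3167ece000d00000 1 2
```

Printing first and running afterwards leaves a chance to check the device path and labels before any file system is created.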

I would be glad if I saved someone a few hours. I will answer any questions.

Thank you for reading to the end.

Source: https://habr.com/ru/post/341216/
