On backups, Black Friday and communication between people: how we messed up and learned not to do it again

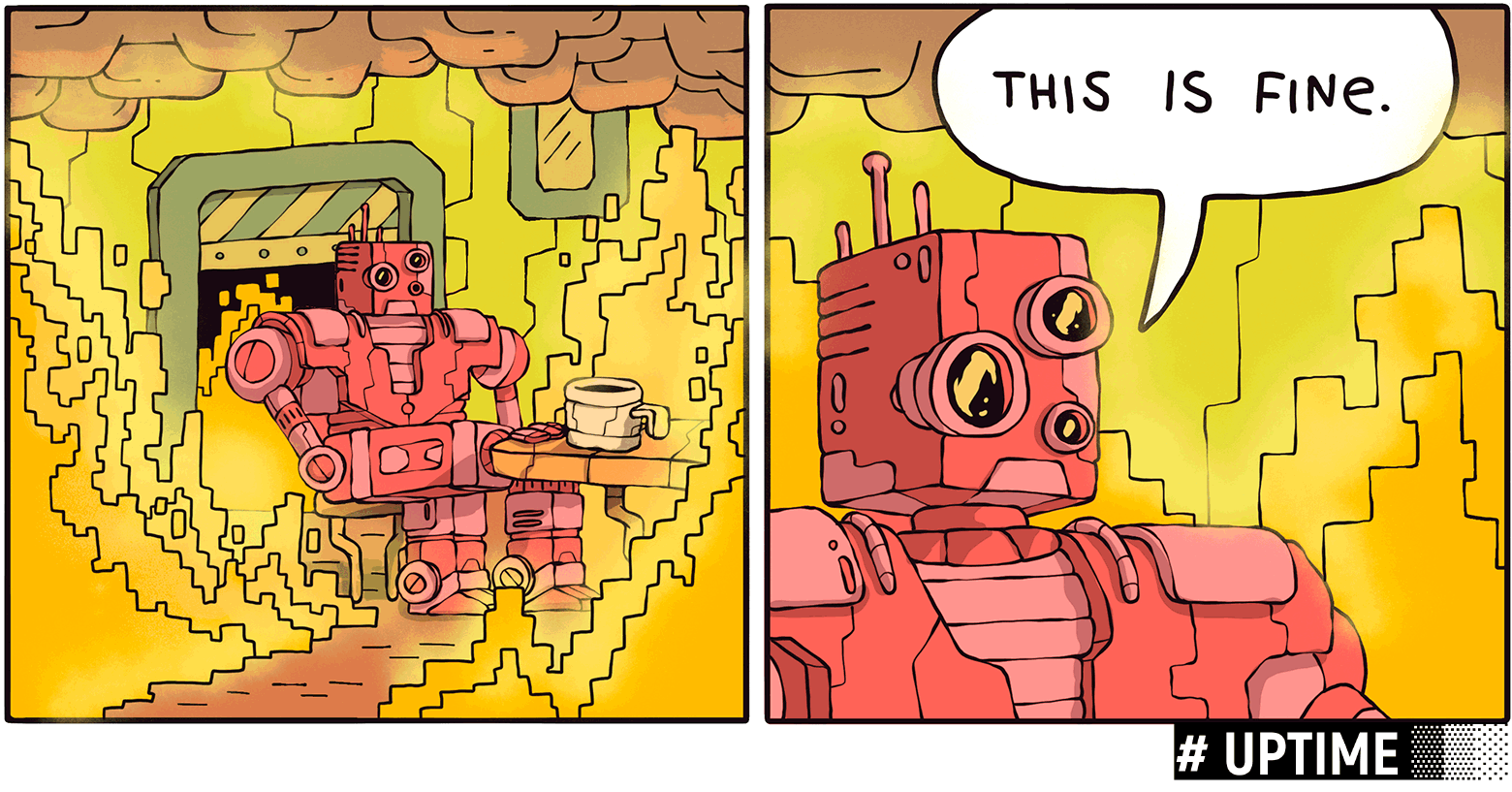

On October 13 we held the second Uptime Community Conference. This time the meeting fell on Friday the 13th, so the main topic was accidents and how to cope with them. This is the first in a series of posts covering the talks from that conference.

I have three terrible stories about how we broke everything through our own fault, how we fixed it, and what we are doing now so that it does not happen again.

Scale: number of alerts

We have been operating since 2008 and now have 75 people (in Irkutsk, St. Petersburg and Moscow). We provide round-the-clock technical support, system administration and DevOps for websites around the world. We have 300 clients and 2,000 servers under support.

Our core commitment is this: if any problem occurs, we have to step in and fix it within 15 minutes.

In 2010 there were about 450 alerts per month at peak.

The small red line on the chart shows how many alerts we had up to the end of 2012.

In 2015, the peak reached 100,000 alerts per month.

Now there are about 130,000 to 140,000 per month.

About 400 alerts per hour reach our on-duty engineers, that is, 400 messages from the monitoring system that each need to be investigated. It can be something quite simple, such as a disk running out of space, or something complicated: everything has gone down, nothing works, and you need to figure out what happened.

On Black Friday we reach 900 alerts per hour, even though online stores prepare for the event and warn their administrators. Three years ago, Black Friday was held on a Friday. Two years ago, the stores decided to outsmart each other and all held it on Thursday. Last year it was Wednesday; this year it will obviously be Tuesday, and eventually it will go all the way around the week. Shops do not like to say exactly when it will happen, so the good ones prepare by running load tests, while others say nothing at all and then come to us with: "Hello, we just sent a mailing to 300,000 people, please get the server ready for the load."

Preparing a server for load in a hurry creates a lot of problems. Being able to think quickly in such conditions is a special skill. People usually want to work to a plan and think carefully about what they are going to do, but in our case it is more like a field hospital on a battlefield.

Accidents

With this many alerts, mistakes are inevitable. And sometimes, while fixing one problem, you can create a much bigger one.

We divide our mistakes into three categories:

- No process: something happens that we have never encountered before, it is unclear what exactly to do, and improvising a fix on the fly makes things worse.

- Errors in an established process: the problem seems familiar and it seems clear what to do, but not every possible scenario was anticipated.

- Interaction errors: the process seems well established, but communication between people breaks down.

I have a scary story for each of them.

No process

The first is one of the worst things that ever happened to us. It was March 31, 2016. A client came to us for support with a 2 TB MySQL database, not a single backup, and 70 employees working with that database. It was quite complex: tables of about 300 GB each, with hundreds of millions of rows. The whole company only worked because this database existed.

A separate problem: the previous administrators had set up flashcache on the disk, and sometimes files were deleted and sometimes not; something strange was going on.

The first and most important task was to back up the database, because if anything happened to it, the company would simply stop working.

The database had no slave, and people worked with it intensively from 9:00 to 18:00, so the work could not be stopped. It had 300 GB of MyISAM tables, and xtrabackup, which is good for backing up InnoDB, locks everything while it copies the MyISAM tables. We first tried running xtrabackup to see what would happen. Xtrabackup locked the MyISAM tables, the site went down, and everything stopped working.

We stopped xtrabackup and started thinking. Xtrabackup works well with InnoDB and badly with MyISAM, but in this case the MyISAM tables were not that important for the backup itself. The plan was: run xtrabackup manually without the MyISAM part, transfer the data to a slave, bring up a second slave from that slave, and simply back that one up by copying its files.

We discussed this option as a team but could not reach a decision. Everything sounded reasonable, except that we had never done it before.

At 2 a.m. I launched xtrabackup myself, stripped down so that it did nothing related to MyISAM and just transferred data. Unfortunately, at that point not all of our administrators knew that while xtrabackup (a hot backup tool for MySQL) runs, it creates a temporary log, and does so in a rather tricky way: it creates the file, opens it for reading and writing, and immediately deletes it. The file cannot be found on disk, but it keeps growing, and xtrabackup does not stream it to the other server as it goes. So if there are a lot of writes, the disk fills up quickly. At 16:00 the next day we got an alert about it.
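A file that a process has opened and then unlinked, as xtrabackup does with its temporary log here, keeps its disk space until the last descriptor is closed; the only trace of it is a `/proc/<pid>/fd/N` link whose target ends in " (deleted)" (`lsof +L1` lists the same files). Below is a minimal, read-only sketch for Linux, assuming you only want to look before doing anything: `deleted_open_files` is our own hypothetical helper, not part of xtrabackup or any other tool.

```python
import os
from typing import List, Tuple

DELETED_SUFFIX = " (deleted)"


def deleted_open_files(pid: int) -> List[Tuple[int, str, int]]:
    """List (fd, original path, size in bytes) for files that process `pid`
    still holds open although they have been unlinked. Linux only, read-only:
    nothing is truncated, closed or removed."""
    fd_dir = f"/proc/{pid}/fd"
    found = []
    for name in os.listdir(fd_dir):
        link = os.path.join(fd_dir, name)
        try:
            target = os.readlink(link)
            if not target.endswith(DELETED_SUFFIX):
                continue  # a live file, socket, pipe, etc.
            # stat() follows the /proc link to the open file itself, so it
            # still reports the size after the path is gone.
            size = os.stat(link).st_size
        except (FileNotFoundError, PermissionError):
            continue  # fd closed in the meantime, or not ours to inspect
        found.append((int(name), target[: -len(DELETED_SUFFIX)], size))
    return sorted(found)
```

For example, calling `deleted_open_files` with the xtrabackup pid would show the growing temporary log and its size. The helper deliberately never truncates or closes anything: the first question is always which process owns a "deleted" file and why, and only then whether it is safe to touch.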

The server was running low on free space, and the admin on duty went to figure out what was going on. He saw that space was running out, but could not tell what was filling it. There was, however, xtrabackup holding a "deleted" file that seemed to have something to do with it. He decided that this was a deleted log that xtrabackup had accidentally kept open, and that it needed to be destroyed.

The admin wrote a script that went through the file descriptors opened by xtrabackup and zeroed out all the files marked "deleted". He ran the script at 18:00, and the site went down immediately.

By 18:30 we realized that MySQL would not start and was not responding. At that time I was driving from Novosibirsk, where I had been at a conference, to visit friends in Barnaul. I had been sitting with my friends for barely five minutes when a client called and said that a seemingly harmless operation had suddenly taken the site down. I looked in the admins' chat and saw the line: "Well, we didn't kill their database, did we?" The answer was: "Yes, we did. Maybe for good."

The script, which zeroed out deleted files through their open file descriptors, had also been run against the MySQL pid, and it wiped out ibdata, which weighed 200–300 GB.

After a short panic, I went to the client and told them what had happened. It was a difficult conversation. By 19:00 we realized that xtrabackup had managed to copy ibdata before it was destroyed.

MySQL has an old storage layout where all data lives in a single ibdata file, and a newer InnoDB layout where each table has its own file. Unfortunately, even with per-table files, ibdata still holds the "last sequence numbers": on startup MySQL reads the positions recorded there, goes to those positions in the data files, and only then does everything work normally. If there is no ibdata, MySQL does not start. At all.
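The "last sequence numbers" are InnoDB log sequence numbers (LSNs), 64-bit counters stamped into page headers. As an illustration only, here is a read-only sketch that reads the LSN fields from the header of the first page of an ibdata file, assuming the standard InnoDB FIL header layout (page LSN at byte offset 16, flush LSN at offset 26 on page 0 of the system tablespace, all big-endian). `read_fil_header` is a hypothetical helper; it is not the gdb procedure from this story, and it should only ever be pointed at a copy of the file.

```python
import struct
from typing import BinaryIO, Dict

# Byte offsets inside the 38-byte FIL header that starts every InnoDB page.
FIL_PAGE_OFFSET = 4            # 4-byte page number
FIL_PAGE_LSN = 16              # 8-byte LSN of the newest change to this page
FIL_PAGE_TYPE = 24             # 2-byte page type (8 = FSP_HDR on page 0)
FIL_PAGE_FILE_FLUSH_LSN = 26   # 8 bytes, meaningful only on page 0 of ibdata
FIL_PAGE_SPACE_ID = 34         # 4-byte tablespace id (0 = system tablespace)
FIL_HEADER_SIZE = 38


def read_fil_header(f: BinaryIO) -> Dict[str, int]:
    """Parse the FIL header of the page at the current position of `f`."""
    header = f.read(FIL_HEADER_SIZE)
    if len(header) < FIL_HEADER_SIZE:
        raise ValueError("file is shorter than an InnoDB page header")
    # InnoDB stores multi-byte integers big-endian, hence the ">" formats.
    return {
        "page_no": struct.unpack_from(">I", header, FIL_PAGE_OFFSET)[0],
        "page_lsn": struct.unpack_from(">Q", header, FIL_PAGE_LSN)[0],
        "page_type": struct.unpack_from(">H", header, FIL_PAGE_TYPE)[0],
        "flush_lsn": struct.unpack_from(">Q", header, FIL_PAGE_FILE_FLUSH_LSN)[0],
        "space_id": struct.unpack_from(">I", header, FIL_PAGE_SPACE_ID)[0],
    }
```

For example, `with open("ibdata1.copy", "rb") as f: print(read_fil_header(f))` prints the numbers of a copied file (the file name is hypothetical). Comparing these values between a hot copy and the pages of the per-table files is one way to see how far behind the copy is.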

We had the copy of ibdata that xtrabackup had taken hot, and we tried to simply drop it in place, but MySQL crash recovery refused to work with that file. It was clear that something could probably be done, but not what.

We called a well-known MySQL-related company that, among other things, does data recovery. We described our wonderful situation: a 2 TB database with 300 GB tables and a dead ibdata, plus a separate hot copy of ibdata, and said we would very much like them to try to restore the database. We were told they could take a look, but it would cost $5,000. Since the screw-up was a scary one, I asked where to pay, and then it turned out that $5,000 was the figure this company quotes to scare people off; they refused the job anyway. As I later learned, after we described the problem, the people at that company argued for a long time about whether the database could be restored at all.

By 21:00 we found the blog of Alexander Kuzminsky, who had previously worked at the company we had turned to. He is great; I met him recently and thanked him fifty times. His blog explained that if you have accidentally deleted ibdata but have a hot copy of it, you can put the copy in place, start MySQL under gdb, and during startup replace the wrong "last sequence number" values in memory with the correct ones.

We desperately wanted to bring the database back, because the whole time I had been thinking that we had just destroyed a company that employs 70 people. In the end we did what the blog described, and MySQL started. Unfortunately, it ran in recovery mode, where of course nothing can be written, and running like that is genuinely scary. So we decided the database had to be rebuilt, and this time we used mysqldump: over the next two days we dumped the entire database the ordinary way and hoped everything would work. It did.

After that we decided that from then on we would analyze our incidents.

There were a lot of emotions, so let's look at what happened a bit more dispassionately.

What exactly happened?

Given:

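- 2016-03-31: a new client with a 2 TB MySQL database, tables of about 300 GB, and no backups; 300 GB of it in MyISAM tables, which xtrabackup locks.

Plan:

- 2016-03-31: run xtrabackup manually without the MyISAM part, transfer the data to a slave, bring up a second slave from it and back that one up by copying.

What actually happened:

- 2016-03-31 02:00: xtrabackup is started.
- 2016-03-31 02:00 to 2016-04-01 16:00: the (deleted) temporary redo log file keeps growing.
- 2016-04-01 16:00: a low disk space alert fires.
- 2016-04-01 17:00: the admin on duty decides the culprit is the (deleted) redo log file held by xtrabackup and writes a script that zeroes out (deleted) files through their file handles.
- 2016-04-01 18:00: the script is run.
- 2016-04-01 18:00: the site goes down.

The rescue:

- 2016-04-01 18:00 to 18:30: MySQL will not start.
- 2016-04-01 18:30: we find that the script zeroed ibdata through MySQL's file handle.
- 2016-04-01 19:00: we find the copy of ibdata made by xtrabackup.
- 2016-04-01 20:00: we contact a MySQL data recovery company.
- 2016-04-01 21:00: we find the gdb method.
- 2016-04-01 21:00 to 2016-04-02 03:00: MySQL is brought up in recovery mode.
- 2016-04-02 03:00 to 2016-04-03 06:00: the database is dumped with mysqldump.

Why did this happen?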

The admin did not understand how xtrabackup works: that it creates a temporary file that can keep growing.

There was no checklist. People tried whatever came to mind, without any plan, because there was no time left for one. I was interfering too, asking why it still wasn't ready.

A non-standard operation that the staff had not been told about. Someone had started xtrabackup, and the administrator on duty assumed it was some routine process running while, for some reason, disk space was running out.

Findings

- Avoid non-standard operations. If they do happen, only one group works on them, and everyone in that group must know exactly what is going on.

- Report any incomprehensible problem to the manager. Previously, if there was an incomprehensible problem on a server, the admins tried to figure it out themselves. Now, if disk space is running out for an unknown reason, the admin must tell the manager so. The manager may know why it is happening, may know who is better placed to deal with it, or may tell the admin to go ahead and solve it alone.

- Any risky operation now requires double control: the person about to do something first says what they are going to do, and only then does it. Like a co-pilot on a plane.
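The double-control rule is simple enough to express as a tiny state machine. The Python below is a hypothetical model for illustration, not a tool from our stack: `RiskyOperation`, `announce` and `confirm` are invented names, and the only rule it encodes is that the operation is announced first and a second person, never its author, confirms it before it may run.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RiskyOperation:
    """Hypothetical model of 'double control' for one risky operation."""
    description: str
    author: str
    announced: bool = False
    confirmed_by: Optional[str] = None

    def announce(self) -> str:
        # Step 1: say out loud what you are about to do.
        self.announced = True
        return f"{self.author} is about to: {self.description}"

    def confirm(self, reviewer: str) -> None:
        # Step 2: a second person, never the author, confirms it.
        if not self.announced:
            raise RuntimeError("announce the operation before asking for confirmation")
        if reviewer == self.author:
            raise PermissionError("the author cannot confirm their own operation")
        self.confirmed_by = reviewer

    def may_execute(self) -> bool:
        # Only an announced and independently confirmed operation may run.
        return self.announced and self.confirmed_by is not None
```

Under this model, a script like the one that wiped ibdata would have had to be announced, with a description of what it touches, before anyone else could confirm it.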

Errors in an established process

In January 2017 there was a "Cyber Tuesday". It was our second big screw-up: not as scary, but not pleasant either.

To prepare for Cyber Tuesday, one big store decided to run load testing that morning directly in production to find out where problems might arise. It was a good idea: we would learn where the weakest spots were, and the project would be ready for serious load.

During the morning and afternoon testing, the administrator on duty added a note to the alerts: pages may slow down or stop responding, everything is fine, this is a test. He passed this note on to the next shift, which took over on the evening of Cyber Tuesday. When the mailing went out, caching was disabled on the promo page, traffic was sent there, and the site did not respond all evening and night.

What exactly happened?

The store was down for the whole of Cyber Tuesday.

Interestingly, it is very hard to blame anyone here. Everyone did everything correctly: the first admin noted that alerts were firing because load testing was under way, and the second admin read the note and did not react when the alerts fired. Everything was correct, everyone followed the process, everything was fine. And yet it all turned out badly.

Why did this happen and what conclusions should we draw?

The admins passed notes directly to each other. Now admins may only pass such notes on with the manager's confirmation: if there is any reason not to respond to critical alerts, the manager has to confirm it. In addition, during each admin shift handover, the duty manager reconfirms all current notes on monitoring alerts.
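The handover rule can be modelled the same way. In this hypothetical Python sketch (`AlertNote`, `Shift` and `hand_over` are invented names), a note telling admins to ignore an alert is accepted only with a manager's approval, and at handover only the notes the duty manager explicitly reconfirms are carried into the new shift; everything else is dropped, so the incoming admin reacts to those alerts as usual.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class AlertNote:
    """A note telling admins not to react to an alert, e.g. during a load test."""
    alert: str
    text: str
    approved_by: Optional[str] = None  # manager who confirmed the note


class Shift:
    def __init__(self, duty_manager: str) -> None:
        self.duty_manager = duty_manager
        self.notes: List[AlertNote] = []

    def add_note(self, note: AlertNote) -> None:
        # Ignoring a critical alert always needs a manager's confirmation.
        if note.approved_by is None:
            raise PermissionError("a manager must confirm before an alert is ignored")
        self.notes.append(note)

    def should_ignore(self, alert: str) -> bool:
        return any(note.alert == alert for note in self.notes)


def hand_over(old: Shift, duty_manager: str, reconfirmed: Set[str]) -> Shift:
    """Start the next shift, carrying over only notes the duty manager reconfirms."""
    new = Shift(duty_manager)
    for note in old.notes:
        if note.alert in reconfirmed:
            new.add_note(AlertNote(note.alert, note.text, approved_by=duty_manager))
    return new
```

In the Cyber Tuesday case, the morning "this is a load test" note would not have survived into the evening shift unless the duty manager had consciously reconfirmed it.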

Interaction errors

October 5, 2017: our third big screw-up.

An employee of our monitoring development department asked the administrator on duty to turn off a module on a client's production server. Sales through the mobile app stopped for six hours.

What exactly happened?

Everything followed the protocol, but this time there was an interaction error.

When the developer came and told him to turn off the module in production, the admin assumed that since the developer said it so confidently, he must already have cleared it with both the manager and the client.

The developer assumed that the admin was the responsible person: you ask him to turn it off, he checks with the manager and the client, and if everything is fine, he turns it off.

As a result, the module was simply switched off without asking anyone. It went pretty badly.

Why did this happen and what conclusions should we draw?

The administrator on duty expected that the developer had already agreed the shutdown with everyone, and the developer thought that the administrator would get it approved.

Any planned action must be explicitly confirmed; do not assume that someone else has asked. The developer should have sent the request through the manager, who would confirm it with the client and then pass it on to the admin.
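The fix for this case is an explicit approval chain rather than an assumption about who asked whom. A hypothetical Python sketch (`ChangeRequest`, `REQUIRED_CHAIN` and `execute` are invented for illustration): a request records approvals in the order manager, then client, and the admin's side refuses to act until the chain is complete, no matter who asks in person.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical approval order: the manager first, then the client.
REQUIRED_CHAIN: List[str] = ["manager", "client"]


@dataclass
class ChangeRequest:
    action: str
    requested_by: str
    approvals: List[str] = field(default_factory=list)  # roles, in order

    def approve(self, role: str) -> None:
        step = len(self.approvals)
        if step >= len(REQUIRED_CHAIN):
            raise ValueError("request is already fully approved")
        if role != REQUIRED_CHAIN[step]:
            raise PermissionError(
                f"next approval must come from {REQUIRED_CHAIN[step]}, not {role}")
        self.approvals.append(role)

    def ready_for_admin(self) -> bool:
        return self.approvals == REQUIRED_CHAIN


def execute(request: ChangeRequest) -> str:
    # The admin acts only on fully approved requests.
    if not request.ready_for_admin():
        raise PermissionError(f"not approved yet: {request.action}")
    return f"executing: {request.action}"
```

With this in place, the developer's request to turn off the module would have stopped at the admin until both the manager and the client had signed off.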

Conclusion

Errors are inevitable; anyone can make a mistake. We handle a very dense stream of tasks and have to switch between them constantly. We try to learn from our mistakes and never repeat them.

Most problems have happened before and have ready-made solutions, but sometimes something unexpected happens. To cope with that better, we have appointed four team leads in the support department. They have been with us a long time, and we know they make fewer mistakes. All standard situations are handled by the admin groups using standard protocols; if something completely incomprehensible happens, the admins report to the team leads, who decide on the spot together with the client.

Source: https://habr.com/ru/post/341194/
