Localizing comments in code: a Yandex talk

As the Maps API entered the international market, we decided to stop writing code comments in Russian. At the same time, these comments are the source for the API references that are compiled and published on our portal, and we did not want to drop the Russian references. In this talk, Olesya Gorbacheva and Maxim Gorkunov explain how Yandex technical writers, together with the Maps API developers, changed the language of the comments and set up simultaneous support for references and examples in two languages.

- My name is Olesya, and I document the Maps API. I am not speaking alone: with me is my colleague Maxim, who is responsible for localization tools at Yandex.

Today we will talk about how we changed the language of the comments in the Maps JS API code. What did we do? We replaced every Russian comment in the code with an English one. We will explain why we had to translate the comments, what made this difficult, and how it affected our system for autogenerating documentation from code. These were not just any comments but documentation comments, from which we build and publish the API references at Yandex. Besides the documentation and localization services, the Maps API team worked on this task too: these cheerful folks were its customers and the heroes of the occasion.

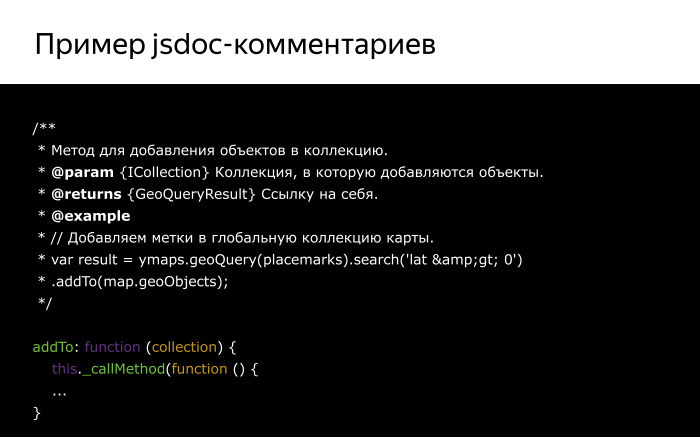

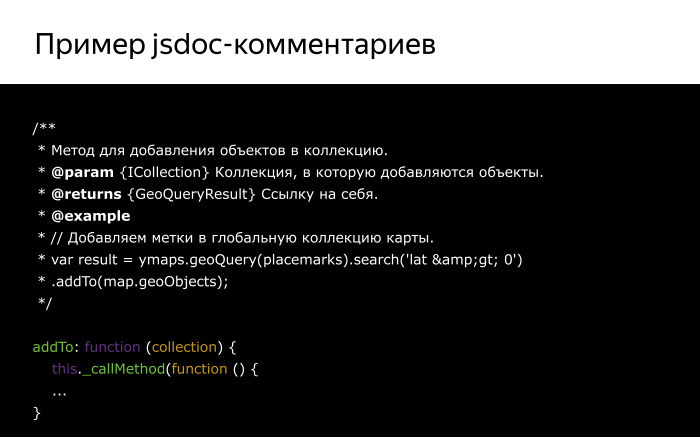

How did the task of translating the code comments come about? Let's go back to when the code was still documented in Russian. Last year, at the previous Hyperbaton, I told you that we use the JSDoc tool to document the code.

The developers marked up the code in Russian according to JSDoc conventions. When needed, I proofread these comments, made edits and committed them to the code.

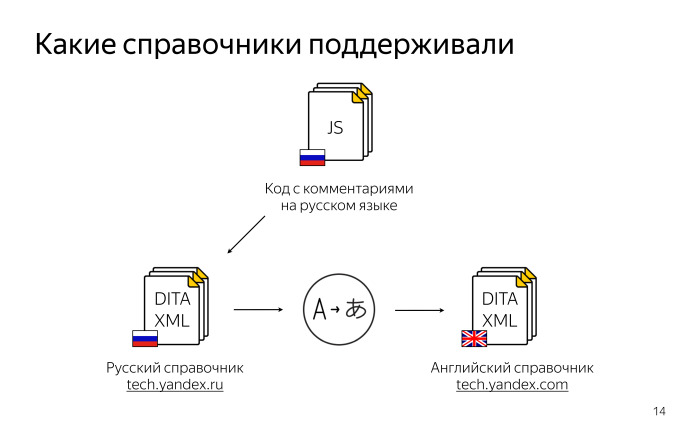

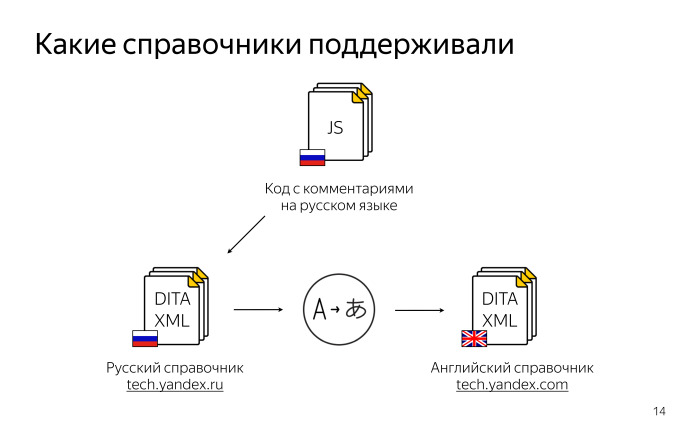

What references did we support? First of all, the Russian one, built from the code with Russian comments. Its output is DITA XML, the format in which we represent our documentation; for more about it, see Lesha Mironov's talk. Here is an example of a compiled reference, which can be viewed here.

We supported not only the Russian reference but also an English one. We sent the compiled Russian reference for translation and received an English reference back. It was published as well, but on the .com domain.

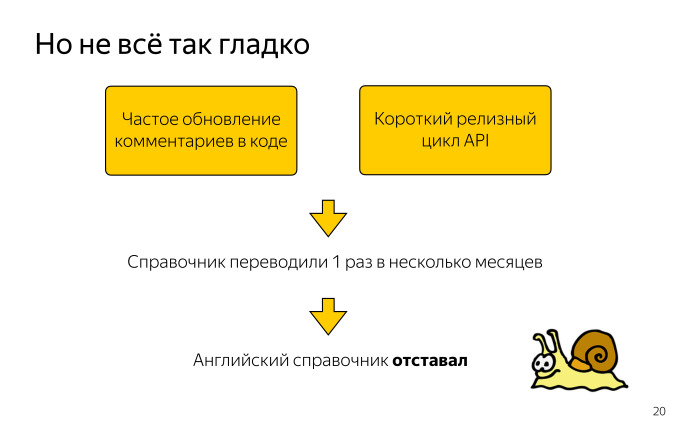

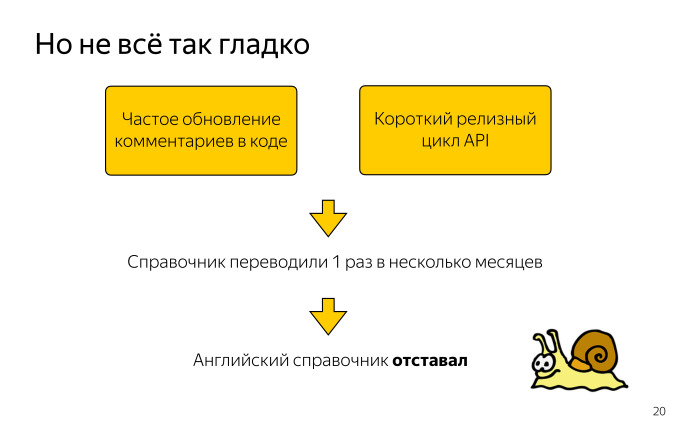

The English reference had a problem. Comments are edited quite often, and the API has a rather short release cycle. While we could publish the Russian reference quickly, even daily, we could not afford to send it for translation every day.

So we accumulated a batch of changes and sent the reference for translation every few months, and the English reference lagged behind the Russian one. At the time, the Russian documentation had higher priority, so this was acceptable for us.

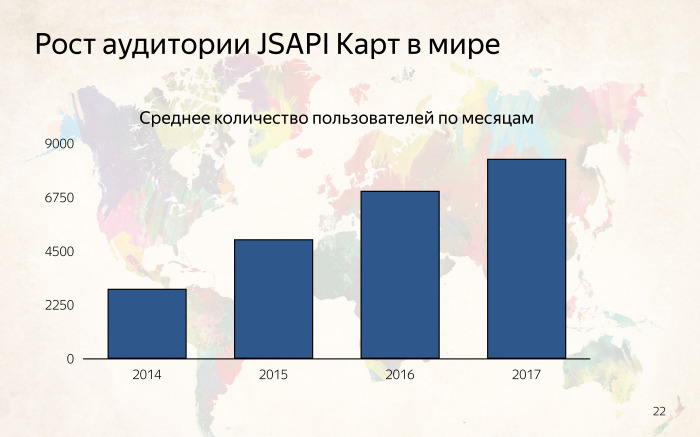

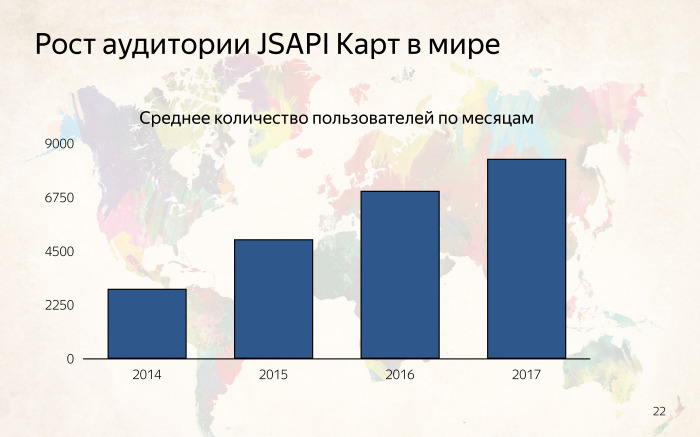

Over time, the Maps API evolved, and its audience around the world grew significantly.

People from different parts of the world began writing to us more often, asking about using the Maps API and about the documentation, including why the English version was outdated. About half a year ago, we started thinking that, first, Yandex is an international company and the Maps API is a large-scale project, and any user in the world can open the code and see Russian comments. That is not right.

In addition, the developers had started releasing external modules for working with the API, hosted on GitHub, whose documentation is written in English from the start. And let's not forget the backlog of the English reference. Weighing all this, we decided to translate all the comments into English and to write new ones in English from then on.

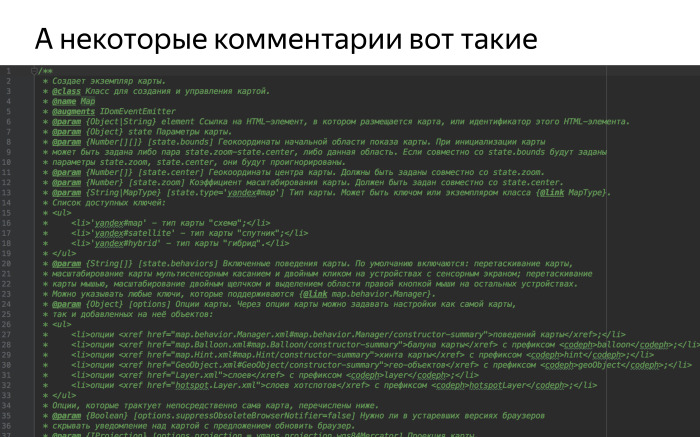

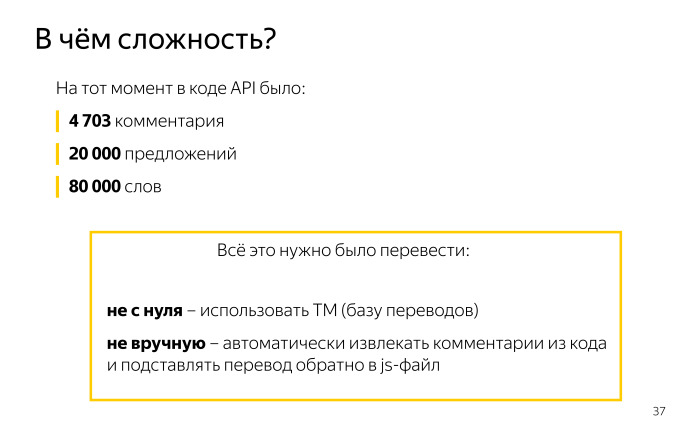

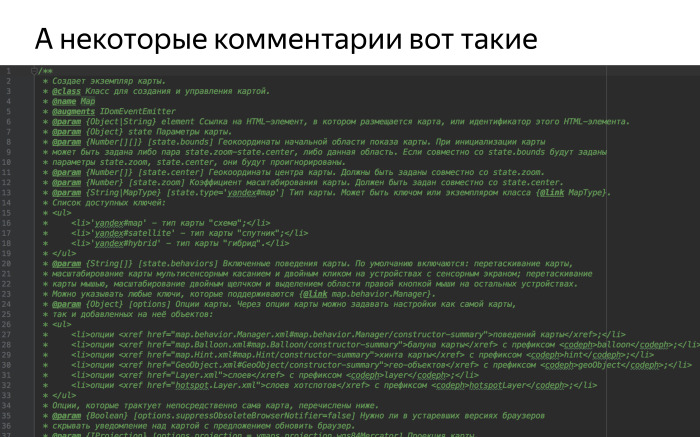

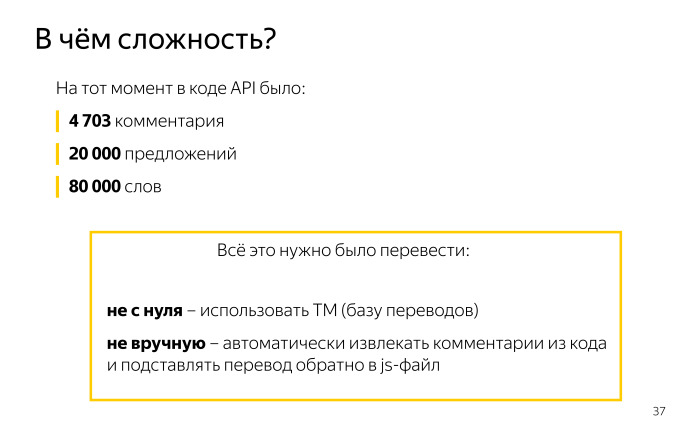

What made the task difficult? We counted exactly 4703 comments in the Maps API code at the time. Some of them are quite large: this whole piece is a single comment. Not all of them are that long, but still.

All of this had to be translated, and not from scratch. Over many years of translating the reference, we had accumulated a huge translation memory (TM), which we could use only when translating ordinary XML documents. We wanted to learn how to use this memory to translate code comments.

We did not want to translate manually, copying 4700 comments out of the code, translating them and copying them back. We wanted to extract the comments automatically and paste the translations back in their places, preserving the skeleton of the JS file and all the JSDoc tags and their validity, without breaking the code itself. Maxim will tell you how we did it.
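
The extract-and-reinsert round trip can be illustrated in a few lines of Python. This is a minimal sketch, not the Okapi-based pipeline described below: it assumes documentation comments are `/** ... */` blocks and that `/**` never occurs inside a string literal, and the names `extract_doc_blocks` and `replace_doc_blocks` are hypothetical.

```python
import re

# Documentation blocks: "/**" ... "*/", matched lazily across lines.
# Simplification: assumes "/**" never appears inside a string literal.
DOC_BLOCK = re.compile(r"/\*\*.*?\*/", re.DOTALL)


def extract_doc_blocks(source: str) -> list[str]:
    """Return every /** ... */ block in order of appearance."""
    return DOC_BLOCK.findall(source)


def replace_doc_blocks(source: str, translations: list[str]) -> str:
    """Swap each block for its translation; code between blocks is untouched."""
    if len(translations) != len(DOC_BLOCK.findall(source)):
        raise ValueError("one translation is required per documentation block")
    replacements = iter(translations)
    # A function replacement is inserted literally (no backslash escapes).
    return DOC_BLOCK.sub(lambda _match: next(replacements), source)
```

Because only the matched blocks are substituted, every character of code outside them is carried over unchanged; refusing a mismatched number of translations guards against silently shifting comments onto the wrong functions.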

- Good afternoon. I will add some technical details and tell you how this task looked from a localization engineer's point of view.

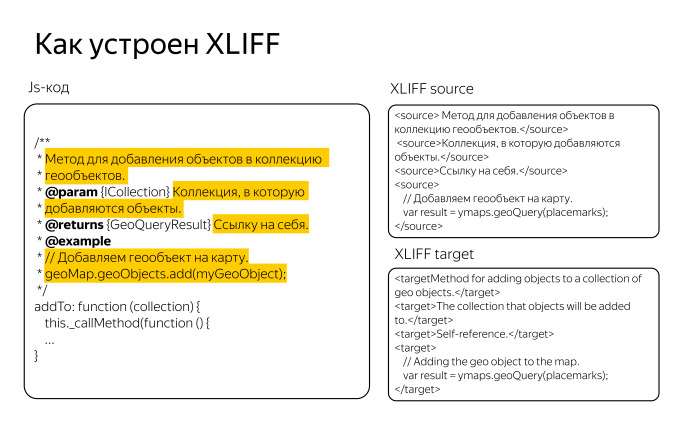

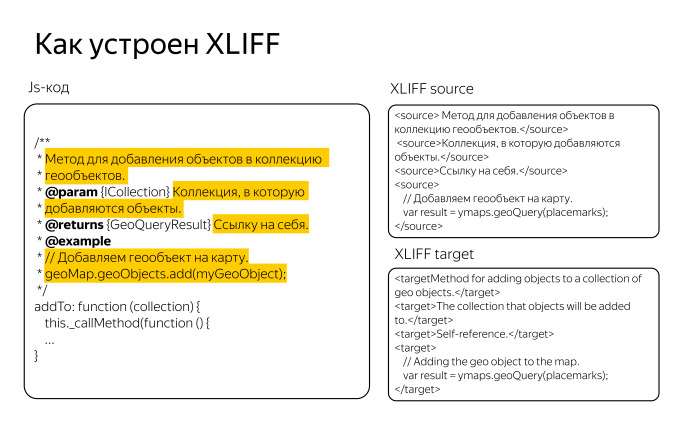

We had to find all the descriptions of classes, objects and methods in the JS code, plus the text descriptions of all parameters, and present all this text as an XLIFF file. At Yandex we aim to translate everything via XLIFF: we have processes for it, translation farms and a translation memory (TM) server. In effect, we had to turn all the JS scripts into XLIFF and translate it in a CAT tool. For this we used the Okapi Framework, a powerful open-source toolkit that we use to automate localization tasks.

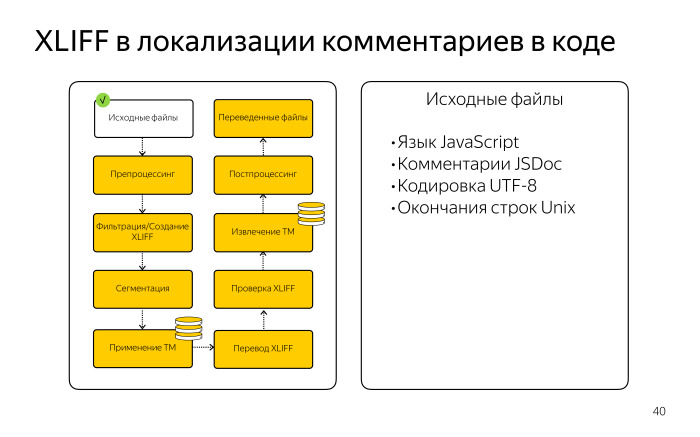

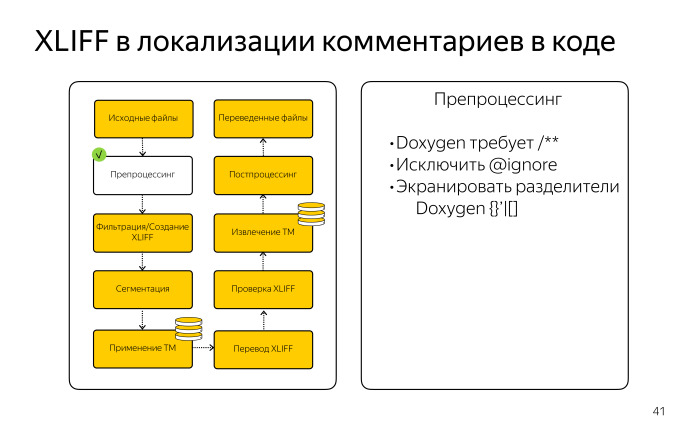

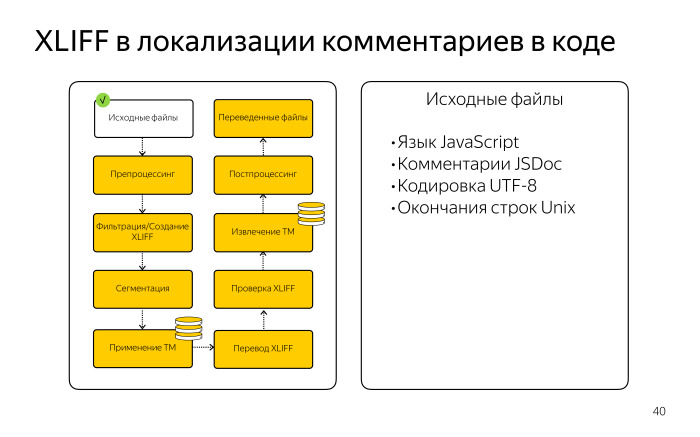

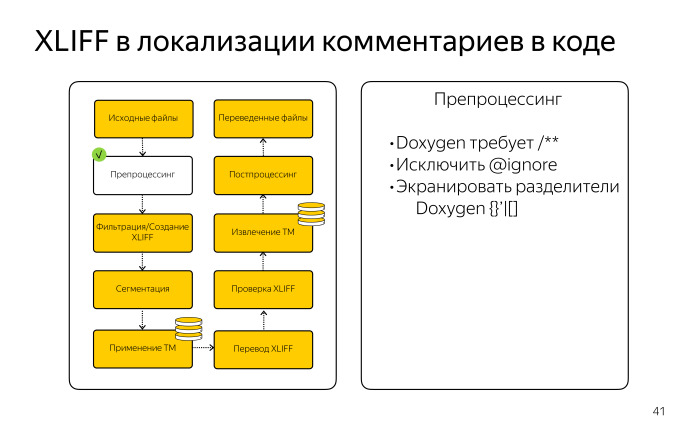

We took the original JS file from the repository and made sure it was UTF-8 encoded; consistent line endings also matter for the CAT tool to work correctly.
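
A normalization step of this kind can be sketched as follows. Assumptions: the input must already be UTF-8 (decoding fails loudly otherwise rather than guessing another encoding), LF is the target line ending, and `normalize_js` is a hypothetical name.

```python
def normalize_js(raw: bytes) -> str:
    """Decode a JS file as UTF-8 and normalize its line endings to LF."""
    # "utf-8-sig" also strips a leading byte order mark if one is present;
    # invalid UTF-8 raises UnicodeDecodeError instead of being replaced.
    text = raw.decode("utf-8-sig")
    # Convert Windows (CRLF) first, then any stray old-Mac (CR) endings.
    return text.replace("\r\n", "\n").replace("\r", "\n")
```

Replacing CRLF before lone CR matters: doing it in the other order would turn every CRLF into two line breaks.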

Then we had to prepare the files so that one of the Okapi filters could be applied. Although JSDoc is the most powerful tool for documenting JS files, Okapi has no ready-made filter for it, so we had to find a workaround. We used the closest Okapi filter, the one that extracts Doxygen comments. Doxygen is another code documentation system, used mainly for comments in C++ and Java and possibly in Python.

The comment formats are very similar. Essentially, we had to disguise the JS files as the kind of input the Doxygen filter expected. The differences were mainly in asterisks and slashes: for example, a comment needs three slashes rather than two, and two asterisks rather than one. We modified the code slightly. From a programmer's point of view it became invalid, but since these filters do not parse the code and treat it as plain text, that did not matter to us.
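
A minimal sketch of such a preprocessing step, assuming the rules are literally "two slashes become three, one asterisk becomes two" and that they apply only to comments that start a line. The regular expressions and the `to_doxygen` name are hypothetical, not the Okapi Doxygen filter's documented requirements. The function also records which lines it changed so that postprocessing can undo exactly those edits.

```python
import re

# Hypothetical rewrite rules: "//" -> "///" and "/*" -> "/**",
# only when the comment opens the line (after optional indentation).
LINE_COMMENT = re.compile(r"^(\s*)//(?!/)")
BLOCK_COMMENT = re.compile(r"^(\s*)/\*(?!\*)")


def to_doxygen(source: str) -> tuple[str, list[int]]:
    """Rewrite comment openers; return new text and indices of changed lines."""
    out: list[str] = []
    changed: list[int] = []
    for i, line in enumerate(source.split("\n")):
        new = LINE_COMMENT.sub(r"\1///", line, count=1)
        new = BLOCK_COMMENT.sub(r"\1/**", new, count=1)
        if new != line:
            changed.append(i)
        out.append(new)
    return "\n".join(out), changed
```

Comments that trail code on the same line are deliberately left alone in this sketch; the output is no longer meant to be run, only read as text by the filter.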

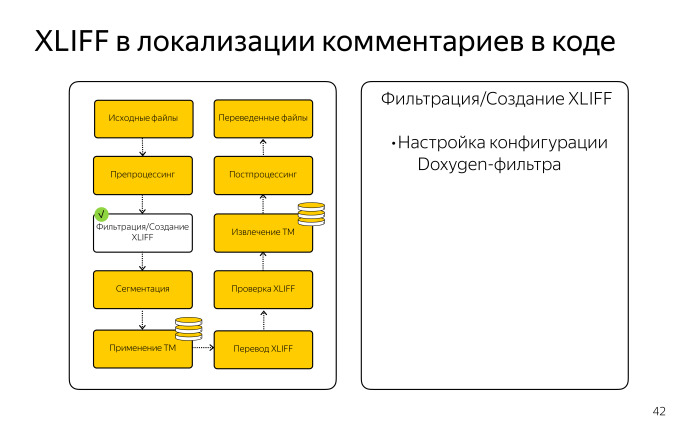

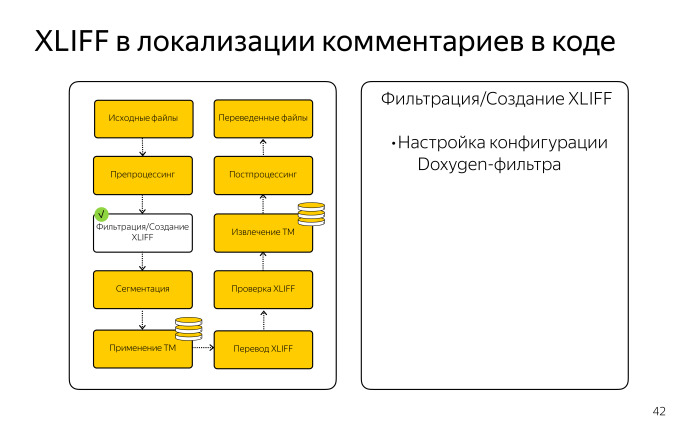

We set up the filter configuration and registered all the JSDoc tags so that only the text would be extracted. We were not interested in programmers' service markup, only in the Russian text.
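
In Okapi this is done through the filter configuration; the same idea in plain Python looks roughly like this. The sketch assumes a hypothetical subset of tags: `@param`/`@property` (type, name, text) and `@returns`/`@throws` (type, text). Types, names and all other tags stay in the skeleton and never reach the translator. Single-line `/** ... */` blocks are not handled.

```python
import re

# Hypothetical subset of JSDoc tags whose trailing text is translatable.
NAMED_TAG = re.compile(r"^@(?:param|property)\s+\{[^}]*\}\s+\S+\s+(.+)$")
TYPED_TAG = re.compile(r"^@(?:returns?|throws)\s+\{[^}]*\}\s+(.+)$")


def translatable_segments(block: str) -> list[str]:
    """Return only the human-readable text of a multi-line /** ... */ block."""
    texts: list[str] = []
    for raw in block.split("\n"):
        line = raw.strip()
        # Skip the comment frame itself.
        if line in ("/**", "*/"):
            continue
        # Drop the leading " * " that frames every inner line.
        line = line.lstrip("*").strip()
        if not line:
            continue
        if line.startswith("@"):
            match = NAMED_TAG.match(line) or TYPED_TAG.match(line)
            if match:
                texts.append(match.group(1))
            # Other tags (@class, @static, ...) carry no translatable text.
        else:
            texts.append(line)
    return texts
```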

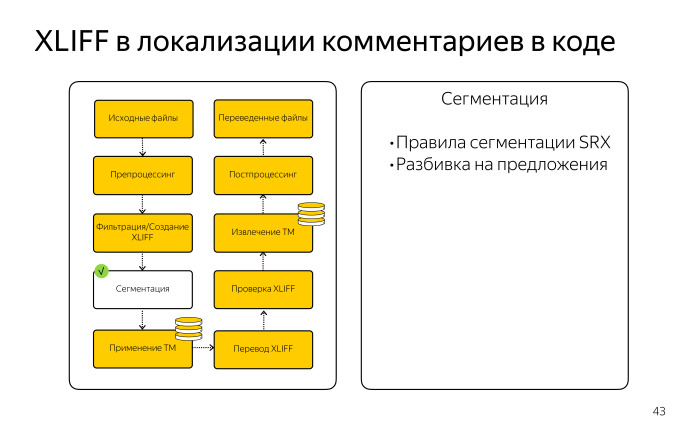

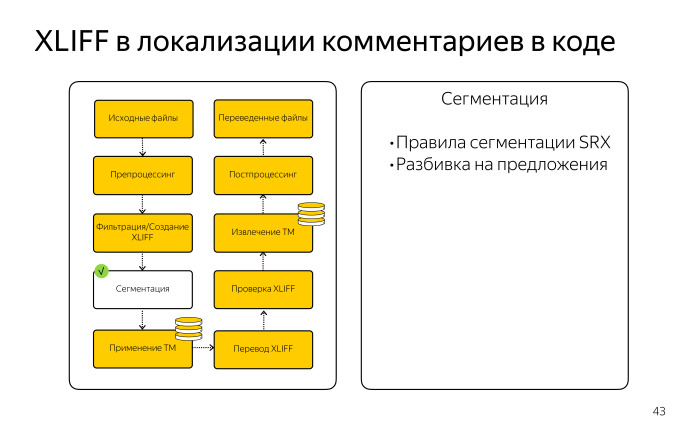

Next we built a pipeline, which in Okapi Framework terminology is a sequence of steps leading to the creation of XLIFF. Then came the segmentation step. We have rules for Russian in the form of SRX files, which break large blocks of text into separate sentences. This raises the percentage of TM matches, since the memory fragments become smaller.
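
Real SRX files are XML rule sets of break and no-break regular expressions; the function below only approximates that behavior to show why segmentation helps TM matching. It breaks after `.`, `!` or `?` followed by whitespace and a capital Latin or Cyrillic letter, and it honors a small, hypothetical list of abbreviations, much as SRX no-break rules would.

```python
import re

# Break after ., ! or ? when whitespace and a capital letter follow.
BREAK = re.compile(r"(?<=[.!?])\s+(?=[A-ZА-ЯЁ])")
# Hypothetical no-break exceptions (abbreviations that end with a period).
NO_BREAK_AFTER = ("e.g.", "i.e.", "см.", "напр.")


def segment(text: str) -> list[str]:
    """Split a comment into sentence-sized segments."""
    segments: list[str] = []
    for part in BREAK.split(text.strip()):
        if segments and segments[-1].endswith(NO_BREAK_AFTER):
            # Undo a break after an abbreviation (whitespace becomes one space).
            segments[-1] += " " + part
        else:
            segments.append(part)
    return segments
```

Two short sentences that each already exist in the TM produce two exact matches, whereas the same text kept as one block might produce only a fuzzy match or none.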

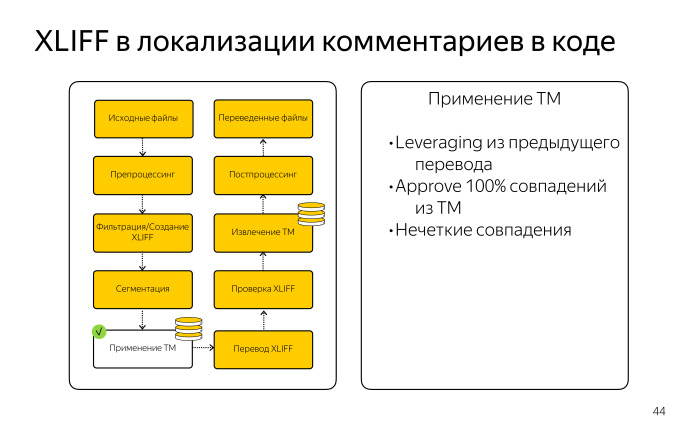

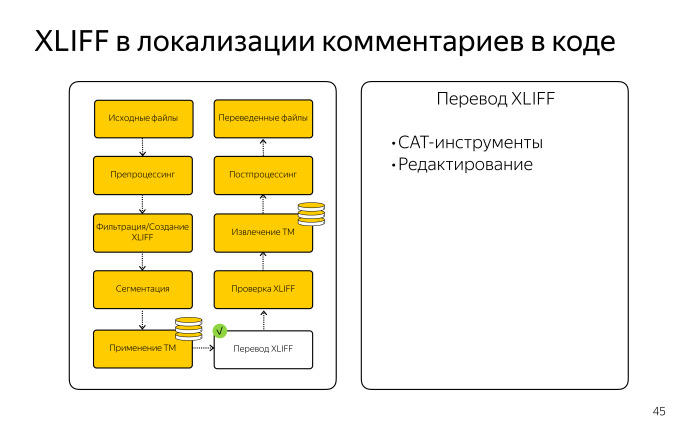

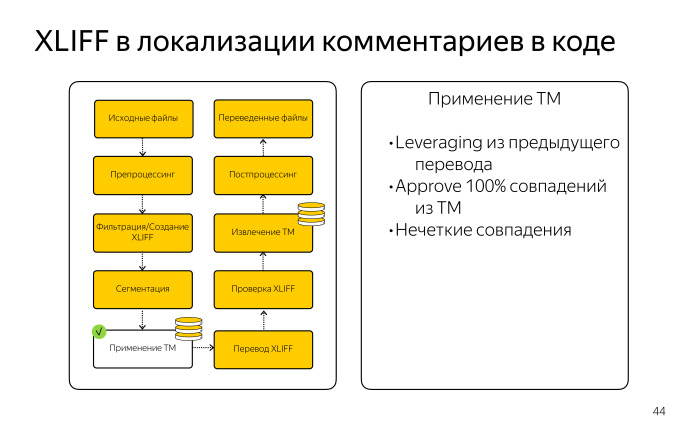

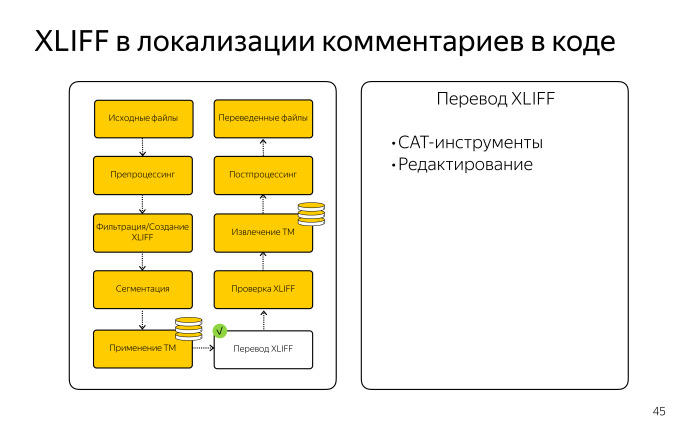

Then we applied the existing TM left over from the reference, got fuzzy and exact matches, and passed the huge XLIFF file to the CAT tool.
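
TM leveraging can be illustrated with the standard library's `difflib.SequenceMatcher`. This is a stand-in, not the scoring used by Okapi or a TM server: real tools use their own fuzzy-match algorithms, and the 0.75 threshold below is an arbitrary assumption.

```python
from difflib import SequenceMatcher


def lookup(segment: str, tm: dict[str, str], threshold: float = 0.75):
    """Return (match_type, score, translation) for the best TM entry."""
    # Exact match: the segment was translated before, verbatim.
    if segment in tm:
        return "exact", 1.0, tm[segment]
    best_score, best_target = 0.0, None
    for source, target in tm.items():
        score = SequenceMatcher(None, segment, source).ratio()
        if score > best_score:
            best_score, best_target = score, target
    # Fuzzy match: similar enough to be offered to the translator for editing.
    if best_score >= threshold:
        return "fuzzy", best_score, best_target
    return "none", best_score, None
```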

We used XLIFF here because it works well with Okapi, and these XLIFF files can be translated in an online tool.
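
For reference, a minimal XLIFF 1.2 document with one `trans-unit` per segment can be built with `xml.etree.ElementTree`. The XLIFF that Okapi writes carries much more (skeleton references, inline codes, match metadata); the default file name, `datatype` and language codes below are placeholders.

```python
import xml.etree.ElementTree as ET
from typing import Optional

XLIFF_NS = "urn:oasis:names:tc:xliff:document:1.2"


def build_xliff(segments: list[tuple[str, Optional[str]]],
                original: str = "map.js",
                src: str = "ru-RU", tgt: str = "en-US") -> str:
    """Serialize (source, target-or-None) pairs as a minimal XLIFF 1.2 file."""
    ET.register_namespace("", XLIFF_NS)  # write the namespace as the default
    root = ET.Element(f"{{{XLIFF_NS}}}xliff", version="1.2")
    file_el = ET.SubElement(root, f"{{{XLIFF_NS}}}file", {
        "original": original, "source-language": src,
        "target-language": tgt, "datatype": "plaintext"})
    body = ET.SubElement(file_el, f"{{{XLIFF_NS}}}body")
    for i, (source, target) in enumerate(segments, start=1):
        unit = ET.SubElement(body, f"{{{XLIFF_NS}}}trans-unit", id=str(i))
        ET.SubElement(unit, f"{{{XLIFF_NS}}}source").text = source
        # Untranslated segments simply have no <target> yet.
        if target is not None:
            ET.SubElement(unit, f"{{{XLIFF_NS}}}target").text = target
    return ET.tostring(root, encoding="unicode")
```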

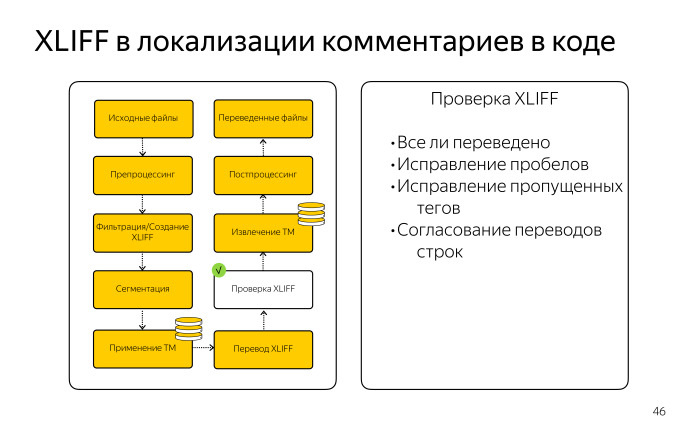

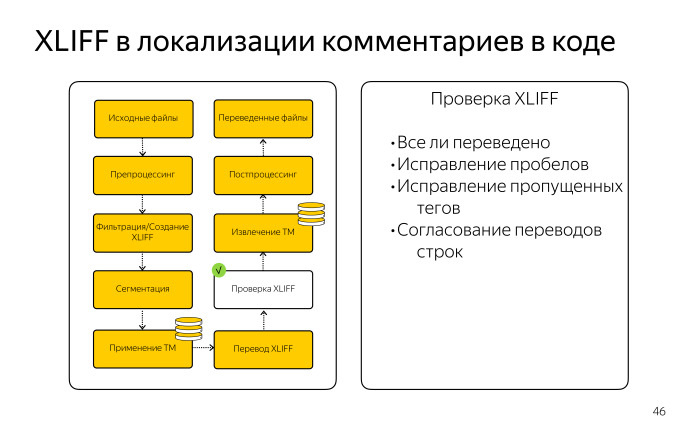

Then we had to edit the resulting translation and check that everything had landed in the right place. This was rather difficult because of the specific structure of the comments and the asterisks framing each line. We had to make sure that if the source had five lines, the translation also had exactly five lines; otherwise the whole block would fall apart and the formatting would break. To do this, we wrote a special patch that restored missing spaces and line breaks, and it also carefully checked the inline tags. If something was missing, it was substituted from the source.
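
The checks described above can be sketched as follows. This is an assumption about what such a patch might look like, not the code that was actually used: it compares line counts, reports JSDoc inline tags such as `{@link ...}` that are missing from the translation, and copies leading and trailing whitespace from the source. `qa_problems` and `fix_whitespace` are hypothetical names.

```python
import re
from collections import Counter

# JSDoc inline tags such as {@link Map#setCenter}.
INLINE_TAG = re.compile(r"\{@\w+[^}]*\}")


def fix_whitespace(source: str, target: str) -> str:
    """Copy the source's leading and trailing whitespace onto the target."""
    if not source.strip():
        return source  # nothing translatable; keep the source as-is
    lead = source[: len(source) - len(source.lstrip())]
    trail = source[len(source.rstrip()):]
    return lead + target.strip() + trail


def qa_problems(source: str, target: str) -> list[str]:
    """List structural differences that would break the comment layout."""
    problems: list[str] = []
    src_lines = source.count("\n") + 1
    tgt_lines = target.count("\n") + 1
    if src_lines != tgt_lines:
        problems.append(f"line count: {src_lines} vs {tgt_lines}")
    # Counter subtraction keeps only tags that occur fewer times in the target.
    missing = Counter(INLINE_TAG.findall(source)) - Counter(INLINE_TAG.findall(target))
    for tag in sorted(missing.elements()):
        problems.append(f"missing inline tag: {tag}")
    return problems
```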

Then we exported the resulting TM for further use.
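
Exporting a translation memory usually means writing TMX. The sketch below produces a minimal TMX 1.4 file from (Russian, English) pairs; the `creationtool`, `creationtoolversion` and `o-tmf` header values are placeholders, and the language codes are assumptions.

```python
import xml.etree.ElementTree as ET

# ElementTree serializes this qualified name as the reserved "xml:lang".
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"


def build_tmx(pairs: list[tuple[str, str]],
              src_lang: str = "ru-RU", tgt_lang: str = "en-US") -> str:
    """Serialize (source, target) pairs as a minimal TMX 1.4 document."""
    root = ET.Element("tmx", version="1.4")
    ET.SubElement(root, "header", {
        "creationtool": "sketch", "creationtoolversion": "0.1",
        "segtype": "sentence", "o-tmf": "none", "adminlang": "en-US",
        "srclang": src_lang, "datatype": "plaintext"})
    body = ET.SubElement(root, "body")
    for source, target in pairs:
        unit = ET.SubElement(body, "tu")
        # One <tuv> per language, each holding the segment text.
        for lang, text in ((src_lang, source), (tgt_lang, target)):
            variant = ET.SubElement(unit, "tuv", {XML_LANG: lang})
            ET.SubElement(variant, "seg").text = text
    return ET.tostring(root, encoding="unicode")
```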

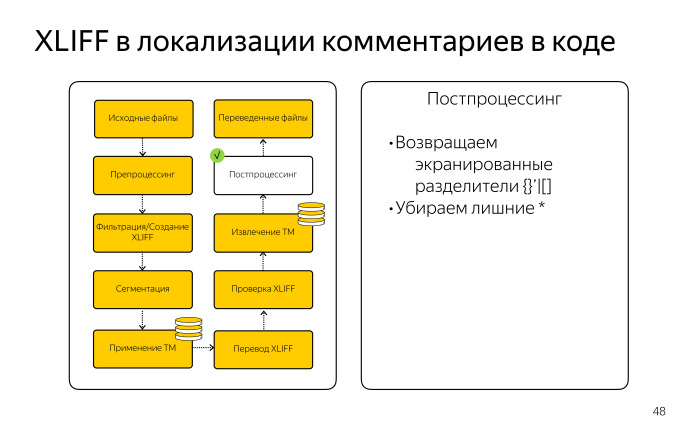

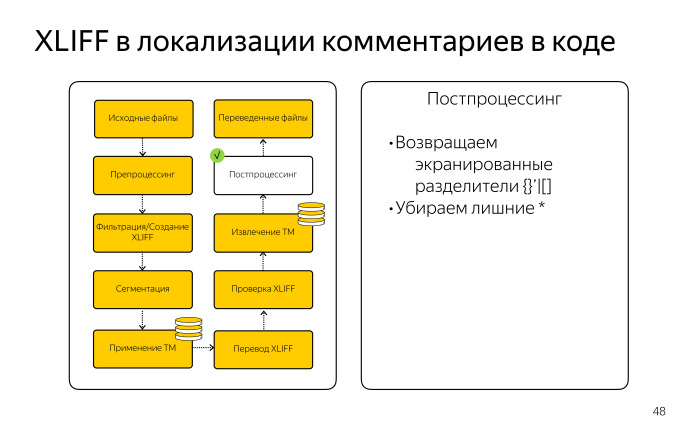

We needed postprocessing, the exact mirror of the preprocessing: we had to get back valid JS files by removing everything that had been added for the Doxygen filter to work.
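
A mirror of a hypothetical preprocessing step that turned `//` into `///` and `/*` into `/**` might look like this. It assumes the preprocessing recorded the indices of the lines it changed; restoring only those lines keeps any genuine `///` or `/**` comments elsewhere in the file intact. `from_doxygen` is a hypothetical name.

```python
import re

# Openers added by the (hypothetical) preprocessing step.
LINE_OPENER = re.compile(r"^(\s*)///")
BLOCK_OPENER = re.compile(r"^(\s*)/\*\*")


def from_doxygen(text: str, changed_lines: list[int]) -> str:
    """Undo the comment-opener rewrite on exactly the recorded lines."""
    lines = text.split("\n")
    for i in changed_lines:
        restored = LINE_OPENER.sub(r"\1//", lines[i], count=1)
        if restored == lines[i]:
            # Not a line comment, so it must have been a block opener.
            restored = BLOCK_OPENER.sub(r"\1/*", lines[i], count=1)
        lines[i] = restored
    return "\n".join(lines)
```

This relies on the translation step preserving line counts, which is exactly what the line-count check during editing guarantees.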

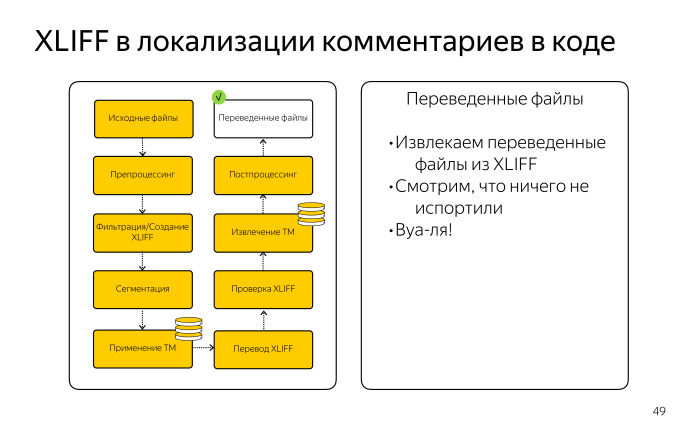

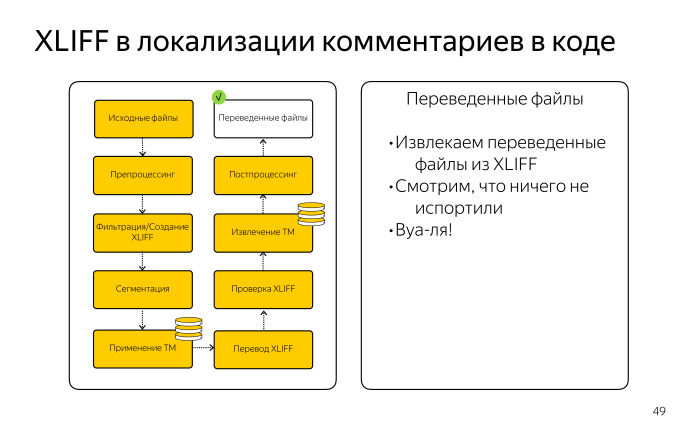

As a result, we got the same set of JS files, but with all the comments in English. The Maps team developers wrote tests and made sure that all our changes occurred only where comments were located, that is, that we had not broken any code. That was quite an adventure.
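
One way to write such a test is to strip all comments from each file before and after translation and compare what remains. The tokenizer below is a simplified sketch, not the Maps team's actual test: it handles `//` and `/* */` comments and skips over string literals (so a URL such as `"http://..."` is not mistaken for a comment), but it does not handle regular-expression literals or `${...}` expressions inside template literals.

```python
def strip_comments(js: str) -> str:
    """Remove // and /* */ comments from JS source, leaving strings intact."""
    out: list[str] = []
    i, n = 0, len(js)
    quote = None  # delimiter of the string literal we are inside, if any
    while i < n:
        ch = js[i]
        if quote:
            out.append(ch)
            if ch == "\\" and i + 1 < n:
                out.append(js[i + 1])  # keep the escaped character verbatim
                i += 2
                continue
            if ch == quote:
                quote = None
            i += 1
        elif ch in "'\"`":
            quote = ch
            out.append(ch)
            i += 1
        elif js.startswith("//", i):
            end = js.find("\n", i)
            i = n if end == -1 else end  # keep the newline itself
        elif js.startswith("/*", i):
            end = js.find("*/", i + 2)
            i = n if end == -1 else end + 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def only_comments_changed(before: str, after: str) -> bool:
    """True if the two files differ only inside comments."""
    return strip_comments(before) == strip_comments(after)
```

Because whole block comments are removed, a translated comment may even change length or line count without making the test fail; only changes to actual code do.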

- As a demonstration, here is a fragment of the current source code. But the task was not fully solved yet. We had to decide who would edit the English comments in the code and, most importantly, how.

We also had to adapt the autogeneration system, because the comment language had changed. How did we solve this? We chose our translator Amy as the editor of the English text. Amy translates virtually all the technical documentation at Yandex, including the Maps API. The Maps developers wanted to make Amy's life as simple as possible: they did not want her to track all changes to the code and comments manually, or to work with Git commands in the console herself.

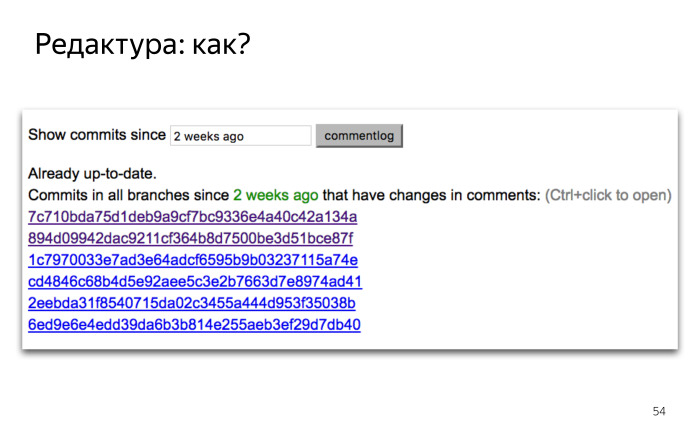

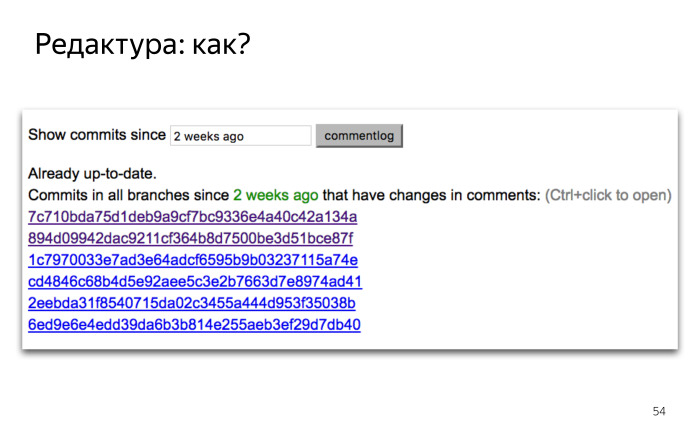

So the team wrote a simple Node.js script: Amy enters a date, and the script lists all the commits made since then that contain edits to comments.
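
The actual script was written in Node.js; the same idea is sketched here in Python. It runs `git log --since=<date> -p` and keeps the commits in which an added or removed line looks like a comment line. The "looks like a comment" heuristic (the line starts with `//`, `/*` or `*`) is an assumption and will also flag, for example, a continuation line that begins with a multiplication sign. The function names are hypothetical.

```python
import subprocess


def is_comment_line(code: str) -> bool:
    """Heuristic: does this line of JS look like part of a comment?"""
    return code.strip().startswith(("//", "/*", "*"))


def commits_with_comment_edits(log_text: str) -> list[str]:
    """Parse `git log -p` output; return hashes whose changed lines look like comments."""
    result: list[str] = []
    current, touched = None, False
    for line in log_text.splitlines():
        if line.startswith("commit "):
            if current and touched:
                result.append(current)
            current, touched = line.split()[1], False
        elif line.startswith(("+++", "---")):
            continue  # per-file headers, not content
        elif line[:1] in ("+", "-") and is_comment_line(line[1:]):
            touched = True
    if current and touched:
        result.append(current)
    return result


def git_log_since(date: str, repo: str = ".") -> str:
    """Full patch log since `date`, one 'commit <hash>' header per commit."""
    return subprocess.run(
        ["git", "log", f"--since={date}", "-p", "--format=commit %H"],
        cwd=repo, capture_output=True, text=True, check=True,
    ).stdout
```

Usage would be `commits_with_comment_edits(git_log_since("2017-01-01"))`, with the resulting hashes turned into links to the internal GitHub interface.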

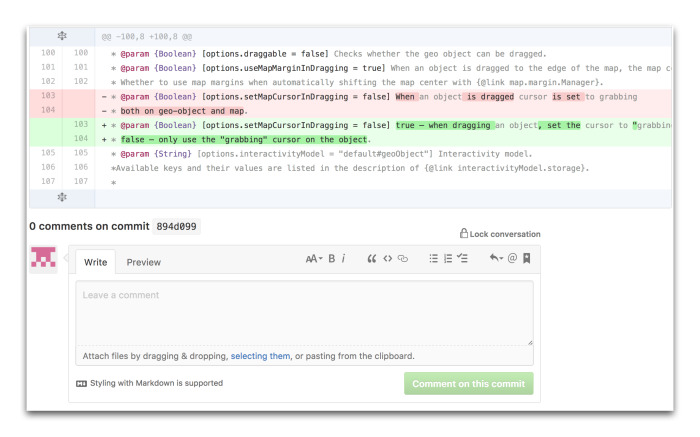

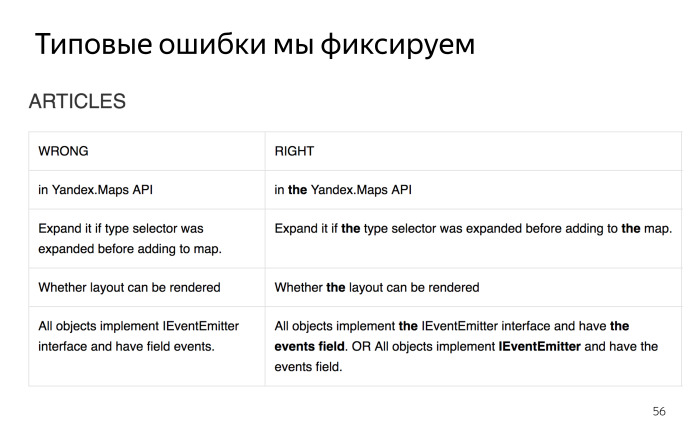

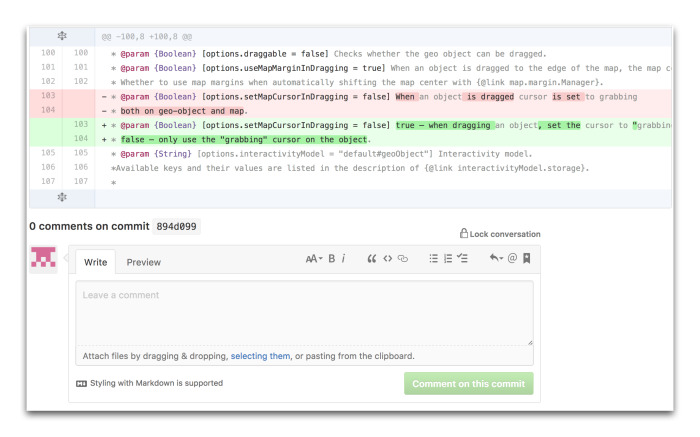

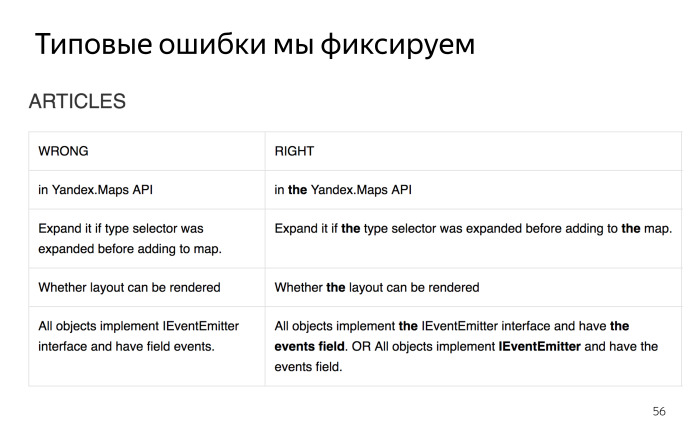

For example, Amy opens one of them and lands in the interface of our internal GitHub, where the changes made to the comment are highlighted in green. Amy proofreads the text, edits it as needed, and commits straight to the code with a button. It's simple. Of course, you need to be careful not to commit anything extra. While reading the code, Amy also records the typical errors we make, that is, the mistakes we make when writing technical English. Here is an example with article errors; there are many more such tables. This is a good way to avoid similar mistakes in the future.

How did we set up and adapt the autogeneration system? Let's go back and imagine that the code comments are in Russian. How were the API references built before?

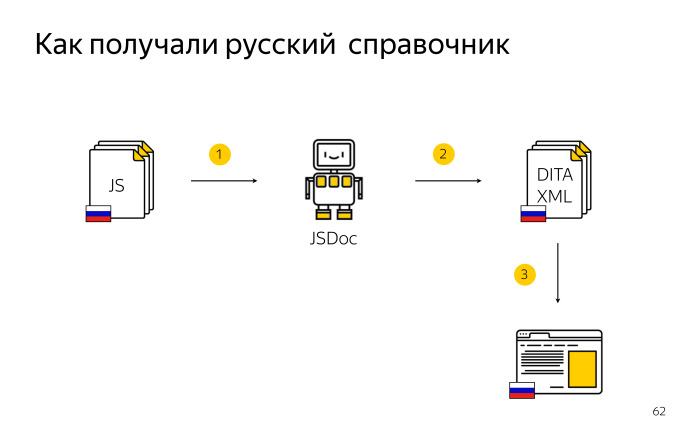

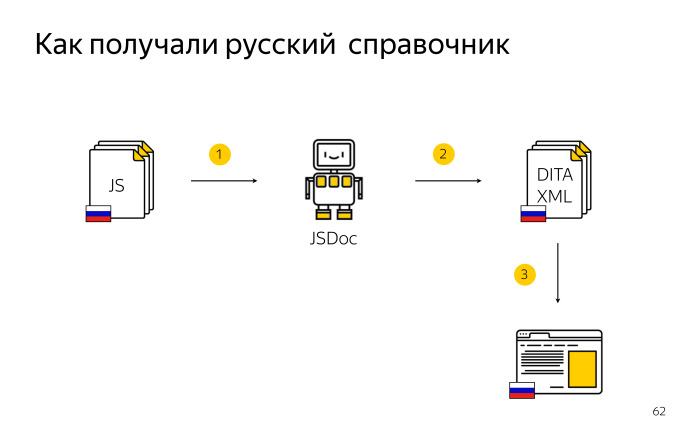

To build the Russian reference, we ran the API source code with Russian comments through JSDoc. After a while, JSDoc produced the required DITA XML, a ready reference, which we published on our .ru domain. Simple.

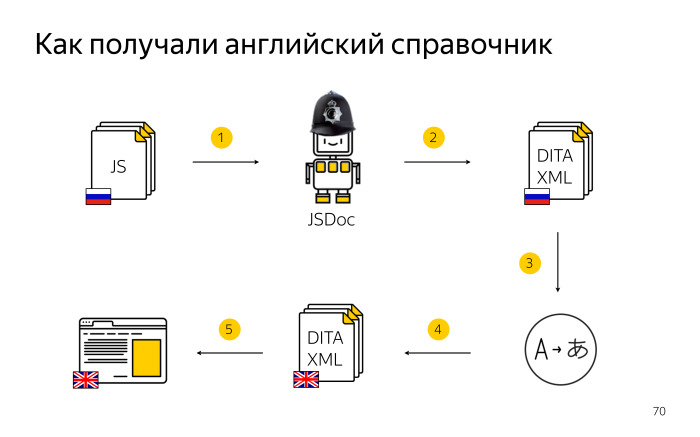

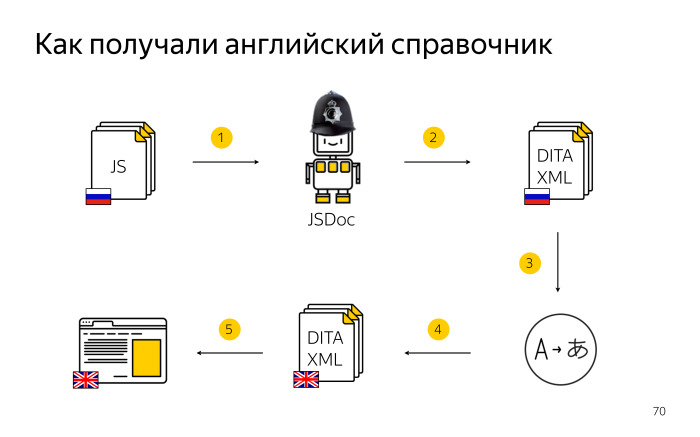

How did we get the English reference from Russian comments?

We took the Russian-commented code and ran it through JSDoc again in a separate pass, but with a special template localized into English: for example, the table headings were already in English. On the slides, I mark this template with an English helmet. The English JSDoc produced DITA XML that still contained the Russian descriptions, and we sent it for translation. Amy translated it and returned English DITA XML to me, a ready reference that could be published on the .com domain.

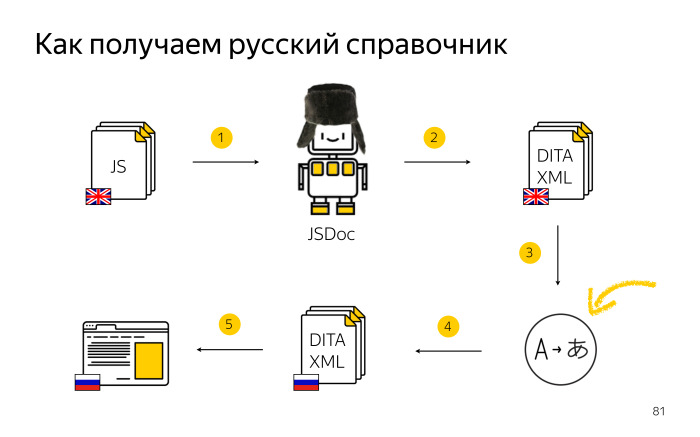

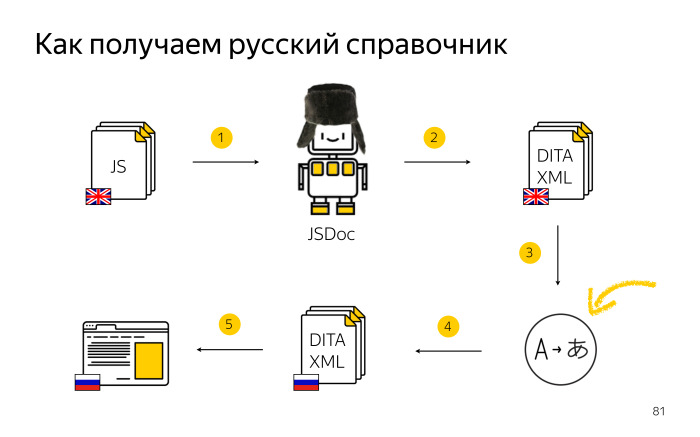

That was how things worked before. Now everything has changed: the comments are in English. How do we compile the English reference? The same way we used to compile the Russian one. We take the source code, run it through JSDoc with the English template, and get the English reference, which we publish on the .com domain. What about the Russian reference? We have code with English comments, and we have a translation memory accumulated over the years.

We had used the TM when translating from Russian into English. Then we wondered: would it work in the opposite direction, when translating from English into Russian? Amy and Max ran a series of experiments, and it turned out that it does. We can apply the TM accumulated over the years in the reverse direction.
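
Reusing the memory in the reverse direction amounts to swapping the source and target of each entry and then pretranslating the new English segments. A minimal sketch, assuming the TM is a plain Russian-to-English dictionary (a real TMX file has per-language variants and metadata); when several Russian variants share one English translation, this version simply keeps the first. `reverse_tm` and `pretranslate` are hypothetical names.

```python
def reverse_tm(tm: dict[str, str]) -> dict[str, str]:
    """Swap source and target: a ru->en memory becomes en->ru."""
    reversed_tm: dict[str, str] = {}
    for ru, en in tm.items():
        # Keep the first Russian variant seen for each English string.
        reversed_tm.setdefault(en, ru)
    return reversed_tm


def pretranslate(segments: list[str], tm_en_ru: dict[str, str]):
    """Split English segments into already-translated and still-missing ones."""
    translated = {s: tm_en_ru[s] for s in segments if s in tm_en_ru}
    missing = [s for s in segments if s not in tm_en_ru]
    return translated, missing
```

Only the `missing` list needs a translator's attention, which matches the workflow described below of translating just the fragments that have no translation yet.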

This means we now build the Russian reference the same way we used to build the English one. The key is not to get confused: we take the source code with English comments and run it through JSDoc, but with the template localized into Russian, which has Russian table headings. We send the resulting references for translation and publish them in Russian.

Here I am speaking as a translator. Every time I need to publish the Russian reference, I first compile the English one, translate the text fragments that have not been translated before in the Swordfish tool, and then we publish it. Remember the snail showing that the translated reference always lagged behind? Now the important thing is that the Russian reference no longer lags behind. Above all, turnaround time dropped because I do the translation myself: there is no need to wait in the translation queue. I open the file, translate a couple of lines and build everything myself.

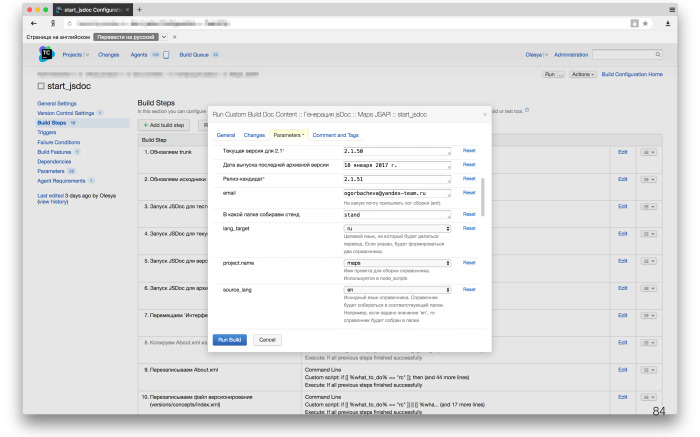

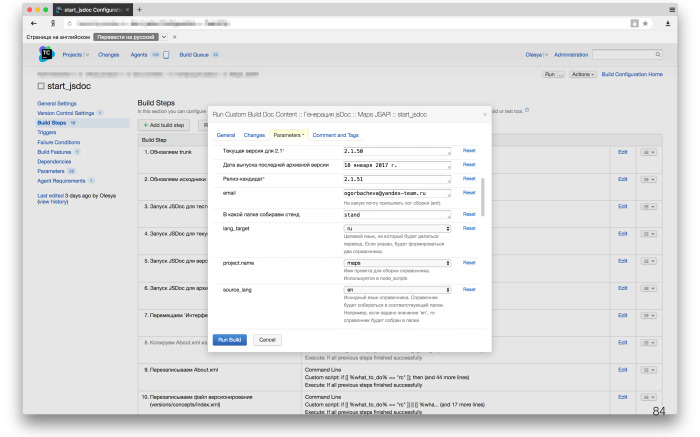

TeamCity helped us keep the references in sync. It is a JetBrains tool that lets you automate many of your project's processes. Quite powerful stuff.

Here is our internal, customized TeamCity interface, with an example build configuration for the references. You set the required parameters, such as the reference language and the version number, start the build with a button, and the necessary sources are prepared. We now also generate a PDF of the English version, which we did not do before.

What are the benefits of localizing the code comments? First, the Maps API code now contains English comments, so any developer anywhere in the world can read them. Second, we finally set up prompt publishing of the English documentation. Third, we took load off the translator: Amy no longer has to translate these references every time. Finally, if we ever need to translate the references into other languages, translating from English is much easier than from Russian.

Here is the list of tools we use in the project. Thank you!

Source: https://habr.com/ru/post/341192/
