Virtuozzo Storage: Actual Operating Experience, Optimization and Problem Solving Tips

This article focuses on the actual experience of operating clusters based on Virtuozzo Storage.

Over a year of actively deploying and using the platform on our hosting servers, and of building clusters for our clients, we have accumulated quite a few tips, comments, and recommendations. If you are considering this platform, you can draw on our experience when designing your cluster.

Our other publications

- Zabbix 2.2 riding on nginx + php-fpm and mariadb

- HAProxy for Percona or Galera on CentOS. Its configuration and monitoring in Zabbix

- “Perfect” www-cluster. Part 1. Frontend: NGINX + Keepalived (vrrp) on CentOS

- "Perfect" cluster. Part 2.1: Virtual hetzner cluster

- "Perfect" cluster. Part 2.2: Highly available and scalable web server, the best technologies to guard your business

- "Perfect" cluster. Part 3.1 Implementing MySQL Multi-Master Cluster

- Acceleration and optimization of PHP-site. What technologies should be chosen when setting up a server for PHP

- Comparison of Drupal code execution speed for PHP 5.3-5.6 and 7.0. "Battle of code optimizers" apc vs xcache vs opcache

- Bitrix Start Performance on Proxmox and Virtuozzo 7 & Virtuozzo Storage

To be or not to be? Pros and Cons of Virtuozzo

In short: to be. Here are the pros and cons we found while using Virtuozzo. The pros:

- A cluster is convenient. You can shut down a virtual machine on one server and immediately start it on another, with no data copying and no transfer time. If a server fails, you can immediately bring up all of its virtual machines on one of the free servers.

- The concept of free space on a particular server no longer exists. All servers can share space, limited only by the number of disks in the cluster and your license.

- Technical support exists and responds very promptly by phone and email.

- There is a direct and only dealer in the Russian Federation, so you can purchase licenses for a legal entity in rubles.

- Virtual machines based on vz perform quite well, and much more efficiently than on Proxmox, for example.

- There is a convenient monitoring tool that presents all the necessary information about the cluster clearly and visually. You can freely parse its data for Zabbix, Munin, or Nagios.

- Thanks to ReadyKernel, most 0-day kernel vulnerabilities are patched the same day, without rebooting the hypervisor.

- The pfcache technology saves memory on the hypervisor (see below for more on this).

- Virtuozzo Storage is, after all, a network file system, and its operation depends heavily on network performance. However, Virtuozzo lets you use a local SSD data cache for fast reads and a local SSD journal for writes. In addition, Virtuozzo Storage tries to move the data of a particular virtual machine to the hypervisor where that virtual machine is running.

- You can have several types of disks in a cluster (SSD, HDD + SSD cache, and HDD) and freely move virtual machines between them. If the fast disks run out of space, your machines will automatically start using disks of another type until space appears on the fast ones.

- Keeping disks in a local RAID is strongly discouraged. The main advantage for us is that copies of the data are stored not on one server but on several at once, which is much more reliable than RAID.

Unfortunately, there are also cons:

- It is quite expensive (compared to Ceph + KVM), especially for large projects and data volumes.

- Once or twice a month, one of the hypervisors may hang for no apparent reason.

- To physically free up space in the cluster after data is deleted inside virtual machines, you have to run the very heavy pcompact procedure (you can read about it below).

- Support tries very hard, but it cannot solve really complex issues. Such problems are usually fixed in the next update. In the meantime, you will be advised to update and reboot (in the best traditions of The IT Crowd).

- Live migration exists, but if the container uses half-open sockets, it fails with an error (so in practice it does not exist).

- Automatic migration and restart of virtual machines from a failed hypervisor exists, but it requires at least 5 servers in the cluster and a replication level of 3:2 (that is, 3 copies of each data block in the cluster). The automation does not help if a virtual machine has the status running but is actually hung.

- Virtuozzo Storage components cannot work properly when there is little completely free RAM. The ordinary Linux disk cache can bring down individual CS (the daemons that serve Virtuozzo Storage disks), and even virtual machines or the entire hypervisor.

- The Virtuozzo Automator control panel is not suitable for real work; at most it is good for viewing resource and load statistics. We have not found any alternative control panels.

- We did not find an API for automating typical operations. We had to write our own, and not everything went smoothly with it. In practice we perform typical operations through the bash console, and as a result some operations occasionally fail for no apparent reason. For example, we automated the migration of a virtual machine from one hypervisor to another as a series of simple steps: stop the container on the old hypervisor, migrate it, and start it on the target hypervisor. Sometimes the start step fails because it takes too long, and our home-grown API simply times out. It is also unclear what to do when several tasks run simultaneously on a single hypervisor.
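The slow start-up described in the last item is the kind of failure that a bounded retry loop can soften. Below is a minimal sketch, not the code we actually run: `retry_until` is a hypothetical helper name, and the number of attempts and the delay are arbitrary.

```bash
# retry_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS runs have been used up.
# Hypothetical helper for illustration; it is not part of Virtuozzo.
retry_until() {
    ru_attempts=$1
    ru_delay=$2
    shift 2
    ru_try=1
    while [ "$ru_try" -le "$ru_attempts" ]; do
        if "$@"; then
            return 0
        fi
        # Sleep only if another attempt will follow.
        if [ "$ru_try" -lt "$ru_attempts" ]; then
            sleep "$ru_delay"
        fi
        ru_try=$((ru_try + 1))
    done
    return 1
}
```

For example, `retry_until 5 30 ssh "$hp" prlctl start "$vm"` would try to start the container on the target hypervisor up to five times, 30 seconds apart. It does nothing about several tasks running on one hypervisor at once; that would need some form of locking on top.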

So, with the pros and cons more or less sorted out, here are the actual recommendations:


Installation

If you want at least some control over the installation settings, or you need to add a new node to an old cluster created, for example, six months ago, then when booting from the disk select the second item: the CLI setup.

In that case, however, you lose the nice cluster control panel.

If you want to add disks to the cluster during installation (though it is better not to), make sure they are completely wiped; otherwise the installer will show you pretty errors in the middle of the installation.

Swap and pfcache need fast SSD disks. Do not overlook this, otherwise it will be hard to redo later. While swap is more or less clear, what pfcache is and what it is for is not obvious at first. In short, in all virtual machines the directories under a specific path are scanned, all libraries and executables are hashed, and the hash log is placed on a special local virtual disk, which in turn is stored on the system partition. Then, whenever a binary is launched inside a virtual machine, the hash log (which is on disk) is consulted, and if the same binary is already running, a new copy is not loaded; a reference to the existing one in memory is created instead. Now imagine what happens if the log disk is not an SSD. And what if swap is stored there as well?

Here is how to move the log:

    service pfcached stop
    ploop umount /vz/pfcache.hdd/DiskDescriptor.xml
    mv /vz/pfcache.hdd /mnt/ssd2/
    sed '/^PFCACHE_IMAGE=/ s~.*~PFCACHE_IMAGE="/mnt/ssd2/pfcache.hdd"~' -i /etc/vz/pfcache.conf
    ploop mount -m /vz/pfcache /mnt/ssd2/pfcache.hdd/DiskDescriptor.xml
    service pfcached restart

There is plenty of information on the Internet about setting up a swap file on a fast disk and disabling the old slow swap, so we will not repeat it here. But be sure to take into account all the recommendations on disks and the network that we discuss below. Also plan for at least three servers in the cluster.

Be sure to enable automatic time synchronization during installation; if you did not, it is easy to fix:

    yum install ntp -y
    systemctl stop ntpd
    ntpdate 165.193.126.229 0.ru.pool.ntp.org 1.ru.pool.ntp.org 2.ru.pool.ntp.org 3.ru.pool.ntp.org
    systemctl start ntpd
    systemctl enable ntpd

After installation, we immediately make a number of changes to the standard sysctl kernel settings, but I do not recommend doing this without a good reason. It is better to consult support first.

    echo "fs.file-max=99999999" >> /etc/sysctl.d/99-files.conf
    echo "kernel.sysrq=1" >> /etc/sysctl.d/99-reboot.conf
    echo "kernel.panic=1" >> /etc/sysctl.d/99-reboot.conf
    sysctl -w fs.file-max=99999999
    sysctl -w kernel.panic=1
    sysctl -w kernel.sysrq=1

File limit settings, bash prompt, and screen (sudo requiretty) options:

    cat > /etc/security/limits.d/nofile.conf << 'EOL'
    root soft nofile 1048576
    root hard nofile 1048576
    * soft nofile 1048576
    * hard nofile 1048576
    * hard core 0
    EOL
    # the quoted 'EOL' keeps the here-document from turning \$ in PS1 into a plain $
    cat > /etc/profile.d/bash.sh << 'EOL'
    PS1='\[\033[01;31m\]\u\[\033[01;33m\]@\[\033[01;36m\]\h \[\033[01;33m\]\w \[\033[01;35m\]\$ \[\033[00m\] '
    EOL
    sed -i 's/Defaults\ requiretty/#Defaults\ requiretty/g' /etc/sudoers

I highly recommend having a local data cache for virtual machines; it should be on an SSD. This lets your virtual machines read data locally from the cache instead of fetching it over the network, which speeds up the cluster and the virtual machines many times over.

To do this, edit /etc/fstab. Here livelinux is the cluster name, cache=/vz/client_cache is the path to the cache on the SSD, and cachesize=50000 is its size in MB.

    vstorage://livelinux /vstorage/livelinux fuse.vstorage _netdev,cache=/vz/client_cache,cachesize=50000 0 0

Network

You need a fast network. Do not build a cluster on a 1 Gbps network: that is shooting yourself in the foot right away. Once there are many machines and a lot of data, you will have serious problems. The file system is networked: disk I/O goes over the network, and so do balancing and replication recovery. If you saturate the network to 100%, cascading failures and slowdowns begin. Never run a cluster on a 1 Gbps network, unless of course it is a test cluster.
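To get a feeling for the numbers, a back-of-the-envelope estimate helps: how long does it take to push a given amount of data through a link at full line rate? The sketch below ignores protocol overhead, disk speed, and competing traffic, so real times will be longer. The 4000 GB figure is an arbitrary illustration, not a measurement from our cluster, and `transfer_seconds` is a made-up helper name.

```bash
# transfer_seconds SIZE_GB LINK_MBPS
# Seconds needed to move SIZE_GB gigabytes (decimal, 10^9 bytes)
# over a link of LINK_MBPS megabits per second at full line rate.
# 1 GB = 8000 megabits.
transfer_seconds() {
    echo $(( $1 * 8000 / $2 ))
}

# Re-replicating 4000 GB of chunks, for example after losing a node:
echo "1 Gbps:  $(transfer_seconds 4000 1000) s"
echo "10 Gbps: $(transfer_seconds 4000 10000) s"
```

That is roughly 9 hours versus under an hour, and during that time the same link also has to carry the ordinary disk traffic of every virtual machine.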

A not very good but workable alternative to 10 Gbps fiber is to bond two gigabit network cards. This can give a peak of up to 1.5 Gbps, and in very good, clear weather even 2 Gbps.

The preferred bonding mode is 802.3ad. It, in turn, requires configuration on your network equipment. Also make sure you choose xmit_hash_policy=layer3+4, since this is the most performant option (according to Virtuozzo).

Example from /etc/sysconfig/network-scripts/ifcfg-bond0:

    BONDING_OPTS="miimon=1 updelay=0 downdelay=0 mode=802.3ad xmit_hash_policy=layer3+4"

Note that in this case bonding only pays off when a server works with two other servers at once. That is, direct exchange between just two servers will run at 1 Gbps, but once there are three servers, you will be able to exchange data at about 1.5 Gbps.
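The reason lies in how a bonding driver picks the outgoing link: it hashes packet header fields and takes the result modulo the number of links, so a single connection always stays on one link. The sketch below uses the simplified layer3+4 formula given in older Linux bonding documentation. Current kernels compute the hash differently, and the switch applies its own policy to traffic in the other direction, so treat this purely as an illustration. The function names, addresses, and ports are made up for the example.

```bash
# ip_to_int A.B.C.D: convert a dotted IPv4 address to an integer.
# The subshell body keeps the IFS change local to this function.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# bond_slave SRC_IP SRC_PORT DST_IP DST_PORT SLAVE_COUNT
# Simplified layer3+4 policy from older Linux bonding documentation:
# ((src port XOR dst port) XOR ((src IP XOR dst IP) AND 0xffff)) mod slaves
bond_slave() {
    bs_src=$(ip_to_int "$1")
    bs_dst=$(ip_to_int "$3")
    echo $(( (($2 ^ $4) ^ ((bs_src ^ bs_dst) & 65535)) % $5 ))
}

# One flow always maps to the same link index...
bond_slave 172.17.0.4 40000 172.17.0.254 2510 2
# ...while a different flow (here: another source port) may map to the other.
bond_slave 172.17.0.4 40001 172.17.0.254 2510 2
```

With two links these two calls print 0 and 1. A single stream is therefore capped at the speed of one link, and the aggregate only grows when traffic is spread over several flows.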

When the first node of the cluster is being installed, the network must already be up, and Virtuozzo must be able to send broadcast requests. In other words, if the network interface has no uplink, Virtuozzo will silently stop and hang at the final stage of the installation for no visible reason. In fact, it simply tries to send a broadcast request to the network, and if that fails, it stops the installation.

And, of course, it is highly desirable to have separate network interfaces and networks for virtual machines and for cluster traffic.

After installing Virtuozzo, you need to create a network bridge and add a real network interface to it. This is required for your virtual machines to work on the shared network.

    prlsrvctl net add network1
    prlsrvctl net set network1 -t bridged --ifname enp1s0d1    # enp1s0d1 is the physical network interface

Disks

Do not add disks to Storage while installing the hypervisor. More precisely, you will still have to add one, but after installation it will need to be removed and added again. The point is that by default Virtuozzo puts all disks into the tier0 group. This is the cluster's default disk group, and it is also treated as the slowest one. To separate the disks in a cluster into SSD and non-SSD, you will have to remove all the disks that have already been added and add them again to the desired tier. Changing the tier of a disk that is already in the cluster is not possible. Even if you do not plan such a separation today, do it now anyway. It will not hurt, and it will spare you many unnecessary difficulties later.

Another problem is that when you remove disks according to the instructions, leftovers remain in the form of services that try to start a CS for a disk that no longer exists. And what if you removed and added the same disk twice? Then at every service restart the CS for that disk will try to start twice. Here is a simple recipe for removing a CS properly and without problems:

    cs=1071              # ID of the CS to remove
    cluster=livelinux    # cluster name
    vstorage -c $cluster rm-cs --wait $cs
    systemctl stop vstorage-csd.$cluster.$cs.service
    systemctl disable vstorage-csd.$cluster.$cs.service
    systemctl reset-failed vstorage-csd.$cluster.*.service
    systemctl | grep vstorage-csd

To add a disk to the right tier, you first need to prepare a completely empty disk:

    /usr/libexec/vstorage/prepare_vstorage_drive /dev/sdc --noboot
    blkid    # find the UUID of the new partition
    mkdir /vstorage/livelinux-cs4
    # add a line like this to /etc/fstab:
    # UUID="3de71ff9-724f-483a-8984-3b78fdb3b327" /vstorage/livelinux-cs4 ext4 defaults,lazytime 1 2
    mount -a
    # add the disk to the livelinux cluster in tier 2 (SSD)
    vstorage -c livelinux make-cs -r /vstorage/livelinux-cs4/data -t 2
    # for an HDD with an SSD journal: -t 1 is the hdd+ssd tier,
    # /vz/livelinux-cs6-sata-journal is the journal location on the SSD,
    # -s 30240 is the journal size
    vstorage -c livelinux make-cs -r /vstorage/livelinux-cs7-sata/data -t 1 -j /vz/livelinux-cs6-sata-journal -s 30240
    # restart the CS services
    systemctl restart vstorage-csd.target

In addition, Linux (ext4) reserves part of the disk space for the root user.

In our case this is pointless, so we turn it off (in recent versions of the cluster this is done when the disk is prepared, but it is better to make sure):

    tune2fs -m 0 /dev/sdc1

Memory

Picture the situation: a server is both a hypervisor for virtual machines and a host for Virtuozzo Storage chunk servers (CS). Linux diligently puts all data read from the physical disks into the disk cache in RAM and does its best to fill RAM completely in order to speed up disk access. The CS (disk) and MDS (metadata servers of the distributed file system) services cannot quickly obtain free memory pages and each time have to reclaim memory from the cache, which takes a long time. On top of that, older releases could not work with fragmented memory, i.e. they required a contiguous block of free RAM. The result is simple: CS services crash, under-replicated chunks appear in the cluster, the cluster starts replicating them, disk activity grows, even more CS crash, even more chunks need replication... In the end we have a completely collapsed cluster and barely alive virtual machines.

When we dug into it, it turned out that, among other things, Virtuozzo by default has memory management enabled that does not allow for any overcommit at all. In other words, virtual machines expect the memory to be free for their use and no disk cache to get in the way. But that is not all. By default, containers can spill beyond their own disk cache into the hypervisor's shared system disk cache. And the system cache, as a rule, is already filled with the CS data cache.

Here are a number of recommendations that should be applied right away, before the first virtual machine is started on the cluster:

    # isolate the containers' page cache
    echo "PAGECACHE_ISOLATION=\"yes\"" >> /etc/vz/vz.conf
    # memory limits for the storage services (CS); here the maximum is 32 GiB
    cat > /etc/vz/vstorage-limits.conf << EOL
    {
      "VStorage": {
        "Path": "vstorage.slice/vstorage-services.slice",
        "Limit": { "Max": 34359738368, "Min": 0, "Share": 0.7 },
        "Guarantee": { "Max": 4294967296, "Min": 0, "Share": 0.25 },
        "Swap": { "Max": 0, "Min": 0, "Share": 0 }
      }
    }
    EOL
    service vcmmd restart
    # set the memory management policy and check it
    prlsrvctl set --vcmmd-policy density
    prlsrvctl info | grep "policy"

In addition, you can adjust some Linux memory settings:

    touch /etc/sysctl.d/00-system.conf
    echo "vm.min_free_kbytes=1048576" >> /etc/sysctl.d/00-system.conf
    echo "vm.overcommit_memory=1" >> /etc/sysctl.d/00-system.conf
    echo "vm.swappiness=10" >> /etc/sysctl.d/00-system.conf
    echo "vm.vfs_cache_pressure=1000" >> /etc/sysctl.d/00-system.conf
    sysctl -w vm.swappiness=10
    sysctl -w vm.overcommit_memory=1
    sysctl -w vm.min_free_kbytes=1048576
    sysctl -w vm.vfs_cache_pressure=1000

And periodically drop the disk cache:

    sync && echo 3 > /proc/sys/vm/drop_caches

Dropping the cache temporarily solves the problems described above, but it must be done with care. Unfortunately, it hurts the performance of the disk subsystem, since data has to be re-read from the disks instead of the cache.
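The sizes in the settings above are raw numbers: the values in vstorage-limits.conf are evidently in bytes (34359738368 is exactly 32 GiB), and vm.min_free_kbytes is in kilobytes. When adapting them to a server with a different amount of RAM, it may be less error-prone to derive them from GiB than to type them by hand. A small sketch; the helper names are made up for this example.

```bash
# gib_to_bytes N: N GiB expressed in bytes.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# gib_to_kib N: N GiB expressed in KiB (the unit of vm.min_free_kbytes).
gib_to_kib() {
    echo $(( $1 * 1024 * 1024 ))
}

echo "Limit.Max (32 GiB):      $(gib_to_bytes 32)"
echo "Guarantee.Max (4 GiB):   $(gib_to_bytes 4)"
echo "min_free_kbytes (1 GiB): $(gib_to_kib 1)"
```

These calls reproduce the values used above: 34359738368, 4294967296, and 1048576.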

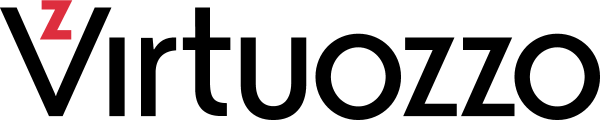

This is what normal memory usage looks like on a Virtuozzo Storage node.

As you can see, the disk cache is stable and there is enough free memory on the server. Of course, any real memory overcommit is out of the question. You have to take this into account.
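To keep an eye on the same picture from the console, the share of free memory and of the page cache can be read from /proc/meminfo. This is a minimal sketch: `meminfo_summary` is a hypothetical helper, and which percentages count as healthy depends on your servers.

```bash
# meminfo_summary: read /proc/meminfo-formatted text on stdin and print
# free memory and page cache as a percentage of total RAM.
meminfo_summary() {
    awk '
        $1 == "MemTotal:" { total = $2 }
        $1 == "MemFree:"  { free = $2 }
        $1 == "Cached:"   { cached = $2 }
        END {
            if (total > 0)
                printf "free=%d%% cached=%d%%\n", free * 100 / total, cached * 100 / total
        }'
}

# On a Linux host, print the current values.
if [ -r /proc/meminfo ]; then
    meminfo_summary < /proc/meminfo
fi
```

The output line is easy to feed into a monitoring item or to log from cron next to the cache-drop job.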

Freeing up disk space, cleaning up trash

Containers and virtual machines are stored in the cluster as large flat files. When something is deleted inside a container or virtual machine, the space is not actually freed; the data blocks in the cluster are merely zeroed. To solve this problem, the Virtuozzo developers wrote the pcompact tool. It is started by cron on all servers simultaneously at 3 a.m. and tries to defragment virtual machine images and to release the unused blocks in the cluster (that is, to reclaim free space). The operation itself puts a very heavy load on the network and the disks, and it also needs additional RAM (sometimes quite a lot). This can reduce cluster performance while it runs. In addition, the high memory consumption (it needs truly free memory; the disk cache is not evicted) can lead to the effect described in the section on memory.

That is, we get peak network and disk utilization. On top of that, if there is not enough free memory, CS services start to crash and replication of the missing data blocks begins. As a result, problems snowball across all nodes, all virtual machines, and so on.

In addition, by default, this operation lasts only 2 hours, after which it automatically stops. This means that if you have a dozen very large virtual machines, there is a chance that cleaning up garbage will never reach them all, since it will not fit into a two-hour timeout.

To reduce the negative impact of this process, we moved the job from cron to anacron. The procedure then starts at an arbitrary time during the night and is not interrupted until it completes. As a result, the process usually runs on only one cluster node at a time, which reduces the overall load and the risk of cluster degradation at night.
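A minimal sketch of what such an entry might look like, assuming the standard anacrontab format of four fields: period in days, delay in minutes, job identifier, and command. The identifier `pcompact-nightly`, the 30-minute delay, and the helper name are placeholders. The original cron job also has to be disabled so that pcompact does not run twice.

```bash
# anacrontab_line PERIOD_DAYS DELAY_MINUTES JOB_ID COMMAND...
# Prints one tab-separated line in /etc/anacrontab format.
anacrontab_line() {
    al_period=$1
    al_delay=$2
    al_id=$3
    shift 3
    printf '%s\t%s\t%s\t%s\n' "$al_period" "$al_delay" "$al_id" "$*"
}

# Run pcompact once a day, 30 minutes after anacron starts (placeholder values).
anacrontab_line 1 30 pcompact-nightly /usr/sbin/pcompact
```

The printed line can be appended to /etc/anacrontab. On CentOS-like systems the START_HOURS_RANGE and RANDOM_DELAY variables in that file control the allowed time window and the random offset, and the random offset is what spreads the start times across nodes.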

Monitoring

The cluster has good proprietary monitoring tools, such as:

    vstorage stat --cluster livelinux
    vstorage top --cluster livelinux

You can also collect the data in Zabbix and build all the necessary charts there.

Using our internal company cloud as an example:

Free space in the various tiers and the total licensed cluster space.

The status of the cluster: what percentage has been rebalanced and how many chunks need urgent replication. Perhaps one of the most important graphs.

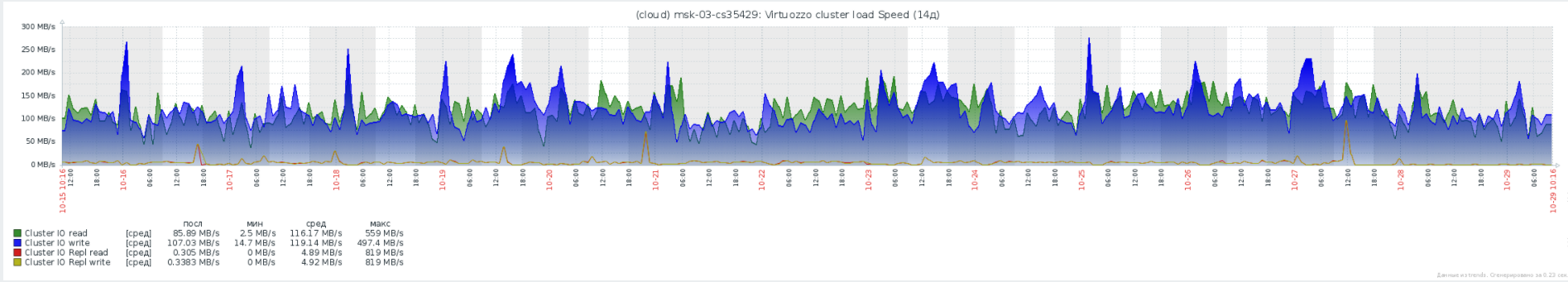

The total network disk load of all virtual machines in the cluster and the speed of replication and rebalancing.

The average and maximum disk queue in the cluster.

Overall cluster IOPS statistics.

Also, do not forget that by default the firewall on Virtuozzo blocks everything that is not explicitly allowed. For zabbix-agent you will need a few firewall rules:

    firewall-cmd --permanent --add-port=10050/tcp
    firewall-cmd --permanent --add-port=10051/tcp
    firewall-cmd --permanent --add-port=5001/tcp
    systemctl restart firewalld

And finally, some useful commands for working with a cluster

Find out which tier each virtual machine lives in:

    (for i in `ls /vstorage/livelinux/private`; do
        grep HOSTNAME /vstorage/livelinux/private/$i/ve.conf
        vstorage -c livelinux get-attr /vstorage/livelinux/private/$i | grep tier
    done) \
        | (grep "HOSTNAME\|tier" | tr '\n ' ' ' | tr 'H' '\n' \
            | sed 's#tier=2#SSD#g' | sed 's#tier=1#HDD#g' \
            | sed 's#OSTNAME=##g' | sed 's#"##g') \
        | awk '{print $2" "$1}' | sort

Start pcompact manually:

    /usr/sbin/pcompact -v

By default, Virtuozzo Storage keeps some disk space in reserve, just in case. If you are really short on space, you can temporarily lower the reserved percentage:

    vstorage -c livelinux set-config mds.alloc.fill_margin=1

If you need to unmount the cluster file system but do not want to reboot the server, shut down all the virtual machines and run:

    fusermount -uz /vstorage/livelinux

View the statistics of the local data cache:

    watch cat /vstorage/livelinux/.vstorage.info/read_cache_info

Check the status of your licenses:

    pstorage -c livelinux view-license
    vzlicupdate
    vzlicview

Find out your hwid:

    pstorage -c livelinux stat --license-hwid

Sometimes the pfcache log no longer fits into the virtual disk allocated for it. The solution is to enlarge the disk:

    prl_disk_tool resize --size 15G --hdd /vz/pfcache.hdd/DiskDescriptor.xml

Remove the MDS service from a server:

    vstorage -c livelinux rm-mds 11    # 11 is the MDS ID

Start the MDS service on the local server:

    # -a: address of the local MDS
    # -r: MDS data directory, preferably on an SSD
    # -b: addresses of the other MDS servers
    vstorage -c livelinux make-mds -a 172.17.0.254:2510 -r /vz/mds/data \
        -b 172.17.0.255 -b 172.17.0.249 -b 172.17.0.248 -b 172.17.0.4
    systemctl restart vstorage-mdsd.target
    systemctl enable vstorage-mdsd.target

Change the default number of copies of virtual machine data on the cluster servers:

    vstorage set-attr -R /vstorage/livelinux replicas=2

Change the number of copies of a specific virtual machine's data:

    vstorage set-attr -R /vstorage/livelinux/private/a0327669-855d-4523-96b7-cf4d41ccff7e replicas=1

Change the tier of all virtual machines and set the default tier:

    vstorage set-attr -R /vstorage/livelinux/private tier=2
    vstorage set-attr -R /vstorage/livelinux/vmprivate tier=2

Change the tier of a specific virtual machine:

    vstorage set-attr -R /vstorage/livelinux/private/8a4c1475-4335-40e7-8c95-0a3a782719b1 tier=2

Stop all virtual machines on the hypervisor and migrate them:

    hp=msk-07
    for vm in `prlctl list | grep -v UUID | awk '{print $5}'`; do
        prlctl stop $vm
        prlctl migrate $vm $hp
        ssh $hp -C prlctl start $vm
    done

Force a virtual machine to be registered on another server, even if it was already running somewhere else but froze completely or was not migrated correctly:

    prlctl register /vz/private/20f36a7f-f64d-46fa-b0ef-85182bc41433 --preserve-uuid --force

Commands for creating and configuring a container:

    prlctl create test.local --ostemplate centos-7-x86_64 --vmtype ct
    prlctl set test.local --hostname test.local --cpus 4 --memsize 10G --swappages 512M --onboot yes
    prlctl set test.local --device-set hdd0 --size 130G
    prlctl set test.local --netif_add netif1
    prlctl set test.local --ifname netif1 --network network1
    prlctl set test.local --ifname netif1 --ipadd 172.17.0.244/24
    prlctl set test.local --ifname netif1 --nameserver 172.17.0.1
    prlctl set test.local --ifname netif1 --gw 172.17.0.1
    prlctl start test.local
    prlctl enter test.local

Thank you very much for your attention.

Source: https://habr.com/ru/post/341168/
