Nearly 2018, and we love callbacks

If at first the idea does not seem absurd, it is hopeless.

- Albert Einstein

We collected the most popular topics from Node.js discussions on Habr and asked two recognized experts to talk about them: non-commercial Node hacker Mathias Madsen and Azat Mardan, author of many books and courses on Node.

Here is the exact list of topics:

- Threads in Node.js and methods for parallelizing computation;

- Asynchrony in Node.js;

- Debugging and logging in Node.js;

- Performance monitoring issues in production;

- Tools for monitoring Node.

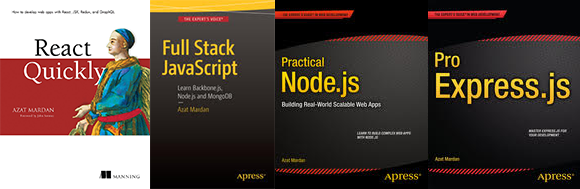

Azat Mardan is a Tech Fellow and manager at Capital One and a JavaScript/Node.js expert, with several online courses on Udemy and Node University and 14 books on the same topics, including "React Quickly" (Manning, 2017), "Full Stack JavaScript" (Apress, 2015), "Practical Node.js" (Apress, 2014), and "Pro Express.js" (Apress, 2014).

In his spare time, Azat writes about technology on Webapplog.com, speaks at conferences, and contributes to open source. Before becoming a Node.js expert, Azat completed a master's degree in information systems and worked in US federal government agencies, small startups, and large corporations with various technologies, such as Java, SQL, PHP, and Ruby.

Azat is passionate about technology and finance, as well as new, striking ways of teaching and empowering people.

Mathias Buus Madsen is a non-commercial Node.js hacker based in Copenhagen, Denmark. He works full-time on open source and on the Dat project ( http://dat-data.com ), building open source tools that let scientists share datasets. He currently maintains more than 400 modules on npm, which is impressive in itself.

As a reminder, you can meet them in person at the HolyJS 2017 Moscow conference.

The arrival of server-side JavaScript long divided the programming community into those who accepted it and everyone else... We are not fans of holy wars, especially over something as obvious as the idea that websites are best written in PHP (hmm... or in Perl? or in Python? never mind, this is a post about Node.js). It is far more interesting to discuss not what to write in, but how to get decent results from this one and only right, well-suited language/stack. Besides, Node keeps evolving, the community is growing, new versions keep appearing, the server engine keeps improving, and a bright tomorrow (against a gray yesterday) is at least not far off! Let's see what the experts say...

Azat

(I am answering based on the state of affairs at the end of 2017, which means Node 8, npm 5, and so on; some things have changed since the early days of Node, and some have stayed more or less the same.)

1. Threads in Node.js and methods for parallelizing computation

As many know, Node is single-threaded; that is both its strength and its weakness. It is a strength because asynchronous non-blocking code is easier to implement, which lets your systems perform more I/O operations and usually means handling more traffic. It is a weakness because you can write code that blocks. Running multiple processes helps here. There is a cluster module in Node core, but most Node developers use pm2. It supports development (pm2-dev) and containers (pm2-docker). To get started with pm2, just install it with npm and run it in the background:

```sh
npm i -g pm2
pm2 start server.js -i 0
```

If pm2 does not meet all your requirements and you need to work at a lower level, you can use cluster. In recent versions of Node (currently version 8) it has load balancing, as pm2 does. As a result, several of your processes can listen on the same port and exchange messages with the master process (and, through it, with each other). Use fork() with cluster. Here is a good example:

```javascript
const cluster = require('cluster')
const numCPUs = require('os').cpus().length

if (cluster.isMaster) {
  // Master process: fork one worker per CPU core
  for (let i = 0; i < numCPUs; i++) {
    cluster.fork()
  }
} else if (cluster.isWorker) {
  // your server code
}
```

Finally, besides fork() there are two more methods for creating processes manually: spawn() and exec(). The first suits long-running processes, streaming, and large amounts of data, while the second suits small amounts of output.
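To make the difference between spawn() and exec() concrete, here is a minimal sketch using the built-in child_process module. The helper names runStreaming and runBuffered are made up for this illustration and do not come from the interview.

```javascript
const { spawn, exec } = require('child_process')

// spawn(): output arrives chunk by chunk as a stream,
// which suits long-running processes and large outputs
function runStreaming (command, args) {
  return new Promise((resolve, reject) => {
    const chunks = []
    const child = spawn(command, args)
    child.stdout.on('data', (chunk) => chunks.push(chunk))
    child.on('error', reject)
    child.on('close', (code) => {
      resolve({ code, output: Buffer.concat(chunks).toString() })
    })
  })
}

// exec(): runs a shell command and buffers the whole output
// before calling back, which suits small outputs
function runBuffered (command) {
  return new Promise((resolve, reject) => {
    exec(command, (err, stdout) => (err ? reject(err) : resolve(stdout)))
  })
}

// Usage: run the current Node binary in both styles
runStreaming(process.execPath, ['-e', 'console.log("streamed")'])
  .then((result) => console.log(result.output.trim()))
runBuffered(`"${process.execPath}" --version`)
  .then((version) => console.log(version.trim()))
```

With spawn() you could also process each chunk as it arrives instead of collecting them; the sketch collects them only to keep the example short.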

2. Asynchronous programming in Node.js

Yes, Node has async/await functions. I still like callbacks, but async functions are great. They are easy to understand even for beginners. Async function code is shorter than promise code. Take a look at this Mocha test with two nested asynchronous database calls. It is short and sweet:

```javascript
describe('#find()', () => {
  it('responds with matching records', async () => {
    const users = await db.users.find({ type: 'User' })
    expect(users).to.have.length(3)
    for (let user of users) {
      const comments = await db.comments.find({ user: user.id })
      expect(comments).to.be.ok
    }
  })
})
```

By the way, you can use the function() {} syntax, not just arrow syntax:

```javascript
const axios = require('axios')

// getCupcakes is an illustrative name; the original left the function unnamed
const getCupcakes = async function () {
  const { data } = await axios.get('https://webapplog.com/api/cupcakes') // response body
}
```

Another bonus of async functions is that they are compatible with promises. Yes, that's right. You can use them together: for example, create an async function and then call then() on its result, or await a promise-based library (such as axios from my example) or a function created by util.promisify() (a new method in Node 8!) just as you would an async function.
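As a small sketch of that compatibility, the example below promisifies two callback-style fs functions, awaits them inside an async function, and then consumes the result with then(). The function name roundTrip and the temporary file name are assumptions made for this illustration.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')
const util = require('util')

// util.promisify() turns a callback-style function into one that returns a promise
const writeFile = util.promisify(fs.writeFile)
const readFile = util.promisify(fs.readFile)

// An async function can await promisified functions like any other promise
async function roundTrip (name, text) {
  const file = path.join(os.tmpdir(), name)
  await writeFile(file, text)
  return readFile(file, 'utf8')
}

// An async function returns a promise, so then() works on its result
roundTrip('promisify-demo.txt', 'cupcakes').then((text) => console.log(text))
```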

3. Debugging in Node.js

The debugger in Node has improved significantly. I remember that when I worked at Storify (one of the very first companies to use Node), I just placed console.log all over the code. Today you can debug in VS Code. It is a terrific editor; I use it every day.

Then there is Node Inspector, which is essentially Chrome DevTools for Node.js programs. The Google Chrome V8 Inspector is integrated into Node v8, and all you need to do to get started with the GUI debugger is run:

```sh
node --inspect index.js
```

Then open chrome://inspect/#devices in the Chrome browser. In version 7, you need to copy the URL and open it in Chrome; the URL starts with chrome-devtools://. Just remember that your Node script must run long enough for the DevTools debugger to attach to it, or you will have to set breakpoints in the debugger or in the code.

Node is built on Chrome's V8 and uses Chrome DevTools for debugging, not just for the nice GUI but also so that the tooling stays reliable in the future.

4. Node.js performance issues

Most problems with Node in production are associated with memory leaks, the network, or I/O. Stress testing helps you understand how your application and system behave under real load. A good tool is artillery. Some memory leak issues can be fixed or mitigated by modifying the code slightly and using the latest version of Node, which comes with the new JavaScript JIT compiler called TurboFan. Read the great post "Get Ready: A New V8 Is Coming, Node.js Performance Is Changing" about optimization, as well as the techniques and code worth either avoiding or adopting.

5. Good Node.js monitoring tools

First, make sure your Node code is ready for production. In 2018, that will mean using containers, the cloud, and automation. You might want to see my Node in Production course for more details.

Node should scale both vertically (see point 1) and horizontally. That, of course, makes monitoring and log collection harder. You will need to collect both metrics and logs.

Build a simple dashboard yourself, and you will see the statistics and metrics coming from individual Node servers and processes... or use an open-source dashboard such as Hygieia, created at Capital One (full disclosure: I work at Capital One).

Winston and bunyan are good tools for working with logs. You can send logs anywhere, say, to a third-party SaaS such as Loggly, Splunk, or Papertrail (we used it at Storify). If you want to keep all your data in-house, deploy Elasticsearch with Kibana and send your logs there. This is what we did at DocuSign when we could not use a third-party service for security and privacy reasons. We developed our own solution based on Winston, Elastic, and Kibana.

A few more tools and services to consider (not all are free), especially for production monitoring: N|Solid, New Relic, and AWS CloudWatch.

Conclusion

Node is growing at a crazy pace. Even without new versions, it is already an amazing technology. It is fast, reliable, and, most importantly, brings the joy of carefree coding to developers. I have seen a lot of happy Java and C# developers after they moved to the Node stack.

Mathias

Doing several things in parallel

Node.js is single-threaded by design. This is actually a useful feature, because it makes things like memory race conditions easier to deal with than in languages like Java, where your program's execution may be interrupted at any time.

It can afford to be single-threaded because all I/O operations are performed asynchronously and therefore do not block program execution. Moreover, although JavaScript in Node has a single thread of execution, Node itself uses several more threads to help with background I/O and other housekeeping tasks.

This approach has only one drawback. Sometimes you need to run code that only consumes CPU time. Since no I/O is being performed during that time, your Node.js program is blocked until the code completes. For a CPU-intensive operation (cryptography, for example), this can hurt, since your Node program cannot do anything else until the operation is finished, and you probably want to avoid that.

There are several ways to avoid it. One approach (which I often use) is to write a native module (as a rule, all my CPU-intensive operations require a native module anyway) and use the really nice worker API. With this API, your formerly synchronous operation can take a callback or return a promise, and your task, written in C++ with the worker API, runs in another system thread.

Another approach is to split the program into small parts and run them simultaneously as a set of processes. In essence, this means turning your program into a small distributed system. That way you can use many CPU cores in a completely native way.
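The process-based approach can be sketched with the built-in child_process module. The example below is only an illustration under assumed names (fibInChildProcess is hypothetical, and a naive Fibonacci stands in for real CPU-heavy work): each job runs in its own Node process, so the parent's event loop stays free.

```javascript
const { execFile } = require('child_process')

// Run a CPU-heavy computation in a separate Node process
// so that the parent's event loop is not blocked
function fibInChildProcess (n) {
  // The child script is passed with -e; Number(n) keeps the input numeric
  const script = `
    const fib = (k) => (k < 2 ? k : fib(k - 1) + fib(k - 2))
    console.log(fib(${Number(n)}))
  `
  return new Promise((resolve, reject) => {
    execFile(process.execPath, ['-e', script], (err, stdout) => {
      if (err) return reject(err)
      resolve(Number(stdout))
    })
  })
}

// Several jobs run at the same time, each in its own OS process
Promise.all([25, 26, 27].map(fibInChildProcess))
  .then((results) => console.log(results))
```

In a real system the parts would more likely be long-lived processes that exchange messages, rather than one-shot scripts like these.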

In any case, once you start running Node code in parallel, you end up with much more asynchronous code. Managing asynchronous code in Node is one of those things that seem very difficult at first but become much easier with experience. In the current version of Node, you can use features like async/await to make asynchronous code look synchronous, provided you also use promises. One complication is that, since you are forced to use try-catch, you also have to deal with more serious bugs there, because try-catch in JavaScript also catches problems that in other languages would be compile-time errors (typos in the code, for example). In other words, finding bugs becomes harder, because your error-handling code receives both programming mistakes and run-time errors.
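A minimal sketch of that complication, with made-up names (loadUser and greet are hypothetical): the same catch block receives both an expected run-time failure and a plain typo.

```javascript
// Hypothetical async operation that fails in an expected way for negative ids
async function loadUser (id) {
  if (id < 0) throw new Error('user not found')
  return { id, name: 'demo' }
}

async function greet (id) {
  try {
    const user = await loadUser(id)
    return 'hello ' + usr.name // typo: should be "user", throws a ReferenceError
  } catch (err) {
    // Both the expected failure and the typo end up here
    return 'caught ' + err.name
  }
}

greet(-1).then(console.log) // prints "caught Error"
greet(1).then(console.log) // prints "caught ReferenceError"
```

One way to reduce the confusion is to rethrow anything that is not an expected error type, but that check has to be written by hand.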

As a result, I personally most often use callbacks for asynchronous programming, along with a handful of helper libraries such as the after-all module.
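To give a feel for the callback style, here is a hand-rolled helper that waits for several callback-based tasks. It is only a sketch of the general pattern: the name parallel is made up, and this is not the API of after-all or of any other library.

```javascript
// Run callback-style tasks in parallel and call done once all have finished
function parallel (tasks, done) {
  const results = new Array(tasks.length)
  let pending = tasks.length
  let failed = false
  if (pending === 0) return process.nextTick(done, null, results)
  tasks.forEach((task, i) => {
    task((err, value) => {
      if (failed) return // an earlier task already reported an error
      if (err) {
        failed = true
        return done(err)
      }
      results[i] = value
      if (--pending === 0) done(null, results)
    })
  })
}

// Usage: results keep the order of the tasks, not the order of completion
parallel([
  (cb) => setTimeout(() => cb(null, 'slow'), 50),
  (cb) => setTimeout(() => cb(null, 'fast'), 10)
], (err, results) => {
  if (err) throw err
  console.log(results) // prints [ 'slow', 'fast' ]
})
```

Because errors arrive as the first callback argument rather than through try-catch, a typo inside a task still crashes loudly instead of being mixed in with expected failures.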

Debugging and monitoring

One of the things I like about Node is the number of excellent tools that help you debug and monitor the behavior of your application (and I wrote a lot of them myself). When we work with Node, we work with JavaScript code that runs on V8. V8, in turn, has a rich API that lets you extract detailed performance data from it. This allows you to track down the actual cause of problems (the real bottleneck) in your application without guessing.

I especially love the 0x module ( https://github.com/davidmarkclements/0x ) written by David Mark Clements and his friends. This module easily turns a benchmark into a real flamegraph. Just run your benchmark with 0x instead of node (it generates a flamegraph and then opens it in the browser):

```sh
0x -o my-benchmark.js
```

This graph clearly shows which JavaScript function uses the most CPU time during program execution. It gives you a visual representation of what deserves the most attention when optimizing.

Another module that I often use for ops tasks is my own module called respawn. It simply starts a process for you and then restarts it if it crashes.

There is a nice CLI wrapper around respawn called lil-pids. lil-pids has no interface and only requires a file called ./services: you list in it all the commands you want running on your system, and lil-pids watches over them, using the respawn module to keep them all running.

Finally, another problem I most often have to solve when running Node code in production is occasional memory leaks. Even though JavaScript has its own garbage collector, we often cause memory leaks, say, by adding items to a list and forgetting to remove them. Sometimes we do not leak memory but implement algorithms that consume so much memory that at some point the system is forced to kill the program. To find out whether there is a memory leak, I often use a module by Thomas Watson called memory-usage. The only thing it does is give you an endless stream of data about how much memory your program uses over time. If you plot this value, you will see when a memory leak begins.
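The same idea can be sketched with the built-in process.memoryUsage() alone. This is not the memory-usage module or its API; the helper name sampleMemory and the simulated leak are assumptions made for the illustration.

```javascript
// Sample memory usage at a fixed interval with the built-in process.memoryUsage()
function sampleMemory (intervalMs, onSample) {
  const timer = setInterval(() => {
    const { rss, heapUsed } = process.memoryUsage()
    onSample({ time: Date.now(), rss, heapUsed })
  }, intervalMs)
  return () => clearInterval(timer) // call the returned function to stop sampling
}

// Simulated leak: items are added to a list and never removed
const leaked = []
const leakTimer = setInterval(() => leaked.push(new Array(10000).fill('x')), 10)

// Print one sample every 100 ms; plotting heapUsed over time would show the growth
const stop = sampleMemory(100, (sample) => console.log(sample.time, sample.heapUsed))

// Stop everything after one second so the example exits
setTimeout(() => {
  stop()
  clearInterval(leakTimer)
}, 1000)
```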

Whatever the well-known proverb says, there is something better than "seeing once": in our case, seeing and hearing the talks, then asking questions and chatting on the sidelines. We invite you to the HolyJS 2017 Moscow conference!

Source: https://habr.com/ru/post/341070/