How we participated in the hackathon M.Video

In the last weekend of September, our team took part in the hackathon M.Video on data analysis. The choice was offered two tasks: first - to generate a product description based on reviews of products, the second - to highlight the most important characteristics of products based on the directory, data on the joint views and adding to the basket. We solved both tasks. Under the cut, the story of why we filled up this hackathon and what we learned.

Formulation of the problem

When our team met before the start of the hackathon, we were confused by the lack of a clear statement of the task or wishes. In the absence of the best, we began to generate ideas ourselves:

- customer treatment system;

- recommendation system;

- an algorithm for navigating a variety of products;

- a system for collecting data on products on other sites.

We dreamed of provable increase in business metrics: views, conversion, average check and loyalty. For several days we tried to pull information from an insider, but as a result we only got more confused. When we arrived at M.Video’s office on Saturday, we had several options in our head about what could be done - and not one of them ended up coinciding with what we were really asked to do.

It turned out that you need to generate descriptions of goods or identify their most significant characteristics. And there will be no metrics, but there will be jury eyes.

All three engineers in our team sat down to solve the tasks that we thought were correct. Given the novelty of the data and a large number of experiments, it took the whole evening. By late night, we had intermediate solutions, which we finished all morning. By the deadline, we had three algorithms that were little related to each other. Nobody did a review of algorithms, except for their tired creators. It seems no wonder that we are not among the top three winners. But the algorithms themselves seem worthy of attention.

Extract compressed product descriptions from reviews

The first of the two tasks of the hackathon was formulated as a task for automatic summatization of the text . It was necessary to create an algorithm that, according to a multitude of customer reviews for the product, generates one synthetic review containing the most important information about the product. There were no formal requirements for synthetic reviews, so we ourselves came up with our Wishlist:

- A synthetic review consists of separate statements about a product (some kind of structure is needed!);

- From each statement, you can go to the reviews on the basis of which it was created (to make it easier for them to believe);

- Each statement should, as far as possible, be based on more than one real recall (we love reliable information);

- Statements must show how goods differ from their peers (otherwise they are useless).

From these requirements it is already possible to proceed to the general scheme of the algorithm:

- Pre-processing text reviews. Each was broken up into words, and each word led to normal form (verbs to infinitive, nouns to nominative singular, etc.) using pymorphy2 . This is necessary to more correctly calculate the words. In addition, we introduced several special words: the sequence of numbers was replaced by <NUMBER>, the smiles with positive and negative colors were replaced by <SMILE_POS> and <SMILE_NEG>.

- Weighing words. We counted how many times each normalized word occurs in reviews for each product. Each occurrence of the word in the review assigned the weight, the reverse share of reviews in which it is used, among all products in this category. Thus we tried to reduce the influence of uninformative words. For example, the word "HEADPHONES" does not bear much information in the response to headphones. But the words that are found only in the response to this product, we also assigned a weight of 0, because these are usually very specific concepts that are difficult to compare. For example, the word "JBLAWAREBLKI" in the response to the same headphones also does not carry meaning - it's just their name. Thus, we executed Wishlist (4).

- Cutting each review for approval. To do this, we broke it into sentences (just by punctuation). The sentences were broken into various pieces (n-grams) in 2 and 3 words. Assertions considered every sentence and every n-gram.

- Conversion of statements into numerical form. For each statement, we found the average attachment of its words, weighted by the weights from paragraph 3. The attachment is a vector of 100 numbers unloaded from someone’s trained word2vec model. The main property of investments is that the words with similar meanings usually also have similar attachments, and vice versa. And we counted the "weighted average word" for each statement, hoping that it would somehow convey a meaning.

- Grouping For each product, we have launched the DBSCAN clustering algorithm on all vectorized statements about this product. In other words, we found groups (clusters) from statements similar to each other (that is, described by similar vectors-embeddings). Each cluster found should contain some important fact about the product. Assertions that are not included in any cluster (DBSCAN is one of the few algorithms that can do this), we have excluded from consideration.

- Cluster ranking. For each cluster, we calculated its rating - the sum of the word weights of all the statements included in it. We applied a reduction factor to the rating of clusters that contain assertions from just one review, in order to fulfill the wishlist (3). For each product, we took 5 clusters with the highest rating.

- The ranking of assertions within clusters. In each of the selected clusters, it remains to find the only statement that best describes it. To do this, we calculated the rating of each statement in the cluster as the average weight of words in this statement divided by the average distance from its investment to the attachments of other statements in the cluster. 1. The numerator is responsible for ensuring that the statement is informative, and the denominator is responsible for ensuring that the statement fits well enough with the entire cluster. And for each of the selected clusters in a synthetic review, we made a statement with the maximum rating, satisfying Wishlist (1) and (2).

As a result, we got some synthetic reviews like this (on TV):

- Horizontally excellent viewing angles

- Good image quality

- The picture is great

- Bought into the kitchen

- Sound good

For the first example, the pros and cons of our approach are visible. Plus - that review is readable. Minus - for example, that the 2 and 3 statements could be combined, but our clustering turned out to be too detailed. Unfortunately, no way to check the quality of the generation of synthetic reviews, except for the method of gaze, we did not come up with. A method of close (but quick) look showed that the reviews lack the structure, but in general look adequate.

Extract important product properties from reviews

Another branch of the work was associated with emotional coloring ( tonality ) reviews. For most of the reviews, a rating of goods from 1 to 5 was attached. During the selection round, we also solved the problem of determining the tonality - predicting it from the text of the review. To do this, each normalized word was turned into a binary variable, and linear regression was trained on them. A large positive coefficient in front of a word in such a model means that it is usually found in positive reviews, and a negative one - in negative ones. The greater the coefficient (in absolute value), the more the word influences the assessment of the product.

For each product, we built such a regression on all the reviews on it (if there are enough of them. By sorting out the coefficients, you can find the most "positive" and "negative" words about this product. or “disgusting.” To exclude them, we had to make a special black list. To do this, we simply built a model of tonality on absolutely all products, and dragged the most significant coefficients out of it. They basically corresponded to non-informative value judgments ipa "good / bad".

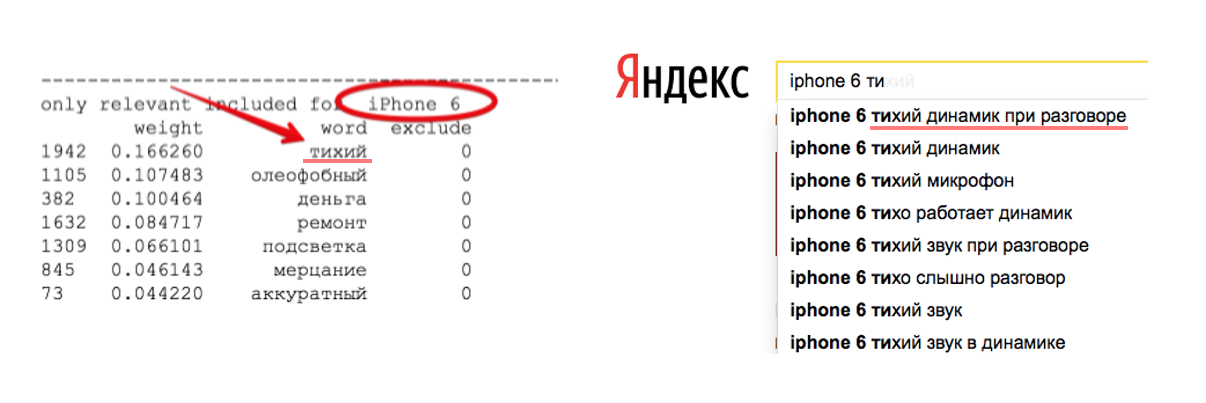

The words that remained after filtering through the black list turned out to be really quite informative. For example, for IPhone 6, the word “quiet” turned out to be the biggest negative factor - and indeed, this is one of the main problems that its owners are complaining about.

Probably, if we had embedded an emotional assessment of the properties of goods in synthetic reviews, this would make them attractive enough to ensure our victory. But each of us, until the last moment, debugged our own model, and instead of normal integration, we simply recorded the output of one algorithm under another.

Search for important signs on user behavior

In addition to reviews, we had information about product views and adding them to the basket. We decided to find out what properties of the product determines the conversion of views into purchases. For any product, we had characteristics from the reference book: dimensions, screen resolution, processor brand, and so on. We converted them to binary signs. For them, for each category of goods, a logistic regression was built, predicting the probability of adding goods to the basket. The obtained coefficients for the properties of goods can also be interpreted as the importance of these properties when making a purchase decision. Then we did with them in much the same way as with the words in the reviews: weighed the importance of frequency, and selected the most important feature from each semantic group. As a result, for each product we received its most important characteristics from the point of consumer behavior, but we did not have time to compare them with the results of the analysis of reviews, much less merge with them.

Profound conclusions

I would like to thank the organizers of the hakaton for an interesting task, qualitative data, and delicious food. According to the general impression, these days and a half were very good.

From the point of view of the organization, the hackathon lacked only the quality criteria announced from the very beginning, by which decisions would be compared. Maximizing an unknown function is not the most productive. There was not enough clear formulation of the problem before the hackathon began - the phrase “aggregation of reviews on the site” and “assistant seller for the selection of goods in the store” could be interpreted as you please.

In hindsight, it is already clear that even in such conditions our team should not have rushed in different directions, like a swan, cancer and pike. It was necessary to immediately determine how the ideal product description that we want to get at the output should look like. From the first hours of the hackathon, we could concentrate on any one direction (not so much what it was), and by the evening have a working prototype. Then for the second day of joint debugging, we would bring it to an already well-functioning product.

In any case, we received a code that can be reused, a large number of merch, and, most importantly, experience. To solve the simple tasks of the store, it took almost half of the introductory course on machine learning. But everything was decided, as usual, not by knowledge in programming and mathematics, but by organizational skills.

')

Source: https://habr.com/ru/post/340694/

All Articles