Creating a live streaming CDN for low-latency WebRTC video streams

Where might you need to broadcast with guaranteed low latency? In fact, in many places. Online video auctions are one example. Imagine yourself running such an event.

- “Two hundred thousand, going oooonce...”

- “Sold!”

With high latency, you could say “two hundred thousand, going three times” and sell the lot before the video even reaches the participants. For bidders to have time to react, the latency must be guaranteed to be low.

In general, low latency is vital in any gaming scenario, whether it is an online video auction, a video broadcast of horse racing, or an online quiz game like “What? Where? When?”. All of them require guaranteed low latency and real-time video and audio transmission.


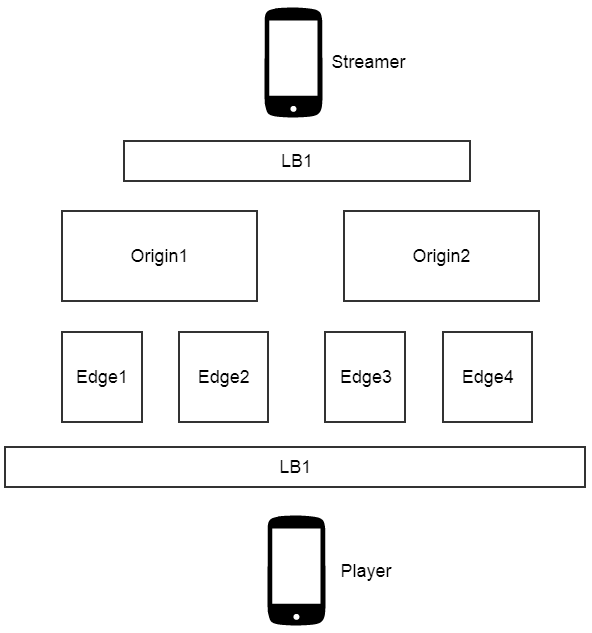

Why a CDN

A single server often cannot deliver streams to everyone, simply because viewers are geographically distributed and have communication channels of different bandwidth. In addition, a single server may run out of resources. Therefore, we need a group of servers that share the job of delivering the stream. This is a CDN, a content delivery network. Here, the content is low-latency streaming video transmitted from the publishing user's webcam to viewers.

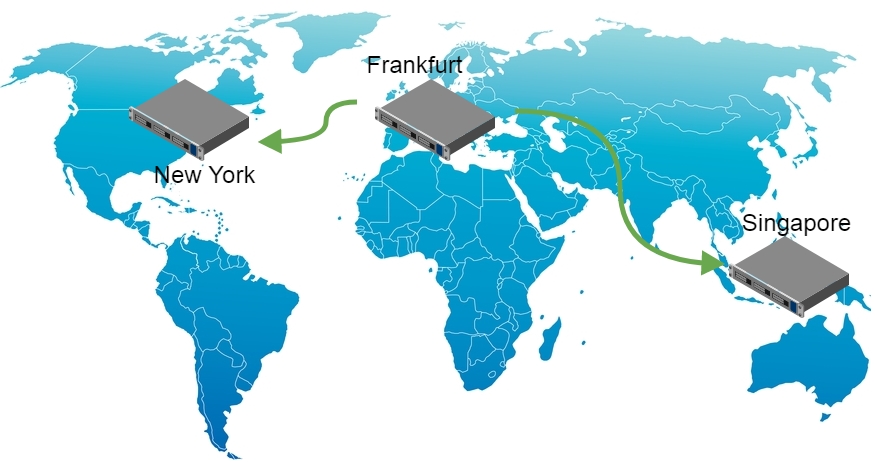

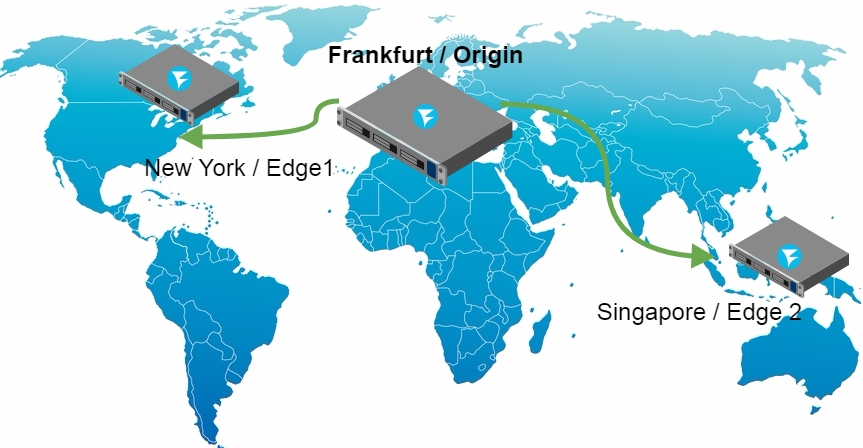

In this article, we describe how we built a geographically distributed mini-CDN and tested the result. Our CDN will consist of four servers and work as follows:

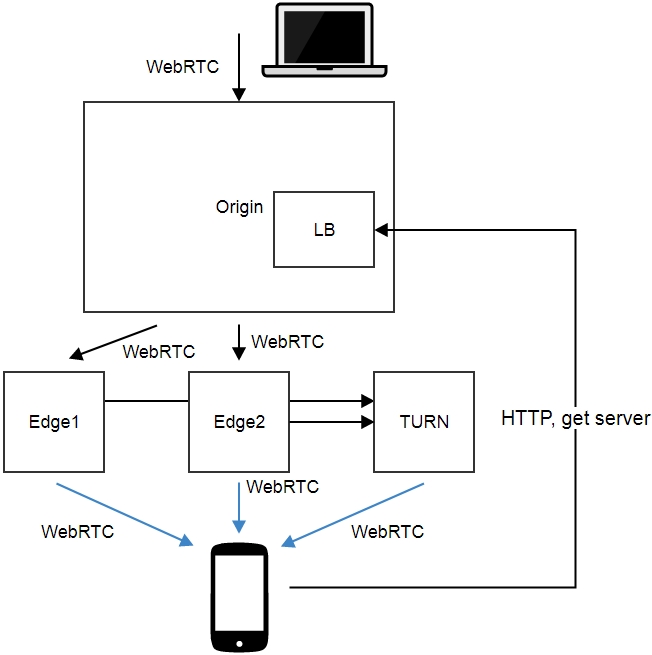

This scheme uses the minimum required number of servers: 4.

1) Origin + LB

This is the server that receives the broadcast video stream from the user's webcam.

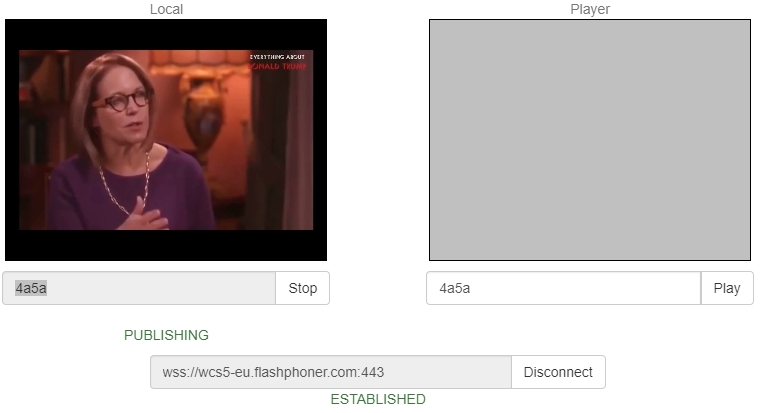

Sending a stream in a browser might look like this:

This is an example of sending a video stream from a web page opened in the Google Chrome browser. The stream is sent to the origin.llcast.com test server.

After Origin receives the video stream, it relays it to two servers, Edge1 and Edge2. The Origin server itself does not serve streams to viewers.

For simplicity, in this scheme we gave Origin the additional role of load balancer (LB). It polls the Edge nodes and tells connecting viewer clients which node they should connect to.

Balancing works like this:

- The browser contacts the balancer (here, the balancer is the Origin server itself).

- The balancer returns one of the servers: Edge1 or Edge2.

- The browser establishes a Websocket connection with the returned server (Edge2 in this example).

- The Edge2 server sends video traffic via WebRTC.

Origin periodically polls the Edge1 and Edge2 servers, checking their availability and collecting load data. This is enough to decide which server a new connection should "land" on. If one of the servers becomes unavailable for some reason, connections will "land" on the remaining Edge server.
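The balancing decision described above can be sketched as a round-robin selector that skips Edge nodes which failed their last availability check. This is a minimal illustration under stated assumptions, not WCS source code: the `RoundRobinBalancer` class, its `updateStatus` and `pick` methods, and the `available` field are hypothetical names, and the periodic poller that would call `updateStatus` is left out.

```javascript
// Hypothetical sketch of the Origin's balancing decision (not WCS source code).
// Each edge record is assumed to be refreshed by a periodic poll.
class RoundRobinBalancer {
  constructor(edges) {
    // edges: [{ host: 'edge1.llcast.com', wss: 443, available: true }, ...]
    this.edges = edges;
    this.next = 0; // index of the node to try first on the next request
  }

  // Called by the (hypothetical) poller after checking a node.
  updateStatus(host, available) {
    const edge = this.edges.find((e) => e.host === host);
    if (edge) edge.available = available;
  }

  // Returns the next available edge in circular order, or null if none is up.
  pick() {
    for (let i = 0; i < this.edges.length; i++) {
      const idx = (this.next + i) % this.edges.length;
      if (this.edges[idx].available) {
        this.next = (idx + 1) % this.edges.length;
        return this.edges[idx];
      }
    }
    return null;
  }
}

const lb = new RoundRobinBalancer([
  { host: 'edge1.llcast.com', wss: 443, available: true },
  { host: 'edge2.llcast.com', wss: 443, available: true },
]);
console.log(lb.pick().host); // edge1.llcast.com
console.log(lb.pick().host); // edge2.llcast.com
lb.updateStatus('edge2.llcast.com', false);
console.log(lb.pick().host); // edge1.llcast.com
console.log(lb.pick().host); // edge1.llcast.com
```

While both nodes are up, picks alternate. Once a node is marked unavailable, every new connection lands on the remaining one, which matches the behavior the article describes.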

2) Edge1 and Edge2

These are the servers that distribute audio and video traffic directly to viewers. Each of them receives the stream from the Origin server and reports its load to the Origin server.

3) TURN relay server

To ensure truly low latency, all of the audio and video delivery schemes above work over UDP. However, UDP may be blocked by firewalls, and sometimes all traffic has to go through HTTPS port 443, which is usually open. This is what a TURN relay is for. If a normal UDP connection fails, the video goes through TURN over TCP. This inevitably worsens latency, but if most of your viewers are behind corporate firewalls, you will have to use TURN for WebRTC or some other TCP-based delivery method, for example Media Source Extensions / Websocket.

Here, we allocate one server specifically for firewall traversal. It will serve video over WebRTC/TCP to viewers who are not lucky enough to get a normal UDP connection.
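On the browser side, routing WebRTC media through a TURN relay on port 443 is configured in the `iceServers` entry of the standard `RTCConfiguration` object that is passed to `RTCPeerConnection`. The article does not show its TURN setup. The host name, user name and password below are hypothetical placeholders, and the `buildTurnConfig` helper is illustrative.

```javascript
// Builds a standard WebRTC RTCConfiguration that can route media through a
// TURN relay over TCP port 443. Host and credentials are placeholders.
function buildTurnConfig(turnHost, username, credential, relayOnly) {
  return {
    iceServers: [
      {
        // transport=tcp asks the client to reach the TURN server over TCP
        urls: [`turn:${turnHost}:443?transport=tcp`],
        username,
        credential,
      },
    ],
    // 'relay' forces all media through TURN; 'all' lets ICE try direct UDP first
    iceTransportPolicy: relayOnly ? 'relay' : 'all',
  };
}

const config = buildTurnConfig('turn.example.com', 'viewer', 'secret', false);
console.log(JSON.stringify(config, null, 2));
// In a browser: const pc = new RTCPeerConnection(config);
```

Keeping `iceTransportPolicy: 'all'` matches the scheme described above: UDP is tried first, and TURN over TCP is used only when UDP fails. Firewalls that inspect traffic on port 443 may additionally require TURN over TLS (a `turns:` URL).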

Geographical distribution

The Origin server will be located in a data center in Frankfurt, Edge1 in New York, and Edge2 in Singapore. This gives us a distributed broadcast that spans most of the globe in longitude.

Installing and starting the servers

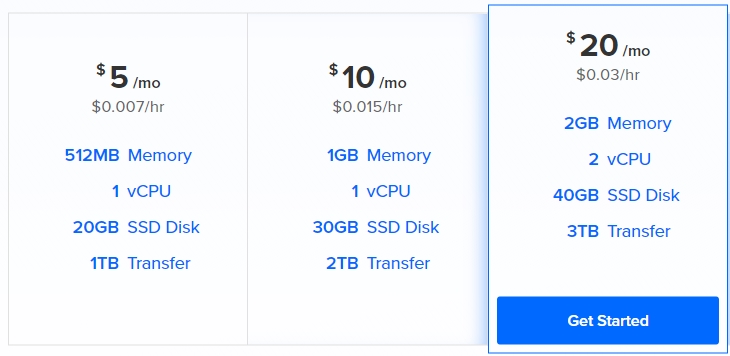

For low-cost testing, we use Digital Ocean virtual servers.

We prepare 4 droplets (virtual servers) on DO with 2 GB of RAM each. This costs us 12 cents per hour, or about 3 dollars per day.

The Origin, Edge1 and Edge2 servers will run the WCS5 WebRTC server, which must be downloaded and installed on each of these droplets.

The installation process for a WCS server looks like this:

1) Download the distribution.

```bash
wget https://flashphoner.com/download-wcs5-server.tar.gz
```

2) Unpack and install.

```bash
tar -xvzf download-wcs5-server.tar.gz
cd FlashphonerWebCallServer-5.0.2505
./install.sh
```

Do not forget to install haveged, which may be needed to speed up WCS server startup on Digital Ocean.

```bash
yum install epel-release
yum install haveged
haveged
chkconfig haveged on
```

3) Run.

```bash
service webcallserver start
```

4) Create Let's Encrypt certificates.

```bash
wget https://dl.eff.org/certbot-auto
chmod a+x certbot-auto
./certbot-auto certonly
```

Certbot puts the certificates in the /etc/letsencrypt/live folder.

Example:

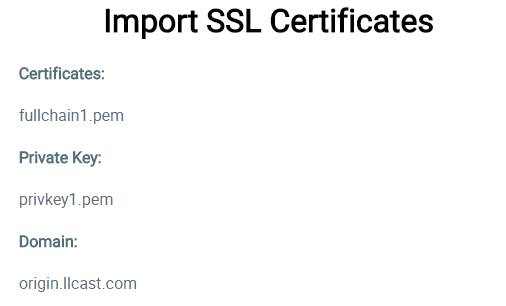

```text
/etc/letsencrypt/live/origin.llcast.com
├── cert.pem
├── chain.pem
├── fullchain.pem
├── fullchain_privkey.pem
├── privkey.pem
└── README
```

5) Import Let's Encrypt certificates

Of these files, we need only two: the certificate chain and the private key:

- fullchain.pem

- privkey.pem

Upload them via Dashboard / Security / Certificates in the WCS admin panel. To open the admin panel, go to https://origin.llcast.com:8888, where origin.llcast.com is the domain name of your WCS server.

The import result looks like this:

Everything is ready. The import succeeded, and now we need a short test to make sure everything works. In the server Dashboard, open the Two Way Streaming demo example. Press the Publish button and, after a few seconds, Play. This confirms that the server works, receives streams and serves them for playback.

After checking one server, we do the same with the other two (Edge1 and Edge2). We install WCS5 on them, obtain Let's Encrypt certificates and import them into the installed WCS server. At the end of each installation, we run a simple test to confirm that the server responds and plays streams.

As a result, we have configured three servers available at the following addresses:

- https://origin.llcast.com:8888

- https://edge1.llcast.com:8888

- https://edge2.llcast.com:8888

Origin server configuration

It remains to configure the servers and combine them into a CDN. We start with the Origin server.

As mentioned above, Origin is also the balancer. We open the WCS_HOME/conf/loadbalancing.xml config file and list the Edge nodes in it. The resulting config looks like this:

```xml
<loadbalancer mode="roundrobin" stream_distribution="webrtc">
    <node id="1">
        <ip>edge1.llcast.com</ip>
        <wss>443</wss>
    </node>
    <node id="2">
        <ip>edge2.llcast.com</ip>
        <wss>443</wss>
    </node>
</loadbalancer>
```

This config describes the following settings:

- Which nodes the video stream from Origin is relayed to (edge1 and edge2).

- Which technology is used for relaying (webrtc).

- Which port is used for the Websocket connection with each Edge node (443).

- The balancing mode: round-robin (roundrobin).

Currently, there are two ways to relay streams from Origin to Edge, selected by the stream_distribution attribute:

- webrtc

- rtmp
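When the number of Edge nodes grows, writing loadbalancing.xml by hand becomes tedious. The helper below is a hypothetical sketch, not part of WCS. It renders a file with the same element names as the config shown above from a list of hosts, and it rejects `stream_distribution` values other than the two listed. The function name and its options object are illustrative.

```javascript
// Hypothetical helper: renders a loadbalancing.xml with the same element
// names as the example config (loadbalancer, node, ip, wss).
const DISTRIBUTIONS = ['webrtc', 'rtmp'];

function renderLoadBalancingXml(edges, { mode = 'roundrobin', distribution = 'webrtc' } = {}) {
  if (!DISTRIBUTIONS.includes(distribution)) {
    throw new Error(`stream_distribution must be one of: ${DISTRIBUTIONS.join(', ')}`);
  }
  // Node ids are assigned sequentially starting from 1, as in the example.
  const nodes = edges
    .map(
      (e, i) =>
        `    <node id="${i + 1}">\n` +
        `        <ip>${e.host}</ip>\n` +
        `        <wss>${e.wss}</wss>\n` +
        `    </node>`
    )
    .join('\n');
  return (
    `<loadbalancer mode="${mode}" stream_distribution="${distribution}">\n` +
    `${nodes}\n` +
    `</loadbalancer>\n`
  );
}

console.log(
  renderLoadBalancingXml([
    { host: 'edge1.llcast.com', wss: 443 },
    { host: 'edge2.llcast.com', wss: 443 },
  ])
);
```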

We set webrtc here because we need low latency. Next, we need to tell the Origin server that it will handle balancing. Enable the balancer in the WCS_HOME/conf/server.properties config:

```properties
load_balancing_enabled=true
```

In addition, we assign port 443 to the balancer so that connecting clients can reach it via the standard HTTPS port, again to get through firewalls. In the same server.properties config, we add:

```properties
https.port=443
```

Next, we restart the WCS server to apply the changes.

```bash
service webcallserver restart
```

As a result, we enabled the balancer and set it to port 443 in the server.properties config, and configured the Origin-Edge relays in the loadbalancing.xml config.

Edge Server Configuration

Edge servers do not require special configuration. They simply receive the stream from the Origin server as if a user had published it from a webcam directly to that Edge server.

One setting still has to be added, though: switch the secure Websocket (wss) port of the distributing servers to 443 for better firewall traversal. If the Edge servers accept connections on port 443 via HTTPS and TURN does the same, we get a firewall bypass solution that increases broadcast availability for corporate users behind firewalls.

Edit the WCS_HOME/conf/server.properties config and set:

```properties
wss.port=443
```

Restart the WCS server to apply the changes.

```bash
service webcallserver restart
```

This completes the Edge server setup, and you can begin testing the CDN.
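Before restarting, it can help to confirm that each server's server.properties actually contains the values set above. The sketch below is a simplified parser for `key=value` lines. It skips `#` and `!` comments and trims spaces around `=`, but it does not implement the full Java properties syntax, such as line continuations or `:` separators. The expected values come from this article. The `parseProperties` and `checkProperties` names are hypothetical.

```javascript
// Simplified server.properties parser: handles key=value lines and comments,
// not the full java.util.Properties syntax (no continuations or escapes).
function parseProperties(text) {
  const props = {};
  for (const raw of text.split(/\r?\n/)) {
    const line = raw.trim();
    if (line === '' || line.startsWith('#') || line.startsWith('!')) continue;
    const eq = line.indexOf('=');
    if (eq === -1) continue;
    props[line.slice(0, eq).trim()] = line.slice(eq + 1).trim();
  }
  return props;
}

// Values configured in this article for each server role.
const EXPECTED = {
  origin: { load_balancing_enabled: 'true', 'https.port': '443' },
  edge: { 'wss.port': '443' },
};

// Returns a list of human-readable problems; an empty list means all good.
function checkProperties(text, role) {
  const props = parseProperties(text);
  return Object.entries(EXPECTED[role])
    .filter(([key, want]) => props[key] !== want)
    .map(([key, want]) => `${key}: expected ${want}, got ${props[key] ?? '(missing)'}`);
}

console.log(checkProperties('load_balancing_enabled =true\nhttps.port=443\n', 'origin')); // []
console.log(checkProperties('wss.port=8443\n', 'edge')); // [ 'wss.port: expected 443, got 8443' ]
```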

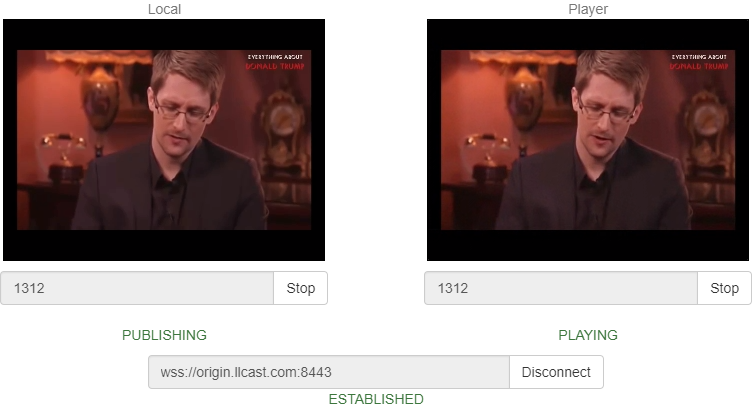

Webcam streaming to Origin

Users of our mini-CDN are divided into streamers and players. Streamers broadcast video to the CDN, and players are viewers who play this video back. The streamer interface may look like this:

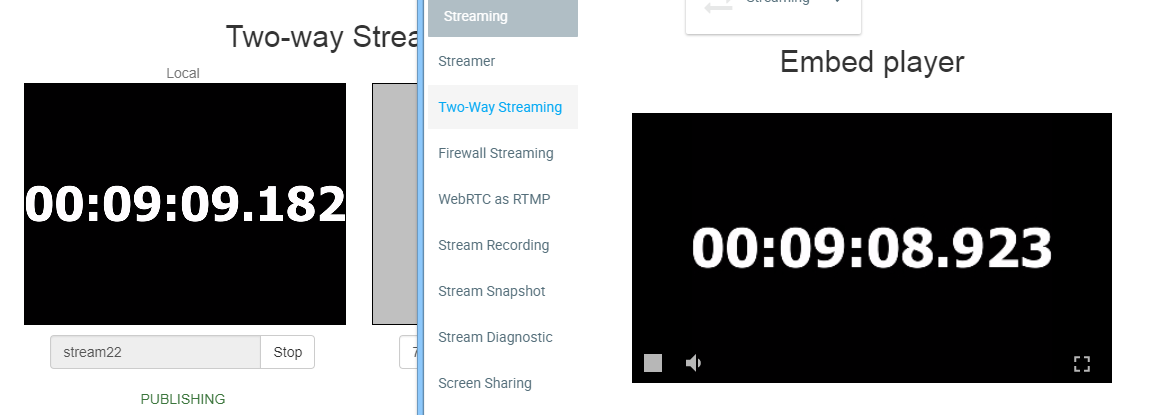

The easiest way to test this interface is to open the Two Way Streaming demo on the Origin server.

Example streamer on the Origin server:

https://origin.llcast.com:8888/demo2/two-way-streaming

For testing, establish a Websocket connection to the server with the Connect button, then send the video stream to the server with the Publish button. If everything is configured correctly, the stream will appear on the Edge1 and Edge2 servers and can be played from them.

This interface is just a demo HTML page built on the client-side JavaScript API (Web SDK); it can be customized to any design using HTML/CSS.

Playing the stream from the Edge1 and Edge2 servers

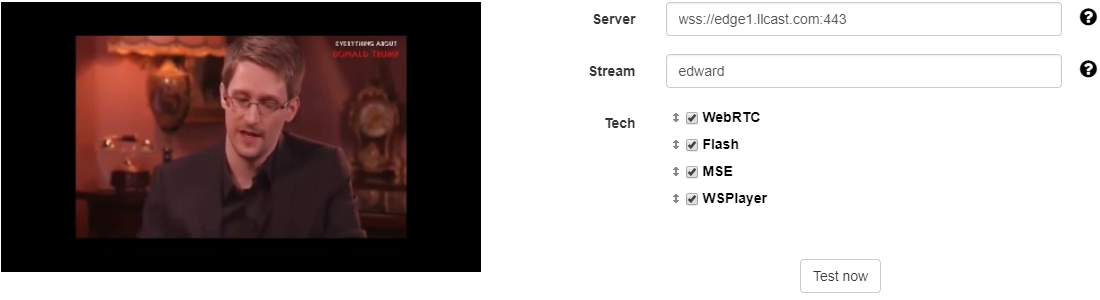

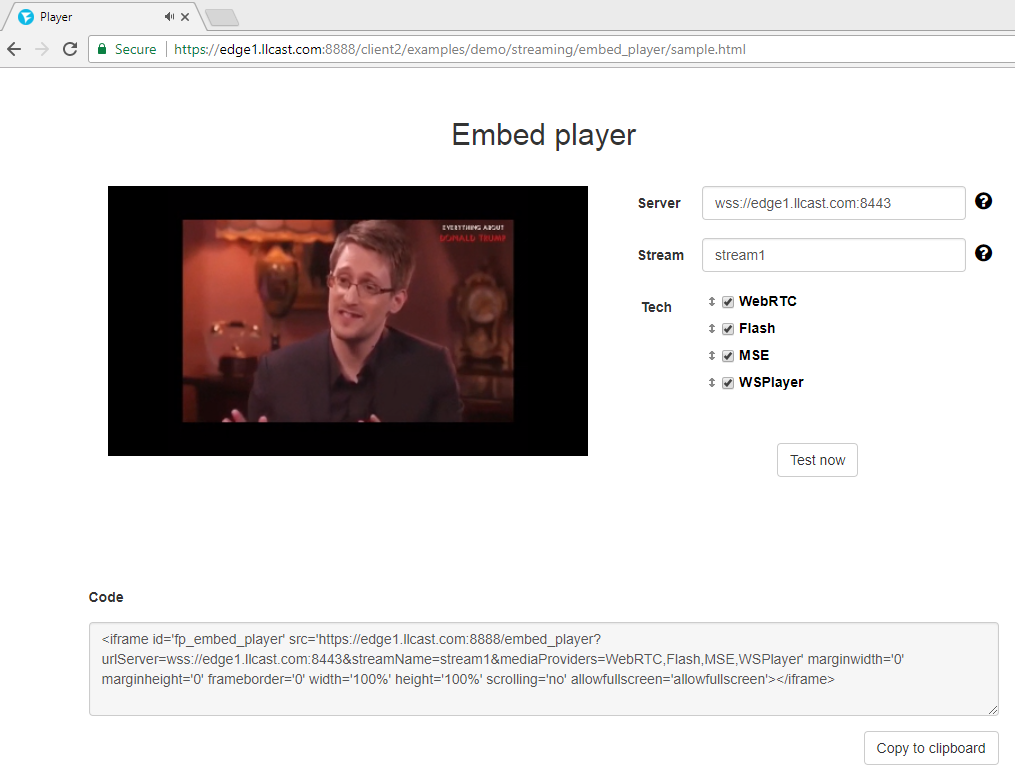

The player interface can be taken from either edge1.llcast.com or edge2.llcast.com: the Demo / Embed Player example.

You can test by connecting directly to an Edge server via these links:

Edge1

https://edge1.llcast.com:8888/demo2/embed-player

Edge2

https://edge2.llcast.com:8888/demo2/embed-player

The player establishes a Websocket connection with the specified Edge server and receives the video stream from it over WebRTC. Note that the Websocket connection to the Edge servers is established on port 443, which we configured above for firewall traversal.

This player can be embedded in a website as an iframe, or you can integrate it more closely using the JavaScript API and build your own HTML/CSS design.

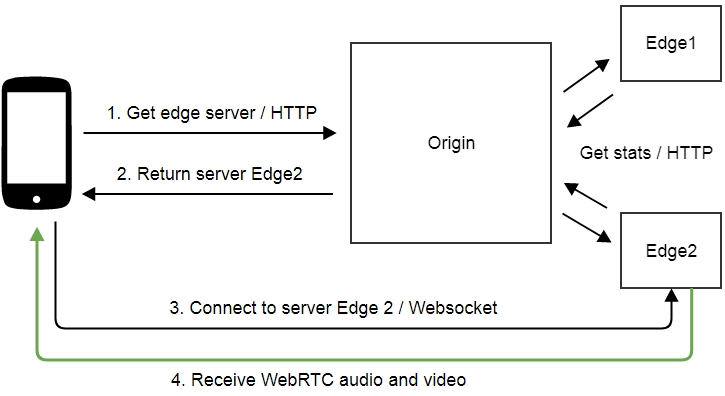

Connecting the load balancer to the player

Above, we made sure that Origin receives a video stream and replicates this stream to two Edge servers. We connected to each of the Edge servers separately and tested the playback of the stream.

Now we need to use the balancer so that the player selects an Edge server from the pool automatically.

To do this, specify the balancer address, lbUrl, in the player's JavaScript code. When it is set, the Embed Player example first contacts the balancer to get the recommended server address, and then establishes a Websocket connection with that address.

The balancer URL is easy to test. Open it in a browser:

https://origin.llcast.com/?action=server_list

The result is a piece of JSON in which the balancer names the recommended Edge server.

```json
{
  "server": "edge1.llcast.com",
  "flash": "1935",
  "ws": "8080",
  "wss": "443"
}
```

In this case, the balancer returned the first server, edge1.llcast.com, and indicated that a secure Websocket connection (wss) can be established with it on port 443.

If you refresh the page, the balancer returns one Edge server and then the other, in turn. This is how round-robin works.
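To build its Websocket address, a player that uses the balancer only needs the `server` and `wss` fields from this JSON. The sketch below shows that step with the standard `fetch` API, which is available in browsers and in Node.js 18+. The function names are hypothetical. The field names are taken from the sample response above, not from a published schema. The sketch also does not claim to reproduce what the Web SDK does internally with `lbUrl`.

```javascript
// Turns a balancer reply such as
// { "server": "edge1.llcast.com", "flash": "1935", "ws": "8080", "wss": "443" }
// into a secure Websocket URL for the chosen Edge server.
function wssUrlFromBalancer(reply) {
  if (!reply || !reply.server || !reply.wss) {
    throw new Error('balancer reply lacks "server" or "wss"');
  }
  return `wss://${reply.server}:${reply.wss}`;
}

// Asks the balancer for an Edge server (requires a global fetch).
async function pickEdgeUrl(lbUrl) {
  const res = await fetch(lbUrl);
  if (!res.ok) throw new Error(`balancer returned HTTP ${res.status}`);
  return wssUrlFromBalancer(await res.json());
}

const sample = { server: 'edge1.llcast.com', flash: '1935', ws: '8080', wss: '443' };
console.log(wssUrlFromBalancer(sample)); // wss://edge1.llcast.com:443
// pickEdgeUrl('https://origin.llcast.com/?action=server_list').then(console.log);
```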

It remains to enable the balancer in the player. To do this, add the lbUrl parameter to the session setup in the player code, player.js:

```javascript
Flashphoner.createSession({
    urlServer: urlServer,
    mediaOptions: mediaOptions,
    lbUrl: 'https://origin.llcast.com/?action=server_list'
});
```

The full player code is listed below:

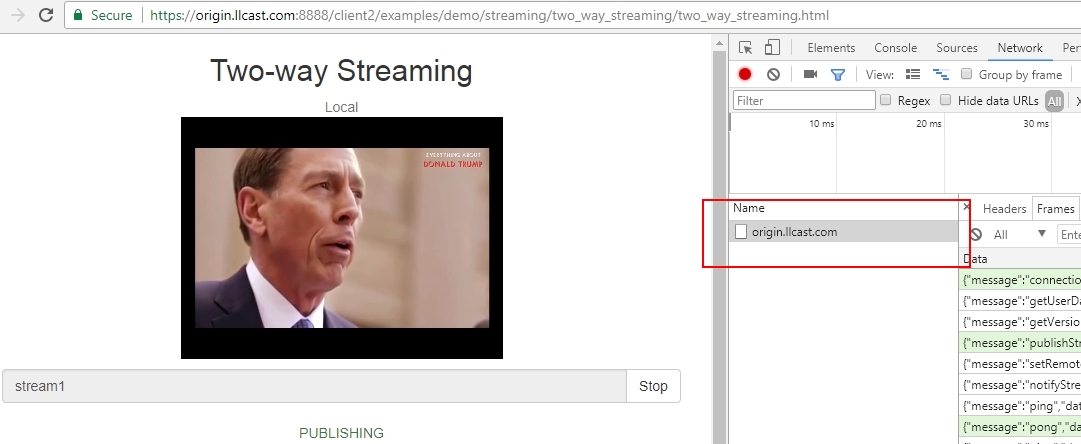

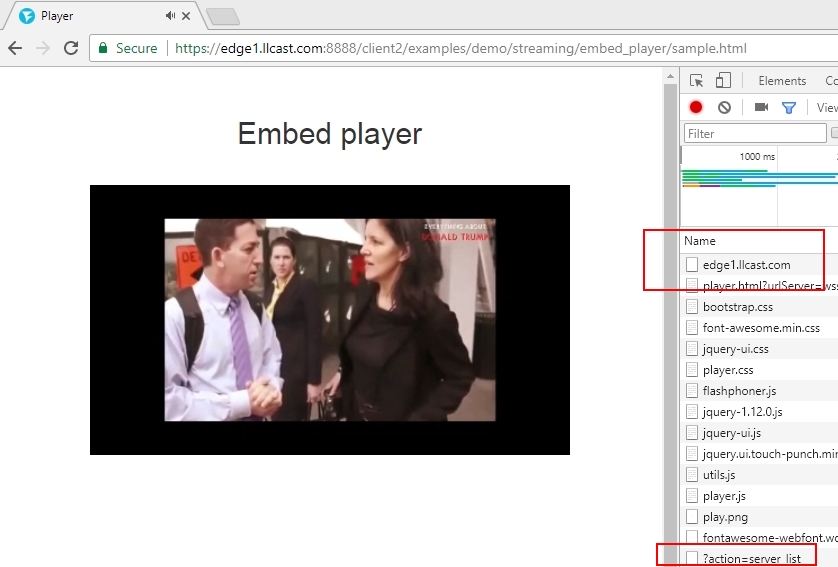

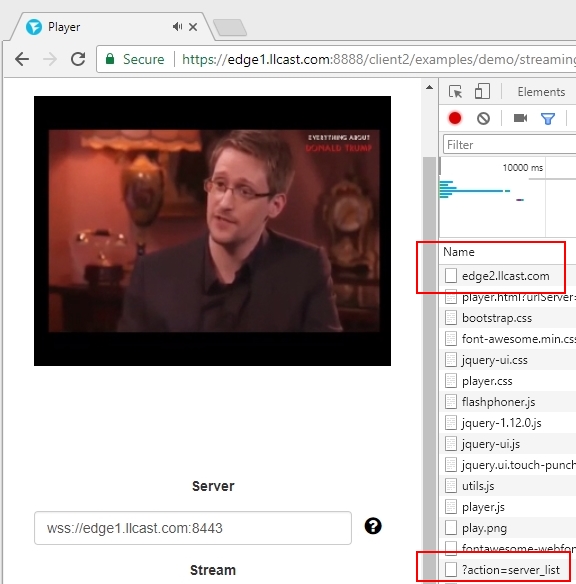

https://edge1.llcast.com:8888/client2/examples/demo/streaming/embed_player/player.js

We check that the balancer works and correctly distributes connections across edge1.llcast.com and edge2.llcast.com. To do this, simply refresh the player page and look at the Network tab in Developer Tools, where you can see the connection to the balancer and to the edge1.llcast.com server (highlighted in red).

https://edge1.llcast.com:8888/client2/examples/demo/streaming/embed_player/player.html
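Instead of refreshing the page by hand, you can check the round-robin distribution by querying the balancer URL several times and counting which Edge server each reply names. This is a hypothetical test script, not part of the WCS demos. The fetch function is passed in as a parameter, so the counting logic can also be exercised without a live balancer.

```javascript
// Queries the balancer `times` times and tallies the returned "server" field.
// fetchFn defaults to the global fetch (browsers, Node.js 18+).
async function tallyBalancer(lbUrl, times, fetchFn = fetch) {
  const counts = {};
  for (let i = 0; i < times; i++) {
    const res = await fetchFn(lbUrl);
    const { server } = await res.json();
    counts[server] = (counts[server] || 0) + 1;
  }
  return counts;
}

// Offline usage with a fake balancer that alternates like roundrobin mode.
const hosts = ['edge1.llcast.com', 'edge2.llcast.com'];
let call = 0;
const fakeFetch = async () => ({ json: async () => ({ server: hosts[call++ % hosts.length] }) });

tallyBalancer('https://origin.llcast.com/?action=server_list', 10, fakeFetch).then((c) =>
  console.log(c) // { 'edge1.llcast.com': 5, 'edge2.llcast.com': 5 }
);
```

Against the live balancer, call it without the third argument. A roughly even split between the two Edge hosts is what round-robin mode should produce.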

Final test

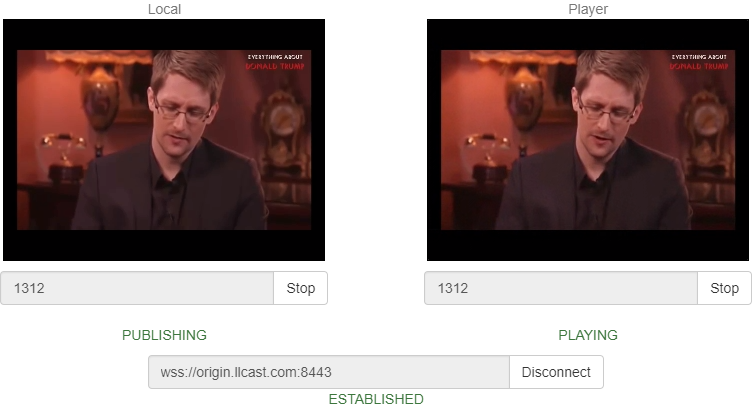

We have set up Origin-Edge relaying, balancing, and connecting the player based on the balancer data. It remains to put everything together and run a test demonstrating how the resulting CDN works.

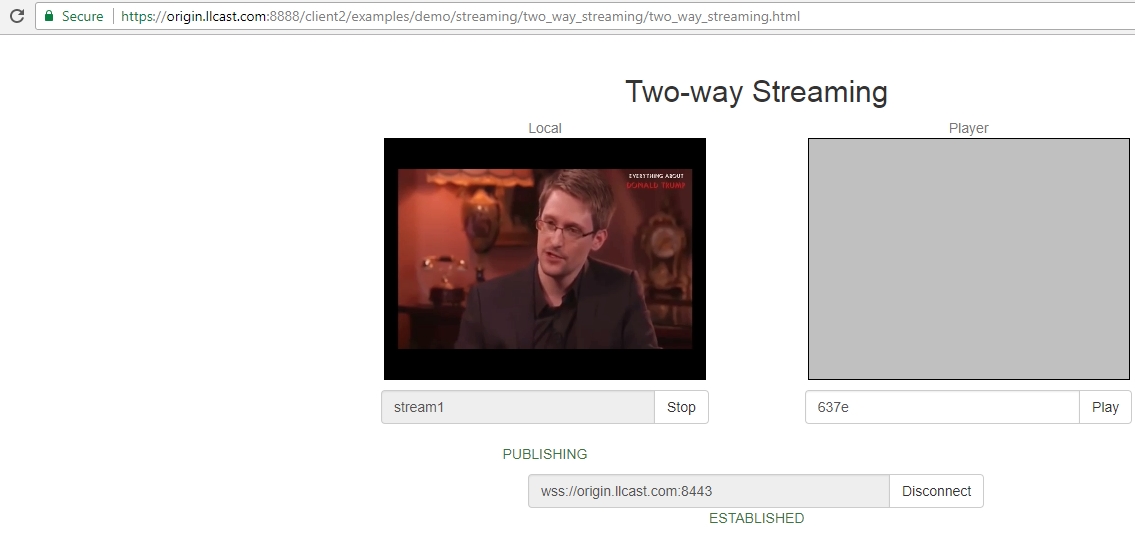

1. Open the streamer page

https://origin.llcast.com:8888/client2/examples/demo/streaming/two_way_streaming/two_way_streaming.html

From the Google Chrome browser, we send a video stream named stream1 from a webcam to the Origin server.

In Dev Tools - Network, we make sure that the broadcasting browser has connected to the Origin server and sent the stream to it.

2. Open the player page on the Edge server and play stream1.

https://edge1.llcast.com:8888/client2/examples/demo/streaming/embed_player/sample.html

We see that the balancer returned edge1.llcast.com and the stream is currently being played from it.

Then we refresh the page, and the balancer connects the player to edge2.llcast.com, which is located in Singapore.

Thus, we have completed the first task: configuring a geographically distributed, low-latency CDN based on WebRTC.

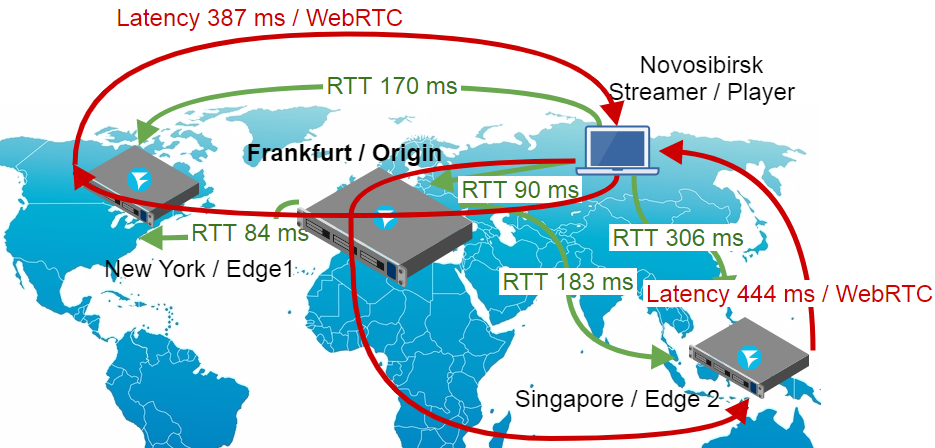

Next, we need to check the latency and make sure it is really low relative to RTT. Over a global network, a good latency is a stable one that does not exceed 1 second. Let's check what we got.

Measure the delay

We assume that the latency will differ depending on which server the player connects to: Edge1 in New York or Edge2 in Singapore.

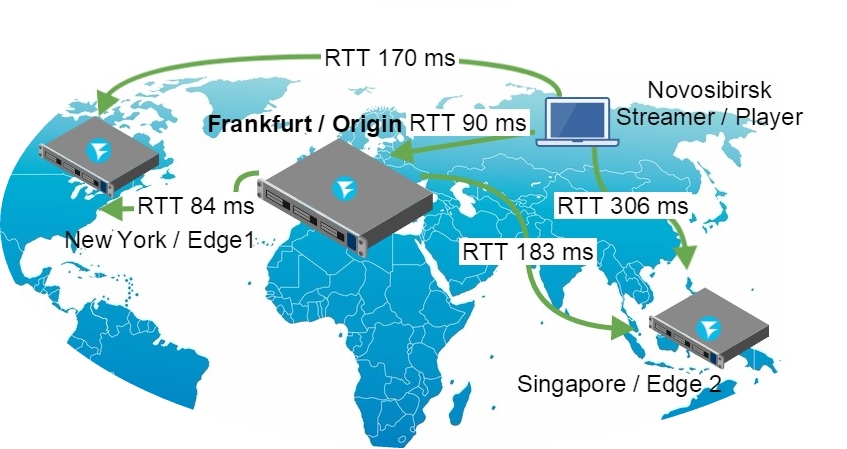

To have a point of reference, we build an RTT map to understand how our test network is arranged and what to expect from its participants. We assume there are no significant packet losses and measure only the ping time (RTT).

As you can see, our test network is quite global and non-uniform in delay. The streamer and the player are both located in Novosibirsk. Ping to the Edge1 server in New York is 170 ms, and to Edge2 in Singapore 306 ms.

We will take this data into account when analyzing the test results. For example, if we publish the stream to Origin in Frankfurt, relay it to Edge2 in Singapore, and play it in Novosibirsk, the latency is expected to be no less than (90 + 183 + 306) / 2 ≈ 290 milliseconds.
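The estimate above assumes that media crosses each leg of the route exactly once and that each leg's one-way delay is half its RTT, i.e. symmetric paths with no processing or buffering delay. Under those assumptions, the lower bound on one-way latency is half the sum of the leg RTTs. For the Singapore route (Novosibirsk to Frankfurt, Frankfurt to Singapore, Singapore to Novosibirsk):

```latex
L_{\min} = \frac{1}{2}\sum_{i} \mathrm{RTT}_i
         = \frac{\mathrm{RTT}_{\text{Nsk-Fra}} + \mathrm{RTT}_{\text{Fra-Sgp}} + \mathrm{RTT}_{\text{Sgp-Nsk}}}{2}
         = \frac{90 + 183 + 306}{2} = 289.5 \approx 290\ \text{ms}
```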

Let's check these assumptions in practice. To measure latency, we use a test with a millisecond timer from here, feeding it to the browser as a virtual camera.

We send the stream to Origin and take several screenshots while playing it from the Edge1 server. On the left is the local video from the camera with zero delay, and on the right is the video that reached the player through the CDN.

Test results:

| Test number | Latency (ms) |
|---|---|
| 1 | 386 |
| 2 | 325 |
| 3 | 384 |
| 4 | 387 |
| Average | 370 |

As you can see, the average latency of the video stream along the delivery path Novosibirsk (streamer) - Frankfurt (Origin) - New York (Edge1) - Novosibirsk (player) was 370 milliseconds. The RTT over the same path is 90 + 84 + 170 = 344 milliseconds. Thus, the latency comes to about 1.08 RTT (370 / 344), a good result for delivering a live video stream, considering that the ideal would be 0.5 RTT.

Delay and RTT for the route Edge1, New York

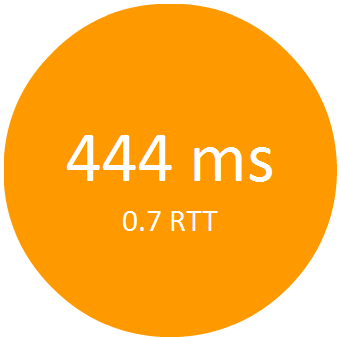

Now we will take the same stream from the Edge2 server in Singapore and understand how increasing RTT affects latency. We do a similar test with the Edge2 server and arrive at the following results:

| Test number | Latency (ms) |
|---|---|
| 1 | 436 |
| 2 | 448 |
| 3 | 449 |
| Average | 444 |

From this test, we see that the ratio of latency to RTT is about 0.77 (444 / 579, where 579 = 90 + 183 + 306 ms), which is better than in the previous test.

Delay and RTT for route Edge2, Singapore

The test results suggest that as RTT grows, latency does not grow dramatically: the latency-to-RTT ratio decreases and may gradually approach 0.5 RTT.

In any case, the goal of building a geographically distributed CDN with latency under one second has been achieved, and there is room for further testing and optimization.
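The averages and latency-to-RTT ratios for both routes can be recomputed from the measurements in the two tables. The RTT sums use the per-leg values given in the text: 90 + 84 + 170 ms via New York and 90 + 183 + 306 ms via Singapore. The `routes` structure and the `summarize` helper are illustrative names.

```javascript
// Recomputes average latency and its ratio to the route RTT from the test data.
const routes = {
  'Edge1, New York': { samples: [386, 325, 384, 387], legRtts: [90, 84, 170] },
  'Edge2, Singapore': { samples: [436, 448, 449], legRtts: [90, 183, 306] },
};

const sum = (xs) => xs.reduce((a, b) => a + b, 0);

function summarize({ samples, legRtts }) {
  const avgLatency = sum(samples) / samples.length;
  const rtt = sum(legRtts);
  return {
    avgLatency: +avgLatency.toFixed(1),
    rtt,
    ratio: +(avgLatency / rtt).toFixed(2), // 0.5 would be the ideal (one-way) bound
  };
}

for (const [name, data] of Object.entries(routes)) {
  console.log(name, summarize(data));
}
// Edge1, New York { avgLatency: 370.5, rtt: 344, ratio: 1.08 }
// Edge2, Singapore { avgLatency: 444.3, rtt: 579, ratio: 0.77 }
```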

Finally

As a result, we deployed three servers (Origin, Edge1 and Edge2) rather than the four promised at the beginning of the article. Setting up, configuring and testing a TURN server with clients behind a firewall would make this already long article much longer, so we think it makes sense to cover it in a separate article. In the meantime, keep in mind that the fourth server in this scheme can be a TURN server that serves WebRTC browsers on port 443, enabling traversal through firewalls.

So, the CDN for WebRTC streams is configured and the latency is measured. The final scheme looks like this:

How can this scheme be improved and expanded? It can be scaled horizontally. For example, we can add several Origin servers with a balancer in front of them. This way, we balance not only viewers but also streamers, who broadcast content directly from their webcams:

The CDN is still open for demo access on the llcast.com domain. We may shut it down soon, at which point the demo links will stop working. So if you want to measure the latency yourself, you are welcome to. Happy streaming!

Links

https://origin.llcast.com:8888 - Origin Demo Server

https://edge1.llcast.com:8888 - Edge1 demo server

https://edge2.llcast.com:8888 - Edge2 demo server

Two Way Streaming - demo streaming interface from webcam to Origin.

Embed Player - demo player interface with balancer between Edge1 and Edge2

WCS5 - the WebRTC server on which the CDN is built

Source: https://habr.com/ru/post/340344/
