VPC statistics service

In this article, we will discuss what components are used to collect, process and store the metrics of virtual machines, and give examples of how to configure the use of these components in a VPC project.

OpenStack Ceilometer is the component responsible for collecting metrics and performing their initial processing.


For a long time, it was the only component of OpenStack providing all the basic telemetry capabilities.

Later, the developers split Ceilometer's functions among several projects:

- Aodh - alert service;

- Gnocchi - storage service of aggregated measurements;

- Panko - event storage service;

- Ceilometer - measurement collection service.

Aodh and Panko will remain outside the scope of this article.

Measurement Collection - OpenStack Ceilometer

Ceilometer can collect measurements of CPU, RAM, network interface and disk usage, as well as temperature readings, from various sources:

- directly from hypervisors (KVM, Hyper-V, Xen, VMware);

- from the message queue (RabbitMQ, ZeroMQ, Kafka);

- by polling other OpenStack components through the REST API;

- by polling equipment using SNMP or IPMI protocols.

Previously, Ceilometer also provided an API for working with collected measurements, metrics, and resources. Since the release of OpenStack Ocata, this functionality has been removed from the component, because the main backend for Ceilometer is now Gnocchi, which has its own full-featured API for working with all these objects.

We first tried Ceilometer a few years ago, in the OpenStack Juno release.

The first version of our billing for the VPC project sent reports to it, where they were available to service programs and to users authorized with a standard OpenStack Keystone token.

The main backends for Ceilometer at that time were SQL databases and MongoDB. Unfortunately, after six months of use both options stopped coping with the load, because the collected data was too loosely structured, not indexed, and too detailed.

Each measurement was stored in its original form, without any aggregation or modification. On large volumes of data, even simple queries that select measurements for a given time period began to take a very long time, and the service became unusable.

To overcome these architectural problems, the OpenStack telemetry developers started a new project called Gnocchi in 2014. A detailed description of the causes and the chosen solution can be found in an overview article on the blog of Gnocchi's lead developer, Julien Danjou: OpenStack Ceilometer and the Gnocchi experiment.

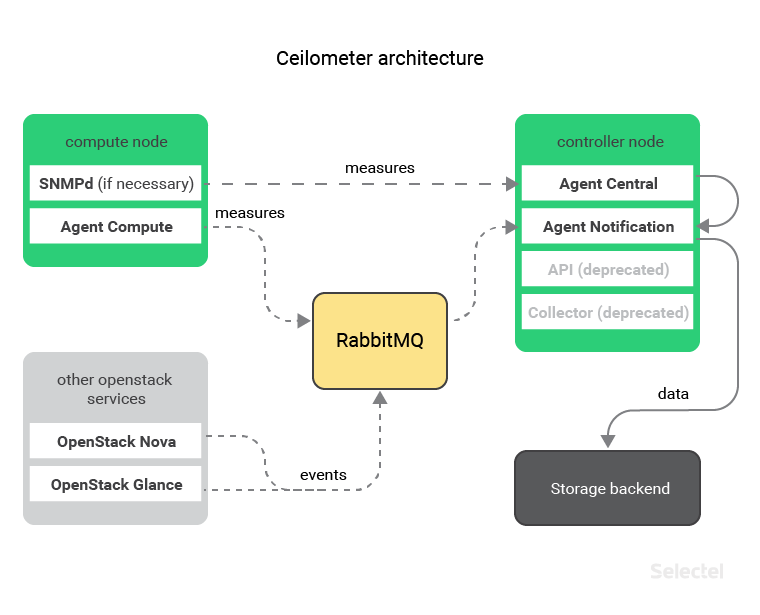

Ceilometer architecture

The architecture of Ceilometer is clearly shown in the diagram:

Each host that runs a hypervisor also runs the Ceilometer Agent Compute component, which collects data from virtual machines. The collected data is then passed through a message queue to the central Ceilometer Agent Notification.

If you need to collect data on the load of physical hosts, you can install an SNMP agent on them. In this case, the data is collected by the central Ceilometer Agent Central.

After processing, this data is passed to the Ceilometer Agent Notification, which sends it to the selected backend for storage.

Another central agent, the Ceilometer Agent Notification, can collect information about events from other OpenStack components while listening to a message queue. This can be useful if you want to monitor and save information about creating, changing or deleting servers, disks or ports.

Until recently (more precisely, before the OpenStack Ocata release in February 2017), one more component, Ceilometer Collector, had to be installed on the control hosts alongside Ceilometer Agent Notification and Ceilometer Agent Central.

This component is no longer used. All of its functions are performed by the Ceilometer Agent Notification.

Ceilometer Setup

The main Ceilometer configuration files are:

- ceilometer.conf - describes all the basic Ceilometer parameters (logging, message queue usage, authentication, coordination of Ceilometer processes, etc.);

- pipeline.yaml - describes which data should be collected and where it should be sent;

- gnocchi_resources.yaml - describes the mapping between Ceilometer and Gnocchi objects (we will discuss this later).

A template ceilometer.conf file with all available options can be downloaded or generated by following the official documentation: Ceilometer Configuration Options.

The pipeline.yaml file has to be written by hand.

Example:

    ---
    sources:                            # where the data comes from
        - interval: 600                 # polling interval (in seconds)
          meters:
              - memory.usage            # memory used inside the virtual machine (in MB)
              - memory.resident         # memory used by the virtual machine on the host (in MB)
          name: memory_meters           # source name
          sinks:
              - memory_sink             # sink that receives these meters
        - interval: 600                 # polling interval (in seconds)
          meters:
              - disk.device.read.bytes
              - disk.device.write.bytes
          name: disk_meters             # source name
          sinks:
              - disk_sink               # sink that receives these meters
    sinks:                              # where the data goes
        - name: memory_sink             # sink name (referenced by the "memory_meters" source)
          publishers:
              - gnocchi://              # save to Gnocchi
        - name: disk_sink               # sink name (referenced by the "disk_meters" source)
          publishers:
              - gnocchi://              # save to Gnocchi

In this file, we can additionally specify transformations for the collected data. For example, we can convert all values of the memory.usage and memory.resident metrics from megabytes to kilobytes, renaming the metrics and setting their unit of measurement at the same time.

To do this, we need to edit the memory_sink consumer in the following way:

    sinks:
        - name: memory_sink
          transformers:
              - name: "unit_conversion"                       # the unit_conversion transformer
                parameters:
                    source:
                        map_from:
                            name: "memory\\.(usage|resident)"   # source metric names
                    target:
                        map_to:
                            name: "memory.\\1.kilobytes"        # new metric names
                        scale: "volume * 1024.0"                # conversion formula
                        unit: "KB"                              # new unit of measurement
          publishers:
              - gnocchi://

Detailed information about the format of this file and about other transformations can be found in the official documentation: Data processing and pipelines.
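The effect of this transformer can be illustrated with a minimal plain-Python sketch. It is not Ceilometer's implementation: the sample is modelled as a simple dictionary, and the function name convert_sample is hypothetical. Only the regular expression, the target name pattern, the scale factor and the unit come from the configuration above.

```python
import re

# Values taken from the unit_conversion parameters in the sink definition.
SOURCE_NAME = re.compile(r"memory\.(usage|resident)")
TARGET_NAME = r"memory.\1.kilobytes"
SCALE = 1024.0  # "volume * 1024.0": megabytes to kilobytes
TARGET_UNIT = "KB"


def convert_sample(sample):
    """Return a converted copy of the sample, or the sample itself if its name does not match.

    The dictionary layout (name, volume, unit) is an assumption made for this sketch.
    """
    match = SOURCE_NAME.fullmatch(sample["name"])
    if match is None:
        return sample
    return {
        "name": match.expand(TARGET_NAME),
        "volume": sample["volume"] * SCALE,
        "unit": TARGET_UNIT,
    }


converted = convert_sample({"name": "memory.usage", "volume": 2048, "unit": "MB"})
print(converted["name"], converted["volume"], converted["unit"])
```

A sample whose name does not match the pattern (for example cpu_util) is passed through unchanged.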

Next we look at the Gnocchi project, which allows you to store collected measurements in an aggregated form and provides an API for working with them.

Data Storage - Gnocchi

Gnocchi is a time-series database.

It should be noted that in early May 2017 the Gnocchi developers decided to move the project out of the OpenStack infrastructure.

The codebase was moved to GitHub, and GitHub Issues is used as the bug tracker. One of the main reasons is the desire to position Gnocchi not only as a backend for Ceilometer, but as a more versatile product suitable for other solutions. For example, the Gnocchi repository already has a plugin for saving data from Collectd and a plugin for plotting graphs in Grafana.

Let's look at the architecture and features of Gnocchi in more detail.

Gnocchi Architecture

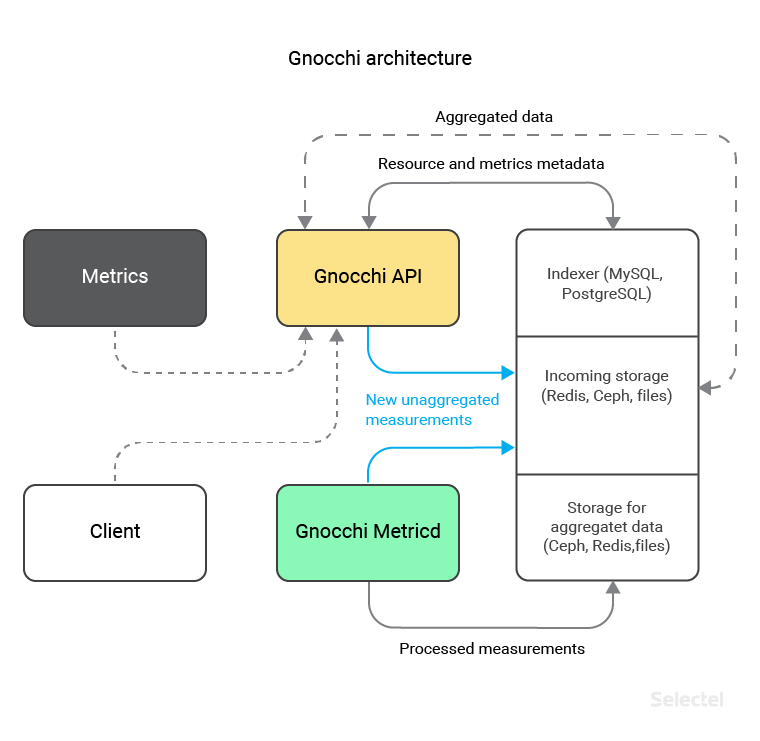

Gnocchi consists of two main components:

- API, through which data is saved and updated (for example, by Ceilometer or manually) and aggregated data is retrieved;

- Metricd, a daemon that processes newly received, not yet sorted data and saves it in the required form to the selected storage (Ceph, Redis, OpenStack Swift or the local file system).

Starting with version 3.1, Gnocchi supports separate storage backends for processed and raw data. This separation lets you place “hot” non-aggregated data on fast storage (for example Redis, supported since Gnocchi 4.0) and save aggregated data to a more reliable, scalable backend (for example Ceph, supported since the very first version of Gnocchi).

In addition to the measurement storage itself, Gnocchi requires an SQL database that holds the metric indexes and their metadata. You can use MySQL or PostgreSQL for this. Gnocchi uses its own format for storing measurements, because none of these storage technologies can store data as time series natively.

Saving data in Gnocchi

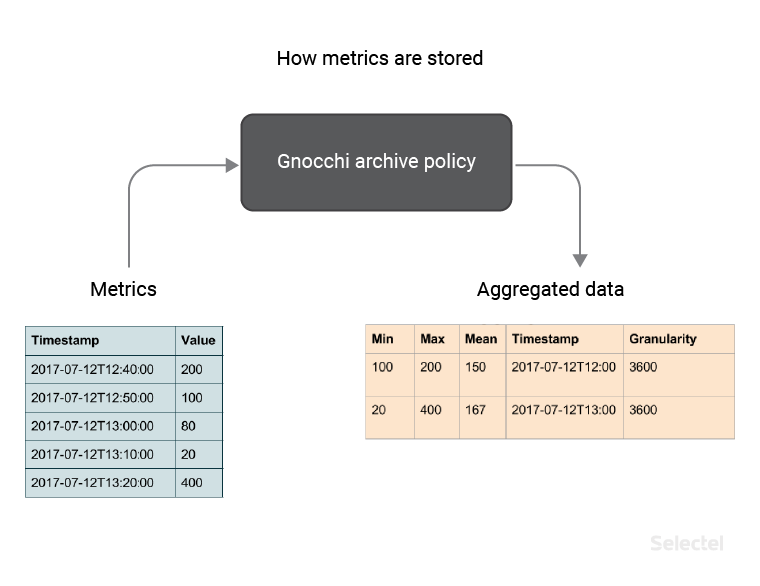

When new data is received, the values are not saved in their original form. Instead, they are aggregated using the methods selected by the user (in our example, minimum, maximum and mean) and saved with the specified granularity (in our example, 3600 seconds), in accordance with the configured archive policy.
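The idea of aggregation can be shown with a short plain-Python sketch. It only illustrates the principle and does not reproduce Gnocchi's storage code: the function name aggregate and the sample values are hypothetical, while the 3600-second granularity and the min, max and mean methods follow the example in the text.

```python
from collections import defaultdict
from datetime import datetime, timezone
from statistics import mean

GRANULARITY = 3600  # seconds, as in the example above


def aggregate(measures, granularity=GRANULARITY):
    """Group (timestamp, value) pairs into fixed-size buckets and compute min, max and mean."""
    buckets = defaultdict(list)
    for timestamp, value in measures:
        epoch = int(timestamp.timestamp())
        # Round the timestamp down to the start of its bucket.
        buckets[epoch - epoch % granularity].append(value)
    return {
        datetime.fromtimestamp(start, tz=timezone.utc): {
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
        for start, values in sorted(buckets.items())
    }


# Made-up raw measures: two fall into the 12:00 bucket, one into the 13:00 bucket.
raw = [
    (datetime(2017, 8, 25, 12, 5, tzinfo=timezone.utc), 2200.0),
    (datetime(2017, 8, 25, 12, 35, tzinfo=timezone.utc), 2300.0),
    (datetime(2017, 8, 25, 13, 10, tzinfo=timezone.utc), 2250.0),
]
result = aggregate(raw)
for start, stats in result.items():
    print(start.isoformat(), stats)
```

After aggregation only one point per bucket and per method is kept, which is why the stored volume no longer depends on how often the raw measures arrive.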

We can create an archive policy using the python-gnocchiclient console client. For example, let's create a policy named memory_policy with three aggregation methods (minimum, maximum and mean) and two definitions of how the data will be stored. The first definition says that we store data with a granularity of 1 hour for 7 days. The second says that we store data with a granularity of 1 day for 1 year.

In addition, let's set the back window to 72. This lets Gnocchi accept not only fresh measures, but also old ones that got stuck for some reason, as long as they are no more than 72 days old.

    controller:~# gnocchi archive-policy create memory_policy \
    > -b 72 \
    > -m min -m max -m mean \
    > -d 'granularity:1h,timespan:7d' \
    > -d 'granularity:1d,timespan:365d'

In response, we will receive a description of the created policy:

    +---------------------+-------------------------------------------------------------------------+
    | Field               | Value                                                                   |
    +---------------------+-------------------------------------------------------------------------+
    | aggregation_methods | max, min, mean                                                          |
    | back_window         | 72                                                                      |
    | definition          | - points: 168, granularity: 1:00:00, timespan: 7 days, 0:00:00          |
    |                     | - points: 365, granularity: 1 day, 0:00:00, timespan: 365 days, 0:00:00 |
    | name                | memory_policy                                                           |
    +---------------------+-------------------------------------------------------------------------+

Note that the points value was calculated automatically for each definition. This value is calculated by the formula:

Points = Timespan / Granularity

The number of points is needed to estimate the space that the stored metric values will occupy.

Gnocchi developers suggest using the following formula to calculate the required space:

Size (in bytes) = 8 * points * aggregation methods

This formula gives a worst-case estimate, since the data is always stored in compressed form. Using it, we can calculate the amount of space for the memory_policy archive policy. For the first definition (granularity of 1 hour for 7 days) we get 4032 bytes:

4032 (byte) = 8 * 168 * 3

And for the second definition (granularity of 1 day for 1 year) we get 8760 bytes:

8760 (byte) = 8 * 365 * 3

The resulting values represent the used space for one metric stored according to the memory_policy policy.

If we, for example, start collecting 2 metrics - memory.usage and memory.resident for 2000 virtual machines, then we will need:

2000 * 2 * 4032 (byte) = 16.128 MB (for 7 days)

2000 * 2 * 8760 (byte) = 35.04 MB (for 1 year)
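The same arithmetic can be reproduced with a small Python sketch. The function names points and metric_size are hypothetical helpers written for this illustration. The 8 bytes per point, the two definitions, the three aggregation methods and the 2000 virtual machines with 2 metrics each are taken from the text above.

```python
from datetime import timedelta

BYTES_PER_POINT = 8  # worst-case size of one stored point, per the formula above


def points(timespan, granularity):
    """Points = Timespan / Granularity."""
    return timespan // granularity


def metric_size(timespan, granularity, aggregation_methods):
    """Size (in bytes) = 8 * points * aggregation methods."""
    return BYTES_PER_POINT * points(timespan, granularity) * aggregation_methods


# The two definitions of the memory_policy archive policy.
definitions = [
    (timedelta(days=7), timedelta(hours=1)),
    (timedelta(days=365), timedelta(days=1)),
]
metrics_total = 2000 * 2  # 2000 virtual machines, 2 metrics each

sizes = [metric_size(timespan, granularity, 3) for timespan, granularity in definitions]
for size in sizes:
    print(size, "bytes per metric,", metrics_total * size / 1e6, "MB in total")
```

Changing the granularity, timespan or number of aggregation methods in this sketch shows how quickly the worst-case estimate grows for a different policy.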

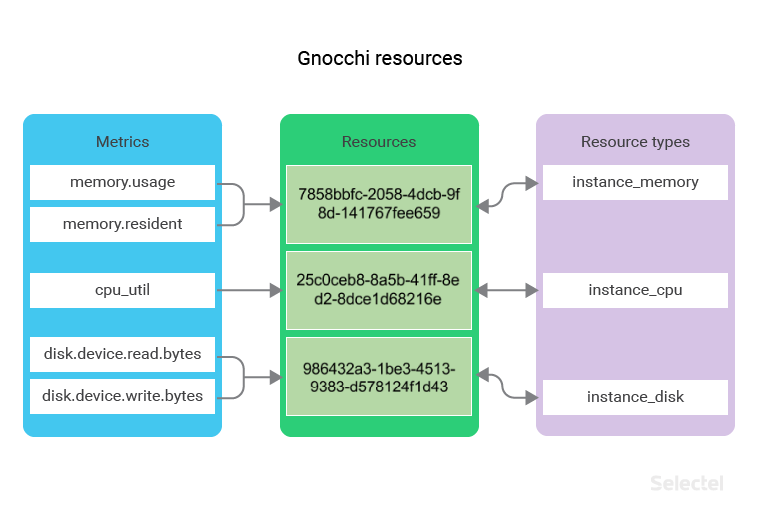

Gnocchi Resources

Every metric stored in Gnocchi belongs to a resource. A resource is an abstract object that can contain several metrics and can also have additional fields, which are described in its resource type.

The relationship between metric, resource and resource type looks like this:

For example, we have a set of resources of type instance_memory. Each resource of this type contains the metrics memory.usage and memory.resident.

If we collect metrics from 2000 virtual machines, the number of instance_memory resources will also be equal to 2000.

For such resources, it may be useful to store additional information, for example, the host name of the hypervisor running the virtual machine or the identifier of the operating system image (stored in the OpenStack Glance service) from which the virtual machine was created.
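The relationship between a resource type, a resource and its metrics can be sketched with two small Python classes. This is only an illustrative model, not Gnocchi's internal data structure: the class and field names are hypothetical, and treating host as a mandatory field and image_ref as an optional one is an assumption of this sketch. The identifiers in the usage example are the ones that appear in the command output later in this article.

```python
from dataclasses import dataclass, field


@dataclass
class ResourceType:
    """A resource type: a name plus the additional fields its resources may carry."""
    name: str
    required: set = field(default_factory=set)
    optional: set = field(default_factory=set)


@dataclass
class Resource:
    """A resource: an id, a type, additional field values, and named metrics."""
    id: str
    type: ResourceType
    attributes: dict
    metrics: dict = field(default_factory=dict)  # metric name -> metric id

    def __post_init__(self):
        # Refuse to build a resource that lacks a mandatory field of its type.
        missing = self.type.required - set(self.attributes)
        if missing:
            raise ValueError(f"missing required attributes: {sorted(missing)}")


instance_memory = ResourceType("instance_memory", required={"host"}, optional={"image_ref"})

vm = Resource(
    id="945ad3cc-2617-4b19-a681-5a1cb96d71e1",
    type=instance_memory,
    attributes={"host": "compute00", "image_ref": "3456e843-b7fe-42be-8c4c-0c7d1c2d09c7"},
    metrics={
        "memory.usage": "aa757f46-52b9-40de-898c-524dfe29b7bc",
        "memory.resident": "f56b76ad-5ce8-49fe-975f-6e5da412df31",
    },
)
print(vm.type.name, sorted(vm.metrics))
```

With 2000 virtual machines there would be 2000 such Resource objects, all sharing the single instance_memory type.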

Let's create the instance_memory resource type using the console command. We specify the additional fields described above, host and image_ref, to store the host name and the image identifier, and make the host field mandatory:

    controller:~# gnocchi resource-type create instance_memory \
    > -a host:string:true:min_length=0:max_length=255 \
    > -a image_ref:string:false:min_length=0:max_length=255

In response, we will receive a description of the created resource type:

    +----------------------+-----------------------------------------------------------+
    | Field                | Value                                                     |
    +----------------------+-----------------------------------------------------------+
    | attributes/host      | max_length=255, min_length=0, required=True, type=string  |
    | attributes/image_ref | max_length=255, min_length=0, required=False, type=string |
    | name                 | instance_memory                                           |
    | state                | active                                                    |
    +----------------------+-----------------------------------------------------------+

Similarly, let's create the instance_cpu and instance_disk resource types. The first has the same additional fields as instance_memory, and the second has an instance_id field holding the id of the server to which the disk is attached:

    controller:~# gnocchi resource-type create instance_cpu \
    > -a host:string:true:min_length=0:max_length=255 \
    > -a image_ref:string:false:min_length=0:max_length=255
    +----------------------+-----------------------------------------------------------+
    | Field                | Value                                                     |
    +----------------------+-----------------------------------------------------------+
    | attributes/host      | max_length=255, min_length=0, required=True, type=string  |
    | attributes/image_ref | max_length=255, min_length=0, required=False, type=string |
    | name                 | instance_cpu                                              |
    | state                | active                                                    |
    +----------------------+-----------------------------------------------------------+

    controller:~# gnocchi resource-type create instance_disk \
    > -a instance_id:uuid:true
    +------------------------+--------------------------+
    | Field                  | Value                    |
    +------------------------+--------------------------+
    | attributes/instance_id | required=True, type=uuid |
    | name                   | instance_disk            |
    | state                  | active                   |
    +------------------------+--------------------------+

Now let's look at how to start saving Ceilometer metrics in Gnocchi using the created resource types.

Ceilometer + Gnocchi Configuration

For Ceilometer to start using Gnocchi as a backend, you need to:

1. Add the meter_dispatchers option to the ceilometer.conf file:

    [DEFAULT]
    meter_dispatchers = gnocchi

2. Create a file gnocchi_resources.yaml, which describes the mapping between Ceilometer metrics and Gnocchi resource types and also specifies the additional fields of the resource types:

    ---
    resources:
        - resource_type: instance_memory                     # resource type
          metrics:
              - 'memory.usage'                               # metrics of this resource type
              - 'memory.resident'
          attributes:
              host: resource_metadata.instance_host          # hypervisor host name
              image_ref: resource_metadata.image_ref         # image identifier
        - resource_type: instance_disk                       # resource type
          metrics:
              - 'disk.device.read.bytes'                     # metrics of this resource type
              - 'disk.device.write.bytes'
          attributes:
              instance_id: resource_metadata.instance_id     # id of the server the disk is attached to
        - resource_type: instance_cpu                        # resource type
          metrics:
              - 'cpu_util'                                   # metrics of this resource type
          attributes:
              host: resource_metadata.instance_host          # hypervisor host name
              image_ref: resource_metadata.image_ref         # image identifier

After Ceilometer is started, it will begin collecting data and sending it to Gnocchi (provided that Gnocchi was configured according to the documentation).
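How such a mapping could be applied to a single sample is sketched below in plain Python. This is an illustration under assumptions, not Ceilometer's dispatcher code: the sample is modelled as a nested dictionary, and the helper names resolve and map_sample are hypothetical. The resource types, metric names and attribute paths repeat the gnocchi_resources.yaml example.

```python
# The same mapping as in gnocchi_resources.yaml, written as Python data.
RESOURCES = [
    {
        "resource_type": "instance_memory",
        "metrics": ["memory.usage", "memory.resident"],
        "attributes": {
            "host": "resource_metadata.instance_host",
            "image_ref": "resource_metadata.image_ref",
        },
    },
    {
        "resource_type": "instance_disk",
        "metrics": ["disk.device.read.bytes", "disk.device.write.bytes"],
        "attributes": {"instance_id": "resource_metadata.instance_id"},
    },
    {
        "resource_type": "instance_cpu",
        "metrics": ["cpu_util"],
        "attributes": {
            "host": "resource_metadata.instance_host",
            "image_ref": "resource_metadata.image_ref",
        },
    },
]


def resolve(sample, dotted_path):
    """Follow a dotted path such as 'resource_metadata.instance_host' through nested dicts."""
    value = sample
    for key in dotted_path.split("."):
        value = value[key]
    return value


def map_sample(sample):
    """Return (resource type, attribute values) for a sample, or None if no type lists its meter."""
    for definition in RESOURCES:
        if sample["name"] in definition["metrics"]:
            attributes = {
                name: resolve(sample, path)
                for name, path in definition["attributes"].items()
            }
            return definition["resource_type"], attributes
    return None


# Hypothetical sample layout, used only for this sketch.
sample = {
    "name": "memory.usage",
    "resource_metadata": {
        "instance_host": "compute00",
        "image_ref": "3456e843-b7fe-42be-8c4c-0c7d1c2d09c7",
    },
}
print(map_sample(sample))
```

A meter that is not listed under any resource type is simply not mapped in this sketch.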

You can monitor how new data is being processed and saved in aggregated form to the selected storage with the command:

    controller:~# gnocchi status
    +-----------------------------------------------------+-------+
    | Field                                               | Value |
    +-----------------------------------------------------+-------+
    | storage/number of metric having measures to process | 93    |
    | storage/total number of measures to process         | 93    |
    +-----------------------------------------------------+-------+

The output above shows that the Gnocchi-metricd processes currently have 93 measures to process, distributed over 93 metrics.

This command lets you see whether the Gnocchi-metricd component is keeping up with incoming measures.

The processing speed depends directly on the number of Gnocchi-metricd processes and on the performance of the selected storage backend.

Display aggregated information

Once the received measures have been processed and stored, Gnocchi resources and measures become available for queries.

Let's list resources of the instance_memory type, limit the output to three resources, and display only four columns: the resource identifier, its type, the name of the host running the virtual machine, and the identifier of its image:

    controller:~# gnocchi resource list --type instance_memory --limit 3 --column id --column type --column host --column image_ref
    +--------------------------------------+-----------------+-----------+--------------------------------------+
    | id                                   | type            | host      | image_ref                            |
    +--------------------------------------+-----------------+-----------+--------------------------------------+
    | 945ad3cc-2617-4b19-a681-5a1cb96d71e1 | instance_memory | compute00 | 3456e843-b7fe-42be-8c4c-0c7d1c2d09c7 |
    | e33c895f-e38a-4f8e-be07-8fe0d7c8275f | instance_memory | compute02 | 27bbfeb7-0706-4d11-bb59-f98d6b08dc1c |
    | 023fed66-3fdd-43b6-b02e-325da55b62cc | instance_memory | compute04 | f0f5e0aa-4615-462e-8340-b8258aae90e2 |
    +--------------------------------------+-----------------+-----------+--------------------------------------+

Let's display the metrics and type columns for the resource 945ad3cc-2617-4b19-a681-5a1cb96d71e1:

    controller:~# gnocchi resource show --column metrics --column type 945ad3cc-2617-4b19-a681-5a1cb96d71e1
    +---------+-------------------------------------------------------+
    | Field   | Value                                                 |
    +---------+-------------------------------------------------------+
    | metrics | memory.resident: f56b76ad-5ce8-49fe-975f-6e5da412df31 |
    |         | memory.usage: aa757f46-52b9-40de-898c-524dfe29b7bc    |
    | type    | sel_instance                                          |
    +---------+-------------------------------------------------------+

Let's look at the mean values of the metric aa757f46-52b9-40de-898c-524dfe29b7bc between 12:00 and 18:00 on August 25, 2017, with a granularity of one hour:

    controller:~# gnocchi measures show --aggregation mean --granularity 3600 --start 2017-08-25T12:00 --stop 2017-08-25T18:00 aa757f46-52b9-40de-898c-524dfe29b7bc
    +---------------------------+-------------+---------------+
    | timestamp                 | granularity | value         |
    +---------------------------+-------------+---------------+
    | 2017-08-25T12:00:00+00:00 |      3600.0 |       2231.75 |
    | 2017-08-25T13:00:00+00:00 |      3600.0 | 2238.66666667 |
    | 2017-08-25T14:00:00+00:00 |      3600.0 | 2248.58333333 |
    | 2017-08-25T15:00:00+00:00 |      3600.0 | 2259.08333333 |
    | 2017-08-25T16:00:00+00:00 |      3600.0 | 2240.41666667 |
    | 2017-08-25T17:00:00+00:00 |      3600.0 | 2249.66666667 |
    +---------------------------+-------------+---------------+

Aggregated information can be obtained not only with the console utility, but also through the REST API. Consider a few examples:

Getting the values of the memory.usage metric for a resource of type instance_memory, with a granularity of 24 hours, starting from August 28, 2017:

    controller:~# curl -XGET \
    > -H 'Content-Type: application/json' \
    > -H 'X-Auth-Token: KEYSTONE_TOKEN' \
    > 'controller:8041/v1/resource/instance_memory/684f5b56-2c06-485a-ae1a-66ab5c4175fb/metric/memory.usage/measures?start=2017-08-28&granularity=86400' \
    > | python -m json.tool
    [
        [
            "2017-08-28T00:00:00+00:00",
            86400.0,
            2645.9444444444443
        ],
        [
            "2017-08-29T00:00:00+00:00",
            86400.0,
            2671.625
        ],
        [
            "2017-08-30T00:00:00+00:00",
            86400.0,
            2681.5438596491226
        ]
    ]

Searching for a resource of the instance_memory type created after August 29, 2017, with the value “compute19” in the host field:

    controller:~# curl -XPOST \
    > -H 'Content-Type: application/json' \
    > -H 'X-Auth-Token: KEYSTONE_TOKEN' \
    > 'controller:8041/v1/search/resource/instance_memory' \
    > -d '{
    >   "and": [
    >     {
    >       "=": {
    >         "host": "compute19"
    >       }
    >     },
    >     {
    >       ">": {
    >         "started_at": "2017-08-29"
    >       }
    >     }
    >   ]
    > }' \
    > | python -m json.tool
    [
        {
            "created_by_project_id": "99288fd3d178459f808c1e8bc2cf9e49",
            "created_by_user_id": "7dd0582d38aa471bbe8995f63a1293a9",
            "creator": "7dd0582d38aa471bbe8995f63a1293a9:99288fd3d178459f808c1e8bc2cf9e49",
            "ended_at": null,
            "host": "compute19",
            "id": "9052e237-ad17-47be-8aa5-10aacbf6717f",
            "image_ref": "e12ef13d-783c-4030-9251-ad2c8e270453",
            "metrics": {
                "memory.resident": "365db8ce-f4f7-4e59-ac9f-03dcdfe81195",
                "memory.usage": "084157b7-09d3-45e7-a869-fad62062025a"
            },
            "original_resource_id": "9052e237-ad17-47be-8aa5-10aacbf6717f",
            "project_id": "99288fd3d178459f808c1e8bc2cf9e49",
            "revision_end": null,
            "revision_start": "2017-08-30T20:35:19.214598+00:00",
            "started_at": "2017-08-30T20:35:19.214577+00:00",
            "type": "instance_memory",
            "user_id": "7dd0582d38aa471bbe8995f63a1293a9"
        }
    ]

Getting the maximum values of the memory.resident metric with a granularity of one hour for virtual machines running on the compute19 host, grouped by project identifier:

    controller:~# curl -XPOST \
    > -H 'Content-Type: application/json' \
    > -H 'X-Auth-Token: KEYSTONE_TOKEN' \
    > 'controller:8041/v1/aggregation/resource/instance_memory/metric/memory.resident?aggregation=max&granularity=3600&groupby=project_id' \
    > -d '{
    >   "=": {
    >     "host": "compute19"
    >   }
    > }' \
    > | python -m json.tool
    [
        {
            "group": {
                "project_id": "eef577b17cfe4432b7769d0078dbb625"
            },
            "measures": [
                [
                    "2017-08-29T09:00:00+00:00",
                    3600,
                    735
                ],
                [
                    "2017-08-30T11:00:00+00:00",
                    3600,
                    949
                ]
            ]
        },
        {
            "group": {
                "project_id": "b998dbbb9b02474c9dc49ffc289eae8c"
            },
            "measures": [
                [
                    "2017-08-29T09:00:00+00:00",
                    3600,
                    612
                ],
                [
                    "2017-08-30T11:00:00+00:00",
                    3600,
                    642
                ]
            ]
        }
    ]

Other examples of using the REST API are available in the official documentation.
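The same three requests can also be prepared from Python using only the standard library. The sketch below builds the requests without sending them. The helper name build_request is hypothetical, and the http:// scheme is an assumption (curl falls back to HTTP when no scheme is given). The paths, query parameters, JSON bodies and the KEYSTONE_TOKEN placeholder repeat the curl examples.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

GNOCCHI_URL = "http://controller:8041"  # assumption: plain HTTP on the host used in the curl examples
TOKEN = "KEYSTONE_TOKEN"  # placeholder, replace with a real Keystone token


def build_request(path, params=None, body=None):
    """Build a GET request, or a POST request when a JSON body is given."""
    url = GNOCCHI_URL + path
    if params:
        url += "?" + urlencode(params)
    data = None if body is None else json.dumps(body).encode("utf-8")
    headers = {"Content-Type": "application/json", "X-Auth-Token": TOKEN}
    method = "GET" if body is None else "POST"
    return Request(url, data=data, headers=headers, method=method)


# Measures of memory.usage with a granularity of 24 hours.
measures = build_request(
    "/v1/resource/instance_memory/684f5b56-2c06-485a-ae1a-66ab5c4175fb"
    "/metric/memory.usage/measures",
    params={"start": "2017-08-28", "granularity": 86400},
)

# Resources on compute19 started after August 29, 2017.
search = build_request(
    "/v1/search/resource/instance_memory",
    body={"and": [{"=": {"host": "compute19"}}, {">": {"started_at": "2017-08-29"}}]},
)

# Hourly maximum of memory.resident on compute19, grouped by project.
aggregation = build_request(
    "/v1/aggregation/resource/instance_memory/metric/memory.resident",
    params={"aggregation": "max", "granularity": 3600, "groupby": "project_id"},
    body={"=": {"host": "compute19"}},
)

for request in (measures, search, aggregation):
    print(request.get_method(), request.full_url)
```

Each prepared request can then be sent with urllib.request.urlopen(request), and the response body decoded with json.load.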

Statistics in the VPC service

We currently use the described components in the VPC service.

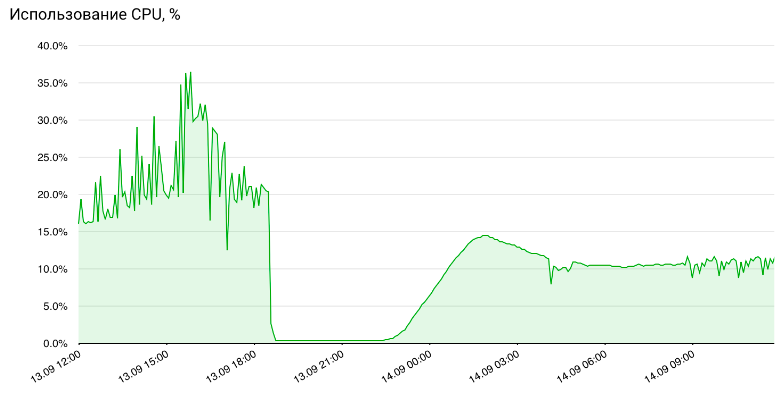

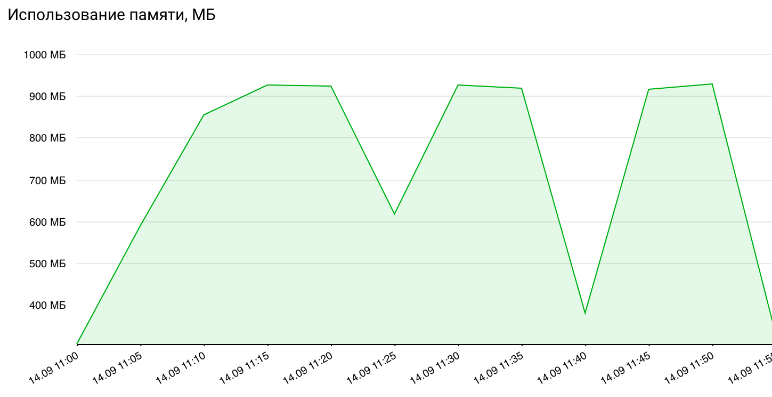

The following metrics for virtual machines are available to users:

- processor utilization, in % (cpu_util);

- memory usage, in MB (memory.usage).

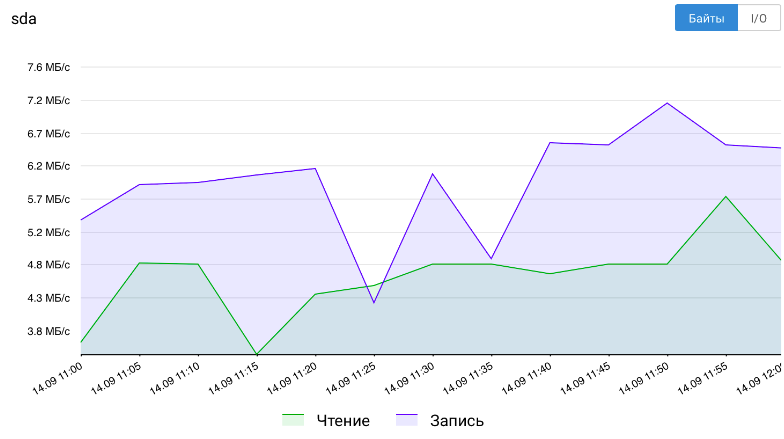

Disk metrics:

- the number of bytes read and written per second (disk.device.read.bytes, disk.device.write.bytes);

- the number of read and write requests per second (disk.device.read.requests, disk.device.write.requests).

And metrics for network interfaces:

- incoming and outgoing traffic, in bits per second (network.incoming.bits, network.outgoing.bits);

- the number of incoming and outgoing packets per second (network.incoming.packets, network.outgoing.packets).

All of this data is available through the REST API, through the Gnocchi console client, or as embedded graphs in the server control panel.

CPU usage graphs

RAM Charts

Disk load charts

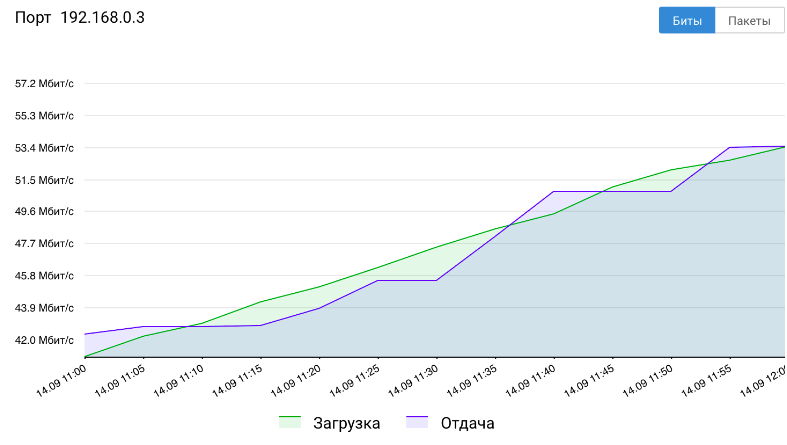

Network Interface Load Charts

Conclusion

In this article, we looked at two components for collecting, storing, and displaying statistics about OpenStack objects.

If you have any questions, feel free to ask them in the comments.

If you would be interested in trying Gnocchi as a service to which you could write your own data, let us know.

We would also be glad to hear about your own experience with Ceilometer or Gnocchi.

Source: https://habr.com/ru/post/340054/
