CRI-O: a Docker alternative for running containers in Kubernetes

Many DevOps engineers and system administrators, especially those who have worked with Kubernetes, have already heard of CRI-O, a project billed as “a lightweight container runtime for Kubernetes”. Even so, ideas about its purpose, capabilities, and status are often blurry, because the project is young, practical experience with it is scarce, and container standards keep changing. This article is a primer on CRI-O: how the project came about, what it offers, and where it stands today.

History

Following the growing popularity of Linux containers, in mid-2015 the non-profit Linux Foundation, with the backing of CoreOS, Docker, Red Hat, and several other companies, announced the Open Container Project, now known as the Open Container Initiative (OCI). Its goal is to create open industry standards for container formats and runtimes.

The project was expected to merge the foundations of competing container products, such as Docker's runc and CoreOS's appc, into common standards. The tangible result of OCI's work was the release last summer of version 1.0 of two standards:

- the container runtime standard (Runtime Specification, runtime-spec), which defines how a “filesystem bundle” holding the container's contents is run (the reference implementation is the same runc);

- the container image format standard (Image Specification, image-spec), which defines the format from which a container image is unpacked into a filesystem bundle (for subsequent launch).
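
To make the idea of a filesystem bundle more concrete: a bundle is a directory holding a root filesystem plus a `config.json` that tells the runtime what to run. The sketch below builds a small `config.json`-style document in Python. The field names follow the OCI Runtime Specification 1.0, but the helper `minimal_runtime_config`, the command, the hostname, and the namespace list are illustrative assumptions, and a real runtime may require more fields (mounts, for example).

```python
import json


def minimal_runtime_config(args, rootfs="rootfs", hostname="demo"):
    """Build a small runtime-spec style config for a filesystem bundle.

    Field names follow the OCI Runtime Specification 1.0; the values are
    illustrative assumptions, not taken from the article.
    """
    return {
        "ociVersion": "1.0.0",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),
            "env": ["PATH=/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Path of the unpacked root filesystem, relative to the bundle directory.
        "root": {"path": rootfs, "readonly": True},
        "hostname": hostname,
        "linux": {
            # Each namespace listed here is created fresh for the container.
            "namespaces": [
                {"type": kind}
                for kind in ("pid", "network", "ipc", "uts", "mount")
            ],
        },
    }


config = minimal_runtime_config(["/bin/sh", "-c", "echo hello"])
config_json = json.dumps(config, indent=2)
print(config_json)
```

Written to `config.json` next to a `rootfs` directory, a document of this shape is what an OCI runtime such as runc reads when it starts a container from a bundle.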

At the same time, and independently of the Open Container Initiative but “with a focus on promoting standards for containers through OCI”, Red Hat began work on OCID, the Open Container Initiative Daemon, placing it in the Kubernetes incubator and announcing it in September 2016.

As part of OCID, Red Hat engineers planned to develop innovations in the container space: the runtime, image distribution, storage, image signing, and so on. At the same time, the project was positioned as “not competing with Docker”, because “in reality it uses the same OCI runc runtime that is used in the Docker Engine, the same image format, and allows you to use docker build and related tools.”

Even then, OCID was described as an “implementation of the standard interface for container runtimes in Kubernetes” that followed the UNIX philosophy of one utility performing one task. Accordingly, OCID's functionality was split from the start into the following components:

- OCI Container Runtime Environment (runc);

- OCI Runtime Tools (a toolkit developed within OCI for working with runtime-spec);

- containers/image (a set of Go libraries for working with container images and registries; the same features are available through skopeo, a command-line utility created at Red Hat for Project Atomic);

- containers/storage (a Go library that provides methods for storing file system layers, container images, and containers themselves; it includes a wrapper with a command-line interface);

- CNI (Container Network Interface) (a specification and libraries for managing network interfaces in containers; we have written about CNI in more detail in a separate article).

However, the name “OCID”, which refers directly to OCI, was not approved by the Open Container Initiative itself, since the project was not on the list of official OCI projects. Soon after the first announcement, at OCI's request, the project was renamed CRI-O (“CRI” stands for “Container Runtime Interface”).

In essence, OCI was a necessary forerunner of CRI-O, and Red Hat rode the standardization trend, promoting it on the one hand and pursuing its own goals (a low-level stack for Project Atomic and OpenShift) on the other. The Linux and open source community arguably only gained from this, getting more competition and diversity among free products. OCI also stressed that its objection concerned only the project's name, not its purpose:

“The Open Container Initiative (OCI) focuses on creating a formal specification for the container runtime and the container image format, since standardization in these areas will also contribute to other innovations. Any other projects are currently beyond the scope of OCI, but we encourage our participants to continue working on the specification for the runtime and the image format, since this is useful for the entire industry. Innovation is happening quickly at the implementation level, and we intend to integrate all relevant improvements into the specification. Our goal is to support the efficient growth and health of container technology and the entire Open Source community.”

To this day, Red Hat continues to promote CRI-O among IT professionals, as exemplified by the recent (June 2017) Project Atomic blog post “6 reasons why CRI-O is the best runtime for Kubernetes”. The authors give the following reasons:

- Open governance, with development taking place within the parent Kubernetes community.

- “A truly open project”, as evidenced by an appreciative comment from an Intel engineer, as well as by the activity of contributors and the upstreaming of some improvements into the Kubernetes code base itself.

- A minimal code base (easy to audit and therefore more reliable), with the remaining components pulled in from other projects.

- Stability, achieved through an exclusive focus on Kubernetes: upstream K8s tests (node-e2e and e2e) are run before patches are accepted (a CRI-O change that breaks something in K8s is blocked), in addition to the project's own integration tests.

- Security, because it supports all the security features Kubernetes needs: SELinux, AppArmor, seccomp, and capabilities.

- Use of standardized components, since the project follows the OCI specifications.

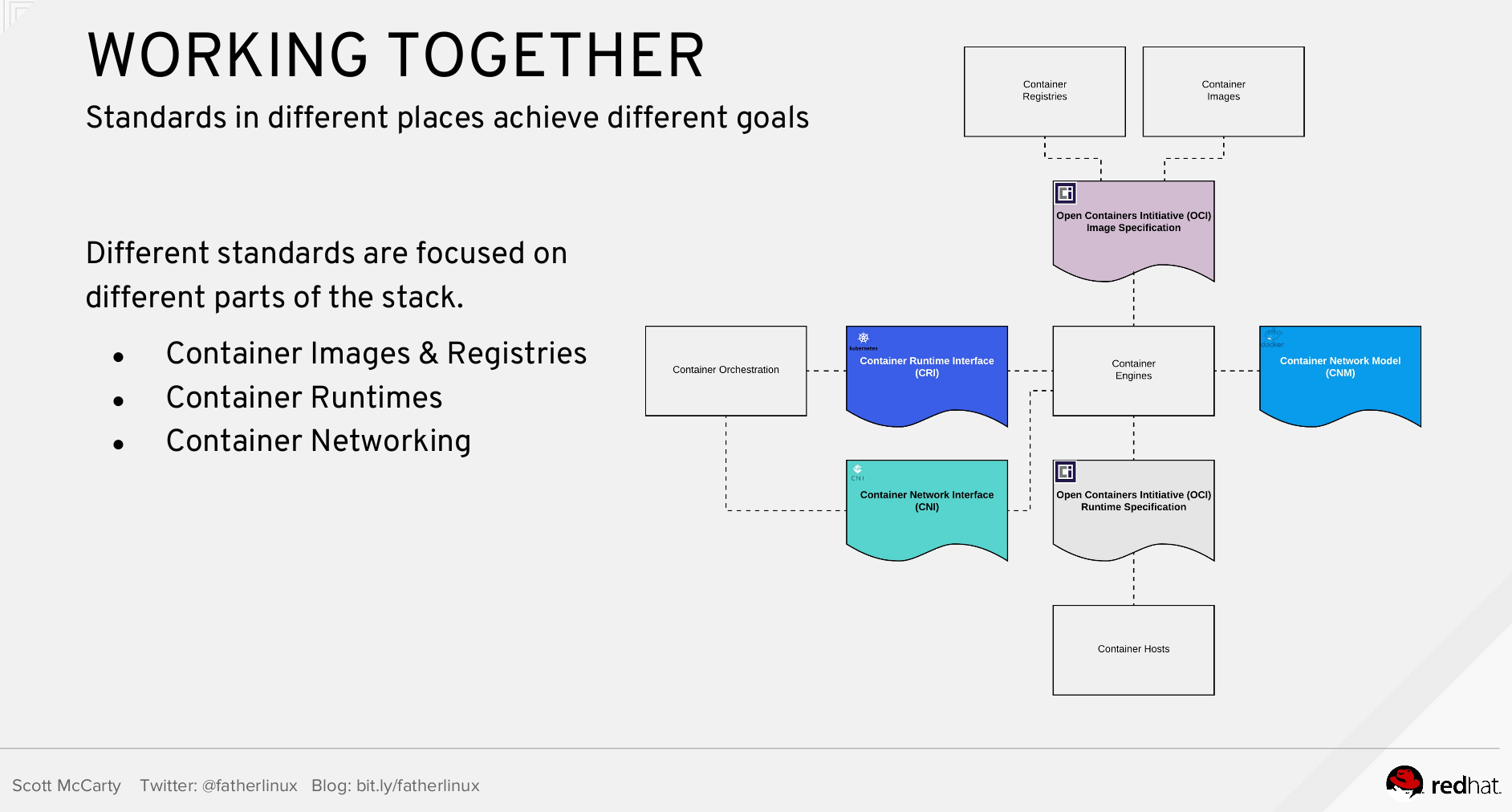

Another example of technology evangelism in this direction is the very recent (September 2017) talk “Understanding standards for containers” (video, slides) by Scott McCarty of Red Hat.

But back to the technical side of CRI-O. How does the project work?

CRI-O architecture and features

Today, thanks to the efforts of Red Hat, Intel, SUSE, Hyper, and IBM (all listed among the main companies developing CRI-O), we have an alternative to Docker for Kubernetes that can launch pods using any container runtime compatible with the OCI specification. Officially supported are the well-known runc and Intel's Clear Containers (part of the broader Clear Linux project; version 3.0, rewritten in Go, was released last month).

The CRI-O components have changed little since the OCID announcement: in addition to an OCI-compatible runtime, they are the same OCI Runtime Tools, containers/storage, containers/image, and CNI, plus a new small utility, conmon, which monitors containers for out-of-memory (OOM) errors and handles logs from the container process.

The overall CRI-O architecture and its place in Kubernetes is as follows:

Kubernetes works together with CRI-O as follows:

- Kubernetes asks the kubelet to start a pod, and the kubelet forwards the request to the CRI-O daemon through the Kubernetes CRI.

- CRI-O pulls the image from the registry using the containers/image library.

- CRI-O unpacks the downloaded image into the container's root file system (stored on a copy-on-write file system) using the containers/storage library.

- Once the rootfs for the container has been created, CRI-O uses the generate utility from OCI Runtime Tools to prepare a JSON file that conforms to the OCI runtime specification and describes how to start the container.

- CRI-O launches a runtime (for example, runc) with the generated specification.

- The container is monitored by the conmon utility (a separate process in the system), which also handles logging for the container and records the exit code of the container process.

- The network for the pod is configured using any of the CNI plugins.
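
The sequence above can be sketched as a toy model in Python. This is not CRI-O code: every function name, the storage path, and the image name are hypothetical stand-ins, and each function only records what the corresponding real component would do. The order of the calls follows the order of the list.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    image: str
    command: list
    log: list = field(default_factory=list)


def pull_image(pod):
    # Stand-in for the containers/image library.
    pod.log.append(f"containers/image: pull {pod.image}")
    return f"layers-of-{pod.image}"


def unpack_rootfs(pod, layers):
    # Stand-in for the containers/storage library (path is hypothetical).
    rootfs = f"/hypothetical/storage/{pod.name}/rootfs"
    pod.log.append(f"containers/storage: unpack {layers} into {rootfs}")
    return rootfs


def generate_spec(pod, rootfs):
    # Stand-in for the generate utility from OCI Runtime Tools.
    pod.log.append("runtime-tools: generate config.json")
    return {"root": {"path": rootfs}, "process": {"args": pod.command}}


def run_runtime(pod, spec, runtime="runc"):
    # Stand-in for launching an OCI runtime with the generated spec.
    pod.log.append(f"{runtime}: start {' '.join(spec['process']['args'])}")
    return 0  # pretend the container process exited cleanly


def monitor(pod, exit_code):
    # Stand-in for conmon: logging and exit code bookkeeping.
    pod.log.append(f"conmon: recorded exit code {exit_code}")


def setup_network(pod):
    # Stand-in for a CNI plugin.
    pod.log.append(f"cni: configure network for pod {pod.name}")


def start_pod(pod):
    """Walk through the steps in the order the list describes them."""
    layers = pull_image(pod)
    rootfs = unpack_rootfs(pod, layers)
    spec = generate_spec(pod, rootfs)
    exit_code = run_runtime(pod, spec)
    monitor(pod, exit_code)
    setup_network(pod)
    return pod.log


demo = Pod("web", "example.com/demo/web:latest", ["/bin/web"])
for step in start_pod(demo):
    print(step)
```

The point of the model is the separation of duties: each step belongs to a separate library or utility, so any one of them can be replaced without touching the others.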

Status

The release of Kubernetes 1.8 at the end of September raised CRI-O's support status to stable, since all e2e tests passed.

To switch a Kubernetes installation from Docker to CRI-O, you need to:

- Install and configure CRI-O (/etc/crio/crio.conf, /etc/containers/policy.json, etc.; CRI-O itself runs as a system service, preferably under systemd) on each node being migrated.

- Adjust the dependencies (requirements) of the kubelet service in systemd (/etc/systemd/system/kubelet.service) and configure its parameters (/etc/kubernetes/kubelet.env).

- Prepare a CNI plugin.

- Start the crio service and restart the kubelet.

More detailed instructions can be found here.
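
Before restarting services on a node, it can help to confirm that the files named in the steps above are actually in place. The following is a minimal pre-flight sketch in Python. The file paths are the ones listed in the steps; the helper `missing_files` and its `root` parameter (useful for checking a mounted or staged file system) are assumptions made for illustration, and the check says nothing about whether the files' contents are correct.

```python
import os

# Paths named in the migration steps.
REQUIRED_FILES = [
    "/etc/crio/crio.conf",
    "/etc/containers/policy.json",
    "/etc/systemd/system/kubelet.service",
    "/etc/kubernetes/kubelet.env",
]


def missing_files(paths=REQUIRED_FILES, root="/"):
    """Return the subset of paths that are not regular files under root."""
    missing = []
    for path in paths:
        candidate = os.path.join(root, path.lstrip("/"))
        if not os.path.isfile(candidate):
            missing.append(path)
    return missing


for path in missing_files():
    print(f"missing: {path}")
```

An empty result only means the files exist; the crio service and the kubelet still have to be started and checked as described in the last step.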

cri-containerd

Finally, it is worth mentioning a parallel effort from Docker called cri-containerd. It is another open source implementation of the Kubernetes CRI mentioned above, aimed at those who have chosen containerd as their container runtime (together with the same runc and CNI for the corresponding tasks). Currently in alpha, cri-containerd supports Kubernetes 1.7+ and has passed all CRI validation tests and all node e2e tests.

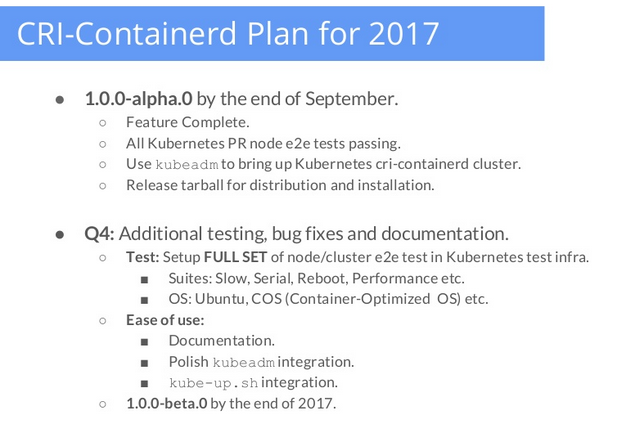

In a recent talk, Liu Lantao (Google) and Abhinandan Prativadi (Docker) announced that a beta version of this solution is expected by the end of the year (talk slides).

PS

To close this article, I cannot resist a wonderful quote from Kelsey Hightower, well known in the DevOps community, not least for “Kubernetes The Hard Way”:

cri-o, containerd, rkt or docker? I look forward to the day when the answer will be: "All the same."

Read also in our blog:

- “Why do you need containerd and why it was separated from Docker”;

- “What is the essence of the Moby project and why did the main Docker repository suddenly become moby/moby?”;

- “Container Networking Interface (CNI): a network interface and standard for Linux containers”;

- “Our experience with Kubernetes in small projects” (video of the talk, which includes an introduction to the Kubernetes architecture).

Source: https://habr.com/ru/post/340010/