In search of intelligence: can a “universal” chatbot be built with neural networks?

Interactive systems, also known as chatbots, have multiplied beyond all measure. But their level of “intelligence” is often depressing. Popular chatbots work either with templates or with the “intent + entities” model. They handle simple tasks very well (setting an alarm or a reminder, finding the answer to a frequently asked question), but the narrowness and limitations of their “thinking” are easily exposed by even superficial questioning. Can neural networks help create something better, perhaps something approaching genuine intelligence?

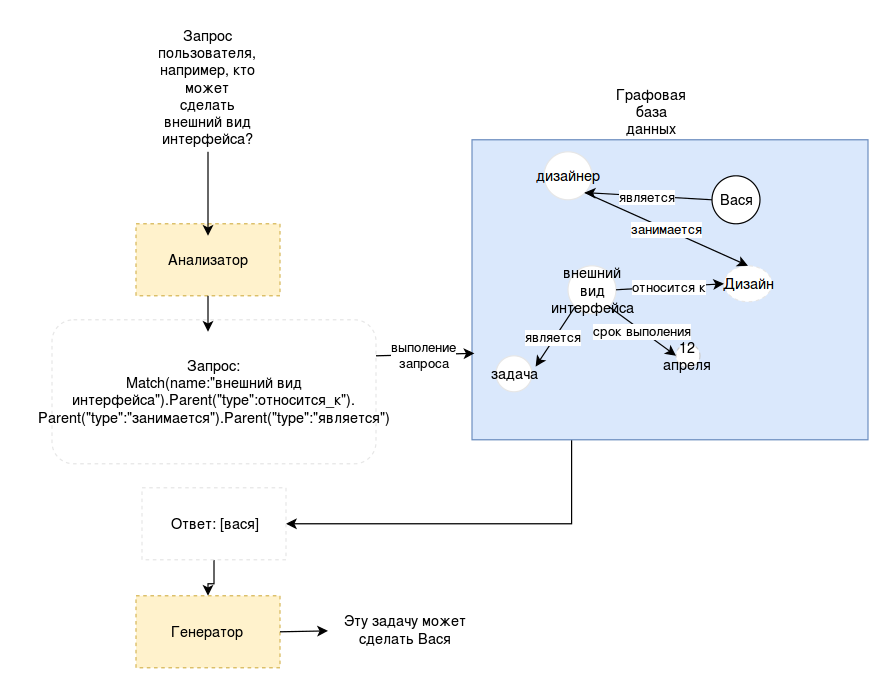

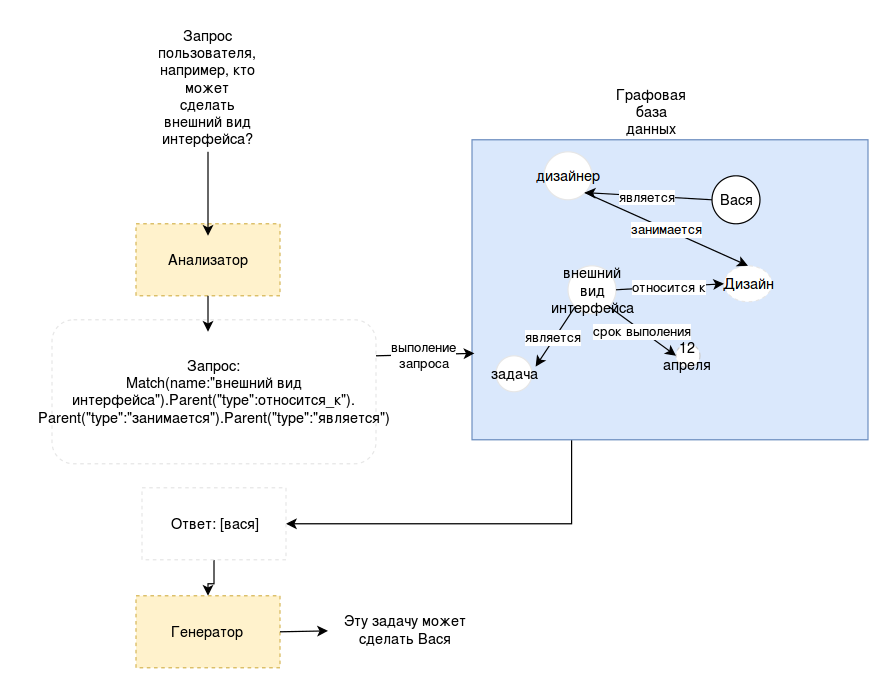

I remember very well how I felt reading what is now a classic paper [1], in which Google researchers trained a seq2seq neural network to generate responses from a huge corpus of movie subtitles. The possibilities seemed limitless:

H: My name is John, what is my name?

N: John

H: How many legs does a dog have?

N: Four

H: What color is the sky?

N: Blue

H: Does the cat have a tail?

N: Yes

H: Does the cat have wings?

N: No

Dialogues from [1], in my own rendering. H: human, N: neural network.

Isn't it surprising that the network, with no rules and trained on very noisy data, can correctly answer such questions (which do not appear in the data in these exact formulations)? But the approach soon revealed a number of problems:

- Inability to update long-term memory. For example, “My name is John” is forgotten as soon as it falls out of the context. It follows that the model cannot memorize new facts and then operate on them.

- Inability to obtain information from external sources.

- Inability to transfer knowledge to other topics: training a new model takes a lot of data.

- Inability to take any actions.

- A tendency to give short, frequent answers (for example, answering any question with “yes”).
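The first of these problems can be illustrated with a deliberately crude toy, which is not the seq2seq model from [1]: a responder that conditions only on its last few utterances. Once “My name is John” slides out of that window the fact is simply gone, because nothing is ever written to a long-term store. The class name, the window size and the single hard-coded question are illustrative assumptions.

```python
import re
from collections import deque


class WindowedBot:
    """Toy responder that only 'sees' the last `window` utterances, like a fixed-size context."""

    def __init__(self, window: int = 3) -> None:
        self.context = deque(maxlen=window)  # older utterances are silently dropped

    def reply(self, utterance: str) -> str:
        self.context.append(utterance)
        if utterance.lower().startswith("what is my name"):
            # Look for a self-introduction, but only inside the visible context.
            for past in reversed(self.context):
                m = re.match(r"my name is (\w+)", past, re.IGNORECASE)
                if m:
                    return m.group(1)
            return "I don't know"
        return "OK"


bot = WindowedBot(window=3)
bot.reply("My name is John")
print(bot.reply("What is my name?"))  # John: the fact is still inside the window
for filler in ["How many legs does a dog have?", "What color is the sky?", "Does the cat have a tail?"]:
    bot.reply(filler)
print(bot.reply("What is my name?"))  # I don't know: the fact has fallen out of the window
```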

As a result, most practical systems are still built on prepared answers and on ranking the candidates (using rules and machine learning), and the idea of synthesizing answers from scratch has largely faded into the background. Even Google Smart Reply now uses ranking [2], albeit with neural networks. Ranking can be effective without any understanding of meaning, relying only on syntactic transformations and simple rules [7]. In our experiments with ranking, even when it is performed by a neural network, surface-level analysis dominates, and only very large networks show some rudiments of common-sense knowledge [8].
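A minimal sketch of ranking by surface features, assuming plain word overlap (Jaccard similarity) between the user's message and stored example questions. The candidate pairs are made up, and this is not how Smart Reply [2] scores responses (it uses learned neural encoders); the point is only that a ranker can pick the “right” answer with no grasp of meaning, as the scrambled message in the usage shows.

```python
import re

# Hypothetical prepared question/answer pairs the bot can choose from.
CANDIDATES = [
    ("how many legs does a dog have", "Four"),
    ("what color is the sky", "Blue"),
    ("set an alarm for seven", "Alarm set for 7:00"),
]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank(message: str) -> list[tuple[float, str]]:
    """Score every prepared answer by word overlap with its example question, best first."""
    msg = tokens(message)
    scored = [(jaccard(msg, tokens(question)), answer) for question, answer in CANDIDATES]
    return sorted(scored, reverse=True)


print(rank("What color is the sky today?")[0][1])  # Blue
print(rank("sky the is color what")[0])            # (1.0, 'Blue'): word salad gets a perfect score
```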

All popular chatbots, virtual assistants, and similar programs are also built pragmatically. Siri and Cortana seem highly intelligent only because each domain they understand is carefully tuned by hand [6], which makes high-quality answers possible. On the other hand, according to some studies, despite the enormous effort companies have invested in these assistants, only 13% of phone users actually use them (46% have tried them at some point, but 46% have stopped using them [11]). Besides, this technology is well known, mass-produced, and people have grown bored with it. I wanted something with more potential for intelligence, yet not completely divorced from practice.

Many years ago, while reading science fiction novels, I took a liking to the idea of the “Planning Machine”:

Excerpt from the novel:

“Information,” Steve tapped out on the keys. “Stephen Ryland, op. ABC–38440, OBOporto, op. HUZ–99942, arrived at...” A quick glance at the code plate attached to the teletype housing. “...station 3, radius 4–261, Reykjavik, Iceland. Request. What instructions follow?”

A moment later the Planning Machine answered with just one letter: “P”. This meant that the machine had accepted the message, understood it, and entered it into its memory bank. Orders would follow.

The teletype bell jingled. Ryland read the message:

“Action. Proceed to train 667, track 6, compartment 93.”

The Reefs of Space. Frederik Pohl and Jack Williamson, 1964

Yes, in novels such a machine is often a villain. But how convenient it would be to have a similar planning machine in a company, telling everyone what to do and resolving various issues on its own.

Of course, each such function can be programmed separately; this is how developers add intelligent features to existing CRM/BPM systems. But doing so deprives the system of flexibility and forces a staff of programmers to keep writing and rewriting these functions. Is there another way?

The main way to overcome the shortcomings listed above is to give the neural network an external memory. Such memory comes in two main kinds: differentiable (for example, in [3]) and non-differentiable. In differentiable memory, the mechanisms for writing to and reading from memory are themselves neural networks, trained jointly with the rest of the model. For dialogue modeling, the variant mostly used trains only the reading mechanism, while the writing mechanism contains hard-coded elements (for example, n memory slots filled like a stack) [4]. Such a mechanism is hard to scale, because finding an element in memory requires running neural network computations over every element. In addition, the contents of such memory cannot be interpreted or edited by hand and may be imprecise, which significantly complicates using the system in practice.
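A minimal sketch of the variant described above, assuming dot-product attention as the read mechanism and a fixed number of slots written like a stack (newest on top, oldest evicted). In a real memory network [4] the embeddings and read parameters would be learned; here the vectors are hand-written toy values. Two things to notice: every read scores the query against every slot, so its cost grows linearly with memory size, and the result is a blend of slots rather than an exact record.

```python
import math


class SlotMemory:
    """n fixed slots written like a stack; read by soft attention over all slots."""

    def __init__(self, n_slots: int) -> None:
        self.n_slots = n_slots
        self.slots: list[list[float]] = []

    def write(self, vec: list[float]) -> None:
        # Hard-coded (not learned) write rule: push on top, evict the oldest when full.
        self.slots.insert(0, list(vec))
        del self.slots[self.n_slots:]

    def read(self, query: list[float]) -> list[float]:
        if not self.slots:
            raise ValueError("memory is empty")
        # Score the query against EVERY slot: the cost grows linearly with memory size.
        scores = [sum(q * m for q, m in zip(query, slot)) for slot in self.slots]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]  # numerically stable softmax
        total = sum(exps)
        weights = [e / total for e in exps]
        # The result is a weighted blend of slots, not an exact, inspectable record.
        dim = len(self.slots[0])
        return [sum(w * slot[i] for w, slot in zip(weights, self.slots)) for i in range(dim)]


mem = SlotMemory(n_slots=2)
mem.write([1.0, 0.0])
mem.write([0.0, 1.0])
mem.write([1.0, 1.0])  # the first vector is evicted
print(mem.slots)  # [[1.0, 1.0], [0.0, 1.0]]
print(mem.read([5.0, 0.0]))  # mostly the top slot, slightly blended with the other one
```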

Ideally, we want the neural network to work with something resembling a traditional database. So we turned our attention to work on question-answering systems that interface with large knowledge bases. In particular, I found article [5] interesting: there, the neural network generates Lisp queries to a graph database from the questions users ask. That is, the network does not access the data directly; instead, it writes a small program whose execution yields the required answer.
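The idea of the network writing a program instead of touching the data can be sketched as follows. A hand-written string stands in for the network's output, and both the tiny Lisp-like language (a single hypothetical `hop` function that follows a relation from a set of entities) and the facts are illustrative assumptions, far simpler than the language used in [5].

```python
# Toy knowledge base of (subject, relation, object) triples.
TRIPLES = {
    ("Ryland", "works_at", "Station3"),
    ("Station3", "located_in", "Reykjavik"),
    ("Reykjavik", "capital_of", "Iceland"),
}


def parse(src: str):
    """Parse an s-expression such as '(hop Ryland works_at)' into nested lists."""
    tokens = src.replace("(", " ( ").replace(")", " ) ").split()

    def read(pos: int):
        if tokens[pos] == "(":
            expr, pos = [], pos + 1
            while tokens[pos] != ")":
                sub, pos = read(pos)
                expr.append(sub)
            return expr, pos + 1
        return tokens[pos], pos + 1

    expr, end = read(0)
    if end != len(tokens):
        raise SyntaxError("trailing tokens after expression")
    return expr


def evaluate(expr) -> set[str]:
    if isinstance(expr, str):  # a bare symbol denotes a single entity
        return {expr}
    op, arg, relation = expr
    if op != "hop":
        raise ValueError(f"unknown function {op!r}")
    subjects = evaluate(arg)
    # Follow `relation` from every entity in the current set.
    return {o for s, r, o in TRIPLES if s in subjects and r == relation}


# Pretend the network produced this program for "In which city does Ryland work?"
program = "(hop (hop Ryland works_at) located_in)"
print(evaluate(parse(program)))  # {'Reykjavik'}
```

Only the executor touches the data; the generator's job is reduced to emitting a short, checkable program.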

Representing knowledge as a graph is, in general, a fairly popular choice of external memory for neural networks: on the one hand, a graph makes it easier to store diverse knowledge about the world; on the other, the scheme resembles the way information is stored in the human brain.

Applied to our task, this gives the following scheme:

Of course, our problem is more complex. First, for the system to work, the neural network must be able not only to retrieve information but also to record new information. The same information can obviously be recorded in completely different ways, and if we want the system to be flexible, we cannot fix all kinds of relationships in advance. This means the program has to choose the right way to record information and then be able to retrieve what it has written.
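The difficulty can be shown with a toy triple store (the predicates and node names are hypothetical). The same fact, “three pillows arrived at the warehouse”, is stored in two equally reasonable shapes, and a read query written for one shape silently finds nothing in the other. Whatever generates the queries therefore has to stay consistent with how it wrote the data.

```python
from typing import Optional

Triple = tuple[str, str, str]

# Encoding A: an item node attached to the warehouse, with name and quantity attributes.
store_a: set[Triple] = {
    ("warehouse", "contains", "item1"),
    ("item1", "name", "pillows"),
    ("item1", "quantity", "3"),
}

# Encoding B: the same fact as a single product-specific edge.
store_b: set[Triple] = {("warehouse", "pillows_in_stock", "3")}


def quantity_via_items(store: set[Triple], product: str) -> Optional[str]:
    """A read query that only understands encoding A."""
    items = {o for s, p, o in store if s == "warehouse" and p == "contains"}
    named = {s for s, p, o in store if s in items and p == "name" and o == product}
    for s, p, o in store:
        if s in named and p == "quantity":
            return o
    return None


print(quantity_via_items(store_a, "pillows"))  # 3
print(quantity_via_items(store_b, "pillows"))  # None: same fact, different shape
```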

In addition, other questions arise in practice:

- Speed. Is this mechanism efficient enough? Programmers spend a lot of effort designing efficient database structures. What happens if the data is organized by a neural network?

- What happens after a few months or years of operation? There is a risk that incorrect, meaningless, and poorly organized information will accumulate, clutter the system, and make it unusable.

- Security. Not everyone may be told everything, and not everyone is allowed to change the data. Not all information reported to the system is reliable.

- Lack of a training set: where do we get data to train the neural network?

- Other pitfalls

Clearly, these questions cannot all be answered at once, so the sensible approach is to implement the system step by step.

The first stage requires a graph database and a language for querying it. There are quite a few graph database implementations and several popular query languages, such as SPARQL and Cypher. The structure of the language will clearly have a strong influence on how well a neural network can generate queries in it, so we decided to build our own layer on top of existing graph database engines, including a special language that we can optimize further. To simplify development, the language was initially implemented as Python classes and was in effect a subset of Python.

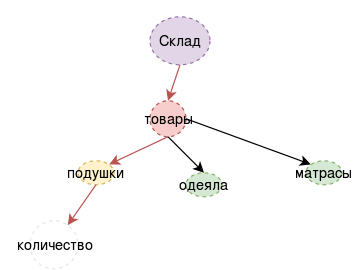

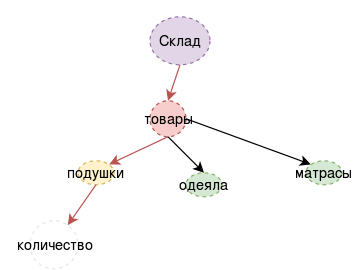

Example (very simple):

| Text | Query |
|---|---|
| Three pillows arrived at the warehouse. | `MatchOne({"type": "warehouse"}).Add({"name": "pillows", "quantity": "3"})` |
| Are there pillows in stock? | `MatchOne({"type": "warehouse"}).Child({"name": "pillows"}).NotEmpty()` |
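Since the language started out as Python classes, the two table rows can be reproduced with a small fluent API. This is a minimal sketch under assumptions: the semantics of `MatchOne`, `Add`, `Child` and `NotEmpty` are inferred from the examples only, and `Node`, `Graph`, `Selection` and `GRAPH` are hypothetical names. The real layer sat on top of an existing graph database engine, whereas this one keeps everything in memory. The capitalized method names follow the query language rather than Python naming conventions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    props: dict
    children: list = field(default_factory=list)


class Graph:
    def __init__(self) -> None:
        self.nodes: list[Node] = []

    def add_node(self, props: dict, parent: Optional[Node] = None) -> Node:
        node = Node(dict(props))
        self.nodes.append(node)
        if parent is not None:
            parent.children.append(node)  # a parent -> child edge
        return node


GRAPH = Graph()  # hypothetical default graph the query functions run against


def _matches(node: Node, pattern: dict) -> bool:
    return all(node.props.get(k) == v for k, v in pattern.items())


class Selection:
    """The set of nodes reached so far; each method is one navigation step."""

    def __init__(self, nodes: list) -> None:
        self.nodes = nodes

    def Add(self, props: dict) -> "Selection":
        # Create a new child under every selected node and move to the new nodes.
        return Selection([GRAPH.add_node(props, parent=n) for n in self.nodes])

    def Child(self, pattern: dict) -> "Selection":
        # Move to the children whose properties match every key/value in `pattern`.
        return Selection([c for n in self.nodes for c in n.children if _matches(c, pattern)])

    def NotEmpty(self) -> bool:
        return bool(self.nodes)


def MatchOne(pattern: dict) -> Selection:
    """Start at the single node matching `pattern`; zero or several matches is an error."""
    found = [n for n in GRAPH.nodes if _matches(n, pattern)]
    if len(found) != 1:
        raise LookupError(f"expected exactly one node matching {pattern}, found {len(found)}")
    return Selection(found)


# The two queries from the table, against a graph that starts with one warehouse node.
GRAPH.add_node({"type": "warehouse"})
print(MatchOne({"type": "warehouse"}).Child({"name": "pillows"}).NotEmpty())  # False
MatchOne({"type": "warehouse"}).Add({"name": "pillows", "quantity": "3"})
print(MatchOne({"type": "warehouse"}).Child({"name": "pillows"}).NotEmpty())  # True
```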

Note that the language describes how to navigate the graph. The same thing can be viewed differently: imagine that each node of the graph is a separate neuron. It receives some input: the activation vector of the input neurons and a vector encoding the history of previous activations. The neuron acts as a classifier, choosing which of its available connections the process will follow next. This is much closer to a process that might actually occur in the human brain [9,10], at least according to some existing theories of how memory works.
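The “each node is a neuron” view can be sketched as a walk in which every node scores its outgoing edges with a linear classifier over the input vector concatenated with a history vector, then follows the highest-scoring edge and stops when no edge scores above zero. The weights are hand-picked toy numbers (in the envisioned system they would be learned), and the history encoding, one “already visited” flag per node, is an assumption made only for illustration.

```python
NODES = ["root", "warehouse", "pillows", "projects"]

# Per node: outgoing edge -> weights over [input features..., history features...].
# Input features: [mentions "stock", mentions "pillows", mentions "deadline"].
# History features: one flag per entry of NODES, 1.0 if that node was already visited.
EDGES = {
    "root": {
        "warehouse": [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
        "projects": [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    },
    "warehouse": {"pillows": [0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]},
    # This edge fires on "stock", but the history term cancels it once "warehouse" was visited.
    "pillows": {"warehouse": [1.0, 0.0, 0.0, 0.0, -1.0, 0.0, 0.0]},
    "projects": {},
}


def walk(start: str, features: list[float], max_steps: int = 10) -> list[str]:
    path = [start]
    history = [0.0] * len(NODES)
    history[NODES.index(start)] = 1.0
    node = start
    for _ in range(max_steps):
        x = features + history
        # Each outgoing edge is one output of the node's linear classifier.
        scores = {nxt: sum(w * v for w, v in zip(ws, x)) for nxt, ws in EDGES[node].items()}
        if not scores or max(scores.values()) <= 0:
            break  # no connection is activated: the process stops here
        node = max(scores, key=scores.get)
        path.append(node)
        history[NODES.index(node)] = 1.0
    return path


print(walk("root", [1.0, 1.0, 0.0]))  # ['root', 'warehouse', 'pillows']
print(walk("root", [0.0, 0.0, 1.0]))  # ['root', 'projects']
```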

The query examples use a model domain: goods in stock. To begin with, we wrote a sample of dialogues by hand and assigned the corresponding queries to them. The goal was to make sure that the query language, and the scheme as a whole, covered a sufficient variety of cases. But it quickly became clear that a limited model world does not let us test all aspects of the approach. Indeed, many papers propose systems that work in model situations, but it is unclear how to carry them over into practice. Besides, writing queries by hand is not very productive.

So we decided to implement the entire scheme in a real system, temporarily replacing the neural network with a rule-based system. This approach is quite reasonable: it lets us debug the important components without immediately drowning in the complexity of the problem as a whole, while avoiding “toy” model problems.
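A rule-based stand-in for the network can be as simple as a list of regular-expression patterns, each paired with a template that emits text in the query language from the example table. The patterns, the number-word table and the `analyze` function are hypothetical; the actual rule system was surely richer. The sketch only produces query text; executing it is the query engine's job.

```python
import json
import re
from typing import Optional

NUMBER_WORDS = {"one": "1", "two": "2", "three": "3", "four": "4", "five": "5"}

# Each rule pairs a sentence pattern with a function that builds query text from the match.
RULES = [
    (
        re.compile(r"^(\w+) (\w+) arrived at the warehouse\.?$", re.IGNORECASE),
        lambda m: 'MatchOne({"type": "warehouse"}).Add(%s)'
        % json.dumps({"name": m.group(2).lower(),
                      "quantity": NUMBER_WORDS.get(m.group(1).lower(), m.group(1))}),
    ),
    (
        re.compile(r"^are there (\w+) in stock\??$", re.IGNORECASE),
        lambda m: 'MatchOne({"type": "warehouse"}).Child(%s).NotEmpty()'
        % json.dumps({"name": m.group(1).lower()}),
    ),
]


def analyze(text: str) -> Optional[str]:
    """Return query text for the first rule that matches, or None if no rule applies."""
    for pattern, build in RULES:
        m = pattern.match(text.strip())
        if m:
            return build(m)
    return None


print(analyze("Three pillows arrived at the warehouse."))
# MatchOne({"type": "warehouse"}).Add({"name": "pillows", "quantity": "3"})
print(analyze("Are there pillows in stock?"))
# MatchOne({"type": "warehouse"}).Child({"name": "pillows"}).NotEmpty()
print(analyze("Who is working on the report?"))  # None: no rule covers this phrasing
```

Rules take words literally: “Are there cushions in stock?” would yield a query for a node named `cushions` and miss the stored `pillows`, which is the kind of limitation that makes rules a temporary stand-in.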

As the application domain, we chose a project management system (with an eye to eventually getting a “planning machine”). What the rollout looked like from the user's point of view is described in the previous article (along the way we learned some interesting things about how useful a voice interface is and how to organize project work properly, but that is not the main topic here).

As for the core scheme, the experience we gained was very valuable. The main changes concerned how search is organized and the introduction of short-term memory.

As has long been known, the context of the conversation is crucial in dialogue. In our system, context turned out to be absolutely essential. A scheme with a single large data store leaves context tracking entirely to the “analyzer”. For the rules this task was overwhelming, and, as other studies show, it is also hard for neural networks. An explicit short-term external memory is now a common approach in neural conversational systems [4]; it also lets us show the contents of the working context to the user, which is very important. So an additional block appeared in the scheme, storing the graph nodes that were accessed (or created) most recently.
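The short-term memory block can be sketched as a bounded, most-recent-first record of graph node ids, updated whenever a node is read or created, so that an under-specified reference (“mark it as done”) can be resolved against whatever was touched last. The capacity, node ids and the `resolve` helper are hypothetical. The list is also trivial to display to the user, the property the text stresses.

```python
from collections import OrderedDict
from typing import Callable, Optional


class ShortTermMemory:
    """Recently used graph nodes, oldest first internally; evicts the oldest past capacity."""

    def __init__(self, capacity: int = 5) -> None:
        self.capacity = capacity
        self._items: "OrderedDict[str, dict]" = OrderedDict()

    def touch(self, node_id: str, props: dict) -> None:
        # Call on every read or creation of a node.
        self._items[node_id] = props
        self._items.move_to_end(node_id)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)

    def resolve(self, predicate: Callable[[dict], bool]) -> Optional[str]:
        # Return the most recently touched node that satisfies `predicate`.
        for node_id in reversed(self._items):
            if predicate(self._items[node_id]):
                return node_id
        return None

    def show(self) -> list[str]:
        # The working context as it could be shown to the user, newest first.
        return list(reversed(self._items))


stm = ShortTermMemory(capacity=3)
stm.touch("task:42", {"type": "task", "name": "Prepare the quarterly report"})
stm.touch("user:7", {"type": "user", "name": "Steve"})
print(stm.resolve(lambda p: p["type"] == "task"))  # task:42, e.g. for "mark it as done"
print(stm.show())  # ['user:7', 'task:42']
```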

Next, in practice most nodes had long names (task names, for example). Exact-match comparison stopped being effective, so each graph node was given a vector representation (to start with, we tried a bag of words and the sum of the word2vec vectors of the words in the name). On the one hand, this made it possible to add fuzzy-match search commands to the language; on the other, it brought us closer to the data structures used in differentiable external memory. This is a kind of key-value structure where the key is a vector and the value can be of any data type. These key vectors can be learned and can also take part in the addressing mechanism of a neural attention model.
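Fuzzy search over node names can be sketched as a key-value store whose keys are bag-of-words vectors of the names and whose values are arbitrary node data; a lookup ranks keys by cosine similarity instead of requiring an exact string match. This sketch uses raw word counts; the word2vec variant mentioned above would replace `bow` with a sum of pretrained embeddings (not included here). The task names and the 0.3 threshold are made-up examples.

```python
import math
import re
from collections import Counter


def bow(text: str) -> Counter:
    """Bag-of-words key: word -> count."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)  # missing words count as 0 in a Counter
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorKeyStore:
    """Key-value memory: vector keys, arbitrary values, addressed by similarity."""

    def __init__(self) -> None:
        self.entries: list[tuple[Counter, object]] = []

    def add(self, name: str, value: object) -> None:
        self.entries.append((bow(name), value))

    def search(self, query: str, threshold: float = 0.3) -> list[tuple[float, object]]:
        q = bow(query)
        hits = [(cosine(q, key), value) for key, value in self.entries]
        # Sort on the score only, so values never need to be comparable.
        return sorted((h for h in hits if h[0] >= threshold), key=lambda h: h[0], reverse=True)


store = VectorKeyStore()
store.add("Prepare the quarterly financial report for the board", {"id": "task:42"})
store.add("Fix the login bug in the mobile app", {"id": "task:43"})
print(store.search("quarterly report")[0][1])  # {'id': 'task:42'}
```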

Overall, the resulting set of components proved viable and suitable for use in other projects. So we then started replacing the rule-based analyzer (whose capabilities are very limited) with a neural network, especially since a certain number of dialogues had accumulated while the rule-based version was in use. However, this topic does not fit into a single article: the text is already quite long, and there is at least as much to say about the neural network. So the story will continue in one of the upcoming articles on our blog, if readers find the topic interesting.

References

1. Vinyals, Oriol, and Quoc Le. “A neural conversational model.” arXiv preprint arXiv:1506.05869 (2015).

2. Henderson, Matthew, et al. “Efficient Natural Language Response Suggestion for Smart Reply.” arXiv preprint arXiv:1705.00652 (2017).

3. Kumar, Ankit, et al. “Ask me anything: Dynamic memory networks for natural language processing.” International Conference on Machine Learning. 2016.

4. Chen, Yun-Nung, et al. “End-to-End Memory Networks for Multi-Turn Spoken Language Understanding.” INTERSPEECH. 2016.

5. Liang, Chen, et al. “Neural symbolic machines: Learning semantic parsers on Freebase with weak supervision.” arXiv preprint arXiv:1611.00020 (2016).

6. Sarikaya, Ruhi, et al. Spoken Language Technology Workshop (SLT), 2016 IEEE. IEEE, 2016.

7. Ameixa, David, et al. “Luke, I am your father: dealing with out-of-domain requests by using movies subtitles.” International Conference on Intelligent Virtual Agents. Springer, Cham, 2014.

8. Tarasov, D. S., and E. D. Izotova. “Common Sense Knowledge in Large Scale Neural Conversational Models.” International Conference on Neuroinformatics. Springer, Cham, 2017.

9. Fuster, Joaquín M. “Network memory.” Trends in Neurosciences 20.10 (1997): 451-459.

10. Fuster, Joaquín M. “Cortex and memory: the emergence of a new paradigm.” Cortex 21.11 (2009).

11. Liao, S.-H. “Awareness and Usage of Speech Technology.” Master's thesis, Department of Computer Science, University of Sheffield, 2015.

Source: https://habr.com/ru/post/339872/
