Testing in Openshift: Openstack Integration

Hello, dear members of the IT community. This material is an unplanned continuation of a series of articles (first article, second article, third article) devoted to software testing in Openshift Origin. This article covers integrating containers and virtual machines through Openshift and Openstack.

The goals I pursued by integrating Openshift with Openstack:

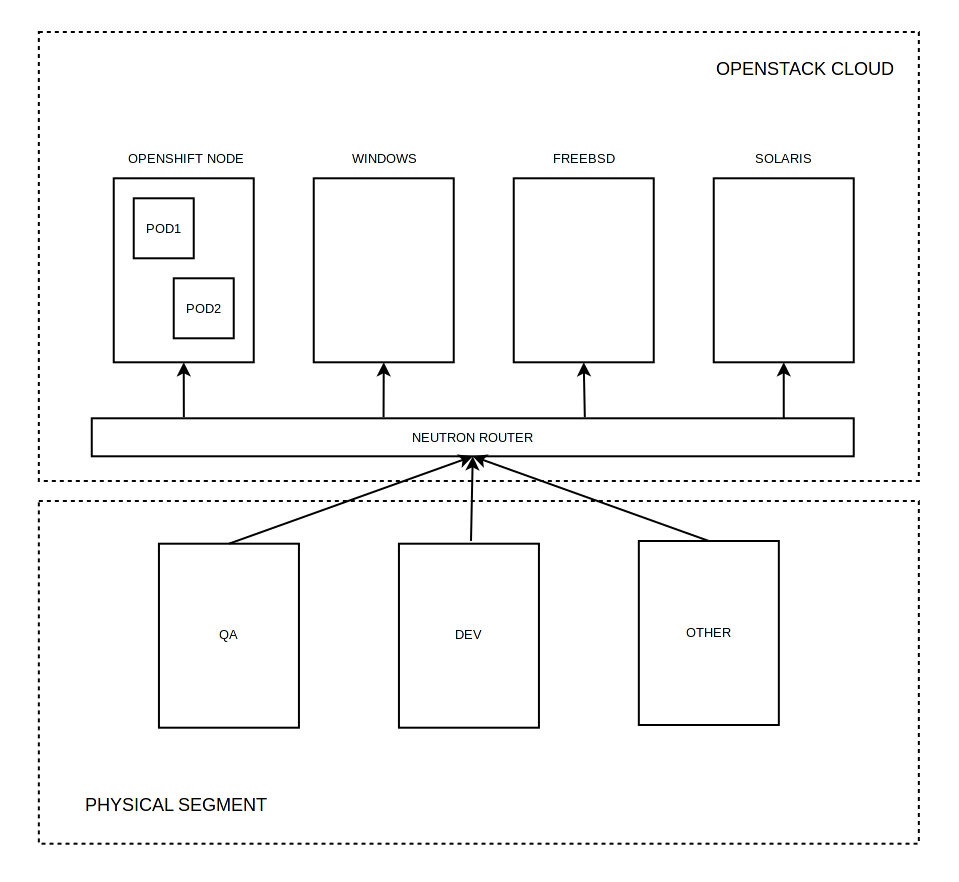

- Add the ability to run containers and virtual machines on a single network (L2, no nested networks).

- Add the ability for virtual machines to use services published in Openshift.

- Add the ability to integrate a physical network segment with the network of containers/virtual machines.

- Have mutual FQDN resolution between containers and virtual machines.

- Have the ability to embed the deployment of hybrid environments (containers, virtual machines) into an existing CI/CD.

Note: this article does not cover automatic cluster scaling or storage provisioning.

In my own words, about the software that helped achieve these goals:

Openstack is an operating system for building cloud services: a powerful construction kit that has brought together many projects and vendors. In my personal opinion, Openstack simply has no competitors in the private cloud market. Openstack installations can be very flexible and consist of many components, with support for various hypervisors and services. Jenkins plugins are available [1] [2]. Orchestration, workflows, multi-tenancy, zoning, etc. are supported.

Openshift Origin is a standalone solution from Red Hat (as opposed to Openshift Online and Openshift Dedicated) for container orchestration. It is based on Kubernetes but adds several features that make work more convenient and efficient.

Kuryr is a young Openstack project (a big plus is that it is developed within the Openstack ecosystem) that can integrate containers (nested or baremetal) into a Neutron network in various ways. It is a simple and reliable solution with far-reaching plans for expanding its functionality. There are many NFV/SDN solutions on the market today (Kuryr is not one of them). Most of them can be ruled out because Openstack/Openshift do not support them natively, and even after the list is narrowed down considerably, the remaining solutions (OpenContrail, MidoNet, Calico, Contiv, Weave) are very rich in functionality but quite complex to integrate and maintain. Kuryr, by contrast, integrates Openshift containers (as a CNI plugin) into a Neutron network (classic OVS-based scenarios) without much difficulty.

Typical integration schemes:

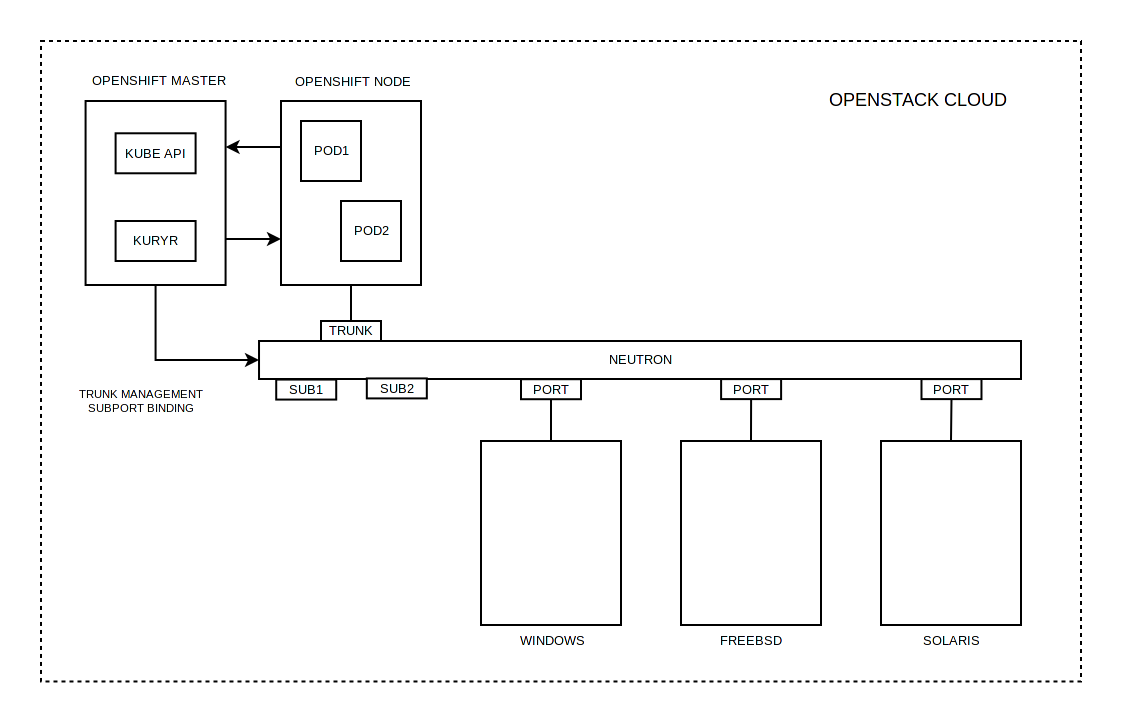

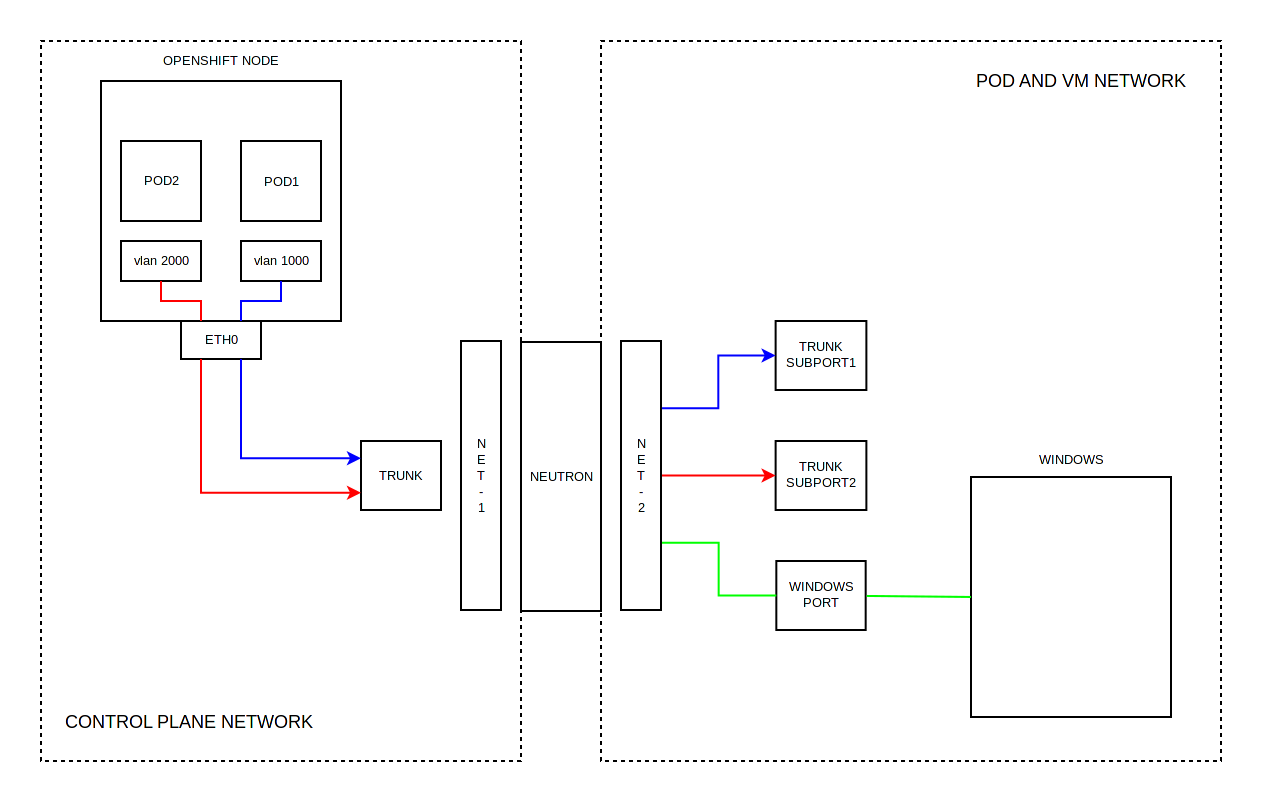

1. The Openshift cluster is located in the Openstack cloud

Placing all elements in the Openstack cloud is a very attractive and convenient scheme, but its main drawback is that the containers run inside virtual machines, which largely cancels out their performance advantages.

In this scheme, each Openshift worker node is assigned a TRUNK port that carries a number of SUBPORTs, each with its own VLAN ID. The TRUNK port is attached to one network and each SUBPORT to another, so a SUBPORT can be viewed as a bridge between two networks. When an Ethernet frame arrives at the TRUNK port with a VLAN tag that matches one of the SUBPORTs, the frame is forwarded to that SUBPORT. In practice, this means an ordinary VLAN interface is created on the Openshift worker node and moved into the container's network namespace.
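To make the TRUNK/SUBPORT behaviour more concrete, here is a toy Python model of the demultiplexing step, assuming standard 802.1Q tagging: the VLAN ID is read from the tag and the frame is handed to the SUBPORT registered for that ID. It is a sketch, not how Neutron or Open vSwitch implement it; the network names, the `make_frame` helper, and the choice to drop frames with an unknown VLAN ID are illustrative assumptions.

```python
import struct
from typing import Dict, Optional

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q (VLAN-tagged) frame


def vlan_id(frame: bytes) -> Optional[int]:
    """Return the VLAN ID carried by an Ethernet frame, or None if it is untagged."""
    if len(frame) < 16:
        return None
    # Bytes 0-11 hold the destination and source MACs; bytes 12-13 hold the
    # TPID/EtherType and, for tagged frames, bytes 14-15 hold the TCI.
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        return None
    return tci & 0x0FFF  # the low 12 bits of the TCI are the VLAN ID


def make_frame(vid: Optional[int], priority: int = 0, payload: bytes = b"payload") -> bytes:
    """Hypothetical helper: build a minimal IPv4 Ethernet frame, optionally tagged."""
    macs = bytes(6) + bytes(6)  # dummy destination and source MACs
    tag = b"" if vid is None else struct.pack("!HH", TPID_8021Q, (priority << 13) | vid)
    return macs + tag + struct.pack("!H", 0x0800) + payload


class TrunkPort:
    """Toy model of a trunk: a parent port plus subports keyed by VLAN ID."""

    def __init__(self, parent_network: str) -> None:
        self.parent_network = parent_network
        self.subports: Dict[int, str] = {}  # VLAN ID -> network of that subport

    def add_subport(self, segmentation_id: int, network: str) -> None:
        if not 1 <= segmentation_id <= 4094:
            raise ValueError("VLAN IDs must be in the range 1..4094")
        self.subports[segmentation_id] = network

    def deliver(self, frame: bytes) -> str:
        """Return the name of the network on which the frame ends up."""
        vid = vlan_id(frame)
        if vid is None:
            return self.parent_network  # untagged traffic belongs to the parent port
        # Assumption for this sketch: frames tagged with an unknown VLAN are dropped.
        return self.subports.get(vid, "dropped")
```

For example, after `trunk = TrunkPort("openshift-nodes")` and `trunk.add_subport(101, "openshift-pod")`, a frame built with `make_frame(101)` is delivered to `"openshift-pod"`, while an untagged frame stays on `"openshift-nodes"`.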

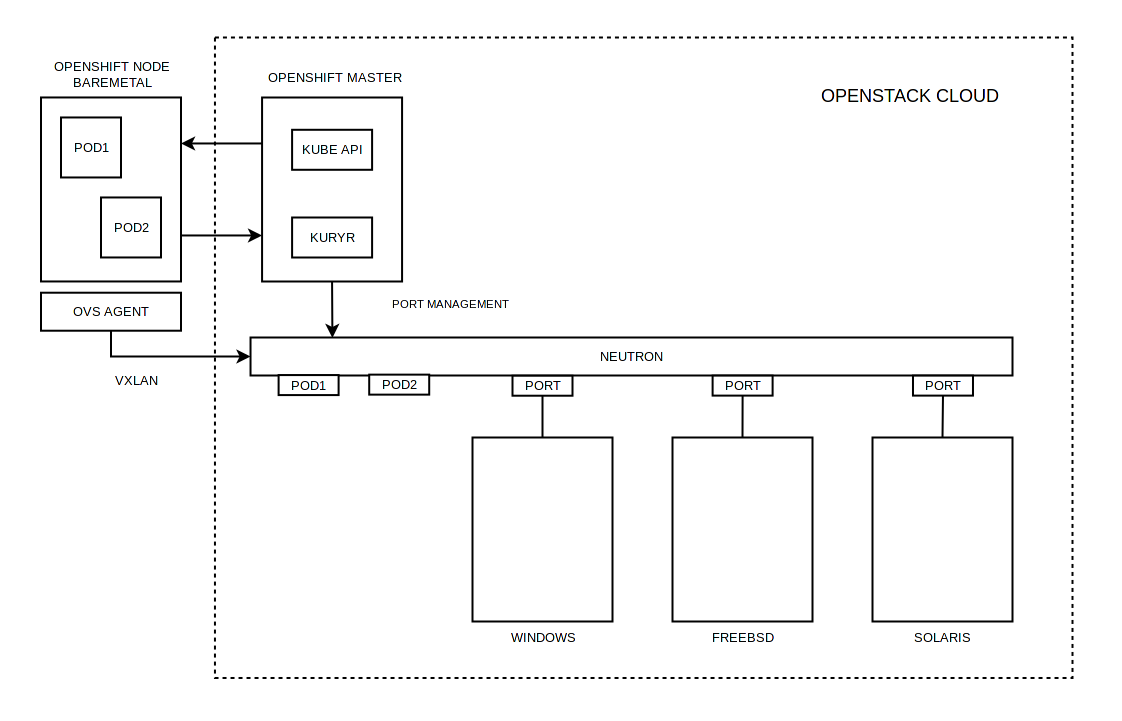

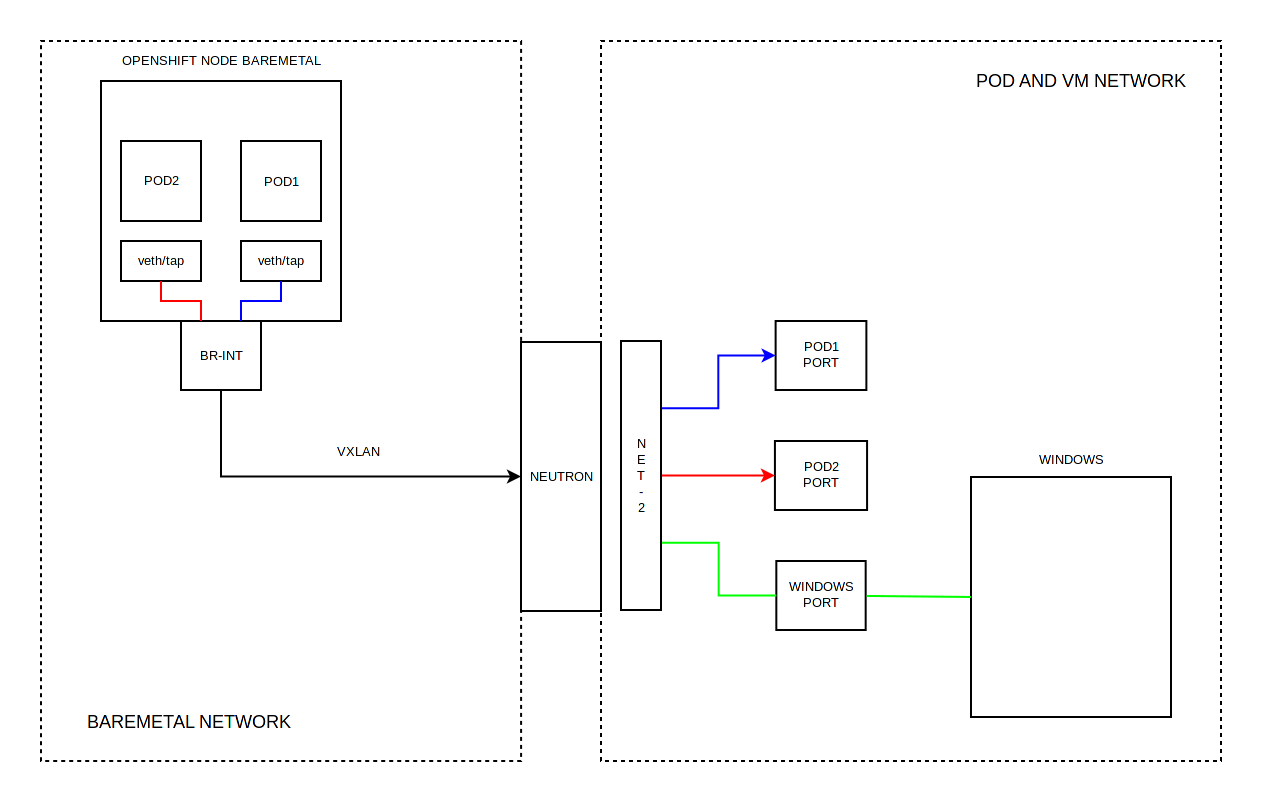

2. The Openshift cluster's worker nodes are physical servers; the master is located in the Openstack cloud

Running the containers on dedicated servers is no more complicated than placing all elements in the Openstack cloud. VXLAN makes it possible to build virtual networks without having to segment the enterprise network.

In this scheme, an Open vSwitch Agent integrated with Neutron runs on the Openshift worker nodes. Each launched container is assigned a VETH device attached directly to the Open vSwitch bridge, so the container joins the Neutron network directly. The Open vSwitch Agent then establishes a VXLAN connection to the Neutron Router, which routes the packets onward.
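The reason VXLAN avoids re-segmenting the enterprise network is visible in its header (RFC 7348): a 24-bit VXLAN Network Identifier (VNI) travels inside an ordinary UDP datagram, so roughly 16 million virtual segments can be carried over the existing IP network instead of consuming its 4094 usable VLAN IDs. A minimal Python sketch of building and parsing that 8-byte header follows; the VNI value 5001 is arbitrary, and the outer Ethernet/IP/UDP headers that Open vSwitch adds on a real tunnel are deliberately left out.

```python
import struct
from typing import Tuple

VXLAN_UDP_PORT = 4789  # IANA-assigned destination port for VXLAN (RFC 7348)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid
MAX_VNI = 2**24 - 1    # 24-bit identifier, versus 4094 usable VLAN IDs


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for the given VNI."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("the VNI is a 24-bit value")
    # Word 1: 8 flag bits followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header; outer Ethernet/IP/UDP headers are not modelled."""
    return vxlan_header(vni) + inner_frame


def decapsulate(udp_payload: bytes) -> Tuple[int, bytes]:
    """Return (vni, inner_frame) from a VXLAN UDP payload."""
    flags_word, vni_word = struct.unpack("!II", udp_payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VNI not marked as valid")
    return vni_word >> 8, udp_payload[8:]
```

Round-tripping `decapsulate(encapsulate(b"frame", 5001))` yields `(5001, b"frame")`.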

In both schemes, Kuryr's role comes down to the following:

- When a container is created, the kuryr CNI plugin is invoked and asks kuryr-controller for network connectivity (all communication goes through the standard Openshift/Kubernetes API).

- Upon receiving the request, kuryr-controller "asks" Neutron to allocate a port. Once the port is initialized, kuryr-controller passes the network configuration back to the CNI plugin, which applies it to the container.
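The request/response sequence in these two steps can be sketched in Python. Everything here is hypothetical: `FakeNeutron`, `FakeKuryrController`, and `cni_add` stand in for Neutron, kuryr-controller, and the kuryr CNI plugin, the exchange is a direct method call rather than a round trip through the Openshift/Kubernetes API, and the subnet gateway is assumed to be the first host address. The sketch only illustrates the order of responsibilities: the CNI side asks, the controller allocates a Neutron port, and the resulting address and gateway flow back to be applied to the container.

```python
import ipaddress
import itertools
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Port:
    port_id: str
    ip_address: str
    prefixlen: int
    gateway: str


class FakeNeutron:
    """Hypothetical stand-in for Neutron: allocates ports from one subnet."""

    def __init__(self, cidr: str) -> None:
        self.subnet = ipaddress.ip_network(cidr)
        self._hosts = self.subnet.hosts()
        self.gateway = str(next(self._hosts))  # assume the router takes the first address
        self._ids = itertools.count(1)

    def create_port(self, name: str) -> Port:
        ip = next(self._hosts)  # raises StopIteration once the subnet is exhausted
        return Port(f"port-{next(self._ids)}", str(ip), self.subnet.prefixlen, self.gateway)


class FakeKuryrController:
    """Hypothetical kuryr-controller: asks Neutron for one port per pod."""

    def __init__(self, neutron: FakeNeutron) -> None:
        self.neutron = neutron
        self.ports: Dict[str, Port] = {}  # "namespace/pod" -> allocated port

    def request_network(self, namespace: str, pod: str) -> Port:
        key = f"{namespace}/{pod}"
        if key not in self.ports:  # a repeated request reuses the existing port
            self.ports[key] = self.neutron.create_port(pod)
        return self.ports[key]


def cni_add(controller: FakeKuryrController, namespace: str, pod: str) -> Dict[str, str]:
    """Hypothetical CNI ADD: turn the allocated port into interface settings."""
    # In the real setup this exchange goes through the Openshift/Kubernetes API.
    port = controller.request_network(namespace, pod)
    return {
        "address": f"{port.ip_address}/{port.prefixlen}",
        "gateway": port.gateway,
        "neutron_port": port.port_id,
    }
```

With `FakeNeutron("10.42.0.0/24")`, the first pod gets `10.42.0.2/24` with gateway `10.42.0.1`; because the controller keeps one port per pod, a repeated request for the same pod does not allocate a second address.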

Integration of the physical network segment with the network of containers and virtual machines:

In the simplest setup, development participants get routed access to the networks of containers and virtual machines through the Neutron Router; all it takes is adding a route to the required subnet via the router's gateway address on their workstations. From a testing standpoint, this capability is hard to overstate, because the standard Openshift/Kubernetes mechanisms (hostNetwork, hostPort, NodePort, LoadBalancer, Ingress) are clearly limited, as is LBaaS in Openstack.
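Which traffic goes to the Neutron Router is decided on each workstation by ordinary longest-prefix matching. The sketch below models that decision in Python; the 10.42.0.0/16 prefix for the container/VM network and the 192.168.1.x gateway addresses are assumptions for illustration (the article only shows a port at 10.42.0.15).

```python
import ipaddress
from typing import List, Tuple

# Hypothetical workstation routing table: (destination prefix, next hop).
# Both prefixes and both next hops are assumed values, not taken from the article.
ROUTES: List[Tuple[str, str]] = [
    ("0.0.0.0/0", "192.168.1.1"),      # regular office default gateway
    ("10.42.0.0/16", "192.168.1.50"),  # containers and VMs behind the Neutron router
]


def next_hop(destination: str, routes: List[Tuple[str, str]] = ROUTES) -> str:
    """Choose the next hop by longest-prefix match, as the OS routing table does."""
    addr = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in routes
        if addr in ipaddress.ip_network(prefix)
    ]
    if not candidates:
        raise LookupError(f"no route to {destination}")
    # The most specific matching prefix wins.
    return max(candidates, key=lambda c: c[0].prefixlen)[1]
```

On a Linux workstation, the matching static route for these hypothetical addresses would be `ip route add 10.42.0.0/16 via 192.168.1.50`.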

Equally valuable is the ability to deploy, and get access to, the applications listed in the catalog available through the Openshift web interface (projects such as Monocular appeared only relatively recently, whereas Openshift has had this functionality since its first versions). Any contributor can deploy an available application without spending time learning Docker, Kubernetes, or the application itself.

Resolving FQDNs of containers and virtual machines:

For containers, everything is very simple: for each published service, a DNS record is created in the internal DNS server in the following form:

```
<service>.<pod_namespace>.svc.cluster.local
```

For virtual machines, the dns extension driver of the ML2 plugin is used:

```ini
extension_drivers = port_security,dns
```
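If the Neutron configuration is managed from CI/CD, this change can be applied idempotently. The Python sketch below adds `dns` to `extension_drivers` in the `[ml2]` section of the ML2 plugin configuration. It assumes the file content is passed in as text (on many installations the file is `/etc/neutron/plugins/ml2/ml2_conf.ini`, but the path varies), and the Neutron server still has to be restarted for the change to take effect.

```python
import configparser
import io


def enable_dns_extension(ini_text: str) -> str:
    """Return ML2 config text with 'dns' added to [ml2] extension_drivers."""
    cfg = configparser.ConfigParser(interpolation=None)  # leave '%' in values untouched
    cfg.optionxform = str  # keep option names exactly as written
    cfg.read_string(ini_text)
    if not cfg.has_section("ml2"):
        cfg.add_section("ml2")
    current = cfg.get("ml2", "extension_drivers", fallback="")
    drivers = [d.strip() for d in current.split(",") if d.strip()]
    if "dns" not in drivers:  # running the function twice changes nothing
        drivers.append("dns")
    cfg.set("ml2", "extension_drivers", ",".join(drivers))
    out = io.StringIO()
    cfg.write(out)
    return out.getvalue()
```

Note that `configparser` drops comments when it rewrites a file, so for a heavily commented configuration a configuration-management tool is the better fit.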

When a port is created in Neutron, its dns_name attribute is set, and the FQDN is formed from it:

```
[root@openstack ~]# openstack port create --dns-name hello --network openshift-pod hello
+-----------------------+---------------------------------------------------------------------------+
| Field                 | Value                                                                     |
+-----------------------+---------------------------------------------------------------------------+
| admin_state_up        | UP                                                                        |
| allowed_address_pairs |                                                                           |
| binding_host_id       |                                                                           |
| binding_profile       |                                                                           |
| binding_vif_details   |                                                                           |
| binding_vif_type      | unbound                                                                   |
| binding_vnic_type     | normal                                                                    |
| created_at            | 2017-10-04T15:25:21Z                                                      |
| description           |                                                                           |
| device_id             |                                                                           |
| device_owner          |                                                                           |
| dns_assignment        | fqdn='hello.openstack.local.', hostname='hello', ip_address='10.42.0.15'  |
| dns_name              | hello                                                                     |
| extra_dhcp_opts       |                                                                           |
| fixed_ips             | ip_address='10.42.0.15', subnet_id='4e82d6fb-9613-4606-a3ae-79ed8de42eea' |
| id                    | adfa0aab-82c6-4d1e-bec3-5d2338a48205                                      |
| ip_address            | None                                                                      |
| mac_address           | fa:16:3e:8a:94:38                                                         |
| name                  | hello                                                                     |
| network_id            | 050a8277-e4b3-4927-9762-d74274d9c8ff                                      |
| option_name           | None                                                                      |
| option_value          | None                                                                      |
| port_security_enabled | True                                                                      |
| project_id            | 2823b3394572439c804d56186cc82abb                                          |
| qos_policy_id         | None                                                                      |
| revision_number       | 6                                                                         |
| security_groups       | 3d354277-2aec-4bfb-91ac-d320bfb6c90f                                      |
| status                | DOWN                                                                      |
| subnet_id             | None                                                                      |
| updated_at            | 2017-10-04T15:25:21Z                                                      |
+-----------------------+---------------------------------------------------------------------------+
```

The virtual machine's FQDN can then be resolved through the DNS server that serves DHCP for the network.
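When VM creation is scripted, the address and domain can be pulled out of the `dns_assignment` value shown in the port output above. The Python sketch below parses that string form and derives the DNS domain as the FQDN minus the leading hostname (the trailing dot marks a fully qualified name). It only handles the single-line `key='value'` form printed above; for real scripts, a machine-readable output such as `openstack port show -f json` is a sturdier source.

```python
import re
from typing import Dict

# Matches key='value' pairs as printed in the CLI table output.
_PAIR = re.compile(r"(\w+)='([^']*)'")


def parse_dns_assignment(value: str) -> Dict[str, str]:
    """Turn "fqdn='...', hostname='...', ip_address='...'" into a dict."""
    return dict(_PAIR.findall(value))


def dns_domain_of(assignment: Dict[str, str]) -> str:
    """Derive the DNS domain: the FQDN without its leading hostname label."""
    fqdn, hostname = assignment["fqdn"], assignment["hostname"]
    if not fqdn.startswith(hostname + "."):
        raise ValueError("FQDN does not start with the hostname")
    return fqdn[len(hostname) + 1:]
```

For the `hello` port above, `dns_domain_of(...)` returns `"openstack.local."`.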

All that remains is to place a DNS resolver on the Openshift master (or anywhere else) that resolves *.cluster.local through Openshift's SkyDNS and *.openstack.local through the DNS server of the Neutron network.
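The article does not name the resolver, so the following is only one possible shape for it: a Python sketch of the suffix-based forwarding decision, plus the equivalent `server=/domain/address#port` lines for dnsmasq. The upstream addresses are hypothetical, and port 8053 for SkyDNS is an assumption based on common Openshift 3.x master setups that should be checked against the actual cluster.

```python
from typing import Dict, List, Tuple

# Hypothetical upstreams: SkyDNS on the Openshift master and the DNS server
# that Neutron runs for DHCP on the container/VM network.
UPSTREAMS: Dict[str, Tuple[str, int]] = {
    "cluster.local": ("10.0.0.10", 8053),
    "openstack.local": ("10.42.0.2", 53),
}


def pick_upstream(qname: str, upstreams: Dict[str, Tuple[str, int]] = UPSTREAMS) -> Tuple[str, int]:
    """Return the (address, port) of the upstream responsible for qname."""
    name = qname.rstrip(".").lower()
    # Match whole labels only, so "evilcluster.local" does not match "cluster.local".
    zones = [z for z in upstreams if name == z or name.endswith("." + z)]
    if not zones:
        raise LookupError(f"no upstream configured for {qname}")
    return upstreams[max(zones, key=len)]  # the most specific zone wins


def dnsmasq_lines(upstreams: Dict[str, Tuple[str, int]] = UPSTREAMS) -> List[str]:
    """Render the same mapping as dnsmasq per-domain server directives."""
    return [f"server=/{zone}/{ip}#{port}" for zone, (ip, port) in sorted(upstreams.items())]
```

Names outside both zones raise `LookupError` here; in practice they would go to the regular upstream resolver.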


Conclusion:

- I would like to thank the development teams: Openshift / Kubernetes, Openstack, Kuryr.

- The solution turned out as simple as possible, yet it remains flexible and functional.

- Thanks to Openstack, it became possible to organize testing on processor architectures such as ARM and MIPS.


Source: https://habr.com/ru/post/339474/
