How the Renga BIM system is tested

We have already written about how testing of KOMPAS-3D and automation of KOMPAS-3D interface testing are organized. Today we'll tell you how the Renga BIM system is tested.

Many companies in the process of software development are faced with the problem of regression errors. And we, unfortunately, were no exception. In this article I would like to tell you how this problem manifested itself in us and what solutions we found. But first it is worth explaining what system we are developing and how the testing process is arranged in our company.

Renga Architecture is an architectural and construction BIM system developed by Renga Software (a joint venture of ASCON and 1C companies) to create the exterior of a building, an information model, and a quick layout of drawings. Its users are architects, designers and designers.

')

Renga contains many tools needed to create an architectural model of a building, to design a structural part, as well as to obtain drawings and specifications.

Of course, we test all the functionality of the system. When testing, we must take into account that the user can work with the model in both 3D and 2D, and all the project entities are interconnected. First, the tester checks the functionality of the manual operation, there is a check for compliance with the requirements. At this stage, there are errors that further developers will fix. After manual testing and error correction, we proceed to writing integration tests, covering the functionality with autotests. Next will be described in more detail the testing process, and how we came to it.

We test a lot of time manually. To test the operation of a new object, for example, a wall, you need to check:

This is not a complete list of what needs to be checked when adding a new object. Now imagine what the number of these objects and connections!

In the process of manual testing are errors that are difficult to reproduce, or they appear in a certain state of the system. I think that errors in the user interface will not surprise anyone, and the errors listed below are very interesting:

The railing “shoots” up instead of sitting on a ladder of a small radius.

As a result, quite unusual playback steps were revealed: you need to call the context menu on the 3D view, then click a few buttons on the toolbar with the left mouse button, go away and return to the current tab with the toolbar and ... the buttons are swapped! The error began to play steadily according to the steps described and was quickly eliminated. It took more time to learn how to reproduce it.

Development of our BIM-system was started 6 years ago. At the very beginning of development, the application was tested manually and with the help of unit tests. All the functionality had test coverage with modular tests, but despite this, we began to notice that we were breaking already done before. The problem was that the modules worked separately, but their integration led to errors. The problem was growing, and it was decided to add integration testing to the unit tests to verify that all modules of the system work together.

Search for ready-made solutions

To create the interface, we use the QT- library, so when searching for ready-made products for writing integration tests, we chose those that could work with this library. We considered TestComplete and Squish as possible solutions, but the versions of these products did not suit us at that time: Renga uses controls that these products could not identify and work with them. And without them, it was inappropriate to use these automation products.

Own framework

We decided to write our own framework, which will repeat the user's actions, work with the controls we need and cover most of the necessary checks. The development of this framework began back in 2012, and the application initially looked like this:

In the process of creating a new functional in Renga, the utility is being finalized. That is, in fact, we are developing two applications at the same time: Renga and the testing framework.

The principle of the framework

The principle is to record and then play the script, comparing the reference and resulting snapshots of the system. The system snapshots are described by xml-files that are created when recording and playing the test. Such xml-files in the test are reference and resultant. When recording a test, a set of reference xml-files of the fixed state of the system is generated; during playback, a resulting set of files is created. There are the following types of xml-files that are responsible for the different functionality of the application:

As described above, the utility is being finalized with Renga, new types of system snapshots appear in it. For example, only in preparation for the latest release to test specifications , the utility added Specification shots and Specification view shots. And also, for the convenience of finding certain tests, a filtering system was recently implemented using python scripts.

Now using the framework you can check: drawing objects, printing drawings, exporting and importing objects / drawings in various formats, creating and editing objects, changing parameters and custom properties of an object, data and drawing tables / specifications, etc.

At the current time, the framework looks like this:

At the top of the utility, the path to the folder in which the tests will be stored (xml files and scripts), as well as the path to the new Renga assembly, is set. In the right part, the tester describes the steps to be taken when recording the test. Below are the buttons for recording, playing, renaming the test, etc.

The test is recorded on the hands-tested version of the application, so this behavior can be considered as a reference.

To record a new test:

After the test is successfully recorded, it must be sent to the server:

How the framework catches errors:

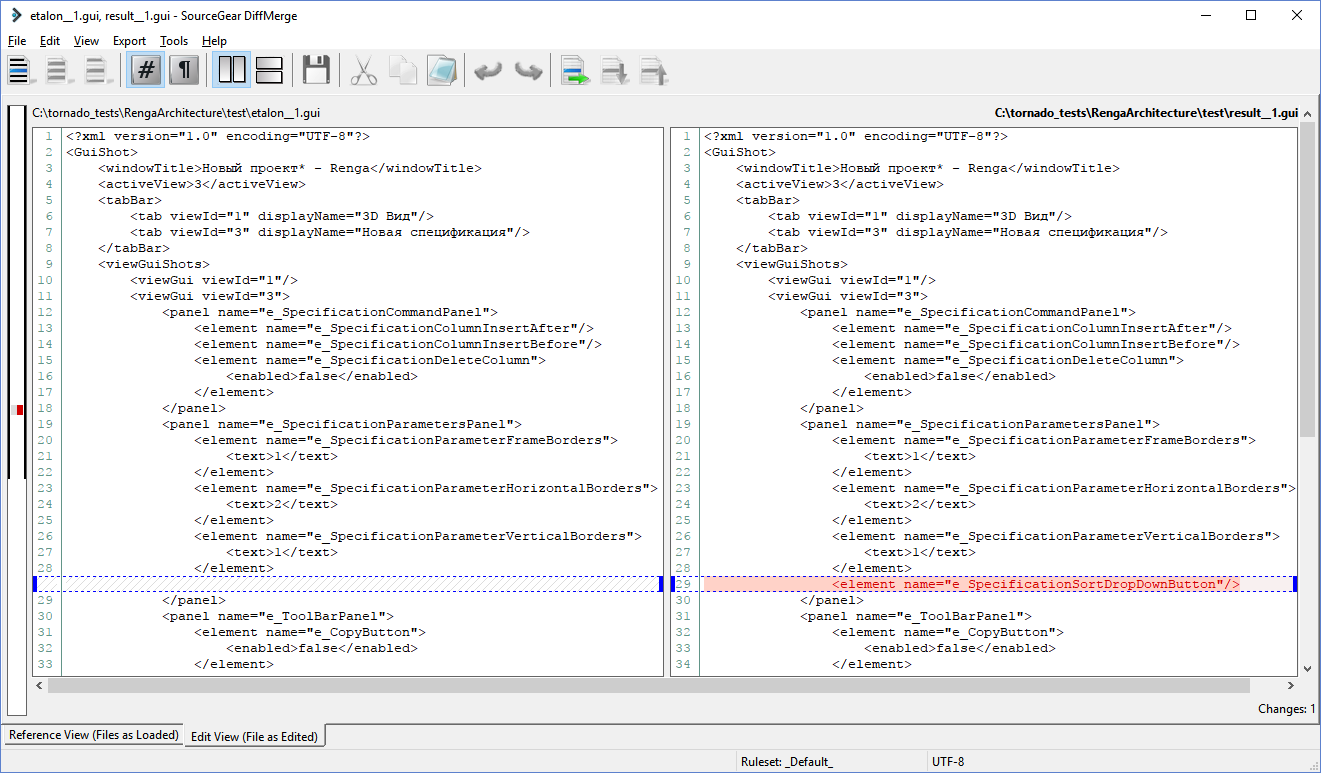

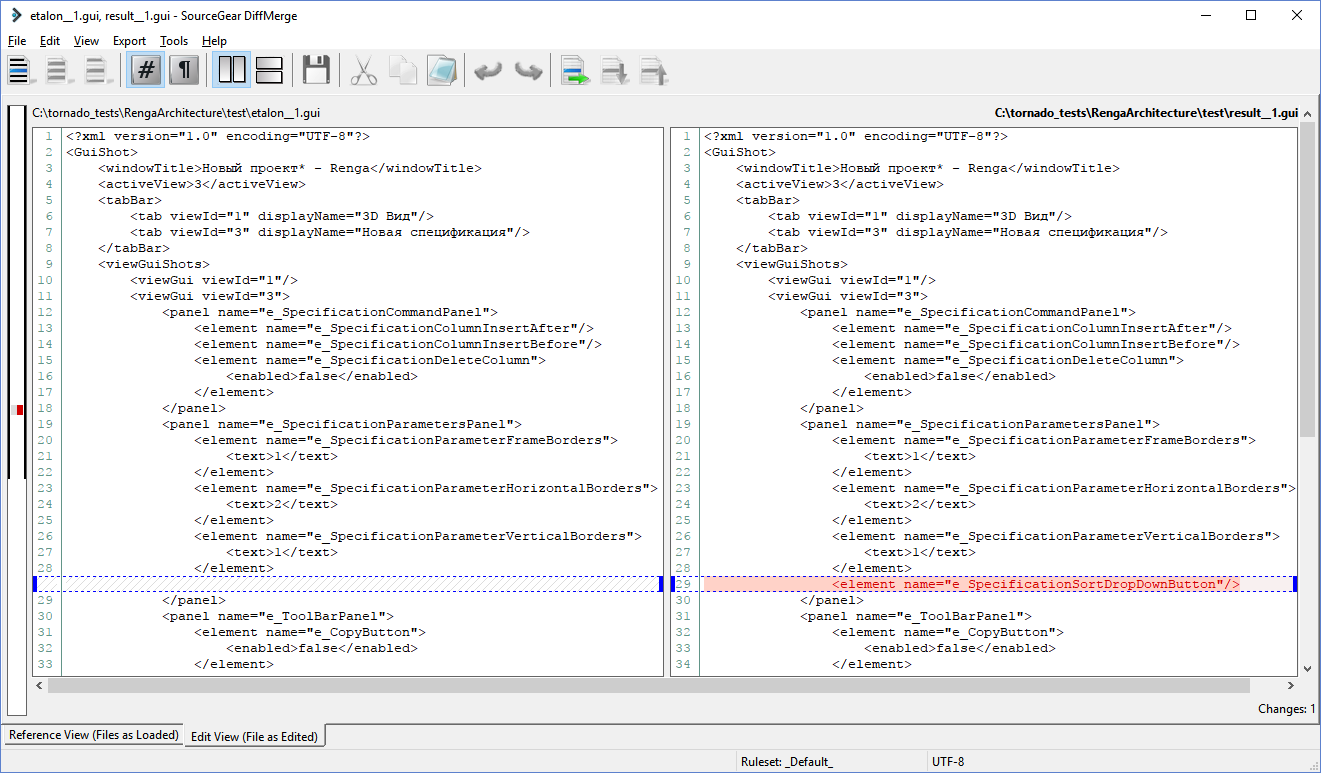

If the snapshots are the same - the Passed test, if at least one snapshot is different - the Failed test and these xml files are indicated. If the test fails, you need to deal with the specified files and look for the cause of the discrepancies. Below is an example of comparing the reference and the resulting xml file - Gui shots:

On it we see that in the resulting file on the “e_SpecificationParametersPanel” parameter panel there appeared a button “e_SpecificationSortDropDownButton”, which is not in the reference file. For this reason, the test failed. This behavior is expected, since this button has been added for the new functionality. Now you need to consider the resulting image to be correct and make it a reference. Accordingly, the next test play will be successful.

This is how the test plays in our utility:

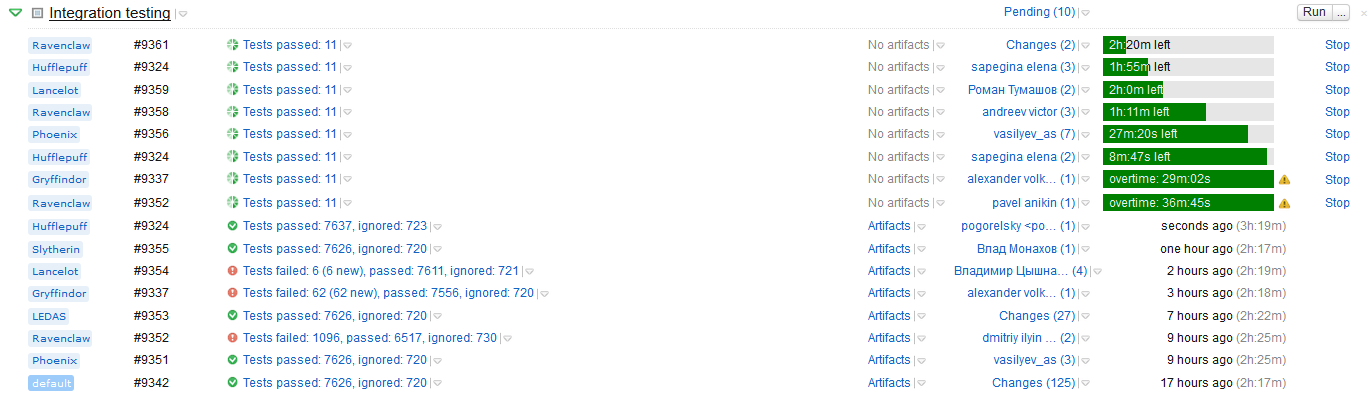

Organization of tests on the server

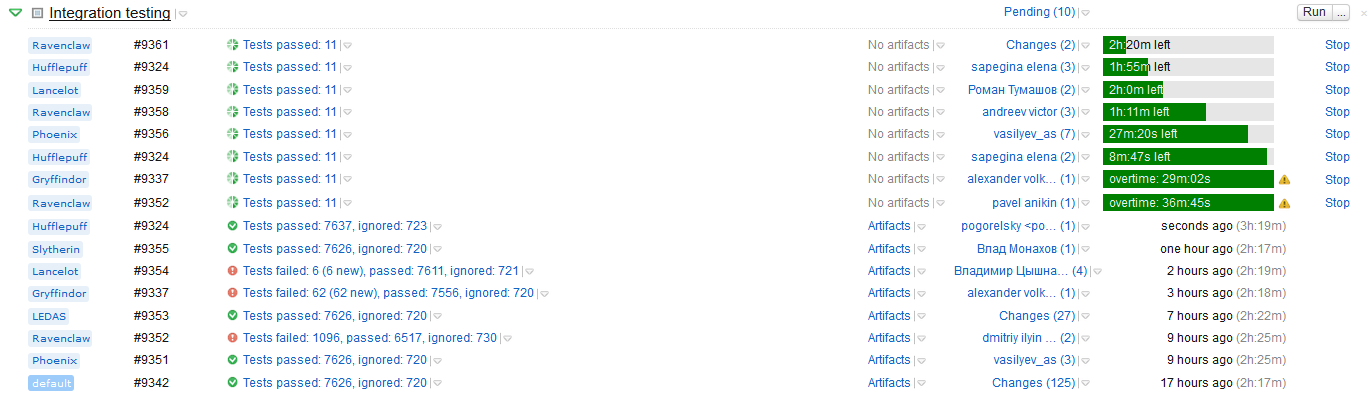

Tests are played on the server after each code is flooded into a branch.

If at least one test fails, the build is not laid out and is not available for testing. This rule allows us not to waste time on testing probably broken functionality, because while the tests were not successful, the developer cannot be sure that nothing is broken from the old functionality.

At the moment we have 8 thousand tests, it takes about 2-3 hours to play them. That is, the delivery time of the new functionality from the developer to the tester is from 2-3 hours to one working day, if something went wrong. Such a number of tests allows us to cover the current functionality, but in some cases you need to be patient to wait for a working build. Now we are thinking about how to speed up the results from playing tests.

What problems remained unresolved

The testing utility solved the module integration problem, but it cannot be used to test the application GUI. And this means that the user interface, the work of the focus, the emulation of keys from the keyboard, scrolling, working with several windows we have to test only with our hands.

Very often there are errors associated with this functionality. Trying to solve this problem, we began to look for a finished product to automate the GUI. We tested the Ranorex program, but, unfortunately, not all the scenarios could be tested using it, and some controls could not be reached due to the current implementation of Renga. Therefore, we continue to search for ready-made solutions for testing the user interface of desktop applications and those who can work with Qt.

We also have to test the installer with our hands. In recent months, we have advanced in this area by starting to use python scripts with pywinauto. So far, this solution is in its infancy, but now we see the direction in which to work.

In our project, approximately ⅔ of the time, the tester is engaged in manual testing, trying to find as many errors and vulnerabilities as possible, and also to check whether all requirements have been met. And only after that goes on to write autotests, which guarantee that this functionality will not break. With the advent of automated testing, we secured ourselves against repeated regression errors from iteration to iteration. Developers are not afraid to upload their code to the work branch, because they know that tests will show them problematic places in a few hours. And testers can devote themselves entirely to testing new functionality.

This approach allows us not to freeze the code for a long time before release. In fact, one week is enough for us to test the release and fix critical errors. And this is with a small number of test engineers and frequent releases: we release three or four releases per year (on average, similar CAD systems release one release per year).

And, of course, we do not forget about our problems. We will try to solve them so that Renga users can get new functionality even faster. We will be glad to hear the advice if your company had similar problems and you have found ways to solve them.

Elena Makarova, Test Engineer, Renga Software.


Many software companies run into regression errors during development, and unfortunately we were no exception. In this article I'd like to tell you how this problem manifested itself for us and what solutions we found. But first, it is worth explaining what system we develop and how testing is organized in our company.

What is Renga

Renga Architecture is an architectural and construction BIM system developed by Renga Software (a joint venture of ASCON and 1C) for creating the architectural appearance of a building and its information model, and for quickly laying out drawings. Its users are architects, design engineers and structural designers.


More about the Renga product family (careful, marketing!)

Renga Architecture is a system for architectural and construction design. It is built to help the designer as much as possible with their tasks: creating the architectural appearance of a building and its information model, quickly laying out drawings according to SPDS standards, and much more.

Renga Structure is a system for designing the structural parts of buildings and structures. It lets design engineers and structural designers create an information model of a building or structure and obtain drawings of the KR / KZh / KZH / KM / AU sets.

The Renga product family is designed for BIM. High system performance lets you work with large projects without a noticeable loss in the quality of work with the 3D model:

Object design

Creating a 3D model of a building or structure in Renga with object design tools (wall, column, window, etc.).

Teamwork

Shared storage and data management are provided by BIM-Server Pilot.

Interaction with cost estimating systems

Renga integrates through its API with the 1C-estimate and ABC estimating systems, supporting interaction between design and estimating departments.

Data exchange

Renga can exchange data with other systems in various formats (.ifc, .dwg, .dxf, .obj, .dae, .stl, .3ds, .lwo and .csv).

Automated specifications and schedules

Renga can generate reports for producing specifications, schedules and explications.

Automated drawing production

Views (facades, sections and plans) are generated automatically from the 3D model and placed on drawings at the specified scales.


Renga contains many tools for creating the architectural model of a building, designing its structural part, and producing drawings and specifications.

Of course, we test all of the system's functionality. When testing, we have to keep in mind that the user can work with the model in both 3D and 2D, and that all project entities are interconnected. First, the tester checks the functionality manually, including its compliance with the requirements. At this stage errors are found, which the developers then fix. After manual testing and bug fixing, we move on to writing integration tests, covering the functionality with autotests. Below I describe the testing process in more detail and how we arrived at it.

Manual testing

We spend a lot of time on manual testing. To test a new object, for example a wall, you need to check:

- Building a wall in 3D and 2D.

- Editing the wall by its characteristic points, and changing its properties and parameters.

- The behavior of the wall where it intersects other objects (for example, floors, columns and beams cut into the wall).

- Producing drawings that contain walls.

- Correct output of the wall's calculated characteristics (length, area, volume, etc.).

- Application and display of wall materials.

- Wall behavior when window and door openings are inserted.

- Wall reinforcement: parametric reinforcement, placement of meshes and cages in walls.

- Localization of wall parameters.

And this is not even a complete list of what needs to be checked when a new object is added. Now imagine how many such objects and relationships there are!

During manual testing we find errors that are difficult to reproduce or that appear only in a particular state of the system. User interface errors probably won't surprise anyone, but the ones below are quite interesting:

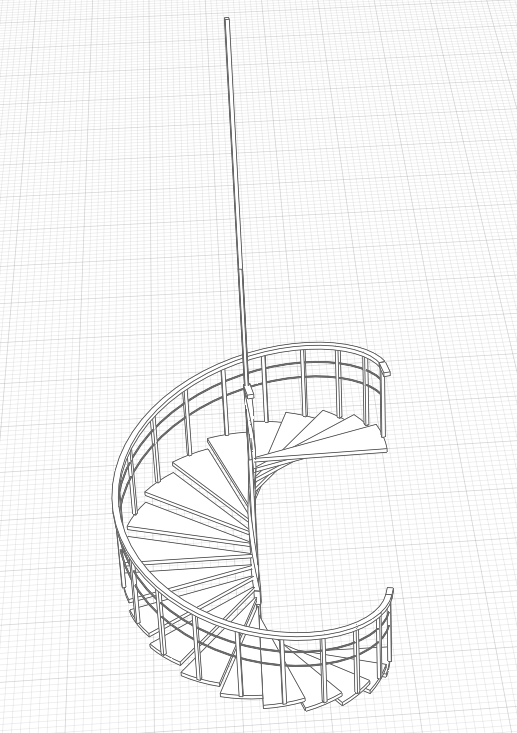

- “Incorrect display of the railing on the arc stairs of small radius.” This is how this error looks in the application:

The railing “shoots” up instead of sitting on a ladder of a small radius.

- "Swap buttons on the toolbar." For about a month we tried to catch in which cases the buttons change places, since in different cases this happened differently.

As a result, quite unusual playback steps were revealed: you need to call the context menu on the 3D view, then click a few buttons on the toolbar with the left mouse button, go away and return to the current tab with the toolbar and ... the buttons are swapped! The error began to play steadily according to the steps described and was quickly eliminated. It took more time to learn how to reproduce it.

Automated Testing

Development of our BIM system started 6 years ago. At the very beginning, the application was tested manually and with unit tests. All functionality was covered by unit tests, but despite this we began to notice that we were breaking things that had already been done. The problem was that the modules worked fine separately, but integrating them led to errors. The problem kept growing, so we decided to add integration testing on top of unit tests to verify that all modules of the system work together.

Search for ready-made solutions

We build the interface with the Qt library, so when looking for ready-made products for writing integration tests, we chose ones that could work with it. We considered TestComplete and Squish, but the versions available at the time did not suit us: Renga uses controls that these products could not identify or interact with. Without access to those controls, there was no point in using these automation products.

Our own framework

We decided to write our own framework that would replay the user's actions, work with the controls we need, and cover most of the necessary checks. Development of the framework began back in 2012, and initially the application looked like this:

As new functionality is added to Renga, the utility is extended along with it. In effect, we are developing two applications at once: Renga and the testing framework.

How the framework works

The idea is to record a script and then play it back, comparing reference and resulting snapshots of the system. Snapshots are stored as XML files created when a test is recorded and played back: recording generates a set of reference XML files that capture the system state at fixed points, and playback generates a corresponding set of resulting files. There are the following types of XML files, each covering a different area of the application's functionality:

- Gui shots - logging of the application GUI

- Model shots - model data

- Sheets shots - drawing data and drawing rendering

- Aux shots - positions of the object's characteristic points and auxiliary geometry

- Screen shots - a PNG image that is not compared automatically and is used only for visual comparison

- Drawer shots - rendering of objects

- ReportCSV shots - data exported to CSV

- Dxf shots - data exported to / imported from DXF

- Xps shots - printing to XPS

- API shots - API testing

- Table shots - drawing and data tables

- Specification shots - specification data

- Specification view shots - rendering of specifications
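Here is a minimal Python sketch of how a reference set and a resulting set of XML snapshots could be compared. It is not the framework's code. The `reference/` and `result/` folder layout and the function names are hypothetical. Files are canonicalized with the standard library's `xml.etree.ElementTree.canonicalize` (Python 3.8+), so that attribute order and indentation do not cause false failures. Only `*.xml` files are compared, so PNG screen shots, which are used only for visual comparison, are skipped.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from pathlib import Path


def canonical_xml(path: Path) -> str:
    """Return the C14N 2.0 form of a file: attributes sorted, whitespace around text stripped."""
    return ET.canonicalize(from_file=str(path), strip_text=True)


def compare_snapshots(reference_dir: Path, result_dir: Path) -> list[str]:
    """List reference snapshots (relative paths) that are missing or differ in the result.

    Hypothetical layout: both folders mirror each other, e.g. Gui/panel.xml.
    Only *.xml files are compared, so PNG screen shots are skipped.
    """
    failed = []
    for ref_file in sorted(reference_dir.rglob("*.xml")):
        rel = ref_file.relative_to(reference_dir)
        res_file = result_dir / rel
        if not res_file.is_file() or canonical_xml(ref_file) != canonical_xml(res_file):
            failed.append(rel.as_posix())
    return failed


def verdict(failed: list[str]) -> str:
    # One differing snapshot is enough to fail the whole test
    return "Passed" if not failed else "Failed: " + ", ".join(failed)
```

Comparing canonical forms rather than raw bytes is a design choice of this sketch. A real framework might also need to ignore volatile values such as timestamps or object IDs.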

As mentioned above, the utility evolves together with Renga, and new snapshot types keep appearing in it. For example, Specification shots and Specification view shots were added to the utility just in preparation for the latest release, to test specifications. In addition, to make particular tests easier to find, we recently implemented a filtering system using Python scripts.
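The article does not describe the filtering scripts, so the following is only a guess at what such a filter might look like in Python. It assumes a hypothetical layout in which each test is a folder whose reference snapshots are grouped into sub-folders named after the snapshot type (for example `WallCreate/reference/Model/`). The names `scan` and `filter_tests` are illustrative and are not part of the real utility.

```python
from __future__ import annotations

import fnmatch
from pathlib import Path


def scan(tests_root: Path) -> dict[str, set[str]]:
    """Map each test folder name to the snapshot types found in its reference/ sub-folder."""
    tests = {}
    for test_dir in sorted(p for p in tests_root.iterdir() if p.is_dir()):
        ref = test_dir / "reference"
        types = {p.name for p in ref.iterdir() if p.is_dir()} if ref.is_dir() else set()
        tests[test_dir.name] = types
    return tests


def filter_tests(tests: dict[str, set[str]], name_pattern: str = "*",
                 required_types: set[str] | None = None) -> list[str]:
    """Return test names that match a glob pattern and contain all required snapshot types."""
    required = required_types or set()
    return sorted(
        name for name, types in tests.items()
        if fnmatch.fnmatchcase(name, name_pattern) and required <= types
    )
```

For example, `filter_tests(scan(Path("tests")), required_types={"Specification"})` would pick out every test that captures Specification shots. That kind of query becomes useful once new snapshot types are added.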

With the framework we can now check: rendering of objects, printing of drawings, export and import of objects and drawings in various formats, creation and editing of objects, changes to an object's parameters and custom properties, data and drawing tables and specifications, etc.

Today the framework looks like this:

At the top of the utility you set the path to the folder where the tests (XML files and scripts) are stored, as well as the path to the new Renga build. On the right, the tester describes the steps to perform while recording the test. Below are buttons for recording, playing back, renaming a test, etc.

A test is recorded on a version of the application that has already been tested manually, so its behavior can be treated as the reference.

To record a new test:

- We describe the sequence of steps in the test and every point at which the state of the system has to be captured.

- We press the Record button: Renga starts, and we repeat the steps described above.

- We close Renga. This produces the reference XML files and a test script that can later be used to detect errors in the functionality the test was written for.
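The record-then-replay idea can be illustrated with a toy Python model. This is only a sketch under made-up assumptions and not how the framework is implemented. Here an "application" is any object with hypothetical `apply(action)` and `snapshot()` methods, and a script is a list of steps, some of which are marked as checkpoints where the state must be captured. Recording runs the script on a manually tested build to obtain references. Playback runs the same script on a new build and reports which checkpoints differ.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Protocol


class App(Protocol):
    """Hypothetical interface of the application under test."""
    def apply(self, action: str) -> None: ...
    def snapshot(self) -> str: ...


@dataclass(frozen=True)
class Step:
    action: str
    checkpoint: bool = False  # capture the system state after this step


def run(make_app: Callable[[], App], script: list[Step]) -> list[str]:
    """Start a fresh application, perform every step, return snapshots taken at checkpoints."""
    app = make_app()
    shots = []
    for step in script:
        app.apply(step.action)
        if step.checkpoint:
            shots.append(app.snapshot())
    return shots


def record(make_app: Callable[[], App], script: list[Step]) -> list[str]:
    # On a build that has already been tested manually, the snapshots become references
    return run(make_app, script)


def play(make_app: Callable[[], App], script: list[Step], references: list[str]) -> list[int]:
    """Replay on a new build; return the indices of checkpoints whose snapshot differs."""
    results = run(make_app, script)
    return [i for i, (ref, res) in enumerate(zip(references, results)) if ref != res]
```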

After a test has been recorded successfully, it has to be sent to the server:

- We commit the test to the Mercurial version control system.

- We wait for the tests to be played back on the server by TeamCity.

How the framework catches errors:

- A developer pushes new code to the working branch.

- A new version of Renga.exe is built.

- This build plays back the tests and compares the reference snapshots (recorded on the previously tested version) with the resulting snapshots (recorded on the current version).

If the snapshots are the same - the Passed test, if at least one snapshot is different - the Failed test and these xml files are indicated. If the test fails, you need to deal with the specified files and look for the cause of the discrepancies. Below is an example of comparing the reference and the resulting xml file - Gui shots:

Here we see that in the resulting file a button “e_SpecificationSortDropDownButton” has appeared on the “e_SpecificationParametersPanel” parameter panel, and it is absent from the reference file. That is why the test failed. This behavior is expected, since the button was added for new functionality. So the resulting snapshot should be accepted as correct and made the new reference, and the next playback of the test will then pass.
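Finding out why a Gui shot failed comes down to locating what differs between the two files. The following Python sketch assumes a hypothetical XML format in which each control is an element with a `name` attribute, nested as in the GUI. It lists the controls present in only one file. Then, once a person has confirmed that the change is expected, it copies the result over the reference. The control names in the test data come from the case above, but the surrounding XML is invented.

```python
from __future__ import annotations

import shutil
import xml.etree.ElementTree as ET
from pathlib import Path


def control_paths(elem: ET.Element, prefix: str = "") -> set[str]:
    """Collect slash-separated paths of named controls, e.g. 'Panel/Button'."""
    name = elem.get("name")
    path = f"{prefix}/{name}".lstrip("/") if name else prefix
    paths = {path} if name else set()
    for child in elem:
        paths |= control_paths(child, path)
    return paths


def diff_controls(reference_xml: str, result_xml: str) -> tuple[set[str], set[str]]:
    """Return (added, removed): controls only in the result, controls only in the reference."""
    ref = control_paths(ET.fromstring(reference_xml))
    res = control_paths(ET.fromstring(result_xml))
    return res - ref, ref - res


def accept_result(result_file: Path, reference_file: Path) -> None:
    """Make the resulting snapshot the new reference (do this only after review)."""
    shutil.copyfile(result_file, reference_file)
```

In the case above, `diff_controls` would report `e_SpecificationParametersPanel/e_SpecificationSortDropDownButton` as added and nothing as removed. Keeping `accept_result` as a separate, deliberate step matters: copying results over references without review would also hide real regressions.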

This is how the test plays in our utility:

Organization of tests on the server

Tests are played back on the server after every push of code to a branch.

If at least one test fails, the build is not published and is not available for testing. This rule keeps us from wasting time testing functionality that is probably broken: until the tests pass, the developer cannot be sure that none of the existing functionality has been broken.
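One way to wire such a rule into TeamCity is sketched below in Python. The `##teamcity[testStarted ...]`, `testFailed` and `testFinished` service messages and their `|` escaping are TeamCity's documented mechanism for reporting tests from a build script. The `results` mapping (test name to the list of differing snapshot files) and the function names are hypothetical. The sketch also assumes that the build configuration keeps TeamCity's default failure condition on a non-zero exit code. In that case a single failed test fails the build, which can then be kept from being published.

```python
from __future__ import annotations


def tc_escape(value: str) -> str:
    """Escape a value for a TeamCity service message ('|' must be handled first)."""
    for char, escaped in (("|", "||"), ("'", "|'"), ("\n", "|n"),
                          ("\r", "|r"), ("[", "|["), ("]", "|]")):
        value = value.replace(char, escaped)
    return value


def report(results: dict[str, list[str]]) -> list[str]:
    """Build service-message lines; `results` maps test name -> differing snapshot files."""
    lines = []
    for name, failed_files in results.items():
        n = tc_escape(name)
        lines.append(f"##teamcity[testStarted name='{n}']")
        if failed_files:
            msg = tc_escape("Snapshots differ: " + ", ".join(failed_files))
            lines.append(f"##teamcity[testFailed name='{n}' message='{msg}']")
        lines.append(f"##teamcity[testFinished name='{n}']")
    return lines


def exit_code(results: dict[str, list[str]]) -> int:
    # A single failed test is enough to make the build step (and so the build) fail
    return 1 if any(results.values()) else 0
```

A wrapper script would print each line of `report(results)` to standard output and then call `sys.exit(exit_code(results))`.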

We currently have 8,000 tests, and playing them back takes about 2-3 hours. So new functionality reaches the tester 2-3 hours after the developer submits it, or up to one working day if something goes wrong. This number of tests lets us cover the current functionality, but sometimes you have to be patient while waiting for a working build. We are now thinking about how to get test results faster.
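As a rough check on these figures, assume the tests are played back one after another (the article does not say how they are distributed across machines). Under that assumption, the average time per test, covering everything a single test does, comes out at about one second:

```latex
\frac{2 \times 3600\ \text{s}}{8000} = 0.9\ \text{s}
\qquad\text{to}\qquad
\frac{3 \times 3600\ \text{s}}{8000} = 1.35\ \text{s per test}
```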

What problems remain unresolved

The testing utility solved the module integration problem, but it cannot be used to test the application GUI. This means that the user interface, focus handling, keyboard input emulation, scrolling and work with multiple windows still have to be tested manually.

Errors in this functionality are very common. To address this, we started looking for a ready-made GUI automation product. We tried Ranorex, but unfortunately not every scenario could be tested with it, and some controls could not be reached because of the way Renga is currently implemented. So we are still looking for ready-made tools for testing desktop application user interfaces that can work with Qt.

We also have to test the installer manually. In recent months we have made progress here by starting to use Python scripts with pywinauto. The solution is still in its infancy, but we now see the direction to work in.
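For readers who have not used pywinauto, here is a minimal sketch of what an installer check can look like. The pywinauto calls it uses (`Application(backend="uia").start`, `window`, `child_window`, `wait` and `click_input`) belong to its public API. The installer path, the window title pattern and the button captions are hypothetical placeholders, not Renga's actual installer. The import is done inside the function so the module loads on any platform. Actually running it requires Windows.

```python
from __future__ import annotations

# Hypothetical wizard: captions of the buttons to press, in order
INSTALLER_STEPS = ("Next", "Install", "Finish")


def run_installer(installer_path: str,
                  steps: tuple[str, ...] = INSTALLER_STEPS,
                  title_re: str = ".*Setup.*",
                  timeout: float = 120.0) -> None:
    """Start the installer and click through its wizard (Windows only)."""
    # Imported here so the module can be loaded on any OS; pywinauto itself needs Windows
    from pywinauto.application import Application

    app = Application(backend="uia").start(installer_path)
    wizard = app.window(title_re=title_re)
    wizard.wait("ready", timeout=timeout)
    for caption in steps:
        button = wizard.child_window(title=caption, control_type="Button")
        # Waiting also covers buttons that appear only after a long step, such as "Finish"
        button.wait("ready", timeout=timeout)
        button.click_input()
```

The captions in the step list are deliberately distinct. With two consecutive identical captions, the second lookup could still find the previous page's button before the wizard has moved on.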

Let's sum up

On our project, a tester spends about ⅔ of their time on manual testing, trying to find as many errors and weak spots as possible and checking whether all requirements have been met. Only after that do they move on to writing autotests, which ensure that this functionality will not break. With automated testing in place, we have protected ourselves against recurring regression errors from iteration to iteration. Developers are not afraid to push their code to the working branch, because they know the tests will point out problem areas within a few hours. And testers can devote themselves fully to testing new functionality.

This approach means we don't have to freeze the code for long before a release. In practice, one week is enough for us to test a release and fix critical errors. We manage this with a small number of test engineers and frequent releases: we ship three or four releases a year, while similar CAD systems ship one release a year on average.

And, of course, we haven't forgotten about our remaining problems. We will keep working on them so that Renga users can get new functionality even faster. If your company has faced similar problems and found ways to solve them, we would be glad to hear your advice.

Source: https://habr.com/ru/post/339352/