A few bash puzzles

Hi Habr!

In bash, you often run into situations where you think you have it all figured out, and then suddenly some kind of magic happens. You dig into it and find a whole layer of things you never suspected before...

Below are a few fun bash puzzles that will hopefully be interesting even for intermediate users. I don't expect to surprise the gurus... but still, before reading on, promise to answer each task at least out loud to yourself, without man / info / google.

1. A simple task.

What single command must be run so that the next command from the example prints Hello to your terminal?

```shell
$ echo "Hello" > 1
```

Answer

```shell
$ cd /proc/$$/fd
$ echo "Hello" > 1
Hello
```

How does it work under the hood?

Every process gets file descriptors 0, 1 and 2 for its standard streams (STDIN, STDOUT, STDERR).

We go to procfs (/proc) and find our process's subdirectory through /proc/$$. Here $$ is a special variable that holds the PID of the current shell. Finally, we enter the descriptor subdirectory /proc/$$/fd. The descriptors 0 (stdin), 1 (stdout) and 2 (stderr) live here. They are symbolic links to the terminal device, so you can write to them as to an ordinary character device. Descriptors of any other files the process opens also show up here right away.
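To see that any other open descriptor appears in the same directory, here is a minimal sketch. It assumes Linux with /proc mounted and is meant to run as a script, so $$ is the PID of the shell that owns descriptor 3. The scratch file is hypothetical and exists only for the demo. It opens a file on descriptor 3 and then writes to it through /proc/$$/fd/3 instead of through >&3:

```shell
#!/bin/bash
# Sketch (Linux only, /proc assumed mounted): write to an open descriptor
# through its /proc/$$/fd entry.
demo_file=$(mktemp)              # hypothetical scratch file for the demo
exec 3>"$demo_file"              # open descriptor 3 for writing in this shell
ls -l /proc/$$/fd                # 3 is now listed next to 0, 1 and 2
echo "via procfs" > /proc/$$/fd/3
exec 3>&-                        # close descriptor 3 again
result=$(cat "$demo_file")
rm -f "$demo_file"
echo "$result"                   # prints: via procfs
```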


The superuser can also write to the descriptors of other users' processes and put text on their terminals.

This is the mechanism behind the well-known write utility. It lets one user send a message to another straight to their terminal, without launching any messenger. To let write open another user's terminal, the write binary belongs to the tty group, which owns the terminal devices, and has the SGID bit set.

The system uses the same mechanism to notify logged-in users about reboots and other system alerts.

2. Not so much a task as a reminder question.

What will the following command output?

```shell
$ cat /home/*/.ssh/authorized_keys
```

Will it give an error? Print only the first file? Print all of them?

Where will the following command take us?

```shell
$ cd /home/*/.ssh
```

What is the result of the last command?

```shell
$ cp /home/*/.ssh/authorized_keys .
```

Answers

I'm sure everyone answered correctly:

cat will print all the matching files one after another, going through every matching directory.

cd will enter the first directory that matches the pattern. It doesn't iterate; it just takes the first one in alphabetical order. Depending on how it was built, a newer bash may refuse instead with "cd: too many arguments".

cp will copy the first matching file into the current directory and report an error for each of the rest. Within a single invocation, cp will not overwrite a file it has just created.

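Just in case, what happens if you run:

```shell
cp /home/*/.ssh/authorized_keys /home/*/ssh/authorized_new
```

Answer

No magic, just an error ;) With several users, cp receives several source files, and its last argument is not an existing directory, so it refuses to copy.

3. And this one is a really fun task!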

I even wanted to put it first, but decided to save it for dessert. So here's the situation:

```shell
# create nine empty files:
$ touch file{1..9}
$ ls -1
file1
file2
file3
file4
file5
file6
file7
file8
file9
```

Now let's list them with "ls -1" and keep the first five with a simple regular expression:

```shell
$ ls -1 | grep file[1-5]
```

The result is empty? What the...? Where are my files?

The correct command

Everything is very simple. This is the right way:

```shell
$ ls -1 | grep "file[1-5]"
file1
file2
file3
file4
file5
```

But why?

Everyone knows that wildcards use the characters *, ? and ~. Strictly speaking, ~ is tilde expansion rather than a wildcard.

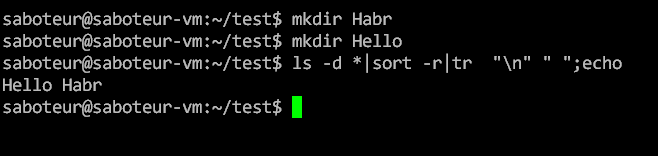

If there are files that match your wildcard, the shell expands the wildcard into a space-separated list of names. Only then does it run the command with the modified argument list. If there are no matching files, the pattern stays unchanged. A simple illustrative example:

```shell
$ mkdir test
$ cd test
$ echo file*
file*
$ touch file1
$ echo file*
file1
$ touch file2
$ echo file*
file1 file2
```

So with an unquoted wildcard, the same command may work, fail, or work in some baffling way, depending on which files happen to exist. The fix is simply to put the wildcard in quotes.

You don't have to quote everything, so plain words and regular expressions that contain no wildcard characters are often left unquoted, and that works fine.

All this is well known, but not everyone knows that *nix shells also support character sets of the form [abc] in wildcards.

In our case, the shell expanded the mask and passed grep several arguments. The command actually executed was "ls -1 | grep file1 file2 file3 file4 file5". grep takes the first argument, file1, as the pattern and searches for it in the files file2, file3, file4 and file5, ignoring its standard input. Those files are empty, so it prints nothing (thanks to mickvav for the clarification).
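To make the expansion visible, here is a minimal sketch that works in a scratch directory. It prints the exact arguments grep would receive with and without quotes, using printf as a stand-in that wraps each argument in brackets:

```shell
#!/bin/bash
# Sketch: see exactly which arguments grep gets, in a scratch directory.
cd "$(mktemp -d)" || exit 1
touch file{1..9}

# Unquoted: the shell turns file[1-5] into five separate arguments.
printf '[%s] ' grep file[1-5]; echo
# Quoted: grep receives the bracket expression itself.
printf '[%s] ' grep "file[1-5]"; echo

# With the quotes, grep filters the listing as intended.
ls -1 | grep "file[1-5]"
```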

If we run a command containing a wildcard in a directory with no matching files, the pattern stays unchanged, just as in the earlier example with '*':

```shell
$ cd ..; echo file[1-5]
file[1-5]
```

By the way, newcomers often make this mistake even with old, familiar masks. For example, they run the find command and get something like:

```shell
$ find . -name file*
find: paths must precede expression: file2
```
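A minimal sketch of the same find call with and without quotes, run in a scratch directory. It assumes GNU find, whose error message is the one quoted above:

```shell
#!/bin/bash
# Sketch: the same find call with an unquoted and a quoted -name pattern.
cd "$(mktemp -d)" || exit 1
touch file1 file2

# Unquoted: the shell runs "find . -name file1 file2", so find complains
# about the stray file2 argument.
find . -name file* || echo "find exited with status $?"

# Quoted: find receives the pattern file* and does the matching itself.
find . -name 'file*' | sort
```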

Conclusion: Use quotes!

Character sets in wildcards support both ranges and negation. Bash accepts both [^...] and the POSIX form [!...]. Examples:

```shell
# files 1 to 5:
$ echo file[1-5]
file1 file2 file3 file4 file5
# all files except 1 to 5:
$ echo file[^1-5]
file6 file7 file8 file9
```

4. What is a simple way to cut off a file extension?

Answer

The standard, popular way is the basename utility. It strips the leading directory path and, if you pass an extra argument, also strips that suffix from the right. For example, for file.txt and the suffix .txt:

```shell
$ basename file.txt .txt
file
```

But you don't need to launch a whole separate process for such a simple action. Bash's built-in variable expansions are enough:

```shell
$ filename=file.txt; echo ${filename%.*}
file
```

Or the other way around: cut off the name and keep only the extension:

```shell
$ filename=file.txt; echo ${filename##*.}
txt
```

How does it work?

% - removes the shortest matching suffix: scanning from the end (right to left), it cuts up to the first match of the pattern

%% - removes the longest matching suffix: scanning from right to left, it cuts up to the last match

# - removes the shortest matching prefix: scanning from the beginning (left to right), it cuts up to the first match

## - removes the longest matching prefix: scanning from left to right, it cuts up to the last match
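A minimal sketch that applies all four operators to one name with two dots, so the shortest and longest matches differ. The path in the last line is hypothetical and only shows the built-in equivalent of basename's directory stripping:

```shell
#!/bin/bash
# Sketch: the four trimming operators applied to one name with two dots.
filename="file.hello.txt"
echo "${filename%.*}"    # shortest suffix ".txt" removed        -> file.hello
echo "${filename%%.*}"   # longest suffix ".hello.txt" removed   -> file
echo "${filename#*.}"    # shortest prefix "file." removed       -> hello.txt
echo "${filename##*.}"   # longest prefix "file.hello." removed  -> txt

# The same idea strips the directory part, like basename (hypothetical path).
path="/tmp/dir/file.txt"
echo "${path##*/}"       # -> file.txt
```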

Thus "${filename%.*}" means: going from right to left, match any characters (*) up to the first dot, and cut off everything found, dot included.

If we had used "${filename%%.*}", names with more than one dot would be cut at the last dot reached (the leftmost one), removing too much:

```shell
$ filename="file.hello.txt"; echo "${filename%%.*}"
file
```

5. A little about the redirects <, << and <<<

The first one, "<", redirects input from a file or a named pipe. It has been known for ages and worn smooth by the calloused hands of hardened admins, so let's go straight to the two less common ones.

<<, the so-called here document, lets you put multi-line text directly in a script and redirect it as if it came from an external stream.

Example

```shell
$ cat <<EOF
> hello,
> Habr
> EOF
hello,
Habr
```

cat reads data from a file, or from STDIN when no file is given. We feed its STDIN with data that the here document generates right on the spot, so there is no need to create a separate file.

This really is a convenient way to call an external utility and feed it a lot of data. But lately I prefer <<< (the here string).

And here's why

First, <<< is more readable. Second, it can also pass multi-line data. Third... there is no third, but the first two were enough for me. Compare the two examples for readability:

```shell
#!/bin/bash
. load_credentials
sqlplus -s $connstring << EOF
set line 1000
select name, lastlogin from users;
exit;
EOF
```

```shell
#!/bin/bash
. load_credentials
SQL_REQUEST="
set line 1000
select name, lastlogin from users;
exit;"
sqlplus -s ${connstring} <<<"${SQL_REQUEST}"
```

In my opinion, the second option is potentially more convenient: we can define a multi-line variable in a convenient place and then use it with <<<.
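A minimal sketch showing that a here document and a here string deliver the same multi-line data. It uses bash's mapfile (bash 4+) as a stand-in consumer instead of sqlplus and counts the lines each one receives. The multi-line variable is written the same way as SQL_REQUEST above:

```shell
#!/bin/bash
# Sketch: the same two lines fed to mapfile via a here document and a here string.
mapfile -t from_heredoc <<EOF
hello,
Habr
EOF

text="hello,
Habr"                                     # multi-line variable, like SQL_REQUEST
mapfile -t from_herestring <<<"$text"     # <<< adds one trailing newline

echo "${#from_heredoc[@]} ${#from_herestring[@]}"   # prints: 2 2
```

Note that the here document's body and its closing EOF must start at the beginning of the line, while the here string fits on one line at any indentation.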

And with a short query, it looks even better:

```shell
#!/bin/bash
. load_credentials
sqlplus -s ${connstring} <<<"select name, lastlogin from users;exit;"
```

If the scripts are larger and the queries more complex, using <<< with variables makes the code much more readable. The variables can be declared in advance, in a dedicated, well-commented place.

Just imagine that you need to call several external commands, feeding each of them a pile of multi-line data, and place these calls inside several if / loop constructs with different levels of nesting.

A here document badly breaks the formatting there. Its body and closing delimiter cannot follow the indentation unless you use <<- and indent with tabs only, so the readability of such code will be terrible.

6. Is it possible to create a hard link to a directory?

Detailed answer

Of course! But not just anyone can. POSIX file systems make active use of directory hard links, and we see them all the time! Example:

```shell
# create the test directory
$ mkdir test
# look at the link count and inode of test
$ stat -c "LinkCount:%h iNode:%i" test
LinkCount:2 iNode:522366
```

How? Just created and already two links?

```shell
# go into test
$ cd test
# look at "."
$ stat -c "LinkCount:%h iNode:%i" .
LinkCount:2 iNode:522366
```

In both cases we see the same inode number, so test and the "." inside it are the same directory. And "." is not some special alias of bash, or even of the operating system. It is simply a hard link at the file system level. Let's check one more thing:

```shell
# create test2 inside test
$ mkdir test2
# go into test2
$ cd test2
# look at ".."
$ stat -c "LinkCount:%h iNode:%i" ..
LinkCount:3 iNode:522366
```

".." has the same inode, 522366, which belongs to the test directory, and the link count has grown to 3.

Bottom line: hard links to directories are an indispensable part of the file system and are used to build the directory tree. However, if users were allowed to create arbitrary hard links to directories, they could make a mistake and create a loop.

In that case, every command that walks the directory tree (find, du, ls -R) would go into an infinite loop, ended only by an interrupt or a stack overflow. That's why users cannot create them. On Linux, link(2) refuses with EPERM when the source is a directory, even for root.
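A minimal sketch that repeats the stat experiment in a scratch directory and then tries to add one more hard link to a directory. It assumes GNU coreutils (stat -c, and an ln that rejects directory hard links). Link counts can differ between file systems, so only the inode numbers are the point here:

```shell
#!/bin/bash
# Sketch (GNU coreutils): "test", "test/." and "test/test2/.." share one inode.
cd "$(mktemp -d)" || exit 1
mkdir -p test/test2
stat -c "%n LinkCount:%h iNode:%i" test test/. test/test2/..

# Creating one more hard link to a directory is refused.
ln test test_link || echo "ln exited with status $?"
```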

That's all I have.

Taking this opportunity, thanks in advance to everyone who takes part in the poll!

Update: the formatting has been slightly corrected; thanks to mickvav for fixing inaccuracies.

Source: https://habr.com/ru/post/339246/
