From web developer to machine learning specialist

Not everyone has the courage to change the mastered profession, which has already reached some peaks. After all, it requires a lot of effort, and a positive result is not guaranteed. A year and a half ago, we told how one of our server development team names was re-trained as an iOS programmer. And today we want to talk about an even more "sharp turn": Alan Chetter2 Basishvili, who was engaged in frontend development, was so interested in machine learning that he soon became a serious specialist, became one of the key developers of the popular Artisto project, and now he is engaged in recognizing faces in the Cloud Mail.Ru. Interviews with him read under the cut.

Why did you want to be a programmer?

Understanding that I want to be a programmer came to the class in the sixth and seventh thanks to the problem with the launch of one game. There was no one to consult with, and I sat at the computer for several days, but I solved the problem. And I was very pleased. I wanted to create my own game. Because began to attend local programming courses.

In which projects did you work on the front-end, what did you like most about what you used?

Started, like many, with CMS. This job itself has found me. I think many programmers, even if they have nothing to do with the web, at least once, but asked to make an online store. Next was a whole network of shops, there I wrote admin panel. This was done without frameworks, inventing bicycles, but it was very exciting. I also loved the design of software architecture. And then I went to work on the frontend. Wrote chats, p2p video calls and more.

What is common between a raven and a desk? In a sense, between the front end and the neural networks? Why so quickly managed to study them?

Nothing to do except to write code. A mathematical education helped. In addition, it is easier for a programmer to study deep learning, as it seems to me.

What is the reason for the interest in the transition from the frontend to the neural network?

I was always interested in this, and my graduation project was connected with machine learning, although I didn’t understand very much what I was doing. At Coursera, I took the course "Introduction to machine learning." Gradually, there was an understanding of how things I use every day work, such as personal recommendations, searching and much more, and understanding delighted me. This is probably one of the main motivators - the thirst to understand how modern machine learning works. And when I met deep learning, I lost interest in everything else. Frontend has become just a chore. I came to work, and, although I had quite interesting and challenging tasks, they faded into the background compared to what I did at night.

What was your class schedule?

At first, when there was only an introduction to machine learning, I spent only a weekend on it. Then I started to compete. Both weekends and nights were spent on him. Until three o'clock in the morning I usually sat and practiced. And after that, a fuse remained for quite a long time, so I continued to study neural networks every day at night. So I lived for six months.

What do you recommend to read about neural networks from the level that has emerged over the past six months, after which you can make a real contribution to the development?

Now there are many courses where everything is laid out on the shelves. They can give a very quick start. On neural networks there is a remarkable Stanford course cs231n , it is led by Andrey Karpaty. Then you can read and outline the “ Deep Learning ” by Jan Goodfellow. Another good resource is Neural Networks and Deep Learning . But, of course, it’s better to begin with the basics of ML.

What do you think, what training format is better now: books, courses, videos on YouTube, something else, maybe?

It seemed to me reasonable to finish the courses first, and then read the books, because the courses are pretty simple, they chew information there, and the books already give a full understanding. Today, there are many courses in machine learning. The one that I graduated from Coursera is called “Introduction to Machine Learning,” a team from Yandex, including Vorontsov, worked on it.

That is, you first need an understanding of basic concepts. And if you immediately start reading a book, it may be too difficult, and you will dig in the details. We must go from simple to complex, gradually delving.

It also helps to write code. Only then do you begin to notice important details and get real experience. You can read 50 articles, and the output you have something left in your head, but at the level of concept. And in order to really understand something and learn how to apply it, you need to sit down and start programming. The most effective is to participate in some kind of competition like Kaggle. Or just take and make your project based on what you read.

What blogs on neural networks do you read and why?

Carpathoi has a great blog , but new posts have not appeared for a long time. More OpenAI Blog , inFERENCe . I read on Twitter and Facebook the latest news from leading developers. I like the search engine Carpathian scientific publications. There are more recommendations and a very interesting “Top HYIP” column - these are the most frequently mentioned articles in tweets.

If you had the opportunity to master a new technology this year, what would it be?

I want to work more closely with GAN-architectures. This is an approach to learning generative networks. Suppose we want to generate believable images of bedrooms. To do this, we train the generator (the network accepting a random vector and outstanding images) to deceive the discriminator (the network is trained to distinguish real bedrooms from generated ones). That is, the networks confront each other and improve during training. In the end, the generator can produce images that can sometimes deceive a person. In practice, GANs showed themselves well in the task of increasing the resolution of images ( SRGAN ), and also allowed us to generate believable seals by sketching in pix2pix .

Is it possible to use neural network technology for the frontend. And if so, where?

Not so long ago, I stumbled upon the news about generating HTML and CSS in a picture using recurrent networks. I don’t really like to make up, so this idea seems interesting.

What other interesting applications of neural networks are there now? We all know about the processing of photos, videos, now still generating all kinds of faces. And what other applications are possible in principle?

Among other modern applications of neural networks, speech generation can be noted, for example, the WaveNet project. Already it turns out very much like a real speech. Work is also being actively carried out to automatically adjust the video sequence to a specific speech, for example, it will be possible to “remove”, as some politician says certain words. Soon we will have a world in which it will be incomprehensible what is fake and what is not.

How do you optimize your code?

Like the others: I profile and eliminate bottlenecks. If we are talking about optimization of the inference-network, then everything is usually done for us, except for cases with self-written layers. They have to tinker with them.

Do you have any personal project or, perhaps, a hobby that allows you to reboot your brain?

Not now. Work is interesting enough to be engaged in it as a hobby. To get distracted, I read books and watch serials.

What tasks for solving using neural networks do you consider the most difficult / interesting?

Unmanned vehicles - a very difficult and interesting problem. Such a system should work very accurately. To recognize cars, road, trees, pavement, pedestrians, the most difficult thing is to connect all this together and give the car a command where to turn, go faster or slower. Everything else the responsibility is very great. Replacing all cars with unmanned vehicles will be difficult, but this is a completely solvable task. Already there are cars with some pilotless skills. Mistakes, of course, still happen. Google is on the path of accumulating huge samples (cars drove 3 million miles). A large number of their cars drive around every day, gather information, identify marginal cases of AI errors, and experts retrain them all the time. As a result, they are now ready to go into commercial operation, they have launched a beta program . I think they probably will have the best drone. In addition, at first, a person can sit behind the wheel and control. And if you look at how people drive in Russia, then drones are much safer and they need to be implemented as soon as possible.

Medicine is also one of the most important areas for machine learning. Imagine that you will be examined not by one human doctor, but by the combined expert opinion of the whole world - Western, Asian, Ayurvedic, or whatever you like - medicine, which combines expertise and statistics from around the world. Or look at the accuracy with which the cancer was found in the biopsy images. And most importantly, these techniques are easy to scale.

Does artificial intelligence have a notion of updating in software? The first version, then rolled the second version? Once programmed - and he learns?

It must be emphasized that we are talking about weak artificial intelligence. Of course, he has the concept of renewal: we can replace the old neural network, which worked less efficiently. After all, a neural network is a conditional set of weights and operations that need to be done with them. These weights can be updated at least every day. Almost all of these algorithms are not trained online, they are specially trained once. Yes, there is reinforcement learning - methods that are designed to train on feedback from the environment. The technology is actively developing, although there are few examples of implementation.

That is, in this form of software can not be serious mistakes?

Of course it can. A classic example: the American army wanted to use neural networks to automatically recognize enemy tanks in camouflage among trees. The researchers obtained a small dataset of marked-up pictures and taught a classification model in photos of camouflaged tanks among trees and photos of trees without tanks. Using standard methods of supervised learning, the researchers trained the neural network to assign the necessary classes to the images and were convinced of its correct work on the test pending dataset. But good results on samples do not guarantee that retraining has not occurred, and in production everything will work correctly. In general, the researchers gave the result, and a week later the customer stated that the recognition result turned out to be completely random. It turned out that in the sample there were tanks with camouflage in cloudy weather, and forests - in the sun, and the network learned to distinguish weather conditions.

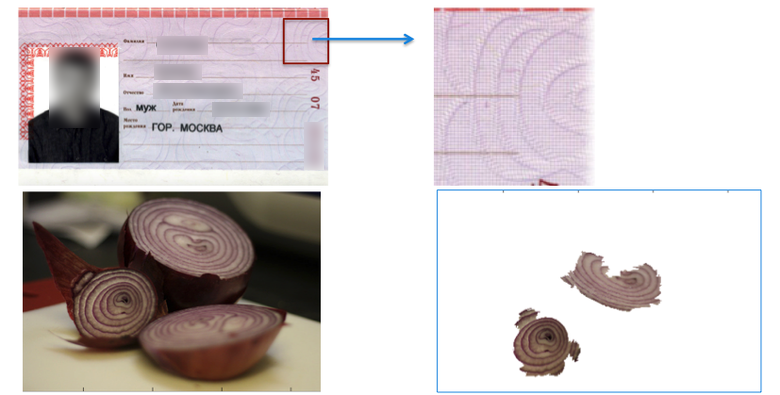

And there are many such examples. You can retrain for anything. For example, we recently recognized passports. The network has learned round patterns in the document. Then she saw a photo of a sliced onion, which has very similar patterns, and said that it was a passport. And such regional cases can be caught a lot and for a long time.

That is, it may be that the car in its previous version understood that a person is walking in the picture, and then a new version is rolled in and she no longer understands?

Easily. There are quite a few articles on how to update machine systems so that they do not forget previously acquired knowledge. For example, you can teach a model so that it still recognizes everything that has happened before, or does not greatly change the distribution of weights. Even if you start to train a model, it can go to another point of optimum, not related to the current model. Here you need to be very careful.

You worked on the project Artisto, tell how it started.

We interacted with Mail.Ru Search, we had a team, about five people at the first stage. The project was done on enthusiasm. For two weeks we got sane results, another two weeks brought it to the state required for production, in parallel we finished the backend. For the month released a product that works with video. Initially, we tried to implement photo processing, but then decided that we should not repeat Prisma, we need to create something new. Then people started to leave, because they had their own business.

What is the difference between processing photos and videos?

In Artisto video is divided into frames, and then they are stylized independently of each other. There is, for example, another video styling method that gives a smoother result. There it turns out more difficult with the so-called optical flow, when, for consistency of stylization, we keep track of where the pixels flow from frame to frame. In particular, we stylize one frame, and then use its modification to stylize the next. We know how the object is located in the next frame, move all the pixels that are in the picture, and start from this frame. Then we take the next frame, again the optical flow, move the pixels, start from this frame, stylize it. And so on.

In Artisto, not the entire frame is stylized, but only the changed fragments?

Almost so, but not quite. The video is processed in such a way that we keep the styling of the previous frame. The main problem is that you may have different stylization for each frame, and then the image will be “feverish”. To solve this problem, we trained the neural network in such a way that it was less sensitive to all sorts of noise, so that nothing changed from the change in lighting, and also to modify the loss function. Read habrapos on this topic.

What projects of our company already use machine learning?

In many: in the Post, Search, Odnoklassniki, VKontakte, Yulia, Bipkara. For example, it analyzes the text of publications in social networks and on sites indexed by our search engine. In general, the term “machine learning” refers to a wide range of disciplines, including deep learning, that is, neural networks. This direction is now very actively developing. Especially striking results were achieved in the field of computer vision. Older machine learning methods had low image recognition accuracy, but now there are already highly efficient approaches. Thanks to this, machine learning has received a new impetus of development, because photo recognition is a practical, understandable and close task, demonstrating the benefits of neural networks.

With the text things are worse, but not bad either. Machine translation is still inferior to man, and in recognition of images deep learning in many cases overtakes man. Neural networks do an excellent job with some computer games, especially simple ones based on reaction. With others it is weak. Especially when it comes to heavy strategies, where you need to manage a large number of units. Here, reinforcement learning does not work very effectively. I think we need more research on this topic.

But more recently, the guys from OpenAI thundered with their bot for Dota 2. The bot smashed the world's best players in 1 × 1 battles. Dota is a difficult game, because this is a significant event.

Not so long ago on social networks there was a very bright conflict between Mask and Zuckerberg regarding government regulation in the field of artificial intelligence. What camp are you adjoining to and why? Whose arguments seem stronger to you, whose weaker?

It seems to me that it is too early to talk about strong artificial intelligence. But when we get closer to him, it will be clear how to regulate it. While we are programming just some tasks. We do it ourselves and know what happens at the exit. That is, there will not be such that the machine that ran the search results, suddenly begins to build conspiracies.

Yes - unmanned car can knock down a pedestrian. But not on purpose, but because of an error. When we create a strong intellect, then the problem of his learning will arise in such a way that he shares the goals of humanity. For example, today during training we say for sure that the error in the sample was lower, the loss function is such and such. But in reality, we want the machine to recognize objects well. To do this, we minimize the loss function. The minimization of the loss function is a mathematical notation to indicate the network “do not make mistakes on a given set of images”. The network adjusts and acquires a generalizing ability, that is, it reveals patterns and learns to correctly predict a class for images that it has never seen. These patterns are wrong. In particular, the model can call a bow a passport, and so on. And in a person in the process of growing up moral principles are laid, which he validates and adapts on the move. So AI must somehow impart our moral principles.

What applications of neural networks on the market do you consider to be the most impressive / advanced and why?

Neural networks, in principle, are amazing, especially when you know how they work. On the market, image classifiers, object detectors and networks are often used for face recognition. Some solutions to these problems are impressive elegance and simplicity. I can also mention unmanned vehicles and machine translation. For example, in Google, the neural network uses an intermediate language through which it performs translations from other real languages (more precisely, we are talking about vector representations from which phrases are compiled in any other languages). The system receives a sentence in English at the input, forms sets of numbers, and then another part of the network converts these sets, for example, into a sentence in French. And when the same neural network learns to transform between many languages, it forms some kind of universal representation of the text, thanks to which the network can connect different languages with each other, which it did not learn to translate directly. For example, it can be trained to translate EN ⇄ FR and EN ⇄ RU - and then the model will be able to translate FR ⇄ RU.

What knowledge / skills should a neural network specialist have?

Need erudition in a number of mathematical disciplines and ML in general. The more knowledge a specialist has in his head, the easier and faster he can solve problems. In addition to the baggage of knowledge you need curiosity. Every day there are new architectures and approaches to learning neural networks. The specialist needs to keep their knowledge up to date.

And how are we in the company with vacancies for specialists in deep training?

In our company, we have specialists in machine learning in almost every business unit. In the Mail, we are actively looking for specialists to improve anti-spam and to create new "smart" functions (mainly working with text). We are also interested in specialists for the development of computer vision. In the Cloud - computer vision specialists. Another interesting area where we use in-depth training and are looking for specialized specialists is the development and improvement of recommender systems, big data analysis and work with text in various projects (for example, predicting the correct answers in Mail.Ru Search). ML exists both in the advertising campaign, in the formation of a smart tape of social networks and in the Search.

That is, in the company, all the functions of people are gradually replaced by artificial intelligence?

It is necessary to understand that programming from this is not simplified in any way, but only becomes complicated. Programmers will be in demand for a long time. In addition, specialists in AI should also be primarily programmers: it is much easier to teach a programmer how to create AI. And they will bring companies much more benefit, because they will very quickly implement their ideas, unlike pure researchers. In general, many companies, including ours, invest huge amounts of money in artificial intelligence. For example, now China wants to become a leader in this area until 2030. Baidu alone employs 1,300 machine learning professionals.

What direction in the field of neural networks do you consider the most promising?

The most promising is a strong AI. Here is the question: can we move from solving small specific problems to a strong artificial intelligence. How to combine all this? I'm not sure that the way to a strong artificial intelligence lies through the solution of simple problems. But in general, if we exclude a strong AI, then yes, it is the replacement of a person in all areas of activity.

Do you think it will be possible to create an AI, which in all respects will exceed a person? And if so, when?

It is the matter of time. According to surveys of scientists, its appearance can be expected in the 2050-2090s. But it seems to me that this does not work. We copy the individual functions of the brain, but I don’t know how to move from this to a strong AI. However, today we have already managed to achieve good results in some narrow directions, for example, in image recognition.

')

Source: https://habr.com/ru/post/339228/

All Articles