The Video Game Development Magic of id Software

From the translator: David Kushner wrote this article in 2002, a year before he published his famous book “Masters of Doom”. I found the article interesting because it contains implementation details of id's technologies that are missing from the book.

Over the past 12 years, the steadily growing realism of id Software's graphics has raised the bar for the entire video game industry. Among the innovative games are [bottom to top, right to left] Commander Keen (1990) [translator's note: the screenshot actually shows Dangerous Dave in the Haunted Mansion], Hovertank (1991), Wolfenstein 3D (1992), Doom (1993), Quake (1996) [translator's note: actually Quake II] and Return to Castle Wolfenstein (2001).


After midnight, the fight begins. Soldiers chase Nazis through the corridors of a castle. A flamethrower belches a monstrous tongue of flame. This is Return to Castle Wolfenstein, a computer game that is not only a spine-tingling adventure but also a marvel of science. It is the latest product from id Software (Mesquite, Texas). With its technologically innovative games, id has had a tremendous impact on the world of computing: from the high-speed, full-color, high-resolution graphics cards that are now standard in PCs to the rise of an army of programmers and online gamers who have left their mark on popular culture.

id made its name 10 years ago with the release of its original Nazi-hunting game, Wolfenstein 3D. In it and in its successors, Doom and Quake, players controlled embattled infantrymen who ran through mazes hunting monsters or each other. To bring these games to the consumer PC market and become the market leader, id skillfully simplified complex graphics problems and made masterful use of advances in graphics cards, computing power and memory size. To date, according to the NPD Group, the company's games have earned more than $150 million in sales.

It all started with a guy named Mario.

id owes much of its success to the technical skill of John Carmack, the company's 31-year-old lead programmer and co-founder, who has been creating games since adolescence.

In the late 1980s, specialized video game consoles dominated the electronic gaming industry. Most of the games were distributed on cartridges inserted into consoles, so writing them required expensive development systems and corporate support.

The only alternative was to program games for home computers, an underground world in which beginners could develop and distribute their own software. Creating games for these weak machines took nothing more than programming skill and a love of games.

Four people shared that passion for games: artist Adrian Carmack, programmer John Carmack (no relation), game designer Tom Hall, and programmer John Romero. While working at Softdisk, a small software publisher in Shreveport, Louisiana, these avid gamers began secretly creating their own games.

In those days, the PC was regarded purely as a work machine. After all, it could display only a handful of colors, and its sound came from a tiny, squeaky speaker. Nevertheless, the gamers at Softdisk decided that this was enough to make the PC a gaming platform.

First they decided to test whether they could recreate on a PC the biggest hit of the gaming industry at the time, Super Mario Brothers 3. This is a two-dimensional game for the Super Nintendo Entertainment System [translator's note: actually for the Nintendo Entertainment System], which was played on an ordinary TV screen. The goal of the game is to guide a mustachioed plumber named Mario as he jumps across platforms, dodges threats and runs through a world of blue sky and clouds. As Mario ran, the level scrolled from left to right so that the character always stayed near the middle of the screen. To achieve the required graphics speed, Nintendo used specialized hardware. “We had vivid examples of console games [such as Mario] that had smooth scrolling,” says John Carmack. “But [in 1990] no one could do the same on the IBM PC.”

After several nights of experimentation, Carmack figured out how to emulate side-scrolling on a PC. The screen image in the game was drawn, or rendered, by assembling an array of tiles 16 by 16 pixels in size. A screen background usually required more than 200 such square tiles: a blue sky tile here, a cloud tile there, and so on. The graphics of active elements, such as Mario, were drawn over the background.

Every attempt to redraw the entire background in each frame made the game too slow, so Carmack worked out how to redraw only a small number of tiles per frame, which sped the game up considerably. The technique exploited the capabilities of a recently released type of graphics card and rested on the observation that the player moves gradually, so most of what appears in the next frame has already been drawn in the current one.

The new graphics cards were known as Enhanced Graphics Adapter (EGA) cards. They had more on-board video memory than the older Color Graphics Adapter (CGA) cards and could display 16 colors at once instead of four. For Carmack, the extra video memory had two important consequences. First, although the card's memory was designed to hold a single screen image at relatively high resolution, it could hold several screens of low-resolution images, typically 320 by 200 pixels, which was quite enough for video games. By pointing to different addresses in video memory, the card could switch the image sent to the screen about 60 times per second, giving smooth animation without annoying flicker. Second, the card could move data around inside video memory much faster than image data could be copied from the PC's main memory to the card. This removed the main bottleneck in graphics speed.

Carmack wrote a so-called graphics display engine that exploited both of these properties to the full. It applied a technique originally developed in the 1970s for scrolling large images, such as satellite photos. First, the engine assembled the entire screen in video memory, tile by tile, plus a border one tile wide [see the “Scrolling with action” illustration]. If the player moved one pixel in any direction, the display engine moved the starting point of the image sent to the screen one pixel in the corresponding direction. No new tiles had to be drawn. When the player's movement finally pushed the screen image past the outer edge of the border, the engine still did not redraw most of the screen. Instead, it copied almost all of the finished image, the part that had not changed, to another part of video memory. Then it added the new tiles and moved the display starting point so that it pointed to the new image.

Scrolling with action: to create two-dimensional scrolling in a PC game, programmer John Carmack cheated a little by refusing to redraw the screen in every frame. He built the background in video memory from graphic tiles [left] but sent only part of the image to the screen [top left, inside the orange border]. When the player's character [the yellow circle] moved, the part of the background sent to the screen shifted to include tiles outside the border [top right]. New background elements were needed only after a shift of one full tile width. Then most of the background was copied to another area of memory [bottom right] and the screen image was centered on the new background.

In short, instead of forcing the PC to redraw tens of thousands of pixels every time the player moved, the engine usually had to change only a single address in memory, the one that pointed to the starting point of the screen image, or, in the worst case, draw a relatively thin strip of pixels for the new tiles. This left the CPU plenty of time for other tasks, such as drawing and animating moving platforms, hostile characters and the other active elements the player interacted with.
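
The bookkeeping behind this trick can be sketched in a few lines of Python. This is only a toy model under stated assumptions, not id's actual engine: the class name `ScrollBuffer`, its counters and the 320 by 200 window are hypothetical, and no real video memory is touched. It simply counts how often the cheap operation (changing the start address) is enough and how often a block copy plus a strip of new tiles is needed.

```python
import math

TILE = 16                      # tile edge in pixels, as described in the article
VIEW_W, VIEW_H = 320, 200      # assumed size of the visible window
COLS = math.ceil(VIEW_W / TILE) + 2   # buffer width in tiles, with a one-tile border
ROWS = math.ceil(VIEW_H / TILE) + 2   # buffer height in tiles, with a one-tile border


class ScrollBuffer:
    """Toy model of a tiled background held in video memory (hypothetical)."""

    def __init__(self):
        # Pixel offset of the visible window inside the buffer.
        # It starts one tile in, so there is a border on every side.
        self.origin = [TILE, TILE]
        self.start_address_changes = 0   # cheap operation
        self.block_copies = 0            # copies inside video memory
        self.tiles_redrawn = 0           # tiles drawn after the initial fill

    def scroll(self, dx, dy):
        """Move the visible window by (dx, dy) pixels."""
        if dx or dy:
            self.start_address_changes += 1
        # Horizontal movement exposes a new column (ROWS tiles),
        # vertical movement exposes a new row (COLS tiles).
        for axis, delta, new_tiles in ((0, dx, ROWS), (1, dy, COLS)):
            self.origin[axis] += delta
            # While the window has left the border, recenter by one tile:
            # copy the unchanged image and draw one strip of new tiles.
            while not 0 <= self.origin[axis] <= 2 * TILE:
                step = TILE if self.origin[axis] > 2 * TILE else -TILE
                self.origin[axis] -= step
                self.block_copies += 1
                self.tiles_redrawn += new_tiles


buf = ScrollBuffer()
for _ in range(100):           # walk 100 pixels to the right, one pixel per frame
    buf.scroll(1, 0)
print(buf.start_address_changes, buf.block_copies, buf.tiles_redrawn)
```

In this model, 100 frames of scrolling cost 100 start-address changes but only a handful of block copies and strips of new tiles, whereas redrawing the whole background would mean `COLS * ROWS` tiles in every frame.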

Hall and Carmack put together a PC clone of Mario, which they called Dangerous Dave in Copyright Infringement. But their employer, Softdisk, was not interested in publishing games for the then-expensive EGA cards and preferred to stick to the market for CGA applications. So the fledgling id Software went underground and used its technology to create its own side-scroller for the PC, Commander Keen. When the time came to release the game, they contacted game publisher Scott Miller, who persuaded them to use a distribution scheme as new as their engine: shareware.

In the 1980s, hackers began selling their programs under the shareware model, which relied on an honor code: try the software and, if you like it, pay for it. But the model was used only for utilitarian programs, such as file tools or word processors. Miller decided that games would be the next frontier. He reasoned: instead of giving away the whole game, why not give away only the first part to entice the player to buy the rest? id agreed to let Miller's company, Apogee, release the game. Before Commander Keen, Apogee's most successful shareware game had sold only a few thousand copies. But within a few months of Keen's release in December 1990, it had sold 30,000 copies. According to Miller, for the nascent world of PC games “it was like a small atomic bomb.”

Add depth

Meanwhile, programmer Carmack kept pushing the limits of graphics. He had been experimenting with 3D graphics since junior high school, when he created wireframe MTV logos on his Apple II. Since then, some game creators had been experimenting with three-dimensional first-person graphics, in which the flat tiles of two-dimensional games were replaced with polygons that formed the surfaces of the space around the player. The player no longer looked at the world from outside but saw it from within.

However, the results were mixed. PCs were too slow to redraw detailed 3D scenes as the player moved. They had to draw many surfaces for each frame sent to the screen, including surfaces hidden behind others closer to the player.

Carmack had an idea that would allow the computer to draw only those surfaces that are visible to the player. “If you are ready to limit the universality of your approach,” he says, “you can almost always achieve more.”

So instead of tackling the general problem of drawing arbitrary polygons located anywhere in space, he wrote a program that drew only trapezoids. At that point he was concerned only with walls (which appear as trapezoids in 3D), not with ceilings or floors.

For his program, Carmack simplified a technique that the powerful systems of the time used to render realistic images. In so-called ray tracing (raycasting), the computer draws a scene by extending lines from the player's position in the direction the player is looking. When a line strikes a surface, the pixel corresponding to that line on the player's screen is painted with the appropriate color. No computing time is wasted on drawing surfaces that would never be seen anyway. By drawing only walls, Carmack could use ray tracing to render scenes very quickly.

Carmack's final task was to furnish the 3D world with treasure chests, enemies and other objects. Again he simplified the problem, this time by using two-dimensional graphic icons called sprites. He had the computer scale each sprite according to the player's position, so that objects did not have to be modeled as three-dimensional figures (which would have slowed the game down considerably). By combining sprites with ray tracing, Carmack managed to put players into a fast 3D world. The result of this work was the game Hovertank, released in April 1991. It was the first fast 3D first-person shooter for the PC.

At about the same time, fellow programmer Romero heard about a new graphics technique called texture mapping. This technique covers a surface with a realistic texture instead of a flat, solid color. In the company's next game, Catacombs 3D, the player ran through a maze hurling fireballs at enemies with the help of another novelty: a hand drawn at the bottom of the screen, as if the player were looking at his own hand reaching into the computer screen. By adding a 3D hand to Catacombs, id Software made a serious psychological statement to its audience: you are no longer just playing the game, you are in it.

For id's next game, Wolfenstein 3D, Carmack refined his code. The key decision that let the graphics engine do as little work as possible was this: to simplify the drawing of walls, all of them had to be the same height.

This sped up ray tracing enormously. In standard ray tracing, one line is projected through space for every pixel displayed. At the time, the usual 320 by 200 pixel screen image required 64,000 lines. But because Carmack's walls were uniform from top to bottom, he needed to trace rays along only one horizontal plane, that is, only 320 lines [see the “Ray Tracing in 3D-rooms” diagram].

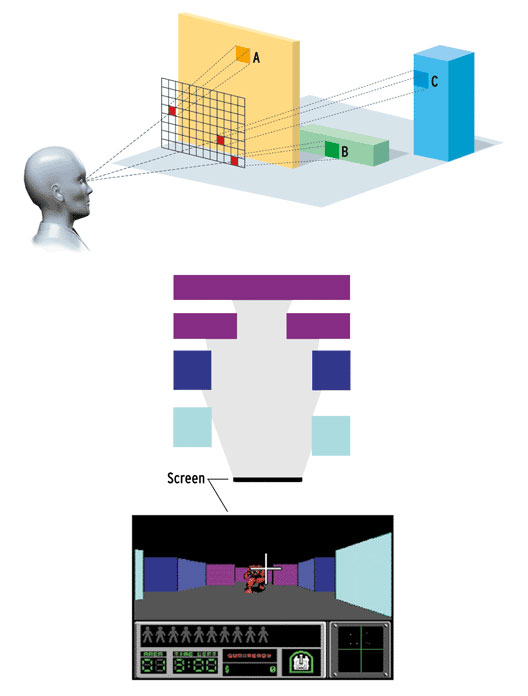

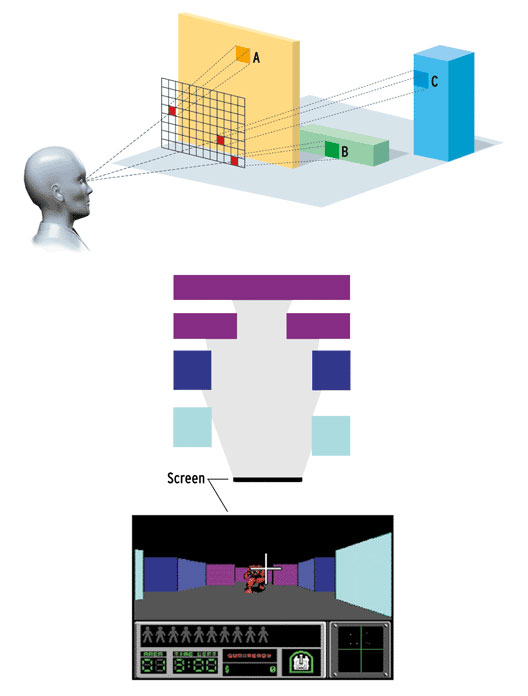

Ray tracing in 3D-rooms: to render three-dimensional rooms quickly without drawing hidden, and therefore unnecessary, surfaces, Carmack used a simplified form of ray tracing, a technique used to create realistic 3D images. In ray tracing, the computer draws a scene by extending lines from the player's point of view [above] through an imaginary grid until they strike the surfaces visible to the player. Only those surfaces are drawn.

Carmack simplified the technique by making all the walls the same height. This let him cast rays from the player in a single horizontal 2D plane [in the middle] and scale the apparent height of each wall according to its distance from the player, instead of determining every individual point on the wall. The result was the final 3D image of the walls [bottom].
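
The idea of one ray per screen column can be illustrated with a small Python sketch. It is not the Wolfenstein 3D code: the grid map, the 60-degree field of view, the function names `cast_ray` and `wall_heights`, and the rule "height = screen height / distance" are all assumptions made for the illustration. Rays are stepped through a grid of square cells until they hit a wall cell, and the distance alone determines how tall the wall slice appears.

```python
import math

# Hypothetical map: '#' is a wall cell, '.' is open floor.
MAP = [
    "#####",
    "#...#",
    "#...#",
    "#...#",
    "#####",
]
SCREEN_W, SCREEN_H = 320, 200
FOV = math.radians(60)          # assumed field of view


def _first_crossing(pos, cell, direction, delta):
    """Distance along the ray to the first grid line on one axis."""
    if direction == 0:
        return math.inf
    return ((cell + 1 - pos) if direction > 0 else (pos - cell)) * delta


def cast_ray(px, py, angle):
    """Distance from (px, py) to the first wall cell along `angle`."""
    dx, dy = math.cos(angle), math.sin(angle)
    cx, cy = int(px), int(py)
    step_x = 1 if dx > 0 else -1
    step_y = 1 if dy > 0 else -1
    # Ray length between two successive vertical / horizontal grid lines.
    delta_x = abs(1 / dx) if dx else math.inf
    delta_y = abs(1 / dy) if dy else math.inf
    side_x = _first_crossing(px, cx, dx, delta_x)
    side_y = _first_crossing(py, cy, dy, delta_y)
    while True:                 # the map is closed, so a wall is always hit
        if side_x < side_y:
            dist, side_x, cx = side_x, side_x + delta_x, cx + step_x
        else:
            dist, side_y, cy = side_y, side_y + delta_y, cy + step_y
        if MAP[cy][cx] == "#":
            return dist


def wall_heights(px, py, view_angle):
    """One ray per screen column; returns the wall slice height per column."""
    heights = []
    for col in range(SCREEN_W):
        offset = (col / SCREEN_W - 0.5) * FOV
        dist = cast_ray(px, py, view_angle + offset)
        perp = max(dist * math.cos(offset), 1e-6)   # avoid fish-eye distortion
        heights.append(min(SCREEN_H, int(SCREEN_H / perp)))
    return heights


heights = wall_heights(2.5, 2.5, 0.0)
print(len(heights), "rays instead of", SCREEN_W * SCREEN_H)
```

Each entry of `heights` is enough to draw one vertical slice of wall centered on the screen, which is why 320 rays can stand in for 64,000.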

Carmack's engine was incredibly fast, and Romero, Adrian Carmack and Hall decided to build a brutal game in which an American soldier had to kill Nazis while making his way through a maze of levels. Released in May 1992, Wolfenstein 3D became an instant sensation and something of a calling card for the PC. When Intel wanted to show journalists the speed of its new Pentium processor, it demonstrated a system running Wolfenstein.

Wolfenstein also gave players unexpected freedom: they could modify the game by adding their own levels and graphics. Instead of Nazi officers, for example, players could put in a star of American television, the purple dinosaur Barney. Carmack and Romero made no attempt to go after the creators of such “mutated” versions of Wolfenstein. As hackers themselves, they were only pleased by such activity.

Their next game, Doom, added two important effects that Carmack had experimented with while working on another 1992 game, Shadowcaster, for Raven. The first was texture mapping on ceilings and floors, not just on walls. The second was light attenuation. This kind of lighting meant that, as in real life, distant objects were darker, whereas in Wolfenstein every room was brightly lit, with no variation in shade.

By then, Carmack was programming for Video Graphics Array (VGA) cards, which had supplanted EGA cards. VGA allowed 256 colors, a big step up from EGA's 16, but still not enough for all the shading that dimming effects required.

The solution was to restrict the game's graphics to 16 colors so that 16 shades of each could be used. Carmack then programmed the computer to display different shades depending on the player's position in the room. The darkest shades were applied to the far parts of the room, so near surfaces were always brighter than distant ones. This gave the game a more immersive atmosphere.
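
The arithmetic of 16 colors times 16 shades filling a 256-entry palette can be shown with a short Python sketch. The palette layout (16 consecutive shades per color, brightest first), the cutoff `MAX_DIST` and the function name `shaded_index` are assumptions made for the illustration; the article does not describe how Doom actually arranged its palette.

```python
COLORS = 16        # base colors, as described in the article
SHADES = 16        # shades per color; 16 * 16 fills the 256 VGA palette entries
MAX_DIST = 32.0    # hypothetical distance at which a surface is darkest


def shaded_index(color, distance):
    """Palette index for `color` as seen from `distance` away.

    Assumes the palette stores each color as 16 consecutive entries,
    from brightest (shade 0) to darkest (shade 15).
    """
    if not 0 <= color < COLORS:
        raise ValueError("color must be in 0..15")
    shade = int(max(distance, 0.0) / MAX_DIST * SHADES)
    shade = min(SHADES - 1, shade)      # clamp everything far away to darkest
    return color * SHADES + shade


# The same wall color gets darker palette entries as it recedes.
for d in (0.0, 8.0, 16.0, 64.0):
    print(d, shaded_index(3, d))
```

With such a layout, dimming a pixel is just an index calculation, with no per-pixel color arithmetic.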

Both Carmack and Romero wanted to move away from the simple layouts of their previous games' levels. “My whole approach was: let's not repeat anything that was in Wolfenstein,” says Romero. “Let there be no uniform lighting, no uniform ceiling height, no walls at 90 degrees [to each other]. Let's show off Carmack's new technology by creating something completely different.”

Taking advantage of advances in computer speed and memory capacity, Carmack set out to draw polygons of more arbitrary shape than Wolfenstein's trapezoids. “It seemed that [the graphics engine] would not be fast enough,” he recalls, “so we had to look for a new approach ... I realized that, for the sake of speed, we still needed strictly horizontal floors and vertical walls.” The solution was a technique known as binary space partitioning (BSP). In the 1980s, Henry Fuchs, Zvi Kedem and Bruce Naylor, who worked at Bell Labs, popularized BSP techniques for rendering 3D models of objects on the screen.

The fundamental problem in turning a 3D model of an object into a screen image is determining which surfaces are visible. It comes down to one question: is surface Y in front of surface X or behind it? Traditionally, these calculations were repeated every time the position of the model changed.

BSP relies on the following observation: the model itself is static, and although different positions produce different images, the relationships between its surfaces stay the same. BSP makes it possible to work out these relationships once and then store them in such a way that finding which surfaces are hidden by others from any viewpoint requires only a lookup rather than a full recalculation.

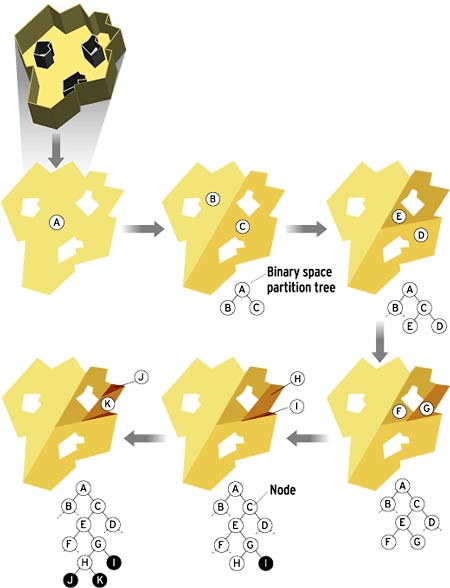

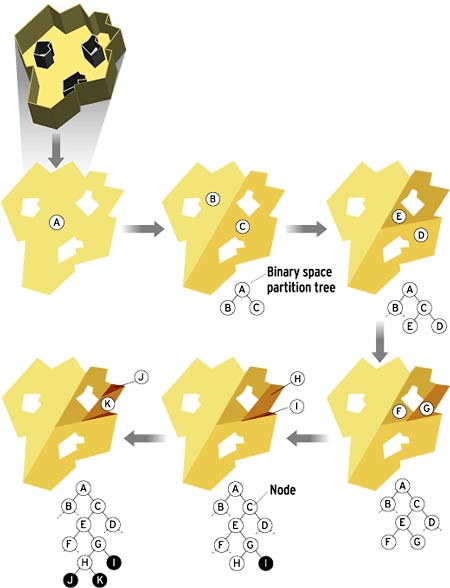

BSP takes the space occupied by the model and splits it into two parts. If either part contains more than one surface of the model, it is split again, and so on, until the space is completely divided into parts that each contain a single surface. The resulting branching hierarchy is called a BSP tree; it extends from the initial split of the space all the way down to the individual elements. By following a particular path through the nodes of the stored tree, you can derive the key information about how the surfaces relate to one another from a specific viewpoint.

Carmack wondered: could BSP be used to build not just a single 3D model of an object but an entire virtual world? Once again he simplified the problem by introducing a restriction: walls had to be vertical, and floors and ceilings had to be horizontal. That meant BSP could be used to split not the 3D space itself but a much simpler two-dimensional floor plan of that space, while still preserving all the important information about the relative positions of the surfaces [see the “Divide and conquer” diagram].

Illustration: Armand Veneziano

Divide and Conquer: “Doom treats all [the surfaces of the three-dimensional world] as lines,” says Carmack. “Splitting and sorting lines is much easier than sorting polygons ... The whole point is to take BSP [trees] and apply them to ... a plane rather than to the polygons of a 3D world, which greatly simplifies the task.”
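
Applying BSP to the lines of a floor plan can be sketched in Python as follows. This is a minimal illustration, not Doom's node builder: the names `side`, `split`, `build` and `front_to_back` are hypothetical, the first wall in each list is simply taken as the splitter, and walls are plain pairs of 2D points. The tree is built once; afterwards, the walls can be listed nearest-first for any viewpoint just by walking the tree.

```python
from dataclasses import dataclass
from typing import Optional


def side(seg, p):
    """> 0 if point p is in front of seg's line, < 0 if behind, 0 if on it."""
    (x1, y1), (x2, y2) = seg
    return (x2 - x1) * (p[1] - y1) - (y2 - y1) * (p[0] - x1)


@dataclass
class Node:
    splitter: tuple            # the wall whose line divides this region
    front: Optional["Node"]
    back: Optional["Node"]


def split(seg, splitter):
    """Cut seg where it crosses the splitter's line: (front part, back part)."""
    a, b = seg
    sa, sb = side(splitter, a), side(splitter, b)
    t = sa / (sa - sb)         # sa and sb have opposite signs here
    mid = (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
    return ((a, mid), (mid, b)) if sa > 0 else ((mid, b), (a, mid))


def build(segments):
    """Recursively partition wall segments; done once, ahead of time."""
    if not segments:
        return None
    splitter, rest = segments[0], segments[1:]
    front, back = [], []
    for seg in rest:
        sa, sb = side(splitter, seg[0]), side(splitter, seg[1])
        if sa >= 0 and sb >= 0:
            front.append(seg)
        elif sa <= 0 and sb <= 0:
            back.append(seg)
        else:                  # the wall straddles the line: cut it in two
            f, b = split(seg, splitter)
            front.append(f)
            back.append(b)
    return Node(splitter, build(front), build(back))


def front_to_back(node, viewpoint):
    """Walls ordered nearest-first for this viewpoint, with no re-sorting."""
    if node is None:
        return []
    if side(node.splitter, viewpoint) >= 0:
        near, far = node.front, node.back
    else:
        near, far = node.back, node.front
    return (front_to_back(near, viewpoint)
            + [node.splitter]
            + front_to_back(far, viewpoint))


A = ((0, 1), (4, 1))
B = ((0, 2), (4, 2))
C = ((0, 3), (4, 3))
tree = build([B, A, C])
print(front_to_back(tree, (2, 0)))   # seen from below: A, then B, then C
print(front_to_back(tree, (2, 5)))   # seen from above: C, then B, then A
```

The same stored tree yields a correct ordering from either side, which is the point of the technique: the expensive sorting is done once, and each frame only needs a traversal.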

In addition, Doom was designed to make it easier for hackers to extend the game with their own graphics and levels. Doom also added network play, which let several players fight one another over a local network or a modem.

The game was released in December 1993. Thanks to its multiplayer mode, extensibility, attractive graphics and well-made levels, which immersed the player in the futuristic world of a space marine fighting the legions of hell, it became a genuine phenomenon. The sequel, Doom II, brought more weapons and new levels but used the same engine. It was released in October 1994 and sold 1,500,000 copies at about $50 each. According to the NPD Group, it remains third on the list of best-selling computer games.

By the mid-1990s, Carmack felt that PC technology had become powerful enough for him to pursue two goals in the next game, Quake. First, he wanted to create an arbitrary 3D world in which true 3D objects could be viewed from any angle, unlike the flat sprites of Doom and Wolfenstein. The solution was to harness the power of the latest generation of PCs and use BSP to partition the volume of a truly three-dimensional space rather than the areas of a two-dimensional plan. Second, he wanted to create a game that could be played over the Internet.

Internet play was built on a client-server architecture. The server (which could run on any PC) handled the entire game environment: the rooms, the physics of moving objects, the positions of the players and so on. The client PC handled the player's keyboard and mouse input and the graphics and sound output. Online play, however, is subject to network delays and packet loss, which get in the way of fast, active play. To reduce the effect, id limited the packets it sent to only the most essential information, such as the player's position.

“The solution was to use an unreliable channel to transmit all the data,” says Carmack. “We took advantage of the continuous stream of packets and used [slower] reliable transmission only to set up the connection and correct errors.” Various data compression methods were also used to reduce the load on the network. The convenience of multiplayer in the new game, Quake, was rewarded with the emergence of a huge online community after its release in June 1996.
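
Why a continuous stream of packets tolerates an unreliable channel can be shown with a small Python sketch. It is not Quake's network protocol: the `Snapshot` and `Client` classes and the sequence-number scheme are assumptions made for the illustration. Because every packet carries the complete current position, a lost packet is simply superseded by the next one, and a packet that arrives late is discarded instead of being resent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """One state update from the server (hypothetical packet layout)."""
    seq: int      # sequence number, increasing with every update sent
    x: float      # player position at the time of sending
    y: float


class Client:
    """Keeps only the newest state it has seen."""

    def __init__(self):
        self.last_seq = -1
        self.position = None

    def receive(self, snap):
        """Apply a snapshot unless a newer one has already arrived."""
        if snap.seq <= self.last_seq:
            return False          # stale or duplicate: drop it silently
        self.last_seq = snap.seq
        self.position = (snap.x, snap.y)
        return True


# The server sends three updates; update 1 is delayed and arrives last.
sent = [Snapshot(0, 0.0, 0.0), Snapshot(1, 1.0, 0.0), Snapshot(2, 2.0, 0.0)]
arrival_order = [sent[0], sent[2], sent[1]]

client = Client()
for packet in arrival_order:
    client.receive(packet)
print(client.position)            # the position from the newest update
```

Nothing is ever retransmitted in this model, so a slow or lost packet never holds up the ones behind it; reliable delivery would only be needed for data that cannot be superseded, such as setting up the connection.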

The importance of graphics quality

Games drove the evolution of video cards. But it was multiplayer games in particular that created an insatiable demand for ever more sophisticated graphics systems and gave the market its strongest push. Business users did not care whether the graphics card they used to read e-mail refreshed the screen 8 times a second while their neighbor's card managed 10. But for a gamer playing Quake, where the difference between life and death was measured in tenths of a second, it mattered a great deal.

Quake soon became the de facto performance benchmark for the consumer video card industry. As David Kirk, lead researcher at NVIDIA, a leading graphics processor manufacturer (Santa Clara, Calif.), puts it: “id Software's games have always pushed progress.”

Quake II improved on its predecessor by taking advantage of hardware acceleration, where available on a PC, to move most of the work of rendering 3D scenes from the CPU to the video card. Quake III, released in December 1999, went a step further and became the first notable game to require hardware acceleration. This recalls how id was willing to “burn bridges” in 1990 by insisting that Commander Keen use EGA rather than CGA.

Carmack himself believes that the most important innovations came with Quake. Everything after Quake, he says, has essentially been refinement of the same theme. Return to Castle Wolfenstein, for example, is based on the Quake III engine, and most of its levels and game logic were created by an outside company.

“There were critical points of development along the way,” says Carmack. “First the move to the first-person view, then arbitrary 3D worlds, then hardware acceleration ... But we have dealt with the most important problems. All of these aspects can still be improved, but ... we can already create any world with a certain degree of fidelity. We can improve the quality, the special effects and all that. But we already have the fundamental tools needed to create games that are simulations of the world.”

Behind the action and aggression of id's games lies a revolution in desktop technology.

Over the past 12 years, the evolution of realism graphics Id Software has raised the bar for the entire video game industry. Among the innovator games are [bottom to top, right to left] Commander Keen (1990) [app. Trans .: in fact on the screenshot Dangerous Dave in the Haunted Mansion], Hovertank (1991), Wolfenstein 3D (1992), Doom (1993), Quake (1996) [approx. Trans .: in fact Quake II] and Return to Castle Wolfenstein (2001).

')

After midnight, the fight begins. Soldiers are chasing the Nazis through the corridors of the castle. The flamethrower belches a monstrous tongue of flame. This is Return to Castle Wolfenstein, a computer game that has become not only a spinal adventure, but also a miracle of science. She is the latest product from Id Software (Mesquite, Texas). Thanks to its technologically innovative games, id had a tremendous impact on the world of computer computing: from the usual high-speed, full-color high-resolution graphics cards used in modern PCs to the advent of an army of programmers and online gamers who contributed to popular culture.

id declared itself 10 years ago with the release of its original game about the hunt for the Nazis - Wolfenstein 3D. In it and in its followers — Doom and Quake — the players drove the threatened infantry soldiers running through the maze and hunting monsters or each other. In order to bring these games to the consumer PC market and win the title of market leader, id masterfully simplified complex graphic tasks and masterly used the technological progress of graphic cards, computing power and memory sizes. To date, according to the NPD Group, the game companies have earned more than 150 million in sales.

It all started with a guy named Mario.

Id owes much of its success to the technical skills of John Carmack, a 31-year-old lead programmer and co-founder of the company that creates games from adolescence.

In the late 1980s, specialized video game consoles dominated the electronic gaming industry. Most of the games were distributed on cartridges inserted into consoles, so writing them required expensive development systems and corporate support.

The only alternative was the programming of games for home computers, the underground world, in which beginners could develop and distribute their software. To create games on weak machines, all that was needed was programming skills and love for games.

There were four people passionately in love with games: artist Adrian Carmack, programmer John Carmack (not a relative), game designer Tom Hall, and programmer John Romero. While working for a small Softdisk software publisher (Shreveport, Louisiana), these avid gamers began to secretly create their own games.

In those days, the PC was treated as an exclusively working platform. In the end, he had only a dozen screen colors, and the sounds were made by a squeaking little speaker. Nevertheless, gamers from Softdisk realized that this would be enough to make a PC gaming platform.

At first they decided to test whether Super Mario Brothers 3 could recreate the largest gaming industry of that time on a PC. This is a two-dimensional game for the Super Nintendo Entertainment System [app. Trans .: in fact, for Nintendo Entertainment System] , which was played on a regular TV screen. The goal of the game is to drive a mustached plumber named Mario, jump on platforms, dodge threats and run around the world under a blue sky and clouds. When Mario ran, the level scrolled from left to right, so that the character was always around the middle of the screen. To achieve the required graphics speed, Nintendo used specialized hardware. “We had vivid examples of console games [such as Mario], in which smooth scrolling was present,” says John Carmack. "But [in 1990] no one could do the same on the IBM PC."

After several nights of experimentation, Carmack figured out how to emulate side-scrolling on a PC. The image of the screen in the game was rendered or rendered by assembling an array of tiles 16 by 16 pixels in size. Usually more than 200 such square tiles were required for a screen background: a blue sky tile here, a cloud tile there, and so on. The graphics of the active elements, such as Mario, were drawn over the background.

All attempts to redraw the entire background in each frame resulted in too slow work of the game, so Carmack came up with how to redraw only a small number of tiles for each frame, which significantly increased the speed of the game. This technique used the capabilities of the recently released type of graphic cards and was based on the observation that the player’s movement occurs gradually, that is, most of the objects in the next frame are already drawn in the current one.

The new graphics cards were known as the Enhanced Graphics Adapter (EGA) cards. They had more built-in video memory that the Color Graphics Adapter (CGA) had, and they could display at the same time not four, but 16 colors. For Carmack, the extended video memory had two important consequences. First, although the memory of the card was designed for a single screen image with a relatively high resolution, it could store several video screens of low-resolution images, usually 300 by 200 pixels, which is quite a lot for video games. Pointing to different addresses in video memory, the card could choose an image transmitted to the screen about 60 times per second, providing smooth animation without annoying flicker. Secondly, the card could move data inside the video memory much faster than it was possible to copy the image data from the PC's main memory to the card. This allowed to get rid of the main "bottleneck" speed graphics.

Carmack wrote the so-called graphics display engine, in which both of these properties were used to the maximum. It applied a technique originally developed in the 1970s to scroll large images, such as satellite photos. First, he collected the entire screen in video memory, tile behind tile, plus a border the width of one tile [see “Scrolling with action” illustration]. If the player moved one pixel in any direction, the display slider moved the starting point of the image transmitted to the screen one pixel in the corresponding direction. There was no need to draw new tiles. When the player's movements finally moved the screen image to the outside of the border, the engine still did not redraw much of the screen. Instead, he copied almost all the finished image - the part that has not changed - to another part of the video memory. Then he added new tiles and moved the original display point of the screen to point to a new image.

Scrolling with action: to create a two-dimensional scrolling in a PC game, programmer John Carmack cheated a bit because he refused to redraw the screen in each frame. He built a video-stored background from graphic tiles [left], but sent only part of the image to the screen [top left, inside the orange border]. When the player's character [the yellow circle] moved, the background transmitted to the screen was changed so as to include tiles outside the border [top right]. New background elements were required only after shifting to the width of one tile. Then most of the background was copied to another area of memory [bottom right] and the screen image was centered on a new background.

In short, instead of forcing the PC to redraw tens of thousands of pixels with each player movement, it was usually enough for the engine to change only a single address in the memory — one that pointed to the starting point of the screen image — or, in the worst case, draw a relatively thin strip. pixels with new tiles. Therefore, the computer CPU had a lot of time for other tasks, such as drawing and animation of moving game platforms, hostile characters and other active elements with which the player interacted.

Hall and Carmack put together a Mario clone for the PC, which they called Dangerous Dave in Copyright Infringement. But their employer, Softdisk, was not interested in publishing games for the then-expensive EGA cards; it preferred to stick to the market for CGA applications. So the nascent id Software went underground and used its technology to create its own side-scroller for the PC, Commander Keen. When it came time to release the game, they contacted game publisher Scott Miller, who convinced them to use a distribution scheme as new as their engine: shareware.

In the 1980s, hackers began selling their programs on the shareware model, which relied on an honor code: try the software, and if you like it, pay. But it was used only for utilitarian programs, such as file tools or word processors. Miller decided that games would be the next frontier. He reasoned: instead of giving away the entire game, why not give away only the first part to get the player to buy the rest? id agreed to let Miller's company, Apogee, release the game. Before Commander Keen, Apogee's most successful shareware game had sold only a few thousand copies. But within a few months of Keen's release in December 1990, the game had sold 30,000 copies. According to Miller, for the nascent world of PC games "it was like a small atomic bomb."

Add depth

Meanwhile, Carmack continued to push the possibilities of graphics. He had been experimenting with 3D graphics since junior high school, when he created wireframe MTV logos on his Apple II. Since then, some game creators had been experimenting with three-dimensional first-person graphics, in which the flat tiles of two-dimensional games were replaced with polygons that formed the surfaces of the space surrounding the player. The player no longer felt outside the world; he saw it from the inside.

However, the results had been mixed. PCs were too slow to redraw detailed 3D scenes as the player moved. They had to draw many surfaces for each frame sent to the screen, including those that were hidden behind other surfaces closer to the player.

Carmack had an idea that would allow the computer to draw only those surfaces that are visible to the player. “If you are ready to limit the universality of your approach,” he says, “you can almost always achieve more.”

So instead of tackling the general problem of drawing arbitrary polygons positioned anywhere in space, he developed a program that would draw only trapezoids. At that time he was concerned only with the walls (which appear as trapezoids in 3D), not the ceiling or the floor.

For his program, Carmack simplified a technique used on the high-end systems of the time to render realistic images. In so-called ray tracing (more precisely, raycasting), the computer draws the scene by extending lines from the player's location in the direction he is facing. When a line hits a surface, the pixel corresponding to that line on the player's screen is painted the appropriate color. No computing time is wasted on drawing surfaces that would never be seen. By drawing only walls, Carmack could use ray tracing to render scenes very quickly.

Carmack's last task was to decorate the 3D world with treasure chests, enemies and other objects. He again simplified the task, this time using two-dimensional graphic icons called sprites. He had the computer scale each sprite according to the player's position, so that objects did not have to be modeled as three-dimensional figures (which would have slowed the game down considerably). By combining sprites with ray tracing, Carmack managed to place players in a fast 3D world. The result of this work was the game Hovertank, released in April 1991. It was the first fast 3D first-person shooter for the PC.
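As a rough illustration of scaling a flat sprite by distance, the sketch below uses simple perspective division. The function name, the `focal` constant and the rule that a sprite appears at its native size when `distance == focal` are assumptions made for this example; the article does not give the formula Hovertank used.

```python
def sprite_screen_size(sprite_w, sprite_h, distance, focal=160.0):
    """Return the on-screen (width, height) in pixels of a flat sprite.

    Assumption for this sketch: the sprite is drawn at its native size
    when the player is exactly `focal` units away, and shrinks or grows
    in inverse proportion to the distance.
    """
    if distance <= 0:
        raise ValueError("distance must be positive")
    scale = focal / distance
    return max(1, round(sprite_w * scale)), max(1, round(sprite_h * scale))
```

With these assumed numbers, a 64 by 64 sprite is drawn at 32 by 32 pixels when the player is twice the focal distance away and at 128 by 128 pixels at half that distance, with no 3D model involved.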

At about the same time, his fellow programmer Romero heard about a new graphics technique called texture mapping. This technique covers a surface with a realistic texture instead of a flat, solid color. In the company's next game, Catacombs 3D, the player ran through a maze, shooting fireballs at enemies with the help of another novelty: a hand drawn at the bottom of the screen. It was as if the player were looking at his own hand, reaching into the computer screen. By adding the hand to Catacombs, id Software made a serious psychological statement to its audience: now you are not just playing the game, you are in it.

Instant sensation

For id's next game, Wolfenstein 3D, Carmack improved his code. The key decision that let the graphics engine do as little work as possible was this: to simplify the drawing of the walls, all of them had to be the same height.

This greatly accelerated ray tracing. With standard ray tracing, one line is projected through space for each displayed pixel. At that time, the usual 320 by 200 pixel screen image required 64,000 lines. But since Carmack's walls were the same from top to bottom, he only needed to trace along one horizontal plane, that is, only 320 lines [see the “Ray Tracing in 3D-rooms” diagram].

Ray tracing in 3D-rooms: to render three-dimensional rooms quickly without drawing hidden, and therefore unnecessary, surfaces, Carmack used a simplified form of ray tracing, a technique used to create realistic 3D images. In ray tracing, the computer draws a scene by extending lines from the player's point of view [above] through an imaginary grid until they hit the surfaces visible to the player. Only those surfaces are drawn.

Carmack simplified the technique by making all the walls the same height. This made it possible to cast rays from the player in only one horizontal 2D plane [in the middle] and to scale the apparent height of a wall according to its distance from the player, instead of determining each individual point of the wall. The result was the final 3D image of the walls [bottom].
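The idea of casting one ray per screen column and deriving the wall height from the hit distance can be sketched as follows. This is a minimal illustration, not the Wolfenstein 3D renderer: the tiny `WORLD` map, the 60-degree field of view, the fixed-step ray march and the cosine ("fisheye") correction are all assumptions chosen to keep the example short.

```python
import math

# Hypothetical top-down map: '#' is a wall block, '.' is empty floor.
WORLD = [
    "#####",
    "#...#",
    "#...#",
    "#...#",
    "#####",
]
SCREEN_W, SCREEN_H = 320, 200
FOV = math.radians(60)          # assumed field of view


def cast_ray(px, py, angle, step=0.01, max_dist=20.0):
    """March along one ray in the 2D plan until it enters a wall block."""
    dist = 0.0
    while dist < max_dist:
        dist += step
        x = px + math.cos(angle) * dist
        y = py + math.sin(angle) * dist
        if WORLD[int(y)][int(x)] == "#":
            return dist
    return max_dist


def wall_heights(px, py, facing):
    """One ray per screen column: 320 rays instead of 320 * 200 = 64,000."""
    heights = []
    for col in range(SCREEN_W):
        offset = (col / (SCREEN_W - 1) - 0.5) * FOV
        dist = cast_ray(px, py, facing + offset)
        dist *= math.cos(offset)            # assumed correction so flat walls look flat
        heights.append(min(SCREEN_H, int(SCREEN_H / dist)))
    return heights
```

Calling `wall_heights(2.5, 2.5, 0.0)` yields one wall height per screen column; each column is then drawn as a vertical strip of that height, centered on the horizon line.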

Carmack's engine was incredibly fast, and Romero, Adrian Carmack and Hall decided to create a brutal game in which an American soldier had to destroy Nazis while making his way through a maze of levels. Released in May 1992, Wolfenstein 3D became an instant sensation and something of a calling card for the PC. When Intel wanted to show journalists the speed of its new Pentium processor, it demonstrated a system running Wolfenstein.

In addition, Wolfenstein gave players unexpected freedom: they could change the game, adding their own levels and graphics. Instead of Nazi officers, players could, for example, use a star of American television, the purple dinosaur Barney. Carmack and Romero made no attempt to go after the creators of such "mutated" versions of Wolfenstein. As hackers themselves, they were only pleased by such activity.

Their next game, Doom, added two important effects with which Carmack had experimented while working on another 1992 game, Raven's Shadowcaster. The first was texture mapping on ceilings and floors, not just on walls. The second was diminished lighting. Such lighting meant that, as in real life, distant objects were darker, whereas in Wolfenstein every room was brightly lit, with no variation in shade.

By then, Carmack was already programming for Video Graphics Array (VGA) cards, which had supplanted EGA cards. VGA allowed the use of 256 colors, a big step forward from EGA's 16 colors, but still not enough to implement all the shading required for dimming effects.

The solution was to restrict the game's graphics to 16 base colors so that 16 shades of each could be used. Carmack then programmed the computer to display different shades based on the player's position in the room. The darkest shades were applied to the far parts of the room, so near surfaces were always brighter than distant ones. This added to the game's immersive atmosphere.
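One way to picture the 16 by 16 arrangement is a 256-entry palette in which each base color owns 16 consecutive slots, from brightest to darkest. The layout, the function name `palette_index` and the linear falloff up to `max_distance` are assumptions for this sketch; the article does not describe how Doom actually organized its palette.

```python
SHADES = 16   # shades per base color
HUES = 16     # base colors, so 16 * 16 = 256 palette entries in total


def palette_index(hue, distance, max_distance=32.0):
    """Pick a palette slot for a base color seen at a given distance.

    Assumed layout: slot = hue * 16 + shade, where shade 0 is the
    brightest and shade 15 the darkest.
    """
    if not 0 <= hue < HUES:
        raise ValueError("hue must be in range 0..15")
    t = min(max(distance / max_distance, 0.0), 1.0)   # 0.0 = near, 1.0 = far
    shade = min(SHADES - 1, int(t * SHADES))
    return hue * SHADES + shade
```

A surface of base color 3 would use slot 48 right next to the player, slot 56 halfway to `max_distance` and slot 63 at or beyond it, so the renderer only has to look up an index instead of computing lighting per pixel.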

Both Carmack and Romero wanted to get rid of the simple layouts used in the levels of their previous game. “My whole approach was this: let's not repeat anything that was in Wolfenstein at all,” says Romero. “Let there be no uniform light levels, no ceilings of the same height, no walls at 90-degree angles [to each other]. Let's demonstrate Carmack's new technology by creating something completely different.”

Taking advantage of the progress in computer speed and memory capacity, Carmack began work on drawing polygons with more arbitrary shapes than the trapezoids of Wolfenstein. "It seemed that [the graphics engine] would not be fast enough," he recalls, "so we had to look for a new approach ... I understood that in order to ensure speed, we still need to have strictly horizontal floors and vertical walls." The solution to the problem was a technique known as binary space partitioning (BSP). Henry Fuchs, Zvi Kedem and Bruce Naylor, who worked at Bell Labs, popularized BSP techniques in the 1980s for rendering 3D models of objects on the screen.

The fundamental problem of transforming a 3D model of an object into a screen image is to determine which surfaces are visible, that is, it comes down to calculations: where is surface Y relative to surface X, in front of or behind it? Traditionally, these calculations were performed with each change in the position of the model.

The BSP technique rests on the following observation: the model itself is static, and although different viewing positions produce different images, the relationships between its surfaces remain unchanged. BSP made it possible to determine these relationships once and then store them in such a way that finding which surfaces are hidden by others from any arbitrary point of view becomes a matter of looking up the relevant information rather than calculating everything anew.

BSP takes the space occupied by the model and splits it into two parts. If either part contains more than one surface of the model, it is split again, and so on, until the space is completely divided into parts that each contain a single surface. The resulting branching hierarchy is called a BSP tree, and it extends from the initial split of the space all the way down to the individual elements. By following a particular path through the nodes of the stored tree, you can obtain the key information about the relationships between the surfaces as seen from a specific point of view.

Carmack wondered: could BSP be used to create not just a single 3D model of an object, but an entire virtual world? He again simplified the task by introducing a restriction: walls had to be vertical, and floors and ceilings had to be horizontal. That way, BSP could be used to split not the 3D space itself but a much simpler two-dimensional plan of that space, while retaining all the important information about the relative positions of the surfaces [see the "Divide and conquer" diagram].

Illustration: Armand Veneziano

Divide and Conquer: “Doom perceives all [the surfaces of the three-dimensional world] as lines,” says Carmack, “splitting lines and sorting lines is much easier than sorting polygons ... The whole point is to take BSP [-tree] and apply them to ... the plane, and not to the polygons of the 3D world, which greatly simplifies the task.”
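Splitting and sorting lines on a 2D plan can be sketched as below. This is a textbook-style BSP over line segments written for illustration, not Doom's node builder: the `Seg` and `Node` types, the choice of the first segment as the splitter and the `front_to_back` traversal are all assumptions of this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Seg:
    x1: float
    y1: float
    x2: float
    y2: float
    name: str


@dataclass
class Node:
    seg: Seg
    front: Optional["Node"]
    back: Optional["Node"]


def side(seg, x, y):
    """Positive if (x, y) is in front of the line through seg, negative if behind."""
    return (seg.x2 - seg.x1) * (y - seg.y1) - (seg.y2 - seg.y1) * (x - seg.x1)


def split(seg, part, eps=1e-9):
    """Cut `part` along the line through `seg`; return (front_piece, back_piece)."""
    a = side(seg, part.x1, part.y1)
    b = side(seg, part.x2, part.y2)
    if a >= -eps and b >= -eps:
        return part, None
    if a <= eps and b <= eps:
        return None, part
    t = a / (a - b)                       # where the segment crosses the line
    mx = part.x1 + t * (part.x2 - part.x1)
    my = part.y1 + t * (part.y2 - part.y1)
    first = Seg(part.x1, part.y1, mx, my, part.name)
    second = Seg(mx, my, part.x2, part.y2, part.name)
    return (first, second) if a > 0 else (second, first)


def build(segs):
    """Done once per level: recursively partition the plan around a splitter."""
    if not segs:
        return None
    root, front, back = segs[0], [], []
    for s in segs[1:]:
        f, b = split(root, s)
        if f is not None:
            front.append(f)
        if b is not None:
            back.append(b)
    return Node(root, build(front), build(back))


def front_to_back(node, x, y, out):
    """Done every frame: list wall names nearest-first for a viewer at (x, y)."""
    if node is None:
        return out
    near, far = (node.front, node.back) if side(node.seg, x, y) >= 0 else (node.back, node.front)
    front_to_back(near, x, y, out)
    out.append(node.seg.name)
    front_to_back(far, x, y, out)
    return out
```

The expensive part, `build`, runs once when the level is prepared; at run time the viewer's position only selects which branch to visit first at each node, which is the lookup-instead-of-recalculation property described above.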

In addition, Doom was designed to make life easier for hackers who wanted to extend the game with their own graphics and levels. A network mode was also added, allowing several players to fight each other over a local network or via modem.

The game was released in December 1993. Thanks to its multiplayer mode, extensibility, attractive graphics and well-crafted levels, which immersed the player in the futuristic world of a space marine fighting the legions of hell, it became a real phenomenon. The sequel, Doom II, added more weapons and new levels, but used the same engine. It was released in October 1994 and sold 1,500,000 copies at about $50 each. According to the NPD Group, it remains third on the list of best-selling computer games.

Finish line

In the mid-1990s, Carmack felt that PC technology had become powerful enough to achieve the following two goals in the next game, Quake. He wanted to create an arbitrary 3D world in which real 3D objects can be viewed from any angle, in contrast to the flat Doom and Wolfenstein sprites. The solution was to use the power of the latest PC generation and use BSP to split the volume of a truly three-dimensional space, and not just areas of a two-dimensional plan. He also wanted to create a game that could be played over the Internet.

A client-server architecture was used for play over the Internet. The server (which could run on any PC) handled the entire game environment: rooms, the physics of moving objects, player positions, and so on. The client PC handled the player's keyboard and mouse input, as well as the graphics and sound output. However, an online game is subject to network packet delays and losses, and this interferes with fast, active play. To reduce the effect of this interference, id limited packet transmission to only the most essential information, such as the player's position.

“The solution was to use an unreliable channel to transmit all the data,” says Carmack. “We took advantage of the continuous transmission of packets and used [slower] reliable transmission only to establish communications and correct errors.” Various data compression methods were also used to reduce the load on the network. The convenience of the multiplayer mode of the new game, Quake, was rewarded by the emergence of a huge online community after its release in June 1996.
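Why a continuous stream over an unreliable channel tolerates loss can be shown with a small receiver that keeps only the newest state. The `Snapshot` type, its `seq` field and the `Receiver` class are hypothetical names for this sketch; the article does not describe Quake's packet format, and no real network code is involved here.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    seq: int            # sender-assigned sequence number (assumed field)
    position: tuple     # the essential state, e.g. a player's position


class Receiver:
    """Applies snapshots arriving over an unreliable channel."""

    def __init__(self):
        self.last_seq = -1
        self.position = None

    def on_packet(self, snap):
        # A late or duplicated packet is simply dropped: a newer snapshot
        # has already replaced whatever state it carried.
        if snap.seq <= self.last_seq:
            return False
        self.last_seq = snap.seq
        self.position = snap.position
        return True
```

Because every snapshot carries the current state rather than a change to it, a lost packet needs no retransmission: the next one to arrive makes it obsolete. Under this assumption, only connection setup and error recovery need the slower reliable path.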

The importance of graphics quality

Games stimulated the evolution of video cards. But it was multiplayer games in particular that created an insatiable demand for ever more sophisticated graphics systems, giving the market its most significant push. Business users did not care whether the graphics card they used to read e-mail updated the screen 8 times a second while their neighbor's card managed 10 times a second. But for a gamer playing Quake, where the difference between life and death was measured in tenths of a second, it mattered a great deal.

Quake soon became the de facto performance benchmark for the consumer video card industry. As David Kirk, chief scientist at NVIDIA (Santa Clara, Calif.), a leading graphics processor manufacturer, puts it: “Id Software games have always pushed progress.”

Quake II improved on its predecessor by taking advantage of hardware acceleration, which on a suitably equipped PC moved most of the work of rendering 3D scenes from the CPU to the video card. Quake III, released in December 1999, went a step further and became the first notable game in which hardware acceleration was mandatory. This recalls how id chose to “burn bridges” in 1990 by insisting that Commander Keen use EGA instead of CGA.

Carmack himself believes that the most important innovations were in Quake. Everything after Quake, he says, has essentially been a refinement of the same theme. For example, Return to Castle Wolfenstein was based on the Quake III engine, and most of the levels and game logic were created by a third-party company.

“There were critical points of development along this path,” says Carmack. “First, the transition to the first-person view, then arbitrary 3D worlds, then hardware acceleration ... But we coped with the most important tasks. All these aspects can still be improved, but ... we can already create any world with a certain degree of accuracy. We can improve the quality, special effects and all that. But we already have the fundamental tools needed to create games that are simulations of the world.”

Source: https://habr.com/ru/post/339220/
