Docker security issues

As the Docker ecosystem matures and stabilizes, the security of Docker is attracting more and more attention. When designing infrastructure, the question of Docker security cannot be avoided.

Docker has some great security features built in:

- Docker containers are minimal: one or a few running processes and only the necessary software. This reduces the likelihood of being affected by software vulnerabilities.

- Docker containers perform a specific task. What should run in a container is known in advance: directory paths, open ports, daemon configurations, mount points, and so on are all defined. Under these conditions it is easier to detect security-related anomalies. This principle goes hand in hand with microservice architecture and significantly reduces the attack surface.

- Docker containers are isolated from both the host and other containers. This is achieved through the Linux kernel's ability to isolate resources using cgroups and namespaces. There is a serious catch, however: the kernel has to be shared between the host and the containers (we will return to this topic a little later).

- Docker containers are reproducible. Thanks to the declarative build system, any administrator can easily figure out what a container was made from and how. You are much less likely to end up with a legacy system that was configured by nobody knows whom and that no one wants to configure again. Sounds familiar, doesn't it? ;)

However, Docker-based systems also have weaknesses. This article covers seven Docker security issues.

Each section is divided into the following parts:

- Threat description: attack vector and causes.

- Best Practices: What can be done to prevent threats of this type.

- Examples: simple, easily reproducible exercises for practice.

Docker host and kernel security

Description

In a compromised system, isolation and other container security mechanisms are unlikely to help. In addition, containers are designed to use the host kernel. For many reasons already familiar to you, this improves efficiency, but from a security point of view it is a threat that must be dealt with.

Best practices

The topic of securing a Linux host is extensive, and a lot of literature has been written about it. As for Docker specifically:

- Make sure that the configuration of the host and the Docker engine is secure (access is restricted and granted only to authenticated users, the communication channel is encrypted, etc.). I recommend using the Docker Bench audit tool to check the configuration against best practices.

- Update the system in a timely manner, and subscribe to security updates for the operating system and other installed software, especially software installed from third-party repositories (for example, container orchestration systems, one of which you have probably already installed).

- Use a minimal host system designed specifically for running containers, such as CoreOS, Red Hat Atomic, RancherOS, etc. This reduces the attack surface and gives you convenient features such as running system services in containers.

- Use a Mandatory Access Control system to prevent unwanted operations both on the host and in containers. Tools such as Seccomp, AppArmor, or SELinux will help here.

Examples:

Seccomp allows you to limit the actions available to the container, in particular - system calls. This is something like a firewall, but for the kernel call interface.

Some privileges are blocked by default. Try the following commands:

    # docker run -it alpine sh
    / # whoami
    root
    / # mount /dev/sda1 /tmp
    mount: permission denied (are you root?)

or

    / # swapoff -a
    swapoff: /dev/sda2: Operation not permitted

It is possible to create a custom Seccomp profile, for example one that disallows chmod calls.

Let's download the default Seccomp profile for Docker:

    https://raw.githubusercontent.com/moby/moby/master/profiles/seccomp/default.json

While editing the file, you will see the whitelist of system calls (around line 52); remove chmod, fchmod and fchmodat from it.
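The manual edit can also be scripted. The sketch below is a minimal, line-oriented approach. It assumes the profile lists one quoted syscall name per line and that the removed names are not the last entry of their array; otherwise a dangling comma would be left behind and the JSON would no longer parse. The function name `strip_syscalls` and the output file name `no-chmod.json` are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: delete the chmod-family entries from a seccomp
# profile read on stdin and write the result to stdout.
# Assumes one quoted syscall name per line in the input file.
strip_syscalls() {
  sed -E '/^[[:space:]]*"(chmod|fchmod|fchmodat)",?[[:space:]]*$/d'
}

# Usage (default.json downloaded from the URL above):
#   strip_syscalls < default.json > no-chmod.json
#   docker container run --rm -it --security-opt seccomp=./no-chmod.json alpine sh
```

Writing to a separate file keeps the downloaded default profile intact, which makes it easy to diff the two and see exactly what was removed.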

Now run the container with this profile and check the work of the established restrictions:

    # docker container run --rm -it --security-opt seccomp=./default.json alpine sh
    / # chmod +r /usr
    chmod: /usr: Operation not permitted

Going beyond the Docker container

Description

The term “container breakout” denotes a situation in which a program running inside a Docker container overcomes the isolation mechanisms and gains additional privileges or access to confidential information on the host. To prevent such breakouts, reduce the set of privileges a container receives by default. For example, the Docker daemon runs as root by default, but it is possible to create a user namespace or remove potentially dangerous container privileges.

Quote from the article about vulnerabilities in the default Docker configuration:

“This experimental exploit is based on the fact that the kernel allows any process to open a file by its inode. On most systems, the inode of the root directory (/) is 2. This makes it possible to walk the directory tree of the host file system until the desired object is found, for example, a password file.”

Best practices

- Remove the privileges (capabilities) that the application does not need.

- CAP_SYS_ADMIN is especially dangerous, since it grants the right to perform a significant number of superuser-level operations: mounting file systems, entering kernel namespaces, ioctl operations, and more.

- To make container privileges equivalent to those of an ordinary user, create an isolated user namespace for your containers. If possible, avoid running containers with uid 0.

- If you still cannot do without a privileged container, make sure that it is installed from a trusted repository (see the section “Docker image authenticity” below).

- Carefully monitor the mounting of potentially dangerous host resources: /var/run/docker.sock, /proc, /dev, etc. These resources are usually needed for operations related to the basic functionality of containers. Make sure you understand why and how process access to them should be limited. Sometimes it is enough to mount them read-only. Never grant write access without asking why it is needed. In any case, Docker uses copy-on-write so that changes made in a running container do not reach its base image or other containers created from that image.

Examples

The root user of a Docker container can create devices by default. You will probably want to forbid this:

    # sudo docker run --rm -it --cap-drop=MKNOD alpine sh
    / # mknod /dev/random2 c 1 8
    mknod: /dev/random2: Operation not permitted

Root can also override the permissions of any file. It is easy to check: create a file as any ordinary user, run chmod 600 on it (read and write are available only to the owner), switch to root, and make sure that the file is still accessible to you.

This can also be fixed, which matters especially if you have folders with sensitive user data.

    # sudo docker run --rm -it --cap-drop=DAC_OVERRIDE alpine sh

Create a regular user and go to their home directory. Then:

    ~ $ touch supersecretfile
    ~ $ chmod 600 supersecretfile
    ~ $ exit
    ~ # cat /home/user/supersecretfile
    cat: can't open '/home/user/supersecretfile': Permission denied

Many security scanners and malware craft their own network packets from scratch. This behavior can be disabled as follows:

    # docker run --cap-drop=NET_RAW -it uzyexe/nmap -A localhost
    Starting Nmap 7.12 ( https://nmap.org ) at 2017-08-16 10:13 GMT
    Couldn't open a raw socket. Error: Operation not permitted (1)

A complete list of privileges can be found here. I recommend that you familiarize yourself with it and remove all the privileges that your containers do not need.
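One common way to apply this recommendation is to drop every capability and add back only what is needed, using the `--cap-drop=ALL` and `--cap-add` options of `docker run`. The sketch below only builds the flag list. The helper name `cap_flags` and the choice of NET_BIND_SERVICE are illustrative assumptions, not something the examples above prescribe.

```shell
#!/bin/sh
# Hypothetical helper: print docker run flags that drop every capability
# and add back only the ones named as arguments.
cap_flags() {
  flags="--cap-drop=ALL"
  for cap in "$@"; do
    flags="$flags --cap-add=$cap"
  done
  printf '%s\n' "$flags"
}

# Usage: a service that only needs to bind to a port below 1024.
#   docker run --rm -d $(cap_flags NET_BIND_SERVICE) <image>
```

Starting from an empty set and adding capabilities back one by one tends to be easier to review than a long list of individual `--cap-drop` options.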

If you create a container without a user namespace, then by default the processes running inside the container run as the superuser from the host's point of view.

    # docker run -d -P nginx
    # ps aux | grep nginx
    root 18951 0.2 0.0 32416 4928 ? Ss 12:31 0:00 nginx: master process nginx -g daemon off;

However, we can create a separate user namespace. To do this, add the following key to the /etc/docker/daemon.json file (be careful to follow JSON syntax rules):

    "userns-remap": "default"

Restart Docker. This will create a user dockremap. The new namespace starts out empty: existing images and containers are not visible in it.

    # systemctl restart docker
    # docker ps

Run the nginx image again:

    # docker run -d -P nginx
    # ps aux | grep nginx
    165536 19906 0.2 0.0 32416 5092 ? Ss 12:39 0:00 nginx: master process nginx -g daemon off;

Now the nginx process runs in a different user namespace. Thus, we have improved the isolation of containers.
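For reference, a complete minimal daemon.json containing only this key could look like the file written below. This is a sketch under assumptions: it writes to a scratch path held in the made-up variable `DAEMON_JSON` rather than to /etc/docker/daemon.json, and it would overwrite any other settings if pointed at a real configuration file, so merge by hand in that case.

```shell
#!/bin/sh
# Write a minimal daemon.json that enables user namespace remapping.
# DAEMON_JSON is an illustrative variable; the real file lives at
# /etc/docker/daemon.json and may already contain other keys.
DAEMON_JSON="${DAEMON_JSON:-${TMPDIR:-/tmp}/daemon.json.example}"

cat > "$DAEMON_JSON" <<'EOF'
{
  "userns-remap": "default"
}
EOF

# After copying the file into place and restarting Docker, the
# subordinate ID ranges of the dockremap user can be inspected with:
#   grep dockremap /etc/subuid /etc/subgid
```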

Docker image authenticity

Description

You can find many Docker images on the Internet that do all sorts of useful and cool things, but if you download images without using any mechanisms of trust and authentication, you are essentially running arbitrary software on your systems.

- Where did this image come from?

- Do you trust its creators? What security policies do they use?

- Do you have an objective cryptographic proof that the image was really created by these people?

- Are you sure that no one changed the image after it was uploaded?

Docker will launch whatever you ask for, so encapsulation will not help here. Even if you use only images that you built yourself, it makes sense to check that nobody has changed them after creation. The solution ultimately comes down to the classic PKI-based chain of trust.

Best practices

- Common sense: do not run untested software or software from untrusted sources.

- Deploy a trust server using one of the Docker registry servers listed in this overview of Docker security tools.

- Enforce mandatory digital signature verification for any image that is pulled or run on the system.

Examples

Deploying a full-fledged trust server is beyond the scope of this article, but you can start right now by signing your images.

If you don't have an account on Docker Hub, get one.

Create a directory with a simple Dockerfile with the following contents:

    # cat Dockerfile
    FROM alpine:latest

Build the image:

    # docker build -t <youruser>/alpineunsigned .

Log in to your Docker Hub account and upload the image:

    # docker login
    […]
    # docker push <youruser>/alpineunsigned:latest

Enable Docker Content Trust:

    # export DOCKER_CONTENT_TRUST=1

Now try to pull the image you just uploaded:

    # docker pull <youruser>/alpineunsigned

You should receive the following error:

    Using default tag: latest
    Error: remote trust data does not exist for docker.io/<youruser>/alpineunsigned: notary.docker.io does not have trust data for docker.io/<youruser>/alpineunsigned

With DOCKER_CONTENT_TRUST turned on, build the image again. Now it will be signed by default.

    # docker build --disable-content-trust=false -t <youruser>/alpinesigned:latest .

Now you can upload and download signed images without any security warnings. The first time you upload a trusted image, Docker will create a root key for you. You will also need a repository key. In both cases, you will be asked to set a passphrase.

Your private keys are saved in the ~/.docker/trust directory; restrict access to them and create a backup.

DOCKER_CONTENT_TRUST is an environment variable that will disappear after the terminal session is closed. However, a trust check must be implemented at every stage of the process - from assembling images and placing them in registries to downloading and executing on servers.
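Because the variable is lost with the session, one simple way to keep it enabled for interactive shells is to add the export line to a shell startup file. The sketch below does this idempotently. The helper name `enable_content_trust` and the choice of `~/.profile` as the default target are assumptions; CI jobs or service units would need the variable set in their own configuration instead.

```shell
#!/bin/sh
# Hypothetical helper: append the export line to a startup file once.
enable_content_trust() {
  profile="${1:-$HOME/.profile}"
  line='export DOCKER_CONTENT_TRUST=1'
  # -x matches the whole line, -F treats the pattern as a fixed string.
  if ! grep -qxF "$line" "$profile" 2>/dev/null; then
    printf '%s\n' "$line" >> "$profile"
  fi
}

# Usage:
#   enable_content_trust            # edits ~/.profile
#   enable_content_trust ~/.bashrc  # or another startup file
```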

Resource abuse

Description

On average, containers are far more numerous than virtual machines. They are lightweight, which lets you run a lot of them even on very modest hardware. This is certainly an advantage, but the other side of the coin is serious competition for host resources. Software bugs, design flaws, and attacks can lead to Denial of Service. To prevent this, you must configure resource limits properly.

The situation is made worse by the fact that several resources need to be monitored: CPU, memory, disk space, network load, I/O, swap, etc. The kernel also has less obvious resources, such as user identifiers (UIDs).

Best practices

By default, most containerization systems leave these resource limits disabled. In production, however, configuring them is a must. I recommend following these principles:

- Use the resource limiting features that come with the Linux kernel and/or the containerization system.

- Try to load-test the system before putting it into production. Both synthetic tests and replay of real production traffic are used for this. Load testing is vital for finding the limits and the normal workloads.

- Deploy monitoring and alerting for Docker. I am sure that in case of resource abuse (malicious or not) you would prefer to receive a timely warning rather than hit the wall at full speed.
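As a rough illustration of the first principle, the sketch below assembles a `docker run` command line that caps memory, CPU and the number of processes. `--memory`, `--memory-swap`, `--cpus` and `--pids-limit` are standard `docker run` options. The helper name `limited_run_cmd` and the default values are made up for this example; real values should come from your own load tests.

```shell
#!/bin/sh
# Hypothetical helper: print (not execute) a docker run command with limits.
#   $1 image, $2 memory limit, $3 CPU quota in cores, $4 max process count
limited_run_cmd() {
  image="$1"
  mem="${2:-512m}"
  cpus="${3:-1.0}"
  pids="${4:-100}"
  # Setting --memory-swap equal to --memory leaves the container no swap.
  printf 'docker run -d --memory=%s --memory-swap=%s --cpus=%s --pids-limit=%s %s\n' \
    "$mem" "$mem" "$cpus" "$pids" "$image"
}

# Usage: review the printed command, then run it.
#   limited_run_cmd nginx 256m 0.5 64
#   eval "$(limited_run_cmd nginx 256m 0.5 64)"
```

Printing the command instead of executing it directly keeps the limits visible and reviewable before anything is started.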

Examples

Control groups (cgroups) are a Linux kernel mechanism that lets you limit the access of processes and containers to system resources. Some limits can be set from the Docker command line:

    # docker run -it --memory=2G --memory-swap=3G ubuntu bash

This command limits the container to 2 GB of memory (3 GB in total for main memory and swap). To check the limits, run a load simulator, for example the stress program from the Ubuntu repositories:

    root@e05a311b401e:/# stress -m 4 --vm-bytes 8G

In the output of the program you will see the line 'FAILED'.

The following lines should appear in the host syslog:

    Aug 15 12:09:03 host kernel: [1340695.340552] Memory cgroup out of memory: Kill process 22607 (stress) score 210 or sacrifice child
    Aug 15 12:09:03 host kernel: [1340695.340556] Killed process 22607 (stress) total-vm:8396092kB, anon-rss:363184kB, file-rss:176kB, shmem-rss:0kB

With docker stats you can check current memory consumption and the configured limits. In Kubernetes, the pod definition lets you reserve the resources the application needs for normal operation and also set limits. See requests and limits:

    [...]
    - name: wp
      image: wordpress
      resources:
        requests:
          memory: "64Mi"
          cpu: "250m"
        limits:
          memory: "128Mi"
          cpu: "500m"
    [...]

Container Vulnerabilities

Description

Containers are isolated black boxes. As long as they do their job, it is easy to forget which programs and which versions are running inside. A container can cope with its duties perfectly from an operational point of view while running vulnerable software. These vulnerabilities may have been fixed upstream long ago, but not in your local image. Unless you take appropriate measures, problems of this kind can go unnoticed for a long time.

Best practices

Treating containers as immutable atomic parts of the system is reasonable from an architectural point of view; to ensure security, however, their contents should be checked regularly:

- To get the latest vulnerability fixes, update and rebuild your images regularly. Of course, do not forget to test them before sending them to production.

- Patching running containers is considered bad form. It is better to rebuild the image with each update. Docker has a declarative, efficient and easy-to-understand build system, so this procedure is actually simpler than it seems at first glance.

- Use software that regularly receives security updates. Anything you install manually, bypassing your distribution's repositories, you will have to update yourself in the future.

- Gradual rolling updates without service interruption are a fundamental feature of building systems with Docker and microservices.

- User data is separated from container images, which makes the upgrade process safer.

- Keep it simple. Simple systems need updates less often. The fewer components a system has, the smaller the attack surface and the easier the upgrade. Split containers up if they become too complex.

- Use vulnerability scanners. There are plenty of them now, both free and commercial. Try to keep abreast of security developments in the software you use: subscribe to mailing lists, alert services, etc.

- Make security scanning a mandatory step in your CI/CD pipelines and automate as much as possible; do not rely only on manual checks.

Examples

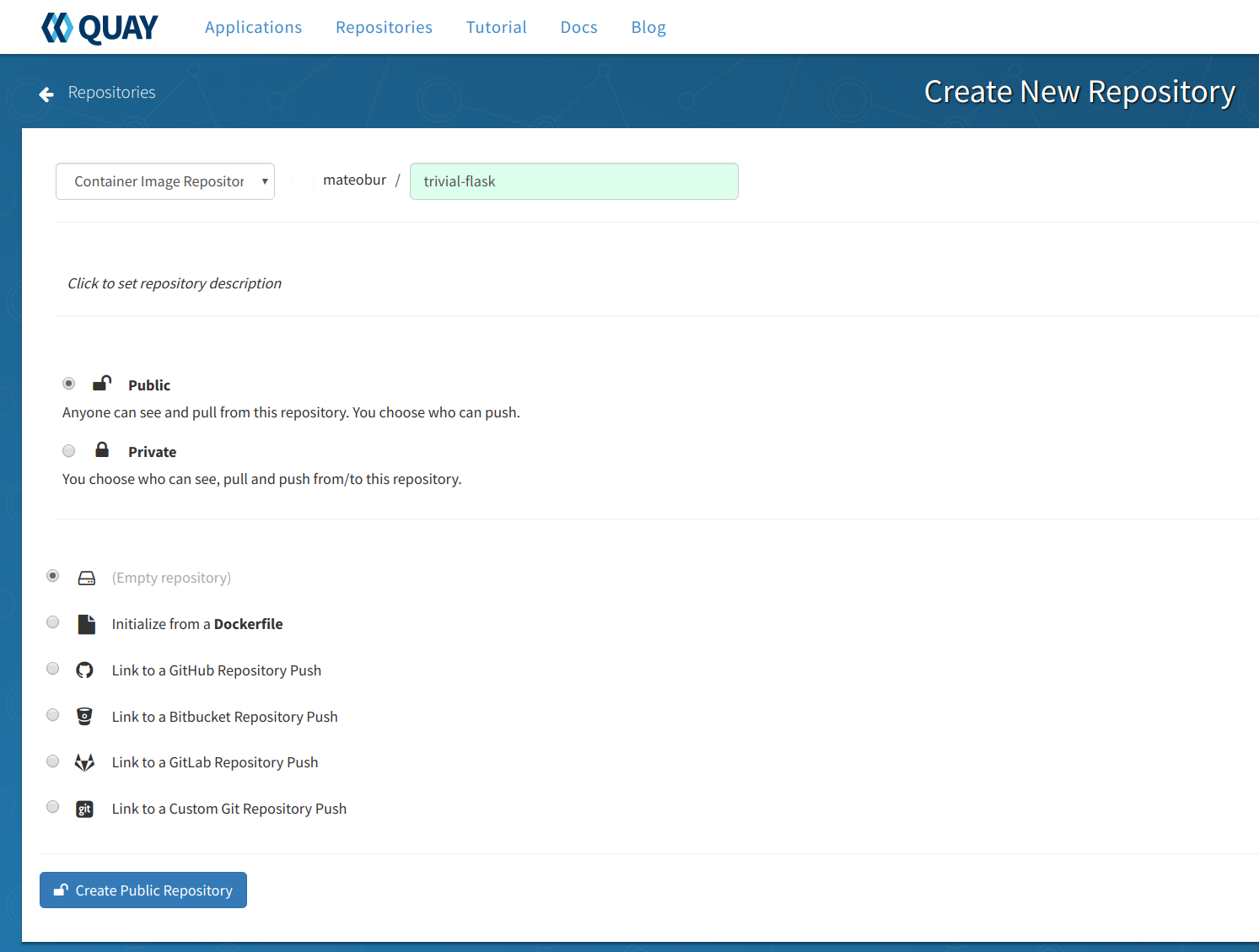

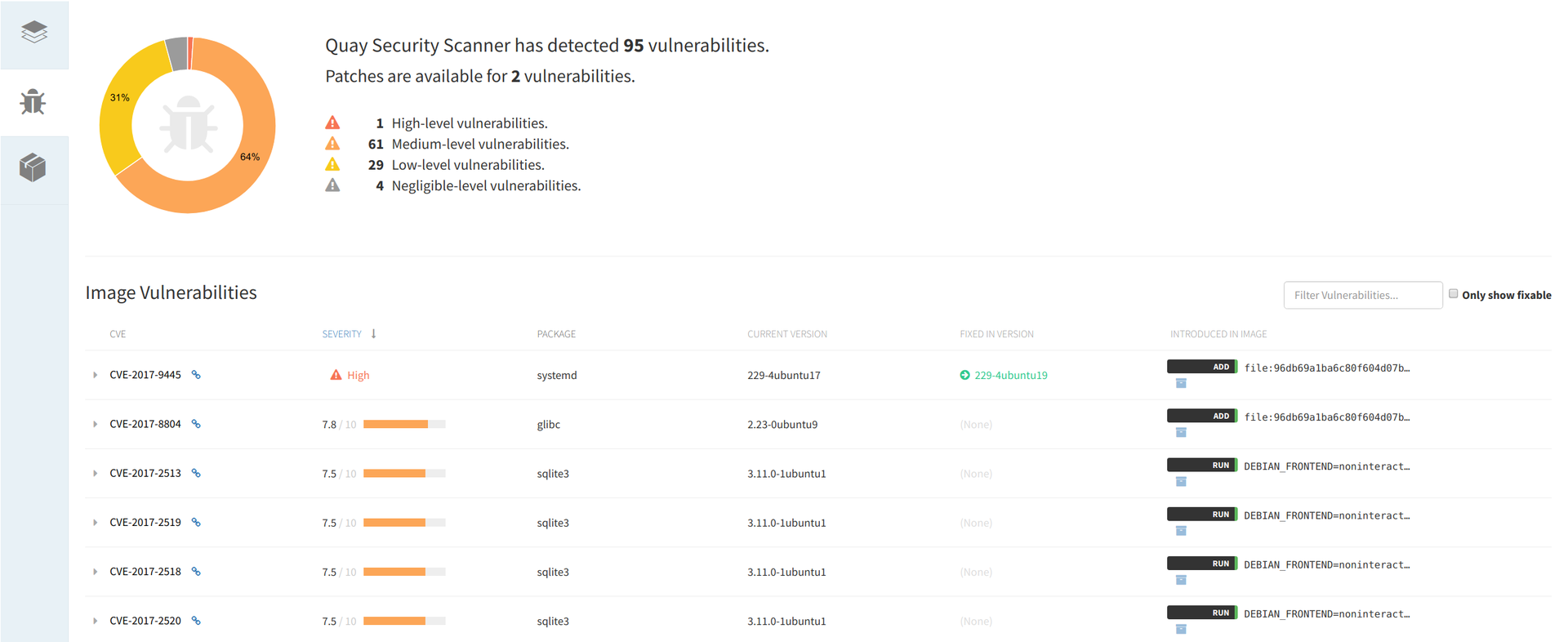

Many Docker image registries offer an image scanning service. Take, for example, CoreOS Quay, which uses an open source Docker image security scanner called Clair. Quay is a commercial platform, but some services are free. You can create a trial account by following these instructions.

After registering your account, open Account Settings and set a new password (you will need it to create repositories).

Click + in the upper right corner and create a new public repository:

Here we will create an empty repository, but, as can be seen in the screenshot, there are other options.

Now from the console, log in to Quay and upload a local image there:

    # docker login quay.io
    # docker push quay.io/<your_quay_user>/<your_quay_image>:<tag>

Once the image has been uploaded, you can click on its ID and see the results of the security scan, sorted by severity in descending order, with links to the CVEs and to the package versions that contain fixes.


Docker credentials and secrets

Description

In most cases, programs need confidential data for normal operation: user password hashes, certificates, encryption keys, etc. The nature of containers makes this harder: you are not just bringing up a server, but setting up an environment in which microservices are constantly created and destroyed. This calls for an automatic, reliable and secure process for handling confidential information.

Best practices

- Do not use environment variables to store secrets. This is a common and unsafe practice.

- Do not keep secrets in container images. Read this report on the discovery and resolution of a vulnerability in one of the IBM services: “The private key and certificate were left behind in the container image by mistake.”

- If your system is complex enough, deploy Docker credential management software. In the Docker security tools article, we looked at several commercial and free solutions. Build your own secrets store (downloading secrets with curl, mounting volumes, etc.) only if you know very well what you are doing.
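A quick way to audit the first point inside a running container is to look for variable names that resemble credentials. The sketch below is deliberately crude: the helper name `secret_like_env` and the keyword list are assumptions, it only inspects names (never values), and multi-line variable values can produce stray matches.

```shell
#!/bin/sh
# Hypothetical helper: print the names of environment variables whose
# names suggest they hold a secret. Values are never printed.
secret_like_env() {
  env | cut -d= -f1 | grep -iE 'pass|secret|token|key' || true
}

# Usage: define the function in the shell you want to audit, then run
#   secret_like_env
```

An empty result does not prove that no secrets are present; it only means that none of the variable names matched the keyword list.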

Examples

First, let's see how easily environment variables can be read:

    # docker run -it -e password='S3cr3tp4ssw0rd' alpine sh
    / # env | grep pass
    password=S3cr3tp4ssw0rd

It really is that simple, even if you switch to a regular user with su:

    / # su user
    / $ env | grep pass
    password=S3cr3tp4ssw0rd

Container orchestration systems now offer their own secret management, for example Kubernetes secrets. Docker Swarm has a similar feature; a quick demonstration follows.

Initialize Docker Swarm:

    # docker swarm init --advertise-addr <your_advertise_addr>

Create a file containing the secret:

    # cat secret.txt
    This is my secret

Create a new secret resource from this file:

    # docker secret create somesecret secret.txt

Create a Docker Swarm service with access to this secret (you can also set the uid, gid, mode, etc.):

    # docker service create --name nginx --secret source=somesecret,target=somesecret,mode=0400 nginx

Log in to the nginx container and check that the secret is available:

    root@3989dd5f7426:/# cat /run/secrets/somesecret
    This is my secret
    root@3989dd5f7426:/# ls -l /run/secrets/somesecret
    -r-------- 1 root root 19 Aug 28 16:45 /run/secrets/somesecret

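Docker runtime security monitoring

Description

The previous sections covered the static side of Docker security: the host kernel, image provenance, the privileges granted at launch, secrets, and so on. But what if, despite all these measures, a container is compromised at run time and starts behaving suspiciously?

Best practices

- The measures described above reduce the attack surface, but they do not protect against 0-day vulnerabilities or flaws specific to your own software. Complement them with monitoring of container behavior at run time, so that anomalous activity is detected and reported.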

Examples

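Sysdig Falco is an open source behavioral monitoring tool that detects anomalous activity. Sysdig Falco works on any Linux host, but it is particularly useful with Docker, since its rules support container-specific context such as container.id, container.image, or Kubernetes metadata.

Falco rules can raise alerts on many kinds of anomalous activity. As a simple example, let's detect someone starting an interactive shell in a production container.

First, install Falco with the automatic installation script (not recommended for production environments):

    # curl -s https://s3.amazonaws.com/download.draios.com/stable/install-falco | sudo bash
    # service falco start

Then start an interactive shell in an nginx container:

    # docker run -d --name nginx nginx
    # docker exec -it nginx bash

The host's /var/log/syslog will then contain a line like this:

    Aug 15 21:25:31 host falco: 21:25:31.159081055: Debug Shell spawned by untrusted binary (user=root shell=sh parent=anacron cmdline=sh -c run-parts --report /etc/cron.weekly pcmdline=anacron -dsq)

Conclusion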

Docker was designed with security in mind, and some of its features help to ensure it. Still, do not let your guard down: there is no substitute for keeping up with current developments and applying the best practices established in this area. Also consider using container-specific security tools that help combat the vulnerabilities and threats associated with Docker.

I hope you found this topic interesting.

References:

- Original: 7 Docker security vulnerabilities and threats.

Source: https://habr.com/ru/post/339126/
