V8 under the hood

Lead Yandex.Money developer Andrei Melikhov (also devSchacht’s editor / translator), using the example of the V8 engine, talks about how the program goes through what stages, before it turns into machine code, and why a new compiler is really needed.

The material is based on the author's report at the conference HolyJS 2017, which was held in St. Petersburg on June 2-3. Presentation in pdf can be found at this link .

A few months ago, the movie The Last Dragon Slayer was released. There, if the protagonist kills the dragon, then the magic disappears in the world. I want to act as an antagonist today, I want to kill the dragon, because in the JavaScript world there is no place for magic. Everything that works, works explicitly. We need to figure out how it works in order to understand how it works.

')

I want to share with you my passion. At some point in time, I realized that I don’t know how it works under the hood of a V8. I began to read literature, watch reports, which are mostly in English, have accumulated knowledge, systematized and want to bring them to you.

I hope everyone knows the difference, but I repeat. Compiled languages: source code in them is converted by the compiler into machine code and written to a file. They use compilation before execution. What is the advantage? It does not need to be recompiled, it is as automated as possible for the system under which it is compiled. What is the disadvantage? If your operating system changes, and if you do not have the source code, you lose the program.

Interpreted languages - when the source code is executed by an interpreter program. The advantages are that it is easy to achieve cross-platform. We deliver our source code as is, and if there is an interpreter in this system, then the code will work. JavaScript is, of course, interpretable.

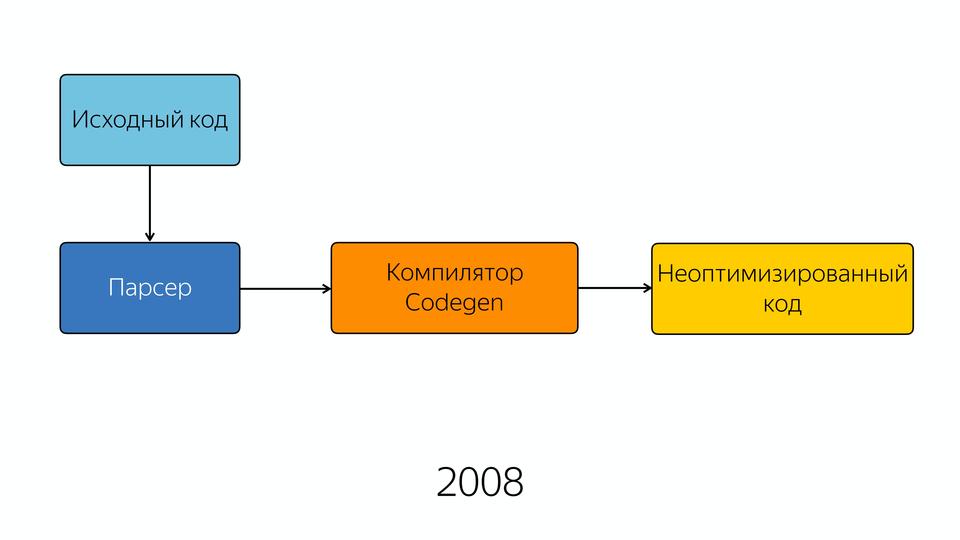

Immerse yourself in history. In 2008, Chrome browser comes out. That year, Google presented the new V8 engine. In 2009, Node.js was introduced on the same engine, which consisted of V8 and the libUV library, which provides io, i.e. accessing files, network stuff, etc. In general, two very important things for us are built on the V8 engine. Let's see what it consists of.

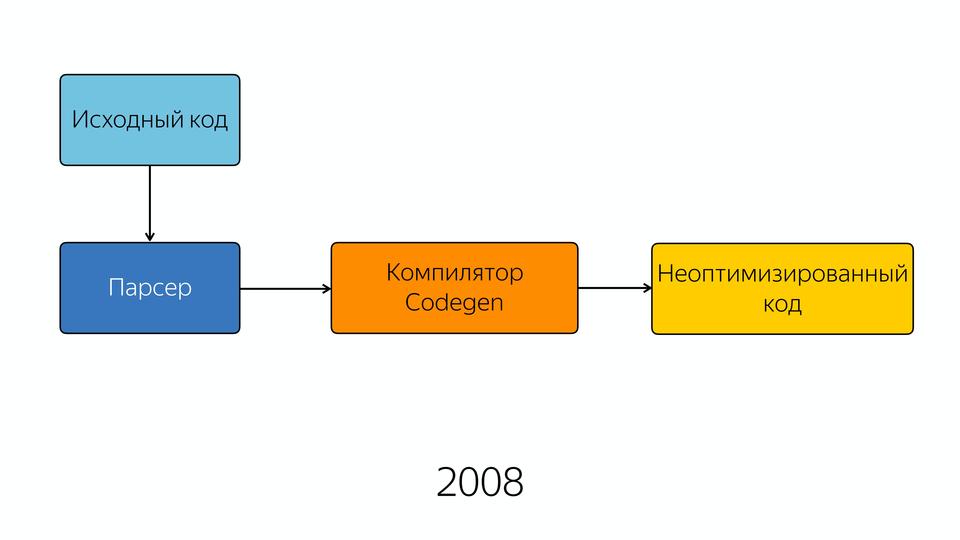

In 2008, the engine was pretty simple inside. Well, relatively simple - his scheme was simple. The source code got into the parser, from the parser into the compiler, and at the output we received a semi-optimized code. Semi-optimized, because there wasn’t good optimization yet. Perhaps, in those years, we had to write the best JavaScript, because it was impossible to hope that the optimizer would optimize it internally.

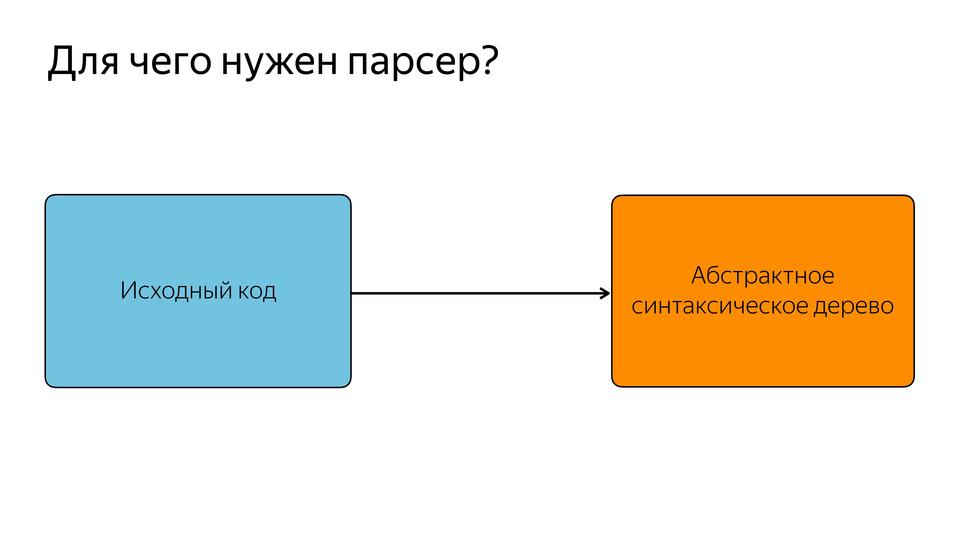

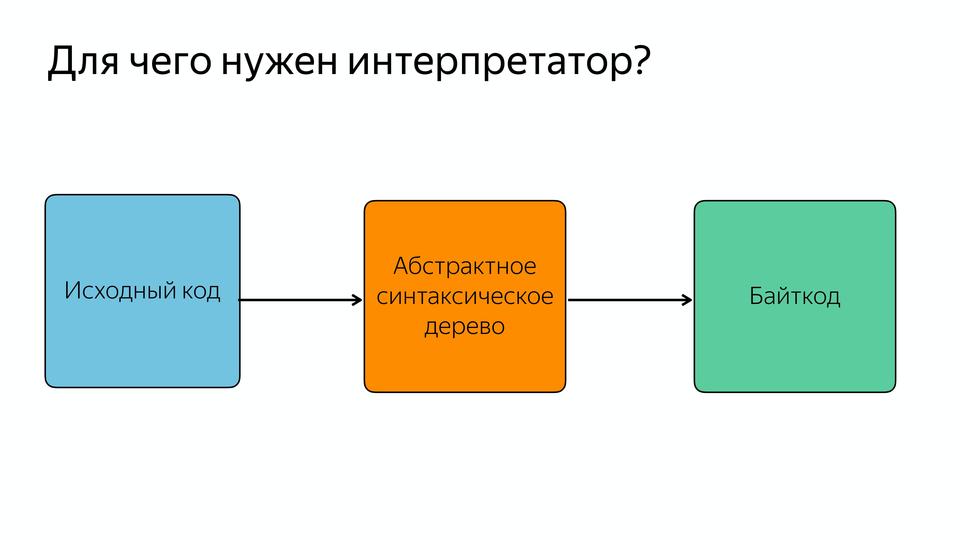

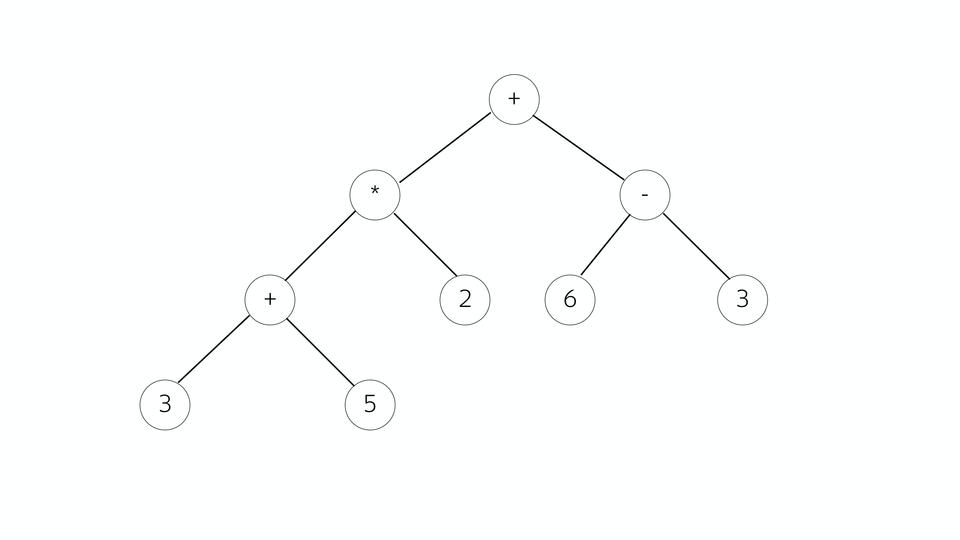

The parser is needed in order to turn the source code into an abstract syntax tree or AST. AST is a tree in which all vertices are operators, and all leaves are operands.

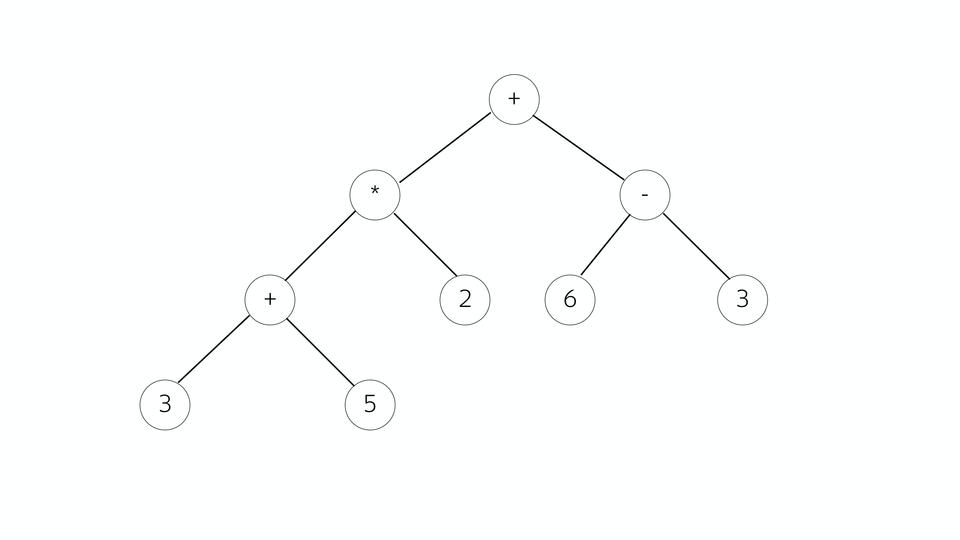

Let's look at an example of a mathematical expression. We have such a tree, all vertices are operators, branches are operands. What makes it good is that it is very easy to generate machine code from it later. Who worked with the Assembler, knows that most often the instruction consists of what to do and what to do.

And here we can just see if we are an operator or operand at the current point. If this is an operator, we look at its operands and assemble the command.

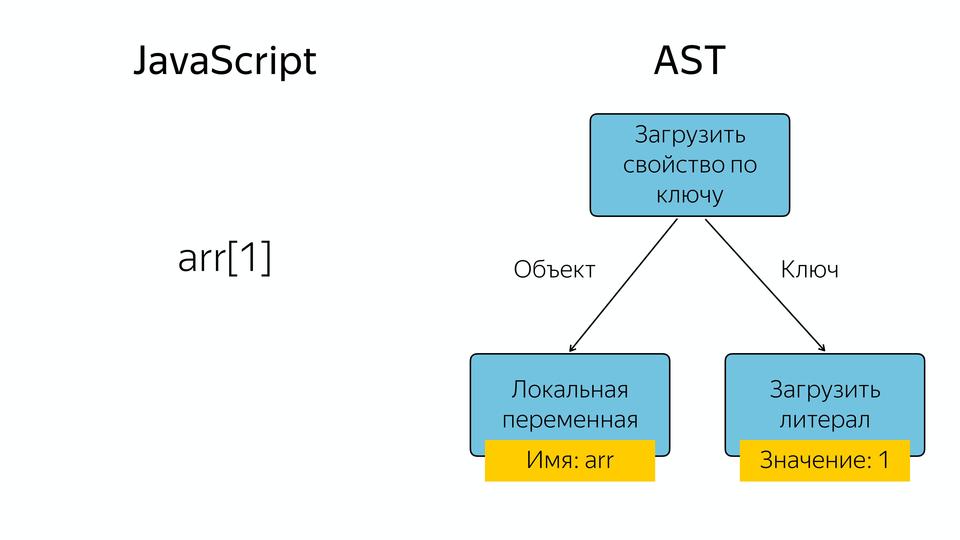

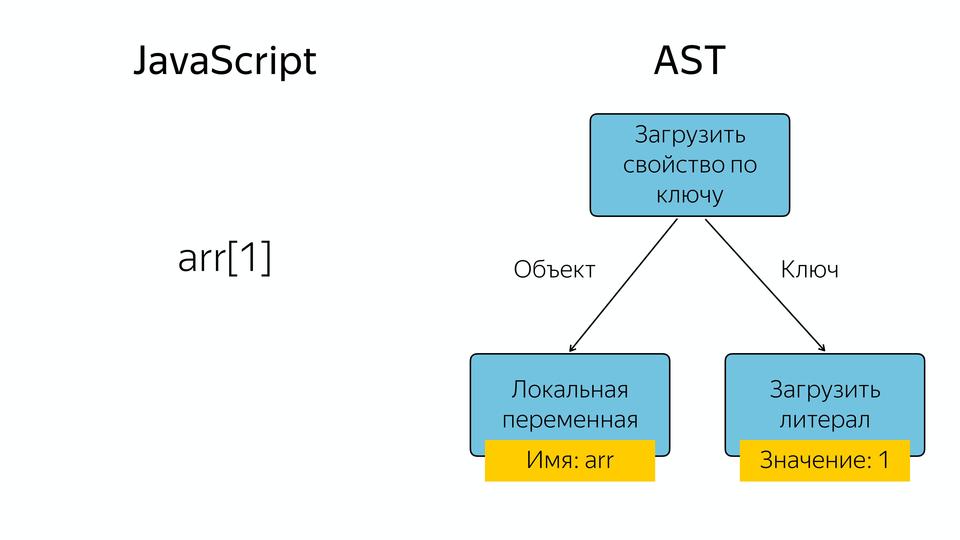

What happens in JavaScript if we have, for example, an array, and we request an element from it at index 1? An abstract syntax tree appears in which the operator “load a property by key” and the operands are the object and the key by which we load this property.

As I said, we have an interpreted language, but in its scheme we see the compiler. Why is he? There are actually two types of compilers. There are compilers (ahead-of-time) that compile before execution, and JIT compilers that compile at run time. And due to JIT compilation, good acceleration is obtained. What is it for? Let's compare.

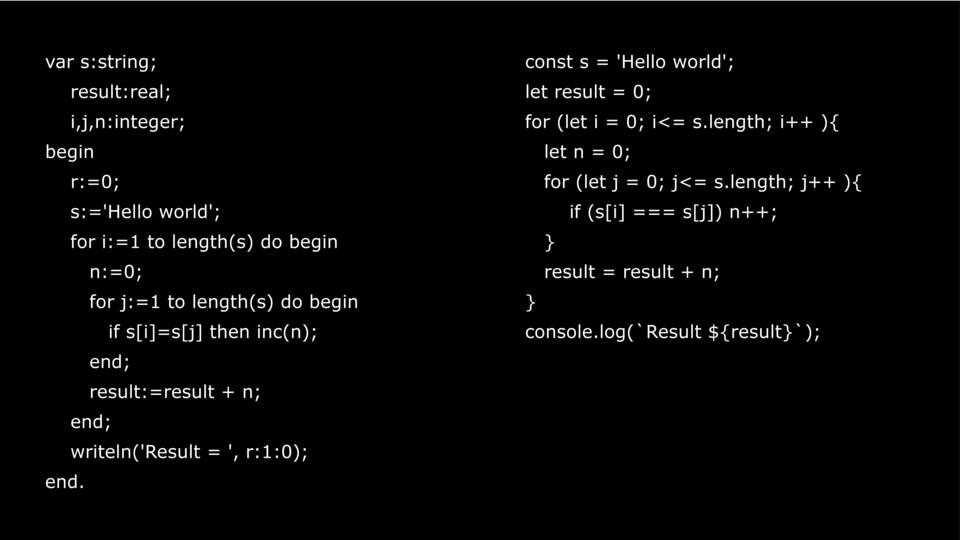

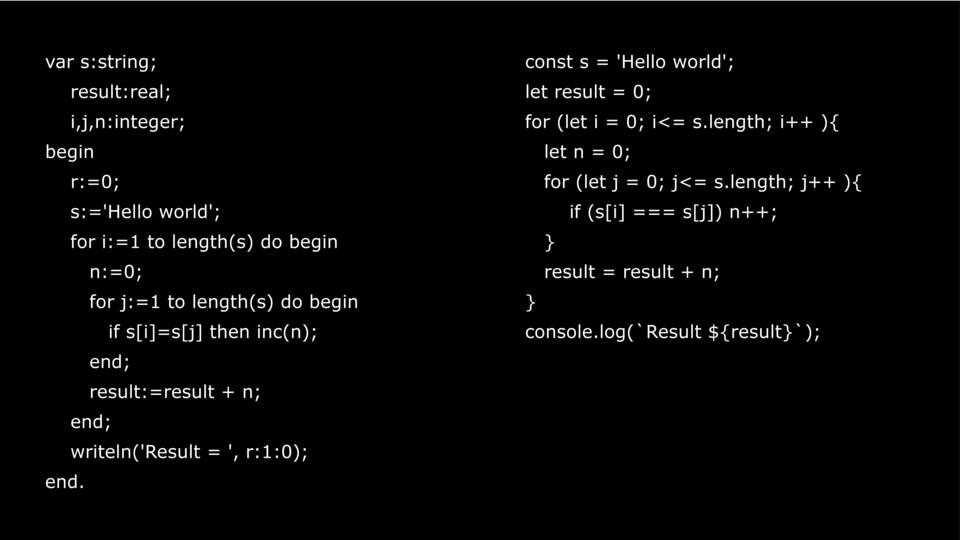

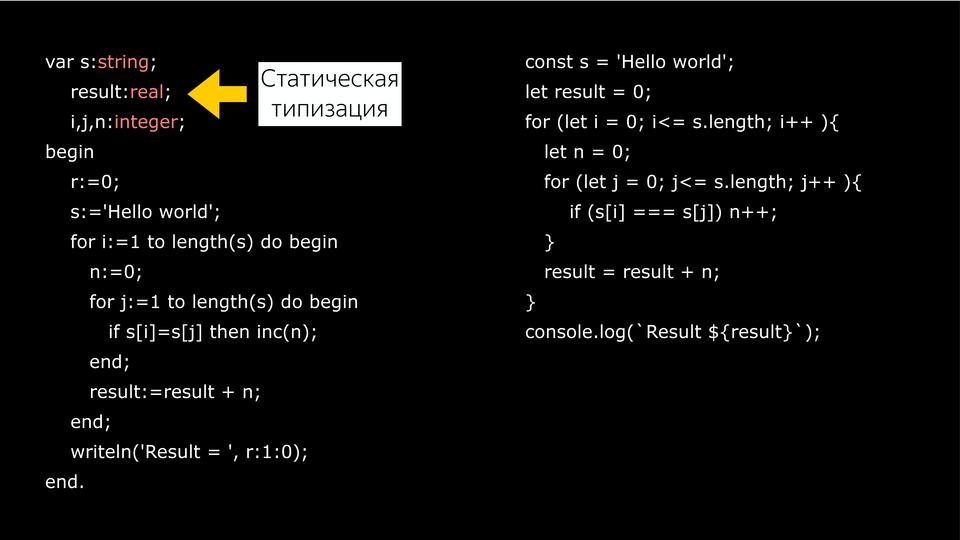

There is one and the same code. One on Pascal, another on JavaScript. Pascal is a great language. I think that it is necessary to learn how to program from it, but not with JavaScript. If you have a person who wants to learn how to program, then show him Pascal or C.

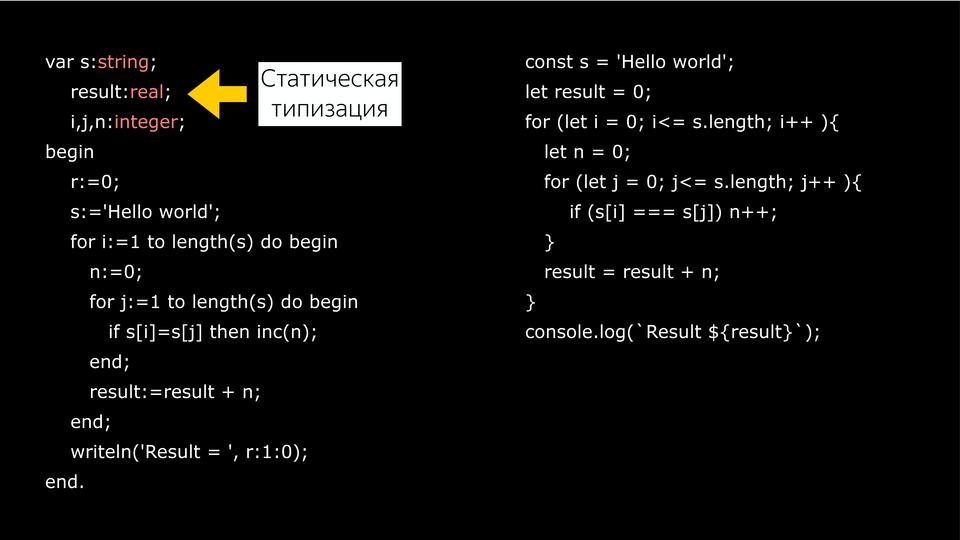

What's the Difference? Pascal can be both compiled and interpretable, and JavaScript already requires interpretation. The most important difference is static typing.

Because when we write on Pascal, we specify the variables that are needed, and then we write their types. Then the compiler can easily build a good optimized code. How do we refer to variables in memory? We have an address, and we have a shift. For example, Integer 32, then we make a shift to 32 at this address in memory and receive data.

In JavaScript there is no, we always change types at runtime, and the compiler, when it executes this code, the first time it performs it as it is, but collects information about types. And the second time, when he performs the same function, he is already based on the data he received last time, by assuming what types were there, can do some kind of optimization. If everything is clear with variables, they are determined by value, then what about our objects?

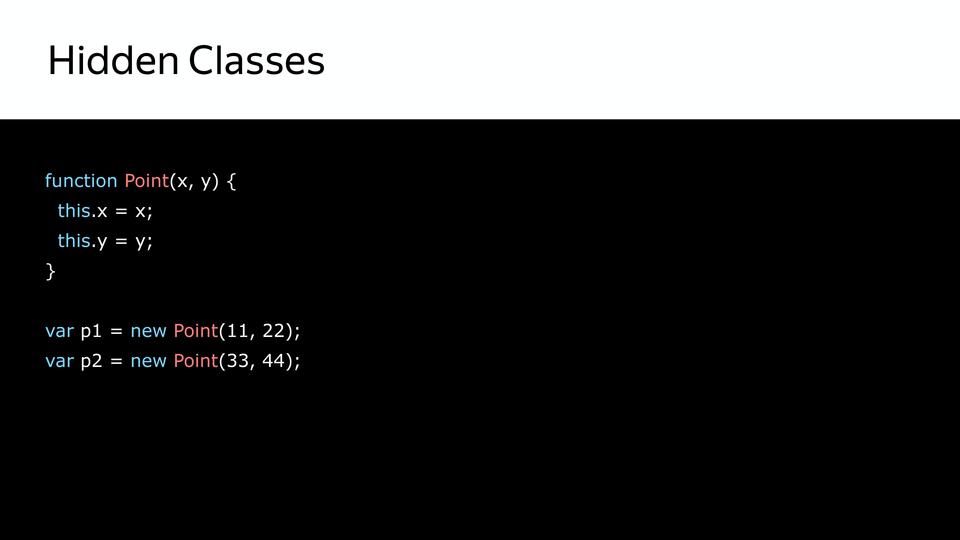

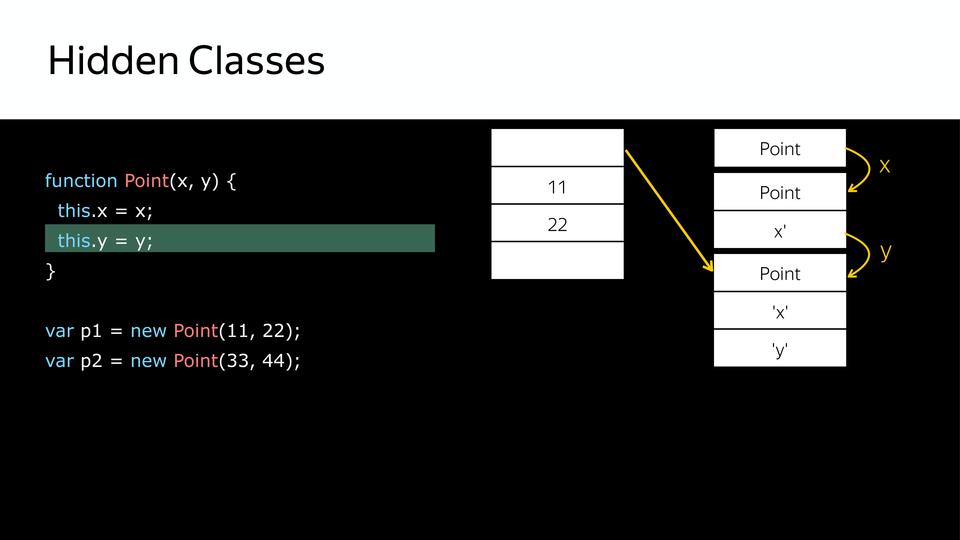

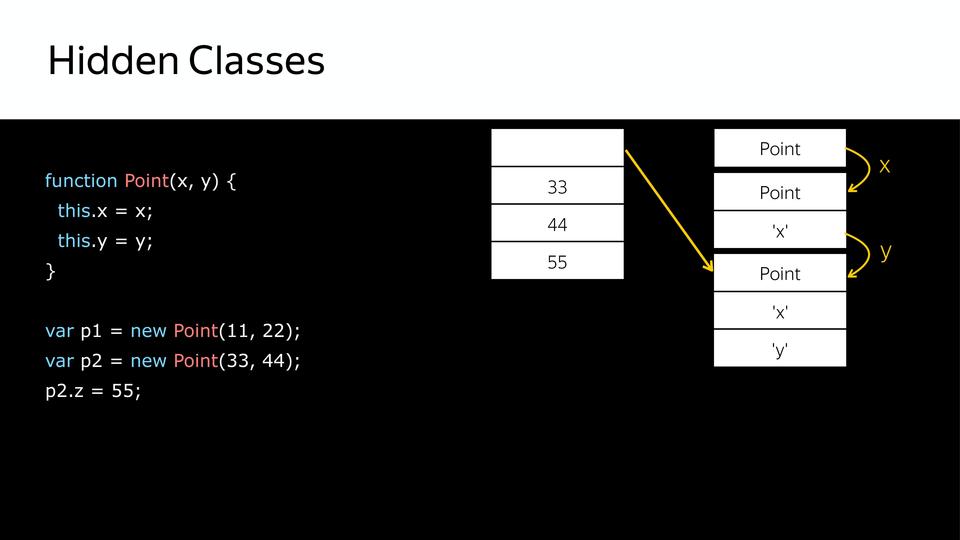

After all, we have JavaScript, it has a prototype model, and we have no classes for objects. In fact, there are, but they are not visible. This is the so-called Hidden Classes. They are visible only to the compiler itself.

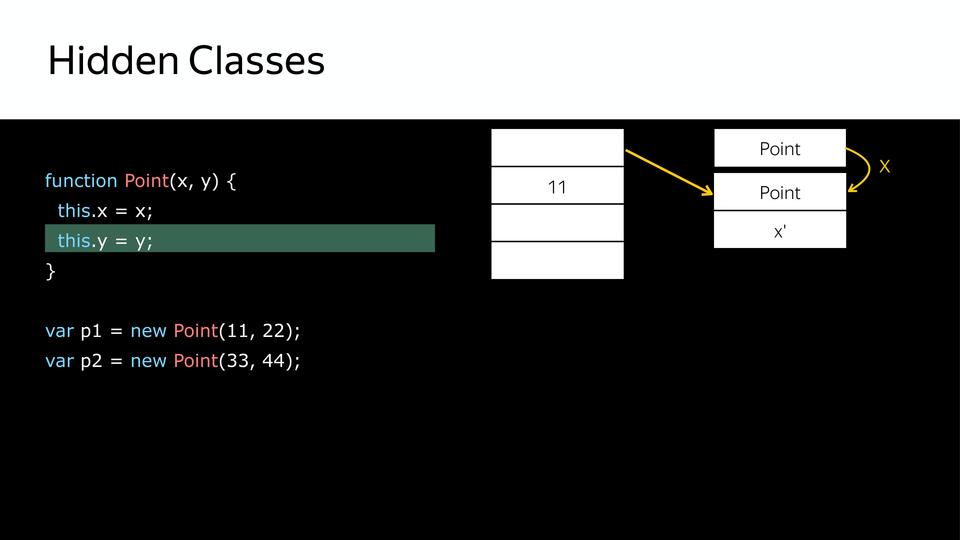

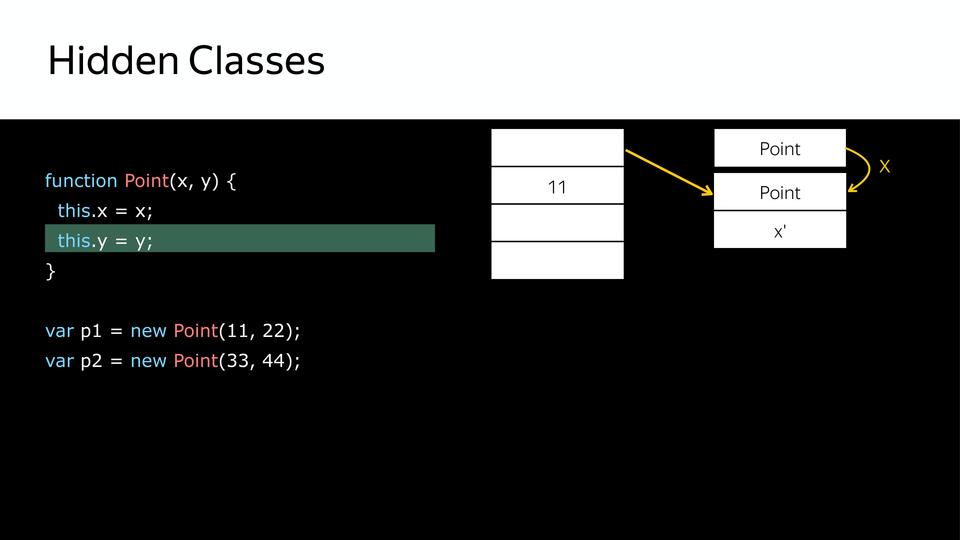

We have a point - this is a constructor, and objects are created. First a hidden class is created that contains only the point itself.

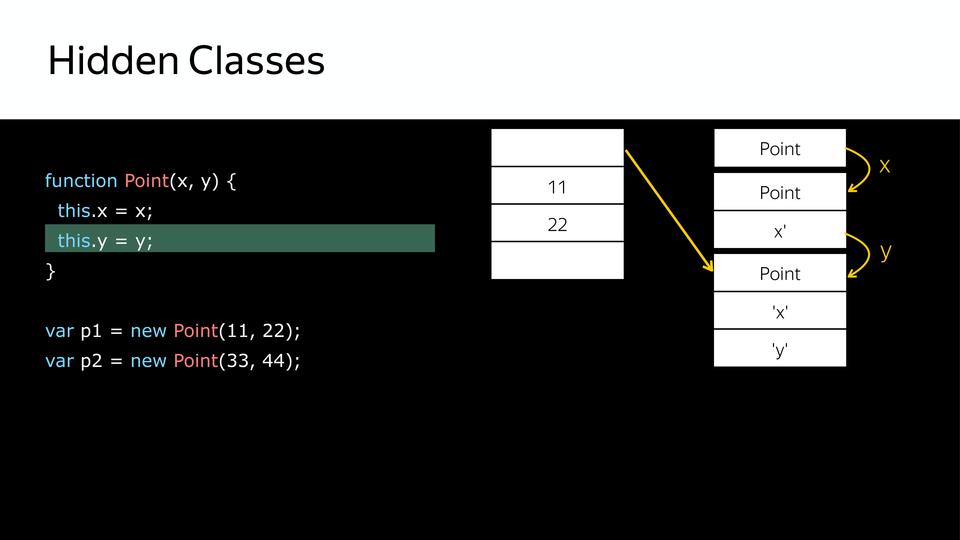

Next, we set the property of this object x and from the fact that we had a hidden class, the following hidden class is created that contains x.

Next we set y and, accordingly, we get another hidden class, which contains x and y.

So we got three hidden class. After that, when we create a second object using the same constructor, the same thing happens. There are already hidden classes, they no longer need to be created, you just need to match them. So that later we know that these two objects are identical in structure. And you can work with them.

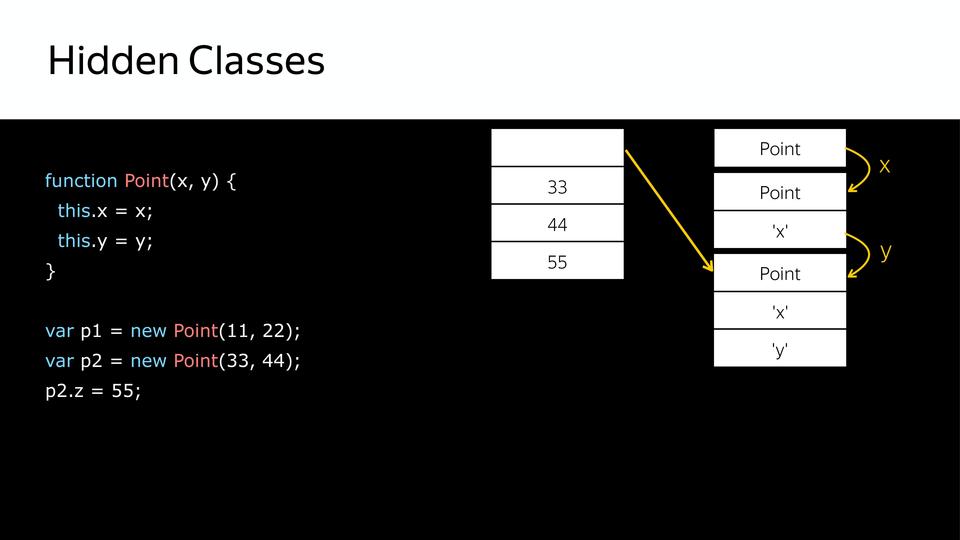

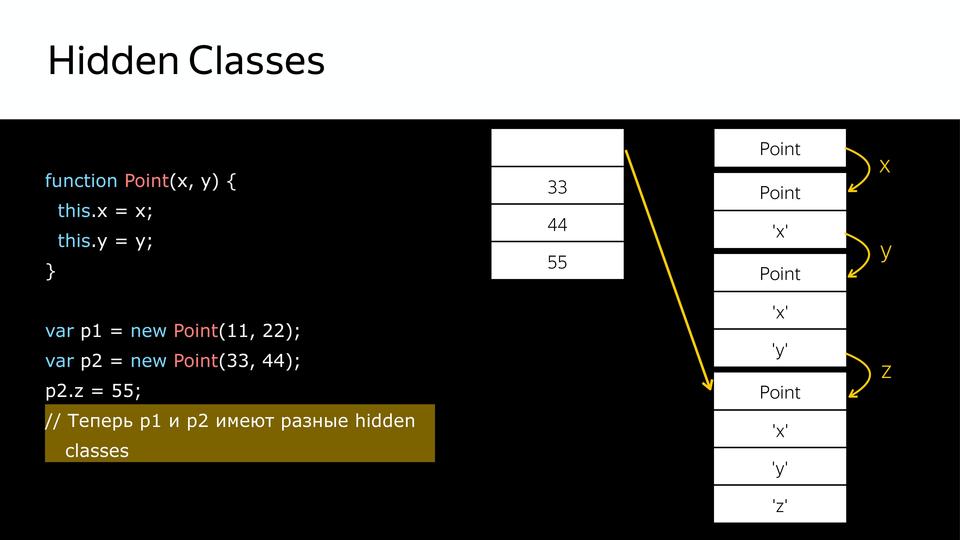

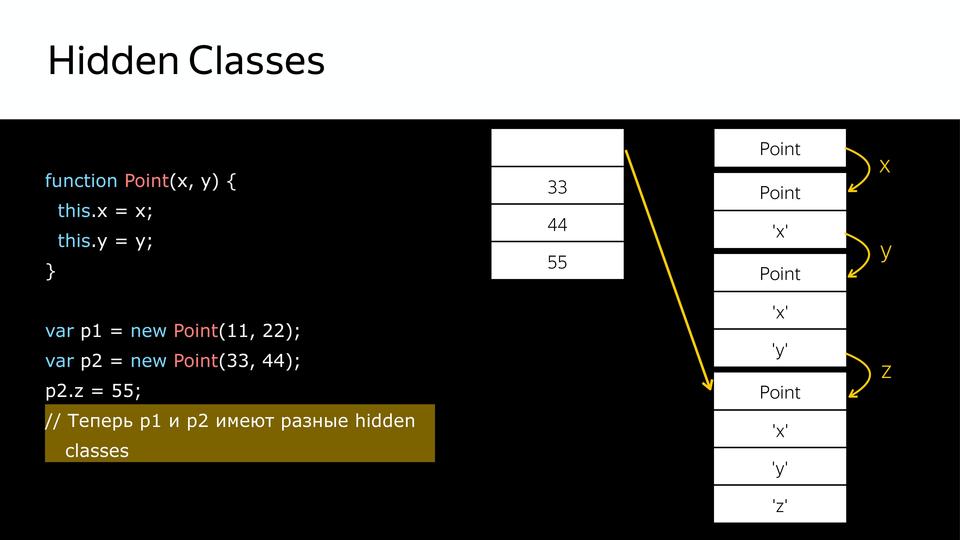

But what happens when we later add a property to the p2 object later? A new hidden class is created, i.e. p1 and p2 are no longer alike. Why is it important? Because when the compiler will iterate through the point loop, and now it will have all the same as p1, it twists, twists, twists, bumps into p2, and it has another hidden class, and the compiler goes into deoptimization, because he didn't get what he expected.

This is the so-called duck typing. What is duck typing? The expression came from American slang, if something goes like a duck, quacks like a duck, then it's a duck. Those. if p1 and p2 are the same in structure, they belong to the same class. But once we add p2 to the structure, these ducks quack in different ways, respectively, these are different classes.

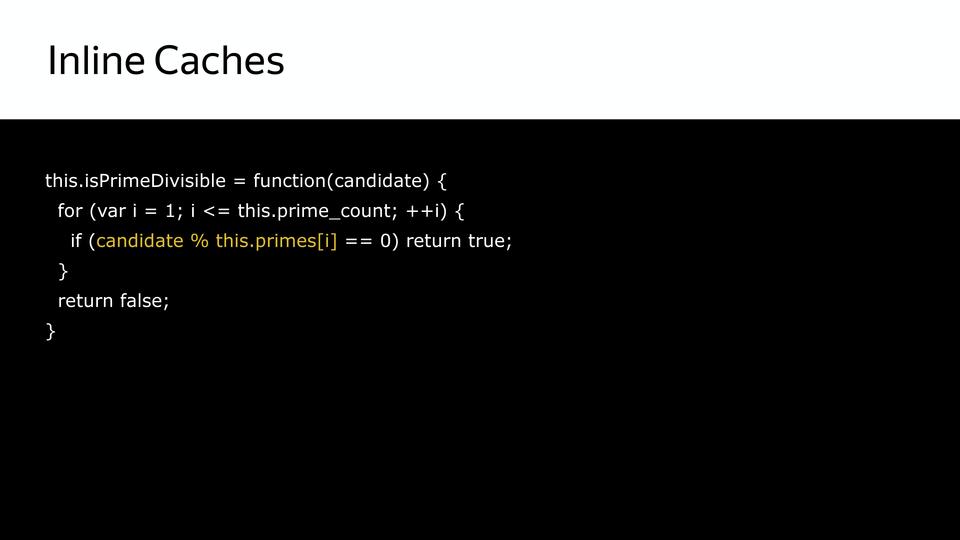

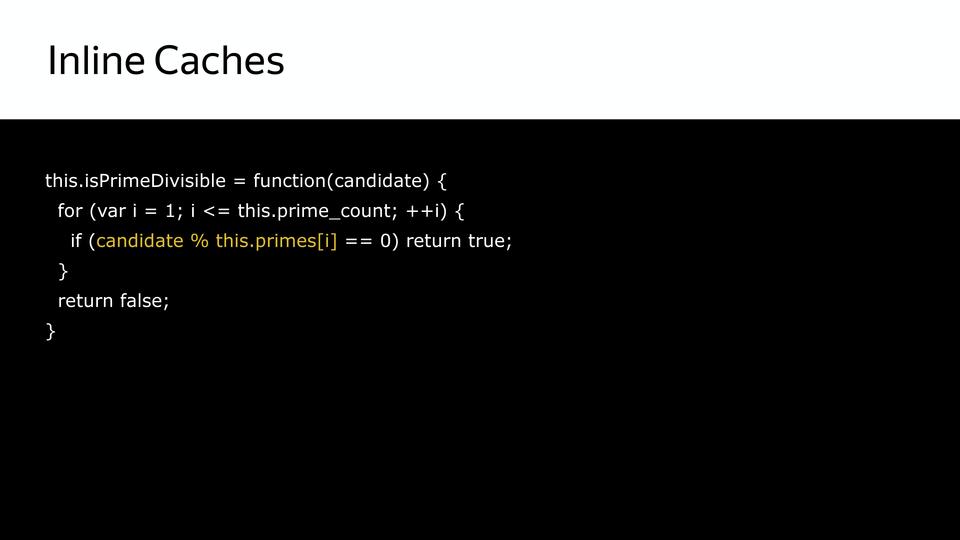

And here we received data on which classes the objects belong to, and received data on what kind of variables, where to use this data and how to store them. For this system is used Inline Caches.

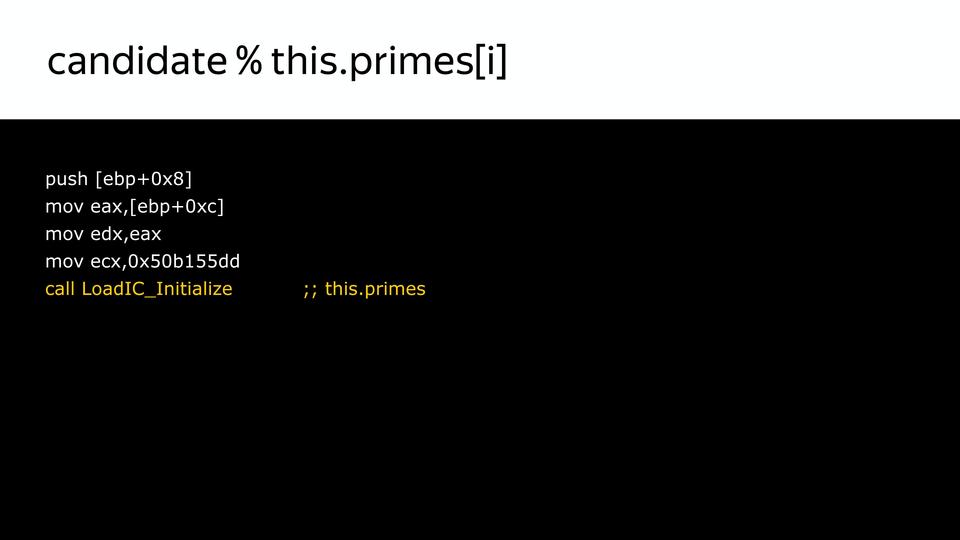

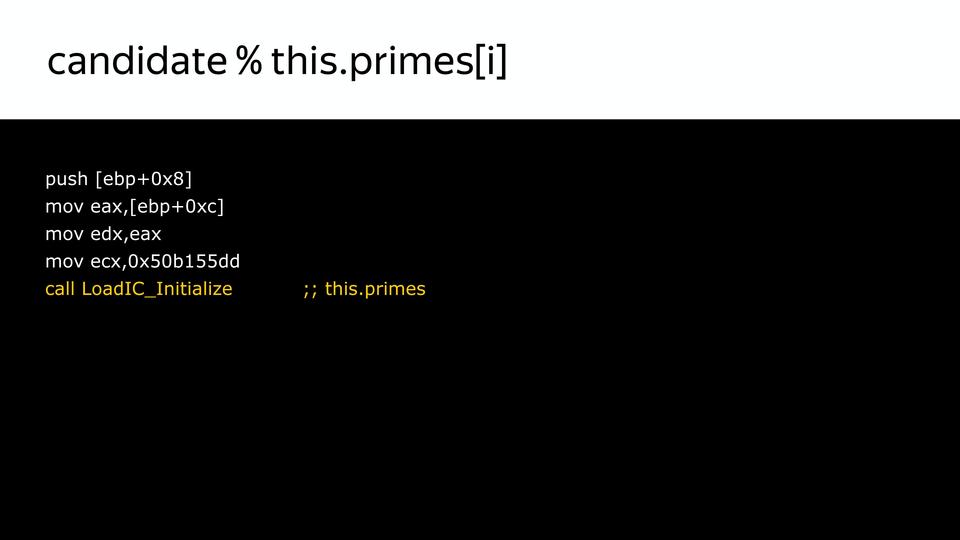

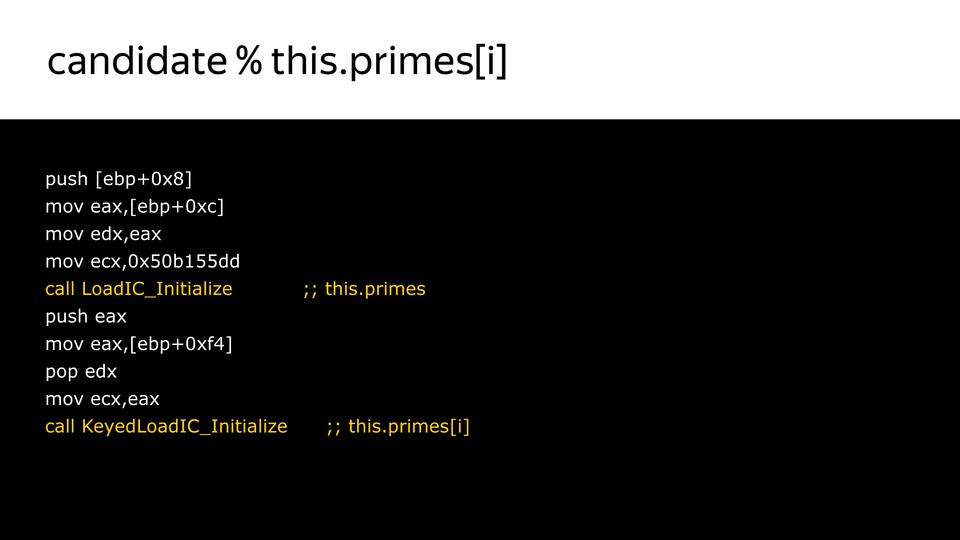

Consider how the creation of Inline Caches for this part. At first, when our code is analyzed, it is supplemented with such calls. This is only initialization. We still do not know what type we will have our Inline Caches.

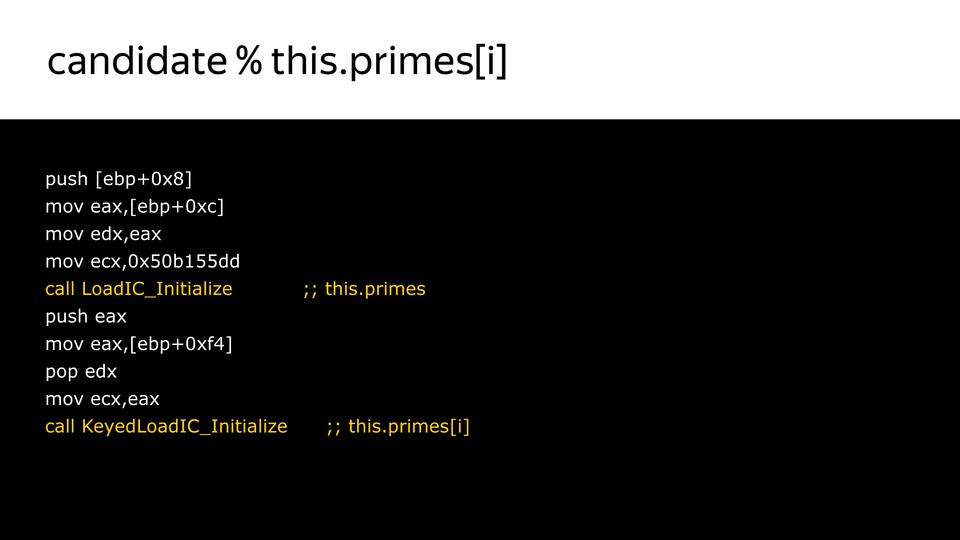

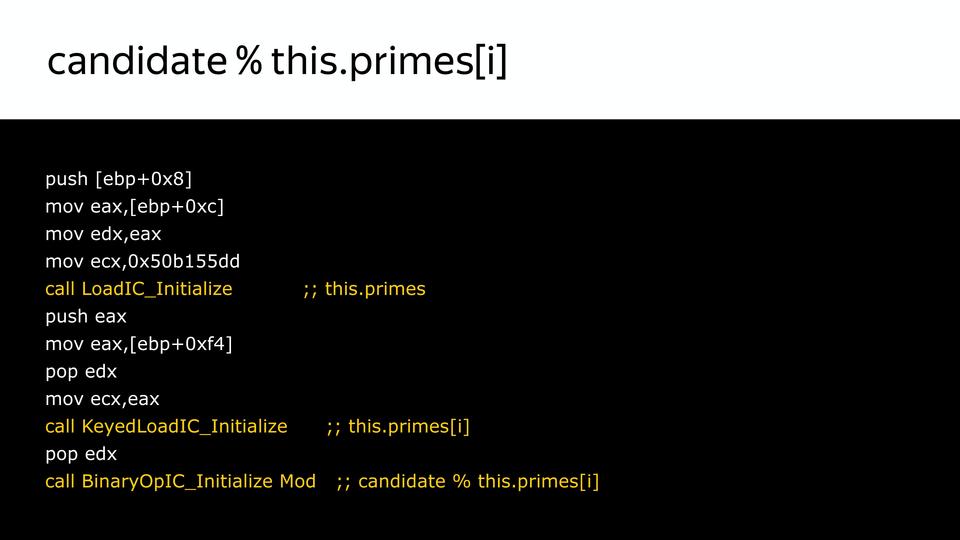

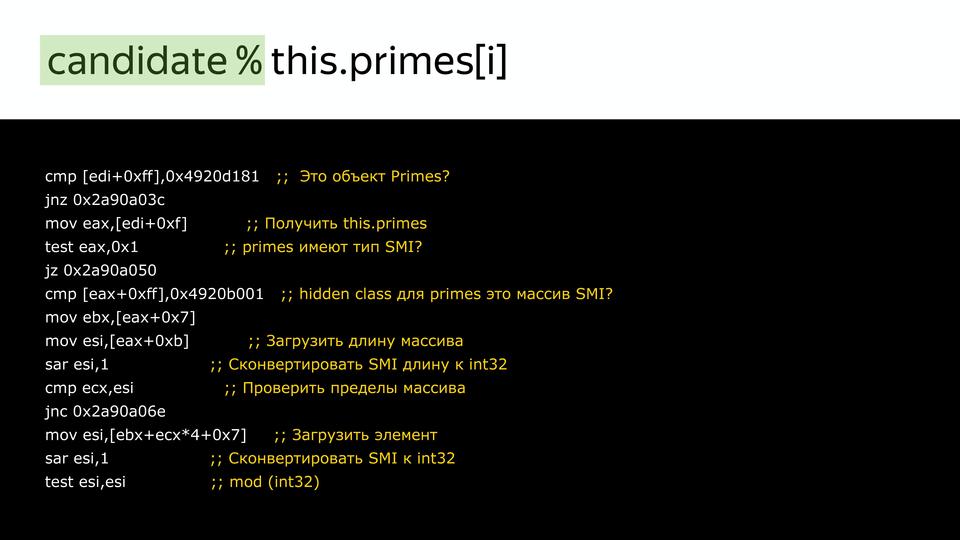

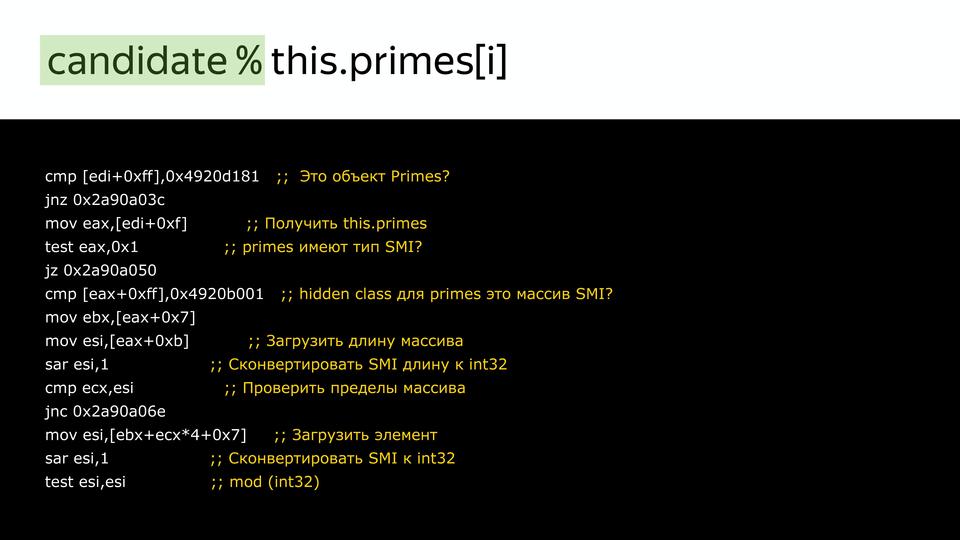

We can say that in this place initiate it, here is the download of this.primes:

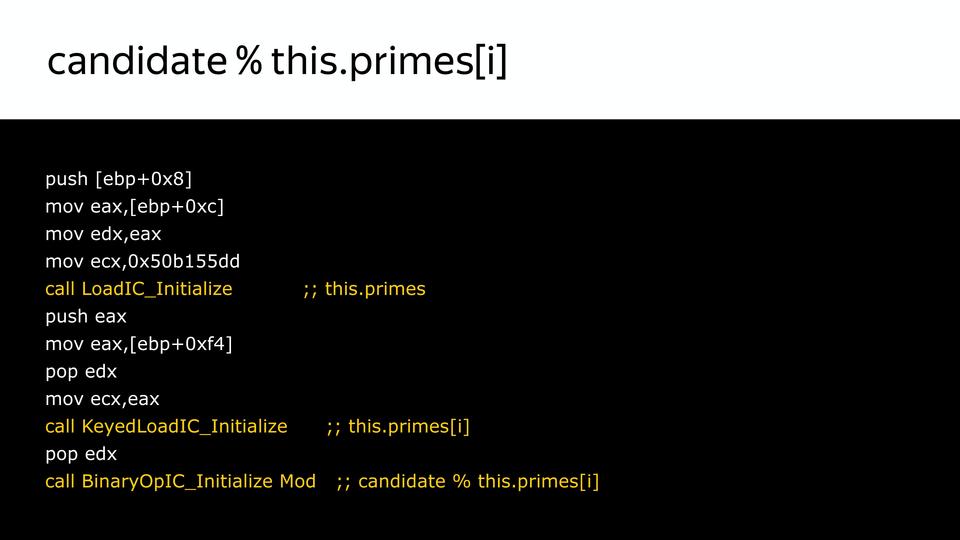

Here is the download by key:

And then the BinaryOperation operation does not mean that it is binary, it means that it is a binary and not a unary operation. An operation that has left and right sides.

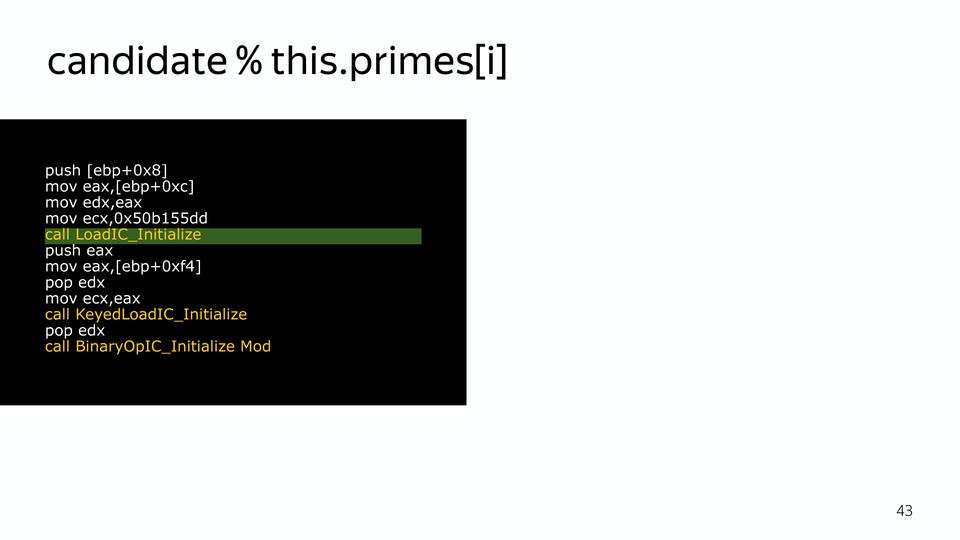

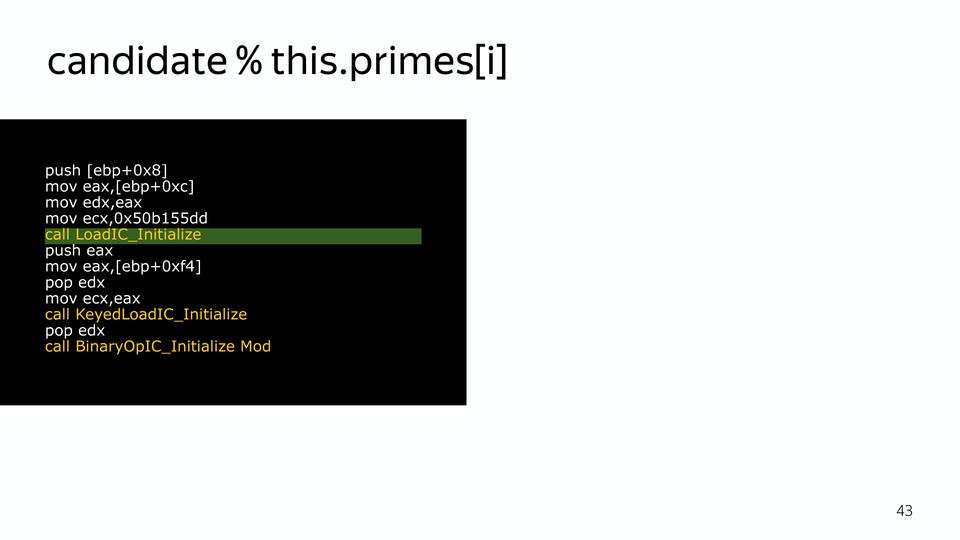

When the code arrives, this is all replaced by pieces of code that we already have inside the compiler, and the compiler knows how to work well with this particular case if we have information about the type. That is, it is replaced here with a call to a code that knows how to get primes from an object:

Here it is replaced by code that knows how to get an element from an SMI array:

Here is a code that knows how to calculate the remainder of the division of two SMIs:

It will already be optimized. And this is how the compiler worked, saturating it with such pieces.

This, of course, gives some overhead, but also gives performance.

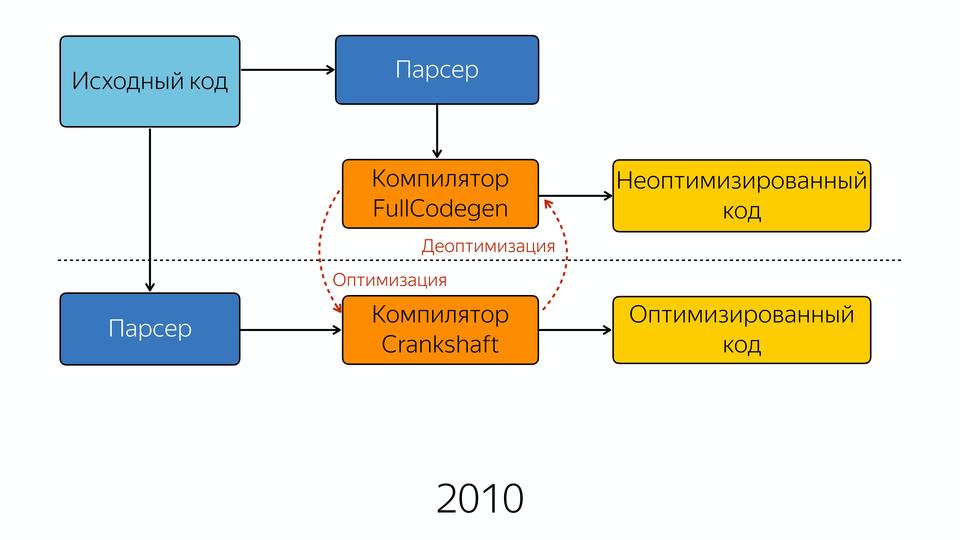

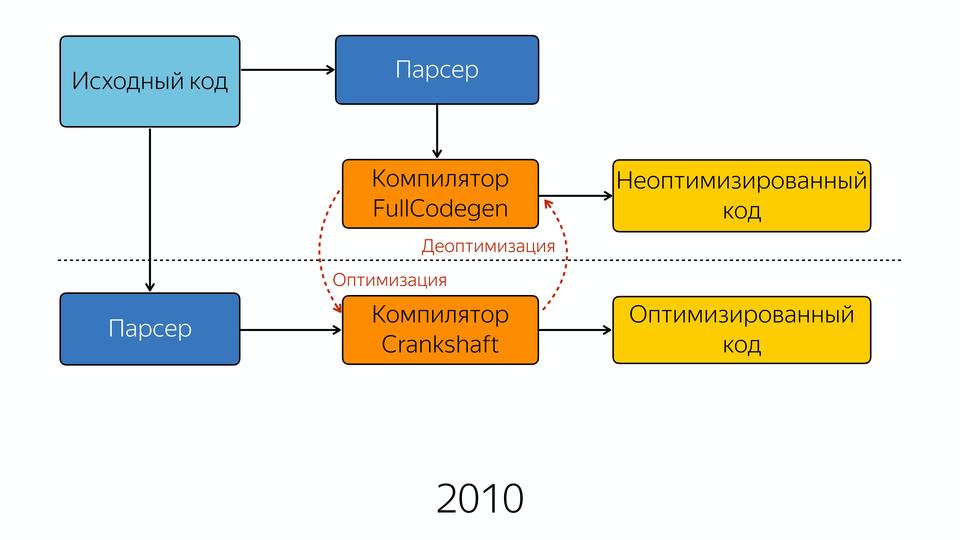

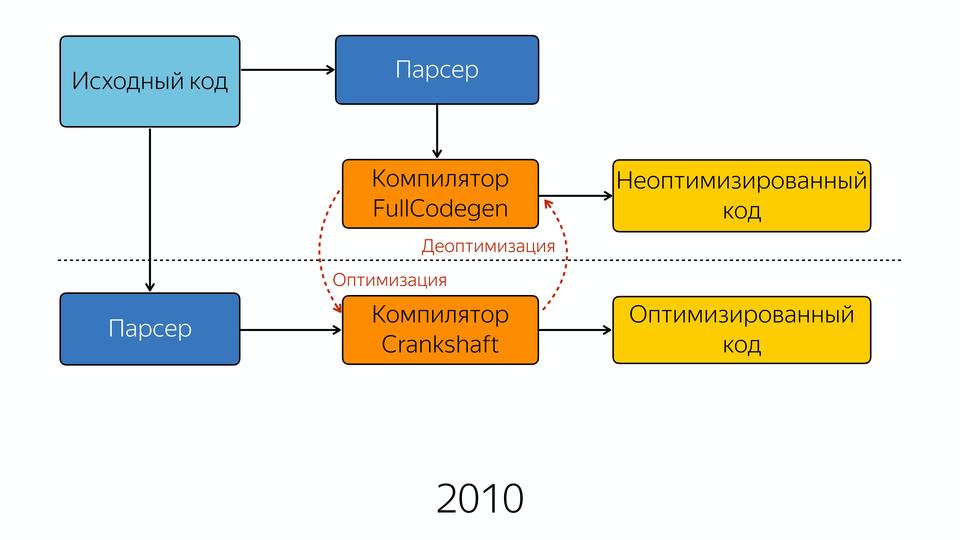

We have developed the Internet, the number of JavaScript has increased, greater performance was required, and Google responded with the creation of a new Crankshaft compiler.

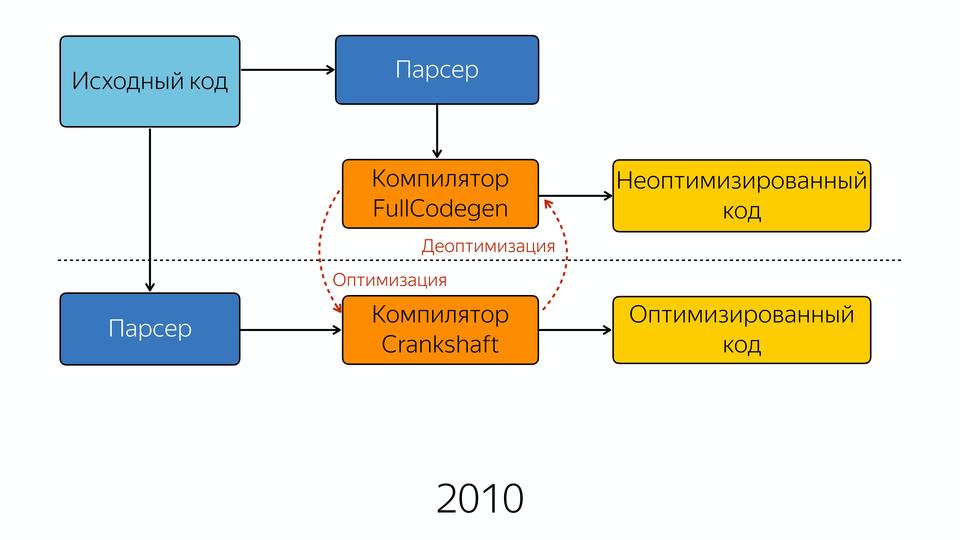

The old compiler began to be called FullCodegen, because it works with a full code base, it knows all of JavaScript, how to compile it. And it produces a non-optimized code. If he stumbles upon a function that is called several times, he thinks that it has become hot, and he knows that the Crankshaft compiler can optimize it. And he gives knowledge of types and that this function can be optimized in the new Crankshaft compiler. Next, the new compiler re-receives the abstract syntax tree. It is important that it does not receive from the old AST compiler, but goes again and requests AST. And knowing about types, it does optimization, and at the output we get optimized code.

If he cannot do optimization, he will fall into deoptimization. When does this happen? This is how I said earlier, for example, in our Hidden Class cycle is spinning, then something unexpected and we fell out into de-optimization. Or, for example, many people like to do a check when we have something on the left side, and we take, for example, the length, i.e. we check if we have a string, and take its length. What is bad? Because when we have no line, then on the left side we get a Boolean and the output is a Boolean, and before that there was a Number. And in this case, we fall into deoptimization. Or he met the code, can not optimize it.

By the example of the same code. Here we had a code rich inline-caches, it is all inline in the new compiler.

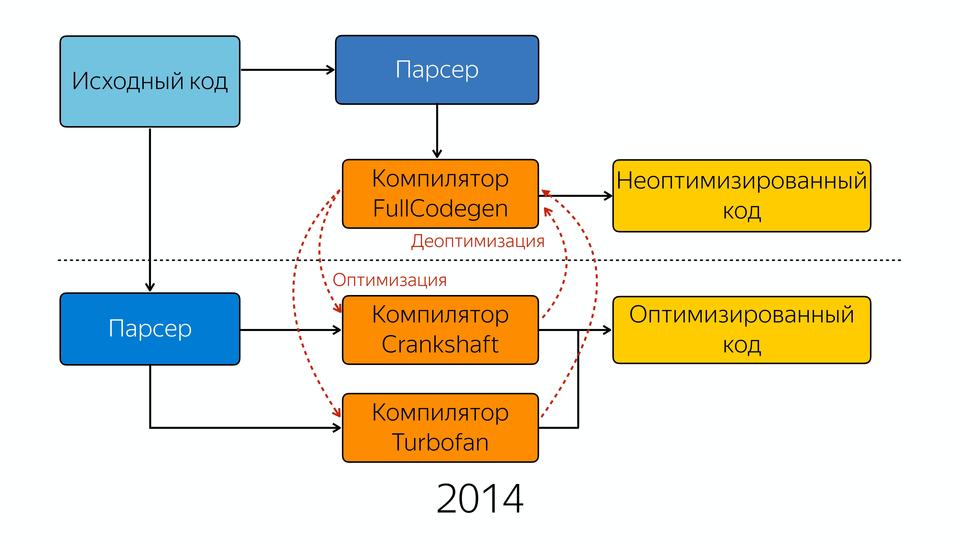

He puts it all inline. Moreover, this compiler is a speculative optimizing compiler. What does he speculate on? He speculates on knowledge of types. He assumes that if we called 10 times with this type, then this type will continue. Everywhere there are such checks that the type that he expected came, and when a type came that he did not expect, he falls into de-optimization. These improvements gave a good performance boost, but gradually the team involved in the V8 engine realized that everything had to start from scratch. Why? There is such a way of software development when we write the first version, and we write the second version from scratch, because we understood how to write. And created a new compiler - Turbofan in 2014.

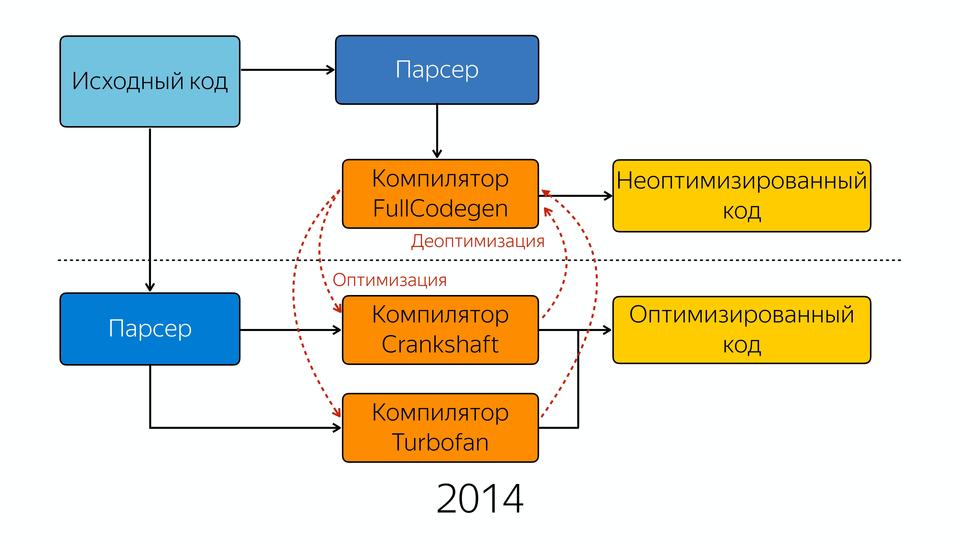

We have the source code that goes into the parser, then to the FullCodegen compiler. So it was before that, no difference. At the output we get a non-optimized code. If we can do any optimization, then we leave in two compilers, Crankshaft and Turbofan. FullCodegen decides whether the Turbofan compiler can optimize specific things, and if it can, sends it to it, and if it cannot, it sends it to the old compiler. Gradually began to add new designs from ES6. We started by optimizing asm.js into it.

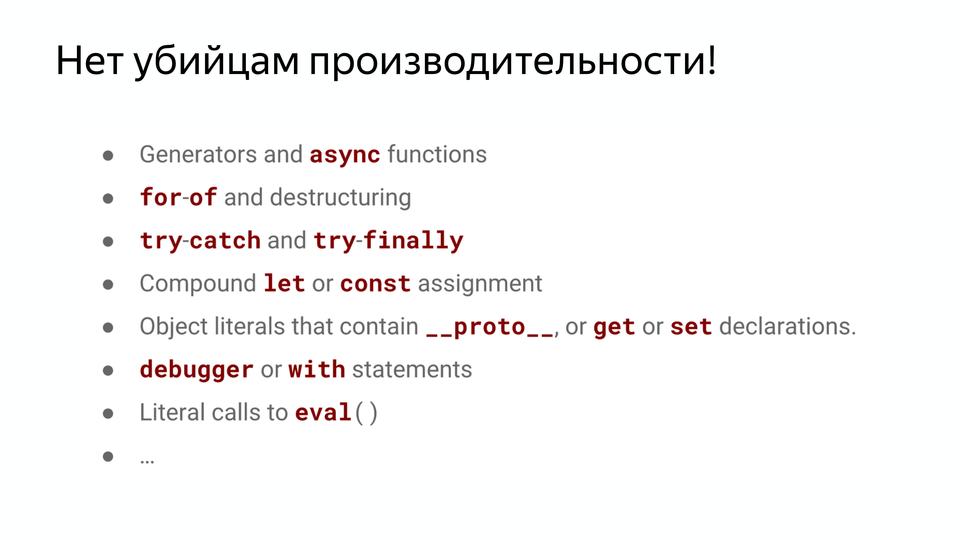

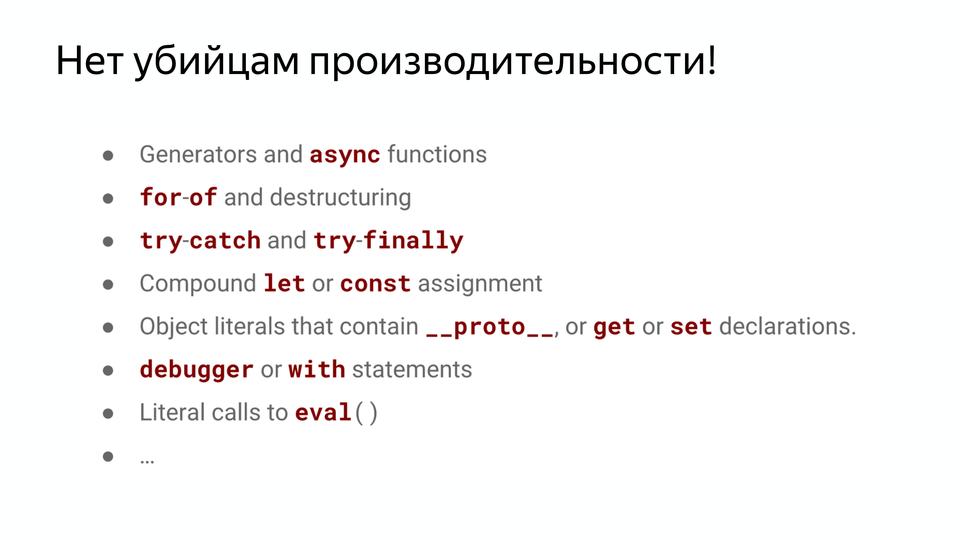

The old compiler was written in those years when we had powerful desktops. And it was tested on tests like octane, synthetic, which checked peak performance. Recently there was a Google I / O conference, and there the manager managing V8 development stated that they refused octane in principle, because it does not correspond to what the compiler actually works with. And this led to the fact that we had a very good peak performance, but the baseline, ie things were not optimized in the code, and when the code, which worked well, ran across such things, there was a significant drop in performance. And there are a lot of such operations, here are a few of them: forEach, map, reduce. They are written in ordinary JS, stuffed with checks, much slower than for. Often advised to use for.

Slow bind operation - it is implemented inside, it turns out to be absolutely terrible. Many frameworks have written their bind implementations. Often people said that I sat down, wrote a bind on my knee and it works faster, surprisingly. Functions containing try {} catch (e) {} (and finally) are very slow.

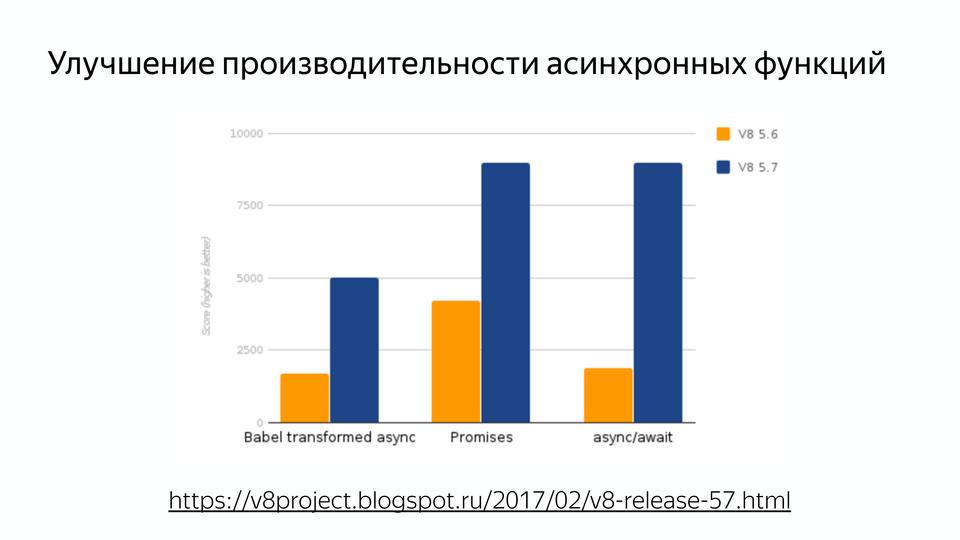

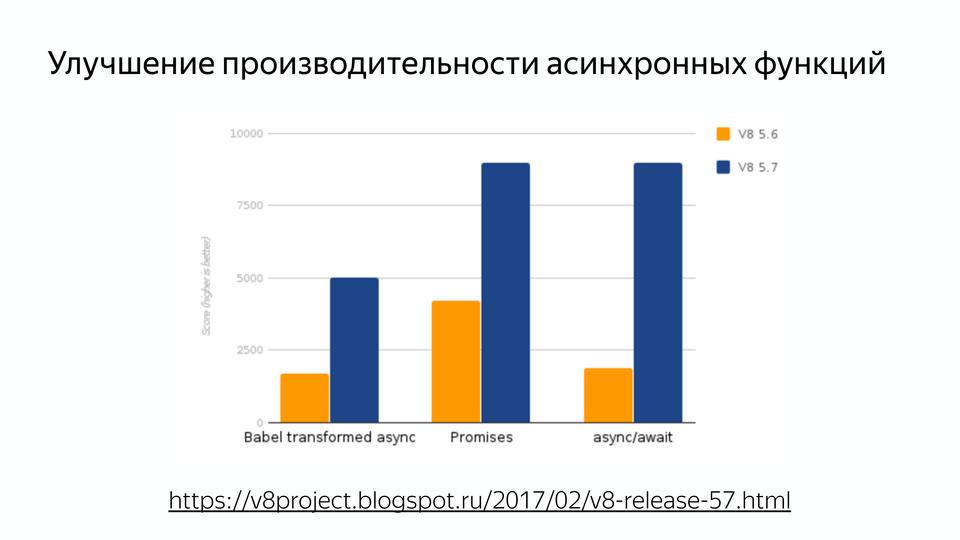

Often there was such a sign that it is better not to use, so as not to subside performance. Actually, the code is slow because the compiler is not working correctly. And with the advent of Turbofan, you can forget about it, because everything has already been optimized. Also very important: the performance of asynchronous functions has been improved.

Therefore, everyone is waiting for the release of the new node, which has recently been released, and performance with async / await is important there. We have an asynchronous language initially, and we could only use callbacks well. And who writes with the promise, they know that their third-party implementations work faster than the native implementation.

The next task was to make the performance predictable. There was a situation like this: the code that showed itself perfectly on jsPerf, when inserted into the working code, showed a completely different performance. But there are other cases where we could not guarantee that our code would work as productively as we had originally intended.

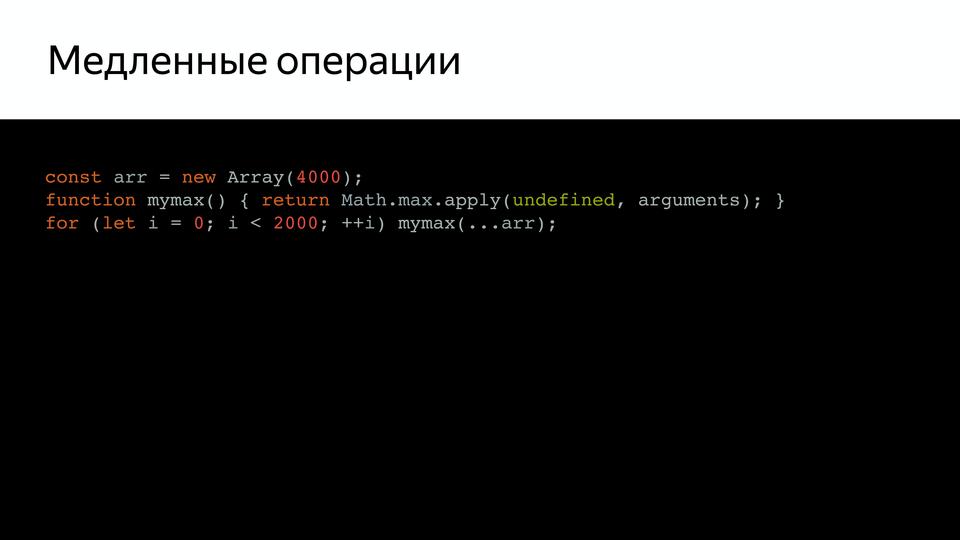

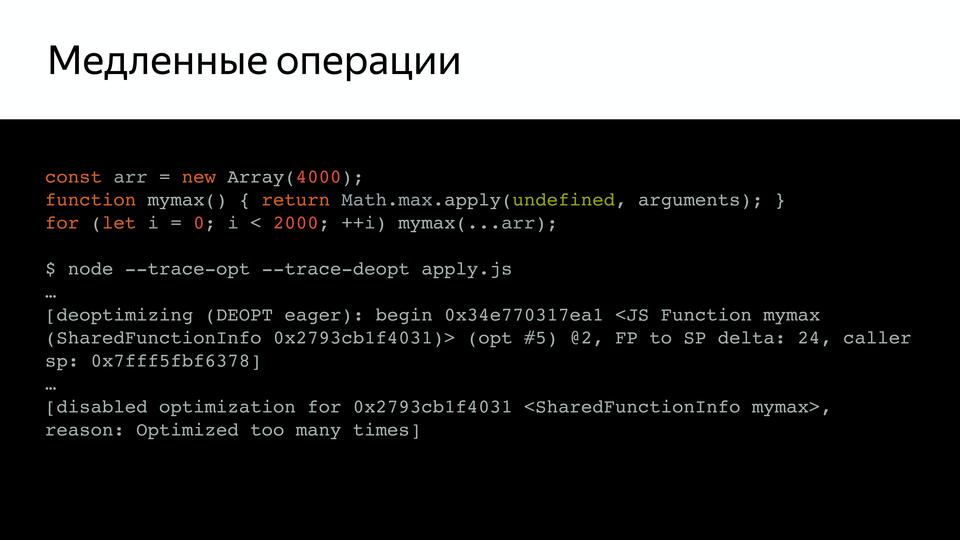

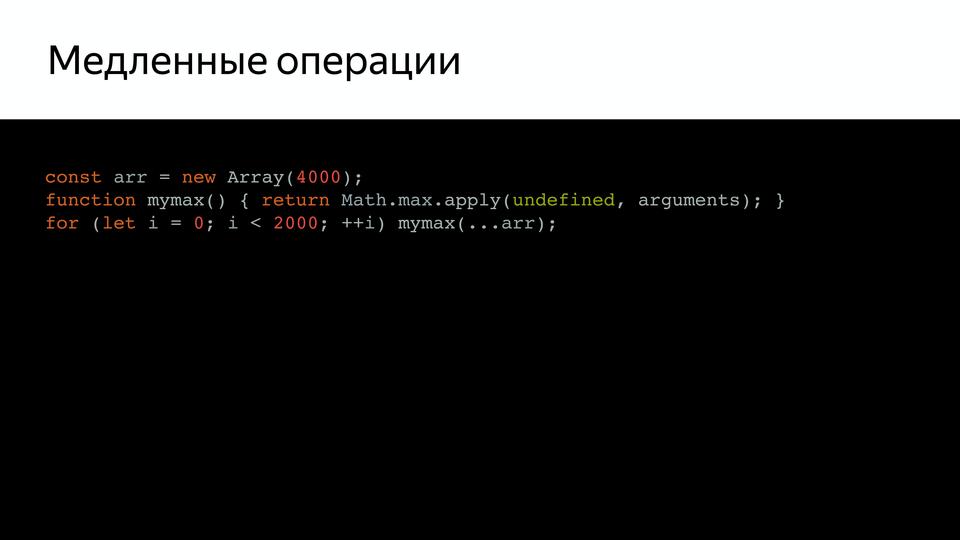

For example, we have such a fairly simple code that calls mymax, and if we check it (using the trace-opt and trace-deopt keys, we show which functions were optimized and which were not).

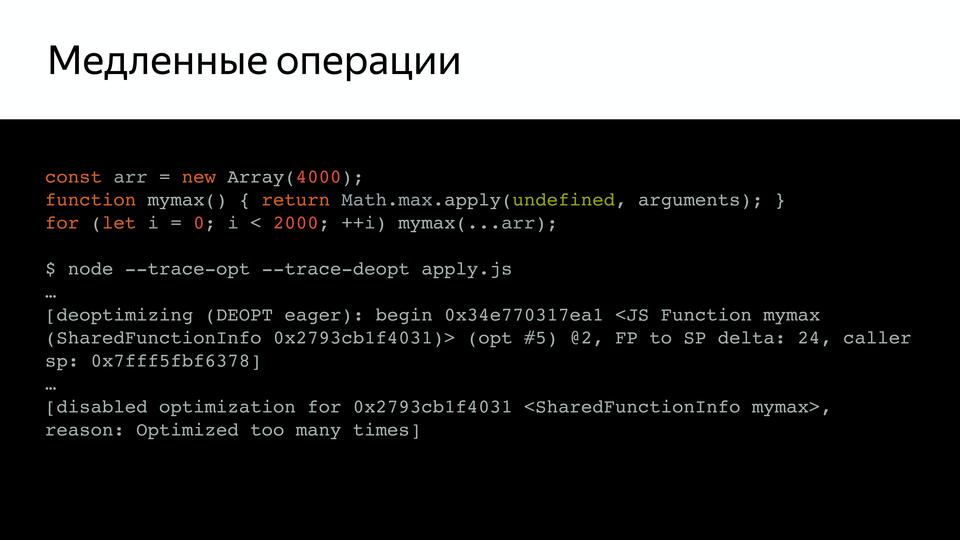

We can start it with node, and we can also run it with D8, a special environment where the V8 works separately from the browser. It shows us that the optimization has been disabled. Because too many times it was started on check. What is the problem? It turns out that the pseudo-array arguments is too big, and inside, it turns out, there was a check on the size of this array. Moreover, this test, as Benedikt Meurer (the leading developer of Turbofan) said, did not make any sense, it simply went over copypaste over the years.

And why is the length limited? After all, the stack size is not checked, nothing, that was just so limited. This is an unexpected behavior that was necessary to get rid of.

Another example, here we have a dispatcher that calls two callbacks. Also, if we call it, we will see that it has been de-optimized. What is the problem here? That one function is strict, and the second is not strict. And they have different hidden classes in the old compiler. Those. he considers them different. And in this case, he also goes on deoptimization. And this, and the previous code, it is written in principle correctly, but it is de-optimized. This was unexpected.

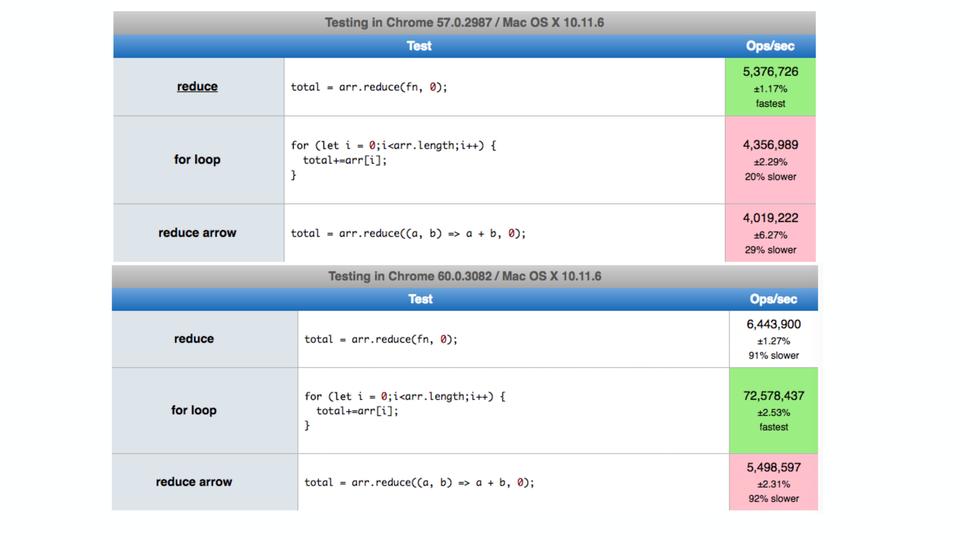

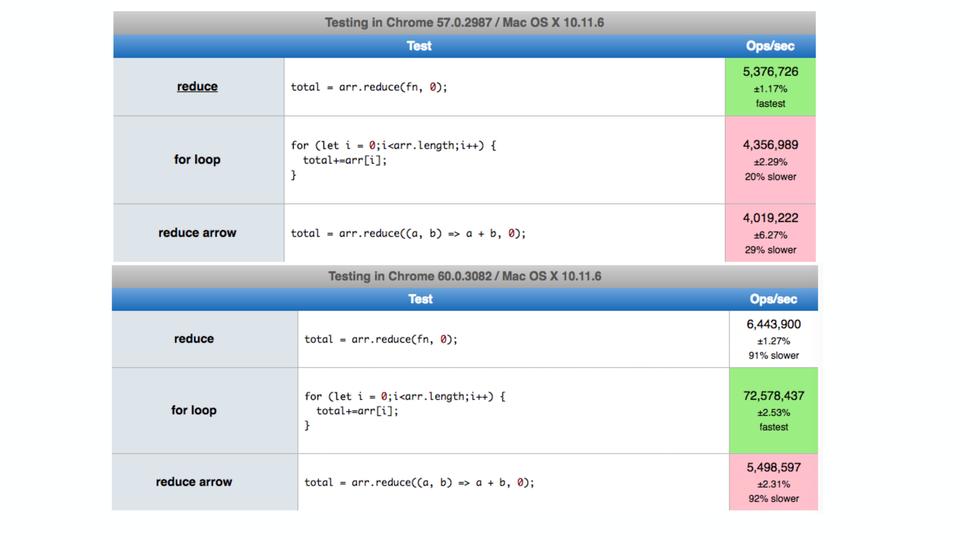

There was another example on Twitter, when it turned out that in some cases the for loop in chrome worked even slower than reduce. Although we know that reduce is slower. It turned out the problem was that let was used inside for - unexpectedly. I even put the latest version at that time and the result is already good - corrected.

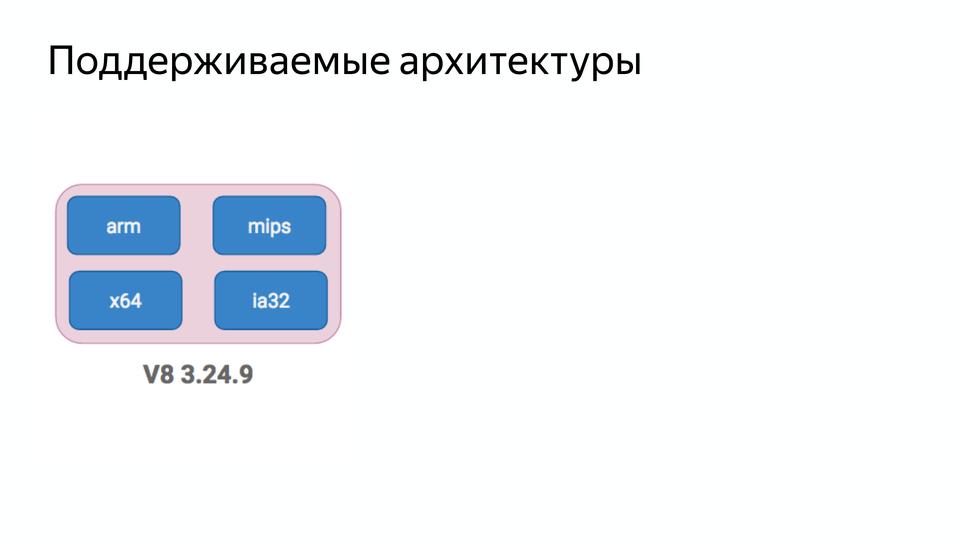

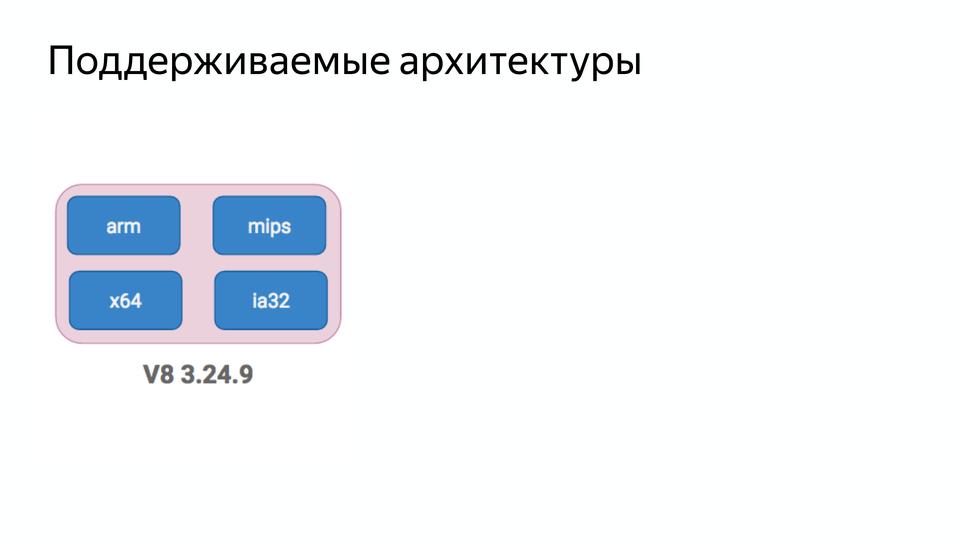

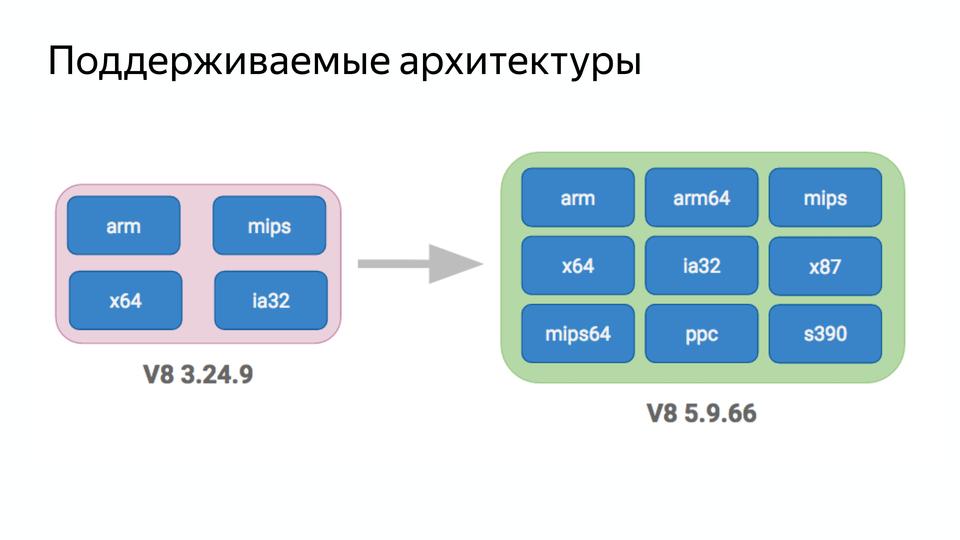

The next item was to reduce complexity. Here we had version V8 3.24.9 and it supported four architectures.

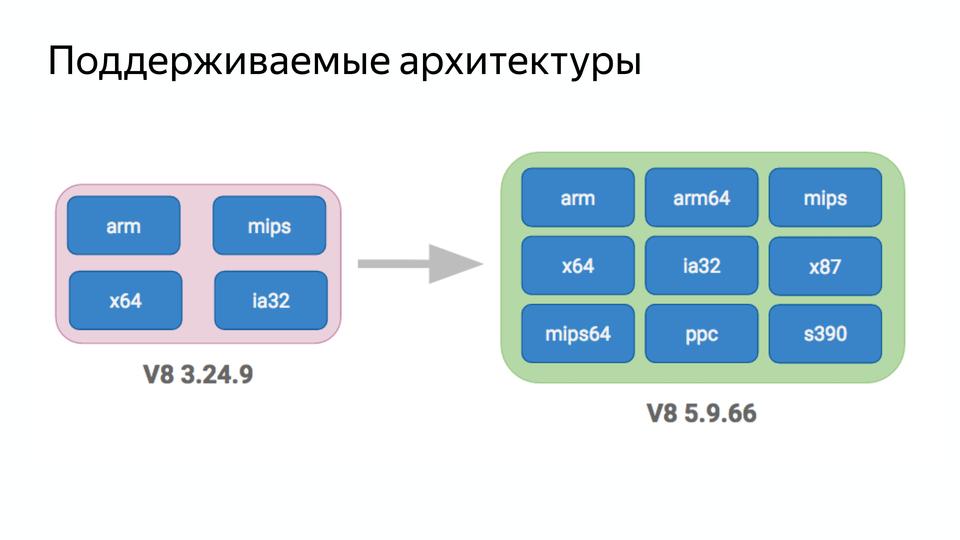

Now V8 supports nine architectures!

And the code has been accumulating over the years. It was written in part in C, Assembler, JS, and the developer who came to the team felt like this.

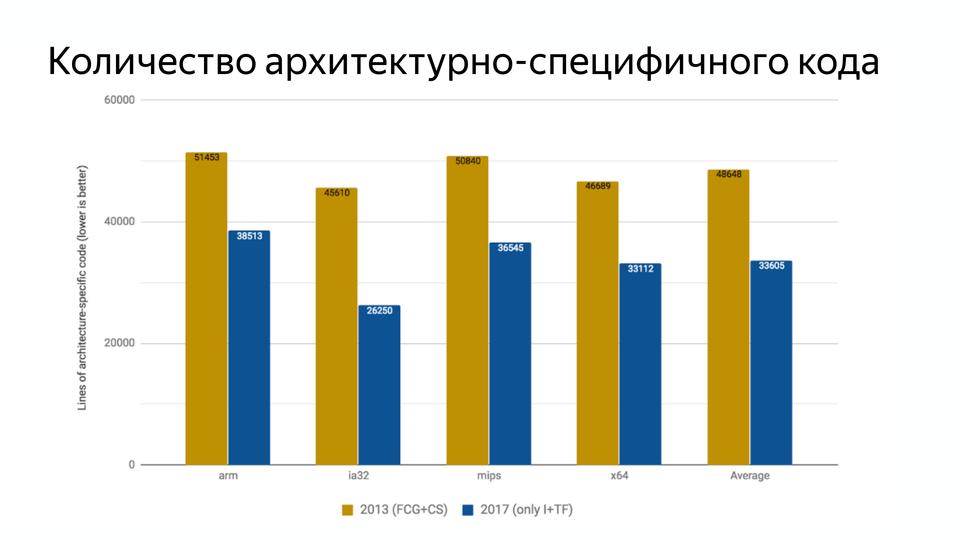

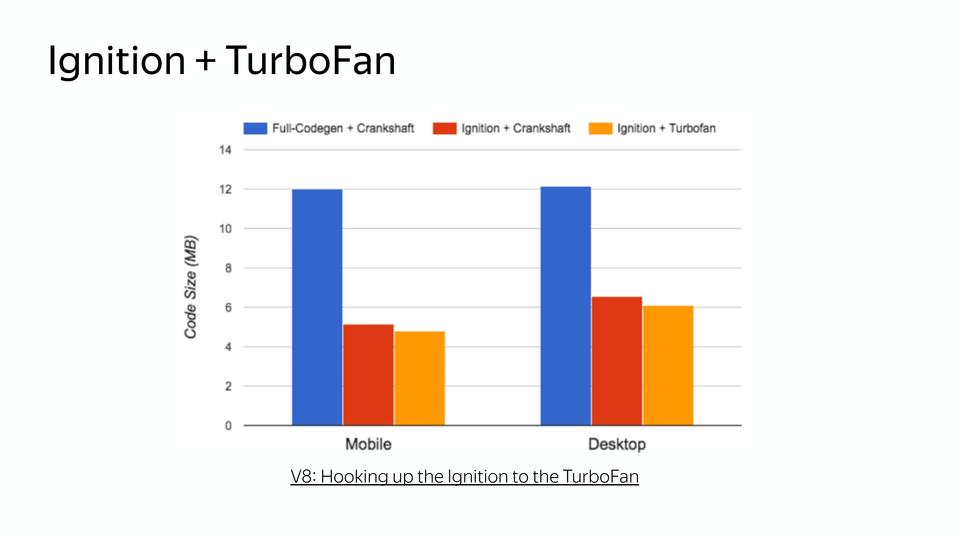

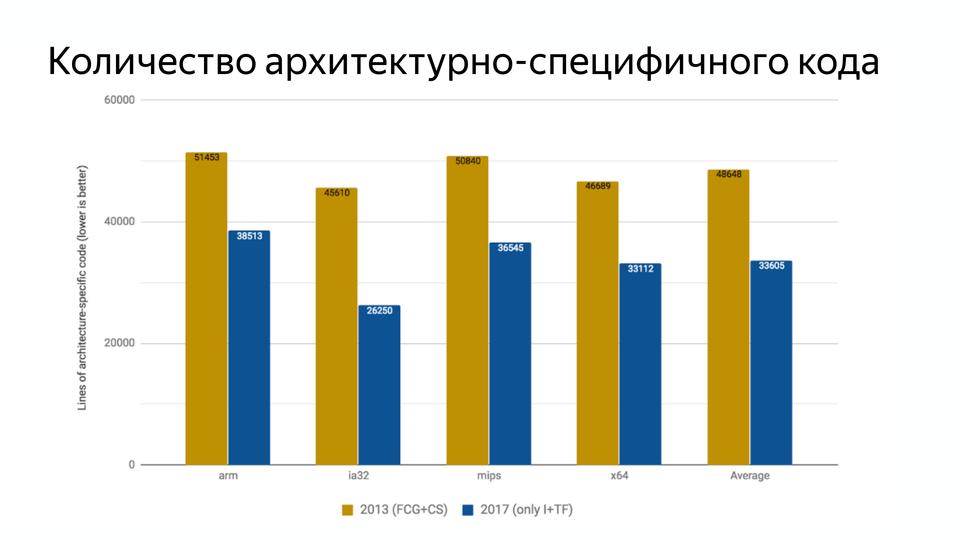

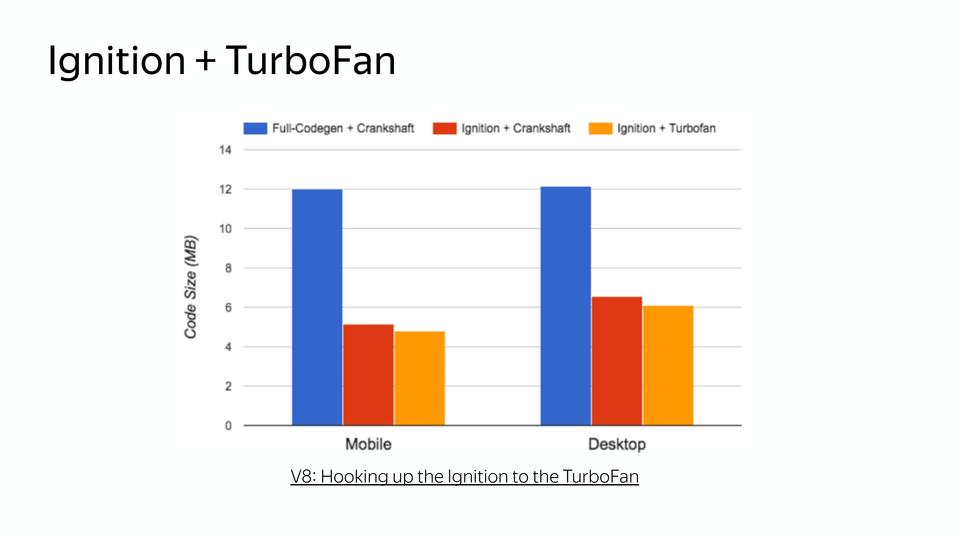

The code must be easily modified in order to be able to respond to changes in the world. And with the introduction of Turbofan, the number of architectural-specific code has decreased.

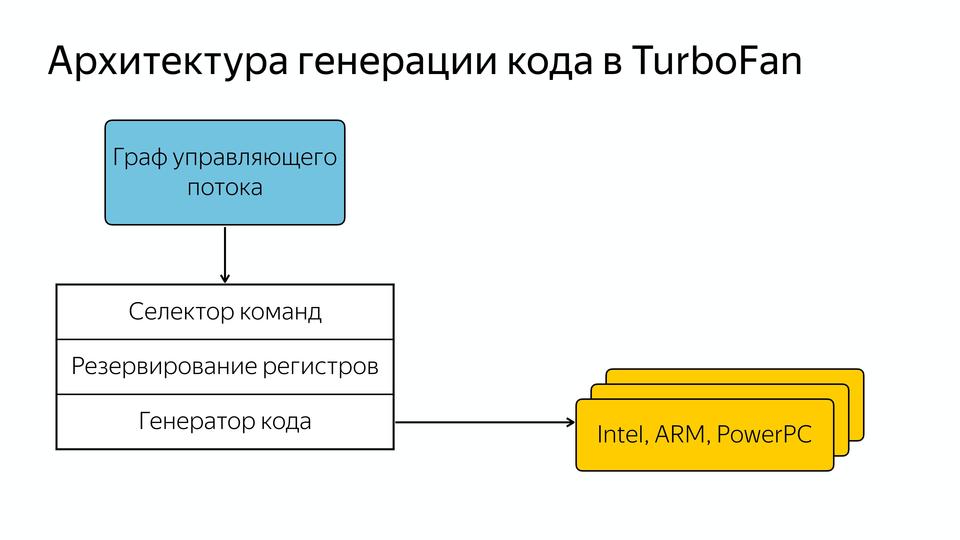

From 2013 to 2017, it was 29% less than the architectural-specific code. This was due to the emergence of a new code generation architecture in Turbofan.

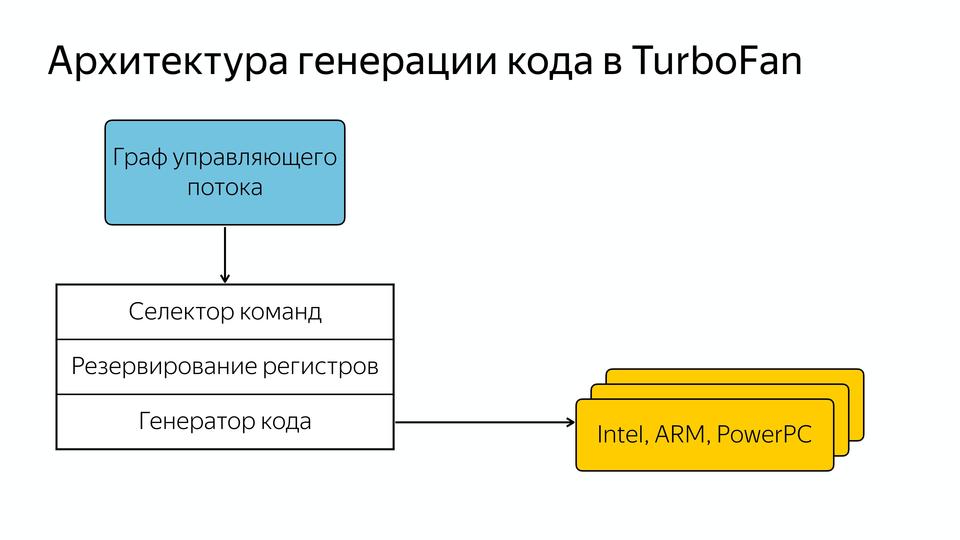

They made it data driven, i.e. we have a control flow graph that contains data and knowledge of what should happen to them. And it gets into the general command selector, then the registers are reserved and then the code is generated for different architectures. Those. the developer no longer needs to know how everything is written for specific architectures, but it is possible to make more general code. That's how it all happened, well improved the code, but gradually a few years after the compiler was written for the interpreted language, it turned out that we still need an interpreter.

And what's the reason? The reason holds Steve Jobs.

This, of course, is not the iPhone itself, but those smartphones that the iPhone has spawned, which have given convenient access to the Internet. And this led to the fact that the number of users on mobile devices exceeded the number on desktops.

And originally compilers were developed for powerful desktops, but not for mobile devices.

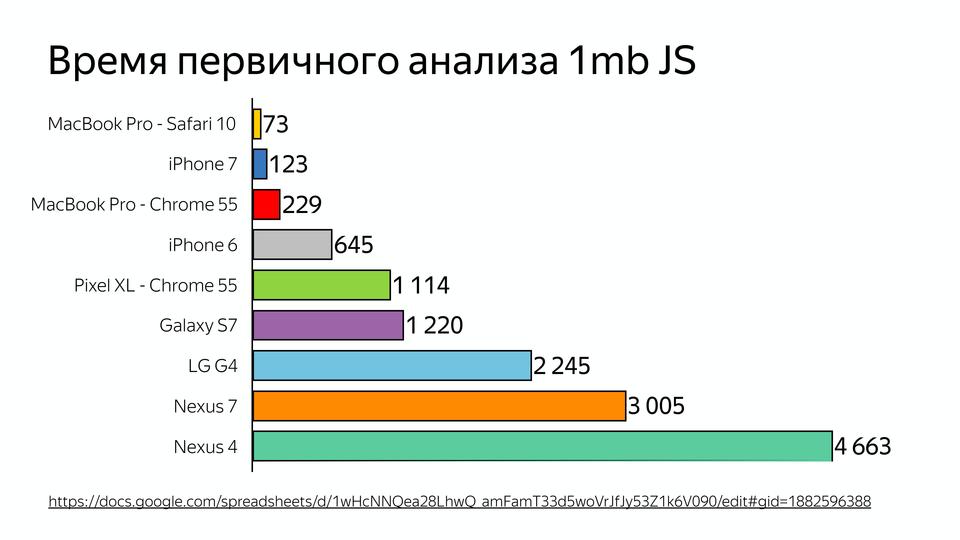

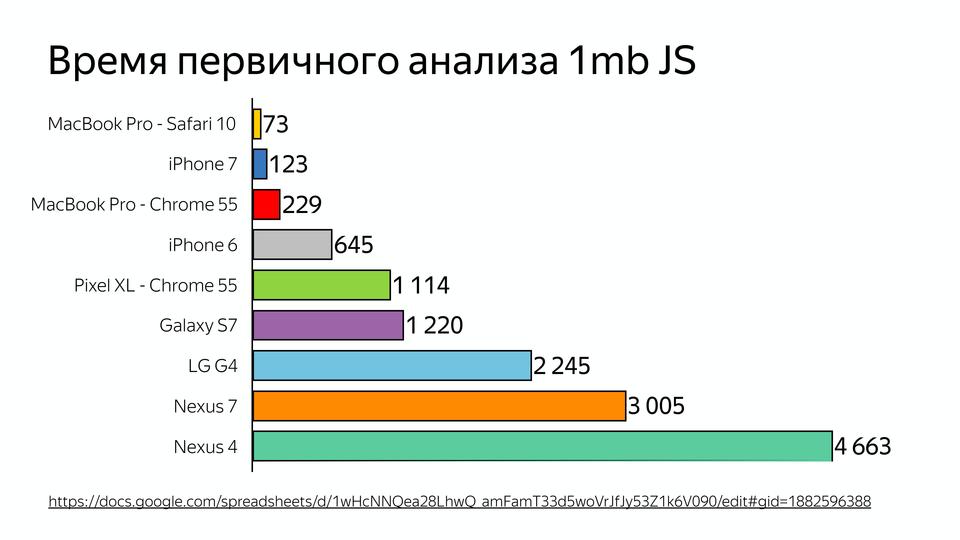

Here is the 1MB JavaScript primary analysis timeline. And recently there was a question why VKontakte does server rendering, and not client. Because the time spent on JS analysis can be 2-5 times longer on mobile devices. And we are talking about top devices, and people often go with very different.

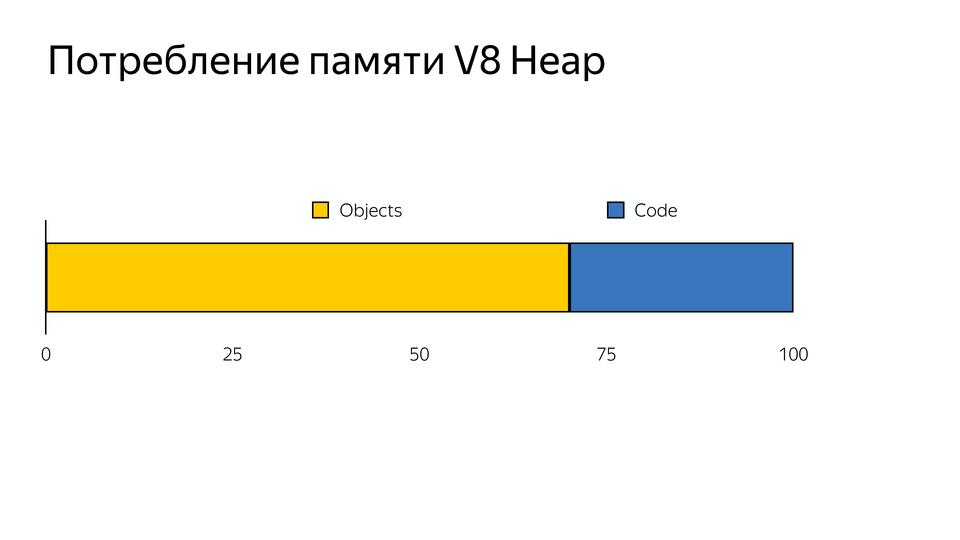

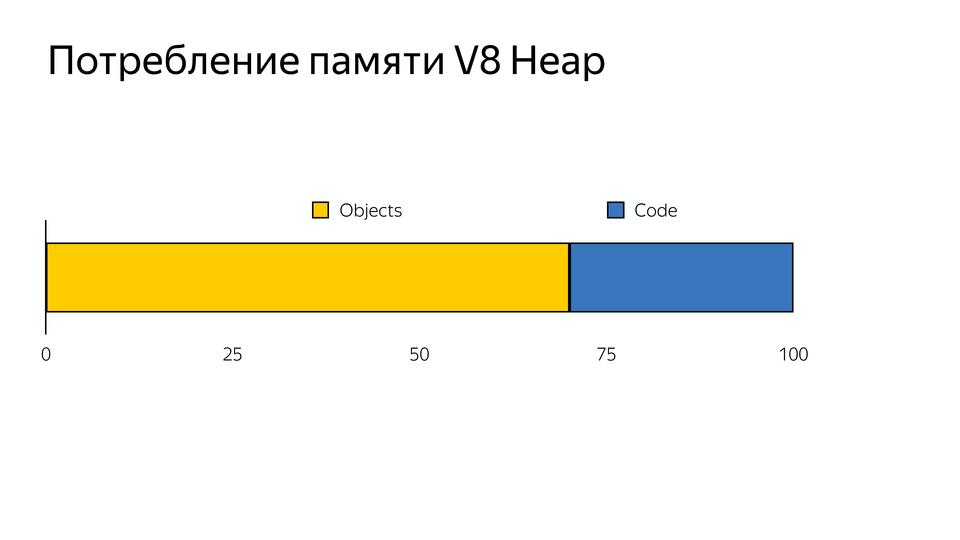

And one more problem: many Chinese memory devices have 512 MB, and if you look at how V8 memory is allocated, another problem appears.

The memory is divided into objects (what our code uses) and code objects (this is what the compiler itself uses - for example, it stores inline caches there). It turns out that 30% of the memory is occupied by the virtual machine to support internal use. We cannot manage this memory, the compiler itself consumes it.

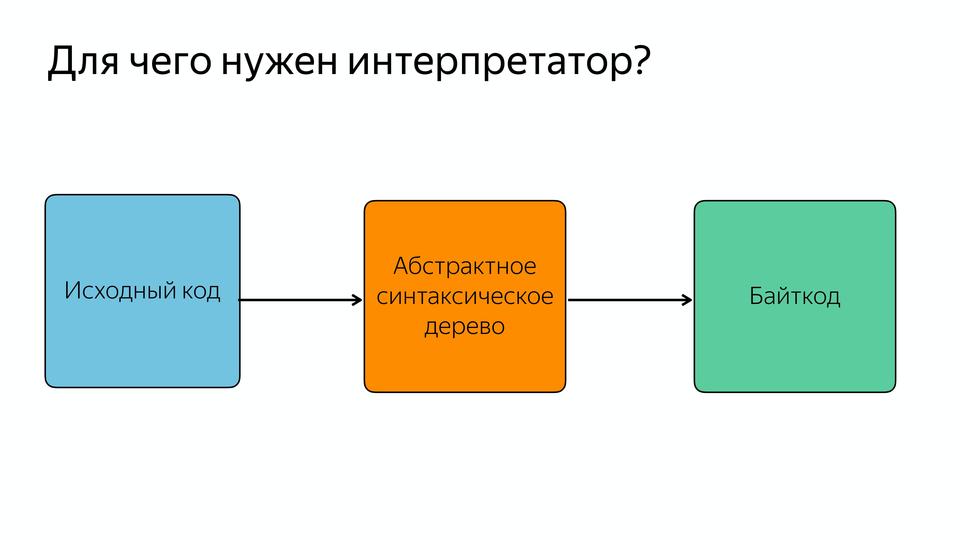

It was necessary to do something with this, and in 2016, the Android development team from London responded with the creation of a new interpreter Ignition.

You may have noticed that the code optimized by the Turbofan compiler does not refer to the parser for the syntax tree, but receives something from the interpreter. He gets a bytecode.

Now the abstract syntax tree is parsed into bytecode, and this JavaScript parsing happens once, then bytecode is used.

If someone does not know, baytkod is a compact platform-independent representation of the program. This is somewhat similar to assembler, only platform-independent. So it is called, because all instructions occupy one byte.

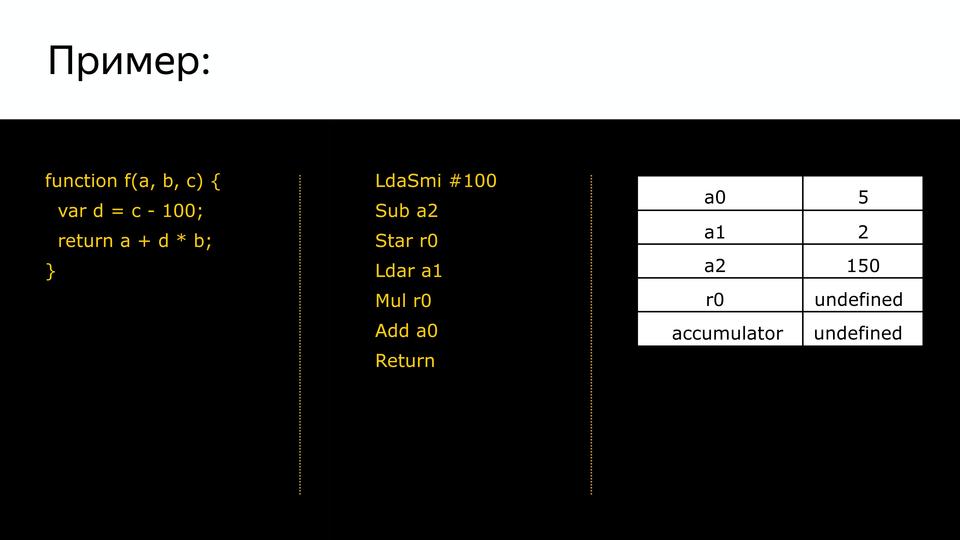

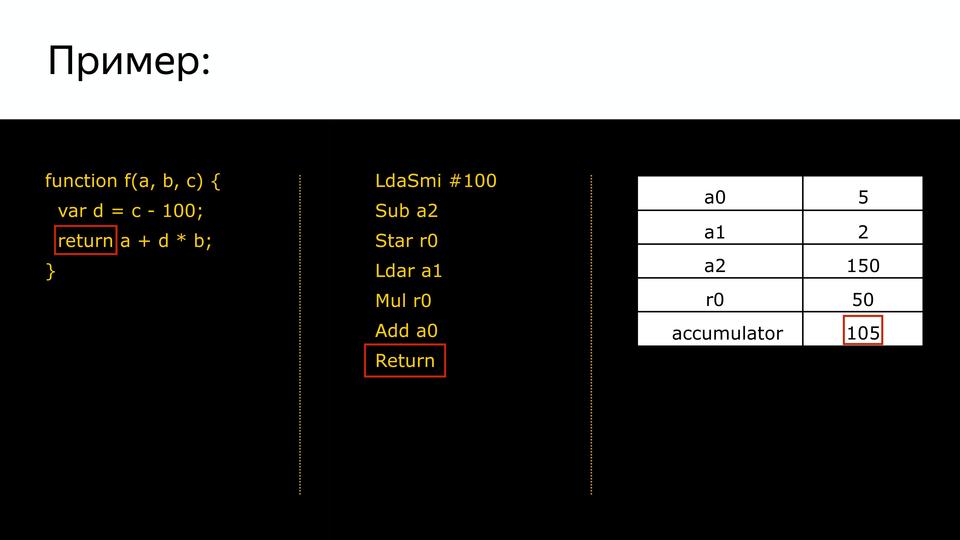

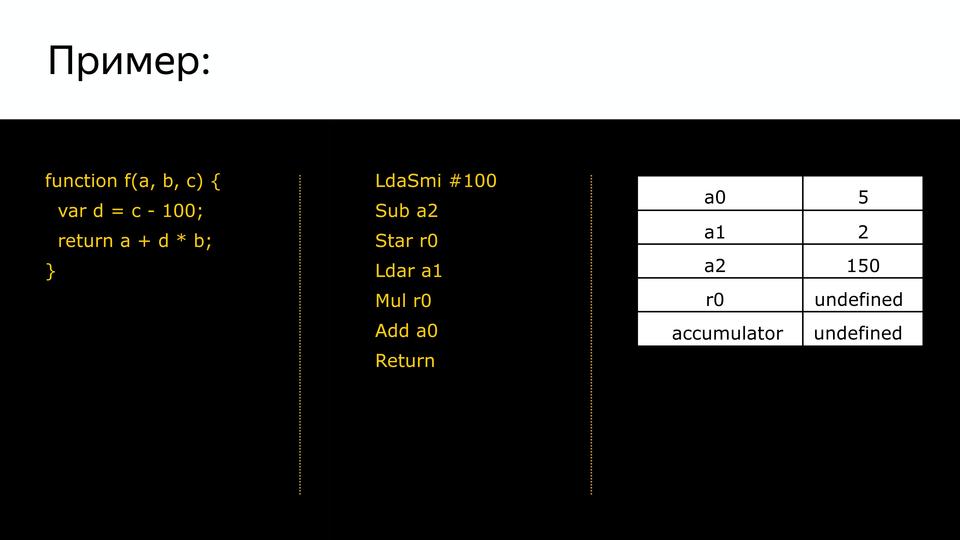

Let's see how bytecode is generated for such a piece of the program.

We have a program, we have generated bytecode, and we have a set of registers.

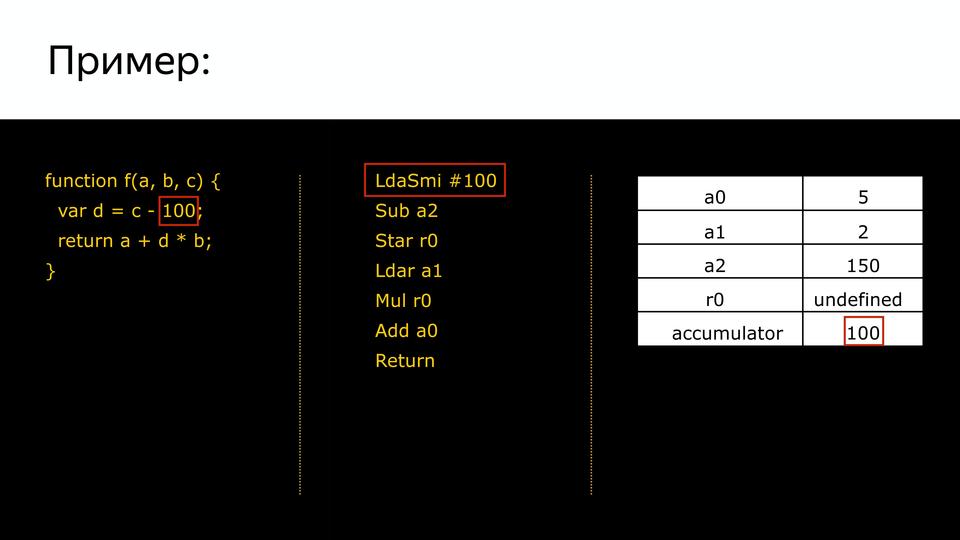

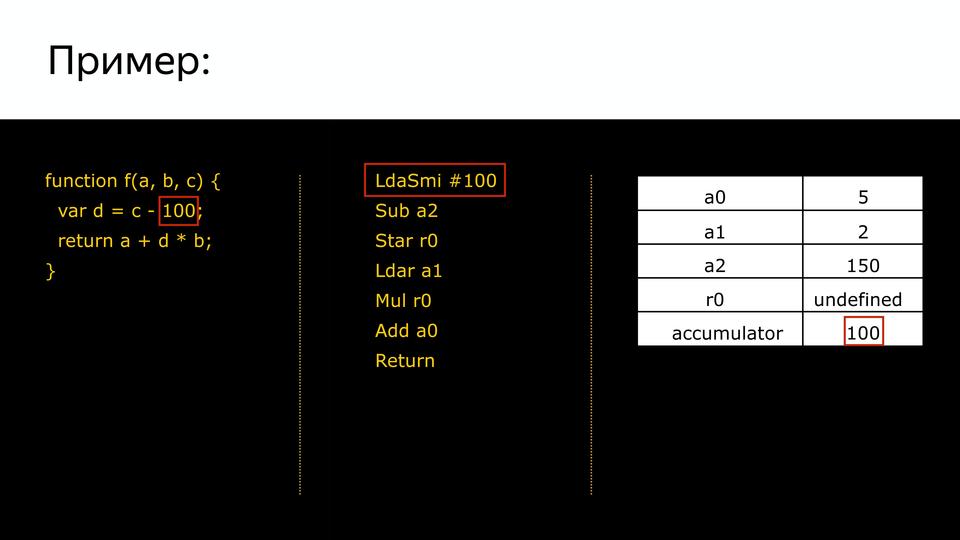

So we set the values for these registers input, which fall into our program. At the first stage, we load into the special register accumulator (it is needed in order not to spend registers once more, but only participates in calculations) smi integer equal to 100.

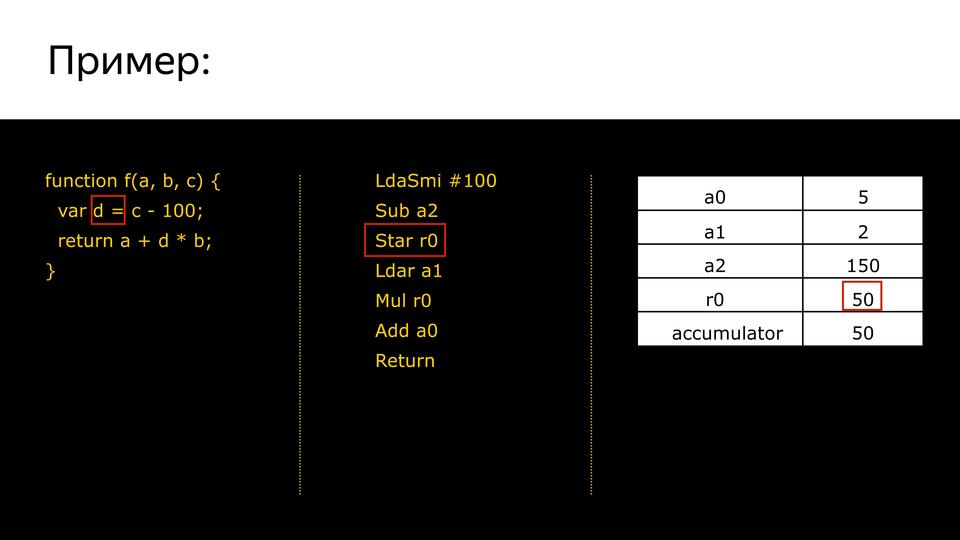

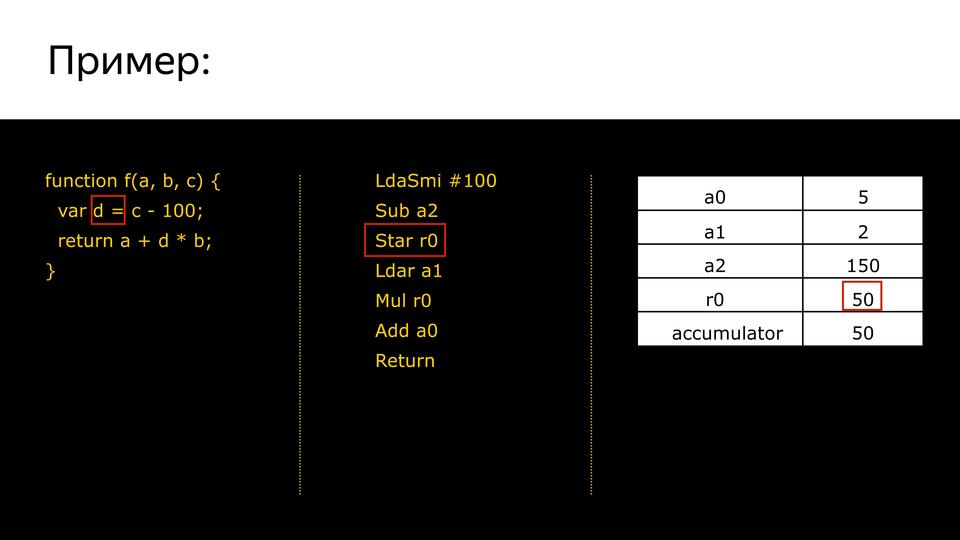

The next command tells us that we need to subtract from the register a2 (on the plate we see 150 there) the previous value of the battery (100). In accumulator, we got 50.

Next, the command tells us what to save in r0. It is associated with the variable d.

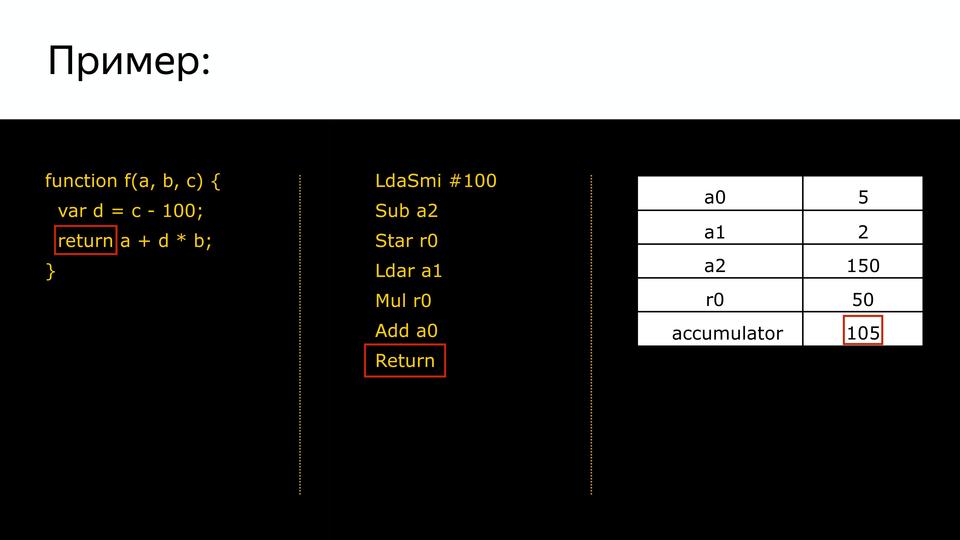

Further it becomes more clear. Again we load the value from b, multiply it by the value accumulator, add a0 and get the output, respectively, 105.

And our entire program turns into a long chain from bytecode. Thus, the memory consumption that was stored on our code was reduced.

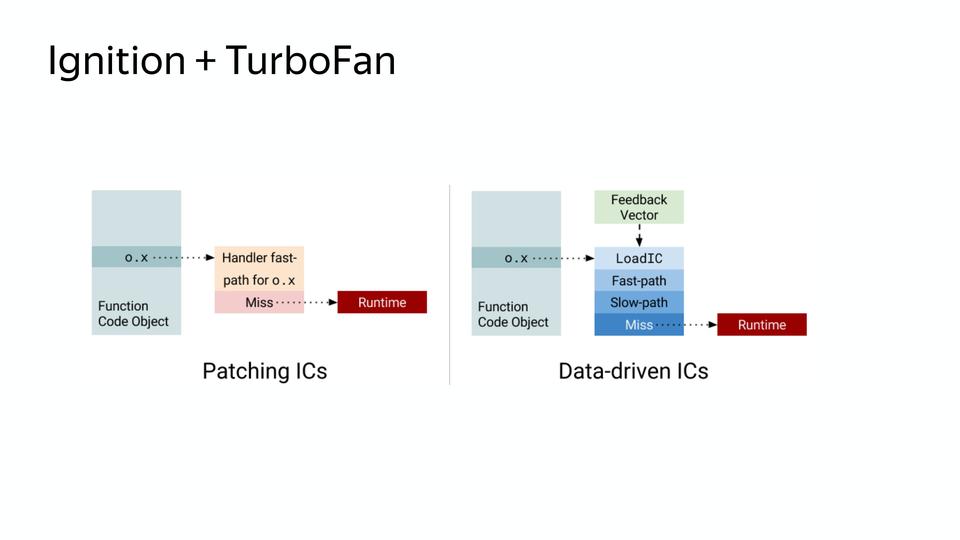

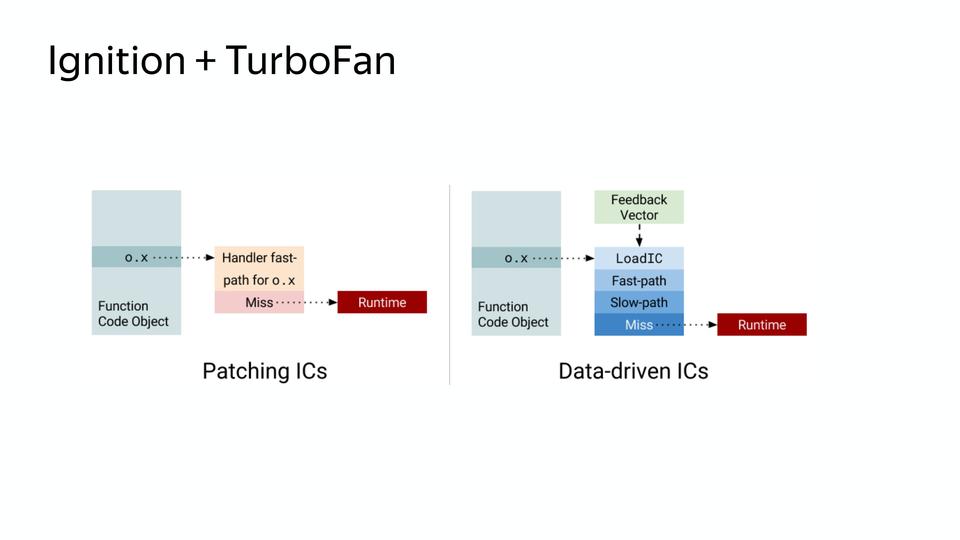

There was a second problem, the memory that inline caches consumed. For this, we switched to new caches - Data-driven IC, which reduce the cost of a slow path. The slow path is how non-optimized code works, fast code when optimized.

On the left we see the old scheme. When we need to find a field in an object, we store knowledge about where it lies in the object, somewhere we store this object and know how to handle it. In the new scheme there is a control vector in which there are data and commands, and knowledge of what to do with these commands. And it goes on loading inline caches, on the fast path, if deoptimization, then on the slow path. And, accordingly, this scheme no longer requires storing references to objects, and it turns out to be more compact. As a result, after the introduction of the scheme, memory consumption on the same code decreased.

And finally, this year the scheme has become much simpler.

Here we always work in the Turbofan compiler. You can see that the FullCodegen compiler used to know the entire JS, and the Crankshaft compiler is only part of the JS, and now the Turbofan compiler knows the entire JS and works with all JS. If it cannot optimize, it issues a non-optimized code, if it can, then, accordingly, it is optimized. And for the code, he turns to the interpreter.

We have classes that are not visible (many people know that there are new classes in ES6, but this is just sugar). They must be monitored, because the code for good performance must be monomorphic, not polymorphic. Those. if the classes that enter the function change, their hidden class changes - for objects that fall into our function, the code becomes polymorphic and it is poorly optimized. If our objects come of the same type of hidden class, then, accordingly, the code is monomorphic.

In V8, the code passes through the interpreter and the JIT compiler. The task of the JIT compiler is to make the code faster. The JIT compiler runs our code in a loop and each time, based on the data it received last time, it tries to make the code better. He fills him with knowledge of the types he gets during the first run, and due to this he makes some optimizations. Inside it are pieces that are oriented to work with maximum performance. If we have a + b in the code, it's slow. We know that this is number + number or string + string, we can do it quickly. This is what the JIT compiler does.

The better the optimization, the higher the overhead (time, memory). The task of the interpreter is to reduce the overhead of memory. Even with the advent of Turbofan, some optimizations were abandoned, which were previously, because they decided to increase the basic performance and slightly reduce the peak.

The compiler has two modes of operation - cold and hot. Cold is when our function is started for the first time. If the function has been run several times, the compiler understands that it is already hot, and tries to optimize it. There is an ambush with tests. When developers chase tests many times and get any data, this is already optimized hot code. But in reality, this code can be called once or twice and show completely different performance. This must be considered.

With the monomorphic code the same example. That is, when we write code, we can help our compiler. We can write code as if we have a typed language (do not override variables, do not write different types to them, always return the same type).

That's all the main secrets.

Reading links

github.com/v8/v8/wiki/TurboFan

http://benediktmeurer.de/

http://mrale.ph/

http://darksi.de/

https://medium.com/@amel_true

If you like JS just as we do, and with pleasure delve into all its interior, you may be interested in these reports at the upcoming Moscow conference HolyJS :

The material is based on the author's report at the conference HolyJS 2017, which was held in St. Petersburg on June 2-3. Presentation in pdf can be found at this link .

A few months ago, the movie The Last Dragon Slayer was released. There, if the protagonist kills the dragon, then the magic disappears in the world. I want to act as an antagonist today, I want to kill the dragon, because in the JavaScript world there is no place for magic. Everything that works, works explicitly. We need to figure out how it works in order to understand how it works.

')

I want to share with you my passion. At some point in time, I realized that I don’t know how it works under the hood of a V8. I began to read literature, watch reports, which are mostly in English, have accumulated knowledge, systematized and want to bring them to you.

Interpreted or compiled language?

I hope everyone knows the difference, but I repeat. Compiled languages: source code in them is converted by the compiler into machine code and written to a file. They use compilation before execution. What is the advantage? It does not need to be recompiled, it is as automated as possible for the system under which it is compiled. What is the disadvantage? If your operating system changes, and if you do not have the source code, you lose the program.

Interpreted languages - when the source code is executed by an interpreter program. The advantages are that it is easy to achieve cross-platform. We deliver our source code as is, and if there is an interpreter in this system, then the code will work. JavaScript is, of course, interpretable.

Immerse yourself in history. In 2008, Chrome browser comes out. That year, Google presented the new V8 engine. In 2009, Node.js was introduced on the same engine, which consisted of V8 and the libUV library, which provides io, i.e. accessing files, network stuff, etc. In general, two very important things for us are built on the V8 engine. Let's see what it consists of.

In 2008, the engine was pretty simple inside. Well, relatively simple - his scheme was simple. The source code got into the parser, from the parser into the compiler, and at the output we received a semi-optimized code. Semi-optimized, because there wasn’t good optimization yet. Perhaps, in those years, we had to write the best JavaScript, because it was impossible to hope that the optimizer would optimize it internally.

What does a parser need in this scheme?

The parser is needed in order to turn the source code into an abstract syntax tree or AST. AST is a tree in which all vertices are operators, and all leaves are operands.

Let's look at an example of a mathematical expression. We have such a tree, all vertices are operators, branches are operands. What makes it good is that it is very easy to generate machine code from it later. Who worked with the Assembler, knows that most often the instruction consists of what to do and what to do.

And here we can just see if we are an operator or operand at the current point. If this is an operator, we look at its operands and assemble the command.

What happens in JavaScript if we have, for example, an array, and we request an element from it at index 1? An abstract syntax tree appears in which the operator “load a property by key” and the operands are the object and the key by which we load this property.

Why in javascript compiler?

As I said, we have an interpreted language, but in its scheme we see the compiler. Why is he? There are actually two types of compilers. There are compilers (ahead-of-time) that compile before execution, and JIT compilers that compile at run time. And due to JIT compilation, good acceleration is obtained. What is it for? Let's compare.

There is one and the same code. One on Pascal, another on JavaScript. Pascal is a great language. I think that it is necessary to learn how to program from it, but not with JavaScript. If you have a person who wants to learn how to program, then show him Pascal or C.

What's the Difference? Pascal can be both compiled and interpretable, and JavaScript already requires interpretation. The most important difference is static typing.

Because when we write on Pascal, we specify the variables that are needed, and then we write their types. Then the compiler can easily build a good optimized code. How do we refer to variables in memory? We have an address, and we have a shift. For example, Integer 32, then we make a shift to 32 at this address in memory and receive data.

In JavaScript there is no, we always change types at runtime, and the compiler, when it executes this code, the first time it performs it as it is, but collects information about types. And the second time, when he performs the same function, he is already based on the data he received last time, by assuming what types were there, can do some kind of optimization. If everything is clear with variables, they are determined by value, then what about our objects?

After all, we have JavaScript, it has a prototype model, and we have no classes for objects. In fact, there are, but they are not visible. This is the so-called Hidden Classes. They are visible only to the compiler itself.

How are Hidden Classes created?

We have a point - this is a constructor, and objects are created. First a hidden class is created that contains only the point itself.

Next, we set the property of this object x and from the fact that we had a hidden class, the following hidden class is created that contains x.

Next we set y and, accordingly, we get another hidden class, which contains x and y.

So we got three hidden class. After that, when we create a second object using the same constructor, the same thing happens. There are already hidden classes, they no longer need to be created, you just need to match them. So that later we know that these two objects are identical in structure. And you can work with them.

But what happens when we later add a property to the p2 object later? A new hidden class is created, i.e. p1 and p2 are no longer alike. Why is it important? Because when the compiler will iterate through the point loop, and now it will have all the same as p1, it twists, twists, twists, bumps into p2, and it has another hidden class, and the compiler goes into deoptimization, because he didn't get what he expected.

This is the so-called duck typing. What is duck typing? The expression came from American slang, if something goes like a duck, quacks like a duck, then it's a duck. Those. if p1 and p2 are the same in structure, they belong to the same class. But once we add p2 to the structure, these ducks quack in different ways, respectively, these are different classes.

And here we received data on which classes the objects belong to, and received data on what kind of variables, where to use this data and how to store them. For this system is used Inline Caches.

Consider how the creation of Inline Caches for this part. At first, when our code is analyzed, it is supplemented with such calls. This is only initialization. We still do not know what type we will have our Inline Caches.

We can say that in this place initiate it, here is the download of this.primes:

Here is the download by key:

And then the BinaryOperation operation does not mean that it is binary, it means that it is a binary and not a unary operation. An operation that has left and right sides.

What happens at run time?

When the code arrives, this is all replaced by pieces of code that we already have inside the compiler, and the compiler knows how to work well with this particular case if we have information about the type. That is, it is replaced here with a call to a code that knows how to get primes from an object:

Here it is replaced by code that knows how to get an element from an SMI array:

Here is a code that knows how to calculate the remainder of the division of two SMIs:

It will already be optimized. And this is how the compiler worked, saturating it with such pieces.

This, of course, gives some overhead, but also gives performance.

We have developed the Internet, the number of JavaScript has increased, greater performance was required, and Google responded with the creation of a new Crankshaft compiler.

The old compiler began to be called FullCodegen, because it works with a full code base, it knows all of JavaScript, how to compile it. And it produces a non-optimized code. If he stumbles upon a function that is called several times, he thinks that it has become hot, and he knows that the Crankshaft compiler can optimize it. And he gives knowledge of types and that this function can be optimized in the new Crankshaft compiler. Next, the new compiler re-receives the abstract syntax tree. It is important that it does not receive from the old AST compiler, but goes again and requests AST. And knowing about types, it does optimization, and at the output we get optimized code.

If he cannot do optimization, he will fall into deoptimization. When does this happen? This is how I said earlier, for example, in our Hidden Class cycle is spinning, then something unexpected and we fell out into de-optimization. Or, for example, many people like to do a check when we have something on the left side, and we take, for example, the length, i.e. we check if we have a string, and take its length. What is bad? Because when we have no line, then on the left side we get a Boolean and the output is a Boolean, and before that there was a Number. And in this case, we fall into deoptimization. Or he met the code, can not optimize it.

By the example of the same code. Here we had a code rich inline-caches, it is all inline in the new compiler.

He puts it all inline. Moreover, this compiler is a speculative optimizing compiler. What does he speculate on? He speculates on knowledge of types. He assumes that if we called 10 times with this type, then this type will continue. Everywhere there are such checks that the type that he expected came, and when a type came that he did not expect, he falls into de-optimization. These improvements gave a good performance boost, but gradually the team involved in the V8 engine realized that everything had to start from scratch. Why? There is such a way of software development when we write the first version, and we write the second version from scratch, because we understood how to write. And created a new compiler - Turbofan in 2014.

We have the source code that goes into the parser, then to the FullCodegen compiler. So it was before that, no difference. At the output we get a non-optimized code. If we can do any optimization, then we leave in two compilers, Crankshaft and Turbofan. FullCodegen decides whether the Turbofan compiler can optimize specific things, and if it can, sends it to it, and if it cannot, it sends it to the old compiler. Gradually began to add new designs from ES6. We started by optimizing asm.js into it.

Why do you need a new compiler?

- Improve baseline performance

- Make Predictable Performance

- Reduce source code complexity

What does “improve baseline productivity” mean?

The old compiler was written in those years when we had powerful desktops. And it was tested on tests like octane, synthetic, which checked peak performance. Recently there was a Google I / O conference, and there the manager managing V8 development stated that they refused octane in principle, because it does not correspond to what the compiler actually works with. And this led to the fact that we had a very good peak performance, but the baseline, ie things were not optimized in the code, and when the code, which worked well, ran across such things, there was a significant drop in performance. And there are a lot of such operations, here are a few of them: forEach, map, reduce. They are written in ordinary JS, stuffed with checks, much slower than for. Often advised to use for.

Slow bind operation - it is implemented inside, it turns out to be absolutely terrible. Many frameworks have written their bind implementations. Often people said that I sat down, wrote a bind on my knee and it works faster, surprisingly. Functions containing try {} catch (e) {} (and finally) are very slow.

Often there was such a sign that it is better not to use, so as not to subside performance. Actually, the code is slow because the compiler is not working correctly. And with the advent of Turbofan, you can forget about it, because everything has already been optimized. Also very important: the performance of asynchronous functions has been improved.

Therefore, everyone is waiting for the release of the new node, which has recently been released, and performance with async / await is important there. We have an asynchronous language initially, and we could only use callbacks well. And who writes with the promise, they know that their third-party implementations work faster than the native implementation.

The next task was to make the performance predictable. There was a situation like this: the code that showed itself perfectly on jsPerf, when inserted into the working code, showed a completely different performance. But there are other cases where we could not guarantee that our code would work as productively as we had originally intended.

For example, we have such a fairly simple code that calls mymax, and if we check it (using the trace-opt and trace-deopt keys, we show which functions were optimized and which were not).

We can start it with node, and we can also run it with D8, a special environment where the V8 works separately from the browser. It shows us that the optimization has been disabled. Because too many times it was started on check. What is the problem? It turns out that the pseudo-array arguments is too big, and inside, it turns out, there was a check on the size of this array. Moreover, this test, as Benedikt Meurer (the leading developer of Turbofan) said, did not make any sense, it simply went over copypaste over the years.

And why is the length limited? After all, the stack size is not checked, nothing, that was just so limited. This is an unexpected behavior that was necessary to get rid of.

Another example, here we have a dispatcher that calls two callbacks. Also, if we call it, we will see that it has been de-optimized. What is the problem here? That one function is strict, and the second is not strict. And they have different hidden classes in the old compiler. Those. he considers them different. And in this case, he also goes on deoptimization. And this, and the previous code, it is written in principle correctly, but it is de-optimized. This was unexpected.

There was another example on Twitter, when it turned out that in some cases the for loop in chrome worked even slower than reduce. Although we know that reduce is slower. It turned out the problem was that let was used inside for - unexpectedly. I even put the latest version at that time and the result is already good - corrected.

The next item was to reduce complexity. Here we had version V8 3.24.9 and it supported four architectures.

Now V8 supports nine architectures!

And the code has been accumulating over the years. It was written in part in C, Assembler, JS, and the developer who came to the team felt like this.

The code must be easily modified in order to be able to respond to changes in the world. And with the introduction of Turbofan, the number of architectural-specific code has decreased.

From 2013 to 2017, it was 29% less than the architectural-specific code. This was due to the emergence of a new code generation architecture in Turbofan.

They made it data driven, i.e. we have a control flow graph that contains data and knowledge of what should happen to them. And it gets into the general command selector, then the registers are reserved and then the code is generated for different architectures. Those. the developer no longer needs to know how everything is written for specific architectures, but it is possible to make more general code. That's how it all happened, well improved the code, but gradually a few years after the compiler was written for the interpreted language, it turned out that we still need an interpreter.

And what's the reason? The reason holds Steve Jobs.

This, of course, is not the iPhone itself, but those smartphones that the iPhone has spawned, which have given convenient access to the Internet. And this led to the fact that the number of users on mobile devices exceeded the number on desktops.

And originally compilers were developed for powerful desktops, but not for mobile devices.

Here is the 1MB JavaScript primary analysis timeline. And recently there was a question why VKontakte does server rendering, and not client. Because the time spent on JS analysis can be 2-5 times longer on mobile devices. And we are talking about top devices, and people often go with very different.

And one more problem: many Chinese memory devices have 512 MB, and if you look at how V8 memory is allocated, another problem appears.

The memory is divided into objects (what our code uses) and code objects (this is what the compiler itself uses - for example, it stores inline caches there). It turns out that 30% of the memory is occupied by the virtual machine to support internal use. We cannot manage this memory, the compiler itself consumes it.

It was necessary to do something with this, and in 2016, the Android development team from London responded with the creation of a new interpreter Ignition.

You may have noticed that the code optimized by the Turbofan compiler does not refer to the parser for the syntax tree, but receives something from the interpreter. He gets a bytecode.

Now the abstract syntax tree is parsed into bytecode, and this JavaScript parsing happens once, then bytecode is used.

If someone does not know, baytkod is a compact platform-independent representation of the program. This is somewhat similar to assembler, only platform-independent. So it is called, because all instructions occupy one byte.

Let's see how bytecode is generated for such a piece of the program.

We have a program, we have generated bytecode, and we have a set of registers.

So we set the values for these registers input, which fall into our program. At the first stage, we load into the special register accumulator (it is needed in order not to spend registers once more, but only participates in calculations) smi integer equal to 100.

The next command tells us that we need to subtract from the register a2 (on the plate we see 150 there) the previous value of the battery (100). In accumulator, we got 50.

Next, the command tells us what to save in r0. It is associated with the variable d.

Further it becomes more clear. Again we load the value from b, multiply it by the value accumulator, add a0 and get the output, respectively, 105.

And our entire program turns into a long chain from bytecode. Thus, the memory consumption that was stored on our code was reduced.

There was a second problem, the memory that inline caches consumed. For this, we switched to new caches - Data-driven IC, which reduce the cost of a slow path. The slow path is how non-optimized code works, fast code when optimized.

On the left we see the old scheme. When we need to find a field in an object, we store knowledge about where it lies in the object, somewhere we store this object and know how to handle it. In the new scheme there is a control vector in which there are data and commands, and knowledge of what to do with these commands. And it goes on loading inline caches, on the fast path, if deoptimization, then on the slow path. And, accordingly, this scheme no longer requires storing references to objects, and it turns out to be more compact. As a result, after the introduction of the scheme, memory consumption on the same code decreased.

And finally, this year the scheme has become much simpler.

Here we always work in the Turbofan compiler. You can see that the FullCodegen compiler used to know the entire JS, and the Crankshaft compiler is only part of the JS, and now the Turbofan compiler knows the entire JS and works with all JS. If it cannot optimize, it issues a non-optimized code, if it can, then, accordingly, it is optimized. And for the code, he turns to the interpreter.

We have classes that are not visible (many people know that there are new classes in ES6, but this is just sugar). They must be monitored, because the code for good performance must be monomorphic, not polymorphic. Those. if the classes that enter the function change, their hidden class changes - for objects that fall into our function, the code becomes polymorphic and it is poorly optimized. If our objects come of the same type of hidden class, then, accordingly, the code is monomorphic.

In V8, the code passes through the interpreter and the JIT compiler. The task of the JIT compiler is to make the code faster. The JIT compiler runs our code in a loop and each time, based on the data it received last time, it tries to make the code better. He fills him with knowledge of the types he gets during the first run, and due to this he makes some optimizations. Inside it are pieces that are oriented to work with maximum performance. If we have a + b in the code, it's slow. We know that this is number + number or string + string, we can do it quickly. This is what the JIT compiler does.

The better the optimization, the higher the overhead (time, memory). The task of the interpreter is to reduce the overhead of memory. Even with the advent of Turbofan, some optimizations were abandoned, which were previously, because they decided to increase the basic performance and slightly reduce the peak.

The compiler has two modes of operation - cold and hot. Cold is when our function is started for the first time. If the function has been run several times, the compiler understands that it is already hot, and tries to optimize it. There is an ambush with tests. When developers chase tests many times and get any data, this is already optimized hot code. But in reality, this code can be called once or twice and show completely different performance. This must be considered.

With the monomorphic code the same example. That is, when we write code, we can help our compiler. We can write code as if we have a typed language (do not override variables, do not write different types to them, always return the same type).

That's all the main secrets.

Reading links

github.com/v8/v8/wiki/TurboFan

http://benediktmeurer.de/

http://mrale.ph/

http://darksi.de/

https://medium.com/@amel_true

If you like JS just as we do, and with pleasure delve into all its interior, you may be interested in these reports at the upcoming Moscow conference HolyJS :

- Better, faster, stronger - getting more from the web platform (Martin Splitt)

- The Post JavaScript Apocalypse (Douglas Crockford)

- Testing serverless applications (Slobodan Stojanovic)

Source: https://habr.com/ru/post/338930/

All Articles