Computer vision. Intel Expert Answers

Two weeks ago, we invited Habr readers to put their questions to the creators of the OpenCV computer vision library. Many questions came in, and interesting ones, which shows that this topic matters not only to Intel but to a wide range of developers. Without further ado, here are the answers, and we invite you to discuss them. The authors of the best questions are announced at the very end of the post.


Question almator


Vadim. See the letter_recog.py sample in the OpenCV distribution.

Question almator


Anatoly. OpenCV does not plan to support training networks, only fast, optimized inference. We already have a CNN face detector that runs at more than 100 fps on a modern Core i5 (although we can't release it publicly). I think many current algorithms, whether features, optical flow, RANSAC or anything else, will gradually be augmented with small, very fast (> 5000 fps) auxiliary networks.

Vadim. OpenCV will evolve toward deep learning. Ordinary (shallow) neural networks are a special case, and they are of little interest to us now. I cannot recommend anything in Russian, but I would be grateful if you find something and let us know. There are online courses and books in English, including the deep learning tutorial mentioned in the answer to uzh13.

Question almator


Vadim. A deep network plus augmentation of the training set. That is, you need to collect a set of images of this logo and then expand it artificially many times over. Here, for example, is something found right away via Google.
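To illustrate what "artificially expanding" a small logo set can look like, here is a minimal numpy-only sketch. The specific perturbations (contrast and brightness jitter, sensor-like noise, small random shifts) and their ranges are assumptions chosen for illustration. Mirroring is deliberately left out because a flipped logo is usually not the same logo; real pipelines typically also add rotation, scaling, perspective warps and compositing onto varied backgrounds.

```python
import numpy as np


def augment(image, n_copies, rng, max_shift=8):
    """Return n_copies randomly perturbed versions of an HxW or HxWxC uint8 image."""
    h, w = image.shape[:2]
    out = []
    for _ in range(n_copies):
        img = image.astype(np.float32)
        # Random contrast (gain) and brightness (bias)
        img = img * rng.uniform(0.7, 1.3) + rng.uniform(-30, 30)
        # Additive Gaussian noise, roughly imitating sensor noise
        img += rng.normal(0.0, 5.0, img.shape)
        # Random translation with zero padding, so the logo stays mostly in frame
        dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
        src = img[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
        shifted = np.zeros_like(img)
        shifted[max(0, dy):max(0, dy) + src.shape[0],
                max(0, dx):max(0, dx) + src.shape[1]] = src
        out.append(np.clip(shifted, 0, 255).astype(np.uint8))
    return out


# Usage with a synthetic stand-in for a logo image (BGR, 64x64)
rng = np.random.default_rng(42)
logo = np.full((64, 64, 3), 200, np.uint8)
logo[16:48, 16:48] = (20, 40, 220)
augmented = augment(logo, 100, rng)
```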

Question WEBMETRICA


Anatoly. I don't think it will happen soon. Besides, there is no way to reliably tell how other creatures see the world.

Vadim. At CVPR 2017 there was an interesting paper on using signals read from the human brain for pattern recognition. The authors promised an interesting sequel. Perhaps researchers will soon get around to our smaller brothers, the animals, as well.

Question WEBMETRICA


Vadim. Anything is possible. It seems to me you have to start from a specific task.

Question barabanus


Anatoly. I have known about this problem for about 8 years. As far as I remember, it is impossible to implement; you can try it yourself. You get something like an ambiguous constructor call for an intermediate helper type: the compiler cannot decide which constructor to call and reports an error. You will have to convert to a point manually, via cv::Mat * Point_<...>(Vec_<...>).

Vadim. I suggest submitting a request. Perhaps in this particular case the function was simply overlooked, or it was intentionally disabled so as not to confuse the C++ compiler with the whole set of overloaded '*' operators; that happens sometimes.

Question barabanus


Vadim. Yes, that would be helpful. After review and any necessary revisions, such a patch can be accepted.

Question perfect_genius


Anatoly. Implementing specific networks directly in hardware makes little sense, because progress is so fast that such a chip would become obsolete before it went on sale. But creating instructions that accelerate networks (in the spirit of MMX/SSE/AVX), or even coprocessors, is in my opinion a very logical step. However, we have no information on this.

Vadim. At this stage we know of efforts, in which our colleagues are actively involved, to use existing hardware (CPU, GPU) to accelerate neural network inference. These efforts are quite successful. Accelerated solutions for CPUs (the MKL-DNN library and Intel Caffe built with it) and for GPUs (clDNN) let you run many popular networks, such as AlexNet, GoogLeNet/Inception, ResNet-50, etc., in real time on an ordinary computer without a powerful discrete graphics card, even on an ordinary laptop. Even OpenCV, although it does not yet use these optimized libraries, can run some classification, detection and semantic segmentation networks in real time on a laptop without discrete graphics. Try our samples and see for yourself. Efficient neural network inference on ordinary hardware is closer than many people think.

Question Mikhail063


Vadim. Because there is a mistake somewhere, obviously :) Start by localizing it.

Question KOLANICH


Anatoly. Sometimes classic features can be a quick solution.

Vadim. For analysis tasks, it is better to train a model. For simpler tasks, such as stitching panoramas, classic features such as SIFT are still competitive.

Question vishnerevsky


Vadim. We used standard datasets, which are publicly available. The specific configuration files with file lists have since been lost; many years have passed. The patch adding YOLO v2 is pending; I think we will merge it by the time these answers are published. A sample with MobileNet_SSD is already there. You can also find segmentation samples there.

Question iv_kovalyov


Vadim. See the tip above on logo search. Only here you will most likely need two networks: one for detection and one for subsequent recognition.
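The two-network setup can be organized as a simple pipeline: a detector proposes boxes, each box is cropped with a little margin, and a second model classifies the crop. This is a framework-agnostic sketch; detector and classifier are hypothetical callables standing in for whatever trained networks you use (for example, models loaded with cv2.dnn), and the box format (x, y, w, h, score) is an assumption.

```python
import numpy as np


def detect_and_recognize(image, detector, classifier, min_score=0.5, pad=4):
    """Two-stage pipeline: the detector proposes boxes, the classifier labels crops.

    detector(image) -> iterable of (x, y, w, h, score)   # hypothetical interface
    classifier(crop) -> (label, confidence)              # hypothetical interface
    """
    h_img, w_img = image.shape[:2]
    results = []
    for x, y, w, h, score in detector(image):
        if score < min_score:
            continue  # drop weak detections before the slower recognition stage
        # Pad the box a little and clip it to the image bounds
        x0, y0 = max(0, x - pad), max(0, y - pad)
        x1, y1 = min(w_img, x + w + pad), min(h_img, y + h + pad)
        if x1 <= x0 or y1 <= y0:
            continue
        label, conf = classifier(image[y0:y1, x0:x1])
        results.append({"box": (x0, y0, x1 - x0, y1 - y0), "label": label,
                        "det_score": score, "cls_conf": conf})
    return results


# Usage with stub models (purely illustrative)
img = np.zeros((100, 200, 3), np.uint8)
img[20:60, 30:90] = 255


def fake_detector(image):
    return [(30, 20, 60, 40, 0.9), (150, 70, 20, 20, 0.2)]


def fake_classifier(crop):
    return ("logo" if crop.mean() > 100 else "background", 0.8)


res = detect_and_recognize(img, fake_detector, fake_classifier)
```

Keeping the stages separate lets the detector be trained on "any logo versus background" while the recognizer is trained, and retrained, on the specific logo classes.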

The Intel experts chose as best the questions from IliaSafonov, about using OpenCV for 3D objects, and from ChaikaBogdan, about how a beginner can build a career in computer vision. The authors of these questions receive prizes from Intel. Congratulations to the winners, and thanks to Anatoly and Vadim for their informative answers!

Question noonv

How do you see the future of computer vision? Watching the development of machine learning, what prospects do you see?

Anatoly Baksheev. A few of my thoughts:


- everything learnable, minimum handcrafting

- the new is the well-forgotten old.

I think that after some time there will be a partial return to traditional computer vision (once all the low-hanging fruit in DL has been picked), but at a somewhat different level of development and capability.

Vadim Pisarevsky. My view is pretty standard. The near future (actually, already the present) belongs to deep learning, and it will be used in increasingly sophisticated and non-trivial ways, as the CVPR 2017 conference demonstrated. Deep learning appeared six years ago, or rather was revived after the paper by Krizhevsky (AlexNet), and back then it solved only one problem well: recognizing the class of an object, provided there was one dominant object in the frame, without determining its position. Two years ago, almost everyone in our field was talking about it. The first networks for object detection and for semantic segmentation were invented. Before that, semantic segmentation was considered a hopeless, unsolvable problem, like proving Fermat's Last Theorem.

There was a big problem with speed: everything ran very slowly. Now networks have been compressed, implementations have been optimized and moved to the GPU, specialized hardware is on the way, and the speed issue has largely gone away and will disappear completely within the next couple of years. Networks already run, on the whole, as fast as traditional approaches, and give much better results. The main research directions now are:

- applying deep learning to new, increasingly complex tasks;

- in particular, applying it to tasks where it is difficult to collect huge training sets.

The first, more general direction will be the main trend of at least the next decade; the second, I think, will be solved within the next few years, and in particular cases it is already being solved. The outlook is that computer vision will turn from a highly specialized field with a bunch of artisanal methods into an industrial one that greatly affects the lives of many people. In fact, this process is already happening at high speed, and we are really talking not only about vision but about artificial intelligence in general.

Question IliaSafonov

Are there any plans to add the ability to process 3D (volumetric) images to OpenCV? I work with tomographic images of about 4000x4000x4000 voxels. The existing open-source libraries for 3D are, to put it mildly, poor and of rather low quality compared to OpenCV.

Vadim. Basic element-wise functions can already work with such data. There is no 3D filtering or other more complex algorithms. There are, however, deep networks that can perform some transformations on 3D data arrays. If you have a list of the operations you need, I invite you to submit a feature request. If it is a good, detailed request with a description of the task and links, it may well become one of our projects for the next Google Summer of Code (summer 2018).

Question MaximKucherenko

A video camera hangs on a bridge above a stream of cars. The camera has excellent lighting; in normal weather you can even see the drivers' faces at night. When a snowstorm begins, the pictures become almost white (due to the large number of small moving objects, the snowflakes). Can you tell me how to overcome this "noise"?

Anatoly. It is difficult to answer without seeing the images themselves. You can try to build a CNN that restores the picture. Check out the work on CNN inpainting, where the network "imagines" the damaged parts of the image, or on CNN deblurring, where the network essentially tries to learn a classic deblurring algorithm. You can try to do the same for your case.

In your case, the network could be made recurrent so that it takes previous frames into account when synthesizing a "clean" image.

Vadim. You need some kind of temporal filtering that accounts for the motion of the cars and the camera, i.e. you have to combine information from several frames. This is a variation on video super-resolution, but without increasing the resolution. Take a temporal neighborhood of each frame and compute dense optical flow between the central frame and its temporal neighbors. Then construct a penalty function for the resulting "enhanced" image: it should be smooth and, at the same time, resemble all the images in the neighborhood after motion compensation. Then run an iterative optimization. I'm not sure such an algorithm will work wonders, especially in extreme conditions (a blizzard), but in moderately difficult situations it may improve the output image. To start with, though, before building such an algorithm, you can try the function cv::equalizeHist(); maybe it will help somewhat.

Question uzh13

Which language is best for CV experiments? Is it worth getting into Erlang for this?

Is there a canonical set of books or a series of articles for a quick start in machine vision? Is there anyone to talk to?

Vadim. Currently the preferred options are:

- C++ with a good development environment

- Python

Depending on the circumstances, Python may be preferable to C++ or vice versa. Erlang is too exotic for this field. You could probably write something in it, but then it would be hard to find like-minded people to discuss and develop the code with. Among books on classical computer vision, we can recommend R. Szeliski's Computer Vision, a draft of which is available here. There are many books on OpenCV, also mostly in English. To get started with deep learning, you can use the following tutorial. Finding people to talk to is a harder question. Well, everybody has the Internet now; you can join some project.

Anatoly. In my opinion, C++ and Python are the classic choice, both for fast prototyping and, inevitably, for serious solutions.

In addition to Vadim's answer, I recommend these awesome repositories on GitHub:

- awesome-rnn

- awesome-deep-vision

- awesome-computer-vision

- awesome-random-forest

- neural-network-papers

- awesome-tensorflow

In general, there are awesome repositories maintained by enthusiasts for many fields.

Question ChaikaBogdan

How do I start a career in CV if I have no experience in it? Where can I gain experience solving real problems in this field, the kind of experience HR people are so fond of?

A bit of TL;DR background: I studied at a university that had no such program and, of course, went to work in another field (software engineering, automation). If I had had the opportunity and understood how cool it potentially is to work in CV, I would have enrolled in it, even at another university, but, alas, I found out about it too late. Getting a second degree seems unreasonably long.

At work I got a detection task using Python + OpenCV, and I solved it somehow with template matching (the subject area allowed it). It was fun and new, and everyone liked it, especially me.

I began exploring self-study options, took the Introduction to Computer Vision course (Udacity/Georgia Tech) and started on the practical tutorials from PyImageSearch.

At the same time, I looked at job postings on Upwork, PyImageSearch Jobs and Fiverr, and was disappointed, since I clearly lack the knowledge to solve real problems (for example, lighting, shadows and viewing angle get in the way almost everywhere). I'm not sure that even completing, say, the Guru course from PyImageSearch would help me find a decent job, because its examples are very "idealized" and rarely work as intended in real conditions.

On freelance marketplaces like Fiverr, Upwork and PyImageJobs there is a lot of competition, and tasks have to be done very quickly. And I'd like something with a low barrier to entry and a manageable learning curve. Remote work is a whole other story. Plus, everywhere they want deep/machine learning after all.

I don't want to quit my main job only to fail to find a job in CV. But I don't want to give up either. It's a cool and interesting field to develop in professionally, no matter how you look at it :)

Anatoly. I think you are on the right track.

In general, if a person wants to work in this field, I think any reasonable manager will take them on, even without the skills. The main thing is to demonstrate the desire to work through concrete actions: show the algorithms you contributed to OpenCV or to Caffe/TF/Torch, show your projects on GitHub, show your Kaggle ranking. One of my engineers left a boring non-CV job, went to Thailand and didn't work for a year. After six months he got bored there and started taking part in Kaggle competitions. When he came to me, his good Kaggle ranking played a role even though he had no CV experience. Now he is one of my strongest engineers.

Vadim. I have a "success story" for you. At one point, a series of patches adding face recognition functionality was submitted to OpenCV. Of course, face recognition is now solved with deep learning, and back then these were fairly simple algorithms, but that's beside the point. The author of the code was a person from Germany named Philip. At his main job he was working on boring projects; in his own words, he programmed DSPs. He found time after work to do face recognition and prepared patches, and we accepted them. Naturally, he was listed as the author. Some time later, he wrote me a happy letter saying that, thanks to such a striking "résumé", he had found a job in computer vision.

Of course, this is not the only way. It's just that if you really like computer vision, be prepared to do it in your spare time on a voluntary basis and gain practical experience. As for education: how many people on the OpenCV team do you think were educated in this field? Zero. We are all mathematicians, physicists, engineers. What matters are general skills, developed through practice: learning new material (mostly in English), programming, communicating, solving mathematical and engineering problems. Specific knowledge is transient. With the advent of deep learning a few years ago, most of our knowledge became obsolete, and in a few years deep learning itself may become an obsolete technology.

Question aslepov78

Don't you think you have succumbed to the mass hysteria about neural networks and deep learning?

Vadim. To paraphrase Winston Churchill, [modern] deep learning may be a bad way to solve computer vision problems, but everything else we know is even worse. But nobody has a monopoly on research, thank God; invent your own. And in fact, people do. I myself was a big skeptic of this approach a few years ago, but, first, the results speak for themselves, and second, it turned out that deep learning can be applied not just mechanically (take the first available architecture, collect a million training examples, launch a cluster, and either get a model or not) but creatively. Then it becomes a truly magical technology, and problems that nobody knew how to approach begin to be solved. One example is estimating the 3D poses of players on a field from a single camera.

Question aslepov78

OpenCV has become a warehouse of algorithms from different fields (computational geometry, signal processing, machine learning, etc.). Meanwhile, there are more advanced libraries for computational geometry alone (not to mention neural networks). So does OpenCV's only point boil down to having all the dependencies in one bottle?

Vadim. We build the tool primarily for ourselves and our colleagues, and we also integrate patches from the user community (not all of them, admittedly, but most), i.e. what users find useful for themselves and others. It would be nice, of course, if C++ had some common model for writing libraries so that they were compatible with each other, could easily be used together, and posed no problems with building or converting data structures. Then perhaps OpenCV could be replaced, without serious consequences, by a set of more specialized libraries. But there is no such model yet, and maybe there never will be. In Python there is a similar model built around numpy and the system of modules and extensions, and OpenCV's Python wrappers, it seems to me, fit into it quite well. I think that if you work hands-on in CV for several years, you will understand why OpenCV is needed and why it is organized the way it is. Or you won't.

Question aslepov78

Why are there so few off-the-shelf solutions? For example, if I'm new to CV and I want to find a black square on a white background, then when I open the OpenCV docs, I drown in them. Instead, I would like to browse a list of the most typical, simple tasks and pick one, or combine several. That is, OpenCV has virtually no declarative approach.

Vadim. In truth, there are no ready-made solutions in OpenCV at all. Ready-made solutions in computer vision cost a lot of money and are written for a specific customer to solve specific, very clearly defined tasks. Creating such solutions differs from combining blocks in much the same way that designing, building and furnishing a custom house differs from assembling a toy house out of Lego bricks.

Question vlasenkofedor

Please tell us about the most interesting projects with original solutions that use OpenCV on microcomputers (Raspberry, ASUS ...).

Anatoly. We have little experience with these devices.

Question killla

Are there small boards (at the Raspberry Pi level, with a processor suited to OpenCV video processing) with a video camera connected directly to the microprocessor (microcontroller), without intermediaries such as USB and its large delays? So that you could quickly knock together a makeshift device for counting crows on a garden bed, or a device for tracking an object (the simplest image processing plus reaction to stimuli with minimal delay).

My own experience: the last time I tried to solve a similar problem was about 4 years ago. 1) None of the popular, affordable development boards could process a decent video stream faster than 1-2 frames per second; the DSP could not be used without low-level programming, and it wasn't easy to get a controller with a powerful, well-documented DSP and software. 2) In all the examples, cameras are connected via USB, which means huge delays from the start plus software handling of the camera by the low-power main processor. Almost no CPU time is left for recognition.

Vadim. Starting from the second generation, the Raspberry Pi has an ARM CPU with NEON vector instructions. OpenCV should run reasonably fast on such hardware. As for video capture speed, we once managed to get 20-30 frames/s over USB 2, so it's not quite clear what the problem is.

Question killla

Are there ready-made distributions and software "out of the box" for such hardware, so that you can start working immediately without weeks of tinkering?

Vadim . OpenCV builds for any ARM Linux and is largely optimized with NEON. I think the Raspberry Pi is the first thing worth looking at; for example, here is one enthusiast's experience.
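To check whether a particular OpenCV build on the board actually uses NEON, one can inspect the build summary. A small sketch, assuming the summary printed by recent OpenCV versions contains a "CPU/HW features" section with "Baseline"/"Dispatched" lines (for example `Baseline: NEON FP16` on ARM builds); the helper names are hypothetical.

```python
from typing import List

# Keywords assumed to appear in the CPU/HW features part of the build summary.
CPU_KEYWORDS = ("Baseline", "Dispatched", "NEON")


def cpu_feature_lines(build_info: str) -> List[str]:
    """Return the stripped lines of a build summary that mention CPU optimizations."""
    return [line.strip() for line in build_info.splitlines()
            if any(key in line for key in CPU_KEYWORDS)]


def has_neon(build_info: str) -> bool:
    """True if any CPU-feature line of the summary mentions NEON."""
    return any("NEON" in line for line in cpu_feature_lines(build_info))
```

On the device itself, `has_neon(cv2.getBuildInformation())` applies this to the real summary, and `cv2.useOptimized()` reports whether optimized code paths are enabled at runtime.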

Question killla

To sum up, my question is: is it possible, in 2017-2018, for a second- or third-year IT student with basic programming skills to get 2-3 pieces of hardware for 10,000 rubles and, after 2-4 weeks of studying OpenCV and writing code, build a simple device: a camera on a motorized two-axis mount that hangs on the balcony and follows the movements of your favorite dog in the yard?

Vadim . I won't answer the hardware part; look into it yourself. As for tracking the dog: a radio beacon solves this problem more easily, cheaply and reliably. If the goal is not to solve the problem but to practice computer vision, then go ahead. In 2-4 weeks you can play around with it and at the same time start thinking about questions like:

- how to handle movements/vibrations of the camera itself; what behavior is expected in darkness, fog, rain and snow; how to handle different seasons,

- how to handle different lighting conditions: overcast skies, the sun at its zenith, the sun at sunrise and sunset with long shadows,

- how the system should handle another object appearing in the field of view (a car, a person, a cat, another dog, another dog of the same breed),

- what quality is considered acceptable (the system sends you false alerts that the dog is lost every 5 minutes, or the system reports that the dog is lost a day after it went missing),

- etc.
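The last point in the list above, false alerts versus late alerts, is often handled by requiring the target to be missing for several consecutive frames before raising an alarm. A minimal sketch, assuming some per-frame detector already tells you whether the dog is visible; the class name `LossDetector` and the `patience` parameter are hypothetical.

```python
class LossDetector:
    """Raise a "dog lost" alert only after the target has been missing for
    `patience` consecutive frames; clear it as soon as the target is seen again.

    A larger patience means fewer false alarms but a later alert.
    """

    def __init__(self, patience: int):
        if patience < 1:
            raise ValueError("patience must be at least 1")
        self.patience = patience
        self.missed = 0
        self.lost = False

    def update(self, detected: bool) -> bool:
        """Feed one frame's detection result; return True when an alert should fire."""
        if detected:
            self.missed = 0
            self.lost = False
            return False
        self.missed += 1
        if not self.lost and self.missed >= self.patience:
            self.lost = True
            return True  # fire only once per loss episode
        return False
```

Tuning `patience` (in frames) is exactly the trade-off between an alert every 5 minutes and an alert a day late.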

By my humble estimate, if you take this task seriously, you can keep yourself busy for a year or two and learn more about computer vision than they teach anywhere.
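For the steering part of the original question (a camera on a two-axis motorized mount), a proportional controller on the target's position is a simple starting point. This sketch assumes some earlier step, for example background subtraction with `cv2.createBackgroundSubtractorMOG2`, already yields a binary mask of moving pixels; the function names, gain and dead zone are hypothetical, and the sign convention depends on how the motors are mounted.

```python
from typing import List, Optional, Tuple


def mask_centroid(mask: List[List[int]]) -> Optional[Tuple[float, float]]:
    """Centroid (x, y) of the non-zero pixels of a binary mask, or None if empty."""
    total = sx = sy = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                total += 1
                sx += x
                sy += y
    if total == 0:
        return None
    return sx / total, sy / total


def pan_tilt_step(centroid: Tuple[float, float], width: int, height: int,
                  gain: float = 0.1, dead_zone: float = 0.05) -> Tuple[float, float]:
    """Proportional (pan, tilt) correction in arbitrary motor units.

    Errors are normalized to roughly [-1, 1]; positive means the target is
    right of / below the image center. Small errors inside the dead zone
    are ignored to avoid jitter.
    """
    cx, cy = centroid
    ex = (cx - (width - 1) / 2) / (width / 2)
    ey = (cy - (height - 1) / 2) / (height / 2)
    pan = gain * ex if abs(ex) > dead_zone else 0.0
    tilt = gain * ey if abs(ey) > dead_zone else 0.0
    return pan, tilt
```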

Question almator

The call model = cv2.ANN_MLP() does not work in Python. Code:

```python
import cv2
import numpy as np
import math

class NeuralNetwork(object):
    def __init__(self):
        self.model = cv2.ANN_MLP()

    def create(self):
        layer_size = np.int32([38400, 32, 4])
        self.model.create(layer_size)
        self.model.load('mlp_xml/mlp.xml')

    def predict(self, samples):
        ret, resp = self.model.predict(samples)
        return resp.argmax(-1)

model = NeuralNetwork()
model.create()
```

Error: AttributeError: 'module' object has no attribute 'ANN_MLP'

Vadim . See the letter_recog.py example in the OpenCV distribution.

Question almator

How will OpenCV evolve with respect to neural networks and machine learning? Where can I find simple examples for machine learning beginners? Preferably in Russian.

Anatoly . OpenCV does not plan to support training networks, only fast optimized inference. We already have a CNN face detector that can run at more than 100 fps on a modern Core i5 (although we can't make it publicly available). I think many current algorithms will gradually be augmented with small (>5000 fps) auxiliary networks, whether for features, optical flow, RANSAC or any other algorithm.

Vadim . OpenCV will evolve towards deep learning. Ordinary neural networks are a special case and are of little interest to us now. I can't recommend anything in Russian, but I would be grateful if you find something and let me know. There are online courses and online books in English, such as the deep learning tutorial mentioned above.

Question almator

What algorithm is best for finding relatively complex logos in a photo, for example the logos of various product markings, which usually contain both text and images fitted into one shape? I tried a Haar cascade: it finds solid objects well, but it does not find a complex multi-component object such as a logo. I tried matchTemplate: it fails at the slightest mismatch, such as scaling or rotation relative to the original picture. Could you suggest which direction to look in?

Vadim . A deep network plus an augmented training set. That is, you need to collect a database of images of this logo and then artificially expand it many times over. Here, for example, is what a quick Google search turns up.

Question WEBMETRICA

Is it possible to use computer vision to simulate the vision of various biological organisms, for example animals and insects, and create an application that lets you see the world through the eyes of other creatures?

Anatoly . I think it will not happen soon. Moreover, there is no method to reliably tell how other beings see the world.

Vadim . At CVPR 2017 there was an interesting paper on using signals read from humans for pattern recognition. The authors promised an interesting sequel. Perhaps they will soon get around to our smaller brothers, the animals, too.

Question WEBMETRICA

Going further, could one create many models of how various living beings see, run all this diversity through a neural network and create something new? Is it possible to synthesize the vision of various biological systems?

Vadim . Everything is possible. It seems to me that one should start from a specific task.

Question barabanus

Why is it impossible in OpenCV to multiply a matrix by a vector (cv::Vec_), while it can be multiplied by a point (cv::Point_)? As a result, it is easier to work with points, even though mathematically they are vectors, not points. For example, the direction of a line is easier to store as a point than as a vector: there are fewer type conversions in a chain of operations.

Anatoly . I have known about this problem for about 8 years. As far as I remember, it is impossible to implement; you can try it yourself. You get something like an ambiguous constructor call for an auxiliary intermediate type: the compiler cannot decide which constructor to call and throws an error. You have to convert to a point manually: cv::Mat * Point_<...>(Vec_<...>).

Vadim . I suggest submitting a request. It is possible that this particular function was simply overlooked, or intentionally disabled so as not to confuse the C++ compiler with the whole set of overloaded '*' operators; this sometimes happens.

Question barabanus

Why does OpenCV still have no implementation of the Hough transform that returns the accumulator? Sometimes you need to find, say, just the single maximum! Will the project maintainers allow a new implementation that returns the accumulator to be added?

Vadim . Yes, that would be useful. After review and any necessary revisions, such a patch can be accepted.
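For reference, a line Hough transform that keeps its accumulator is short to write. This is a pure-Python sketch of the standard (theta, rho) voting scheme, not OpenCV's implementation; the function names and default resolutions are hypothetical. It illustrates the question's use case: returning the accumulator and taking only its single maximum.

```python
import math
from typing import Iterable, List, Tuple


def hough_lines_accumulator(points: Iterable[Tuple[int, int]], width: int, height: int,
                            n_theta: int = 180, rho_step: float = 1.0):
    """Vote for lines x*cos(theta) + y*sin(theta) = rho.

    Returns (acc, thetas, rhos), where acc[t][r] counts the points lying on
    the line with angle thetas[t] and signed distance rhos[r].
    """
    max_rho = math.ceil(math.hypot(width, height))
    n_rho = int(math.ceil(2 * max_rho / rho_step)) + 1
    thetas = [math.pi * t / n_theta for t in range(n_theta)]
    rhos = [-max_rho + r * rho_step for r in range(n_rho)]
    trig = [(math.cos(th), math.sin(th)) for th in thetas]
    acc = [[0] * n_rho for _ in range(n_theta)]
    for x, y in points:
        for t, (c, s) in enumerate(trig):
            rho = x * c + y * s
            acc[t][int(round((rho + max_rho) / rho_step))] += 1
    return acc, thetas, rhos


def strongest_line(acc, thetas, rhos):
    """Return (votes, theta, rho) of the first cell holding the maximum vote count."""
    best_votes, best_t, best_r = -1, 0, 0
    for t, row in enumerate(acc):
        for r, votes in enumerate(row):
            if votes > best_votes:
                best_votes, best_t, best_r = votes, t, r
    return best_votes, thetas[best_t], rhos[best_r]
```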

Question perfect_genius

Is Intel trying to create hardware neural networks for image processing, and are there any results?

Anatoly . Hardware networks make little sense, because progress is moving very quickly and such hardware would become obsolete before it went on sale. But creating instructions that accelerate networks (à la MMX/SSE/AVX), or even co-processors, is in my opinion a very logical step. However, we have no information on this.

Vadim . At this stage we know of attempts, in which our colleagues are actively involved, to use existing hardware (CPU, GPU) to accelerate network inference. These attempts are quite successful. Accelerated solutions for CPUs (the MKL-DNN library and Intel Caffe compiled with it) and for GPUs (clDNN) make it possible to run many popular networks, such as AlexNet, GoogLeNet/Inception, ResNet-50, etc., in real time on an ordinary computer without a powerful discrete graphics card, even on an ordinary laptop. Even OpenCV, although it does not yet use these optimized libraries, can run some networks for classification, detection and semantic segmentation in real time on a laptop without discrete graphics. Try our examples and see for yourself. Efficient neural networks on ordinary hardware are closer than many people think.

Question Mikhail063

I have been using OpenCV for more than a year, but I ran into an interesting issue. There is a camera that transmits a signal, a telemetry receiver that receives it, and a tuner that decodes the signal into video for the computer. Image capture programs work perfectly, but when OpenCV tries to display the image, it shows a black screen, and when you try to exit the program, a blue screen pops up :) QUESTION: why does this happen?

Device characteristics: EasyCap USB 2.0 TV tuner, RC832 5.8 GHz FPV video receiver, FPV camera with a 5.8 GHz 1000TVL transmitter.

Video of the errors

Vadim . Because there is some kind of mistake somewhere, obviously :) You have to start by localizing it.
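One way to begin localizing is to separate "no frames arrive" from "frames arrive but are black". A minimal sketch, assuming frames come from `VideoCapture.read()` as NumPy arrays; the helper name `diagnose_frame` and the darkness threshold are hypothetical, and it does not explain the blue screen, which points below OpenCV (for example at the driver).

```python
import numpy as np


def diagnose_frame(ok, frame, dark_threshold=5.0):
    """Classify one (ok, frame) result of VideoCapture.read().

    Returns "no_frame", "empty", "black" or "ok".
    """
    if not ok or frame is None:
        return "no_frame"  # the backend/driver delivered nothing
    frame = np.asarray(frame)
    if frame.size == 0:
        return "empty"     # a frame object arrived but holds no pixels
    if float(frame.mean()) < dark_threshold:
        return "black"     # pixels arrive, but are (almost) all zero
    return "ok"
```

Typical use: `cap = cv2.VideoCapture(0)`, check `cap.isOpened()`, then `ok, frame = cap.read()` and `diagnose_frame(ok, frame)`. On Windows, trying another capture backend, e.g. `cv2.VideoCapture(0, cv2.CAP_DSHOW)` in versions that accept a backend argument, helps tell a backend problem from a device problem.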

Question KOLANICH

In today's reality, is there any point in video analysis programs based on hand-crafted features, or is it better not to bother and train a neural network right away?

Anatoly . Sometimes classic features can be a quick fix.

Vadim . For analysis, it is better to train. For simpler tasks, such as stitching panoramas, classic features such as SIFT are still competitive.
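Classic feature pipelines for panorama stitching usually match descriptors with Lowe's ratio test, which keeps a match only when the nearest neighbour is clearly better than the second nearest. A minimal NumPy sketch of brute-force matching with that test; the function name is hypothetical, and `ratio=0.75` is a commonly used value rather than one given in the answer.

```python
import numpy as np


def ratio_test_matches(desc_a, desc_b, ratio=0.75):
    """Brute-force nearest-neighbour matching with Lowe's ratio test.

    desc_a: (N, D) and desc_b: (M, D) descriptor arrays, with M >= 2.
    Returns (i, j) pairs: descriptor i of A matches descriptor j of B.
    """
    a = np.asarray(desc_a, dtype=np.float64)
    b = np.asarray(desc_b, dtype=np.float64)
    # pairwise Euclidean distances, shape (N, M)
    dists = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        nearest, second = np.argsort(row)[:2]
        # keep the match only if it clearly beats the runner-up
        if row[nearest] < ratio * row[second]:
            matches.append((i, int(nearest)))
    return matches
```

With OpenCV 4.4 or later, descriptors can come from `cv2.SIFT_create().detectAndCompute(gray, None)`, and `cv2.BFMatcher().knnMatch(d1, d2, k=2)` provides the two nearest neighbours far more efficiently than this sketch.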

Question vishnerevsky

I used OpenCV 3.1.0 with cv2.HOGDescriptor() and .setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector()), and was left with a good impression. But I want to reduce the number of false positives, so I would like to know which dataset was used to train the SVM classifier, and whether I can access it. I would also like to know whether there are plans for OpenCV modules that recognize various objects based on YOLO or semantic segmentation.

Vadim . We used standard databases that are in the public domain. The specific configuration files with the file lists have since been lost; many years have passed. A patch adding YOLO v2 is pending; by the time these answers are published, I think we will have merged it. An example with MobileNet_SSD is already available. You can also find segmentation examples there.
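In the MobileNet_SSD sample, the network output is a 4D array whose rows have the form `[image_id, class_id, confidence, x1, y1, x2, y2]` with box coordinates normalized to [0, 1]. Raising the confidence threshold is one simple lever against false positives. A minimal sketch of turning those rows into pixel boxes, assuming that row layout; the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def parse_ssd_detections(rows: Sequence[Sequence[float]], img_w: int, img_h: int,
                         conf_threshold: float = 0.5
                         ) -> List[Tuple[int, float, Tuple[int, int, int, int]]]:
    """Convert SSD-style rows into (class_id, confidence, (x1, y1, x2, y2)) in pixels."""
    results = []
    for row in rows:
        _, class_id, conf, x1, y1, x2, y2 = row[:7]
        if conf < conf_threshold:
            continue  # drop low-confidence detections
        # scale normalized coordinates to pixels and clamp to the image
        box = (max(0, int(x1 * img_w)), max(0, int(y1 * img_h)),
               min(img_w - 1, int(x2 * img_w)), min(img_h - 1, int(y2 * img_h)))
        results.append((int(class_id), float(conf), box))
    return results
```

With a network loaded via `cv2.dnn.readNetFromCaffe(prototxt, caffemodel)` (file names depend on your download), `out = net.forward()` gives the array, and `parse_ssd_detections(out[0, 0], w, h)` processes its rows.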

Question iv_kovalyov

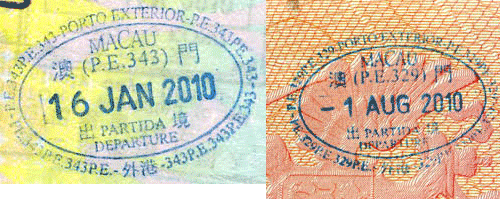

Please advise how I can recognize the stamp on the left in the image on the right. The stamps are not identical, but they have common elements.

I tried find_obj.py from the OpenCV samples, but it does not help in this situation.

Vadim . See the tip above on logo search. Only here you will most likely need two networks: one for detection and one for subsequent recognition.
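The two-network scheme can be wired together as a detector that proposes regions and a recognizer that classifies each crop. A minimal sketch with the two models passed in as callables; the function name, the box convention (exclusive right/bottom edges) and `min_score` are hypothetical, and the crop assumes a row-indexable image (for a NumPy array, `image[y1:y2, x1:x2]` is the idiomatic equivalent).

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), x2/y2 exclusive


def detect_then_recognize(image,
                          detect: Callable[[object], List[Box]],
                          recognize: Callable[[object], Tuple[str, float]],
                          min_score: float = 0.5):
    """Stage 1 proposes candidate stamp regions; stage 2 classifies each crop."""
    results = []
    for (x1, y1, x2, y2) in detect(image):
        crop = [row[x1:x2] for row in image[y1:y2]]
        label, score = recognize(crop)
        if score >= min_score:
            results.append(((x1, y1, x2, y2), label, score))
    return results
```

Keeping the stages separate lets the detector stay generic ("something stamp-like is here") while the recognizer handles the variation between individual stamps.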

Intel's experts selected as the best the questions by IliaSafonov about using OpenCV for 3D objects and by ChaikaBogdan about building a career in computer vision as a beginner. The authors of these questions receive prizes from Intel. Congratulations to the winners, and thanks to Anatoly and Vadim for their informative answers!

Source: https://habr.com/ru/post/338926/
