Overview of one Russian RTOS, part 6. Thread synchronization tools

Unfortunately, in real multi-threaded applications you cannot simply write the code of every task, hand the tasks to the scheduler and start them.

Let's start with the obvious: if there are many tasks, they will waste CPU time spinning in idle loops. As noted in previous parts, every task that currently has nothing to do (no data has arrived from the hardware, or for some other organizational reason) should be blocked. Only tasks that actually have work to do should be scheduled, because a microcontroller is always short of processor cycles.

Next, tasks may compete for shared resources, in particular for hardware. When discussing the types of multitasking, we already looked at typical conflicts over the SPI port (partially solved by switching to cooperative multitasking, but such a switch is not always possible).


The worst case, however, is dependency between tasks: the result of one task is often used by another. Obvious examples: there is no point in recalculating the output of a PID temperature controller until enough readings from the thermal sensor have been received and averaged; there is no point in changing the drive applied to a motor until we know its current rotation period; there is no need to process a string from the terminal until the terminating character (end of line) has been received. Besides the obvious cases, there are many non-obvious dependencies and the race conditions they produce. A novice developer sometimes spends more time fighting races than implementing the program's actual algorithms.

In all these cases, synchronization objects come to the developer's aid. In this part, let us look at the synchronization objects and functions available in the MAKS RTOS.

For those who missed the previous parts, here are the links:

Part 1. General information

Part 2. The MAKS kernel

Part 3. The structure of the simplest program

Part 4. Useful theory

Part 5. The first application

Part 6. Thread synchronization tools (this article)

Part 7. Data exchange between tasks

Part 8. Working with interrupts

Critical section

To warm up, consider the CriticalSection class. It is used to enclose regions of code where context switching is not allowed.

As soon as an object of this class comes into scope, all interrupts with priority MAX_SYSCALL_INTERRUPT_PRIORITY or lower are masked. Currently this constant is 5, which masks all interrupts from peripherals and from the system timer but does not block fault exceptions.
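On Cortex-M cores this kind of masking is usually done through the BASEPRI register, which keeps the priority in the upper bits of a byte. The compile-time sketch below assumes 4 implemented priority bits (typical for STM32; the real value is `__NVIC_PRIO_BITS` from the vendor's CMSIS header) and shows what level 5 turns into. The result, 0x50, is consistent with the `MOVS r1,#0x50` in the CriticalSection constructor disassembly shown later in the article. The helper names are hypothetical, and priority grouping (sub-priorities) is ignored.

```cpp
#include <cstdint>

// Assumption: 4 NVIC priority bits, as on STM32F4 parts.
constexpr unsigned kNvicPrioBits = 4;

// Level quoted in the text for MAKS.
constexpr unsigned kMaxSyscallInterruptPriority = 5;

// Cortex-M keeps a priority in the most significant bits of an 8-bit field,
// so the logical level is shifted left before it is written to BASEPRI.
constexpr std::uint8_t ToBasepri(unsigned priority) {
    return static_cast<std::uint8_t>(priority << (8 - kNvicPrioBits));
}

// A non-zero BASEPRI masks every exception whose shifted priority value is
// numerically >= BASEPRI, i.e. equally or less urgent (0 is the most urgent).
constexpr bool IsMasked(unsigned irq_priority, std::uint8_t basepri) {
    return basepri != 0 && ToBasepri(irq_priority) >= basepri;
}

static_assert(ToBasepri(kMaxSyscallInterruptPriority) == 0x50,
              "level 5 with 4 priority bits gives BASEPRI = 0x50");
```

Interrupts configured at levels 0 to 4 therefore keep running inside a critical section. Such handlers must not call RTOS services.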

When the object goes out of scope, the interrupt mask is restored to the level it had on entry. Thanks to this, you do not have to worry about nested critical sections: only the outermost one does real work. Every inner one does redundant work. On entry it replaces the already raised mask with the same raised level, and on exit it restores that same raised level. Only leaving the outermost critical section restores the original, lower level. In practice, nesting most often occurs when code inside a critical section calls a function that has its own critical section.
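This save-and-restore behaviour is what makes nesting harmless, and it can be modelled on a host PC. In the sketch below, a global variable (`g_basepri`, hypothetical) stands in for the mask register. `CriticalSectionModel` is an illustrative RAII class, not the MAKS source. Each object remembers the mask it found on entry and puts that value back on exit.

```cpp
#include <cstdint>

// Host-side stand-in for the BASEPRI register (0 = nothing masked).
// On real hardware this would be accessed via CMSIS __get_BASEPRI()/__set_BASEPRI().
std::uint8_t g_basepri = 0;

// Illustrative RAII guard: raise the mask on construction, restore the
// previously saved value on destruction.
class CriticalSectionModel {
public:
    explicit CriticalSectionModel(std::uint8_t mask = 0x50) : saved_(g_basepri) {
        g_basepri = mask;
    }
    ~CriticalSectionModel() { g_basepri = saved_; }
    CriticalSectionModel(const CriticalSectionModel&) = delete;
    CriticalSectionModel& operator=(const CriticalSectionModel&) = delete;
private:
    std::uint8_t saved_;
};

// Mask values observed at each point of a nested use.
struct Trace { std::uint8_t outer_inside, inner_inside, after_inner, after_outer; };

Trace NestedDemo() {
    Trace t{};
    {
        CriticalSectionModel outer;      // 0 -> 0x50: does the real work
        t.outer_inside = g_basepri;
        {
            CriticalSectionModel inner;  // 0x50 -> 0x50: redundant but harmless
            t.inner_inside = g_basepri;
        }                                // restores 0x50, still protected
        t.after_inner = g_basepri;
    }                                    // restores 0: interrupts enabled again
    t.after_outer = g_basepri;
    return t;
}
```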

Since a critical section changes the interrupt priority masking (that is, it programs the NVIC), it may only be used in code running in privileged mode.

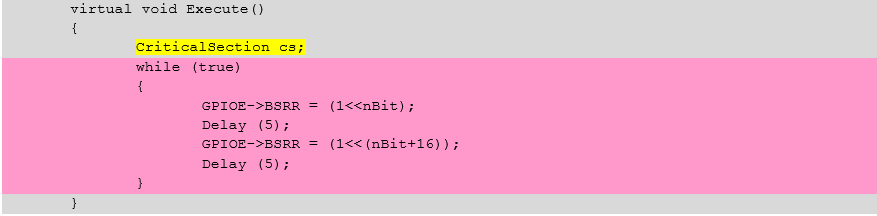

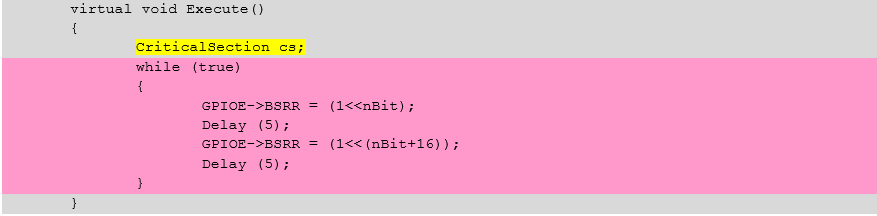

Consider an example (the area highlighted in pink is protected by a critical section, so the task is guaranteed not to be preempted there).

Of course, the object's scope can always be narrowed with curly braces.

What belongs in a critical section? For example, operations that must change several variables atomically (the example above does exactly that). Lists and other structures that one task reads while another writes may also require atomic access.

But there are less obvious cases. Suppose a counter is incremented in one task and decremented in another. The operation looks perfectly atomic:

```cpp
cnt++;
```

But it is atomic only at the level of the high-level language. At the assembly level it splits into separate read, modify and write instructions.

Suppose the value (to be specific, 10) has already been loaded into register r0, and at that moment the scheduler switches to another task. That task also reads 10, decrements it and stores 9. When control returns to the first task, it adds one not to the variable but to the copy already held in r0, that is, to 10. The result is 11 instead of 10, and the counter is corrupted.
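The interleaving just described can be replayed deterministically, with local variables playing the role of each task's r0. This is a plain C++ sketch with no real threads. `LostUpdateDemo` and `SerializedDemo` are illustrative names.

```cpp
// Replays the preemption described above: task 1 is interrupted between
// its load and its store, and task 2 runs a complete decrement in between.
int LostUpdateDemo(int initial) {
    int cnt = initial;

    int r0_task1 = cnt;   // task 1: LDR  -> r0 = 10
    // --- preempted: task 2 runs its whole read-modify-write ---
    int r0_task2 = cnt;   // task 2: LDR  -> 10
    r0_task2 -= 1;        // task 2: SUBS -> 9
    cnt = r0_task2;       // task 2: STR  -> cnt = 9
    // --- task 1 resumes with its stale register copy ---
    r0_task1 += 1;        // task 1: ADDS -> 11
    cnt = r0_task1;       // task 1: STR  -> cnt = 11 (task 2's update is lost)

    return cnt;
}

// The same two updates when each runs to completion, as a critical section
// guarantees: no interleaving, so the net effect is zero.
int SerializedDemo(int initial) {
    int cnt = initial;
    { int r0 = cnt; r0 += 1; cnt = r0; }  // task 1's protected increment
    { int r0 = cnt; r0 -= 1; cnt = r0; }  // then task 2's protected decrement
    return cnt;
}
```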

A critical section is well suited to protecting against exactly this kind of situation.

Keep in mind, though, the overhead of programming the NVIC: instead of three assembly instructions there will be many more.

And that does not even count the bodies of the system routines. We show only the first of them, to give the reader an idea of their complexity.

As you can see, even that is not the deepest level of nesting. Still, this is the lesser evil; just avoid entering and leaving critical sections too often.

The critical section is a very powerful but potentially dangerous tool, because multitasking stays disabled until the object goes out of scope. Ideally, a critical section should cover only a few lines. A loop inside it can increase latency significantly. Function calls can increase it even more, especially if the programmer has little idea how long those functions take. Working with some kinds of hardware inside a critical section is downright dangerous. Suppose a programmer wants to be sure that no context switch happens while two bytes are sent over a 10 MHz SPI bus. One bit takes 100 ns, so 16 bits take 1.6 µs. That is acceptable: the next task loses no more than this, which is roughly comparable to the scheduler's own overhead. But sending a 20-character string over a UART at 250 kbit/s (10 bits per character, counting start and stop bits) takes 20 * 10 * 4 µs = 0.8 ms. If such a transfer starts near the end of the task's time quantum, it will “eat” almost the entire quantum of the next task.
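The timing figures above are easy to check. The compile-time sketch below assumes the usual 8N1 UART framing (start bit, 8 data bits, stop bit, hence 10 bits per character). It uses integer nanoseconds to avoid rounding. `TransferTimeNs` is an illustrative helper.

```cpp
#include <cstdint>

// Time in nanoseconds to clock out `bits` at `bit_rate` bits per second.
constexpr std::uint64_t TransferTimeNs(std::uint64_t bits, std::uint64_t bit_rate) {
    return bits * 1000000000ULL / bit_rate;
}

// SPI at 10 MHz, two bytes: 16 bits at 100 ns each.
constexpr std::uint64_t kSpiNs = TransferTimeNs(2 * 8, 10000000);

// UART at 250 kbit/s, 20 characters of 10 bits each: 4 us per bit.
constexpr std::uint64_t kUartNs = TransferTimeNs(20 * 10, 250000);

static_assert(kSpiNs == 1600, "1.6 us");
static_assert(kUartNs == 800000, "0.8 ms");
```

The UART case keeps interrupts masked 500 times longer than the SPI case.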

In short, critical sections are powerful, but a programmer who uses them takes full responsibility for keeping the system real-time.

Also, never put a task to sleep inside a critical section by calling functions that wait for other resources.

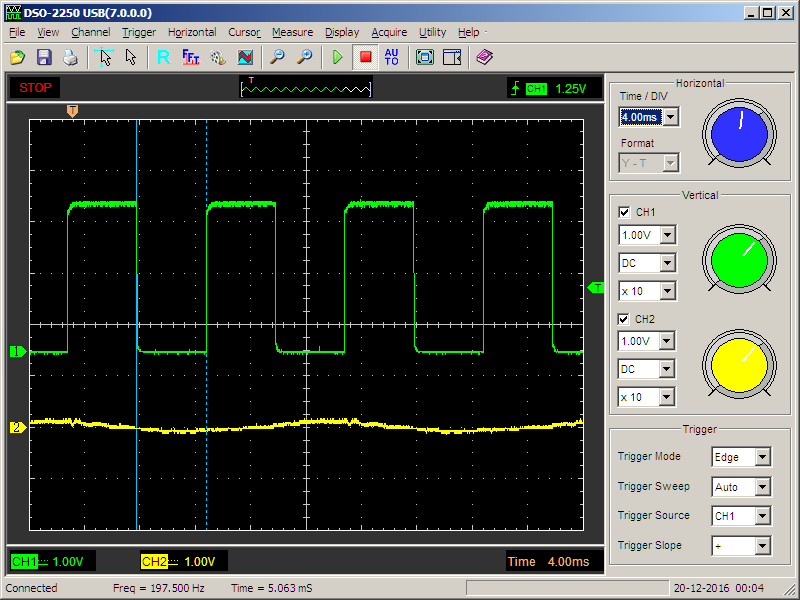

Consider the simplest example: the already familiar task that toggles a port pin, calling the blocking delay function between toggles:

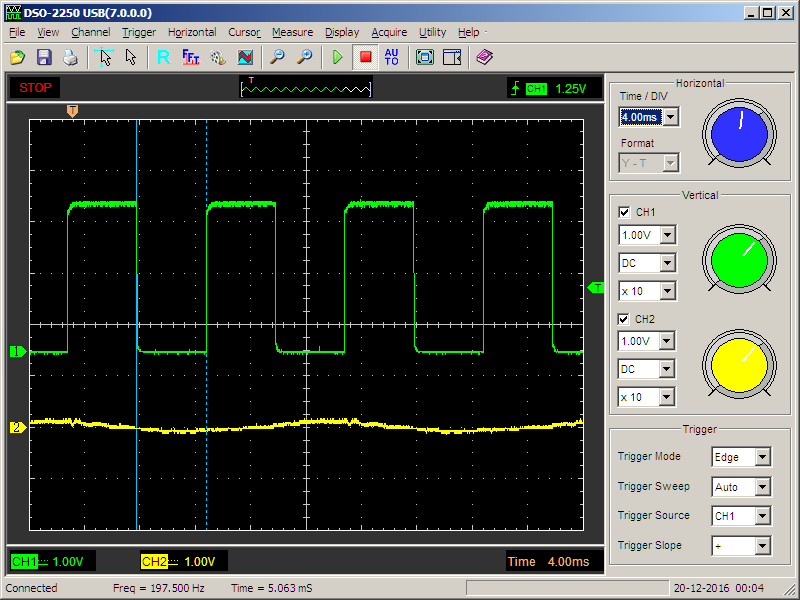

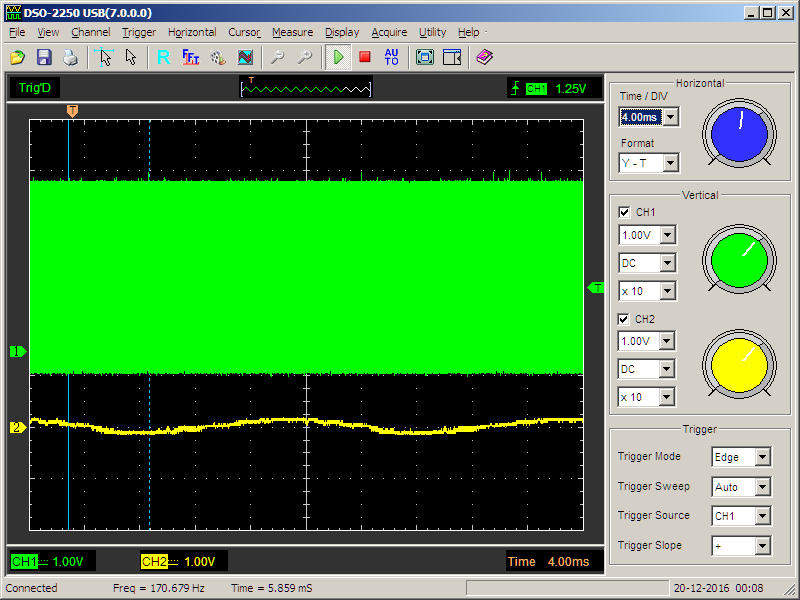

It produces a normal square wave.

Now add a critical section:

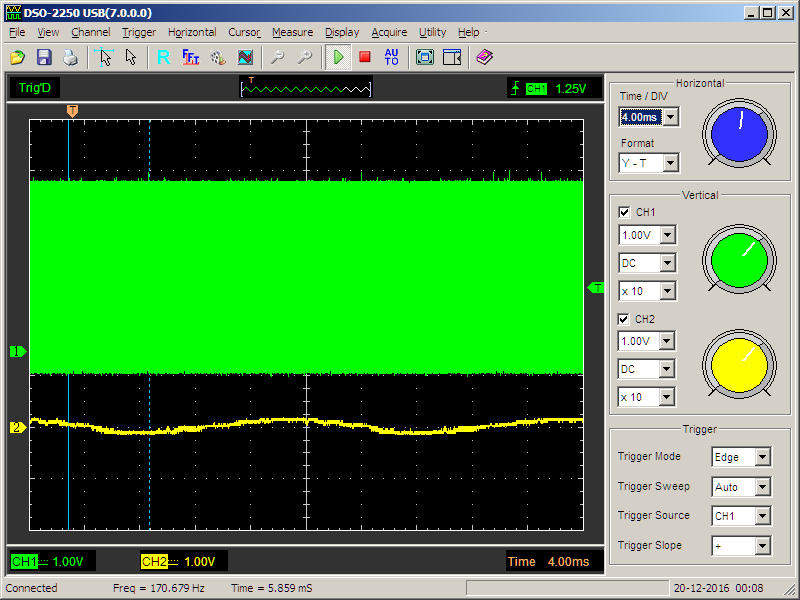

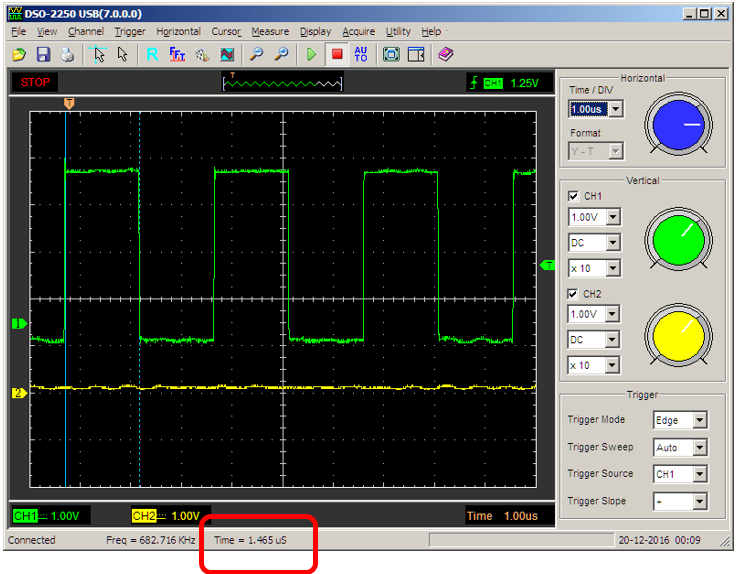

We get a completely different signal:

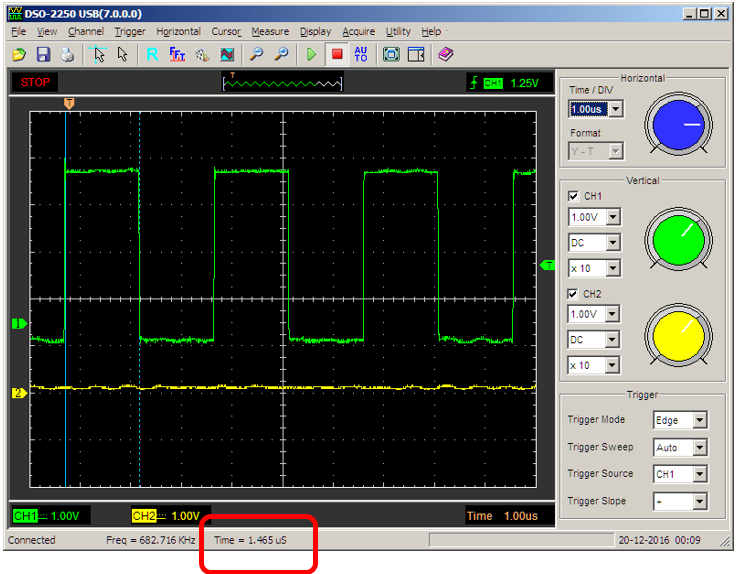

Zooming in shows that the signal period is completely wrong.

So the critical section is a powerful tool, but use it only when you fully understand what you are doing (or at least keep every step under control).

The warm-up has dragged on: the simplest object took up several pages. Let's move on to something with slightly more complicated logic that nevertheless needs less text: the binary semaphore.

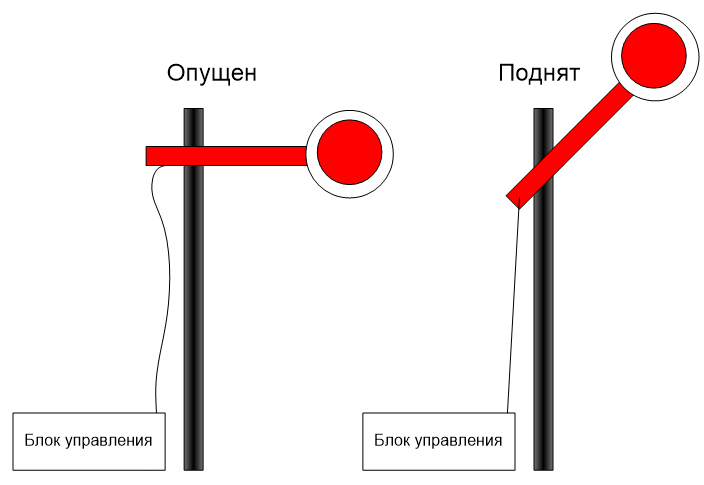

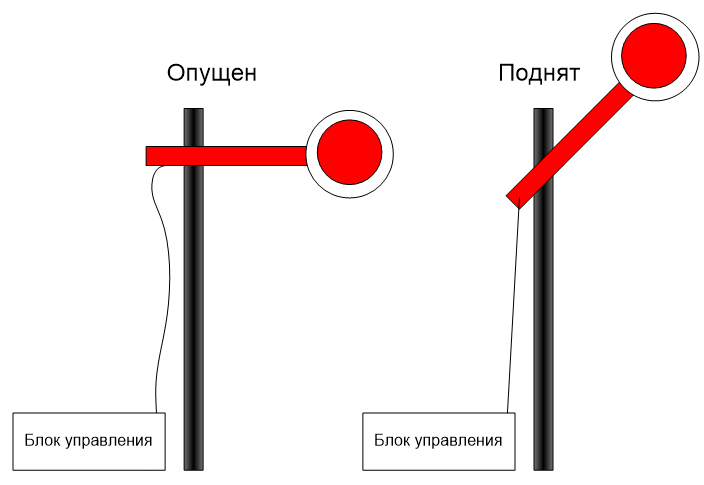

Binary Semaphore

Semaphores were once widely used on railways. They had two states: raised, meaning the train may proceed, and lowered, meaning the train must wait. A dispatcher raised and lowered them. It is the same here. Some task (or tasks) pulls the cable, acting as the dispatcher, while other tasks wait for the way to open. The waiting tasks are blocked, so they do not consume time quanta.

Such a semaphore is implemented by the BinarySemaphore class.

The class constructor takes an argument that specifies the initial state of the semaphore (by default the semaphore starts closed):

explicit BinarySemaphore(bool is_empty = true);

Here we cover only what is specific to the binary semaphore. BinarySemaphore is derived from the ordinary semaphore, whose full functionality is described below.

Tasks that need to pass the semaphore call the Wait() function. Its argument is the timeout in milliseconds. If the task is unblocked within that time, the function returns ResultOk; if the timeout expires, it returns ResultTimeout. To wait indefinitely, pass INFINITE_TIMEOUT as the timeout.

If the function is called with a zero timeout, it returns immediately, and the result (ResultOk or ResultTimeout) tells you whether the semaphore was open or closed.

A task cannot be blocked from inside an interrupt, so when Wait() is called from an interrupt with any non-zero timeout, it returns ResultErrorInterruptNotSupported. With a zero timeout, however, the function may be called from an interrupt as well.

If the function returns ResultOk, the semaphore closes automatically.

As already noted, several tasks may wait for the semaphore at once. In that case the “lucky one” to be let through first is chosen as follows: waiting tasks are served in order of decreasing priority, and tasks of equal priority in the order in which they called Wait().
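The selection rule can be expressed as a comparison. In this sketch `Waiter`, its fields and `PickNextWaiter` are hypothetical, and a larger number is assumed to mean a higher priority. The sketch does not show how MAKS actually stores its wait list.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Hypothetical record of a task blocked in Wait().
struct Waiter {
    int task_id;
    int priority;           // assumption: larger = more important
    std::uint32_t arrival;  // order in which Wait() was called
};

// Highest priority wins; within one priority, the earliest caller wins.
// Returns -1 if nobody is waiting.
int PickNextWaiter(const std::vector<Waiter>& waiters) {
    auto it = std::min_element(waiters.begin(), waiters.end(),
        [](const Waiter& a, const Waiter& b) {
            if (a.priority != b.priority) return a.priority > b.priority;
            return a.arrival < b.arrival;
        });
    return it == waiters.end() ? -1 : it->task_id;
}
```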

The Signal() function opens the semaphore. If the semaphore is already open, it returns ResultErrorInvalidState; otherwise, ResultOk. The function must not be called from an interrupt whose priority is higher than MAX_SYSCALL_INTERRUPT_PRIORITY.
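To make the result codes concrete, here is a host-side model of the binary semaphore semantics, built on `std::mutex` and `std::condition_variable`. The class `BinarySemaphoreModel`, the `Result` enum and `kInfiniteTimeout` are stand-ins named after the text, not the MAKS API. The model also wakes waiters in whatever order the standard library chooses, not by priority.

```cpp
#include <chrono>
#include <condition_variable>
#include <cstdint>
#include <limits>
#include <mutex>

// Stand-ins for ResultOk / ResultTimeout / ResultErrorInvalidState.
enum class Result { Ok, Timeout, ErrorInvalidState };

// Stand-in for INFINITE_TIMEOUT.
constexpr std::uint32_t kInfiniteTimeout = std::numeric_limits<std::uint32_t>::max();

class BinarySemaphoreModel {
public:
    // is_empty == true means the semaphore starts closed.
    explicit BinarySemaphoreModel(bool is_empty = true) : open_(!is_empty) {}

    Result Wait(std::uint32_t timeout_ms) {
        std::unique_lock<std::mutex> lock(m_);
        auto is_open = [this] { return open_; };
        if (timeout_ms == kInfiniteTimeout) {
            cv_.wait(lock, is_open);
        } else if (!cv_.wait_for(lock, std::chrono::milliseconds(timeout_ms), is_open)) {
            return Result::Timeout;   // zero timeout: just reports "closed"
        }
        open_ = false;                // passing through closes it again
        return Result::Ok;
    }

    Result Signal() {
        {
            std::lock_guard<std::mutex> lock(m_);
            if (open_) return Result::ErrorInvalidState;  // already open
            open_ = true;
        }
        cv_.notify_one();
        return Result::Ok;
    }

private:
    std::mutex m_;
    std::condition_variable cv_;
    bool open_;
};
```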

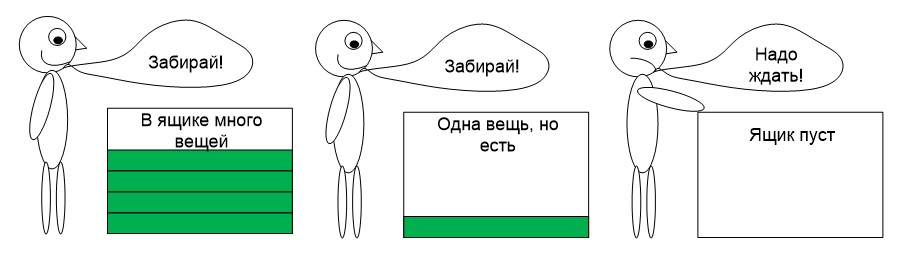

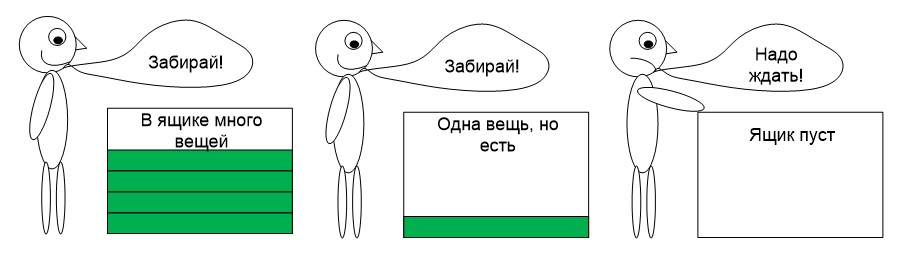

Semaphore

Frankly, I really dislike this name. It would be more accurate to call this synchronization object a “Zavhoz” (Russian for a storekeeper or supply manager), but there is no fighting tradition. Everywhere it is called a semaphore, and the MAKS RTOS is no exception. The difference between an ordinary semaphore (the supply manager) and a binary one is that it can count, keeping track of the resources in the warehouse. If there is at least one resource, a task may pass, and the number of resources decreases. If there are none, the task waits until at least one becomes available.

Such semaphores come in handy when you need to hand out resources. For example, I made 4 buffers for sending data over USB (this bus works not with individual bytes but with byte arrays, so it is most convenient to prepare data in buffers). The worker task can check whether there are free buffers. If there are, it fills them; if not, it waits for the USB driver to finish a transfer and free at least one. In general, whenever you have several resources to distribute rather than one, it is most convenient to let the supply manager (or, in traditional terms, the semaphore) manage them.

This object is implemented by the Semaphore class. Let's look at how it differs from the binary semaphore. First, it has a slightly different constructor:

Semaphore(size_t start_count, size_t max_count);

The first parameter is how many items are initially in the box; the second is the capacity of the box. Sometimes it is useful to start with no resources at all and add them later (by calling, as we remember, Signal()). Sometimes, on the contrary, the box starts full and the resources are then consumed. Partially filled starting states are also possible; readers will come up with such cases themselves when they need them.

Signal() increments the resource counter. If the counter has already reached the maximum, it returns ResultErrorInvalidState. Once again: the function must not be called from interrupts with a priority higher than MAX_SYSCALL_INTERRUPT_PRIORITY.

Wait() lets a task through if the resource count is non-zero, decrementing the counter. If there are no resources, the task blocks until one is returned via Signal(). Again, from an interrupt this function may be called only with a zero timeout.

Now consider the functions that made no sense in the binary case.

GetCurrentCount() returns the current value of the resource counter.

GetMaxCount() returns the maximum possible counter value (useful for finding out the characteristics of a semaphore created by another task).
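The counting version differs from the binary one only in keeping a number instead of a flag. Below is a host-side model in the same spirit, again with hypothetical names (`SemaphoreModel`, `Result`, `kInfiniteTimeout`) rather than the MAKS classes. The test mirrors the four-USB-buffer example.

```cpp
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <mutex>

enum class Result { Ok, Timeout, ErrorInvalidState };
constexpr std::uint32_t kInfiniteTimeout = std::numeric_limits<std::uint32_t>::max();

class SemaphoreModel {
public:
    SemaphoreModel(std::size_t start_count, std::size_t max_count)
        : count_(start_count), max_(max_count) {}

    // Takes one resource, waiting up to timeout_ms for one to appear.
    Result Wait(std::uint32_t timeout_ms) {
        std::unique_lock<std::mutex> lock(m_);
        auto available = [this] { return count_ > 0; };
        if (timeout_ms == kInfiniteTimeout) {
            cv_.wait(lock, available);
        } else if (!cv_.wait_for(lock, std::chrono::milliseconds(timeout_ms), available)) {
            return Result::Timeout;
        }
        --count_;
        return Result::Ok;
    }

    // Returns one resource; refuses to go beyond max_count.
    Result Signal() {
        {
            std::lock_guard<std::mutex> lock(m_);
            if (count_ >= max_) return Result::ErrorInvalidState;
            ++count_;
        }
        cv_.notify_one();
        return Result::Ok;
    }

    std::size_t GetCurrentCount() const {
        std::lock_guard<std::mutex> lock(m_);
        return count_;
    }
    std::size_t GetMaxCount() const { return max_; }

private:
    mutable std::mutex m_;
    std::condition_variable cv_;
    std::size_t count_;
    const std::size_t max_;
};
```

For the USB case, `SemaphoreModel free_buffers(4, 4);` starts with all four buffers free. The producer calls `Wait()` before filling a buffer, and the driver calls `Signal()` after sending one.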

Mutex

The name of this object comes from the words MUTual EXclusion: with its help the system provides mutually exclusive access to a single resource. With semaphores, some tasks waited to pass while others opened the semaphore. With a mutex, everyone tries to capture the resource, and the system grants it.

In short, the essence of a mutex is captured by the Russian saying “whoever gets up first gets the slippers”. There is a protected object, the “slippers”. The husband wakes up and asks for them, and the system gives them to him for exclusive use. The wife and son ask for them too and are blocked. As soon as the husband returns the slippers to the system, the wife receives them. When she returns them, the son gets them. When he returns them, the object becomes free, and the next task to request it will get it without waiting.

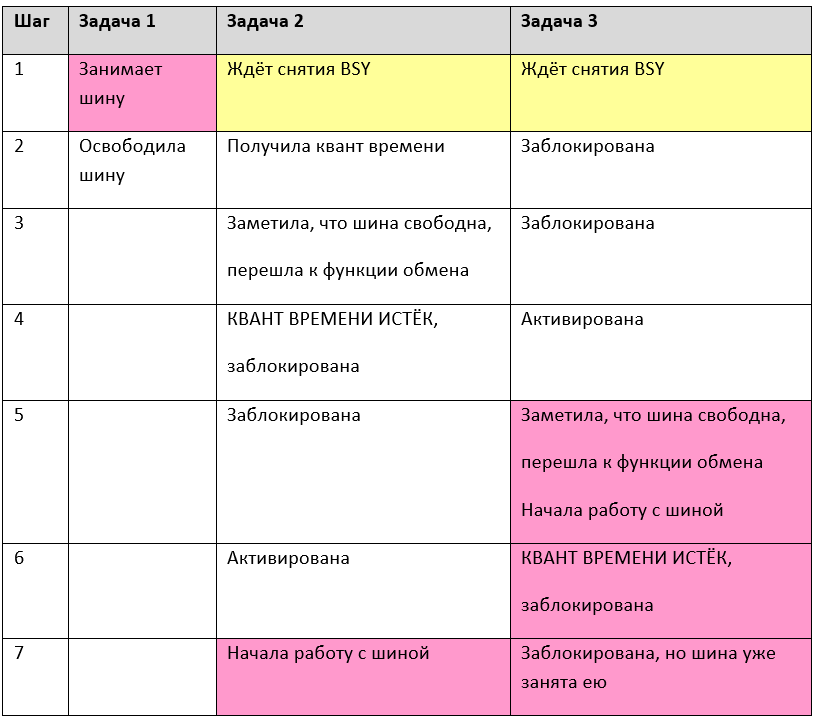

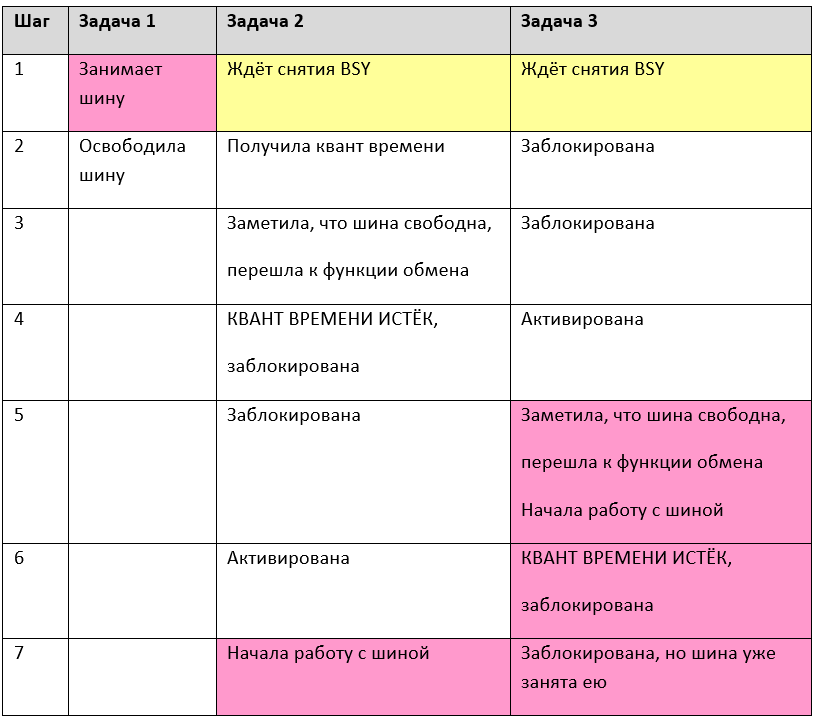

In microcontrollers, a typical resource that needs this kind of protection is a port (SPI, I2C, etc.) used by several tasks. We have already seen that several dissimilar devices can share one physical channel. In a classic TV, for example, a single I2C bus may carry a video processor, an audio processor, a teletext processor and a tuner, each served by a different task. Why waste processor time waiting for the BSY bit to clear? Besides, collisions are still possible. Consider three tasks that rely only on the port's BSY bit, executing them step by step.

As you can see, at step 7 two tasks try to take control of the bus at the same time. If the bus were protected by a mutex, this would not happen. Moreover, at conditional step 1 (in reality, many steps during which tasks 2 and 3 keep polling) the tasks waste time quanta. A mutex solves this problem too: all waiting tasks are blocked.

Sometimes a developer gets carried away with mutexes, and a task ends up capturing the same mutex several times. Most likely this happens in nested functions. Function 1 captures the mutex and calls function 2, which calls function 3, which calls function 4 (written a year ago), which also tries to capture the same mutex. To avoid a deadlock in such cases, create recursive mutexes. One task can capture such a mutex many times; it only has to release it as many times as it captured it. In Windows all mutexes are recursive, but on weak microcontrollers that approach would waste resources, so in the MAKS RTOS mutexes are not recursive by default.

Consider the basic functions of the Mutex class, starting with its constructor:

Mutex(bool recursive = false);

The constructor argument specifies whether the mutex is recursive.

The Lock() function captures the mutex. Its argument is the timeout. As always, special values can be used: zero (return immediately without waiting) or INFINITE_TIMEOUT (wait as long as it takes). If the mutex is captured, ResultOk is returned; if the timeout expires, ResultTimeout. If a task tries to capture a non-recursive mutex that it already owns, the result is ResultErrorInvalidState. A mutex cannot be captured in an interrupt; an attempt to do so returns ResultErrorInterruptNotSupported.

The Unlock() function releases the mutex. Call it at the end of the protected section.

The IsLocked() function tells you whether the mutex is captured or free without capturing it. Under preemptive multitasking the result may become stale before you even examine it, but under cooperative multitasking this function can be quite useful.
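The ownership rules for plain and recursive mutexes fit in a small single-threaded model. `MutexModel`, `TryLock` and the explicit task ids are hypothetical. The model covers only the zero-timeout path: where a real RTOS would block, it returns `Timeout`. Its return value for an `Unlock()` by a non-owner is also an assumption.

```cpp
#include <cstddef>

enum class Result { Ok, Timeout, ErrorInvalidState };

class MutexModel {
public:
    explicit MutexModel(bool recursive = false) : recursive_(recursive) {}

    // Zero-timeout capture attempt on behalf of task `task`.
    Result TryLock(int task) {
        if (depth_ == 0) { owner_ = task; depth_ = 1; return Result::Ok; }
        if (owner_ != task) return Result::Timeout;         // held by someone else
        if (!recursive_) return Result::ErrorInvalidState;  // re-capture of a plain mutex
        ++depth_;                                           // recursive re-entry
        return Result::Ok;
    }

    // Each successful capture needs one matching Unlock().
    Result Unlock(int task) {
        if (depth_ == 0 || owner_ != task) return Result::ErrorInvalidState;
        if (--depth_ == 0) owner_ = -1;                     // free after the last one
        return Result::Ok;
    }

    bool IsLocked() const { return depth_ != 0; }

private:
    bool recursive_;
    int owner_ = -1;
    std::size_t depth_ = 0;
};
```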

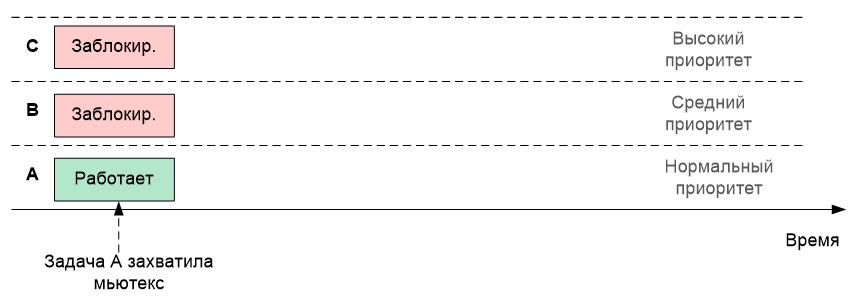

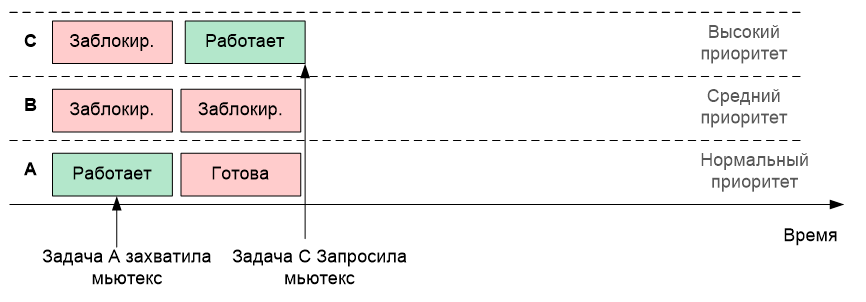

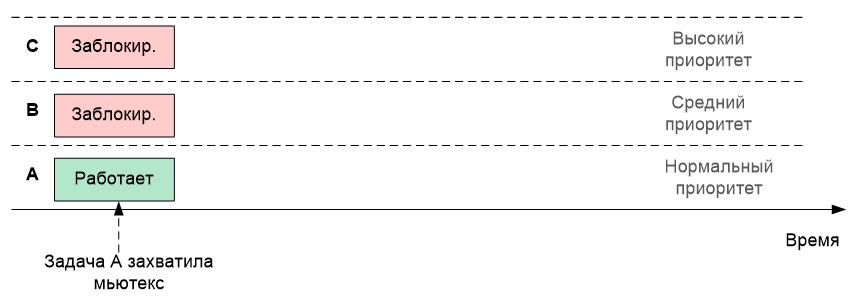

Now a word about priority inheritance. Suppose the system has task A with normal priority, task B with above-normal priority and task C with high priority. Suppose tasks B and C are blocked, and A has meanwhile captured the mutex. Let's draw this, placing the tasks one above another (the higher the priority, the higher the task in the figure).

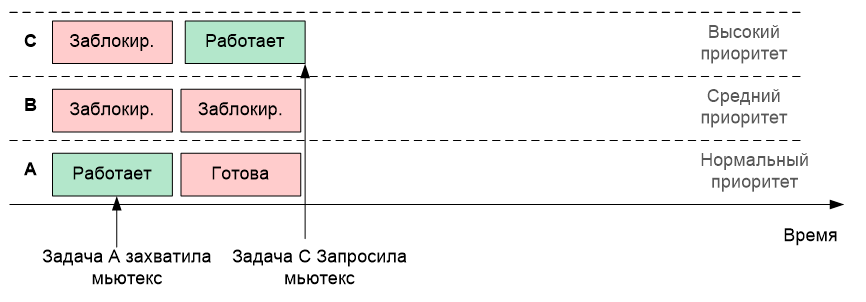

Now task C is unblocked. Having the highest priority, it starts running. And suppose it also wants to capture the same mutex.

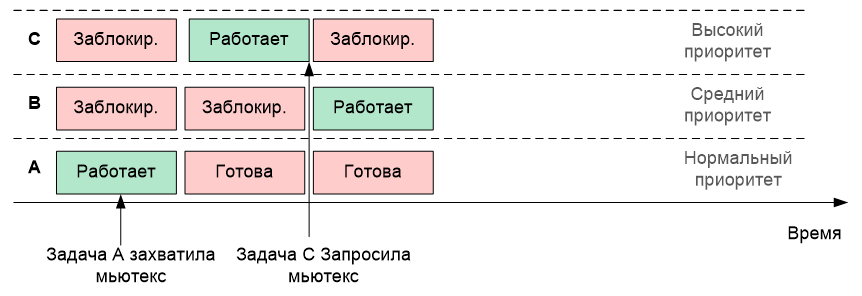

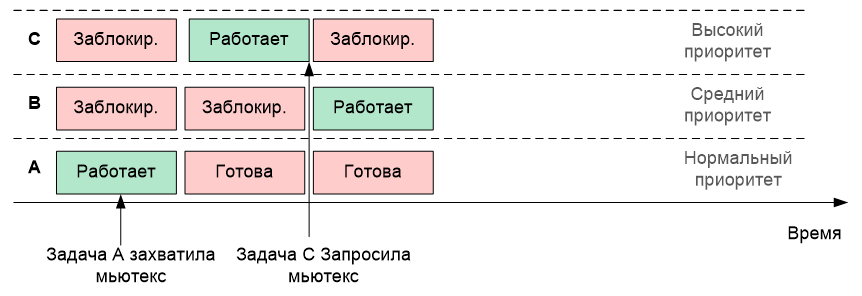

But the mutex is owned by task A! By the standard logic, since the mutex is taken, task C is blocked until it is released. And then task B is suddenly unblocked (say, it was waiting for some other resource and that resource became free). Since B's priority is higher than A's, B is the one that runs.

What do we have? High-priority task C cannot run because it is waiting for the mutex to be released. And the mutex cannot be released, because its owner has been preempted by task B, whose priority is higher than the owner's but lower than that of the unfairly blocked task C.

To prevent this, while the owner holds the mutex it is given the priority of the highest-priority task currently waiting for that mutex. This mechanism (priority inheritance) lets the mutex be released sooner.
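The scenario with tasks A, B and C reduces to comparing effective priorities. In this sketch `EffectivePriority` and `Pick` are hypothetical helpers. Larger numbers are assumed to mean higher priority (A = 1, B = 2, C = 3), and a tie goes to the first task passed.

```cpp
#include <algorithm>
#include <vector>

// While a task owns a mutex, it runs at the highest of its own base
// priority and the priorities of the tasks blocked on that mutex.
int EffectivePriority(int owner_base, const std::vector<int>& waiter_priorities) {
    int p = owner_base;
    for (int w : waiter_priorities) p = std::max(p, w);
    return p;
}

// A priority-based scheduler choosing between two ready tasks.
char Pick(char a, int pa, char b, int pb) { return pa >= pb ? a : b; }
```

Without inheritance, `Pick('A', 1, 'B', 2)` selects B and C keeps waiting. With inheritance, A runs at `EffectivePriority(1, {3}) == 3`, beats B and releases the mutex sooner.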

This is extremely important to remember: if task A also interacts with some task E that has normal priority, that interaction will suffer for as long as A runs with the inherited priority. Alas, nothing can be done about it within the standard RTOS concept; it simply has to be taken into account when designing programs.

MutexGuard

Classical structured programming requires every algorithm to have one entry and one exit. In practice, however, fanatically following this principle leads to needlessly complicated code and reduced readability. Consider the following pseudocode:

Here, before every exit from the function, you have to methodically place mutex.Unlock(). A large algorithm may have many such places, and more may be added later. Sooner or later the programmer will forget to unlock the mutex somewhere, and the program will hang. And this despite the fact that mankind stayed up nights inventing OOP in general and class destructors in particular!
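The standard remedy is RAII: take the lock in a constructor and release it in a destructor, so that every exit path, including early returns, unlocks automatically. Below is a minimal host-side sketch with hypothetical `CountingMutex` and `GuardModel` types. They are stand-ins, not the MAKS `Mutex`/`MutexGuard`; for standard mutexes, `std::lock_guard` plays the same role.

```cpp
#include <mutex>

// Stand-in mutex that counts lock/unlock calls, so we can check that
// every exit path releases it.
class CountingMutex {
public:
    void lock()   { m_.lock(); ++locks_; }
    void unlock() { ++unlocks_; m_.unlock(); }
    int locks() const { return locks_; }
    int unlocks() const { return unlocks_; }
private:
    std::mutex m_;
    int locks_ = 0;
    int unlocks_ = 0;
};

// Lock in the constructor, unlock in the destructor.
template <class M>
class GuardModel {
public:
    explicit GuardModel(M& m) : m_(m) { m_.lock(); }
    ~GuardModel() { m_.unlock(); }
    GuardModel(const GuardModel&) = delete;
    GuardModel& operator=(const GuardModel&) = delete;
private:
    M& m_;
};

CountingMutex g_bus_mutex;

// Several exit points, none of which needs an explicit unlock.
int ProcessCommand(int cmd) {
    GuardModel<CountingMutex> guard(g_bus_mutex);
    if (cmd < 0) return -1;   // error exit
    if (cmd == 0) return 0;   // nothing to do
    return cmd * 2;           // normal exit
}
```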

The mutex guard makes use of exactly these destructors. Its interface consists of nothing but a constructor. Here is its description from the Programmer's Guide:

explicit MutexGuard(Mutex& mutex, bool only_unlock = false);

Arguments:

The constructor is passed a mutex, which it captures. The mutex is released when the guard goes out of scope. So the previous example should be rewritten like this:

Event

Semaphores and mutexes are usually used to resolve competition between tasks for resources, but sometimes you simply need to block a task until some condition arises that unblocks it. A typical scenario: an interrupt has occurred, and its handler signals a high-priority deferred-processing task that it should wake up and do some work. Events implement this mechanism.

After reading the previous paragraph, you might think that an event simply duplicates a semaphore. It does not. Let's look at the differences.

The first difference: an event affects only those already waiting for it. If nobody is waiting at the moment, the event goes nowhere. If a task starts waiting a moment after the event has occurred, it is blocked and will be unblocked only by the next event. Whoever was not in time is late. A semaphore, by contrast, opens whether or not anyone is waiting, and the first task to come along passes through.

The second difference: if several tasks are waiting for a semaphore to open, only one of them is unblocked, and the rest wait for the next opening. An event can be configured to unblock everyone who was waiting for it. In that case all waiting tasks move from the “Blocked” state to the “Active” state, and the luckiest one to the “Running” state.

Otherwise, an event behaves like a binary semaphore.

Class constructor:

Event(bool broadcast = true);

The broadcast parameter sets the rule for notifying the tasks waiting for the event: true means all of them are unblocked; false means only the task at the head of the waiting queue is unblocked.

The Raise() function signals the event. It must not be called from an interrupt with a priority higher than MAX_SYSCALL_INTERRUPT_PRIORITY.

The Wait() function is already familiar from the synchronization objects discussed above. It likewise takes a timeout argument, which can likewise be zero or INFINITE_TIMEOUT. This function cannot be called from interrupts.
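Both differences from a semaphore can be seen in a bookkeeping model without real threads: an event is not remembered, and broadcast mode releases everyone. `EventModel`, `StartWaiting` and the integer task ids are hypothetical, and the model ignores priorities and timeouts.

```cpp
#include <cstddef>
#include <deque>
#include <vector>

class EventModel {
public:
    explicit EventModel(bool broadcast = true) : broadcast_(broadcast) {}

    // A task enters Wait() and joins the end of the queue.
    void StartWaiting(int task) { waiting_.push_back(task); }

    // Returns the tasks made ready by this Raise(). Nothing is latched:
    // with an empty queue the event is simply lost.
    std::vector<int> Raise() {
        std::vector<int> released;
        if (waiting_.empty()) return released;
        if (broadcast_) {
            released.assign(waiting_.begin(), waiting_.end());
            waiting_.clear();
        } else {
            released.push_back(waiting_.front());  // head of the queue only
            waiting_.pop_front();
        }
        return released;
    }

    std::size_t WaitingCount() const { return waiting_.size(); }

private:
    bool broadcast_;
    std::deque<int> waiting_;
};
```

A binary semaphore would instead stay open after a `Signal()` with nobody waiting, and let the next `Wait()` through at once.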

Unfortunately, it is impossible to give simple and elegant examples of synchronization objects in use. Anything simple turns into a mere demonstration of function calls. Practically useful examples take too much space: they would need lengthy explanations, the reader would lose the thread by the second page, and the remaining twenty-eight pages would never be read by anyone. So I will refrain from writing such a piece.

The planned third part should describe the adaptation of a large program for a CNC machine, and I am leaving the examples for it. Meanwhile, for those eager for practice, I can recommend the OS unit tests. They are located in the directory ...\maksRTOS\Source\Tests\Unit tests. Here is the list of its subdirectories:

BinarySemaphore

Event

MessageQueue

Mutex

MutexGuard

Scheduler

Semaphore

Better practical examples are hard to think of. Enjoy learning (although it is believed that few readers will make it past the first quarter of the tests).


Critical section

To warm up, consider the CriticalSection class. It is used to enclose regions of code where context switching is not allowed.

As soon as an object of this class comes into scope, all interrupts with priority MAX_SYSCALL_INTERRUPT_PRIORITY or lower are masked. Currently this constant is 5, which masks all interrupts from peripherals and from the system timer but does not block fault exceptions.

When the object goes out of scope, the interrupt mask is restored to the level it had on entry. Thanks to this, you do not have to worry about nested critical sections: only the outermost one does real work. Every inner one does redundant work. On entry it replaces the already raised mask with the same raised level, and on exit it restores that same raised level. Only leaving the outermost critical section restores the original, lower level. In practice, nesting most often occurs when code inside a critical section calls a function that has its own critical section.

Since a critical section changes the interrupt priority masking (that is, it programs the NVIC), it may only be used in code running in privileged mode.

Consider an example (the area highlighted in pink is protected by a critical section, so the task is guaranteed not to be preempted there).


```cpp
void ProfEye::Tune()
{
    ProfData::m_empty_call_overhead = 0;
    ProfData::m_empty_constr_overhead = 0;
    ProfData::m_embrace_overhead = 0;

    CriticalSection _cs_;

    loop ( int, i, 1000 )
    {
        PROF_DECL(PE_EMPTY_CALL, empty_call);
        PROF_START(empty_call);
        PROF_STOP(empty_call);
        {
            PROF_EYE(PE_EMBRACE, _embrace_);
            {
                PROF_EYE(PE_EMPTY_CONSTR, _empty_constr_);
            }
        }
    }

    ProfData::m_empty_call_overhead = prof_data[PE_EMPTY_CALL].TimeAvg();
    ProfData::m_empty_constr_overhead = prof_data[PE_EMPTY_CONSTR].TimeAvg();
    ProfData::m_embrace_overhead = prof_data[PE_EMBRACE].TimeAvg() + ProfData::ADJUSTMENT - 2 * ProfData::m_empty_constr_overhead;
}
```

Of course, the object's scope can always be narrowed with curly braces.


```cpp
ProfEye::ProfEye(PROF_EYE eye, bool run)
{
    m_eye = eye;
    m_lost = 0;
    m_run = false;
    if ( run )
    {
        {
            CriticalSection _cs_;   // protects only this block
            prof_data[m_eye].Lock(true);
            m_up_eye = m_cur_eye;
            m_cur_eye = this;
        }
        Start();
    }
    else
        m_up_eye = nullptr;
}
```

What belongs in a critical section? For example, operations that must change several variables atomically (the example above does exactly that). Lists and other structures that one task reads while another writes may also require atomic access.

But there are less obvious cases. Suppose a counter is incremented in one task and decremented in another. The operation looks perfectly atomic:

```cpp
cnt++;
```

But it is atomic only at the level of the high-level language. At the assembly level it splits into a read-modify-write sequence:

```
26: cnt++;
0x08004818 6B60 LDR r0,[r4,#0x34]
0x0800481A 1C40 ADDS r0,r0,#1
0x0800481C 6360 STR r0,[r4,#0x34]
```

Suppose the value (to be specific, 10) has already been loaded into register r0, and at that moment the scheduler switches to another task. That task also reads 10, decrements it and stores 9. When control returns to the first task, it adds one not to the variable but to the copy already held in r0, that is, to 10. The result is 11 instead of 10, and the counter is corrupted.

A critical section is well suited to protecting against exactly this kind of situation.

```cpp
{
    CriticalSection cs;   // interrupts masked from here...
    cnt++;
}                         // ...until cs is destroyed here
```

Keep in mind, though, the overhead of programming the NVIC: instead of three assembly instructions there will be many more.

```
27: CriticalSection cs;
0x0800481A 4668 MOV r0,sp
0x0800481C F7FEFCE8 BL.W _ZN4maks15CriticalSectionC2Ev (0x080031F0)
28: cnt++;
0x08004820 6B60 LDR r0,[r4,#0x34]
0x08004822 1C40 ADDS r0,r0,#1
29: }
0x08004824 6360 STR r0,[r4,#0x34]
0x08004826 4668 MOV r0,sp
0x08004828 F7FEFDA0 BL.W _ZN4maks19InterruptMaskSetterD2Ev (0x0800336C)
```

And that does not even count the bodies of the system routines. We show only the first of them, to give the reader an idea of their complexity:

```
0x080031F0 B510 PUSH {r4,lr}
0x080031F2 2150 MOVS r1,#0x50
0x080031F4 F000F8AE BL.W _ZN4maks19InterruptMaskSetterC2Ej (0x08003354)
0x080031F8 4901 LDR r1,[pc,#4] ; @0x08003200
0x080031FA 6001 STR r1,[r0,#0x00]
0x080031FC BD10 POP {r4,pc}
```

As you can see, even that is not the deepest level of nesting. Still, this is the lesser evil; just avoid entering and leaving critical sections too often.

The critical section is a very powerful but potentially dangerous tool, because multitasking stays disabled until the object goes out of scope. Ideally, a critical section should cover only a few lines. A loop inside it can increase latency significantly. Function calls can increase it even more, especially if the programmer has little idea how long those functions take. Working with some kinds of hardware inside a critical section is downright dangerous. Suppose a programmer wants to be sure that no context switch happens while two bytes are sent over a 10 MHz SPI bus. One bit takes 100 ns, so 16 bits take 1.6 µs. That is acceptable: the next task loses no more than this, which is roughly comparable to the scheduler's own overhead. But sending a 20-character string over a UART at 250 kbit/s (10 bits per character, counting start and stop bits) takes 20 * 10 * 4 µs = 0.8 ms. If such a transfer starts near the end of the task's time quantum, it will “eat” almost the entire quantum of the next task.

In short, critical sections are powerful, but a programmer who uses them takes full responsibility for keeping the system real-time.

Also, never put a task to sleep inside a critical section by calling functions that wait for other resources.

Consider the simplest example: the already familiar task that toggles a port pin, calling the blocking delay function between toggles:

```cpp
virtual void Execute()
{
    while (true)
    {
        GPIOE->BSRR = (1<<nBit);        // set the pin (lower half of BSRR)
        Delay (5);
        GPIOE->BSRR = (1<<(nBit+16));   // reset the pin (upper half of BSRR)
        Delay (5);
    }
}
```

It produces a normal square wave.

Now add a critical section:


```cpp
virtual void Execute()
{
    CriticalSection cs;   // never leaves scope: the loop below is infinite
    while (true)
    {
        GPIOE->BSRR = (1<<nBit);
        Delay (5);
        GPIOE->BSRR = (1<<(nBit+16));
        Delay (5);
    }
}
```

We get a completely different signal.

Zooming in shows that the signal period is completely wrong.

So the critical section is a powerful tool, but use it only when you fully understand what you are doing (or at least keep every step under control).

The warm-up has dragged on: the simplest object took up several pages. Let's move on to something with slightly more complicated logic that nevertheless needs less text: the binary semaphore.

Binary Semaphore

Semaphores were once widely used on railways. They had two states: raised, meaning the train may proceed, and lowered, meaning the train must wait. A dispatcher raised and lowered them. It is the same here. Some task (or tasks) pulls the cable, acting as the dispatcher, while other tasks wait for the way to open. The waiting tasks are blocked, so they do not consume time quanta.

Such a semaphore is implemented by the BinarySemaphore class.

The constructor takes an argument that sets the initial state of the semaphore. By default (is_empty = true) the semaphore is created closed.

explicit BinarySemaphore (bool is_empty = true)

Below we consider only the binary semaphore's own functions (it derives from the plain semaphore, whose full functionality we will cover later).

Tasks that need to pass the semaphore call the Wait() function. Its argument is the timeout in milliseconds. If the task is released within that time, the function returns ResultOk; if the timeout expires, it returns ResultTimeout. To wait indefinitely, pass INFINITE_TIMEOUT.

If the function is called with a zero timeout, it returns immediately, and the result (ResultOk or ResultTimeout) tells whether the semaphore was open or closed.

A task cannot be blocked from an interrupt handler, so when Wait() is called from an interrupt with any non-zero timeout, it returns ResultErrorInterruptNotSupported. With a zero timeout, however, the function may be called from an interrupt as well.

When the function returns ResultOk, the semaphore closes automatically.

As already noted, several tasks can wait on the semaphore at once. The "lucky one" that passes first is chosen as follows: waiting tasks are served in order of decreasing priority, and tasks of equal priority in the order in which they called Wait().

To open the semaphore, call Signal(). If the semaphore is already open, it returns ResultErrorInvalidState, otherwise ResultOk. The function cannot be called from an interrupt whose priority is higher than MAX_SYSCALL_INTERRUPT_PRIORITY.
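The Wait()/Signal() rules above can be modeled on a PC with the C++ standard library. This is a sketch, not the MAKS RTOS code: BinarySemaphoreModel is a hypothetical name, the result codes only mirror the article's names, and priority ordering of waiters, interrupt checks and INFINITE_TIMEOUT are left out.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>

// Result codes named after the article; the values are arbitrary.
enum class Result { Ok, Timeout, ErrorInvalidState };

// Host-side model of the binary semaphore semantics described above.
class BinarySemaphoreModel {
public:
    explicit BinarySemaphoreModel(bool is_empty = true) : open_(!is_empty) {}

    // Pass if open (closing it again), otherwise block for up to timeout_ms.
    // A zero timeout just reports whether the semaphore was open.
    Result Wait(unsigned timeout_ms)
    {
        std::unique_lock<std::mutex> lock(m_);
        if (!cv_.wait_for(lock, std::chrono::milliseconds(timeout_ms),
                          [this] { return open_; }))
            return Result::Timeout;
        open_ = false;               // passing closes the semaphore automatically
        return Result::Ok;
    }

    // Open the semaphore; opening an already open one is an error.
    Result Signal()
    {
        {
            std::lock_guard<std::mutex> lock(m_);
            if (open_) return Result::ErrorInvalidState;
            open_ = true;
        }
        cv_.notify_one();            // release one waiter, if any
        return Result::Ok;
    }

private:
    std::mutex m_;
    std::condition_variable cv_;
    bool open_;
};
```

Note that two Signal() calls in a row do not let two tasks through: the second call is rejected, which is the key difference from the counting semaphore below.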

Semaphore

Frankly, I do not like this name at all. It would be more accurate to call this synchronization object a "quartermaster" (zavkhoz, the keeper of a storeroom), but there is no arguing with tradition: everywhere it is called a semaphore, and MAKS RTOS is no exception. The difference between a plain semaphore (the quartermaster) and a binary one is that it can count the resources in its storeroom. If there is at least one resource, a task may pass (and the resource count goes down). If there are none, the task waits until at least one appears.

Such semaphores come in handy whenever resources need to be allocated. For example, I set up 4 buffers for sending data over USB (this bus works with arrays of bytes rather than single bytes, so it is most convenient to prepare data in buffers). The worker task can then check whether any buffers are free. If there are, it fills them; if not, it waits until the USB driver finishes a transfer and frees at least one. In general, whenever several resources rather than one are allocated somewhere, it is most convenient to let the quartermaster (or, by the traditional name, the semaphore) distribute them.

This object is implemented by the Semaphore class. Let's look at how it differs from the binary semaphore. First, it has a slightly different constructor:

Semaphore (size_t start_count, size_t max_count)

The first parameter is how many items are initially in the box, the second is the size of the box. Sometimes it is useful to start with no resources at all and add them later (by calling, as we remember, Signal()). Sometimes, on the contrary, the resources are loaded to the maximum at the start and then used up. Partial initial loading is also possible; readers will work out those cases themselves when they need them.

The Signal() function increments the resource counter. If the counter is already at the maximum, it returns ResultErrorInvalidState. Once again: the function cannot be called from interrupts with a priority higher than MAX_SYSCALL_INTERRUPT_PRIORITY.

The Wait() function lets a task through if the resource count is non-zero, decrementing the counter. If there are no resources, the task is blocked until they are returned via Signal(). Once again: from an interrupt, this function can only be called with a zero timeout.

Now consider the functions that made no sense in the binary case.

GetCurrentCount() returns the current value of the resource counter.

GetMaxCount() returns the maximum possible counter value (useful for finding out the semaphore's characteristics if it was created by another task).
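A host-side sketch of the counting semaphore, applied to the four USB buffers from the example above. It assumes std::mutex and std::condition_variable as the blocking mechanism; SemaphoreModel is a hypothetical name, the result codes mirror the article's names, and priority ordering of waiters is not modeled. Judging by the descriptions above, a BinarySemaphore corresponds to max_count = 1.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <mutex>

enum class Result { Ok, Timeout, ErrorInvalidState };

// Host-side model of the "quartermaster": a counter of free resources
// that never exceeds max_count. Not the MAKS RTOS implementation.
class SemaphoreModel {
public:
    SemaphoreModel(std::size_t start_count, std::size_t max_count)
        : count_(start_count), max_(max_count) {}

    // Take one resource, waiting up to timeout_ms for one to appear.
    Result Wait(unsigned timeout_ms)
    {
        std::unique_lock<std::mutex> lock(m_);
        if (!cv_.wait_for(lock, std::chrono::milliseconds(timeout_ms),
                          [this] { return count_ > 0; }))
            return Result::Timeout;
        --count_;
        return Result::Ok;
    }

    // Return one resource; the box cannot hold more than max_count.
    Result Signal()
    {
        {
            std::lock_guard<std::mutex> lock(m_);
            if (count_ >= max_) return Result::ErrorInvalidState;
            ++count_;
        }
        cv_.notify_one();
        return Result::Ok;
    }

    std::size_t GetCurrentCount()
    {
        std::lock_guard<std::mutex> lock(m_);
        return count_;
    }
    std::size_t GetMaxCount() const { return max_; }

private:
    std::mutex m_;
    std::condition_variable cv_;
    std::size_t count_;
    const std::size_t max_;
};
```

Starting with start_count = max_count = 4 models the "all buffers free" case: the worker calls Wait() before filling a buffer, and the USB driver calls Signal() when a transfer completes and the buffer is free again.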

Mutex

The name of this object comes from the words MUTually EXclusive: with its help the system provides mutually exclusive access to a single resource. With semaphores, some tasks waited for permission to pass while others opened the semaphore; here, every task tries to capture the resource itself, and the system grants it.

In short, the essence of a mutex is captured by the saying "first up, best dressed" (literally, "whoever gets up first gets the slippers"). There is a protected object: the slippers. The husband woke up and asked for them, and the system gave them to him for exclusive use. The wife and son asked for them too and were blocked. As soon as the husband returned the slippers to the system, the wife got them. When she returned them, the son got them. When he returned them, the object became free, and the next one to ask will get them immediately without waiting.

In microcontrollers, a typical resource that needs this kind of protection is a port (SPI, I2C, etc.) that several tasks try to use. We have already seen that several dissimilar devices can be connected to the same physical channel. In a classic TV, for example, a video processor, an audio processor, a teletext processor and a tuner can all sit on a single I2C bus, and they may be served by different tasks. Why waste processor time waiting for the BSY bit to clear? Besides, collisions are still possible. Consider three tasks that rely solely on polling the port's BSY bit, executed step by step:

As you can see, at step 7 two tasks try to drive the bus at the same time. If the bus were protected by a mutex, this would not happen. Moreover, during conditional step 1 (in reality, many steps during which tasks 2 and 3 are waiting) the tasks waste time quanta. A mutex solves this problem too: all waiting tasks are blocked.
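The effect of the mutex can be modeled on a PC, with std::mutex standing in for the RTOS mutex and threads standing in for tasks. SharedBus and RunTasks are hypothetical names; the counters record how many tasks are inside a bus transaction at once, and with the lock in place the worst overlap is exactly one. Unlike an RTOS mutex, std::mutex gives no priority ordering and no priority inheritance.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// A hypothetical shared bus (think I2C with several devices on it).
struct SharedBus {
    std::mutex mutex;                 // stands in for the RTOS Mutex
    std::atomic<int> active{0};       // tasks currently "driving" the bus
    std::atomic<int> max_active{0};   // worst overlap observed
    long transactions = 0;            // protected by mutex

    void Transaction()
    {
        std::lock_guard<std::mutex> guard(mutex);   // whoever is first gets the bus
        int now = ++active;
        int prev = max_active.load();
        // Record the highest overlap seen so far.
        while (now > prev && !max_active.compare_exchange_weak(prev, now)) {}
        ++transactions;                             // "talk to the device"
        --active;
    }
};

// Runs `tasks` threads, each performing `per_task` bus transactions.
void RunTasks(SharedBus& bus, int tasks, int per_task)
{
    std::vector<std::thread> threads;
    for (int t = 0; t < tasks; ++t)
        threads.emplace_back([&bus, per_task] {
            for (int i = 0; i < per_task; ++i) bus.Transaction();
        });
    for (auto& th : threads) th.join();
}
```

Threads that find the mutex taken sleep inside lock() instead of spinning on a status bit, which mirrors the point that waiting tasks are blocked rather than burning time quanta.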

Sometimes a developer gets carried away with mutexes, and a task may capture the same mutex several times. Most likely this happens in nested functions: function 1 captures the mutex, then calls function 2, which calls function 3, which calls function 4 (written a year ago), which tries to capture the same mutex again. To prevent a deadlock in such cases, recursive mutexes should be used. One task can capture such a mutex many times; it only has to release it as many times as it captured it. In Windows all mutexes are recursive, but on weak microcontrollers this approach would waste resources, so in MAKS RTOS mutexes are not recursive by default.

Let's look at the main functions of the Mutex class. First of all, its constructor:

Mutex(bool recursive = false);

The constructor argument specifies whether the type is recursive or not.

The Lock() function captures the mutex. Its argument is the timeout. As usual, special values can be used: a zero timeout (return immediately without waiting) or INFINITE_TIMEOUT (wait until success). If the mutex was captured, ResultOk is returned; if the timeout expires, ResultTimeout is returned. If a task tries to capture a non-recursive mutex that it already owns, the result is ResultErrorInvalidState. A mutex cannot be captured in an interrupt; an attempt to do so returns ResultErrorInterruptNotSupported.

The Unlock() function frees the mutex. It should be called at the end of the protected section.

The IsLocked() function tells whether the mutex is captured or free without capturing it. With preemptive multitasking the result may become stale before it is even examined, but with cooperative multitasking this function can be quite useful.
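The difference between recursive and non-recursive mutexes in the "function 1 ... function 4" scenario can be shown with a small ownership model. It is a sketch under stated assumptions: MutexModel, Function1 and Function4 are hypothetical names, blocking and timeouts are deliberately left out, and only the re-capture rule described above is modeled (the owner re-locking a non-recursive mutex gets ResultErrorInvalidState instead of deadlocking).

```cpp
#include <cassert>
#include <thread>

enum class Result { Ok, ErrorInvalidState };

// Ownership bookkeeping of a mutex, modeled on the description above.
// Waiting for another owner is not implemented; this is not MAKS code.
class MutexModel {
public:
    explicit MutexModel(bool recursive = false) : recursive_(recursive) {}

    Result Lock()
    {
        const auto me = std::this_thread::get_id();
        if (depth_ > 0 && owner_ == me) {
            if (!recursive_) return Result::ErrorInvalidState; // would self-deadlock
            ++depth_;                                         // recursive: count it
            return Result::Ok;
        }
        assert(depth_ == 0 && "model does not implement waiting for other owners");
        owner_ = me;
        depth_ = 1;
        return Result::Ok;
    }

    void Unlock() { assert(depth_ > 0); --depth_; }
    bool IsLocked() const { return depth_ > 0; }

private:
    bool recursive_;
    std::thread::id owner_{};
    unsigned depth_ = 0;
};

// "Function 4, written a year ago": captures the same mutex again.
Result Function4(MutexModel& m)
{
    Result r = m.Lock();
    if (r == Result::Ok) m.Unlock();   // release exactly what was captured
    return r;
}

// "Function 1": holds the mutex and, through the call chain, reaches Function4.
Result Function1(MutexModel& m)
{
    m.Lock();                          // first capture always succeeds here
    Result inner = Function4(m);
    m.Unlock();
    return inner;
}
```

With the default (non-recursive) mutex the inner capture is refused; with a recursive one it succeeds, and the mutex is free again only after the matching number of Unlock() calls.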

Now it is worth explaining priority inheritance. Suppose the system has task A with normal priority, task B with elevated priority and task C with high priority. Suppose tasks B and C are blocked, and meanwhile A has managed to capture the mutex. Let's draw this, placing the tasks one above another (the higher the priority, the higher the task in the figure):

Now task C is unblocked. Having the highest priority, it naturally starts executing. And suppose it also wants to capture the same mutex.

But the mutex belongs to task A! By the standard logic, since it is taken, task C is blocked until it is released. And then task B is unexpectedly unblocked (say, it was waiting for some other resource, and that resource was freed). Since B's priority is higher than A's, it is B that runs.

What do we have? High-priority task C cannot run because it is waiting for the mutex to be released. And the mutex cannot be released, because its owner A has been preempted by task B, whose priority is higher than that of the mutex owner but lower than that of the unfairly blocked task C.

To prevent this, while a task owns a mutex it is given the priority of the highest-priority task currently waiting for that mutex. This mechanism, priority inheritance, brings forward the moment the mutex is released.

This is extremely important to remember: if task A also interacts with some task E that also has normal priority, that interaction will be disrupted for the duration of the priority inheritance (while A runs at the inherited priority, E cannot preempt it). Alas, nothing can be done about this within the standard RTOS concept; it simply has to be taken into account when designing programs.
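The A/B/C scenario can be replayed in a toy scheduler. Everything here is hypothetical (Task, MutexState, EffectivePriority and PickNext are illustrative names, priorities are plain integers where larger means higher), and only the selection rule is modeled: with inheritance on, the owner's effective priority is raised to the highest priority among the tasks waiting for its mutex.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

struct Task {
    std::string name;
    int base_priority;   // higher number = higher priority
    bool ready;          // false while blocked
};

struct MutexState {
    const Task* owner = nullptr;
    std::vector<const Task*> waiters;   // tasks blocked on this mutex
};

// Own priority, raised to the highest waiter's priority if t owns the mutex.
int EffectivePriority(const Task& t, const MutexState& m, bool inheritance)
{
    int p = t.base_priority;
    if (inheritance && m.owner == &t)
        for (const Task* w : m.waiters) p = std::max(p, w->base_priority);
    return p;
}

// Picks the ready task with the highest effective priority.
const Task* PickNext(const std::vector<const Task*>& tasks, const MutexState& m,
                     bool inheritance)
{
    const Task* best = nullptr;
    for (const Task* t : tasks)
        if (t->ready && (!best || EffectivePriority(*t, m, inheritance) >
                                      EffectivePriority(*best, m, inheritance)))
            best = t;
    return best;
}
```

Without inheritance the scheduler picks B, and C stays stuck behind the mutex owned by A. With inheritance A runs at C's priority, finishes the protected section and releases the mutex, and only then does B get the processor.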

Mutex Guard

Classical structured programming prescribes a single entry and a single exit for any algorithm. In practice, however, fanatical adherence to this principle needlessly complicates the code and hurts readability. Consider the following pseudocode:

m_mutex.Lock();
switch (cond)
{
case 0x00: .... return ResultCode1;   // returns with the mutex still locked!
case 0x02: .... return ResultCode2;
case 0x0a: .... return ResultCode3;
case 0x15: .... return ResultCode4;
}
....
m_mutex.Unlock();

Here, before every exit from the function, you would have to methodically place m_mutex.Unlock(). A large algorithm can contain many such places, and more can be added later. Sooner or later the programmer will forget to unlock the mutex somewhere, and the program will hang. And this despite the fact that humanity lost sleep inventing OOP in general and class destructors in particular!

MutexGuard is exactly the tool that puts destructors to work. Its public interface consists of nothing but a constructor. Here is its description from the Programmer’s Guide:

explicit MutexGuard (Mutex & mutex, bool only_unlock = false);

Arguments:

- mutex: a reference to the mutex;

- only_unlock: if true, the constructor does not capture the mutex. This implies that the mutex has already been captured by an earlier explicit call to the Lock() method.
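A guard of this kind can be modeled in a few lines. In this sketch std::mutex stands in for the RTOS Mutex, and MutexGuardModel and Process() are hypothetical names rather than the MAKS header; it shows the destructor releasing the lock on every return path and the only_unlock flag skipping the capture.

```cpp
#include <cassert>
#include <mutex>

// Minimal model of a MutexGuard-style RAII wrapper (not the MAKS class).
class MutexGuardModel {
public:
    explicit MutexGuardModel(std::mutex& mutex, bool only_unlock = false)
        : mutex_(mutex)
    {
        if (!only_unlock) mutex_.lock();   // otherwise the caller already locked it
    }
    ~MutexGuardModel() { mutex_.unlock(); }   // runs on every exit path
    MutexGuardModel(const MutexGuardModel&) = delete;
    MutexGuardModel& operator=(const MutexGuardModel&) = delete;

private:
    std::mutex& mutex_;
};

std::mutex g_mutex;

// Several return points, but no explicit unlock anywhere.
int Process(int cond)
{
    MutexGuardModel guard(g_mutex);   // the guard must be a named object
    switch (cond) {
    case 0x00: return 1;
    case 0x02: return 2;
    case 0x0a: return 3;
    case 0x15: return 4;
    }
    return 0;
}
```

Whichever branch returns, the guard's destructor runs as the function exits, so the mutex can no longer be left locked by a forgotten Unlock().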

The mutex is passed to the constructor, which captures it, and it is released when the guard goes out of scope. The previous example should therefore be rewritten like this:


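{
    MutexGuard guard(m_mutex);   // captured here; the guard needs a name, since
                                 // "MutexGuard (m_mutex);" would declare a new
                                 // variable called m_mutex instead
    switch (cond)
    {
    case 0x00: .... return ResultCode1;   // released automatically on return
    case 0x02: .... return ResultCode2;
    case 0x0a: .... return ResultCode3;
    case 0x15: .... return ResultCode4;
    }
    ....
}   // released here if no case returned

Event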

Semaphores and mutexes are usually used to resolve competition between tasks for particular resources, but sometimes you simply need to block a task until some condition arises that unblocks it. A typical scenario: an interrupt has occurred, and the handler signals a high-priority deferred-processing task to wake up and do some work. Events implement this mechanism.

After reading the previous paragraph, it may seem that the event simply duplicates the semaphore. But it is not. Let's look at the differences.

The first difference: an event affects only those who are waiting for it. If nobody is waiting at the moment, the event goes nowhere. If someone starts waiting an instant after the event has occurred, it will be blocked and released only by the next event. Whoever was not in time is late. A semaphore, as we remember, opens whether or not anyone is waiting, and the first task to come along passes.

The second difference: if several tasks are waiting for a semaphore to open, only one of them is released, and the rest keep waiting for the next opening. An event can be configured to release everyone who was waiting for it. In that case all waiting tasks move from the "Blocked" state to the "Active" state, and the luckiest one moves to the "Running" state.

Otherwise, the logic of events resembles the logic of the binary semaphore.

The class constructor:

Event (bool broadcast = true);

The broadcast parameter sets the rule for notifying the tasks waiting for the event: true means all waiting tasks are released; false means only the one that is first in the queue for this event is released.

The Raise() function fires the event. It cannot be called from an interrupt with a priority higher than MAX_SYSCALL_INTERRUPT_PRIORITY.

The Wait() function is already familiar from the synchronization objects discussed earlier. It likewise takes a timeout argument, which can likewise be zero or INFINITE_TIMEOUT. This function cannot be called from interrupts.
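Both differences from the semaphore (an event is not remembered, and broadcast mode releases everyone) can be modeled with the C++ standard library. This is a sketch, not the MAKS RTOS code: EventModel and WakeCount are hypothetical names, and in non-broadcast mode the model releases whichever waiter wakes first rather than the first one in a priority-ordered queue.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

enum class Result { Ok, Timeout };

class EventModel {
public:
    explicit EventModel(bool broadcast = true) : broadcast_(broadcast) {}

    // Releases the tasks that are waiting right now; nothing is remembered.
    void Raise()
    {
        std::lock_guard<std::mutex> lock(m_);
        if (waiting_ == 0) return;             // no recipient: the event is lost
        permits_ = broadcast_ ? waiting_ : 1;  // everyone, or just one waiter
        ++generation_;
        cv_.notify_all();
    }

    // Blocks until the next Raise() or until timeout_ms elapses.
    Result Wait(unsigned timeout_ms)
    {
        std::unique_lock<std::mutex> lock(m_);
        const unsigned long my_generation = generation_;
        ++waiting_;
        const bool raised = cv_.wait_for(lock, std::chrono::milliseconds(timeout_ms),
            [&] { return generation_ != my_generation && permits_ > 0; });
        --waiting_;
        if (!raised) return Result::Timeout;
        --permits_;
        return Result::Ok;
    }

    std::size_t Waiting()
    {
        std::lock_guard<std::mutex> lock(m_);
        return waiting_;
    }

private:
    std::mutex m_;
    std::condition_variable cv_;
    const bool broadcast_;
    std::size_t waiting_ = 0;
    std::size_t permits_ = 0;
    unsigned long generation_ = 0;
};

// Starts `waiters` threads waiting on one event, raises it once and
// returns how many of them were released.
int WakeCount(bool broadcast, int waiters)
{
    EventModel ev(broadcast);
    std::atomic<int> released{0};
    std::vector<std::thread> threads;
    for (int i = 0; i < waiters; ++i)
        threads.emplace_back([&] {
            if (ev.Wait(500) == Result::Ok) ++released;
        });
    while (ev.Waiting() < static_cast<std::size_t>(waiters))
        std::this_thread::yield();             // make sure everyone is waiting
    ev.Raise();
    for (auto& t : threads) t.join();
    return released.load();
}
```

The generation counter is what makes the event "forgetful": a task that starts waiting after Raise() has a newer generation and ignores any permits left from that call.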

Examples of working with sync objects

Unfortunately, it is impossible to create simple and elegant examples of synchronization objects. Anything simple ends up as a mere demonstration of function calls, while practically useful code takes too much space. It would need a long explanation, the reader would lose the thread on the second page, and the remaining twenty-eight would never be read by anyone. So I will refrain from writing such a work.

The planned third part should describe the adaptation of a large program for a CNC machine, and I am saving the examples for it. For those who crave practice, I can recommend the unit tests for the OS. They are located in the directory ...\maksRTOS\Source\Tests\Unit tests. Here is the list of subdirectories:

- BinarySemaphore
- Event
- MessageQueue
- Mutex
- MutexGuard
- Scheduler
- Semaphore

It is hard to come up with better practical examples. Enjoy studying them (although it is said that few readers make it even a quarter of the way through the tests).

Source: https://habr.com/ru/post/338682/
