Apache® Ignite ™ + Persistent Data Store - In-Memory penetrates discs. Part I - Durable Memory

In Apache Ignite , starting from version 2.1, there appeared its own persistence implementation.

In order to build this mechanism in its modern design, dozens of man-years left, which were mainly spent on building distributed fault-tolerant transactional storage with SQL support, left.

')

It all started with the fundamental problems of the previous mechanism, which allowed to integrate the In-Memory Data Grid with external permanent storage, for example, Cassandra or Postgres.

Such an approach imposed certain restrictions - for example, it was impossible to perform SQL or distributed computing on top of data that is not in memory, but in such external storage, cold start and low RTO (Recovery Time Objective) were impossible without significant additional complications.

If you use Apache Ignite Persistence, then you keep all the usual Apache Ignite features — ACID , distributed transactions, distributed SQL99 , access via the Java / .NET API or JDBC / ODBC interfaces, distributed computing, and so on. But now what you use can work both on top of memory and on top of a disk that expands memory on installations from one node to several thousand nodes.

Let's take a look at how Apache Ignite Persistence works inside. Today I will consider its basis - Durable Memory, and in the next publication - the disk component itself.

Terminology

I will make a remark about the terminology. In the context of a cache on an Apache Ignite cluster, I will use the terms “cache” and “table” interchangeably. I will use “cache” more often with reference to internal mechanics, and “table” to SQL. In general, outside Apache Ignite, these concepts may have a slightly different meaning, and outside this article may not always be equivalent. So, taking into account the availability of permanent storage “cache” Apache Ignite does not always fit into the common semantics of the word. As for the “table”, then on the basis of the Apache Ignite cache, several “tables” can be defined with the ability to be accessed using SQL, or no tables are defined at all (then the access will be possible only through the Java / .NET / C ++ API ).

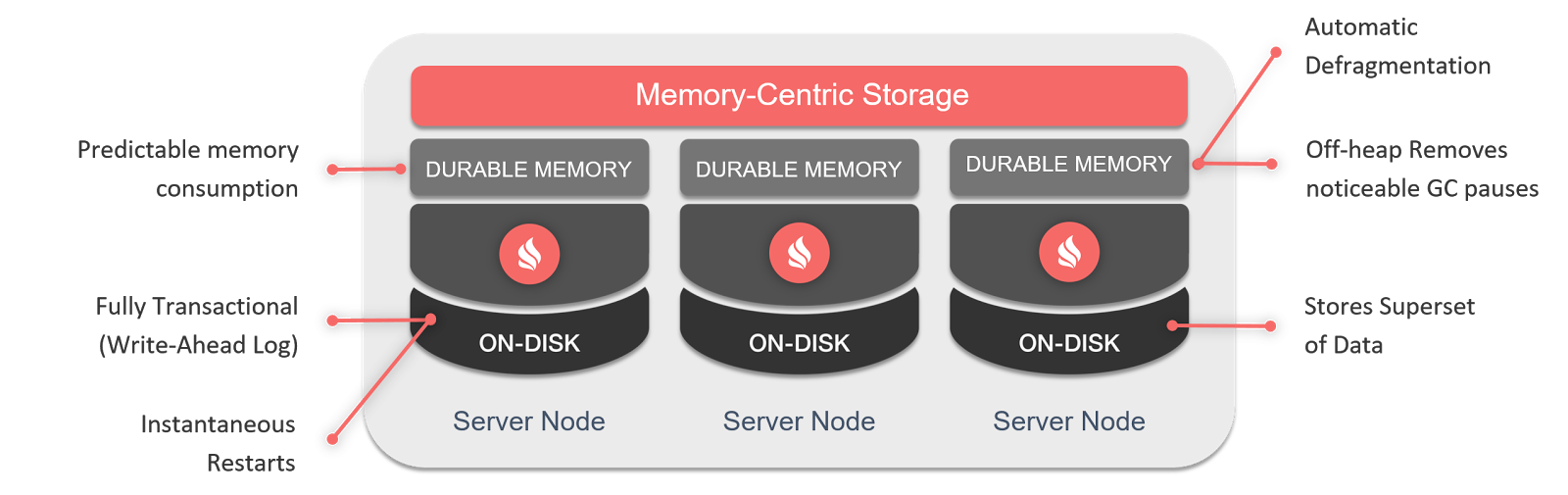

Durable memory

To build efficient mixed storage in memory and on disk, without duplicating a huge amount of code, which would significantly increase the cost of product support, we had to significantly rework the architecture of data storage in memory.

The new architecture - Durable Memory - like Persistence, was tested on large GridGain clients since the end of last year, and debuted publicly since Apache Ignite 2.0. It provides off-heap data storage in page format.

Memory Pages / Pages

The basic storage unit is the “page”, which contains actual data or metadata.

When data is pushed onto a disk when the allocated memory is exhausted, this happens page by page. Therefore, the page size should not be too large, otherwise the efficiency of displacement will suffer, since in large pages hot data will be more likely to be mixed with cold data, which will constantly pull the page up into memory.

But when the pages become small, there are problems of saving massive entries that do not fit on one page, as well as problems of fragmentation and memory allocation (it is too expensive to request memory from the operating system for every small page that contains 1-2 entries).

The first problem - with large records - is solved through the possibility of “smearing” such a record into several pages, each of which stores only a certain segment. The flip side of this approach is lower performance when working with such records due to the need to crawl several pages to obtain complete information. Therefore, if this is a frequent case for you, then it makes sense to consider increasing the default size of the page (initially 2 KiB with the ability to vary from 1-16 KiB) through

MemoryConfiguration.setPageSize(…) .In most situations, it makes sense to override the page size also if it is different from the page size on your SSD (most often it is 4 KiB). Otherwise, some performance degradation may be observed.

The second problem - with fragmentation - is partially solved by online defragmentation built into the platform, which leaves only some small “fireproof residue” on a data page that is too small to fit anything else.

The third problem - the high cost of allocating memory for pages with a large number of them - is solved through the next level of abstraction, "segments".

Memory Segments / Segments

Segments are continuous blocks of memory that are an atomic unit of allocated memory. When the allocated memory ends and if the usage limit has not yet been reached, an additional segment is requested from the OS, which is further internally divided into pages.

In the current implementation, it is planned to allocate up to 16 memory segments per region with a segment size of at least 256 MiB. The actual volume of a segment is defined as the difference between the maximum allowed memory and the original allocation divided by 15 (the 16th segment is the initially allocated memory). For example, if the upper limit is 512 GiB per node and 16 GiB is initially allocated, then the size of the allocated segments will be (512 - 16) / 15 ≈ 33 GiB.

When we talk about segments, it is impossible not to mention the restrictions on memory consumption. Let us consider their implementation in more detail.

It is not optimal to make global settings for all relevant parameters: maximum and initial volumes, extrusion, and so on, since different data may have different storage requirements. One example is online and archival data storage. We may wish to store orders for the last year in the online storage, which is mostly in memory, because there may be hot data, but at the same time, we may want to store the old order history and the past internal transactions on disk, not even temporarily precious memory.

It would be possible to set up restrictions at the level of each cache, but then we would get into a situation where, with a large number of tables — a few hundred or thousands — each would have to allocate a few crumbs of memory, or do overbooking, quickly getting a memory shortage error.

A mixed approach was chosen that allows you to define limits for groups of tables, which brings us to the next level of abstraction.

Regions Memory

The top level of the Durable Memory storage architecture is the “memory region” logical entity, which groups tables that share a single storage area with its own settings, restrictions, and so on.

For example, if you have in your application a cache of goods with data that are critical to reliability and a number of derived aggregates caches that are actively filled but not very critical for loss, then you can define two memory regions: the first, with a 384 GiB consumption restriction and strict guarantees consistency, bind the cache of goods, and to the second, with a limit of 64 GiB and with weakened guarantees, bind all temporary caches that will be shared between these 64 GiB memory.

Regions of memory impose restrictions, define storage settings, and group caches into space-related groups in terms of storage space.

Page types and data retrieval

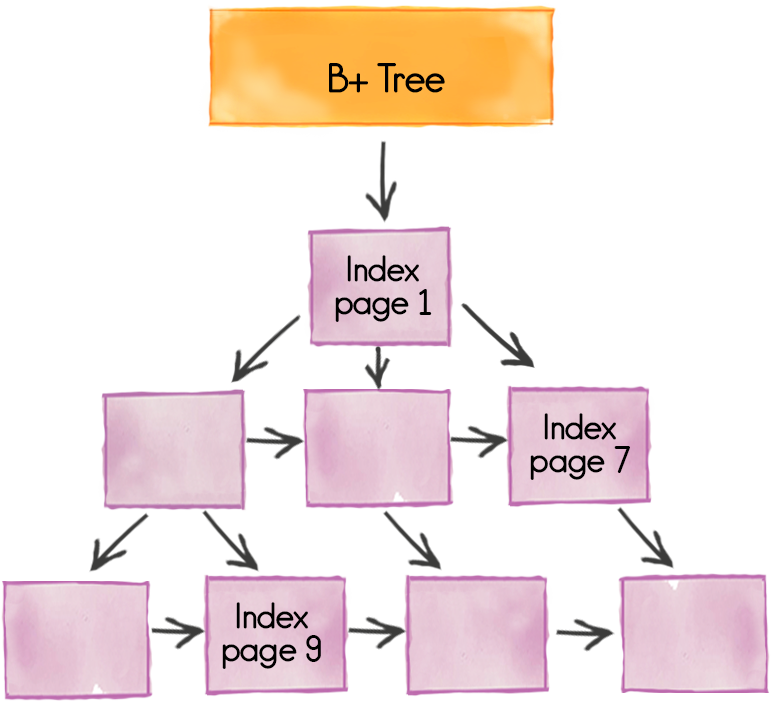

Memory pages are divided into several types, the key of which are data pages and indexes .

Data pages store data directly; they have already been discussed in general terms above. If the record does not fit into one page, it will be spread over several, but this is not a free operation. And if there are a lot of big entries in the application scenario, then it makes sense to increase the page size through

MemoryConfiguration.setPageSize(…) . Data is pushed out to disk by page: the page is either completely in RAM or completely on disk.Index pages are stored as B + trees , each of which can be spread across multiple pages. Index pages are always in memory for maximum prompt access when searching for data.

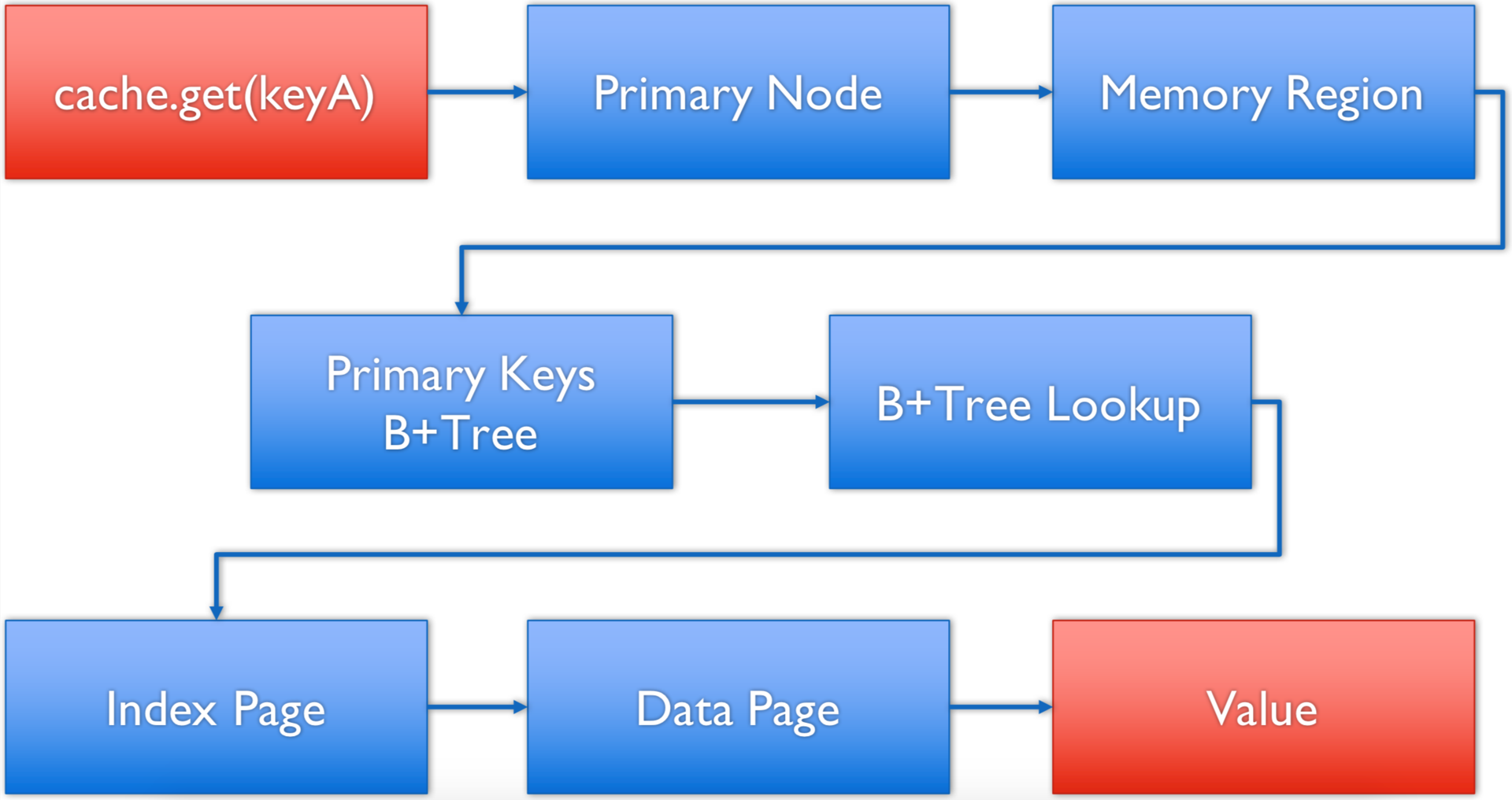

In this scheme, to obtain data by key, we go through the following process:

- the client calls the

cache.get(keyA);methodcache.get(keyA); - the client determines the server node, which is responsible for this key, using the built-in affinity function, and delegates a request over the network to this server node;

- the server node determines the region of memory that is responsible for the cache, according to the key in which the request goes;

- in the corresponding region, the meta-information page is accessed, which contains entry points to B + -trees for the primary key of this cache;

- the search for the required index page for the given key is performed;

- in the index page, the actual key search is performed and the data page that contains it is determined, as well as the offset in this page;

- the data page is accessed, and the value is read from the key.

SQL

SQL queries using H2 generate a two-stage execution plan (in general), which essentially reduces to a MapReduce-like approach. The first stage of the plan is “poured” onto all nodes that are responsible for the table, where the definition of the memory region responsible for the table is similarly performed. Further, if the selection is based on an index, the desired page of indexes is searched for, the locations of the selected values are determined, and it is iterated on. In the case of a full scan, a full iteration occurs over the primary index and, accordingly, all pages are accessed.

crowding out

Starting from version 2.0, preemption works page by page, with the removal of a page from memory. If Persistence is configured, then the copy of the page and the recording in the indexes will remain intact, which will make it possible to later pick up the necessary information from the local disk. If Persistence is explicitly disabled or not configured, then crowding will completely erase the corresponding data from the cluster.

Page-by-page preemption makes it impossible to work with key-value pairs easily, but it is much better placed on Persistence, and with a sufficiently small page size gives good results.

Version 2.1 supports 3 wipe modes :

- disabled - wipeout does not occur, if there is a shortage of memory, an error is thrown;

- random lru - when preempted in a cycle, random 5 data pages are selected, after which one is deleted — the one with the smallest access timestamp (accessed most long ago). The approach with the selection of 5 random pages was chosen because of the complexity of the high-performance implementation of the complete orderliness of access time stamps;

- random 2 lru is similar to the previous one, but each page has two access time stamps — the last and the last but one — and the minimum one is used to select it. This approach allows us to more effectively handle situations, for example, with rare full scans or rare requests for a large part of the data set, which with random lru can create the appearance that some cold pages are hot.

DataPageEvictionMode

/** * Defines memory page eviction algorithm. A mode is set for a specific * {@link MemoryPolicyConfiguration}. Only data pages, that store key-value entries, are eligible for eviction. The * other types of pages, like index or meta pages, are not evictable. */ public enum DataPageEvictionMode { /** Eviction is disabled. */ DISABLED, /** * Random-LRU algorithm. * <ul> * <li>Once a memory region defined by a memory policy is configured, an off-heap array is allocated to track * last usage timestamp for every individual data page. The size of the array is calculated this way - size = * ({@link MemoryPolicyConfiguration#getMaxSize()} / {@link MemoryConfiguration#pageSize})</li> * <li>When a data page is accessed, its timestamp gets updated in the tracking array. The page index in the * tracking array is calculated this way - index = (pageAddress / {@link MemoryPolicyConfiguration#getMaxSize()}</li> * <li>When it's required to evict some pages, the algorithm randomly chooses 5 indexes from the tracking array and * evicts a page with the latest timestamp. If some of the indexes point to non-data pages (index or system pages) * then the algorithm picks other pages.</li> * </ul> */ RANDOM_LRU, /** * Random-2-LRU algorithm: scan-resistant version of Random-LRU. * <p> * This algorithm differs from Random-LRU only in a way that two latest access timestamps are stored for every * data page. At the eviction time, a minimum between two latest timestamps is taken for further comparison with * minimums of other pages that might be evicted. LRU-2 outperforms LRU by resolving "one-hit wonder" problem - * if a data page is accessed rarely, but accidentally accessed once, it's protected from eviction for a long time. */ RANDOM_2_LRU; // ... } * * *

In the next publication, I will discuss in more detail how Durable Memory lays down on the implementation of disk storage ( WAL + Checkpointing), and also focuses on the possibilities of creating snepshots, which provide proprietary GridGain extensions.

Source: https://habr.com/ru/post/338606/

All Articles