Three ideas for improving development efficiency: results of the SberTech machine learning hackathon

We regularly hold external hackathons on a variety of topics. This summer we decided to give our own employees a chance to prove themselves too; after all, they probably want to tackle problems using the data available to them. samorlov, chief development manager in the Department for Development of the Laboratory Cluster of Super-Arrays, describes what the colleagues at SberTech came up with.

Participants were encouraged to develop solutions for Machine Learning that would help predict the timing of improvements and the criticality of bugs. These solutions could increase the efficiency of development at SberTech, including:

The best ideas of the participants are planned to be introduced in SberTech in the near future.

We perfectly understood that it is impossible to create an industrial solution for a limited time hakaton. Nobody harbored illusions and it was clear that the decisions would be largely raw. But why invent tasks from the air, if we have well-defined tasks? As a result, we gave the children a fan (and of course, a monetary reward), and in return received some interesting ideas that can be taken into work.

')

Initial data

The task was solved on the basis of data from Jira internal and external work networks, as well as from the PMU (internal automated project management system). If the Jira data sets were text fields with history and attachments, then the MCC kept more specific information used for planning changes.

The data sets were laid out on the file share for member access. Since this is a hackathon, it was assumed that they themselves will figure out how to work with what. As in real life :)

Iron

If we hardly doubted the knowledge and skills of our colleagues, then the power of desktop computers, to put it mildly, is not transcendent. Therefore, in addition, on request, a small Hadoop cluster was provided. The cluster configuration (80 CPU, 200 GB, 1.5 TB) resembles a single-unit server with an emphasis on computing, but no, it’s still a cluster deployed in Openstack.

Of course, this is a small stand. It is designed for testing solutions and integration of our Data Laboratory and was a greatly reduced copy of the industrial one. But for the hackathon it was enough.

JupyterHUB was involved in the Data Lab, creating separate JupyterNotebook instances. And in order to be able to work with parallel computing, with the help of Cloudera parcels we added several versions of kernels with different sets of python libraries to Jupyter.

As a result, at the entrance, we got the independent work of N users with the ability to use the necessary versions of libraries without disturbing anyone. In addition, parallel computing could be started without much headache (we know that there is a Data Science Workbench from Cloudera, and we are already trying to work with it, but at the time of the hackathon this tool was not yet available).

I place - auto processing of bugs

Purpose: Creating a pipeline for auto processing of bugs in Sberbank-Technology projects.

Authors of the decision: Anna Rozhkova, Pavel Shvets and Mikhail Baranov (Moscow)

The team used Sberbank Online mobile app customer reviews from Google Play and the AppStore as well as information about bugs from Jira as source data.

First, the participants solved the problem of breaking reviews into positive and negative using a tree-based classifier. Then, using negative reviews, identified the main topics that have caused discontent among users. This, for example:

In a separate category were people who wrote that "everything is bad."

With the help of agglomerative hierarchical clustering, the team shared feedback on client issues (the advantage of this approach is the possibility of adding expert opinion, for example, when feedback on goals and contributions can be attributed to one cluster). For example, one of the selected clusters combined the problems with the input on the Asus Zenfone 2 device (the period between the appearance of the first reviews of the problem and the registration of the bug in Jira was 16 days).

Participants suggested reducing the response time to user problems as much as possible by making online feedback processing with auto-creation of bugs on dedicated clusters, taking advantage of the bank - a large number of caring customers (1500 reviews per day). During the work, we managed to achieve accuracy = 86% and precision = 88% in determining negative feedback.

Another solution team - visualization of processes in development. Case was disassembled on the example of Sberbank Online Android (ASBOL).

Participants counted the number of transitions of the status of bugs between team members and painted them in the form of a graph. With this tool, it is easier to make management decisions and evenly distribute the load within the team. In addition, it is clearly visible who is the key member of the team and where there are bottlenecks of the project. Based on this information, it is proposed to automatically assign bugs to specific team members, taking into account their load and the criticality of the bug.

In addition, participants tried to analyze the problem of automatic prioritization of bugs using logistic regression and a naive Bayes classifier. For this, the importance of a bug according to its description, the presence of attachments and other characteristics was determined. However, the model showed the result accuracy = 54% with cross-validation on 3 folds - at the time of delivery of works, the prototype is not suitable for implementation.

According to the team members, the advantages of their models are simplicity, good interpretation of the result and quick work. This is a step towards real-time processing of user feedback using machine learning, allowing real-time interaction with users, identifying and fixing problems, increasing customer loyalty.

Team presentation

II place - optimization of production processes

Author of the decision: Anton Baranov (Moscow)

Tasks:

Anton worked with bugs from Jira. The data set included information on more than 67,000 bugs with the status “completed” from 2011 to 2017. He conducted a search for a solution of the problems posed using Python libraries and other ML libraries.

Anton analyzed and selected signs that affect the final priority of the bug, and based on them built a model for predicting the final priority. In addition, after analyzing and searching for features of various time series, he built models for predicting the number of bugs. The proposed solutions will be useful in testing.

In Agile, the result of predicting the number of defects for this model can be taken into account when planning work in future sprints. Anton's decision will help to more accurately determine the time required to correct problems, which will affect the final performance.

Presentation of the participant

Author of the decision: Nikolay Zheltovsky (Innopolis)

Objective: To create a neural network based prediction system to minimize risks when managing IT projects.

From the source data sets proposed by the organizers, the participant chose to upload the task list from Jira. A task is a separate task for the development of a software component. Each task in the course of its life cycle passes through various states: creation, development, various types of testing and coordination, and closure. There may be several dozen such states.

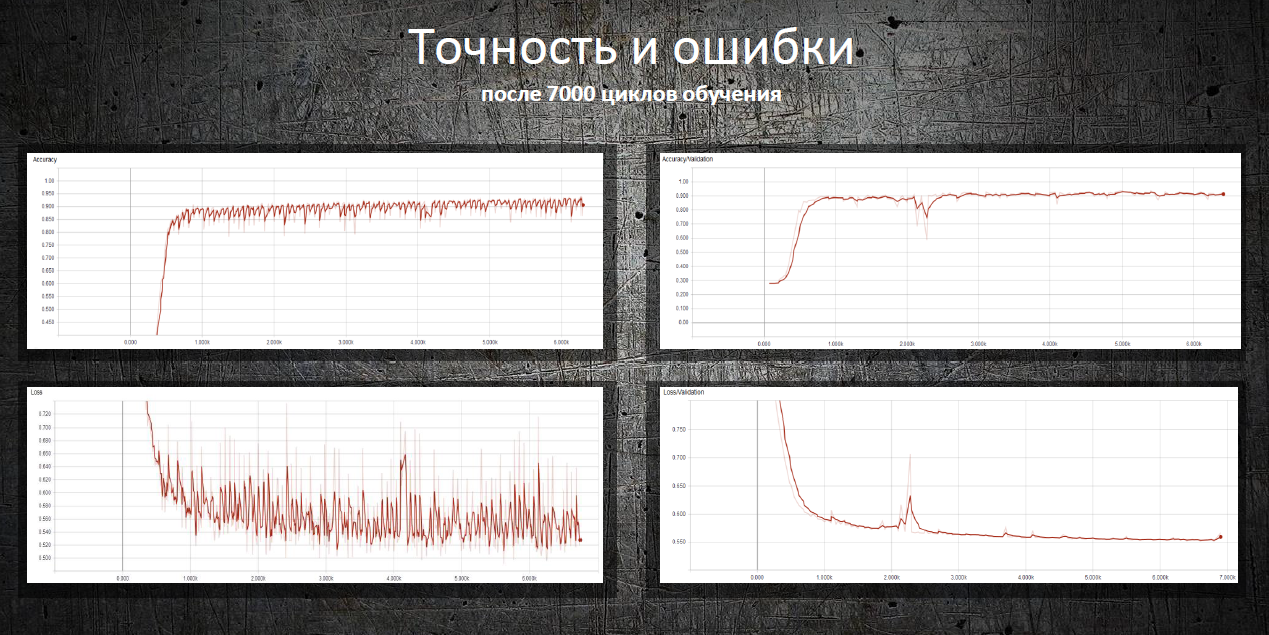

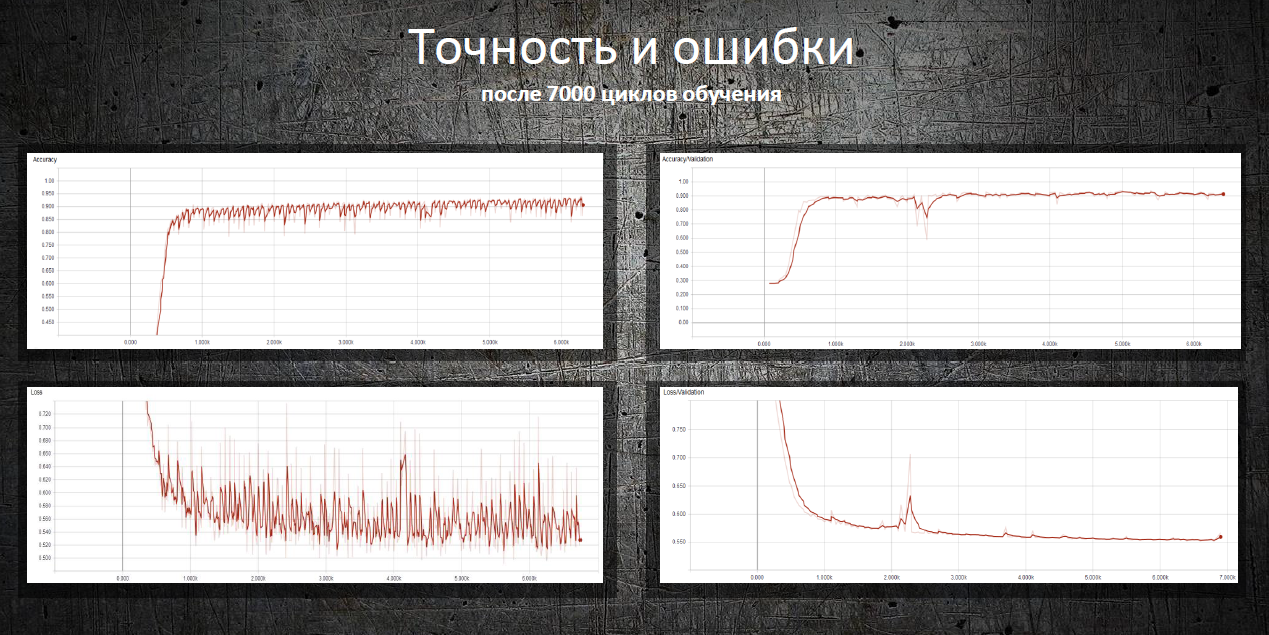

Nikolay built and trained a neural network capable of predicting the transitions of tasks to certain states and predicting the dates of these events, reporting possible critical events or deviations from planned values - important functions for the control process.

To train the neural network, Nikolay restored the sequence of intermediate states for each task in the event log. One of the intermediate states of the problem was sent to the input of the neural network, the output was final, thus the network was trained to predict events. Additionally, derived data were used for training - for example, a table with a time difference between all events in the past; It took into account the hypothesis that the tasks for which work is carried out on weekends can be problematic.

The neural network predicts some critical events with an accuracy of 91-94%. For example, reopening a task. The closing date of the task is predicted with a deviation from 0 to 37 days immediately after its creation. At later stages, when any work is done on the task, the maximum deviation is no more than a week.

Presentation of the participant

Participants were invited to develop machine learning solutions that would help predict how long changes take to implement and how critical bugs are. Such solutions could increase development efficiency at SberTech, including:

- better plan team workload for fixes at different stages of development;

- form the hotfix part of a release;

- plan sprint work by determining the story points reserved for bug fixes;

- reduce the overall number of bugs in the code;

- reduce time to market;

- increase the predictability of solutions and the effectiveness of testing.

The best of the participants' ideas are planned for introduction at SberTech in the near future.

We understood perfectly well that an industrial-grade solution cannot be built within the limited time of a hackathon. Nobody had any illusions, and it was clear that the solutions would be largely raw. But why invent problems out of thin air when we have well-defined ones? As a result, we gave the participants some fun (and, of course, a cash prize) and in return received several interesting ideas that can be taken into work.


Source data

The problems were solved using data from Jira in the internal and external work networks, as well as from the PMU (the internal automated project management system). While the Jira data sets consisted of text fields with history and attachments, the PMU held more specific information used for planning changes.

The data sets were placed on a file share for participants to access. Since this was a hackathon, it was assumed that they would figure out for themselves how to work with which data. Just as in real life :)

Hardware

While we hardly doubted our colleagues' knowledge and skills, the power of their desktop computers is, to put it mildly, modest. So, on request, a small Hadoop cluster was additionally provided. The cluster configuration (80 CPU, 200 GB, 1.5 TB) resembles a single compute-oriented server, but it is in fact a cluster deployed in OpenStack.

Of course, this is a small test stand. It is designed for testing the solutions and integrations of our Data Laboratory and is a greatly reduced copy of the production environment. But it was enough for the hackathon.

The Data Lab used JupyterHub, which creates separate Jupyter Notebook instances. To make parallel computing possible, we used Cloudera parcels to add several kernel versions with different sets of Python libraries to Jupyter.

As a result, N users could work independently and use the library versions they needed without disturbing anyone else. In addition, parallel computations could be started without much of a headache (we know about Cloudera's Data Science Workbench and are already trying it out, but it was not yet available at the time of the hackathon).
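
One common way to offer several Python environments side by side in Jupyter is to register a separate kernelspec for each interpreter. The sketch below only builds the `kernel.json` content. The interpreter paths, environment names and display names are hypothetical, and it is not known how the kernels were actually registered on the hackathon cluster.

```python
import json


def make_kernelspec(python_path, display_name, extra_env=None):
    """Build the content of a Jupyter kernel.json for one interpreter."""
    return {
        # Command Jupyter runs to start the kernel; {connection_file}
        # is substituted by Jupyter at launch time.
        "argv": [python_path, "-m", "ipykernel_launcher",
                 "-f", "{connection_file}"],
        "display_name": display_name,
        "language": "python",
        "env": dict(extra_env or {}),
    }


# Hypothetical interpreters, each with its own set of libraries.
ENVIRONMENTS = {
    "py3-ml": "/opt/envs/py3-ml/bin/python",
    "py2-legacy": "/opt/envs/py2-legacy/bin/python",
}

kernelspecs = {
    name: make_kernelspec(path, "Python ({})".format(name))
    for name, path in ENVIRONMENTS.items()
}

# JSON text for one of the kernels.
kernel_json = json.dumps(kernelspecs["py3-ml"], indent=2)
```

Each dictionary would be saved as `kernel.json` in its own directory under a Jupyter kernels directory, and the `ipykernel` package has to be installed in every interpreter listed.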

1st place: automatic bug processing

Purpose: to create a pipeline for automatic processing of bugs in Sberbank-Technology projects.

Authors of the solution: Anna Rozhkova, Pavel Shvets and Mikhail Baranov (Moscow)

As source data, the team used customer reviews of the Sberbank Online mobile app from Google Play and the App Store, together with information about bugs from Jira.

First, the participants split reviews into positive and negative using a tree-based classifier. Then, working with the negative reviews, they identified the main topics that caused user dissatisfaction. These included, for example:

- updates

- antivirus (root, firmware)

- SMS and payments

A separate category consisted of people who wrote that "everything is bad."

Using agglomerative hierarchical clustering, the team grouped reviews by customer problem (an advantage of this approach is that expert opinion can be added, for example to decide that reviews about goals and deposits belong in one cluster). For example, one of the resulting clusters brought together login problems on the Asus Zenfone 2 device (16 days passed between the first reviews mentioning the problem and the registration of the bug in Jira).
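
To illustrate the idea of agglomerative clustering on review texts, here is a minimal sketch in plain Python. It assumes a bag-of-words representation, Jaccard distance and average linkage; none of these choices, nor the sample reviews, come from the team's solution. The `max_distance` threshold is a hypothetical parameter, and it is the kind of place where expert judgment could be applied.

```python
def tokens(text):
    """Represent a review as a set of lowercase words."""
    return set(text.lower().split())


def jaccard_distance(a, b):
    """1 - |a & b| / |a | b|; 0 for identical sets, 1 for disjoint ones."""
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)


def average_linkage(c1, c2, docs):
    """Mean pairwise distance between the members of two clusters."""
    total = sum(jaccard_distance(docs[i], docs[j]) for i in c1 for j in c2)
    return total / (len(c1) * len(c2))


def agglomerative(texts, max_distance=0.7):
    """Merge the two closest clusters until none are close enough."""
    docs = [tokens(t) for t in texts]
    clusters = [[i] for i in range(len(docs))]
    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = average_linkage(clusters[a], clusters[b], docs)
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        if d > max_distance:
            break  # remaining clusters are too dissimilar to merge
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return [sorted(c) for c in clusters]


# Made-up reviews, for illustration only.
REVIEWS = [
    "cannot login on asus zenfone",
    "login fails on asus zenfone after update",
    "sms code never arrives",
    "payment sms code arrives late",
]

clusters = agglomerative(REVIEWS)  # lists of indices into REVIEWS
```

With these four reviews, the two login complaints end up in one cluster and the two SMS complaints in another.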

The participants proposed cutting the response time to user problems as far as possible by processing reviews online and automatically creating bugs for the identified clusters, exploiting one of the bank's advantages: a large number of engaged customers (1,500 reviews per day). In the course of the work, the team achieved accuracy = 86% and precision = 88% in detecting negative reviews.
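
For readers less familiar with the two metrics, the sketch below shows how accuracy and precision are computed when "negative review" is treated as the positive class. The labels are made up for illustration and are not the team's data.

```python
def confusion_counts(y_true, y_pred, positive):
    """Return (tp, fp, tn, fn) for the chosen positive class."""
    tp = fp = tn = fn = 0
    for truth, guess in zip(y_true, y_pred):
        if guess == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    """Share of all reviews that were classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def precision(tp, fp):
    """Share of reviews flagged as negative that really are negative."""
    return tp / (tp + fp)


# Illustrative labels only: "neg" = negative review, "pos" = positive.
Y_TRUE = ["neg", "neg", "neg", "neg", "pos", "pos", "pos", "pos", "pos", "neg"]
Y_PRED = ["neg", "neg", "neg", "pos", "pos", "pos", "pos", "neg", "pos", "neg"]

tp, fp, tn, fn = confusion_counts(Y_TRUE, Y_PRED, positive="neg")
acc = accuracy(tp, fp, tn, fn)
prec = precision(tp, fp)
```

Precision matters for automatic bug creation in particular, because every false positive would become a spurious bug in the tracker.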

Another of the team's solutions is a visualization of development processes. The case was worked through using Sberbank Online for Android (ASBOL) as an example.

The participants counted the number of bug status transitions between team members and drew them as a graph. With this tool it is easier to make management decisions and to distribute the load evenly within the team. It also clearly shows who the key team members are and where the project's bottlenecks lie. Based on this information, the team proposes assigning bugs to specific team members automatically, taking into account their workload and the criticality of the bug.
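
The counting step behind such a graph can be sketched as follows. The record layout `(bug_id, from_member, to_member)` and the sample data are assumptions; a real Jira changelog would need to be converted into this form first.

```python
from collections import Counter

# Hypothetical handover records extracted from a bug changelog.
HANDOVERS = [
    ("BUG-1", "analyst", "dev_a"),
    ("BUG-1", "dev_a", "tester"),
    ("BUG-2", "analyst", "dev_a"),
    ("BUG-2", "dev_a", "tester"),
    ("BUG-2", "tester", "dev_a"),
    ("BUG-3", "analyst", "dev_b"),
]


def build_transition_graph(handovers):
    """Count directed edges (from_member, to_member) across all bugs."""
    edges = Counter()
    for _bug_id, src, dst in handovers:
        if src != dst:  # ignore status changes that stay with one person
            edges[(src, dst)] += 1
    return edges


def node_load(edges):
    """Total number of handovers each member takes part in."""
    load = Counter()
    for (src, dst), count in edges.items():
        load[src] += count
        load[dst] += count
    return load


edges = build_transition_graph(HANDOVERS)
load = node_load(edges)
busiest = load.most_common(1)[0][0]  # candidate key member or bottleneck
```

The weighted edges can then be passed to any graph drawing tool, and the per-member load is one possible input for an automatic assignment rule.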

In addition, the participants tried to tackle automatic bug prioritization using logistic regression and a naive Bayes classifier. To do this, the importance of a bug was determined from its description, the presence of attachments and other characteristics. However, the model showed accuracy = 54% under 3-fold cross-validation, so at the time of submission the prototype was not suitable for implementation.
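
To make the evaluation procedure concrete, here is a minimal sketch of 3-fold cross-validation around a hand-written multinomial naive Bayes classifier. It covers only the naive Bayes half of the experiment. The sample bugs, the `has_attachment` token and the priority labels are hypothetical, and the team's actual features and implementation are not known.

```python
import math
from collections import Counter, defaultdict


def train_nb(samples):
    """samples: list of (tokens, label). Returns the counts NB needs."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for toks, label in samples:
        class_counts[label] += 1
        word_counts[label].update(toks)
        vocab.update(toks)
    return class_counts, word_counts, vocab


def predict_nb(model, toks):
    """Pick the label with the highest log-posterior (Laplace smoothing)."""
    class_counts, word_counts, vocab = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label in sorted(class_counts):
        score = math.log(class_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for t in toks:
            if t in vocab:  # words never seen in training are skipped
                score += math.log((word_counts[label][t] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


def k_fold_accuracy(samples, k=3):
    """Mean accuracy over k folds; fold i tests on every k-th sample."""
    scores = []
    for fold in range(k):
        test = samples[fold::k]
        train = [s for i, s in enumerate(samples) if i % k != fold]
        model = train_nb(train)
        hits = sum(predict_nb(model, toks) == label for toks, label in test)
        scores.append(hits / len(test))
    return sum(scores) / k


# Made-up bugs: description words plus a token for an attachment.
SAMPLES = [
    ("app crashes on payment has_attachment".split(), "high"),
    ("typo in settings screen".split(), "low"),
    ("crash on login has_attachment".split(), "high"),
    ("wrong color in settings".split(), "low"),
    ("payment crashes app has_attachment".split(), "high"),
    ("typo in help screen".split(), "low"),
]

cv_accuracy = k_fold_accuracy(SAMPLES, k=3)
full_model = train_nb(SAMPLES)
example = predict_nb(full_model, "crash on payment has_attachment".split())
```

Averaging over folds gives a more honest estimate than a single train/test split, which is why a figure such as 54% is a meaningful signal that the features do not yet separate the priorities well.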

According to the team members, the advantages of their models are simplicity, good interpretability of results and speed. This is a step towards real-time processing of user feedback with machine learning, which would make it possible to interact with users in real time, identify and fix problems, and increase customer loyalty.

Team presentation

2nd place: optimization of production processes

Author of the solution: Anton Baranov (Moscow)

Tasks:

- predict the final priority of a bug from information about it, for example its description, detection stage, project, etc.;

- predict, from data on the distribution of bugs over time, the number of bugs of a given type, system, stage, etc. in the forecast period.

Anton worked with bugs from Jira. The data set contained information on more than 67,000 bugs with the status “completed” from 2011 to 2017. He searched for solutions to these problems using Python libraries and other ML libraries.

Anton analyzed and selected the features that affect the final priority of a bug and built a model on them to predict that priority. In addition, after analyzing various time series and searching for patterns in them, he built models for predicting the number of bugs. The proposed solutions will be useful in testing.
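
The post does not say which forecasting models were used. As an illustration of the general setup only, the sketch below turns bug creation dates into a weekly series and applies a moving-average baseline. The dates and the `window` parameter are made up, and a real model would likely be more elaborate.

```python
from collections import Counter
from datetime import date


def weekly_counts(created_dates):
    """Count bugs per ISO (year, week), in chronological order.

    Simplification: weeks with no bugs at all are not represented.
    """
    counts = Counter()
    for d in created_dates:
        iso = d.isocalendar()
        counts[(iso[0], iso[1])] += 1
    return [counts[key] for key in sorted(counts)]


def moving_average_forecast(series, window=3):
    """Forecast the next value as the mean of the last `window` values."""
    if not series:
        raise ValueError("series must not be empty")
    recent = series[-window:]
    return sum(recent) / len(recent)


# Hypothetical bug creation dates covering three consecutive weeks.
CREATED = [
    date(2017, 1, 2), date(2017, 1, 3), date(2017, 1, 4),
    date(2017, 1, 9), date(2017, 1, 10),
    date(2017, 1, 16), date(2017, 1, 17), date(2017, 1, 18),
    date(2017, 1, 19),
]

series = weekly_counts(CREATED)
forecast = moving_average_forecast(series, window=3)
```

A baseline like this is mainly useful as a yardstick: a more sophisticated model is worth adopting only if it beats it on held-out weeks.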

In Agile, the predicted number of defects from this model can be taken into account when planning work for future sprints. Anton's solution will help to determine more accurately the time needed to fix problems, which will affect overall productivity.

Presentation of the participant

3rd place: risk prediction

Author of the solution: Nikolay Zheltovsky (Innopolis)

Objective: to create a neural-network-based prediction system to minimize risks in IT project management.

From the source data sets offered by the organizers, the participant chose the export of the task list from Jira. A task is a separate unit of work for developing a software component. Over its life cycle, each task passes through various states: creation, development, various kinds of testing and approval, and closure. There may be several dozen such states.

Nikolay built and trained a neural network capable of predicting the transitions of tasks to particular states and the dates of those events, and of reporting possible critical events or deviations from planned values, all of which are important functions for project control.

To train the neural network, Nikolay reconstructed the sequence of intermediate states for each task from the event log. One of the task's intermediate states was fed to the network's input and the final state served as the output, so the network learned to predict events. Derived data were also used for training, for example a table of the time differences between all past events; this also reflected the hypothesis that tasks on which work is done at weekends may be problematic.
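
The data preparation described above can be sketched roughly as follows. The sketch covers only the reconstruction of state sequences and the derived features, not the neural network itself. The log layout, field names and sample events are hypothetical.

```python
from datetime import datetime

# Hypothetical event log rows: (task_id, new_state, timestamp).
EVENT_LOG = [
    ("TASK-1", "testing", "2017-06-10 15:00"),   # a Saturday
    ("TASK-1", "created", "2017-06-01 10:00"),
    ("TASK-1", "closed", "2017-06-12 12:00"),
    ("TASK-1", "development", "2017-06-02 09:00"),
]


def restore_sequences(event_log):
    """Group events by task and sort each task's events by time."""
    sequences = {}
    for task_id, state, stamp in event_log:
        when = datetime.strptime(stamp, "%Y-%m-%d %H:%M")
        sequences.setdefault(task_id, []).append((when, state))
    for events in sequences.values():
        events.sort()
    return sequences


def training_rows(events):
    """One row per intermediate state, with the final state as target."""
    final_state = events[-1][1]
    rows = []
    for idx in range(len(events) - 1):
        history = events[: idx + 1]
        gaps = [
            (later[0] - earlier[0]).total_seconds() / 3600.0
            for earlier, later in zip(history, history[1:])
        ]
        rows.append({
            "state": events[idx][1],
            "hours_between_events": gaps,
            # Saturday is weekday 5, Sunday is weekday 6.
            "weekend_work": any(w.weekday() >= 5 for w, _ in history),
            "target": final_state,
        })
    return rows


sequences = restore_sequences(EVENT_LOG)
rows = training_rows(sequences["TASK-1"])
```

Each row pairs what is known at an intermediate point in the task's life with the outcome to be predicted. Before being fed to a network, the state names and variable-length gap lists would still have to be encoded as fixed-size numeric vectors.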

The neural network predicts some critical events, such as the reopening of a task, with an accuracy of 91-94%. Immediately after a task is created, its closing date is predicted with a deviation of 0 to 37 days. At later stages, once some work has been done on the task, the maximum deviation is no more than a week.

Presentation of the participant

Source: https://habr.com/ru/post/338426/
