Using PowerShell for IT Security, Part III: Classification on a Budget

Last time, with a few lines of PowerShell code, I launched an entirely new software category: file access analytics. My 15 minutes of fame is almost over, but I was able to show that PowerShell offers practical options for monitoring file access events. In this post, I'll finish work on the file access analytics tool and then move on to data classification in PowerShell.

Event-Based Analytics

As a reminder, my analytics script used the Register-WmiEvent cmdlet to watch for file access events in a specific folder. I also made up a baseline for the event rate to compare current activity against. (For those who are seriously interested, there is a whole field devoted to measuring rates like these: visits to websites, calls to a call center, foot traffic at coffee machines.)

When the number of file accesses exceeds the baseline, I raise a software-generated event that another part of the code picks up in order to display the file access analytics dashboard.

The event is raised with the New-Event cmdlet, which lets you send an event, along with other information, to a receiver. The Wait-Event cmdlet reads the event data. The receiver can even be another script, as long as both event cmdlets use the same SourceIdentifier; in our case, that is Bursts.

These are all Operating Systems 101 ideas: in effect, PowerShell provides a simple message passing system. That is pretty neat, considering that we are, after all, just using a command language.
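To make the message passing idea concrete, here is a minimal sketch that sends and receives one event inside a single session. The `DemoBursts` identifier and the file names are hypothetical and exist only for this illustration; the `-Timeout` value is likewise an arbitrary choice so the example cannot hang.

```powershell
# Sender side: put a custom event on the session's event queue.
# "DemoBursts" is a made-up identifier used only in this sketch.
$null = New-Event -SourceIdentifier DemoBursts -MessageData "We're in Trouble" -EventArguments @('a.txt', 'b.txt')

# Receiver side: wait (up to 5 seconds) for an event with the same identifier.
$received = Wait-Event -SourceIdentifier DemoBursts -Timeout 5

# Wait-Event does not dequeue the event, so remove it explicitly.
Remove-Event -SourceIdentifier DemoBursts

$received.MessageData   # the string passed with -MessageData
$received.SourceArgs    # the values passed with -EventArguments
```

The sender and receiver only have to agree on the SourceIdentifier, which is why the dashboard loop can live in a different part of the code from the event handler.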

Anyway, here is the full code.

    $cur = Get-Date
    $Global:Count = 0
    # Baseline access counts per weekday, one value per 8-hour slot of the day
    $Global:baseline = @{"Monday" = @(3,8,5); "Tuesday" = @(4,10,7); "Wednesday" = @(4,4,4); "Thursday" = @(7,12,4); "Friday" = @(5,4,6); "Saturday" = @(2,1,1); "Sunday" = @(2,4,2)}
    $Global:cnts = @(0,0,0)
    $Global:burst = $false
    $Global:evarray = New-Object System.Collections.ArrayList

    $action = {
        $Global:Count++
        $d = (Get-Date).DayOfWeek
        $i = [math]::Floor((Get-Date).Hour / 8)

        $Global:cnts[$i]++

        # event auditing!
        $rawtime = $EventArgs.NewEvent.TargetInstance.LastAccessed.Substring(0,12)
        $filename = $EventArgs.NewEvent.TargetInstance.Name
        $etime = [datetime]::ParseExact($rawtime, "yyyyMMddHHmm", $null)

        $msg = "$($etime): Access of file $($filename)"
        $msg | Out-File C:\Users\bob\Documents\events.log -Append

        $Global:evarray.Add(@($filename,$etime))
        if (!$Global:burst) {
            # first access: open a new 15-minute burst window
            $Global:start = $etime
            $Global:burst = $true
        }
        else {
            if ($Global:start.AddMinutes(15) -gt $etime) {
                $Global:Count++
                # File behavior analytics
                $sfactor = 2*[math]::Sqrt($Global:baseline["$($d)"][$i])

                if ($Global:Count -gt $Global:baseline["$($d)"][$i] + 2*$sfactor) {
                    "$($etime): Burst of $($Global:Count) accesses" | Out-File C:\Users\bob\Documents\events.log -Append
                    $Global:Count = 0
                    $Global:burst = $false
                    New-Event -SourceIdentifier Bursts -MessageData "We're in Trouble" -EventArguments $Global:evarray
                    $Global:evarray = [System.Collections.ArrayList] @()
                }
            }
            else { $Global:burst = $false; $Global:Count = 0; $Global:evarray = [System.Collections.ArrayList] @() }
        }
    }

    Register-WmiEvent -Query "SELECT * FROM __InstanceModificationEvent WITHIN 5 WHERE TargetInstance ISA 'CIM_DataFile' and TargetInstance.Path = '\\Users\\bob\\' and targetInstance.Drive = 'C:' and (targetInstance.Extension = 'txt' or targetInstance.Extension = 'doc' or targetInstance.Extension = 'rtf') and targetInstance.LastAccessed > '$($cur)' " -sourceIdentifier "Accessor" -Action $action

    # Dashboard
    While ($true) {
        # $burstEvent rather than $args, which is a PowerShell automatic variable
        $burstEvent = Wait-Event -SourceIdentifier Bursts   # wait on Burst event
        Remove-Event -SourceIdentifier Bursts               # remove event

        $outarray = @()
        foreach ($result in $burstEvent.SourceArgs) {
            $obj = New-Object System.Object
            $obj | Add-Member -type NoteProperty -Name File -Value $result[0]
            $obj | Add-Member -type NoteProperty -Name Time -Value $result[1]
            $outarray += $obj
        }

        $outarray | Out-GridView -Title "FAA Dashboard: Burst Data"
    }

Please don't pound on your laptop as you read through it.

I realize that I'm still displaying a separate grid view, and that there are better ways to handle the graphics. With PowerShell you have access to the entire .NET Framework, so you could create objects (list boxes, charts, and so on), access them, and update them as needed. For now, I'll leave that to you as homework.

Classification Is Very Important in Data Security

Let's postpone monitoring of file events and look at the topic of data classification in PowerShell.

At Varonis, we preach "know your data", and for good reason. To build a useful data protection program, one of the first steps is to find out where your critical or sensitive data lives: credit card numbers, customer addresses, confidential legal documents, proprietary code.

The goal, of course, is to protect the company's valuable digital assets, but first you have to identify what they are. By the way, this is not just a good idea: many data protection laws and regulations (for example, HIPAA) and industry standards (PCI DSS) require asset identification as part of a real risk assessment.

PowerShell has a lot of potential for data classification applications. Can PS directly access and read files? Yes. Can it do pattern matching on text? Yes. Can it do this efficiently at a fairly large scale? Yes.

No, the PowerShell classification script I ended up with will not replace the Varonis Data Classification Framework. But for the scenario I had in mind, an IT administrator who needs to watch over a folder with sensitive data, my PowerShell proof of concept earns more than a passing grade. I'd give it, say, a B+!

WQL and CIM_DataFile

Let's go back to WQL, the query language I mentioned in the first post on event monitoring.

Just as I used this query language to look for file events in a directory, I can tweak the script to retrieve a list of all the files in a given directory. As before, I use the CIM_DataFile class, but this time my query targets the folder itself, not the events associated with it.

    Get-WmiObject -Query "SELECT * From CIM_DataFile where Path = '\\Users\\bob\\' and Drive = 'C:' and (Extension = 'txt' or Extension = 'doc' or Extension = 'rtf')"

Excellent! This line of code returns the matching files as an array, with each file's full path in its Name property.

To read the contents of each file into a variable, PowerShell provides the Get-Content cmdlet. Thank you, Microsoft.

I need one more ingredient for my script: pattern matching. Not surprisingly, PowerShell has a standard regular expression engine. For my purposes it's a bit of overkill, but it definitely saves me time.

Security experts I've talked to often say that companies should explicitly mark documents or presentations containing proprietary or sensitive information, for example with the word "secret" or "confidential". It's a good practice, and it obviously helps the data classification process.

In my script, I created a PowerShell hash table of possible marker texts, each with a regular expression to match it. For documents that are not explicitly marked this way, I also added special project names (in my case, snowflake) to check for. And just for kicks, I added a regular expression for social security numbers.

The block of code that does the reading and pattern matching is below. The name of the file to read and check is passed in as a parameter.
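As a quick illustration of the matching idea just described, here is a sketch that runs the same kind of label-to-pattern table against an in-memory string instead of a file. The sample text and the number in it are made up, and `[ordered]` is my own addition so that the output order is predictable.

```powershell
# Hypothetical sample text standing in for a file's contents.
$text = @'
Confidential: Project Snowflake kickoff notes.
Employee SSN on file: 078-05-1120
'@

# Label => regular expression; [ordered] keeps the labels in this order.
$classify = [ordered]@{
    'Top Secret' = [regex]'[tT]op [sS]ecret'
    'Sensitive'  = [regex]'([Cc]onfidential)|([sS]nowflake)'
    'Numbers'    = [regex]'[0-9]{3}-[0-9]{2}-[0-9]{4}'
}

# Emit one object per label that matched at least once.
$found = foreach ($label in $classify.Keys) {
    $hits = $classify[$label].Matches($text).Count
    if ($hits -gt 0) {
        [pscustomobject]@{ Label = $label; Hits = $hits }
    }
}

$found
```

Here "Sensitive" matches twice (the marker word and the project name), "Numbers" matches once, and "Top Secret" is skipped because it has no matches.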

    $Action = {
        Param (
            [string] $Name
        )

        # Label => regular expression to look for
        # (a social security number is 3-2-4 digits, so the last group is {4})
        $classify = @{"Top Secret" = [regex]'[tT]op [sS]ecret'; "Sensitive" = [regex]'([Cc]onfidential)|([sS]nowflake)'; "Numbers" = [regex]'[0-9]{3}-[0-9]{2}-[0-9]{4}' }

        # -Raw reads the whole file as a single string
        $data = Get-Content -Raw $Name

        $cnts = @()

        foreach ($key in $classify.Keys) {
            $m = $classify[$key].Matches($data)

            if ($m.Count -gt 0) {
                # append the label and its match count to a flat array
                $cnts += @($key, $m.Count)
            }
        }

        $cnts
    }

Excellent Multithreading Support

I could have kept my project simple by taking the code above, adding some glue, and running the results through the Out-GridView cmdlet.

But there is a point I want to make. Even a single folder in a corporate file system can hold hundreds or even thousands of files.

Do you really want to wait while the script reads each file, one after another?

Of course not!

Applications that do I/O on a large number of files, as classification does, are well suited to multithreading: you can run many file operations in parallel and significantly cut the time it takes to get results.

PowerShell has a practically usable (albeit awkward) background processing system known as Jobs. But there is also a striking and elegant feature with multithreading support - Runspaces.
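For comparison, here is a minimal sketch of the Jobs approach. The squaring script block is a hypothetical stand-in for real per-file work; it is there only to show the start, wait, receive, and clean-up steps that Jobs require.

```powershell
# Start one background job per input value.
# Each job runs the script block outside the current session.
$jobs = foreach ($n in 1..3) {
    Start-Job -ScriptBlock { param($x) $x * $x } -ArgumentList $n
}

# Block until every job has finished, collect the output, then clean up.
$results = $jobs | Wait-Job | Receive-Job
$jobs | Remove-Job

$results | Sort-Object
```

Every job pays a start-up cost of its own, which is part of why Jobs feel heavy when there are many small tasks.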

After some manipulations and borrowing code from several pioneers in using Runspaces, I was really impressed.

Runspaces take care of all the messy mechanics of synchronization and concurrency. This is not something you can grasp quickly, and even Microsoft's Scripting Guys bloggers are still working out their understanding of this multithreading system.

Anyway, I boldly pressed on and used Runspaces to read the files in parallel. Below is the part of the code that launches the threads: for each file in the directory, I create a thread that runs the script block above, which returns the matching patterns in an array.

    # Pool of 1 to 5 runspaces shared by all tasks
    $RunspacePool = [RunspaceFactory]::CreateRunspacePool(1, 5)

    $RunspacePool.Open()

    $Tasks = @()

    foreach ($item in $list) {
        # One PowerShell instance per file, running $Action with the file name
        $Task = [powershell]::Create().AddScript($Action).AddArgument($item.Name)

        $Task.RunspacePool = $RunspacePool

        # Start asynchronously and keep the handle for later
        $status = $Task.BeginInvoke()

        $Tasks += @($status,$Task,$item.Name)
    }

Let's take a deep breath: we've covered a lot of ground.
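The code above only starts the tasks. As a rough sketch of the whole cycle, and not the author's full script, the example below also waits for each task and collects its output. The `$work` script block and the file names are hypothetical stand-ins for the classification block and a real file list.

```powershell
# Stand-in for the classification script block (hypothetical work item).
$work = { param([string] $Name) "$Name processed" }

$pool = [RunspaceFactory]::CreateRunspacePool(1, 5)
$pool.Open()

# Queue one PowerShell instance per item and keep its async handle.
$tasks = foreach ($name in 'a.txt', 'b.txt', 'c.txt') {
    $ps = [powershell]::Create().AddScript($work).AddArgument($name)
    $ps.RunspacePool = $pool
    [pscustomobject]@{ Name = $name; Shell = $ps; Handle = $ps.BeginInvoke() }
}

# EndInvoke blocks until that task has finished and returns its output.
$results = foreach ($t in $tasks) {
    $t.Shell.EndInvoke($t.Handle)
    $t.Shell.Dispose()
}

$pool.Close()
$pool.Dispose()

$results
```

Keeping each task's name, PowerShell instance, and handle together in one object is a design choice of this sketch; it makes it easy to tell which result belongs to which file.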

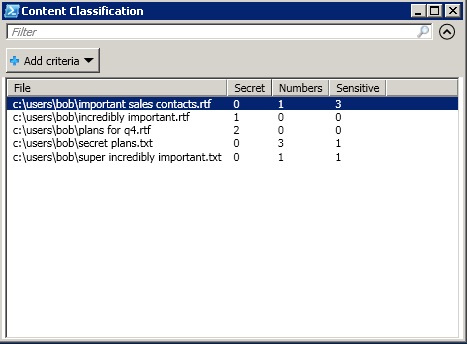

In the next post I will present the full script and discuss some (unpleasant) details. In the meantime, after assigning a mark to some files, I created the following output using Out-GridView.

Classification of the contents of almost nothing!

In the meantime, think about how to connect the two scripts: the file activity monitoring script and the classification script presented in this post.

After all, the classification script should tell the file activity script what is worth monitoring, and the activity script could, in theory, tell the classification script when a new file is created so that it can be classified. In other words, incremental scanning.

It sounds like I'm proposing, dare I say it, a PowerShell-based security monitoring platform. We'll start working on that next time.

Source: https://habr.com/ru/post/338166/
