Do WAFs dream of static analyzers?

One of the most popular trends in application security this decade is virtual patching (VP), a technology that protects a web application against exploitation of known vulnerabilities at the web application firewall level (here and below, a WAF means a dedicated solution running on a separate node between the gateway to the external network and the web server). VP builds HTTP request filtering rules on the WAF side from the results of static application security testing (SAST) tools. However, because SAST and WAF tools rely on different application models and different decision-making methods, there are still no truly effective solutions for integrating them on the market. SAST works with the application as a white box and, as a rule, uses formal approaches to find vulnerabilities in the code. For a WAF, the application is a black box, and attacks are detected with heuristics. As a result, VP cannot protect effectively against attacks whose exploitation conditions go beyond the trivial `http_parameter = plain_text_attack_vector` scheme.

But what if SAST and WAF could be made to work together, so that the information about the application's internals obtained by SAST became available on the WAF side and let it detect attacks on the discovered vulnerabilities not by guessing, but by proving that an attack is taking place?

The splendor and misery of traditional VP

The traditional approach to automating the creation of virtual patches for web applications is to pass the WAF information about each vulnerability detected by SAST, including:

- the vulnerability class;

- the vulnerable entry point of the web application (the URL or a part of it);

- the values of additional HTTP request parameters under which the attack becomes possible;

- the values of the vulnerable parameter that carries the attack vector;

- the set of characters or words (tokens) whose appearance in the vulnerable parameter leads to exploitation of the vulnerability.

To define the sets of HTTP request parameter values and of dangerous elements of the vulnerable parameter, either a plain enumeration of all possible elements or a generating function (usually based on regular expressions) can be used. Consider a fragment of an ASP.NET page that is vulnerable to XSS attacks:


```csharp
var condition = Request.Params["condition"];
var param = Request.Params["param"];

if (condition == null || param == null)
{
    Response.Write("Wrong parameters!");
    return;
}

string response;
if (condition == "secret")
{
    response = "Parameter value is `" + param + "`";
}
else
{
    response = "Secret not found!";
}

Response.Write("<b>" + response + "</b>");
```

Analyzing this code yields a symbolic formula for the conditional set of attack vector values:

{condition = "secret" ⇒ param ∈ { XSShtml-text }},

where XSShtml-text is the set of XSS attack vectors in the TEXT context of the HTML grammar. Both an exploit and a virtual patch can be derived from this formula. Based on the virtual patch descriptor, the WAF generates filtering rules that block all HTTP requests that could lead to exploitation of the discovered vulnerability.
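To make the traditional scheme concrete, here is a minimal sketch of a filter rule that a WAF might generate from such a descriptor. The `VirtualPatch` class, its field names, the `/vulnerable.aspx` URL and the `[<>]` token pattern are all hypothetical and only illustrate the idea; they are not the rule format of any real WAF.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical descriptor of a traditional virtual patch (illustrative names only).
public sealed class VirtualPatch
{
    public string EntryPoint = "/vulnerable.aspx";   // assumed URL of the vulnerable page
    public string ConditionParam = "condition";      // additional request parameter
    public string ConditionValue = "secret";         // value under which the attack is possible
    public string VulnerableParam = "param";         // carrier of the attack vector

    // Assumed set of dangerous tokens for the HTML TEXT context.
    public Regex DangerousTokens = new Regex("[<>]", RegexOptions.Compiled);

    // Returns true when the request should be blocked.
    public bool Matches(string path, IDictionary<string, string> query)
    {
        if (!path.Equals(EntryPoint, StringComparison.OrdinalIgnoreCase)) return false;
        if (!query.TryGetValue(ConditionParam, out var c) || c != ConditionValue) return false;
        return query.TryGetValue(VulnerableParam, out var p) && DangerousTokens.IsMatch(p);
    }
}
```

With this rule, `param=<script>alert(1)</script>` is blocked, but so is a harmless value such as `1 < 2`, which hints at the false positives that this kind of rule can produce.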

This approach does defend against a certain range of attacks, but it also has significant drawbacks:

- to prove that a vulnerability exists, a SAST tool only needs to find one of the possible attack vectors. To eliminate the vulnerability effectively, however, the WAF must defend against all possible vectors, and these are hard to convey to the WAF side: their set is not only infinite, but often cannot be expressed with regular expressions because attack vector grammars are not regular;

- the same applies to the values of all additional request parameters under which exploitation of the vulnerability becomes possible;

- information about the dangerous elements of the vulnerable parameter is useless if, on the way from the entry point to the vulnerable execution point, the attack vector undergoes intermediate transformations that change the context of its grammar or even the grammar itself (for example, Base64, URL or HTML encoding, or string transformations).

Because of these shortcomings, VP technology, which focuses on protecting against particular cases, cannot protect effectively against all possible attacks on vulnerabilities detected by SAST tools. In addition, traffic filtering rules built this way often block legitimate HTTP requests and disrupt the protected application. Let's change the vulnerable code a bit:

```csharp
var condition = Request.Params["condition"];
var param = Request.Params["param"];

if (condition == null || param == null)
{
    Response.Write("Wrong parameters!");
    return;
}

string response;
// CustomDecode applies the Base64-URL-Base64 decoding chain
if (CustomDecode(condition).Contains("secret"))
{
    response = "Parameter value is `" + CustomDecode(param) + "`";
}
else
{
    response = "Secret not found!";
}

Response.Write(response);
```

The difference from the previous example is that both request parameters now undergo a transformation, and the check on the `condition` parameter is relaxed to a substring match. The attack vector formula derived from this code looks like this:

(String.Contains (CustomDecode (condition)) ("secret")) ⇒ param ∈ (CustomDecode { XSShtml-text })

At the same time, for the `CustomDecode` function, the analyzer derives in the corresponding CompFG vertex a formula that describes the Base64-URL-Base64 transformation chain:

(Base64Decode (UrlDecode (Base64Decode argument)))

An exploit can still be built from formulas of this kind (we described this in detail in one of our previous articles), but the classical approach to building virtual patches no longer works here, because:

- the vulnerability can be exploited only if the decoded `condition` request parameter contains the substring "secret" (the `if` condition in the code above), but the set of such parameter values is very large and is hard to express with regular expressions because the decoding functions are not regular;

- the request parameter that carries the attack vector is also decoded (the assignment to `response` in the code above), which prevents the SAST tool from generating the set of its dangerous elements for the WAF.
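The effect of the decoding chain can be illustrated with a small sketch. The article does not show the body of `CustomDecode`, so the implementation below is an assumption that simply follows the Base64-URL-Base64 chain named above; `CustomEncode` and the `Codec` class are hypothetical helpers used only to build sample inputs.

```csharp
using System;
using System.Net;
using System.Text;

public static class Codec
{
    // Assumed implementation of CustomDecode: Base64 -> URL -> Base64 decoding.
    public static string CustomDecode(string value)
    {
        string step1 = Encoding.UTF8.GetString(Convert.FromBase64String(value));
        string step2 = WebUtility.UrlDecode(step1);
        return Encoding.UTF8.GetString(Convert.FromBase64String(step2));
    }

    // Inverse chain (hypothetical helper), used here only to produce sample inputs.
    public static string CustomEncode(string value)
    {
        string step1 = Convert.ToBase64String(Encoding.UTF8.GetBytes(value));
        string step2 = WebUtility.UrlEncode(step1);
        return Convert.ToBase64String(Encoding.UTF8.GetBytes(step2));
    }
}
```

Under these assumptions, `Codec.CustomEncode("secret")` returns `YzJWamNtVjA=`, and the encoded form of `<script>alert(1)</script>` consists only of Base64 alphabet characters, so a token filter that looks for `<` or `>` in the raw parameter never fires.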

Since all the problems of traditional VP stem from the WAF's inability to work with the application as a white box, the obvious fix is to provide that ability and refine the approach so that:

- SAST gives the WAF complete information about all transformations that the vulnerable parameter and the attack success conditions undergo on the way from the entry point to the vulnerable execution point, so that the WAF can compute the values of the arguments at that point from the parameters of the HTTP request being processed;

- attacks are detected not with heuristics, but with formal methods that rely on rigorous proof of specific statements and cover the general case of each vulnerability's exploitation conditions rather than a limited set of particular cases.

This is how runtime virtual patching was born.

Runtime virtual patching

At the core of runtime virtual patching (RVP) is a model of the analyzed application called the computation flow graph (CompFG), which is used in the PT Application Inspector (PT AI) source code analyzer. This model was described in detail at the AppSec Slums master class at PHDays VII. CompFG is built during application analysis by abstract interpretation of the code in semantics similar to traditional symbolic computation. The vertices of this graph contain generating formulas in the target language that define the sets of admissible values of all data flows present at the corresponding execution points. These flows are called execution point arguments. For example, the vertex for the vulnerable execution point of the example above looks like this in CompFG:

One of the properties of CompFG is that it is concretizable: given values for all input parameters, the sets of concrete values of all arguments can be computed at any point of the application.

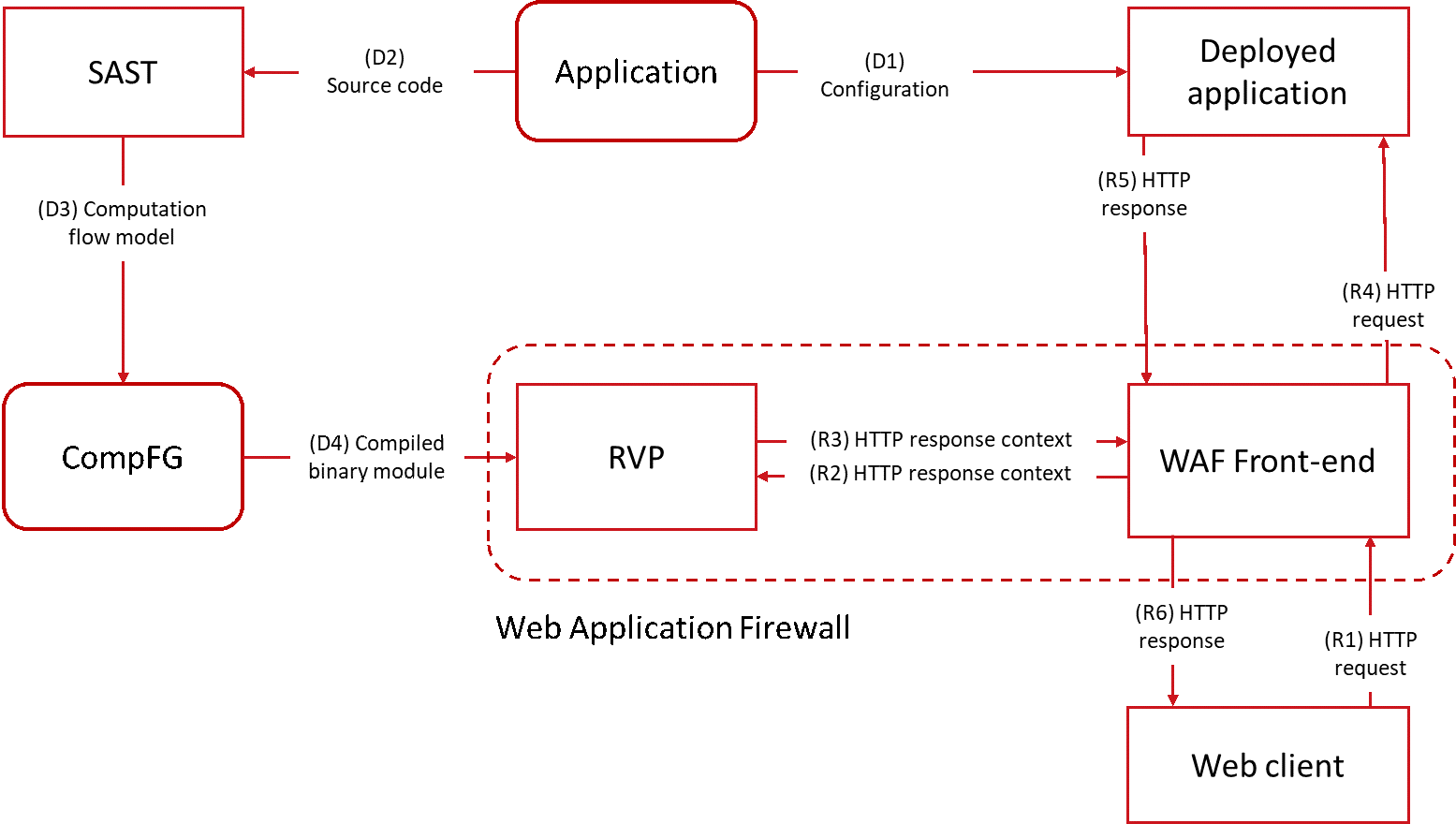

The RVP workflow is divided into two phases that correspond to stages of the application life cycle: deployment (steps D) and execution (steps R).

Deployment phase

Before the next version of the application is deployed, it is analyzed with PT AI, and three formulas are derived from each CompFG vertex that describes a vulnerable execution point:

- the reachability condition of the point itself;

- the reachability condition of the values of all its arguments;

- the sets of values of all its arguments and the grammars they belong to.

All formula sets are grouped by the web application entry point whose control flow contains the vulnerability. The notion of an entry point is specific to each web framework supported by PT AI and is defined in the analyzer's knowledge base.

After that, the report with the detected vulnerabilities and their formulas is exported as code in a special domain-specific language that is based on S-expression syntax and describes CompFG formulas in a form independent of the target language. The formula for the value of the vulnerable point argument in the code sample discussed above looks like this:

(+ ("Parameter value is `") (FromBase64Str (UrlDecodeStr (FromBase64Str (GetParameterData (param))))) ("`")),

and the formula for its reachability is:

(Contains (FromBase64Str (UrlDecodeStr (FromBase64Str (GetParameterData (condition))))) ("secret")).
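As an illustration of how such formulas could be evaluated against a request, here is a minimal sketch of a tree-walking evaluator. The `Node`, `FormulaEvaluator` and `SampleFormulas` types are hypothetical, the formulas are built by hand instead of being parsed from S-expressions, and only the five functions that occur in the two formulas above are supported. This is not the binary module generated by PT AF.

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Text;

// Hypothetical formula tree: a function applied to sub-formulas, or a string literal.
public sealed class Node
{
    public string Op;                       // null for a literal
    public string Literal;
    public Node[] Args = new Node[0];
    public static Node Lit(string s) => new Node { Literal = s };
    public static Node Call(string op, params Node[] args) => new Node { Op = op, Args = args };
}

public static class FormulaEvaluator
{
    static string B64(string s) => Encoding.UTF8.GetString(Convert.FromBase64String(s));

    // Evaluates a formula against the (already URL-decoded) parameters of one HTTP request.
    public static object Eval(Node n, IDictionary<string, string> request)
    {
        if (n.Op == null) return n.Literal;
        switch (n.Op)
        {
            case "GetParameterData": return request[(string)Eval(n.Args[0], request)];
            case "FromBase64Str": return B64((string)Eval(n.Args[0], request));
            case "UrlDecodeStr": return WebUtility.UrlDecode((string)Eval(n.Args[0], request));
            case "+": return string.Concat(Array.ConvertAll(n.Args, a => (string)Eval(a, request)));
            case "Contains":
                return ((string)Eval(n.Args[0], request)).Contains((string)Eval(n.Args[1], request));
            default: throw new NotSupportedException(n.Op);
        }
    }
}

// The two formulas from the text, built by hand.
public static class SampleFormulas
{
    static Node Decoded(string param) =>
        Node.Call("FromBase64Str", Node.Call("UrlDecodeStr",
            Node.Call("FromBase64Str", Node.Call("GetParameterData", Node.Lit(param)))));

    public static readonly Node Reachability =
        Node.Call("Contains", Decoded("condition"), Node.Lit("secret"));

    public static readonly Node Argument =
        Node.Call("+", Node.Lit("Parameter value is `"), Decoded("param"), Node.Lit("`"));
}
```

For a request with `condition` equal to `YzJWamNtVjA=`, `FormulaEvaluator.Eval(SampleFormulas.Reachability, request)` yields `true`, because the value decodes to `secret` through the whole chain.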

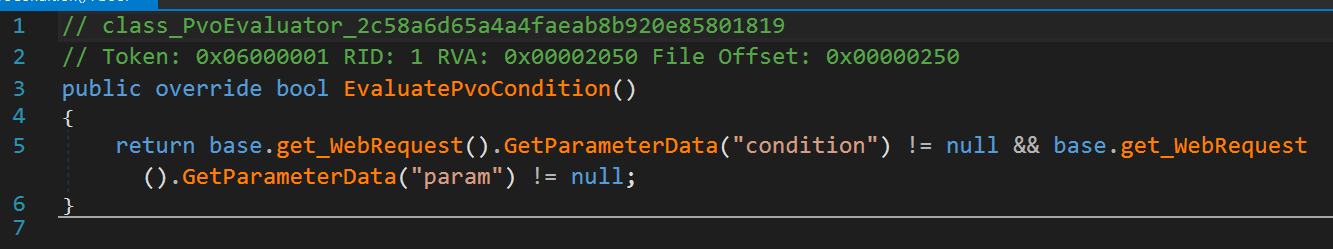

The resulting report is loaded into PT Application Firewall (PT AF), which uses it to generate a binary module capable of evaluating all the formulas in the report. The decompiled code that evaluates the reachability condition of the vulnerable point in our example looks like this:

To make the formulas computable, one of the following must be available on the PT AF side:

- a base of calculators for all functions that may appear in the report;

- an isolated sandbox with the runtime of the language or platform on which the protected application runs (CLR, JVM, PHP, Python or Ruby interpreter, etc.) and the libraries used by the application.

The first option gives maximum speed but requires a huge amount of manual work from the WAF developers to describe the calculators (even if limited to standard library functions). The second option can evaluate every function that may occur in the report, but increases the processing time of each HTTP request, because the runtime has to be called to evaluate each function. The best choice is a combination: the first option for the most common functions and the second for everything else.
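The combined scheme can be sketched as a lookup table with a fallback. The `FunctionCalculator` class, the `HtmlEncodeStr` function name and the sandbox delegate are assumptions made for illustration; a real sandbox call would cross a process or runtime boundary rather than invoke a delegate.

```csharp
using System;
using System.Collections.Generic;
using System.Net;

public sealed class FunctionCalculator
{
    // Fast path: hand-written calculators for the most common functions (assumed set).
    private readonly Dictionary<string, Func<string, string>> builtIn =
        new Dictionary<string, Func<string, string>>
        {
            ["UrlDecodeStr"] = s => WebUtility.UrlDecode(s),
            ["HtmlEncodeStr"] = s => WebUtility.HtmlEncode(s),
        };

    // Slow path: hypothetical call into the sandboxed runtime (function name, argument).
    private readonly Func<string, string, string> sandbox;

    public FunctionCalculator(Func<string, string, string> sandbox)
    {
        this.sandbox = sandbox;
    }

    // Uses a built-in calculator when one exists and falls back to the sandbox otherwise.
    public string Call(string function, string argument) =>
        builtIn.TryGetValue(function, out var f) ? f(argument) : sandbox(function, argument);
}
```

The design choice here is that the table only needs to cover the functions that dominate real reports, while correctness for everything else is delegated to the slower path.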

A formula may well contain a function that the analyzer cannot step into (for example, a call to a method from a missing project dependency or to native code) and/or that cannot be evaluated on the PT AF side (for example, a function that reads data from external sources or from the server environment). Such functions are marked in the formulas with the unknown flag and are processed in a special way (see below).

Execution phase

In the execution phase, the WAF delegates the processing of each HTTP request to the generated binary module. The module analyzes the request and determines the web application entry point it belongs to. For this entry point, the formulas of all vulnerabilities found by its analysis are selected and then evaluated in a specific way.

First, the formulas of both conditions are evaluated: the reachability of the vulnerable point and of the values of all its arguments. The values of the corresponding request parameters are substituted for the variables in each formula, and the formula is then evaluated. If the formula contains expressions marked with the unknown flag, it is processed as follows:

- each unknown flag propagates upward through the expression tree until it marks a Boolean expression;

- all such expressions (unknown domains) are replaced in the formula with Boolean variables, and the Boolean satisfiability problem is solved for the resulting formula;

- from the original condition formula, n conditions are built by substituting the possible values of the unknown domains from all solutions found in the previous step;

- each of the resulting formulas is evaluated, and if at least one of them is satisfied, the original condition is also considered satisfied.
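The steps above can be sketched for the simplest case, in which everything except the unknown domains has already been evaluated to a constant. The `Cond` tree and `UnknownSolver` are hypothetical names, and the exhaustive enumeration of assignments is a stand-in for a real SAT solver, chosen only to keep the example short.

```csharp
using System;

// Hypothetical Boolean condition tree; Unknown leaves stand for unknown domains.
public abstract class Cond
{
    public sealed class Const : Cond { public bool Value; }
    public sealed class Unknown : Cond { public int Id; }   // index of the Boolean variable
    public sealed class Not : Cond { public Cond Inner; }
    public sealed class And : Cond { public Cond Left, Right; }
    public sealed class Or : Cond { public Cond Left, Right; }
}

public static class UnknownSolver
{
    // Evaluates the condition for one assignment of the unknown variables.
    static bool Eval(Cond c, bool[] vars)
    {
        switch (c)
        {
            case Cond.Const k: return k.Value;
            case Cond.Unknown u: return vars[u.Id];
            case Cond.Not n: return !Eval(n.Inner, vars);
            case Cond.And a: return Eval(a.Left, vars) && Eval(a.Right, vars);
            case Cond.Or o: return Eval(o.Left, vars) || Eval(o.Right, vars);
            default: throw new ArgumentException("unexpected node type");
        }
    }

    // Brute-force satisfiability: true if some assignment of the unknowns makes c true.
    public static bool IsSatisfiable(Cond c, int unknownCount)
    {
        var vars = new bool[unknownCount];
        for (int mask = 0; mask < (1 << unknownCount); mask++)
        {
            for (int i = 0; i < unknownCount; i++) vars[i] = (mask & (1 << i)) != 0;
            if (Eval(c, vars)) return true;
        }
        return false;
    }
}
```

For example, `true AND unknown0` is satisfiable, so the request is treated as able to reach the vulnerable point, while `false AND unknown0` is not, whatever the unknown function would have returned.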

If the original formula evaluates to false, this HTTP request cannot bring the application to the vulnerable point with dangerous values of all its arguments. In this case, RVP simply returns request processing to the main WAF module.

If the attack conditions are met, the value of the vulnerable point argument is evaluated next. The algorithm used depends on the vulnerability class of the point being processed. The only thing the algorithms share is the handling of formulas that contain unknown nodes: unlike condition formulas, such argument formulas cannot be evaluated at all, which is immediately reported to the WAF, after which processing moves on to the next vulnerable point. As an example, consider the most complex of the algorithms, the one used to detect injection attacks.

Injection detection

The injection class includes any attack whose goal is to violate the integrity of a text in some formal language (HTML, XML, JavaScript, SQL, URLs, file paths, etc.) that is built from data controlled by the attacker. The attack is carried out by passing specially crafted input to the application; when this input is substituted into the attacked text, it breaks out of its token and introduces syntactic constructs that the application logic does not provide for.

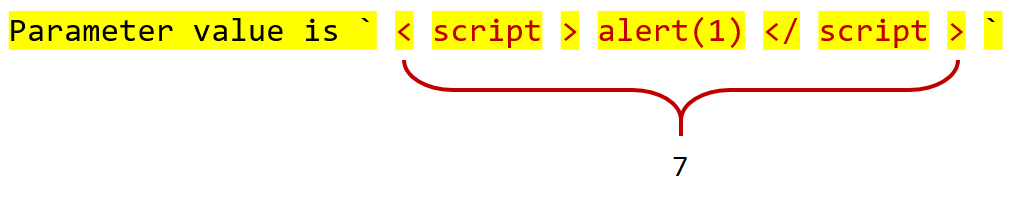

If the current vulnerable point belongs to this class, the value of its argument is computed with an algorithm of so-called incremental evaluation with abstract interpretation in taint analysis semantics. The essence of the algorithm is that each expression of the formula is evaluated separately, from the bottom up, and the result obtained at each step is additionally marked with taint boundaries, based on the semantics of each evaluated function and the rules of traditional taint analysis. This makes it possible to identify, in the final result, all fragments that were produced by any transformations of the input data (tainted fragments).

For example, take the code above and an HTTP request with the parameters `?condition=YzJWamNtVjA%3d&param=…`.

Next, the resulting value is split into tokens according to the grammar of the vulnerable point argument. If any tainted fragment spans more than one token, this is a formal sign of a detected attack (by the definition of an injection).
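A minimal sketch of this check is given below, under strong simplifying assumptions: taint is tracked only through concatenation, a decoded request parameter is treated as tainted as a whole, and the "grammar" is a toy HTML tokenizer that knows only tags and text runs. `TaintedString` and `InjectionDetector` are hypothetical names, not part of PT AF.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// A string value together with the half-open ranges that were derived from request data.
public sealed class TaintedString
{
    public string Value = "";
    public List<(int Start, int End)> Tainted = new List<(int Start, int End)>();

    public static TaintedString Clean(string s) => new TaintedString { Value = s };

    public static TaintedString Dirty(string s)
    {
        var t = new TaintedString { Value = s };
        if (s.Length > 0) t.Tainted.Add((0, s.Length));
        return t;
    }

    // Concatenation preserves taint boundaries by shifting the ranges of each part.
    public static TaintedString Concat(params TaintedString[] parts)
    {
        var result = new TaintedString();
        foreach (var p in parts)
        {
            int offset = result.Value.Length;
            result.Tainted.AddRange(p.Tainted.Select(r => (r.Start + offset, r.End + offset)));
            result.Value += p.Value;
        }
        return result;
    }
}

public static class InjectionDetector
{
    // Deliberately simplified tokenizer for HTML text content: tags and text runs only.
    static readonly Regex Token = new Regex(@"</?[A-Za-z][^>]*>?|(?:[^<]|<(?!/?[A-Za-z]))+");

    // Reports an injection when some tainted range overlaps more than one token.
    public static bool IsInjection(TaintedString arg)
    {
        var tokens = Token.Matches(arg.Value).Cast<Match>().ToList();
        return arg.Tainted.Any(r =>
            tokens.Count(t => t.Index < r.End && t.Index + t.Length > r.Start) > 1);
    }
}
```

With the prefix ``Parameter value is ` `` and the closing backtick marked clean, a tainted `hi` or `1 < 2` stays inside a single text token and is not reported, while a tainted `<script>alert(1)</script>` overlaps three tokens and is reported as an injection.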

Once the formulas of all vulnerabilities that belong to the current entry point have been evaluated, request processing is passed back to the main WAF module together with the detection results.

Advantages and features of RVP

Protecting an application in this way, based on the results of its code security analysis, has a number of significant advantages over traditional VP:

- thanks to the formal approach described above and the ability to account for any intermediate transformations of the input data, all the listed shortcomings of traditional VP are eliminated;

- the formal approach also completely rules out type I errors (false positives), provided the formulas contain no unknown nodes;

- there is no negative impact on the functions of the web application, because the protection is implemented not merely in accordance with them, but on their basis.

A prototype integration module for PT Application Inspector and PT Application Firewall, implemented as an HTTP module for the IIS web server on the .NET platform, was developed to try out the technology and confirm its effectiveness. A demonstration of how it works with the code sample discussed above is available on YouTube. Performance tests on about fifteen open-source CMSs showed more than acceptable results: the time needed to process HTTP requests with RVP was comparable to the time needed by traditional (heuristic) WAF methods. The average slowdown of the web application's response to requests was:

- 0% when processing requests that do not lead to the vulnerable point;

- 6–10% for processing requests that lead to a vulnerable point, but are not an attack (depending on the complexity of the grammar of the vulnerable point);

- 4–7% when processing requests that lead to a vulnerable point and are an attack.

Despite its obvious advantages over traditional VP, RVP still has a number of conceptual limitations that we would like to get rid of:

- formulas that involve external data from sources absent on the WAF side (file resources, databases, the server environment, etc.) cannot be evaluated;

- the quality of the formulas depends directly on how well certain code fragments are approximated during analysis (loops, recursion, calls to external library methods, etc.);

- describing the semantics of transformation functions for the base of calculators requires a certain amount of engineering work, which is hard to automate and prone to human error.

However, we managed to eliminate these shortcomings by moving part of the RVP functionality to the application side and applying the technologies behind runtime application self-protection (RASP).

But more on that in the second part of the article :)

Source: https://habr.com/ru/post/338110/
