Zabbix 3.4: Bulk data collection, with the Mercury meter and smartmontools as examples

Hello everyone! We have already mentioned the bulk data collection capabilities of the new version of the open source monitoring system Zabbix 3.4. Now let's look at this functionality in more detail, and to make it clearer, we'll walk through two examples:

- collecting all data at once, as JSON, from the console utility for the Mercury 236 electricity meter

- collecting SMART indicators of hard drives and SSDs, obtained in tabular form from smartmontools.

So what was the problem?

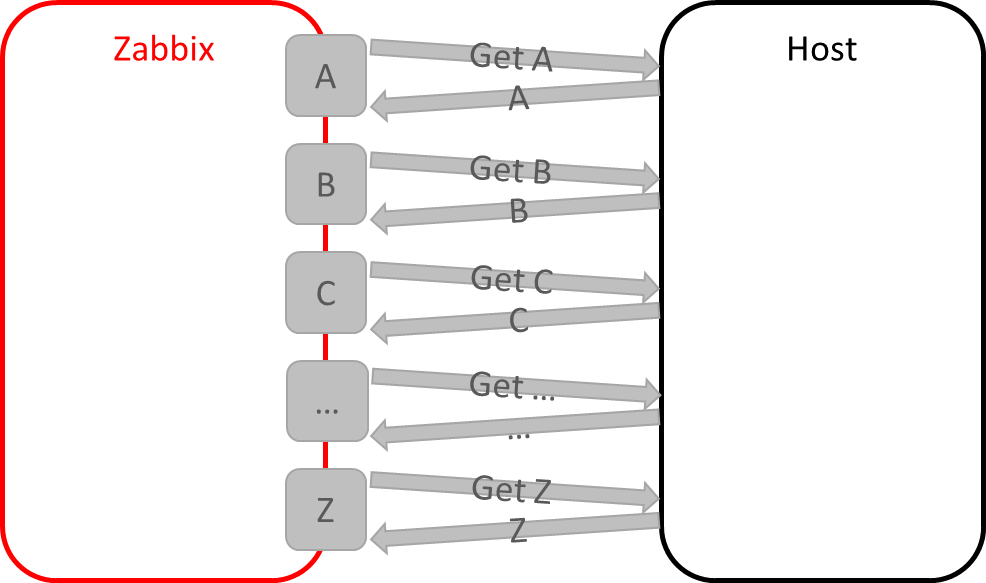

It was possible to collect data via console utilities or API calls, but there were difficulties:


- slow utility startup every time, for each required item

- access to the resource (disk, port, meter, application API) for each item

- the results had to be parsed with external scripts/utilities

- and if the parsing later needed fixing, the UserParameters or scripts had to be updated again

- on top of that, simultaneous requests from several Zabbix pollers caused access errors on, for example, a serial port.

In general, it looked like this:

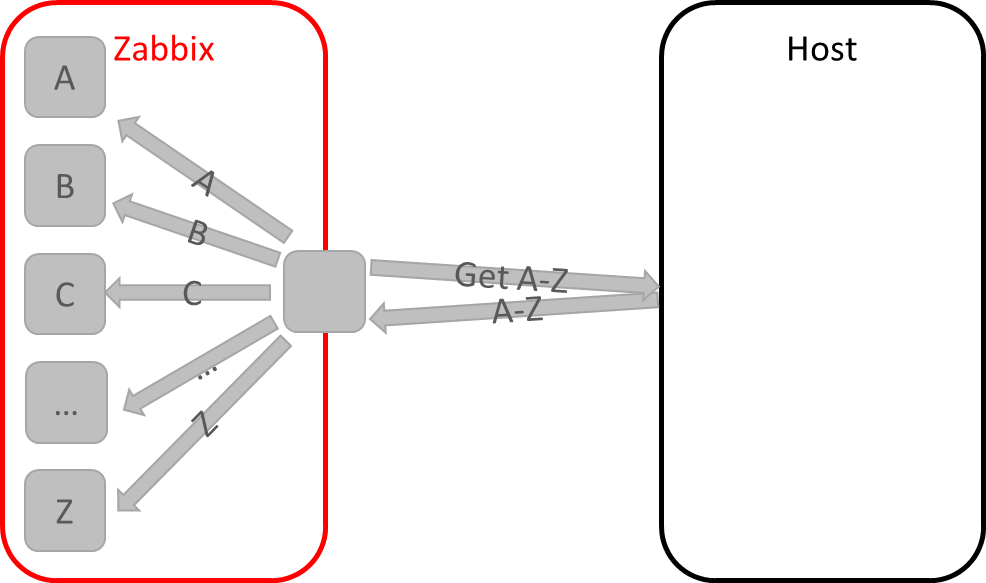

With the advent of dependent items, it can look like this:

How does it work?

- In Zabbix 3.4, an item's data source can be another item, called the parent or master item. Such an item may contain, for example, an array of data in JSON, XML or arbitrary text format.

- When new data arrives in the master item, the other items, called dependent items, take its value and use preprocessing functions such as JSON Path, XPath or Regex to extract the desired metric from the text.
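To make this data flow concrete, here is a toy Python model of it. It is not Zabbix code: the function `run_dependents`, the item names and the lambdas standing in for JSON Path expressions are our own illustration. The master value is fetched once, and every dependent item runs its own chain of preprocessing steps over that same value, sharing one collection time.

```python
import json
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List

# A preprocessing step maps one value to the next (our own type alias).
Step = Callable[[Any], Any]


def run_dependents(master_value: str, dependents: Dict[str, List[Step]]) -> Dict[str, Any]:
    """Apply every dependent item's preprocessing steps to one master value."""
    results: Dict[str, Any] = {}
    for name, steps in dependents.items():
        value: Any = master_value
        for step in steps:  # steps run in order, like multi-step preprocessing
            value = step(value)
        results[name] = value
    return results


# One poll of the master item: a shortened sample of the meter JSON.
master = '{"U": {"p1": 0.35, "p3": 226.86}, "F": 50.00}'
collected_at = datetime.now(timezone.utc)  # one timestamp shared by all dependents

# Hypothetical dependent items; each lambda stands in for a JSON Path step.
dependents: Dict[str, List[Step]] = {
    "voltage.p1": [json.loads, lambda d: d["U"]["p1"]],
    "voltage.p3": [json.loads, lambda d: d["U"]["p3"]],
    "frequency": [json.loads, lambda d: d["F"]],
}

for name, value in run_dependents(master, dependents).items():
    print(collected_at.isoformat(), name, value)
```

The point of the model is the call count: the expensive source (the utility, the serial port) is touched once per poll, however many metrics are derived from it.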

By the way, preprocessing is also new in 3.4. It is implemented by the new preprocessing_manager and preprocessing_worker processes on the Zabbix server, so if you are upgrading from 3.2, don't forget to update the Zabbix server template so that you can monitor them.

On to the examples.

Mercury 236

Imagine that in our project, besides containers, virtual machines, applications, network devices, databases, business indicators and everything else that needs watching, we also need to monitor the power grid and other engineering systems, such as climate control equipment. We use devices that are standard for our region: a three-phase Mercury 236 RT-01 PQRS electricity meter with an RS-485 interface, over which it communicates using the manufacturer's proprietary protocol.

The task is a serious one: collect voltage, power, current, consumption and frequency readings all at once. Connecting such a device to a server with a Zabbix agent is feasible: a serial port with RS-485 will do, for example in the form of a USB adapter. But how do we read the data? If it weren't for GitHub and the kind people who shared their smart home solution, we would have had to write a Zabbix module that speaks the meter's protocol and polls its readings.

The utility is simple and convenient (many thanks to its author): it polls the meter on the specified port, reads the data and returns it as text, CSV or JSON.

Let's install and run it:

```
git clone https://github.com/Shden/mercury236.git
cd mercury236
make
./mercury236 /dev/ttyS0 --help
Usage: mercury236 RS485 [OPTIONS] ...
  RS485        address of RS485 dongle (eg /dev/ttyUSB0), required
  --debug      to print extra debug info
  --testRun    dry run to see output sample, no hardware required
Output formatting:
  ....
  --help       prints this screen
```

It runs! OK, now we connect the meter, query it and get JSON:

```
./mercury236 /dev/ttyS0 --json
{
  "U": { "p1": 0.35, "p2": 0.35, "p3": 226.86 },
  "I": { "p1": 0.00, "p2": 0.00, "p3": 0.39 },
  "CosF": { "p1": 0.00, "p2": 0.00, "p3": 0.60, "sum": 0.60 },
  "F": 50.00,
  "A": { "p1": 41943.03, "p2": 41943.03, "p3": 41943.03 },
  "P": { "p1": 0.00, "p2": 0.00, "p3": 53.45, "sum": 53.45 },
  "S": { "p1": 0.00, "p2": 0.00, "p3": 89.83, "sum": 89.83 },
  "PR": { "ap": 120.51 },
  "PR-day": { "ap": 86.00 },
  "PR-night": { "ap": 34.51 },
  "PY": { "ap": 0.00 },
  "PT": { "ap": 0.04 }
}
```

The utility has already done all the hard work for us: it implements the protocol for talking to the meter, pulls out the data and even hands it to us as a convenient JSON object. Before 3.4, though, we simply couldn't use it as is: we would have had to write glue scripts and, most importantly, implement a mechanism to control access to the serial port. After all, if two Zabbix pollers accessed it simultaneously, one for the phase 3 current and the other for the phase 2 current, we would get nothing back.

In 3.4 everything becomes much simpler: we can now quickly and easily pass data from third-party console utilities to Zabbix without wrapper scripts and without running the same utility 10 times, once for each item.

Configuring Zabbix to run the mercury236 utility

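```
sudo mkdir -p /etc/zabbix/scripts
sudo cp mercury236 /etc/zabbix/scripts/
cd /etc/zabbix/scripts
sudo chmod +x mercury236
# -a appends dialout to zabbix's groups instead of replacing them
sudo usermod -a -G dialout zabbix
```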

To run the utility, add a new UserParameter to the Zabbix agent configuration file:

```
UserParameter=mercury236[*],/etc/zabbix/scripts/mercury236 $1 $2
```

Save the file, and don't forget to restart the Zabbix agent.
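With a flexible key like mercury236[*], the agent substitutes the item key's parameters for $1, $2 and so on. The sketch below is a simplified Python model of that substitution. The function `expand` and the key mercury236[/dev/ttyS0,--json] are our own assumptions for illustration (the article does not show the master item's key), and quoting rules, $0 and the agent's unsafe-character checks are deliberately left out.

```python
import re

USER_PARAMETER = "mercury236[*],/etc/zabbix/scripts/mercury236 $1 $2"


def expand(user_parameter: str, item_key: str) -> str:
    """Return the command the agent would run for item_key (simplified model)."""
    pattern, command = user_parameter.split(",", 1)
    base = pattern[: -len("[*]")]  # assumes a flexible "[*]" key
    if not (item_key.startswith(base + "[") and item_key.endswith("]")):
        raise ValueError(f"{item_key!r} does not match {pattern!r}")
    params = item_key[len(base) + 1 : -1].split(",")  # no quoting support

    def sub(match: re.Match) -> str:
        # Replace $1..$9 with positional parameters; missing ones become empty.
        index = int(match.group(1)) - 1
        return params[index] if index < len(params) else ""

    return re.sub(r"\$([1-9])", sub, command)


print(expand(USER_PARAMETER, "mercury236[/dev/ttyS0,--json]"))
# /etc/zabbix/scripts/mercury236 /dev/ttyS0 --json
```

In other words, one item key maps to one utility run, with the port and output format passed as arguments.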

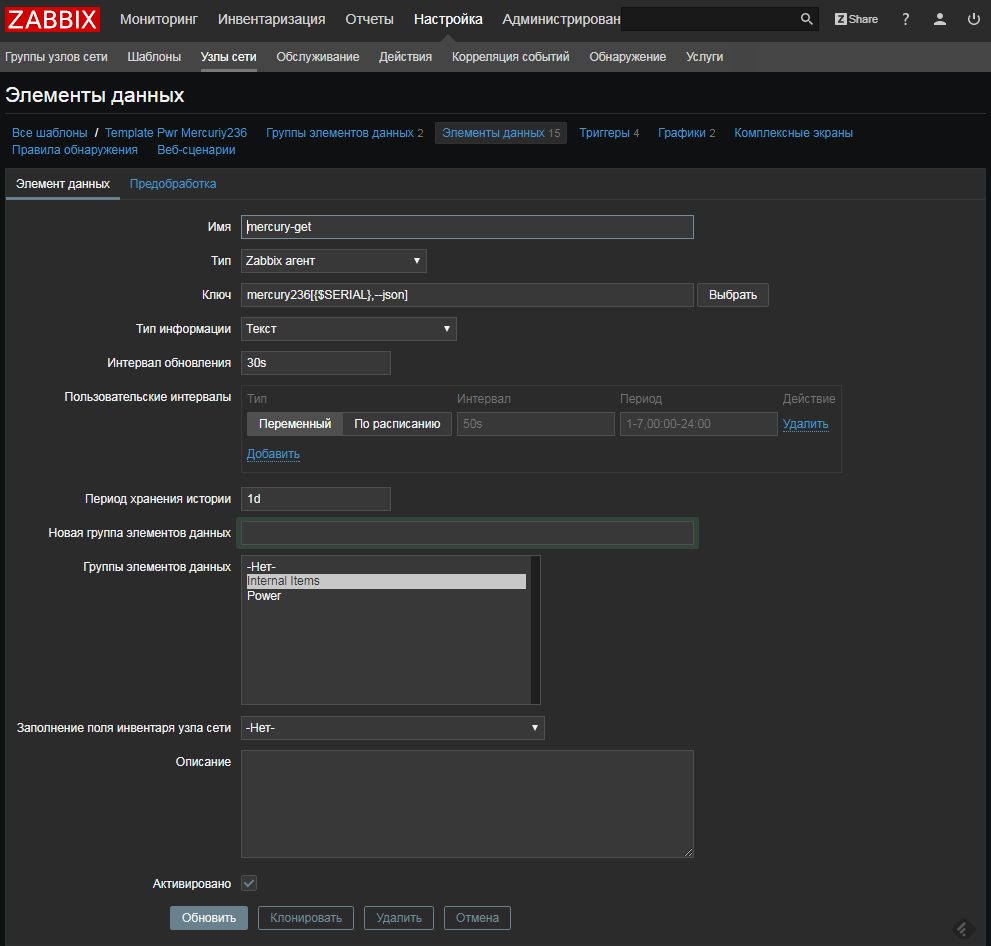

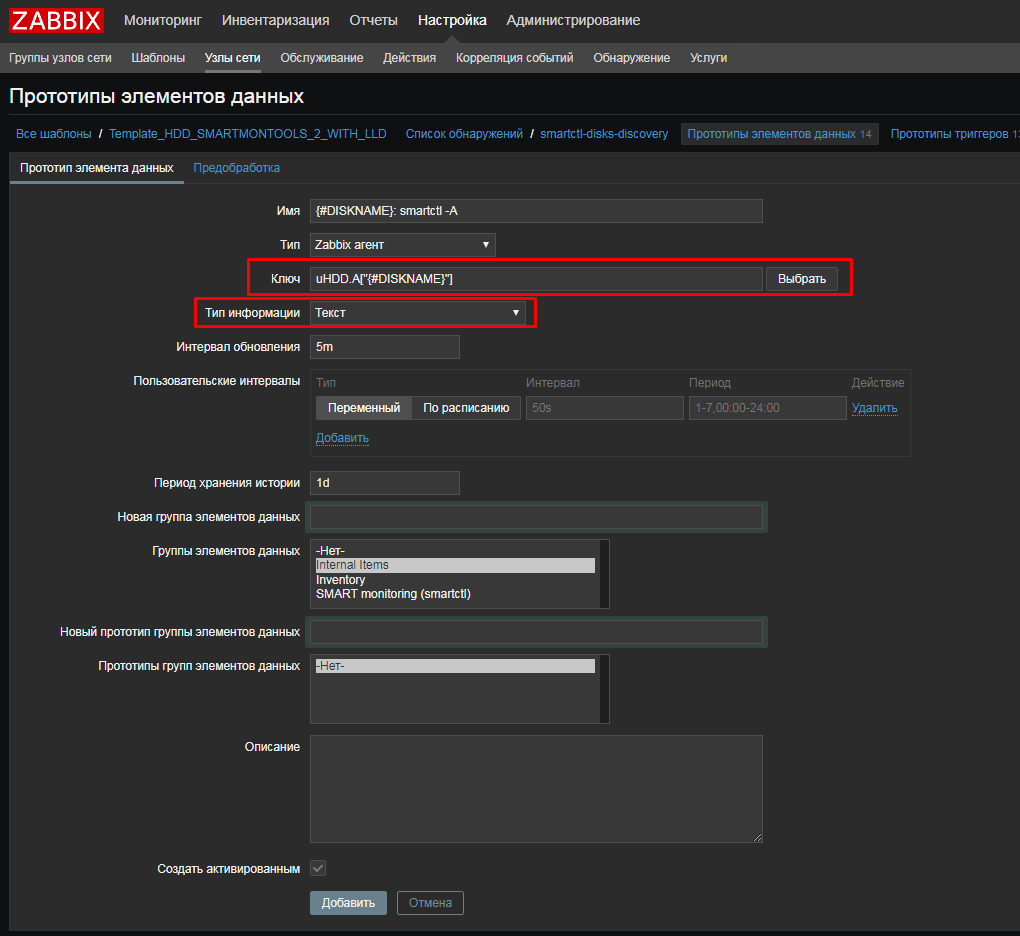

Now let's create a master item in a new template:

As you can see, there is nothing special about the master item: it is just a Zabbix agent check using our UserParameter. This also means there are no restrictions on which type of check can act as a master item: the data could just as well come from a Zabbix trapper or an External check. The only settings we chose are Type of information: Text and a history storage period of 1 day, since we store the metrics separately, and for longer, in the dependent items (you can also skip storing data in the master item entirely by setting the storage period to 0). Note that we do not touch preprocessing on this item.

Configuring collection of the meter metrics

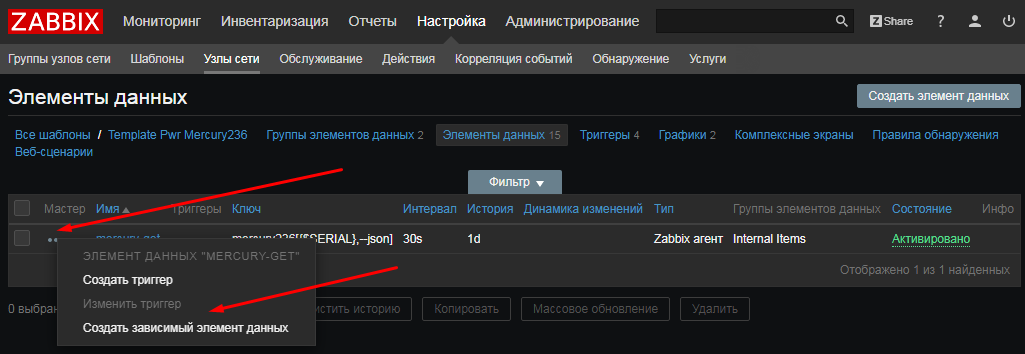

To start creating dependent items, you can use the new wizard, or just click "Create item":

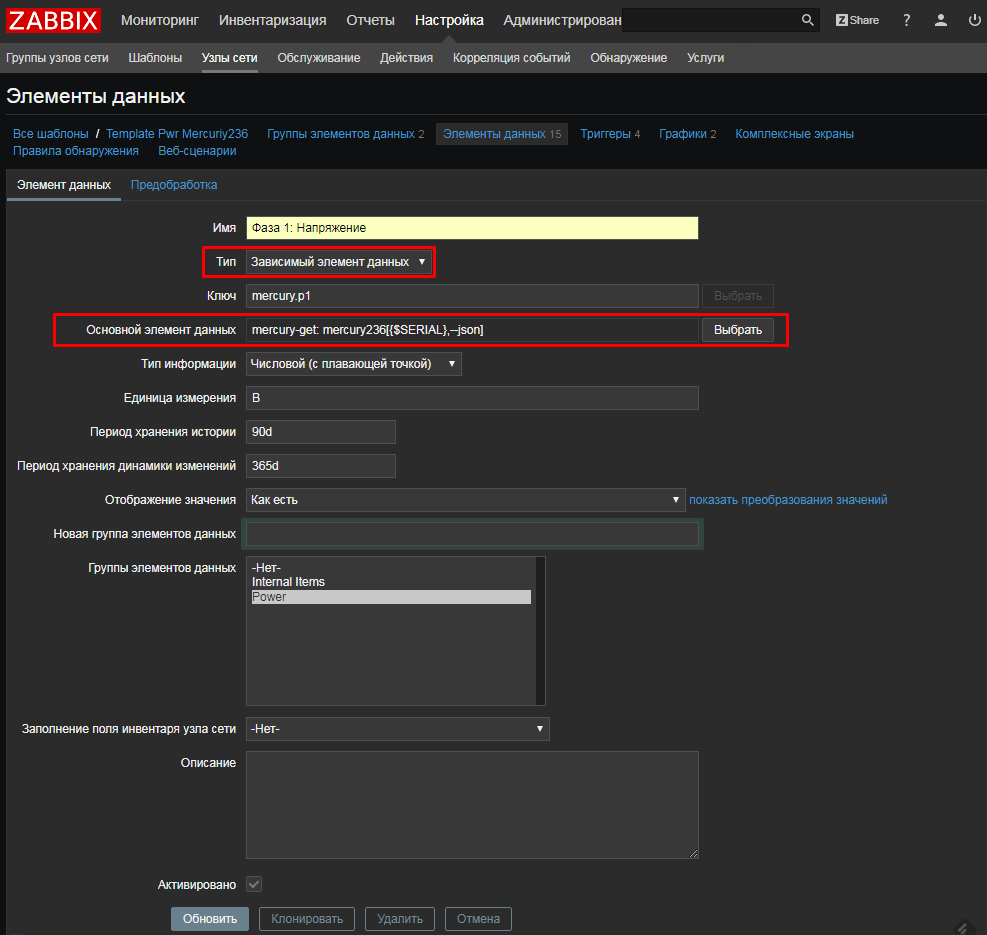

Create an item for the phase 1 voltage, selecting:

- Type: Dependent item

- Master item: mercury-get

Then, on the Preprocessing tab, add our JSON Path expression:

JSON Path: $.U.p1

A small tip: to avoid spending a lot of time debugging and chasing errors, you can quickly check the JSON Path expression online before entering it, for example at jsonpath.com, by pasting in the JSON returned by the utility.
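If you prefer checking paths offline, a tiny evaluator is enough for the simple paths used in this template. The sketch below is our own and supports only a small JSONPath subset ($, .name and ['name'] segments); it is not Zabbix's JSONPath implementation, and `parse_path` and `json_path` are hypothetical helper names.

```python
import json
import re
from typing import Any, List

# One path segment: .name or ['name'] / ["name"] (a deliberately tiny subset).
SEGMENT = re.compile(r"""\.([A-Za-z0-9_]+)|\[(?:'([^']*)'|"([^"]*)")\]""")


def parse_path(path: str) -> List[str]:
    """Split a path such as $.U.p1 or $['PR-day'].ap into its keys."""
    if not path.startswith("$"):
        raise ValueError("path must start with $")
    keys, pos = [], 1
    while pos < len(path):
        match = SEGMENT.match(path, pos)
        if match is None:
            raise ValueError(f"unsupported syntax at {path[pos:]!r}")
        keys.append(next(g for g in match.groups() if g is not None))
        pos = match.end()
    return keys


def json_path(document: str, path: str) -> Any:
    """Return the value at `path` inside the JSON text `document`."""
    value: Any = json.loads(document)
    for key in parse_path(path):
        value = value[key]
    return value


sample = '{"U": {"p1": 0.35, "p2": 0.35, "p3": 226.86}, "PR-day": {"ap": 86.00}}'
print(json_path(sample, "$.U.p1"))          # 0.35
print(json_path(sample, "$['PR-day'].ap"))  # 86.0
```

This sketch rejects the dot form $.PR-day.ap at the hyphen, which is consistent with using bracket notation for keys that contain a hyphen.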

In the same way, we create the other metrics of interest, including the accumulated energy at the day tariff.

To do this, create a new item and select:

- Type: Dependent item

- Master item: mercury-get

On the Preprocessing tab, however, note two nuances:

- we use square-bracket notation, because the key PR-day contains a hyphen

- preprocessing can be multi-step: here, for example, the result of the first step is multiplied by 1000 to convert kWh to Wh
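Here is how the two steps chain together, as a small Python sketch. `pr_day_ap` is our stand-in for the bracket-notation JSON Path $['PR-day'].ap and `multiplier` for what we take to be Zabbix's custom multiplier step; both names are hypothetical, and the input is a shortened sample of the meter JSON shown earlier.

```python
import json
from typing import Any, Callable, List


def pr_day_ap(text: str) -> float:
    """Stand-in for JSON Path $['PR-day'].ap (the key contains a hyphen)."""
    return json.loads(text)["PR-day"]["ap"]


def multiplier(factor: float) -> Callable[[float], float]:
    """Stand-in for a custom multiplier preprocessing step."""
    return lambda value: value * factor


def preprocess(value: Any, steps: List[Callable[[Any], Any]]) -> Any:
    """Run the steps in order, feeding each result into the next step."""
    for step in steps:
        value = step(value)
    return value


master_value = '{"PR": {"ap": 120.51}, "PR-day": {"ap": 86.00}, "PR-night": {"ap": 34.51}}'
print(preprocess(master_value, [pr_day_ap, multiplier(1000)]))  # 86000.0 (Wh)
```

Keeping the unit conversion as a separate step means the JSON Path stays a pure selector, and the factor can be changed without touching it.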

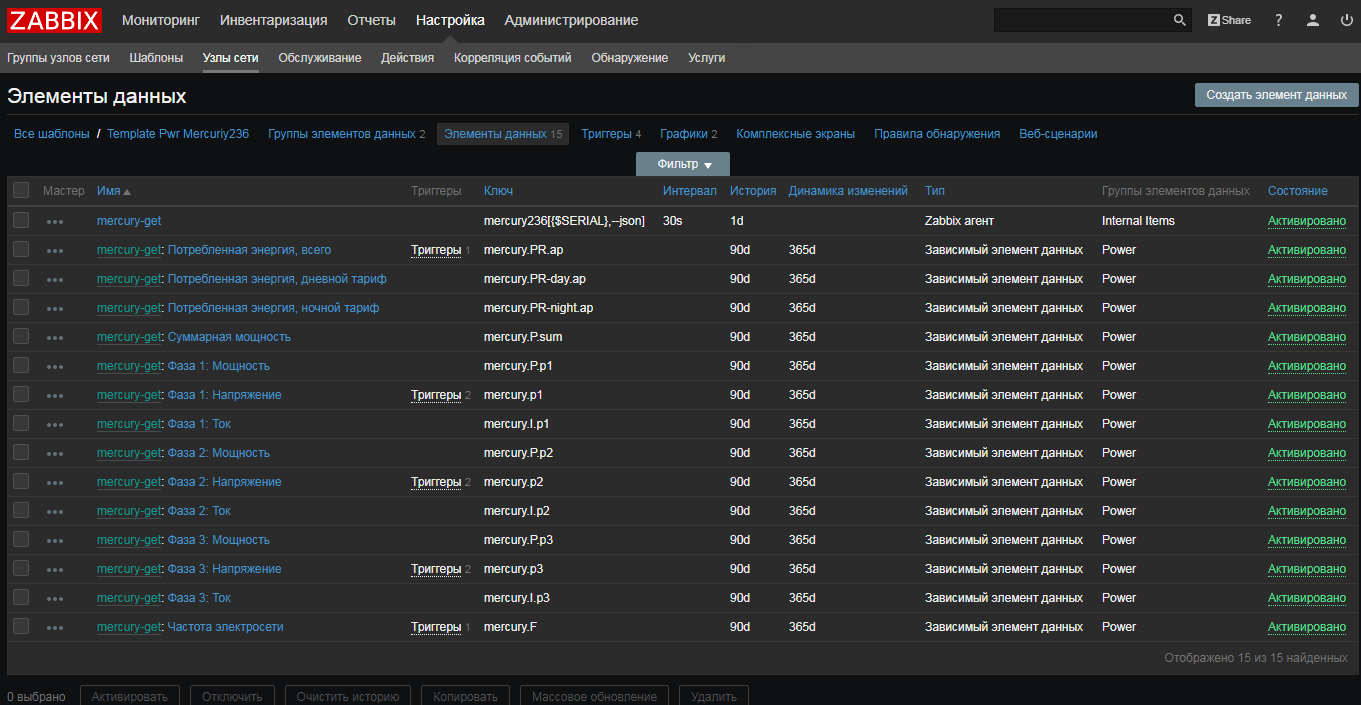

Let's do the same for the other key meter metrics; in the end we get the following list:

Polishing the template

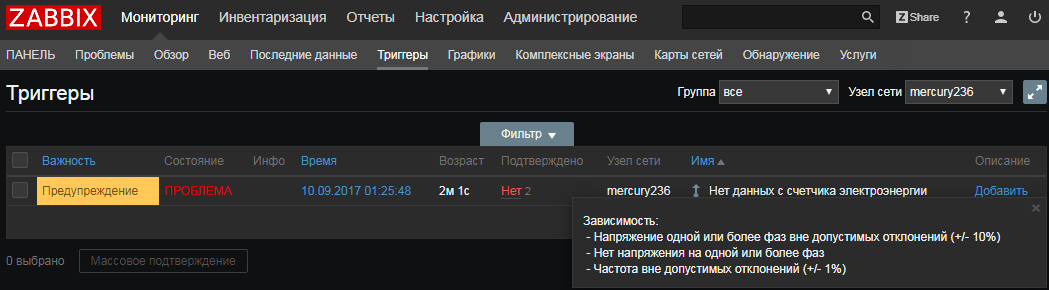

To make the template complete, we add triggers that use macros, making it as flexible as possible. Don't forget about trigger dependencies.

The result

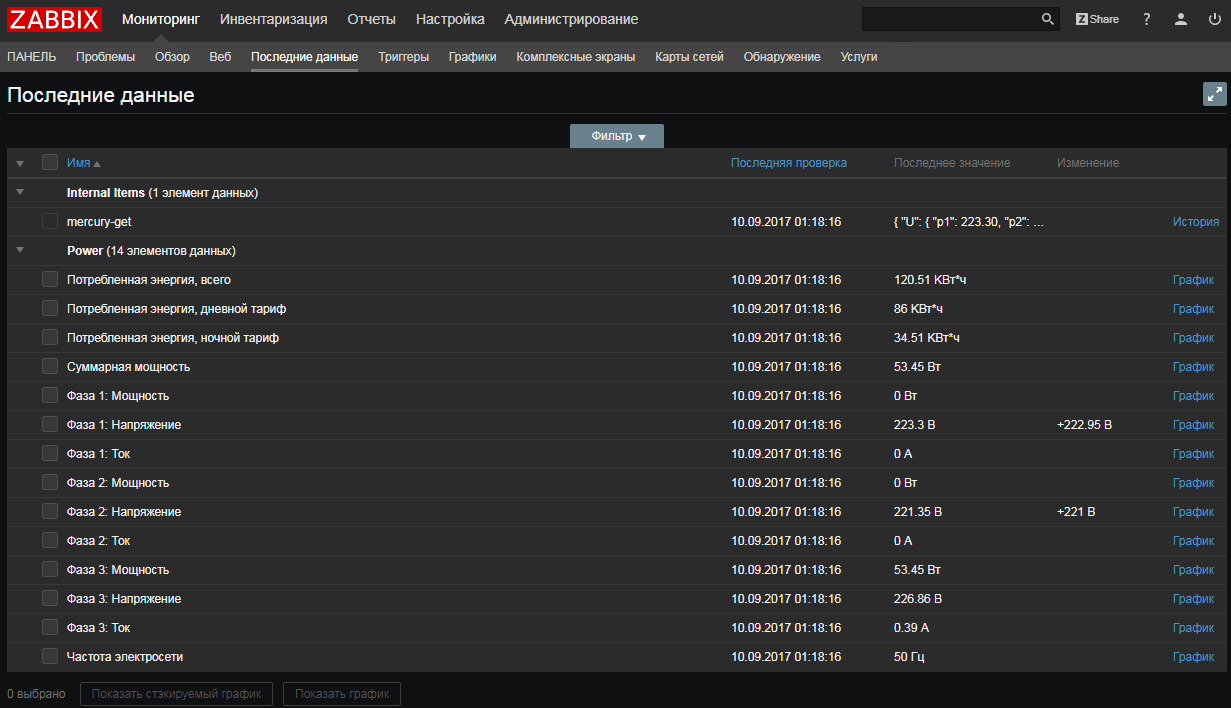

The template is ready and the data is flowing; let's see what we got:

All the latest data, collected in a single request:

Note that the collection time is exactly the same for all metrics.

The final meter template is available in the share.zabbix.com solution repository.

Let's sum up:

- we reused a good program and didn't waste time writing our own implementation of data collection over the Mercury protocol

- the UserParameter remained, but it shrank to a simple call; in fact, you could even use system.run[]

- we didn't write any wrappers either: all parsing is done via JSON Path in the template

- the meter isn't overloaded: one request gets all the data we need at once.

Smartctl and smartmontools

A long time ago we wrote on Habr about how to monitor the SMART attributes of hard drives with UserParameters, so that there is time to replace them. This approach works, but it was not without flaws:

- redundant smartctl launches, each of which in turn accessed the hard disk controller

- separate parsing had to be done for Linux and Windows. Windows is especially painful: (for /F ... and escaping double quotes with more double quotes... Aaaa!!!)

We will try to get rid of all this in 3.4.

The smartmontools case differs from the meter example above in two ways:

- smartctl does not return JSON

- servers have different numbers of disks, so we need low-level discovery (LLD).

But don't worry! First, dependent items work with LLD, and second, PCRE regular expressions are among the preprocessing options. We'll use them to pull the required indicators out of the utility's loosely structured output.

Let's get started

Simplifying the UserParameters

Before:

```
UserParameter=uHDD[*], sudo smartctl -A $1| grep -i "$2"| tail -1| cut -c 88-|cut -f1 -d' '
UserParameter=uHDD.model.[*],sudo smartctl -i $1 |grep -i "Device Model"| cut -f2 -d: |tr -d " "
UserParameter=uHDD.sn.[*],sudo smartctl -i $1 |grep -i "Serial Number"| cut -f2 -d: |tr -d " "
UserParameter=uHDD.health.[*],sudo smartctl -H $1 |grep -i "test"| cut -f2 -d: |tr -d " "
UserParameter=uHDD.errorlog.[*],sudo smartctl -l error $1 |grep -i "ATA Error Count"| cut -f2 -d: |tr -d " "
UserParameter=uHDD.discovery,sudo /etc/zabbix/scripts/smartctl-disks-discovery.pl
```

After:

```
UserParameter=uHDD.A[*],sudo smartctl -A $1
UserParameter=uHDD.i[*],sudo smartctl -i $1
UserParameter=uHDD.health[*],sudo smartctl -H $1
UserParameter=uHDD.discovery,sudo /etc/zabbix/scripts/smartctl-disks-discovery.pl
```

We do the same for Windows, getting rid of the CMD magic with for /F and find along the way.
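For comparison, the grep/cut/tr work that used to live in the agent now becomes regular expression preprocessing on dependent items. The Python sketch below shows patterns of that kind applied to sample `smartctl -i` and `smartctl -H` text. The device model and serial number are invented, the patterns are our own rather than the template's, and Python's re module stands in for PCRE (these simple patterns behave the same in both). Unlike the old `tr -d " "`, the model pattern keeps the spaces inside the value.

```python
import re
from typing import Optional

# Invented samples in the layout of `smartctl -i` and `smartctl -H` output.
SMARTCTL_I = """\
=== START OF INFORMATION SECTION ===
Device Model:     ExampleVendor SSD 250GB
Serial Number:    EX1234567890
"""
SMARTCTL_H = """\
=== START OF READ SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED
"""

# Illustrative patterns; capture group 1 is the value we want to keep.
PATTERNS = {
    "model": r"Device Model:[ \t]*(.*\S)",
    "serial": r"Serial Number:[ \t]*(.*\S)",
    "health": r"test result:[ \t]*(\S+)",
}


def extract(text: str, pattern: str) -> Optional[str]:
    """Return capture group 1 of the first match, or None if nothing matches."""
    match = re.search(pattern, text)
    return match.group(1) if match else None


print(extract(SMARTCTL_I, PATTERNS["model"]))   # ExampleVendor SSD 250GB
print(extract(SMARTCTL_I, PATTERNS["serial"]))  # EX1234567890
print(extract(SMARTCTL_H, PATTERNS["health"]))  # PASSED
```

Because `.` does not match a newline, `(.*\S)` stops at the end of the matched line and drops trailing whitespace.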

Creating new master items

To collect all the SMART attributes, create a master item prototype:

As in the previous example, nothing special needs to be configured: only Type of information is set to Text and the storage period to 1 day.

To collect health check results and inventory data, smartctl must be run with other options, so in the same way we create two more item prototypes:

- uHDD.i["{#DISKNAME}"]

- uHDD.health["{#DISKNAME}"]
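To see how the prototypes turn into real items, here is a hedged Python model of low-level discovery. It assumes the discovery script prints one {#DISKNAME} per disk in the {"data": [...]} JSON format that Zabbix 3.4 LLD expects, and that the SMART attributes master prototype uses the key uHDD.A["{#DISKNAME}"]; the disk names and the helpers `discovery_json` and `expand_prototypes` are made up for illustration.

```python
import json
from typing import Dict, List

# Assumed master item prototype keys (uHDD.A is our guess for the attributes item).
PROTOTYPES = ['uHDD.A["{#DISKNAME}"]', 'uHDD.i["{#DISKNAME}"]', 'uHDD.health["{#DISKNAME}"]']


def discovery_json(disks: List[str]) -> str:
    """Build LLD output with one {#DISKNAME} entry per disk."""
    return json.dumps({"data": [{"{#DISKNAME}": disk} for disk in disks]})


def expand_prototypes(lld: str, prototypes: List[str]) -> Dict[str, List[str]]:
    """Substitute each discovered macro value into every item prototype key."""
    items: Dict[str, List[str]] = {}
    for row in json.loads(lld)["data"]:
        disk = row["{#DISKNAME}"]
        items[disk] = [p.replace("{#DISKNAME}", disk) for p in prototypes]
    return items


lld = discovery_json(["/dev/sda", "/dev/sdb"])  # hypothetical disks
print(lld)
for disk, keys in expand_prototypes(lld, PROTOTYPES).items():
    print(disk, keys)
```

Each discovered disk gets its own set of master items, and the dependent item prototypes then hang off those masters in the same way.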

Configuring collection of the SMART disk attributes

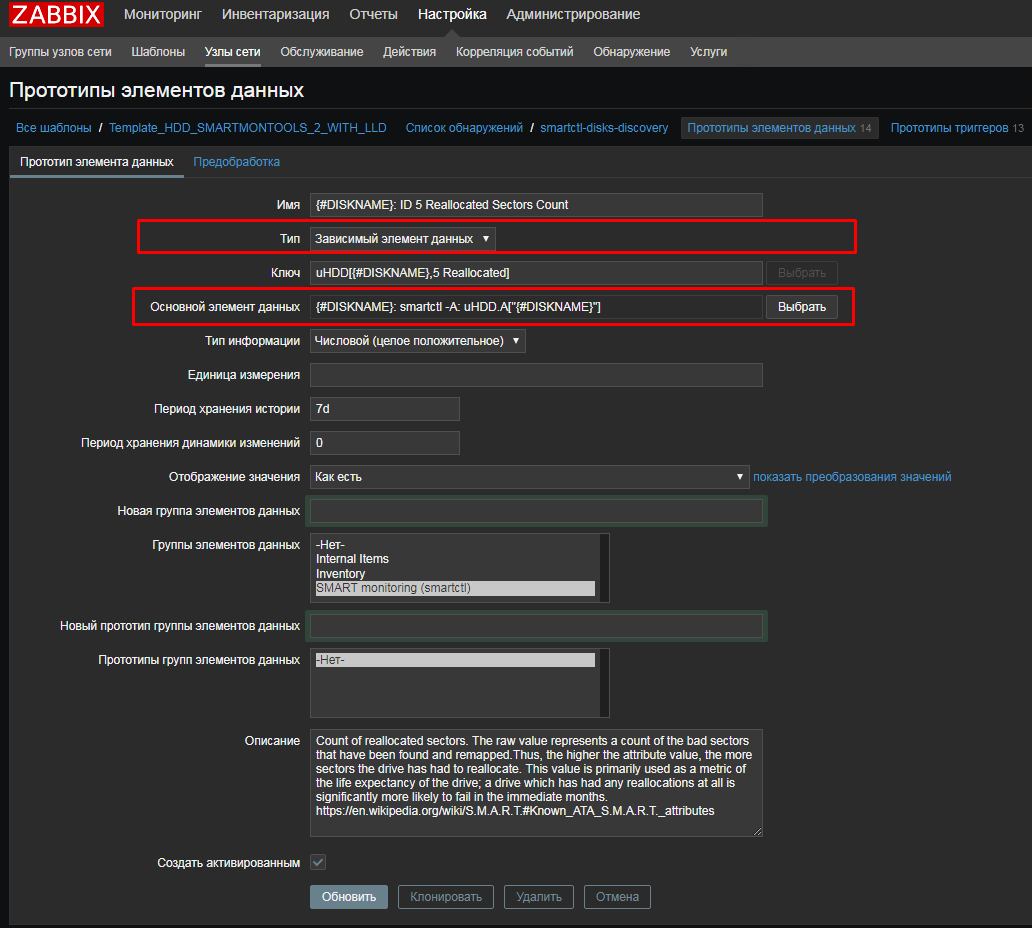

Create a dependent item for attribute 5, Reallocated:

Then, on the Preprocessing tab, use a regular expression:

Just as with JSON Path, to avoid spending a lot of time debugging and chasing errors, it's convenient to check the regular expression online before entering it, for example at regex101.com, by pasting in our smartctl output.
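Below is an illustrative pattern of our own (not necessarily the one in the template) for pulling the RAW_VALUE of an attribute out of `smartctl -A` output, tested in Python. It anchors on the attribute ID at the start of a line and skips the eight columns between ID and RAW_VALUE, so unlike the old `cut -c 88-` it does not depend on a fixed character offset. The sample table is invented, `raw_value` is a hypothetical helper, and `(?m)` is an inline flag that both PCRE and Python's re understand.

```python
import re
from typing import Optional

# Invented sample in the column layout of `smartctl -A`.
SMARTCTL_A = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       0
  9 Power_On_Hours          0x0032   095   095   000    Old_age   Always       -       21877
194 Temperature_Celsius     0x0022   036   049   000    Old_age   Always       -       36 (Min/Max 18/49)
"""


def raw_value(text: str, attribute_id: int) -> Optional[str]:
    """Return the leading number of RAW_VALUE for one attribute ID, if present."""
    # (?m) lets ^ match at each line start; the eight skipped fields are
    # ATTRIBUTE_NAME, FLAG, VALUE, WORST, THRESH, TYPE, UPDATED and WHEN_FAILED.
    pattern = rf"(?m)^[ \t]*{attribute_id}[ \t]+(?:\S+[ \t]+){{8}}(\d+)"
    match = re.search(pattern, text)
    return match.group(1) if match else None


print(raw_value(SMARTCTL_A, 5))    # 0
print(raw_value(SMARTCTL_A, 194))  # 36
```

For attribute 5 the Zabbix-side equivalent would be the same pattern with the ID fixed to 5 and an output template of \1.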

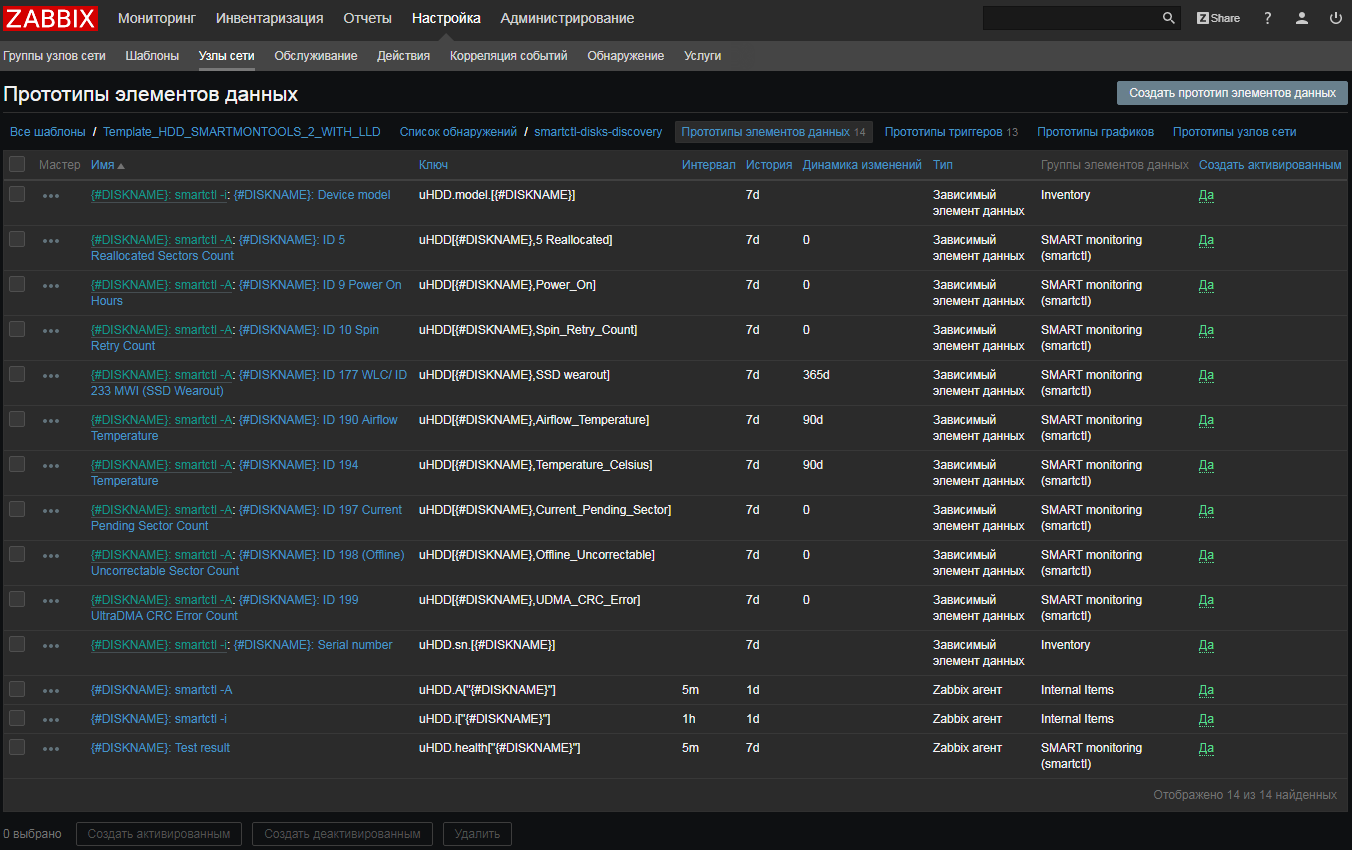

As a result, we get the following list of prototypes:

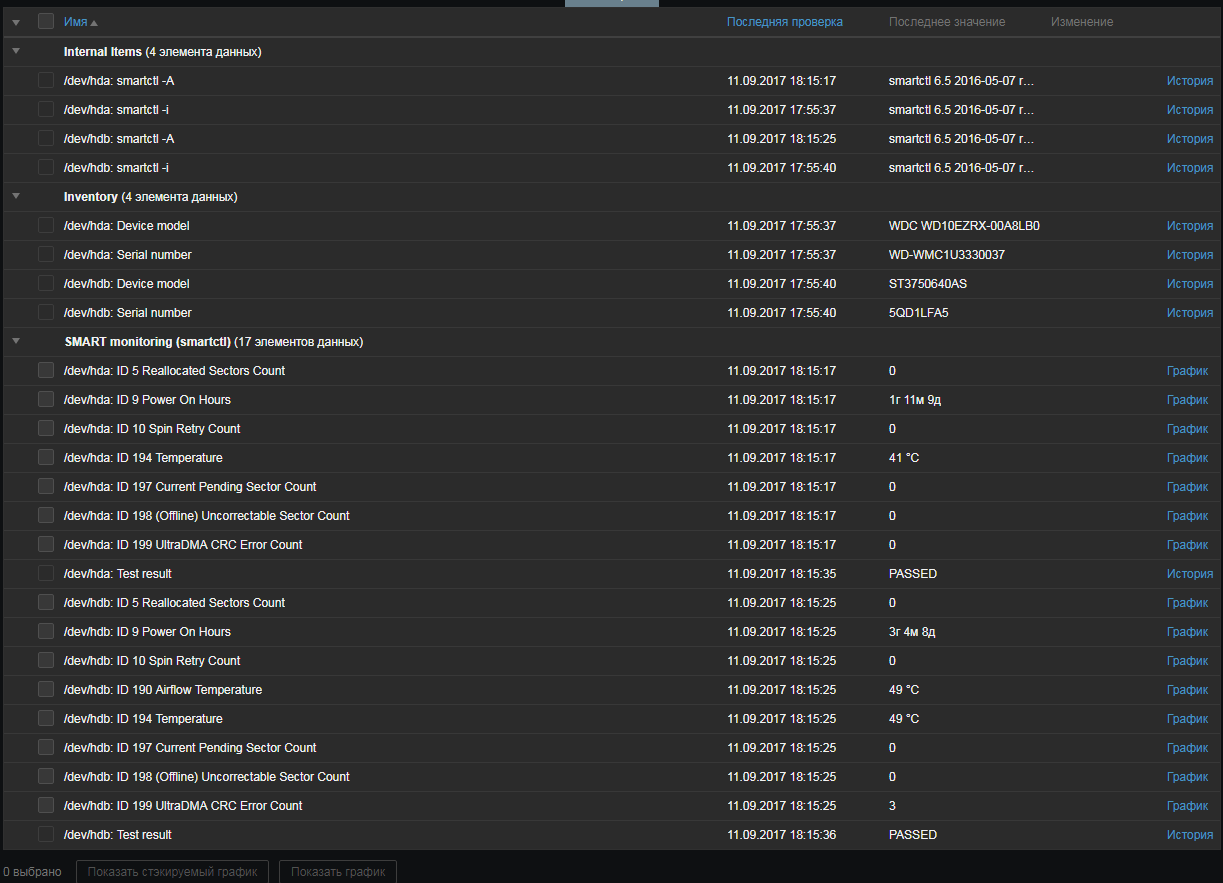

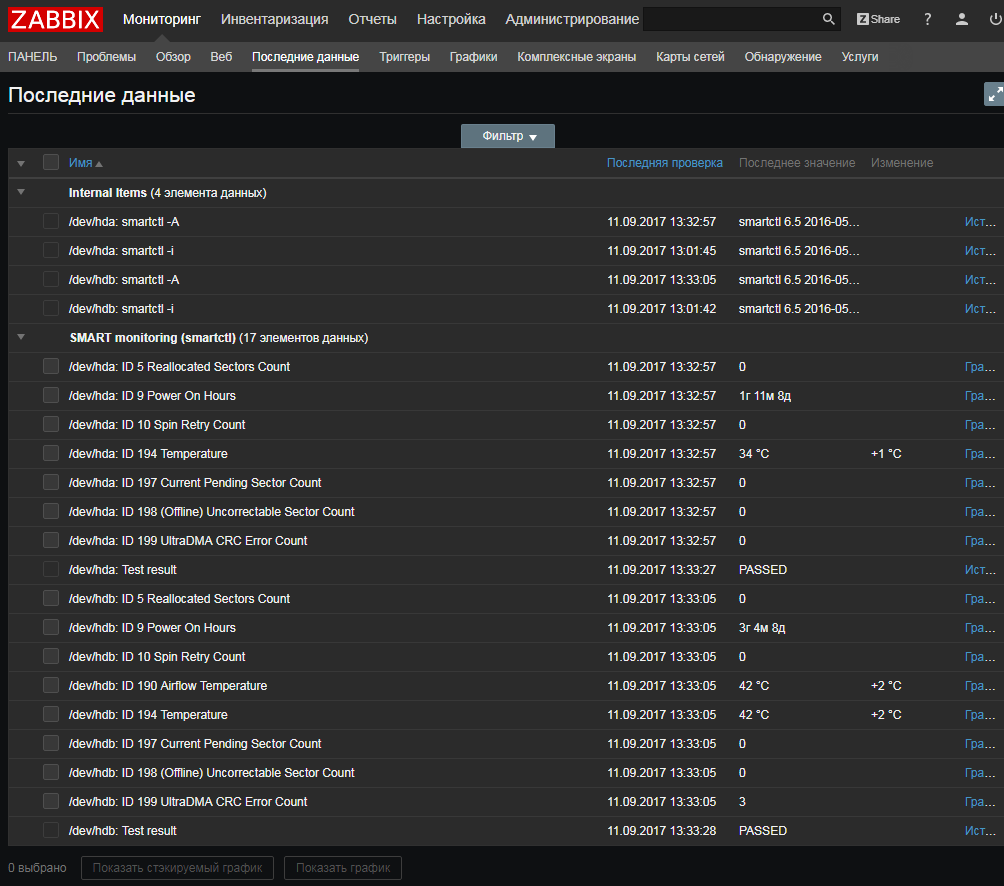

Testing: let's see what we got

For two HDDs:

For an SSD under Windows:

Let's sum up the smartmontools example:

- we removed all parsing from the UserParameters

- there are no external scripts (except for LLD) and no external dependencies; all parsing happens on the Zabbix server, where it is easy to see and fix if necessary

- when a utility or API does not return XML/JSON, it doesn't matter: you can always try regular expressions

- we no longer overload the hard drives: we first get the whole list of SMART attributes, and then the Zabbix server splits it into individual metrics.

The updated template (we also updated the triggers and added items for SSDs) is available in the share.zabbix.com solution repository.

Conclusion

Bulk metric collection is a simple and easy way to reduce the load on the network and on the resources of monitored systems, and to reduce the need for external scripts. We're sure many Zabbix users will like it.

P.S. This article is also available in English on our blog.

Source: https://habr.com/ru/post/337856/
