How to switch to gRPC while keeping REST

Many people are familiar with gRPC, Google's open-source RPC framework, which supports 10 languages and is actively used at Google, Netflix, Kubernetes, Docker and many others. If you write microservices, gRPC offers many advantages over the traditional REST + JSON approach, but on existing projects the transition is often hard because of REST clients that are already in use and cannot all be updated at once. When gRPC comes up in conversation, you often hear "yes, our company is looking at gRPC too, but we are not ready to try it yet."

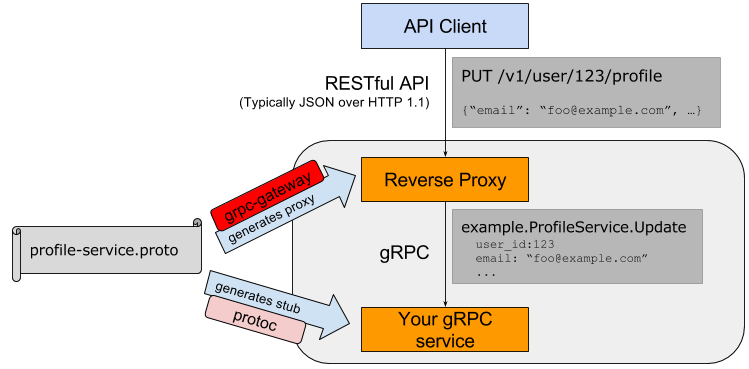

Well, this problem has a good solution called grpc-gateway, which does exactly that: it autogenerates a REST-to-gRPC proxy that supports all the major benefits of gRPC, plus Swagger. In this article I will show with an example how it looks and works, and I hope this helps you switch to gRPC without losing existing REST clients.

But first, let's define which situations we are talking about. The two most common ones are:

- backend (Go / Java / C++ / Node.js / whatever) and frontend (JS / iOS / Kotlin / Java / etc.) communicate via a REST API

- microservices (in different languages) also communicate with each other via a REST-like API (HTTP as the protocol, JSON for serialization)

For small projects this is a perfectly normal choice, but as a project and the number of people working on it grow, the problems of a REST API become very apparent and start eating the lion's share of developers' time.

What's wrong with REST?

Of course, REST is used everywhere because of its simplicity, and even because of how loosely people understand what REST is. REST began as the dissertation of Roy Fielding, one of the creators of HTTP, titled "Architectural Styles and the Design of Network-based Software Architectures". In fact, REST is just an architectural style, not a clearly described specification.

But this is the root of some significant problems. There is no single agreement on when to use which HTTP method, which status code to return, what goes into the URI and what goes into the request body, and so on. There are attempts to reach a common agreement but, unfortunately, they are not very successful.

Further, with the REST approach too many entities carry meaning: the HTTP method (GET / POST / PUT / DELETE), the request URI (/users, /user/1), the request body ({id: 1}), plus headers (X-User-ID: 1). All this adds unnecessary complexity and room for misinterpretation, which becomes a big problem once the API is used between services written by different teams and keeping all these entities in sync starts to take a significant portion of the teams' time.

This leads us to the next problem: the difficulty of describing APIs and data types declaratively. The OpenAPI Specification (known as Swagger), RAML, and API Blueprint partially solve this problem, but at the cost of adding more complexity. Some people write YAML files by hand for each new request, others use web frameworks with autogeneration, inflating the code with descriptions of parameters and request types. Either way, keeping the Swagger specification in sync with the actual API implementation still rests on the shoulders of the developers responsible and takes time away from the tasks these APIs are actually meant to solve.

A separate difficulty is an API that evolves and changes: keeping clients and servers in sync can take a lot of time and resources.

gRPC

gRPC solves these problems with code generation and a declarative language for describing types and RPC methods. By default, Protocol Buffers version 3 (proto3) is used as the IDL and HTTP/2 as the transport. Code generators exist for 10 languages: Go, Java, C++, Python, Ruby, Node.js, C#, PHP, Android.Java, Objective-C. There are also unofficial implementations for Rust, Swift and others.

In gRPC there is only one place where you define how fields are named, what the requests look like, what each method accepts and what it returns. All of this is described in a .proto file. For example:

```protobuf
syntax = "proto3";

package library;

service LibraryService {
  rpc AddBook(AddBookRequest) returns (AddBookResponse);
}

message AddBookRequest {
  message Author {
    string name = 1;
    string surname = 2;
  }
  string isbn = 1;
  repeated Author authors = 2;
}

message AddBookResponse {
  int64 id = 1;
}
```

From this proto file, the protoc compiler generates client and server code in all supported languages (well, in those you ask the compiler for). If you later change something in the types or methods, you rerun code generation and get updated code for both the client and the server.

If you have ever had to resolve conflicts over field names like UserID vs user_id, you will enjoy working with gRPC.

I will not dwell on how gRPC works in detail, and will instead move on to the question of what to do if you want to use gRPC but have clients that still have to work through a REST API and cannot simply be migrated or rewritten for gRPC. This is especially relevant given that there is no official gRPC support in the browser yet (for JS, only Node.js is officially supported), and the Swift implementation is not on the official list yet either.

gRPC REST Gateway

The grpc-gateway project, like almost everything in grpc-ecosystem, is implemented as a plugin for the protoc compiler. It lets you add annotations to rpc definitions in a protobuf file that describe the REST equivalent of each method. For example:

```protobuf
import "google/api/annotations.proto";
...
service LibraryService {
  rpc AddBook(AddBookRequest) returns (AddBookResponse) {
    option (google.api.http) = {
      post: "/v1/book"
      body: "*"
    };
  }
}
```

After running protoc with this plugin, you get auto-generated code that transparently forwards POST HTTP requests for the specified URI to the real gRPC server, and just as transparently converts and returns the response.

In other words, this is formally an API proxy that runs as a separate service and transparently converts REST HTTP requests into gRPC communication between services.

Usage example

Let's continue the example above. Say our book service has to work with an old iOS frontend that so far can only speak REST over HTTP. You have already moved the other services to gRPC and enjoy fewer headaches as your APIs grow or change. After adding the annotations above, we create a new service, for example rest_proxy, and autogenerate the reverse proxy code in it:

```sh
protoc -I/usr/local/include -I. \
  -I$GOPATH/src \
  -I$GOPATH/src/github.com/grpc-ecosystem/grpc-gateway/third_party/googleapis \
  --grpc-gateway_out=logtostderr=true:. \
  library.proto
```

The code of the service itself may look something like this:

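```go
package main

import (
	"context"
	"log"
	"net/http"

	"github.com/grpc-ecosystem/grpc-gateway/runtime"
	"google.golang.org/grpc"

	"github.com/myuser/rest-proxy/library"
)

func main() {
	ctx := context.Background()

	// muxOpt holds ServeMux options; see the marshaler setup in the Problems section.
	gw := runtime.NewServeMux(muxOpt)
	opts := []grpc.DialOption{grpc.WithInsecure()}

	// Register the generated handlers that forward REST calls to the gRPC server.
	err := library.RegisterLibraryServiceHandlerFromEndpoint(ctx, gw, "library-service.dns.name", opts)
	if err != nil {
		log.Fatal(err)
	}

	mux := http.NewServeMux()
	mux.Handle("/", gw)
	log.Fatal(http.ListenAndServe(":80", mux))
}
```

This code launches our proxy on port 80 and forwards all requests to the gRPC server available at library-service.dns.name. RegisterLibraryServiceHandlerFromEndpoint is an automatically generated function that does all the magic.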

Obviously, this proxy can serve as the entry point for all your other gRPC services that need a REST API as a fallback: just import the other auto-generated packages and register them on the same gw object:

```go
err = users.RegisterUsersServiceHandlerFromEndpoint(ctx, gw, "users-service.dns.name", opts)
if err != nil {
	log.Fatal(err)
}
```

and so on.

Benefits

The auto-generated proxy supports automatic reconnection to the service with an exponential backoff delay, as in ordinary gRPC services. Likewise, TLS support works out of the box, and timeouts and everything else available in gRPC services are also available in the proxy.

Middlewares

Separately, I want to mention the so-called middlewares: request handlers that run automatically before or after a request. A typical example is when your HTTP requests contain a special header that you want to pass on to the gRPC services.

As an example, take a standard JWT token that you want to parse so that the value of its UserID field can be passed on to the gRPC services. This is as simple as writing ordinary HTTP middleware:

```go
func checkJWT(h http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		bearer := r.Header.Get("Authorization")
		...
		// parse and extract value from token
		...
		// attach the extracted value to the request context
		ctx := context.WithValue(r.Context(), "UserID", claims.UserID)
		h.ServeHTTP(w, r.WithContext(ctx))
	})
}
```

and wrap our mux object in this middleware function:

```go
mux.Handle("/", checkJWT(gw))
```

Now on the service side (every gRPC method in the Go implementation takes a context as its first parameter) you simply read this value from the context:

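```go
func (s *Library) AddBook(ctx context.Context, req *library.AddBookRequest) (*library.AddBookResponse, error) {
	userID := ctx.Value("UserID").(int64)
	...
}
```

Keep in mind that values stored with context.WithValue are local to the process, and gRPC carries only metadata between processes. When the service runs separately from the proxy, the value therefore has to be forwarded as gRPC metadata (grpc-gateway offers the runtime.WithMetadata option for this) and read on the server with metadata.FromIncomingContext.

Additional functionality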

Of course, nothing stops your rest-proxy from implementing additional functionality. It is an ordinary HTTP server, after all. You can, for example, forward some HTTP requests to another legacy REST service:

```go
legacyProxy := httputil.NewSingleHostReverseProxy(legacyUrl)
mux.Handle("/v0/old_endpoint", legacyProxy)
```

Swagger UI

Another cherry on top of the grpc-gateway approach is the automatic generation of a swagger.json file. It can then be used with the online Swagger UI, or served directly from our own service.

With a little work on SwaggerUI and go-bindata, you can add another endpoint to our service that renders a beautiful and, most importantly, up-to-date, auto-generated UI for the REST API.

Generate swagger.json:

```sh
protoc -I/usr/local/include \
  -I$GOPATH/src \
  -I$GOPATH/src/github.com/grpc-ecosystem/grpc-gateway/third_party/googleapis \
  --swagger_out=logtostderr=true:swagger-ui/ \
  path/to/library.proto
```

Create handlers that serve the static files and generate index.html (in this example, the static files are embedded directly into the binary using go-bindata):

```go
mux.HandleFunc("/swagger/index.html", SwaggerHandler)
mux.Handle("/swagger/", http.StripPrefix("/swagger/", http.FileServer(assetFS())))
...
// init indexTemplate at start
func SwaggerHandler(w http.ResponseWriter, r *http.Request) {
	indexTemplate.Execute(w, nil)
}
```

The result is a Swagger UI with up-to-date information and the ability to try requests right there.

Problems

Overall, my experience with grpc-gateway can be summed up in one phrase: "it just works out of the box". Of the problems I did run into, I can note the following.

For JSON serialization Go uses so-called struct tags, meta information attached to fields. encoding/json has an omitempty tag, which means that if the value is the zero value for its type, it should not be added to the resulting JSON. The Go code generated from the proto file carries this tag on its structures, which sometimes leads to incorrect behavior.

For example, you have a field of type bool in a structure that you return in a response. Both true and false are equally meaningful, and the frontend expects to receive the field. The response produced by grpc-gateway, however, will contain this field only if the value is true; otherwise it is simply omitted (omitempty).
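The effect is easy to reproduce with plain encoding/json. In this small sketch the `withOmit` and `withoutOmit` types and their field names are made up for illustration; only the `omitempty` behavior itself comes from the standard library.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// withOmit drops Available from the output when it is false.
type withOmit struct {
	Title     string `json:"title"`
	Available bool   `json:"available,omitempty"`
}

// withoutOmit always emits Available.
type withoutOmit struct {
	Title     string `json:"title"`
	Available bool   `json:"available"`
}

// mustJSON marshals v and panics on error, to keep the example short.
func mustJSON(v interface{}) string {
	b, err := json.Marshal(v)
	if err != nil {
		panic(err)
	}
	return string(b)
}

func main() {
	fmt.Println(mustJSON(withOmit{Title: "Dune"}))    // {"title":"Dune"}
	fmt.Println(mustJSON(withoutOmit{Title: "Dune"})) // {"title":"Dune","available":false}
}
```

A client that expects the `available` key to be present at all times would break on the first form.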

Fortunately, this is easily solved using the configuration options:

```go
customMarshaller := &runtime.JSONPb{
	OrigName:     true,
	EmitDefaults: true, // disable 'omitempty'
}
muxOpt := runtime.WithMarshalerOption(runtime.MIMEWildcard, customMarshaller)
gw := runtime.NewServeMux(muxOpt)
```

One more thing I would like to share is the non-obvious semantics of the protoc compiler itself. The commands are very long and hard to read and, most importantly, the logic of where proto files are looked up and where output is generated (and which directories get created) is very unclear. For example, say you want to use a proto file from another project and generate code with some plugin, placing it in the swagger-ui/ folder of the current project. I had to spend 15 minutes trying many protoc invocations before it became clear how to make the generator work that way. But, again, nothing unsolvable.

Conclusion

gRPC can greatly improve productivity and efficiency when working with a microservice architecture, but backward compatibility and REST API support are often a requirement. grpc-gateway provides a simple and effective way to solve this problem by automatically generating a reverse proxy server that translates REST / JSON requests into gRPC calls. The project is actively developed and used in production at many companies.

Source: https://habr.com/ru/post/337716/
