DevOps with Kubernetes and VSTS. Part 2: Cloud History

This is the continuation of the story about Kubernetes, containers and building a CI/CD pipeline. The Azure cloud and Visual Studio Team Services finally make an appearance. Interestingly, the VSTS CI/CD pipeline uses kubectl to work with the k8s cluster, so the application can be deployed not only to Azure Container Services but to any other Kubernetes installation as well.


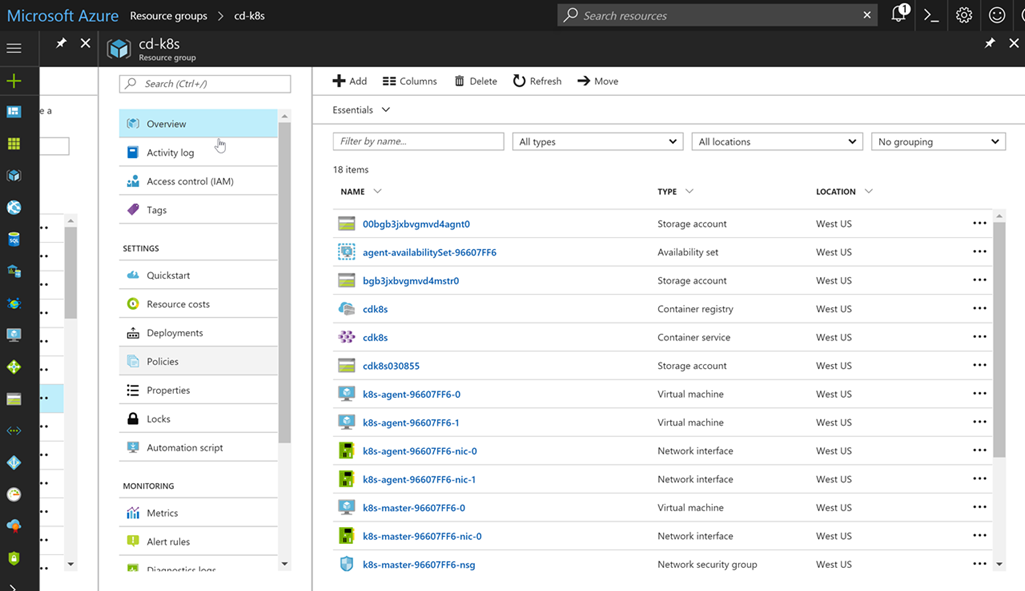

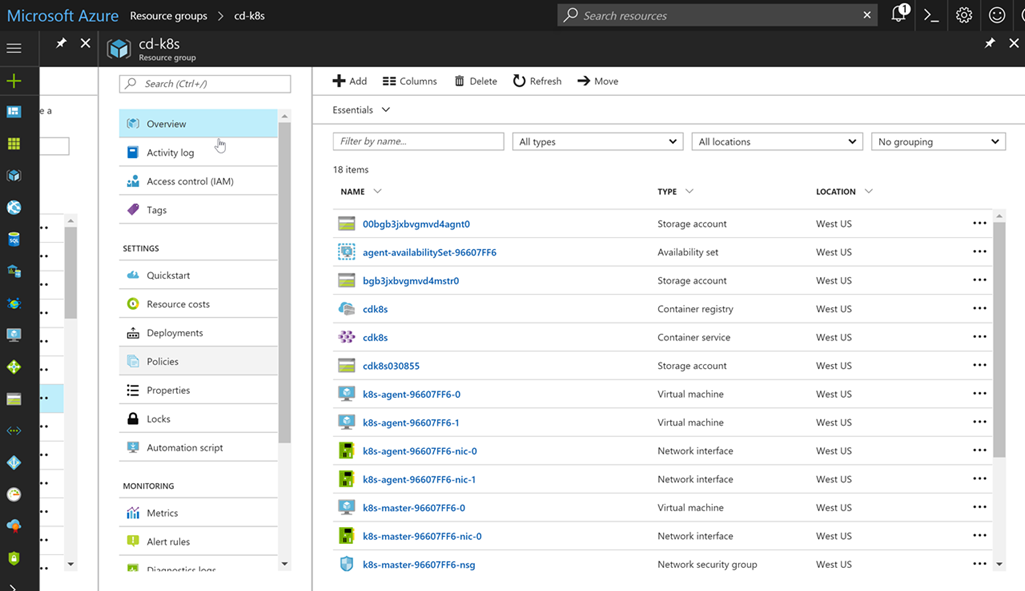


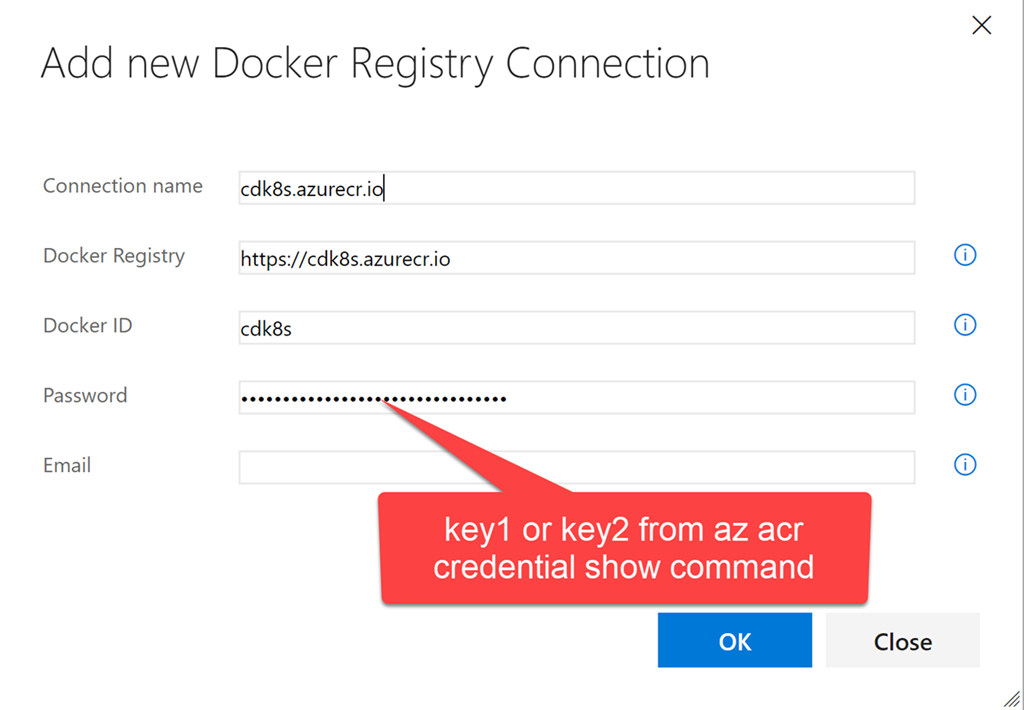

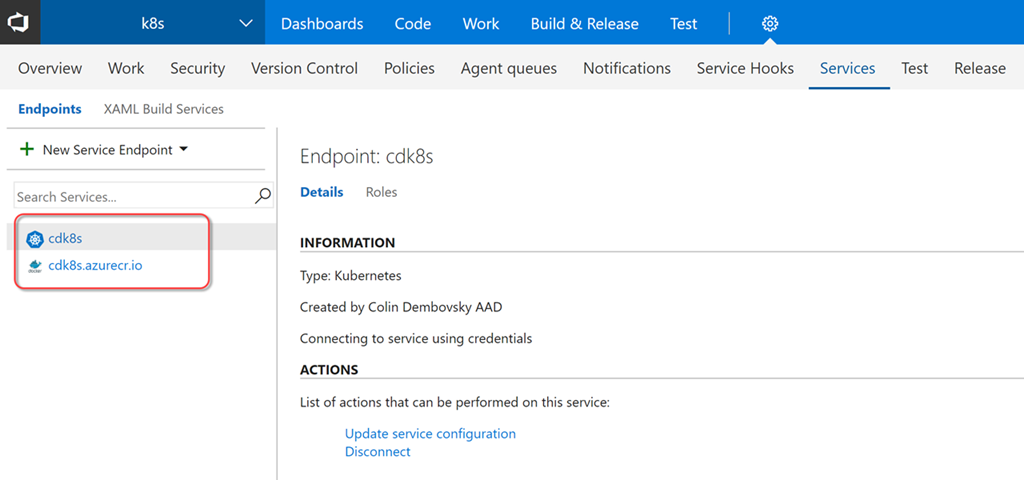


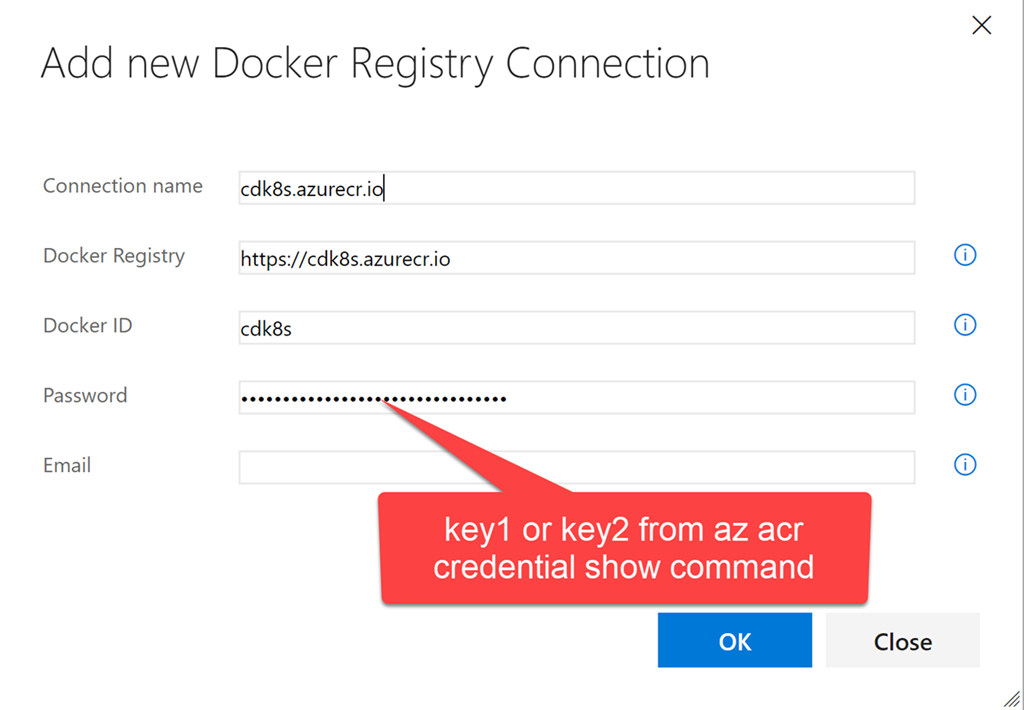


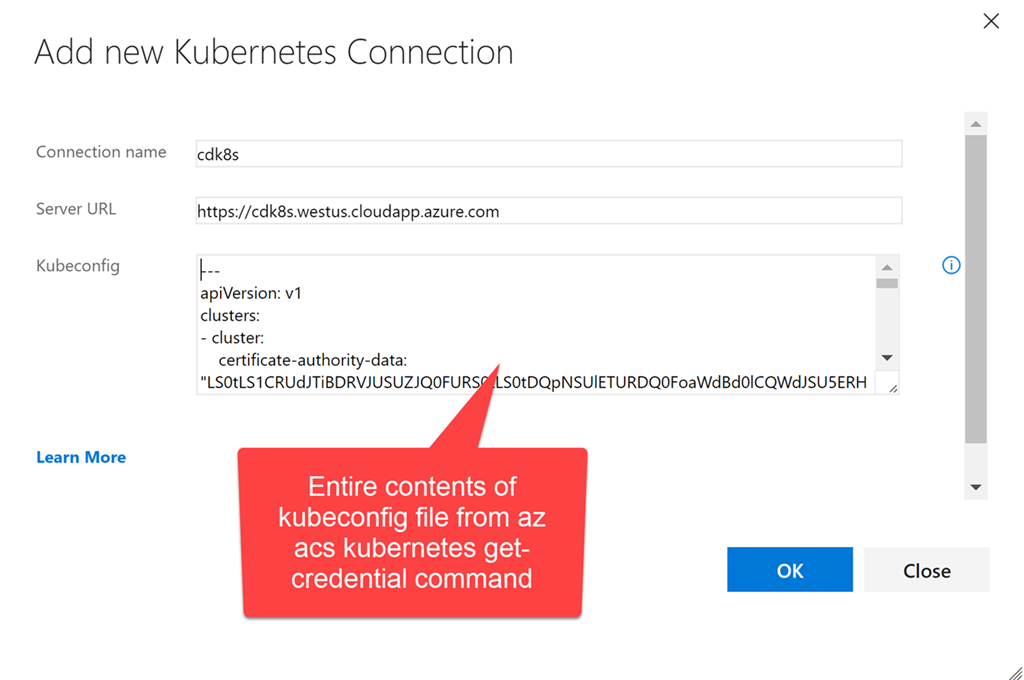

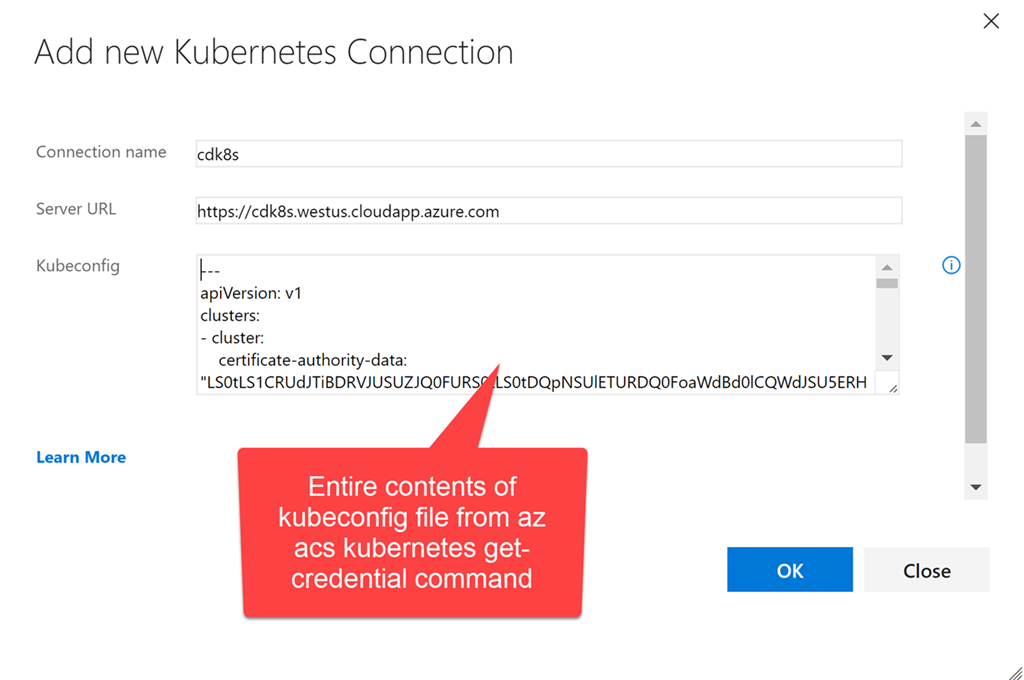


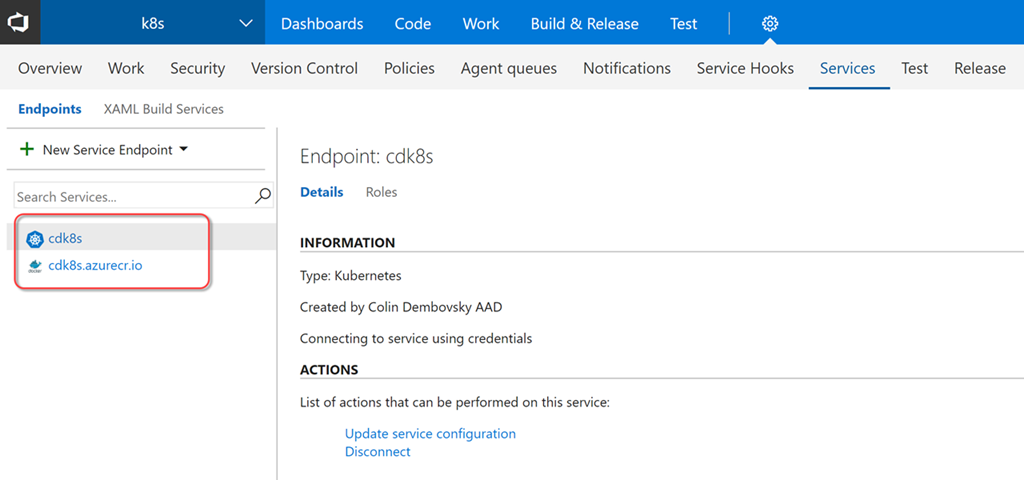


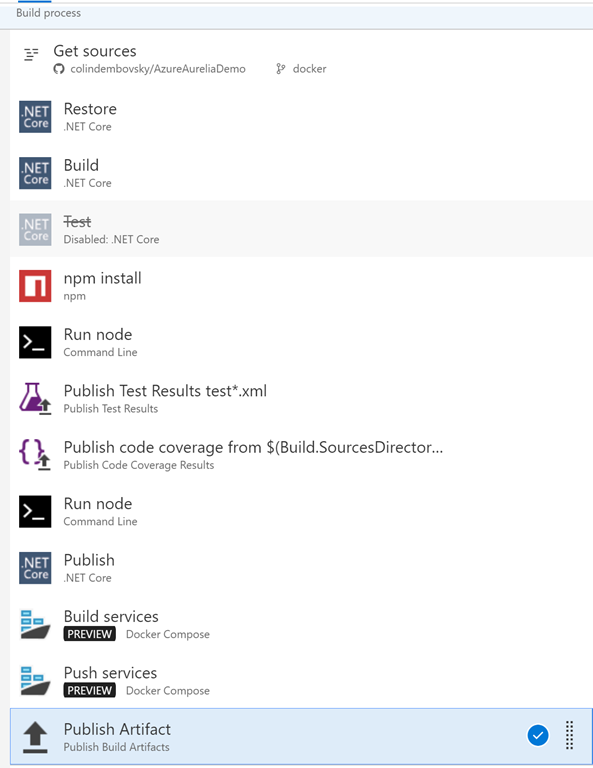

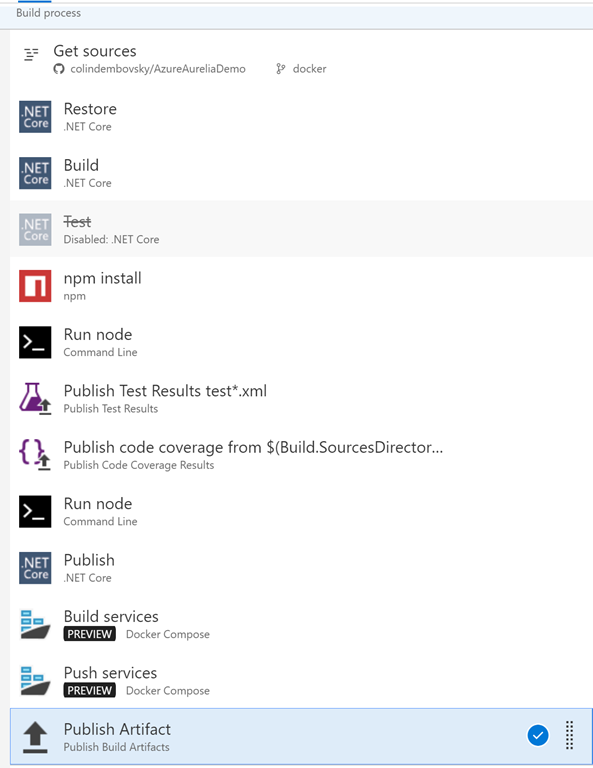


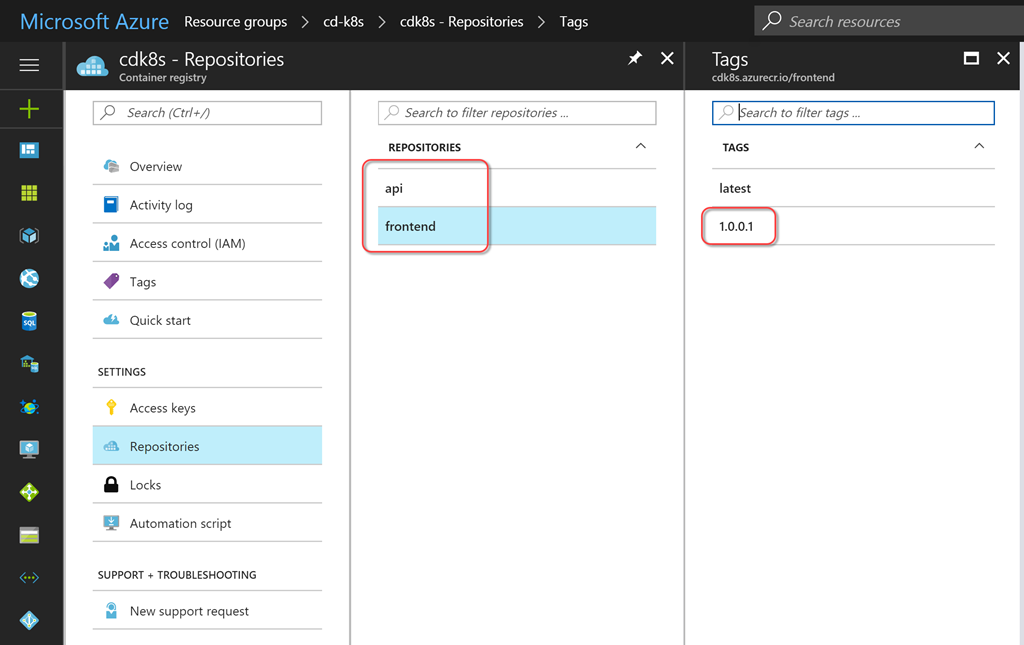


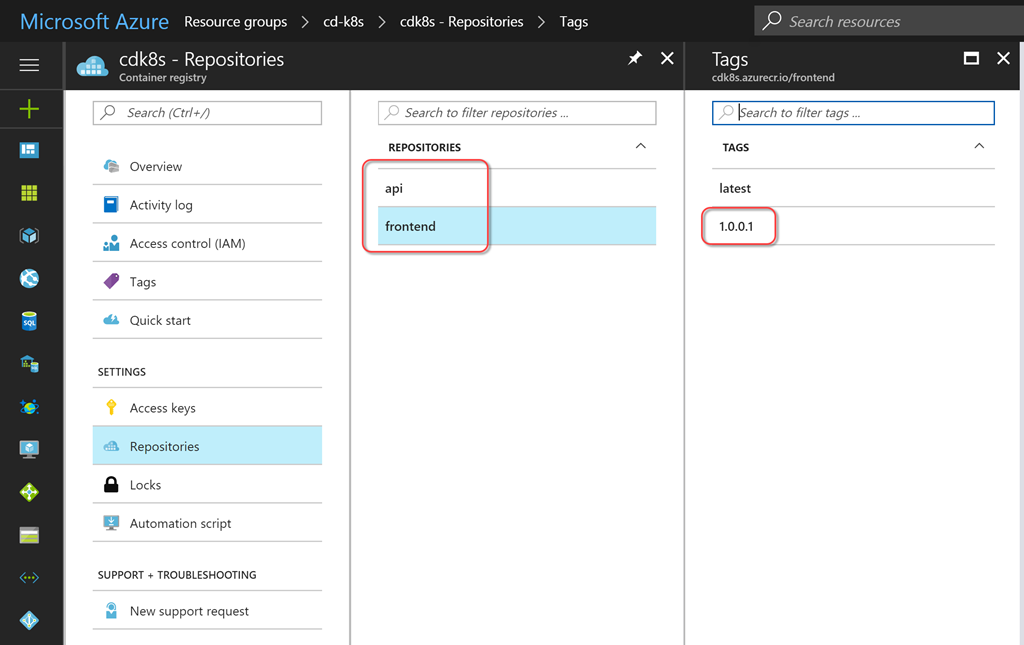


If the service/deployment definitions and the configuration have not changed, the first three k8s tasks are essentially no-ops; only the set commands actually do anything. But that is great, because the service/deployment and configuration files can be applied idempotently! They change things when necessary and leave everything alone otherwise, which is ideal for repeatable releases.

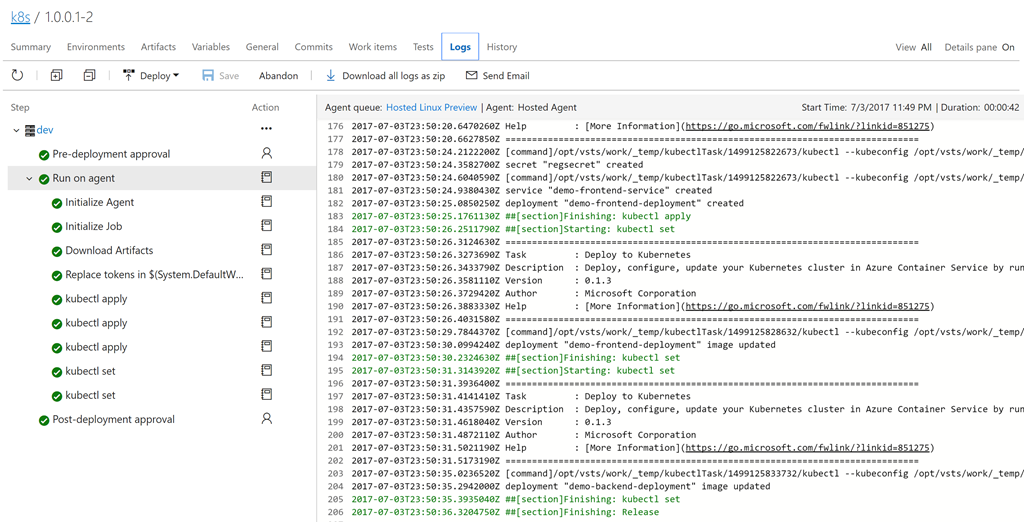

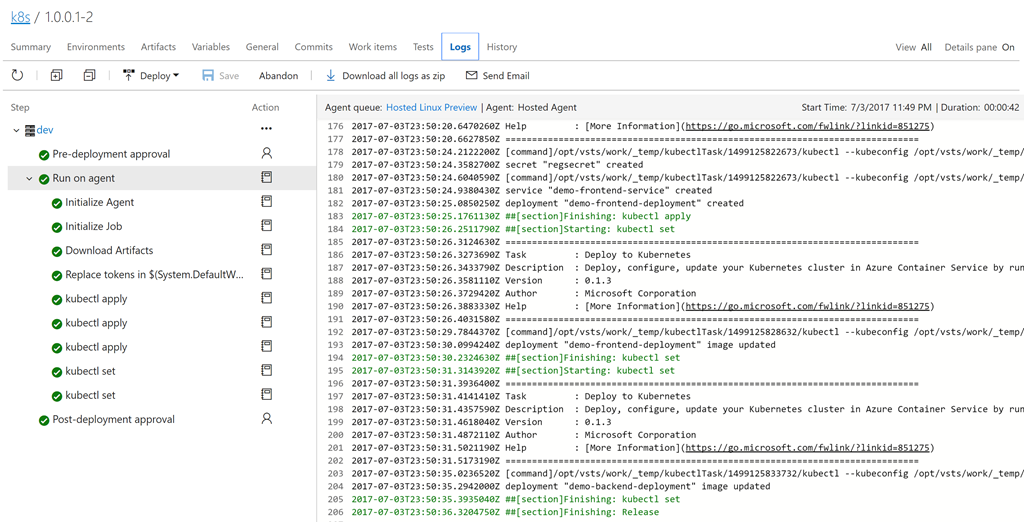

Save the definition. Click + Release to create a new release. Click the release number (something like 1.0.0.1-1) to open it, then click Logs to view the logs.

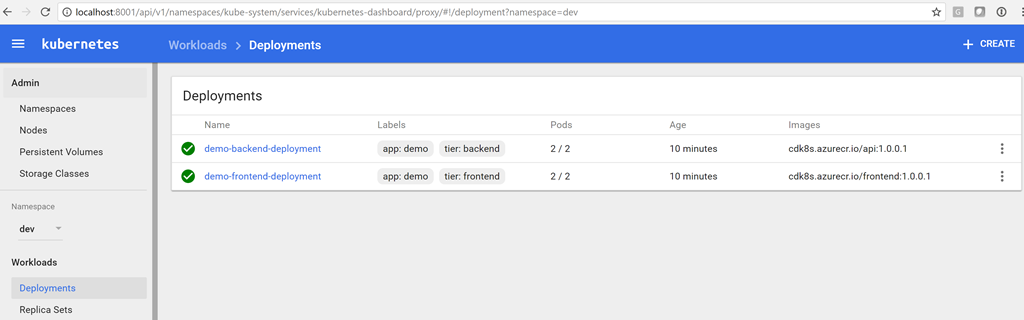

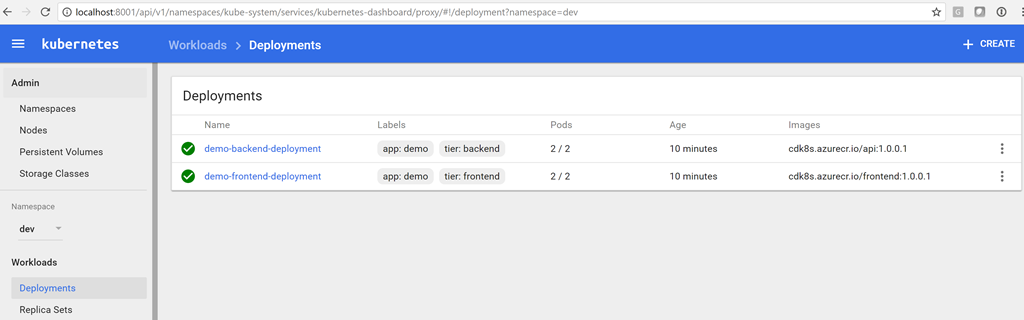

When the release completes, you can see the deployment in the Kubernetes dashboard. Use the following command to open the dashboard:

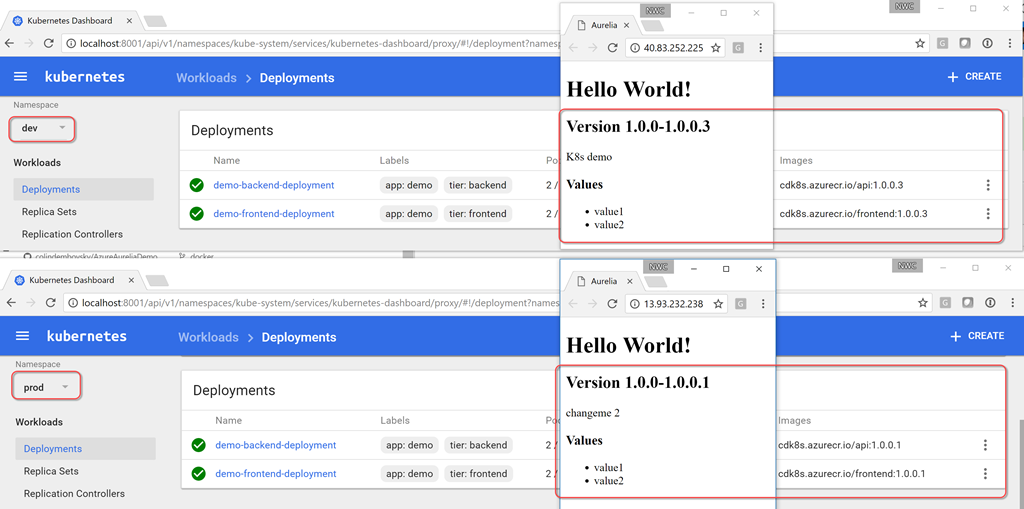

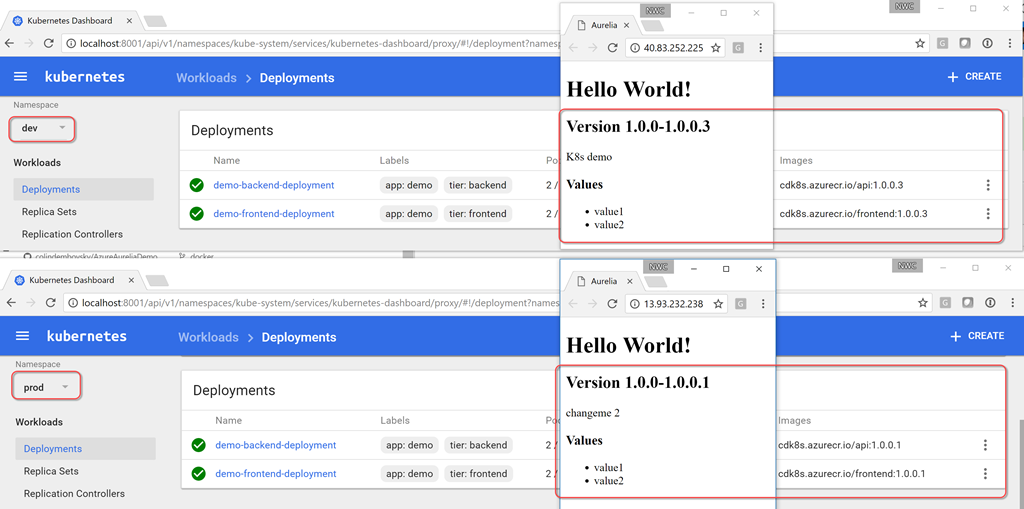

The last argument is the path to the SSH key file that was generated when the cluster was created (substitute your own path). Now you can open http://localhost:8001/ui in the browser. In the namespace drop-down menu, select dev and click Deployments. You should see two successful deployments, each with two healthy pods. You can also see the images that are running in the deployments. Note the build number used as the tag!

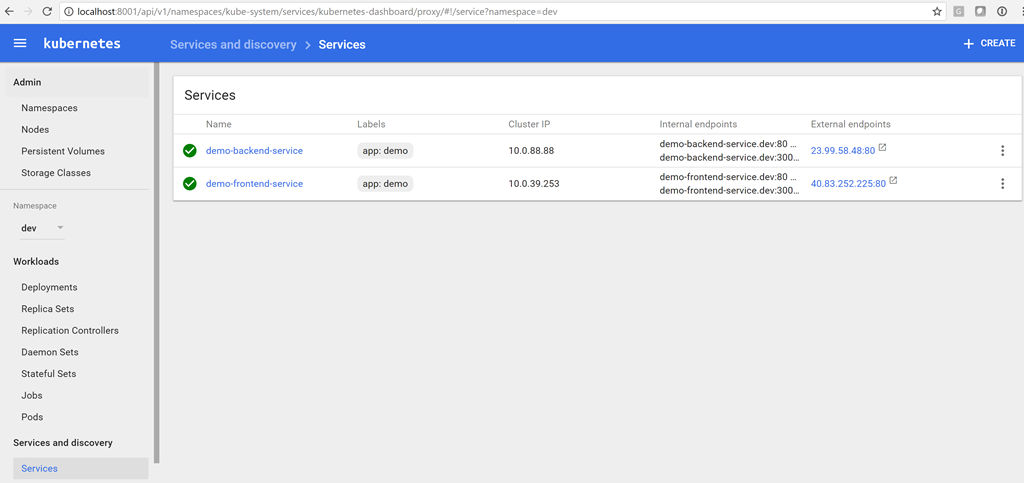

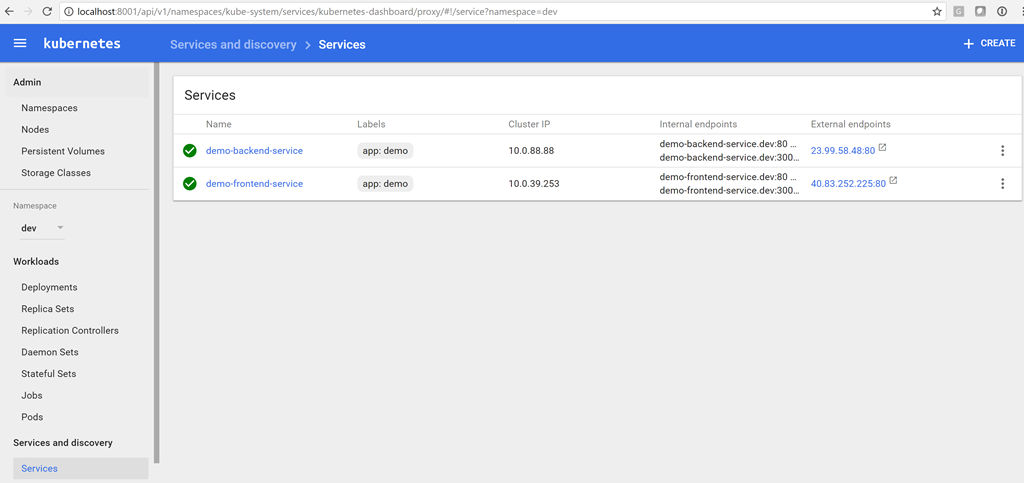

To see the services, click Services.

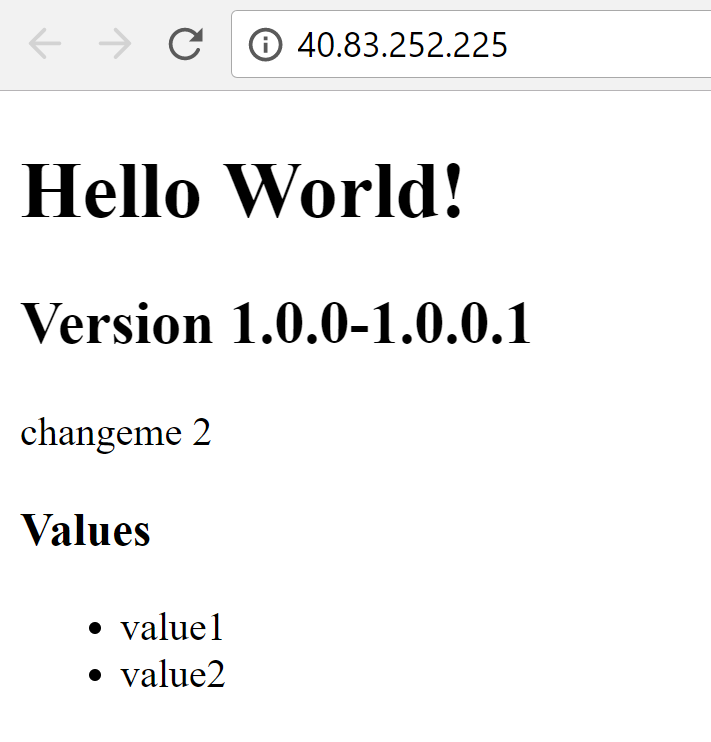

We now have the IP address of the backend service, so we can update the BackendServiceIP variable in the release. Then we can queue a new release, and this time the correct IP address of the backend service is written to the frontend configuration (in our case, 23.99.58.48). We can enter the IP address of the frontend service in the browser and check that everything now works!

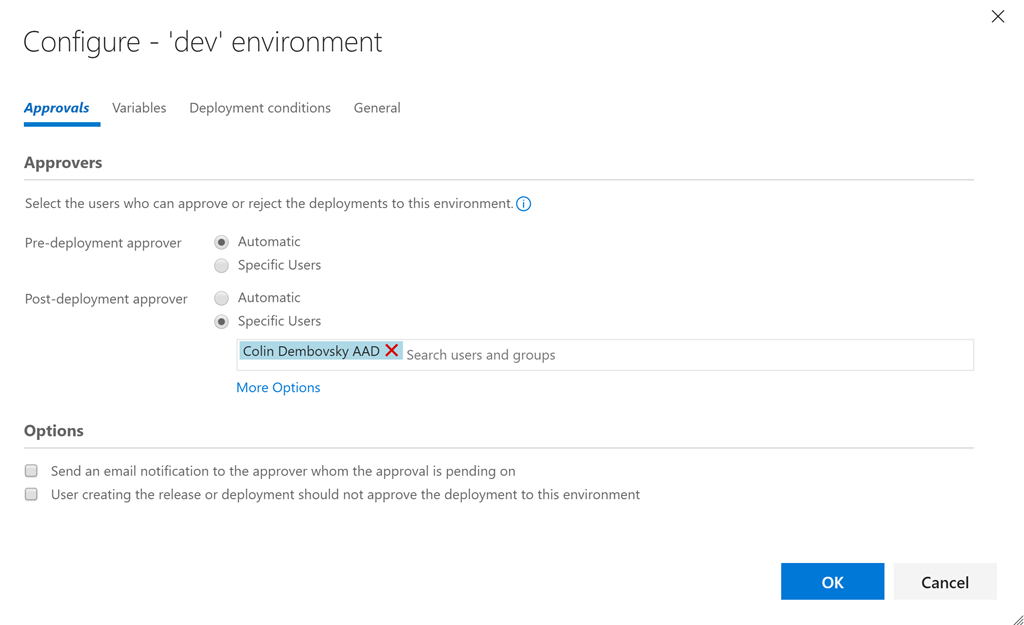

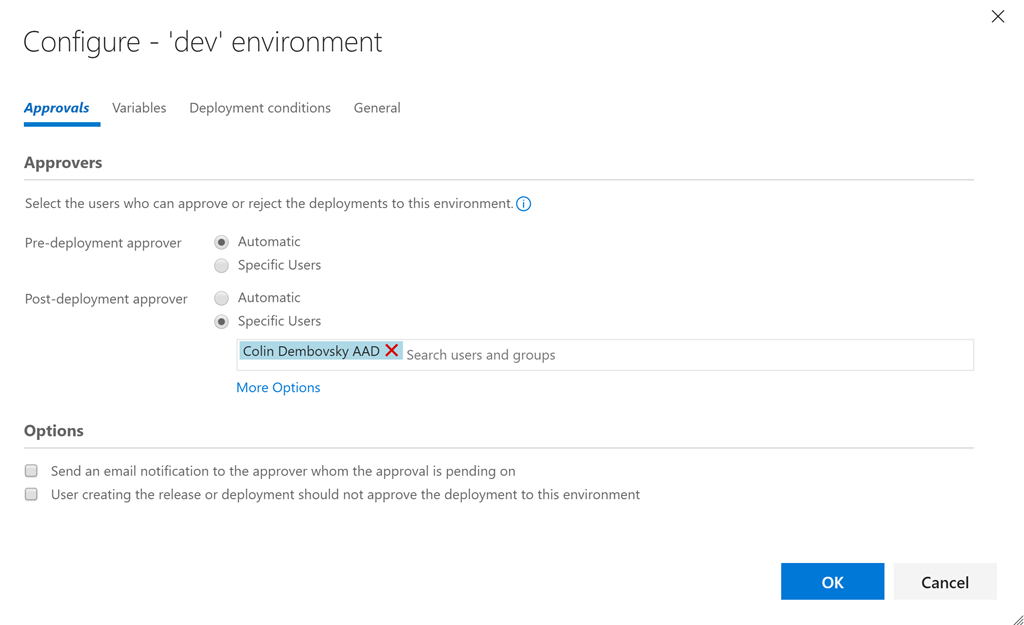

Now that we have verified that the dev environment works, we can go back to the release definition, clone the dev environment and name the copy prod. Specify a post-deployment approval for dev (or a pre-deployment approval for prod) so that there is a checkpoint between the two environments.

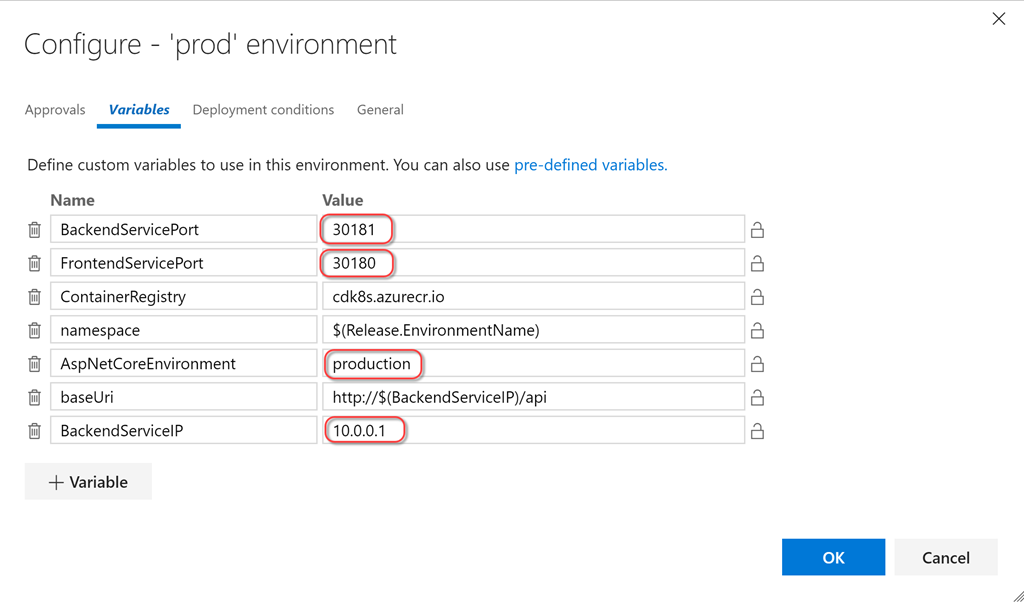

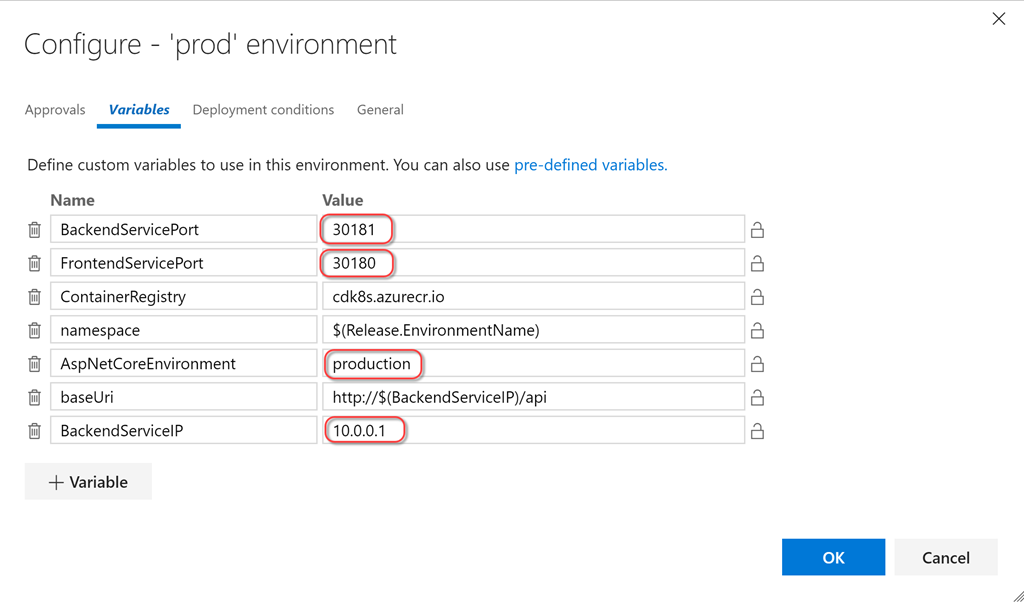

Then we simply change the node ports and the values of the AspNetCoreEnvironment and BackendServiceIP variables, and we are done! Of course, we first have to deploy to the prod namespace once before we can see the IP address that k8s/Azure assigns to the prod backend service. Then we re-run the release to update the configuration.


Read the translation of the second part of the article DevOps with Kubernetes and VSTS.

A series of articles "We are talking about containers":

1. Containers for rapid deployment.

2. DevOps with Kubernetes and VSTS. Part 1: Local history.

3. DevOps with Kubernetes and VSTS. Part 2: Cloud history.

4. A node with infinite capacity for Kubernetes.


In the first part, I demonstrated an approach to developing multi-container applications using Kubernetes (k8s), or rather minikube, a full-fledged k8s environment that runs a single node in a virtual machine on your laptop. In that article I cloned this repository (make sure you are on the docker branch) with two containers: a DotNet Core API and a frontend SPA (Aurelia, served as static files from a DotNet Core app). I showed how to build the containers locally and run them in minikube, and how to use ConfigMaps to handle configuration.

In this article I will show how to move from local development to CI/CD and create a pipeline for automated build and release using VSTS. We will create a container registry and a container service in Azure, using k8s as the orchestrator.

CI/CD Pipeline for Kubernetes in VSTS

I strongly recommend Nigel Poulton's excellent introductory course Getting Started with Kubernetes on PluralSight (translator's note: it is in English and requires a paid subscription), as well as the article by Atul Malaviya from Microsoft. Nigel's course is an excellent deep dive into Kubernetes for beginners, and Atul's article helped me understand how VSTS and k8s interact, but neither the course nor the article covered the entire pipeline. In particular, neither explained how image updates are handled in a CI/CD pipeline. So I had to run a series of experiments myself and write this article!

Deploy your k8s environment using Azure Container Services

k8s can run locally, in AWS, in Google Cloud or in Azure. We will use Azure Container Service. However, the CI/CD pipeline that I demonstrate in this article does not depend on a specific cloud host; it works with any k8s cluster. We will also create a private container registry in Azure, but again, you can use any container registry you like.

You can also create a k8s cluster in the Azure portal. However, the Azure CLI does it faster and saves the keys you need to connect, so I decided to use it. I will use Bash for Windows with kubectl, but any platform with kubectl and the Azure CLI will do.

Here are the commands:

    # set some variables
    export RG="cd-k8s"
    export ClusterName="cdk8s"
    export location="westus"
    # create a folder for the cluster ssh-keys
    mkdir -p ~/cdk8s

    # login and create a resource group
    az login
    az group create --location $location --name $RG

    # create an ACS k8s cluster
    az acs create --orchestrator-type=kubernetes --resource-group $RG --name=$ClusterName --dns-prefix=$ClusterName --generate-ssh-keys --ssh-key-value ~/cdk8s/id_rsa.pub --location $location --agent-vm-size Standard_DS1_v2 --agent-count 2

    # create an Azure Container Registry
    az acr create --resource-group $RG --name $ClusterName --location $location --sku Basic --admin-enabled

    # configure kubectl
    az acs kubernetes get-credentials --name $ClusterName --resource-group $RG --file ~/cdk8s/kubeconfig --ssh-key-file ~/cdk8s/id_rsa
    export KUBECONFIG="$HOME/cdk8s/kubeconfig"

    # test connection
    kubectl get nodes
    NAME                    STATUS                     AGE       VERSION
    k8s-agent-96607ff6-0    Ready                      17m       v1.6.6
    k8s-agent-96607ff6-1    Ready                      17m       v1.6.6
    k8s-master-96607ff6-0   Ready,SchedulingDisabled   17m       v1.6.6

Notes:

- Lines 2–4: create the variables.

- Line 6: create a directory for the ssh keys and the kubeconfig file.

- Line 9: log in to Azure (you are asked to open a login page in the browser; if you do not have an Azure subscription, create a free one now!).

- Line 10: create a resource group to hold all the resources we are about to create.

- Line 13: deploy the k8s cluster in the resource group we just created, with the name we chose; generate ssh keys and place them in the specified directory; we ask for two agents (nodes) of the specified virtual machine size.

- Line 16: create an Azure Container Registry in the same resource group, with admin access enabled.

- Line 19: get the credentials for connecting to the cluster with kubectl; use the generated ssh key and save the credentials to the specified kubeconfig file.

- Line 20: tell kubectl to use this configuration file instead of the default one (which may contain other k8s clusters or the minikube configuration).

- Line 23: test the connection to the cluster.

- Lines 24–27: the connection works!
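One detail about the KUBECONFIG export in the commands above: the shell does not expand a tilde inside double quotes, so a value such as "~/cdk8s/kubeconfig" is passed on literally rather than as a path under your home directory. The following is a minimal sketch of the difference, assuming the same directory layout as above; using $HOME avoids the problem.

```shell
# Sketch: a tilde inside double quotes is NOT expanded by the shell.
quoted="~/cdk8s/kubeconfig"         # stays the literal string ~/cdk8s/kubeconfig
expanded="$HOME/cdk8s/kubeconfig"   # $HOME is expanded inside double quotes

echo "quoted:   $quoted"
echo "expanded: $expanded"

# Safer form of the export step (same path as in the article)
export KUBECONFIG="$HOME/cdk8s/kubeconfig"
```

After this export, `kubectl get nodes` reads the intended file regardless of the current directory.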

If you launch the browser, navigate to the Azure portal and open your resource group, you will see how much has been created by these simple commands:

Don't worry, you will not have to manage these resources yourself: Azure and the k8s cluster take care of that!

Namespaces

Before we create the build and release for our container applications, let's look at the promotion model. Typically it looks something like this: development → user acceptance testing → production (Dev → UAT → Prod). With k8s, minikube is the local development environment, which is great: it is a full-featured k8s cluster on your laptop, so you can run your code locally and use k8s constructs such as ConfigMaps. But what about UAT and Prod? One option is to deploy separate clusters, but that can be costly. You can also share cluster resources using namespaces.

Namespaces in k8s can act as security boundaries, but they can also be isolation boundaries. I can deploy new versions of my application to the dev namespace, which shares the cluster's resources with the prod namespace but remains completely invisible to it (it has its own IP addresses and so on). Of course, you should not run load tests in this configuration, since they would consume resources that the production applications need. The concept is reminiscent of deployment slots in Azure App Services, which are used to test applications seamlessly before moving them to production.

When you create a k8s cluster, you get the default namespace in addition to the kube-system and kube-public namespaces (which hold the k8s pods). Unless you specify otherwise, any services, deployments or pods that you create go into this namespace. We will create two additional namespaces, dev and prod. Here is our yaml:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: dev
    ---
    apiVersion: v1
    kind: Namespace
    metadata:
      name: prod

This file contains the definitions of both namespaces. Run the apply command to create the namespaces. Once that is done, you can list all namespaces in the cluster:

    kubectl apply -f namespaces.yml
    namespace "dev" created
    namespace "prod" created

    kubectl get namespaces
    NAME          STATUS    AGE
    default       Active    27m
    dev           Active    20s
    kube-public   Active    27m
    kube-system   Active    27m
    prod          Active    20s

Setting a Secret for the Container Registry

Before we get to the code, there is one last piece of setup: when the k8s cluster pulls the images it is going to run, it must pull them from the container registry we created. The registry is private, so access to it is secured. We therefore need to configure a registry secret that we can simply reference in our deployment yaml files. Here are the commands:

    az acr credential show --name $ClusterName --output table
    USERNAME    PASSWORD                          PASSWORD2
    ----------  --------------------------------  --------------------------------
    cdk8s       some-long-key-1                   some-long-key-2

    kubectl create secret docker-registry regsecret --docker-server=$ClusterName.azurecr.io --docker-username=$ClusterName --docker-password=<some-long-key-1> --docker-email=admin@azurecr.io
    secret "regsecret" created

The first command uses az to get the keys for the admin user (the admin user name is the same as the name of the container registry, so since I created cdk8s.azurecr.io, the admin user name is cdk8s). Pass one of the keys (it does not matter which) as the password. The email address is not used, so you can specify anything. We now have a registry secret named regsecret that we can reference when deploying to the k8s cluster. K8s will use this secret to authenticate to the registry.
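If you would rather not copy the key by hand, the table output can be parsed in a script. The sketch below is only an illustration: `first_password` is a hypothetical helper, and the sample text mirrors the placeholder output shown above rather than real credentials.

```shell
# Sketch: pick the first admin password out of the table printed by
# `az acr credential show --output table`.
# "first_password" is a hypothetical helper, not part of the Azure CLI.
first_password() {
  # skip the header row and the dashed separator, then print column 2
  awk 'NR > 2 && NF >= 2 { print $2; exit }'
}

# Sample output with the same placeholder keys as in the article
sample='USERNAME    PASSWORD         PASSWORD2
----------  ---------------  ---------------
cdk8s       some-long-key-1  some-long-key-2'

key="$(printf '%s\n' "$sample" | first_password)"
echo "$key"

# Against a live registry (not executed here) the helper would be used as:
#   key="$(az acr credential show --name "$ClusterName" --output table | first_password)"
```

The resulting value can then be passed to `--docker-password` when creating the secret.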

Configuring VSTS Endpoints

We have set up a k8s cluster and a container registry. Now we add them as endpoints in VSTS so that we can push containers to the registry during the build and run commands against the k8s cluster during the release. Endpoints let us abstract authentication away so that credentials are not stored directly in our release definitions. You can also assign roles to restrict who can view and use the endpoints.

Start VSTS and open a team project (or just create a new one). Go to the team project and click the gear icon to open the settings node for this team project. Click Services. Click + New Services and create a new Docker Registry endpoint. Enter the same credentials that you used to create a registry secret in k8s using kubectl:

Now create the k8s endpoint. Enter the URL https://$ClusterName.$location.cloudapp.azure.com (ClusterName and location are the variables we used when creating the cluster). Copy the entire contents of the file ~/cdk8s/kubeconfig (you may have named it differently), which was created by the az acs kubernetes get-credentials command, into the credentials text field.
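If you are unsure of the cluster URL, it can also be read from the kubeconfig file, which records it in the `server:` field of the cluster entry. The following is a minimal sketch; `server_url` is a hypothetical helper and the sample kubeconfig is made up for illustration (a real file contains certificates and user entries as well).

```shell
# Sketch: read the API server URL from a kubeconfig file.
# "server_url" is a hypothetical helper; the sample file below is illustrative only.
server_url() {
  # print the value of the first "server:" key found in the file
  awk '$1 == "server:" { print $2; exit }' "$1"
}

tmp="$(mktemp)"
cat > "$tmp" <<'EOF'
apiVersion: v1
kind: Config
clusters:
- name: cdk8s
  cluster:
    server: https://cdk8s.westus.cloudapp.azure.com
EOF

url="$(server_url "$tmp")"
echo "$url"
rm -f "$tmp"
```

With your own cluster, pass the path of the kubeconfig you saved earlier instead of the temporary file.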

We now have two endpoints that we can use in build and release definitions.

Build

Now we can create a build that compiles and tests our code, creates the docker images and pushes them to the container registry with appropriate tags. Click Build & Release, then Builds to open the build hub. Create a new build definition. Select the ASP.NET Core template and click Apply. Make the following settings:

- Tasks → Process: enter a name, for example k8s-demo-CI, and select the Hosted Linux Preview queue.

- Optional: change the build number format to 1.0.0$(rev:.r) so that your builds are numbered 1.0.0.x.

- Tasks → Get Sources: select the GitHub repository, authenticating with OAuth or a PAT. Select AzureAureliaDemo and set docker as the default branch. If you are following along, you may need to fork the repository (or simply import it into VSTS).

- Tasks → DotNet Restore: leave unchanged.

- Tasks → DotNet Build: add --version-suffix $(Build.BuildNumber) to the build arguments so that the version matches the build number.

- Tasks → DotNet Test: disable this task, since our solution has no DotNet tests (if you do have tests, you can of course re-enable it).

- Tasks → add an npm task. Set the working directory to frontend and make sure the command is install.

- Tasks → add a Command Line task. Set the tool to node, the arguments to node_modules/aurelia-cli/bin/aurelia-cli.js test and the working directory to frontend. This runs the Aurelia tests.

- Tasks → add a Publish Test Results task. Set Test Results Files to test*.xml and Search Folder to $(Build.SourcesDirectory)/frontend/testresults. This publishes the Aurelia test results.

- Tasks → add a Publish Code Coverage task. Set Coverage Tool to Cobertura, Summary File to $(Build.SourcesDirectory)/frontend/reports/coverage/cobertura.xml and Report Directory to $(Build.SourcesDirectory)/frontend/reports/coverage/html. This publishes the Aurelia test coverage data.

- Tasks → add a Command Line task. Set the tool to node, the arguments to node_modules/aurelia-cli/bin/aurelia-cli.js build --env prod and the working directory to frontend. This compiles, processes and packages the Aurelia SPA.

- Tasks → DotNet Publish: set the arguments to -c $(BuildConfiguration) -o publish and uncheck Zip Published Projects.

- Tasks → add a Docker Compose task. Set Container Registry Type to Azure Container Registry, and for the subscription and Azure Container Registry select the registry for which we created the endpoint earlier. Set Additional Docker Compose Files to docker-compose.vsts.yml, Action to Build service images and Additional Image Tags to $(Build.BuildNumber), so that the build number is used as the image tag.

- Clone the Docker Compose task. Name the clone Push service images and set its action to Push service images. Check the Include Latest Tag box.

- Tasks → Publish Artifact: set both Path to Publish and Artifact Name to k8s. This publishes the k8s yaml files so that they are available to the release.
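The task list above corresponds roughly to the following command sequence. This is a hedged sketch for orientation, not what the hosted agent literally runs: the fixed build number, the Release configuration and the base file docker-compose.yml are assumptions, and the extra image tagging done by the Docker Compose task is not reproduced. By default the script only prints each step; set RUN=1 to execute them on a machine with dotnet, npm, node and docker-compose installed.

```shell
# Sketch: the build definition as a local dry run.
BUILD_NUMBER="1.0.0.1"   # assumed stand-in for $(Build.BuildNumber)
CONFIG="Release"         # assumed stand-in for $(BuildConfiguration)
AU="node_modules/aurelia-cli/bin/aurelia-cli.js"

# step DIR CMD...: print the command; run it in DIR only when RUN=1
step() {
  dir="$1"; shift
  echo "+ [$dir] $*"
  if [ "${RUN:-0}" = "1" ]; then (cd "$dir" && "$@"); fi
}

build_steps() {
  step . dotnet restore
  step . dotnet build --version-suffix "$BUILD_NUMBER"
  step frontend npm install
  step frontend node "$AU" test
  step frontend node "$AU" build --env prod
  step . dotnet publish -c "$CONFIG" -o publish
  # docker-compose.yml as the base file is an assumption
  step . docker-compose -f docker-compose.yml -f docker-compose.vsts.yml build
  step . docker-compose -f docker-compose.yml -f docker-compose.vsts.yml push
}

build_steps
```

Printing the steps first makes it easy to compare a local run with the order of tasks in the build definition.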

The final task list should look like this:

You can now click Save and Queue to save the build and queue it. When the build completes, you will see a summary of the tests and coverage.

You can also look at the contents of your container registry to see the newly pushed service images, tagged with the build number.

Release

Now we can configure the release that will create or update the services we need. This requires configuration management. We could simply put the configuration in the code, but then sensitive data (passwords, for example) would end up in version control. I prefer to "tokenize" any configuration so that release management puts the sensitive data in place, outside of version control. VSTS Release Management lets you create secrets for individual environments or releases, and you can also create them in reusable variable groups. In addition, seamless integration with Azure Key Vault is now supported.

To replace the tokens with environment-specific values, we need a token replacement task. Fortunately, there is the cross-platform ReplaceTokens task from the Colin's ALM Corner Build & Release Tasks extension, which I got from the VSTS Marketplace. Click the link to go to the extension page, then click Install to install the extension into your account.

On the build summary page, scroll to the Deployments section on the right and click the Create release link. You can also click Releases and create a new definition from there. Start with an empty template, then select your team project and the build you just created as the source build. Check the Continuous Deployment checkbox so that a release is created automatically for every successful build.

Name the definition k8s or something similarly descriptive. On the General tab, change the release number format to $(Build.BuildNumber)-$(rev:r) so that you can always tell the build number from the release name. Go back to the Environments section and rename Environment 1 to dev. Click the Run on Agent link and make sure that Hosted Linux Preview is selected in the Deployment queue field.

Add the following tasks:

- Replace Tokens.

  - Source Path: browse to the k8s directory.

  - Target File Pattern: *-release.yml. Tokens are then replaced in every yml file whose name ends with -release. There are three such files: the service/deployment files for the backend and the frontend, and the frontend configuration file. The task finds the tokens in a file (marked with the prefix and postfix __) and looks for variables with the same names. Each token is replaced by the value of its variable. We will create the variables in a moment.

- Kubernetes Task 1 (apply the frontend configuration).

  - Configure the k8s connection with the endpoint you created earlier. You also need to set up the connection to the Azure Container Registry. This applies to all the Kubernetes tasks. In the Command field, select apply, check Use Configuration Files and select the file k8s/app-demo-frontend-config-release.yml with the file picker. Enter --namespace $(namespace) in the arguments text box.

- Kubernetes Task 2 (apply the backend service/deployment definition).

  - Use the same connection settings for the k8s service and the Azure Container Registry. This time, enter regsecret in the Secret Name field (this is the name of the secret we created when setting up the k8s cluster, and the name we reference in the imagePullSecrets setting of the deployment definitions). Check the Force update secret box. This ensures that the value of the k8s secret matches the key from Azure. We could also skip this option, since we created the secret manually.

  - In the Command field, select apply, check Use Configuration Files and select the file k8s/app-demo-backend-release.yml with the file picker. Enter --namespace $(namespace) in the arguments text box.

- Kubernetes Task 3 (apply the frontend service/deployment definition).

  - The settings are the same as for the previous task, except that you select the file k8s/app-demo-frontend-release.yml.

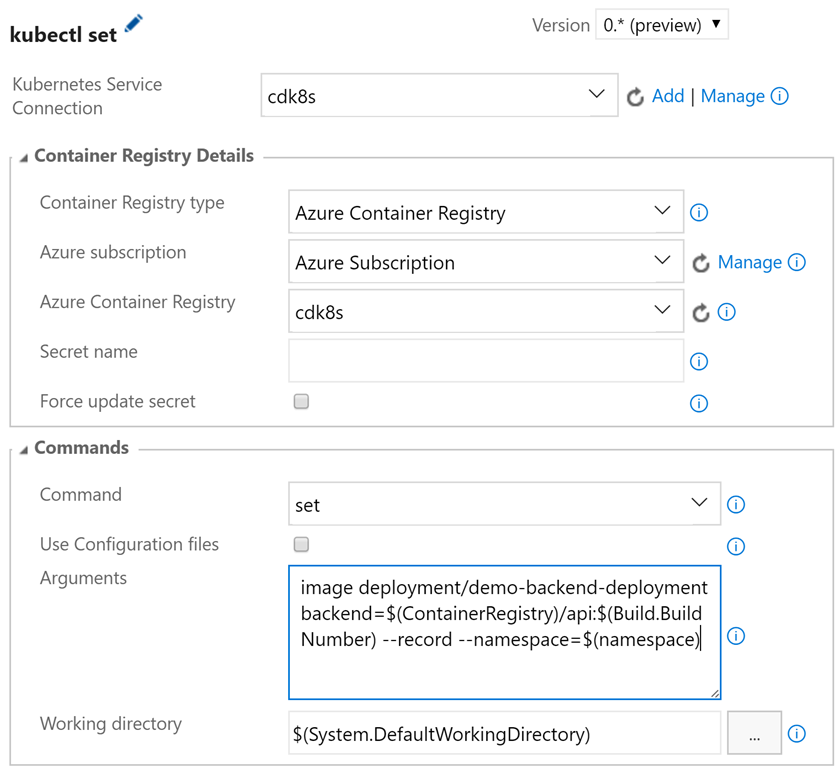

- Kubernetes Task 4 (update server side image).

- Set the same connection settings for the k8s service and the Azure Container Registry. The secret is not required here. In the Command field, select set, and in the Arguments field, select image deployment / demo-backend-deployment backend = $ (ContainerRegistry) / api: $ (Build.BuildNumber) --record --namespace = $ (namespace).

- This will update the version (tag) of the used container image. K8s will perform a sequential update, launching new containers and disabling old ones, and the service will be operational all the time.

- Kubernetes Task 5 (update the frontend image).

- The parameters are the same as in the previous task, except that in the Arguments field you specify image deployment/demo-frontend-deployment frontend=$(ContainerRegistry)/frontend:$(Build.BuildNumber) --record --namespace=$(namespace).

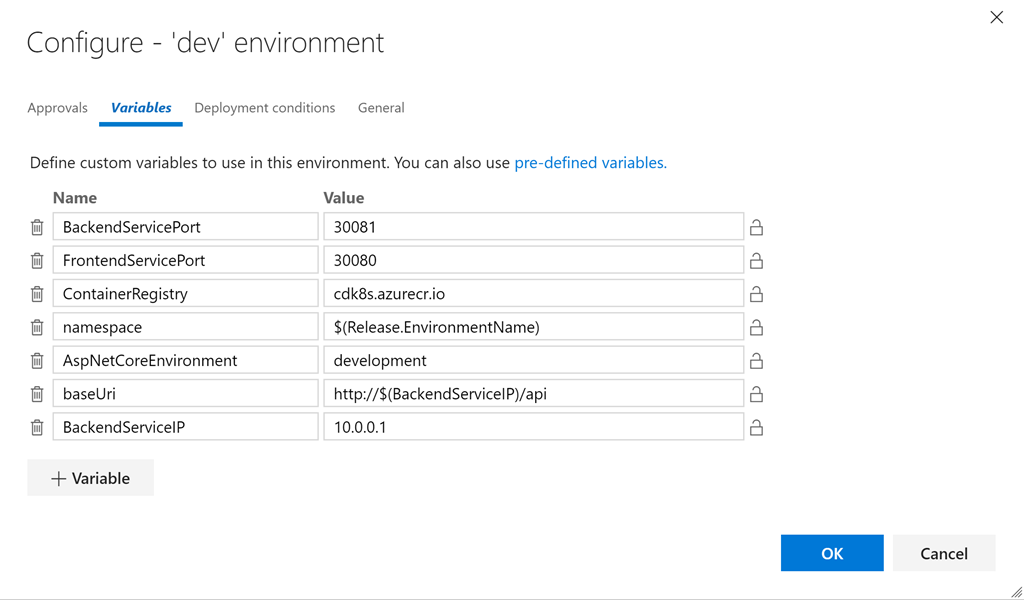

- Click the "..." button on the dev environment card and choose Configure Variables. Set the following values:

- BackendServicePort: 30081

- FrontendServicePort: 30080

- ContainerRegistry: <your container registry>.azurecr.io

- namespace: $(Release.EnvironmentName)

- AspNetCoreEnvironment: development

- baseUri: http://$(BackendServiceIP)/api

- BackendServiceIP: 10.0.0.1

This sets the environment-specific values for all variables in the yml files. The Replace Tokens task will write the necessary values for us to the files. Let's quickly take a look at one of the tokenized files (tokenized lines are highlighted):

    apiVersion: v1
    kind: Service
    metadata:
      name: demo-frontend-service
      labels:
        app: demo
    spec:
      selector:
        app: demo
        tier: frontend
      ports:
        - protocol: TCP
          port: 80
          nodePort: __FrontendServicePort__
      type: LoadBalancer
    ---
    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: demo-frontend-deployment
    spec:
      replicas: 2
      template:
        metadata:
          labels:
            app: demo
            tier: frontend
        spec:
          containers:
            - name: frontend
              image: __ContainerRegistry__/frontend
              ports:
                - containerPort: 80
              env:
                - name: "ASPNETCORE_ENVIRONMENT"
                  value: "__AspNetCoreEnvironment__"
              volumeMounts:
                - name: config-volume
                  mountPath: /app/wwwroot/config/
              imagePullPolicy: Always
          volumes:
            - name: config-volume
              configMap:
                name: demo-app-frontend-config
          imagePullSecrets:
            - name: regsecret

A note on the value of BackendServiceIP: we use 10.0.0.1 as a placeholder, because Azure assigns the IP address to this service only when k8s starts the backend service (you will see the public IP address in the resource group in the Azure portal). We need to run the release once to create the services, then update the variable with the real IP address so that the frontend service works. We also use $(Release.EnvironmentName) as the value for namespace, so for dev (and later for prod) the environment names must match the namespaces we created exactly (including case).
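To make the token replacement concrete, here is a minimal sketch of what happens to the `__Name__` placeholders in the file above, written with sed. It is not the Replace Tokens task's actual implementation: the function name replace_tokens and the registry name myregistry.azurecr.io are hypothetical, and the other values are taken from the dev variables listed earlier.

```shell
# replace_tokens: read a tokenized file on stdin and write the resolved text to stdout.
# Hypothetical helper that mimics the effect of the Replace Tokens task for three tokens.
replace_tokens() {
  sed -e "s|__FrontendServicePort__|$FrontendServicePort|g" \
      -e "s|__ContainerRegistry__|$ContainerRegistry|g" \
      -e "s|__AspNetCoreEnvironment__|$AspNetCoreEnvironment|g"
}

# Values for the dev environment (the registry name is an assumed placeholder).
FrontendServicePort=30080
ContainerRegistry=myregistry.azurecr.io
AspNetCoreEnvironment=development

# Resolve two of the tokenized lines from the frontend definition.
printf '%s\n' \
  'nodePort: __FrontendServicePort__' \
  'image: __ContainerRegistry__/frontend' | replace_tokens
```

Running the same function over the whole yml file before the apply tasks would give kubectl a file with concrete values for the target environment.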

If the service/deployment definitions and the configuration have not changed, the first three k8s tasks are essentially no-ops. Only the set command has an effect. That is exactly what we want, because the service/deployment and configuration files can be applied idempotently. They change things when necessary and cause no disruption otherwise, which is ideal for recurring releases!
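For orientation, the five Kubernetes tasks correspond roughly to the kubectl invocations sketched below. This is an approximation under stated assumptions: the function release_commands is hypothetical, it only prints the commands instead of running them, and the real tasks additionally handle the cluster connection and the registry secret. The registry name and build number in the example call are placeholders.

```shell
# release_commands: print (not run) kubectl commands that roughly mirror the five release tasks.
# Hypothetical helper; arguments: namespace, container registry, build number.
release_commands() {
  ns="$1"; registry="$2"; build="$3"
  # Tasks 1-3: apply the frontend config and the backend/frontend service and deployment definitions.
  for f in app-demo-frontend-config-release.yml \
           app-demo-backend-release.yml \
           app-demo-frontend-release.yml; do
    echo "kubectl apply -f k8s/$f --namespace $ns"
  done
  # Tasks 4-5: point each deployment at the image tagged with the build number.
  echo "kubectl set image deployment/demo-backend-deployment backend=$registry/api:$build --record --namespace=$ns"
  echo "kubectl set image deployment/demo-frontend-deployment frontend=$registry/frontend:$build --record --namespace=$ns"
}

# Example with placeholder values for the dev environment.
release_commands dev myregistry.azurecr.io 1.0.0.1
```

Re-running the apply lines against unchanged files leaves the cluster as it is, so in a typical release only the two set image lines trigger a rollout.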

Save the definition. Click + Release to create a new release. Click on the release number (it will be something like 1.0.0.1-1) to open it. Click logs to view the logs.

When the release is complete, you will see the deployments in the Kubernetes dashboard. Use the following command to open the dashboard:

    az acs kubernetes browse -n $ClusterName -g $RG --ssh-key-file ~/cdk8s/id_rsa

    Proxy running on 127.0.0.1:8001/ui
    Press CTRL+C to close the tunnel...
    Starting to serve on 127.0.0.1:8001

The last argument is the path to the SSH key file that is generated when the cluster is created (substitute your actual path). Now you can open http://localhost:8001/ui in the browser. In the namespace drop-down menu, select dev and click Deployments. You should see two successful deployments with two healthy pods in each. You can also see the images that are running in each deployment. Note the build number used as the tag!

To see the services, click Services.

We now have the IP address of the backend service, so we can update the BackendServiceIP variable in the release. Then we can queue a new release, and this time the correct IP address of the backend service will be written to the frontend configuration (in our case, 23.99.58.48). We can then enter the IP address of the frontend service in the browser and make sure that everything works!
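Besides the dashboard and the Azure portal, the assigned address can also be read with kubectl. The sketch below assumes the backend service is named demo-backend-service (by analogy with demo-frontend-service; the backend definition is not shown here) and that kubectl is already configured for the cluster. The function name backend_ip is hypothetical.

```shell
# backend_ip: print the external IP assigned to the backend service in a namespace.
# Assumes the service is called demo-backend-service (a guess mirroring demo-frontend-service).
backend_ip() {
  kubectl get service demo-backend-service \
    --namespace "$1" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage (requires access to the cluster):
#   backend_ip dev
```

The printed value is what goes into the BackendServiceIP variable before queuing the next release.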

Creating a production environment

Now that we have verified that the dev environment works, we can go back to the release definition, clone the dev environment and name the copy prod. Set a post-deployment approval for dev (or a pre-deployment approval for prod) so that there is a checkpoint between the two environments.

Then we simply change the node ports and the values of the AspNetCoreEnvironment and BackendServiceIP variables, and we are done! Of course, we first need to deploy to the prod namespace before we can see the IP address that k8s/Azure assigns to the prod backend. Then we need to create another release to update the configuration.

nodePort k8s , , , ( ).

--namespace , , Pull Request vsts-tasks Github, !

CI/CD

, dev prod CI/CD , . «K8s demo» . , , , , dev. dev ( 1.0.0.3 , , 1.0.0.1), prod 1.0.0.1.

dev , prod , prod 1.0.0.3.

json , ( , , ).

Conclusion

k8s . yml « » . , configMaps . Azure CLI k8s Azure . VSTS k8s CI/CD, . minikube, k8s , , (Dev/CI/CD).

, CI/CD ! k8s . , k8s !

Happy k8sing!

PS ( Quantum Quintum ) .

Source: https://habr.com/ru/post/337708/
