"Man" of art: is artificial intelligence capable of creating?

As neural networks develop, they find ever more diverse applications. They help train Tesla's Autopilot, process photos in apps like Prisma, and recognize faces in security systems. Artificial intelligence is being taught to diagnose diseases. It has even helped win elections.

But there is one area that has traditionally been considered uniquely human: creativity. Now even this is being questioned. Lee Sedol, who lost to AlphaGo, admitted: “The defeat made me doubt human creativity. When I saw how AlphaGo plays, I wondered how well I play myself.” So in today's post, let's talk about whether robots can step into the territory of art, the space of creativity, with all the emotion and perception that implies.

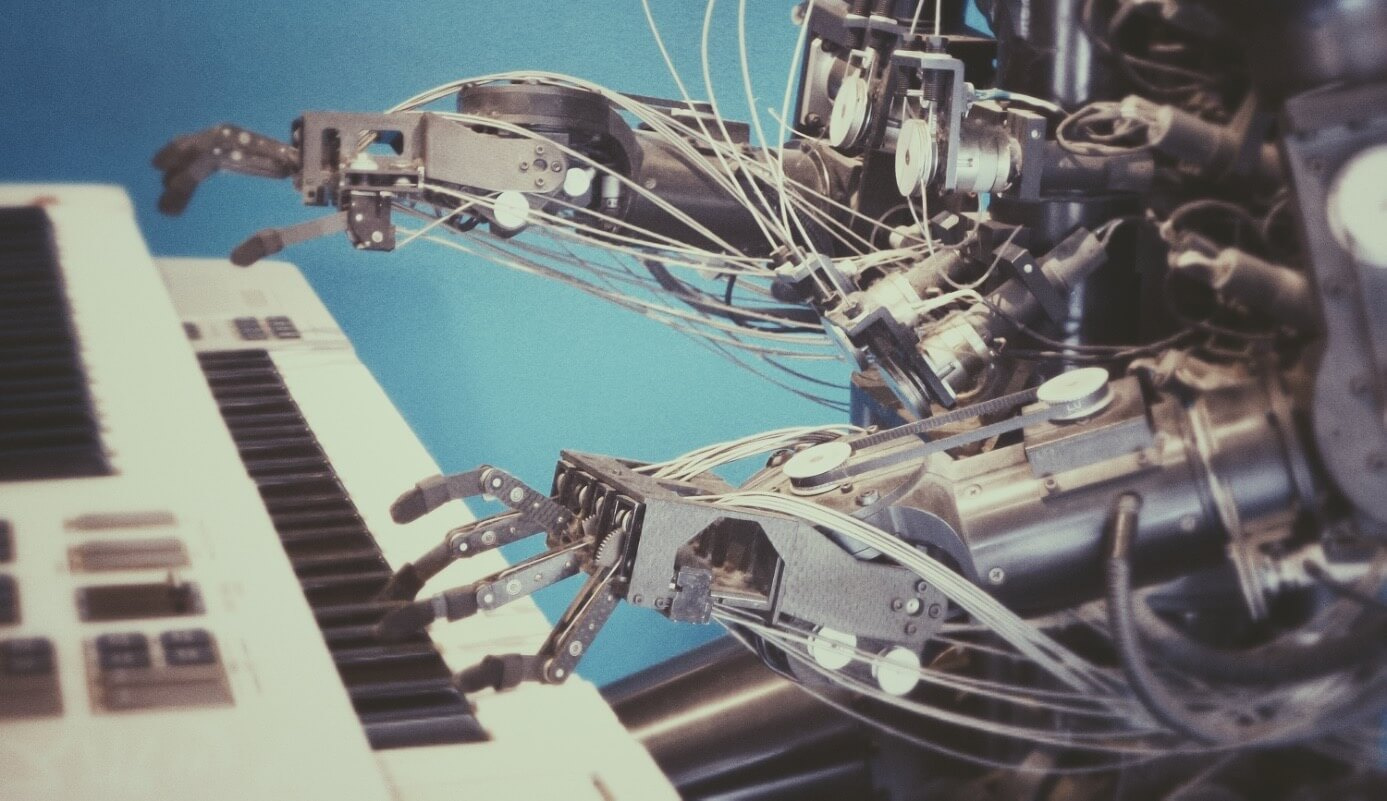

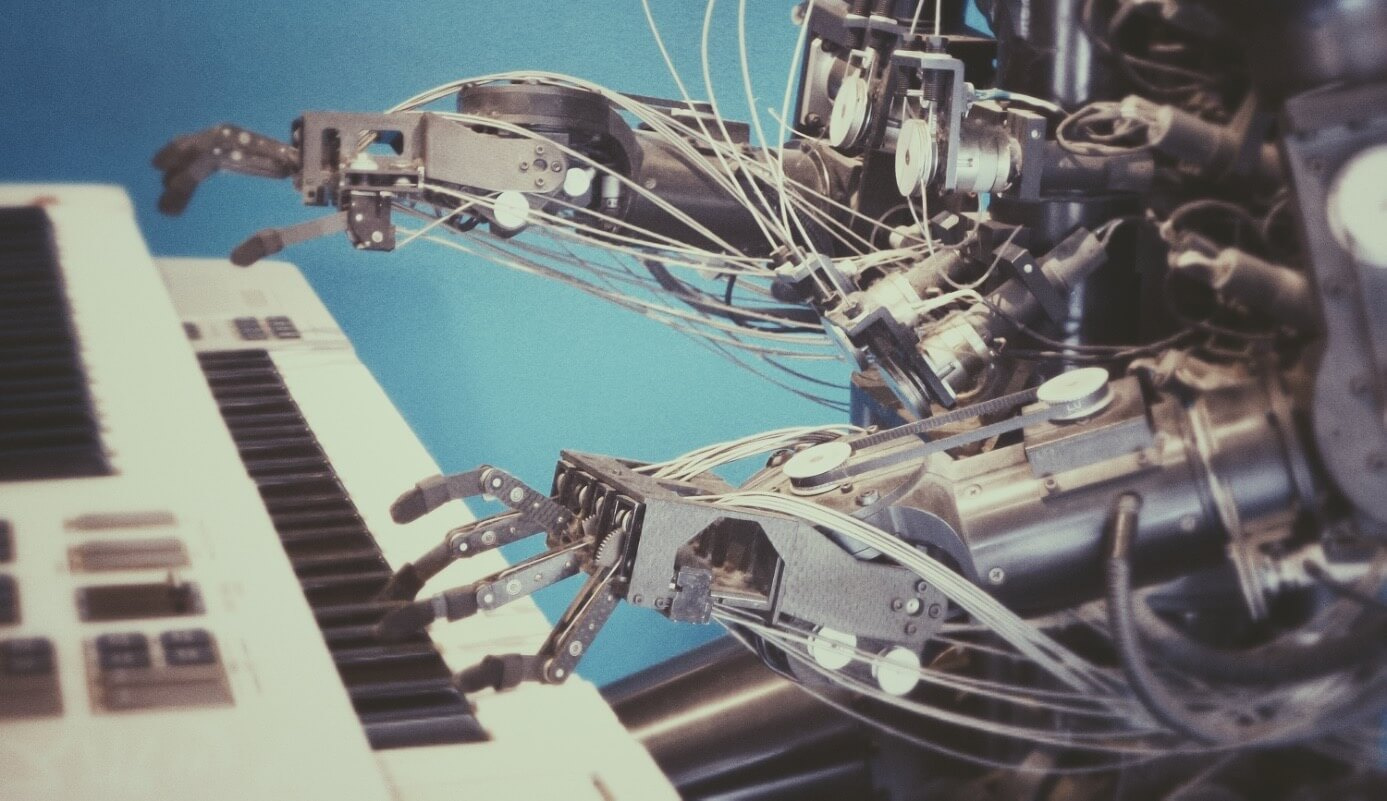

/ Flickr / franck injapan / PD

/ Flickr / franck injapan / PD


Creativity of robots

Self-learning systems have been tested for creativity for a long time. As early as 1970, scientists developed an algorithm that could write prose, albeit rather meaningless prose.

Since then, neural networks have learned to draw pictures, compose music and poems, and invent film scripts. Yandex is teaching neural networks to record music albums that resemble those of popular bands, and to write poetry in the style of Yegor Letov. All these algorithms work on a similar principle: they analyze a huge body of artworks and then, based on the patterns they extract, "create" something of their own: a picture, a piece of music, a novel, and so on.
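The "analyze a corpus, extract patterns, generate" loop can be illustrated with something far simpler than a neural network: a word-level Markov chain. This is a swapped-in technique chosen only to make the principle concrete, not what Yandex or any other system mentioned here uses; the functions `train` and `generate` and the toy corpus are hypothetical.

```python
import random
from collections import defaultdict


def train(words, order=2):
    """Map every run of `order` consecutive words to the words that followed it."""
    model = defaultdict(list)
    for i in range(len(words) - order):
        context = tuple(words[i:i + order])
        model[context].append(words[i + order])
    return model


def generate(model, start, length=20, seed=None):
    """Extend `start` word by word, sampling only continuations seen in training."""
    rng = random.Random(seed)
    order = len(start)
    out = list(start)
    for _ in range(length):
        followers = model.get(tuple(out[-order:]))
        if not followers:
            break  # this context never occurred in the corpus, so stop
        out.append(rng.choice(followers))
    return out


if __name__ == "__main__":
    corpus = "the cat sat on the mat the cat ran".split()
    model = train(corpus, order=2)
    print(" ".join(generate(model, ("on", "the"), length=3, seed=1)))
```

Run as a script, this prints `on the mat the cat`. Every three-word sequence the chain produces already occurs in its corpus, which is exactly why the question of novelty matters for generative systems.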

The creativity of neural networks is gradually being institutionalized. In 2016, a competition for artworks created by robots was held for the first time. This year, the $40,000 top prize went to PIX18, developed by the Creative Machines Lab: the judges praised its good brushwork and its ability to produce works based on the photos at its disposal.

The judges commented on the win: “The composition and brushwork resemble Van Gogh. An interesting palette. It looks like a real critique of a beginning artist's painting.”

Perception of works

Paintings created by Google's DeepDream algorithm are practically regarded as art, primarily because they were created by artificial intelligence.

But there is another important issue: novelty, the criterion by which we judge the work of artists. If algorithms do not copy or process photos but, say, paint abstract pictures, can they really create something new?

Developers from the Art and Artificial Intelligence Laboratory at Rutgers University tried to answer this question with a generative adversarial network (GAN). Previously, such a network learned from the feedback of a single discriminator: it analyzed paintings, produced its own, and had the result checked. As a consequence, it produced images similar to those it had already studied.

The team went a step further and added a second discriminator that competes with the first. The network now analyzes roughly 81 thousand paintings and, from such a large sample, derives the conditions under which a generated picture can be classed as a work of art. In parallel, the second discriminator builds a list of styles and checks the picture for similarity to them, acting as a verification step. A new picture is born when an image is recognized as a work of art yet does not match any previously existing style.
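The two competing checks can be written down as a scoring rule for the generator. The sketch below is modeled loosely on the generator objective published for the Rutgers Creative Adversarial Network (CAN): one term rewards an image for being judged "art", the other rewards it for leaving a style classifier unsure which known style it belongs to. It assumes both probabilities come from already-trained models. `p_art` and `style_probs` are hypothetical inputs, and no network is trained here.

```python
import math

EPS = 1e-12  # keeps log() finite when a probability is exactly 0 or 1


def style_ambiguity_loss(style_probs):
    """Cross-entropy between the style classifier's output and a uniform target.

    Each of the K styles is treated as a binary target of 1/K, so the loss is
    smallest when the classifier spreads its belief evenly, i.e. when the image
    does not clearly belong to any existing style.
    """
    k = len(style_probs)
    target = 1.0 / k
    return -sum(
        target * math.log(p + EPS) + (1.0 - target) * math.log(1.0 - p + EPS)
        for p in style_probs
    )


def generator_loss(p_art, style_probs):
    """Lower is better: 'looks like art' plus 'fits no single style'."""
    art_term = -math.log(p_art + EPS)
    return art_term + style_ambiguity_loss(style_probs)


if __name__ == "__main__":
    ambiguous = [0.25, 0.25, 0.25, 0.25]  # classifier cannot tell the style
    imitation = [0.97, 0.01, 0.01, 0.01]  # classifier is sure: a known style
    print(round(generator_loss(0.9, ambiguous), 3))
    print(round(generator_loss(0.9, imitation), 3))
```

Both images are equally convincing as art, but the one that clearly imitates a single style scores worse. That pressure toward style ambiguity is what pushes the network away from producing copies of what it has already seen.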

Neural networks can already create animations, too. The Painting Fool, a computer program developed by Simon Colton of Imperial College London, was able to weave images it had painted itself into a video.

The system adapts techniques from automated collage-making and simulates brush strokes on canvas. To imitate painting techniques, the software uses multi-core processors: each thread controls a separate brush. This lets the brushes "mix" in unpredictable combinations, which makes the result more convincing.
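The one-thread-per-brush idea can be sketched in a few lines. This is a hypothetical toy, not The Painting Fool's code: each thread is a "brush" with its own colour that lays random horizontal strokes on a shared canvas, blending with whatever paint is already there. Because blending depends on order and the scheduler decides how threads interleave, the final mix is not fixed in advance. The names `blend`, `brush_worker`, and `paint` are illustrative.

```python
import random
import threading

WHITE = (255, 255, 255)


def blend(base, paint, alpha=0.5):
    """Mix new paint into the existing colour; alpha is the weight of the new paint."""
    return tuple(round((1 - alpha) * b + alpha * p) for b, p in zip(base, paint))


def brush_worker(canvas, lock, colour, strokes, seed, log):
    """One 'brush': lays `strokes` short horizontal strokes of a single colour."""
    rng = random.Random(seed)  # per-brush RNG so threads never share random state
    height, width = len(canvas), len(canvas[0])
    for _ in range(strokes):
        x, y = rng.randrange(width), rng.randrange(height)
        length = rng.randint(2, 6)
        with lock:  # apply a whole stroke atomically to the shared canvas
            for cx in range(x, min(x + length, width)):
                canvas[y][cx] = blend(canvas[y][cx], colour)
            log.append(colour)


def paint(colours, strokes_per_brush=50, width=32, height=32):
    """Run one thread per brush colour and return the canvas plus a stroke log."""
    canvas = [[WHITE] * width for _ in range(height)]
    lock = threading.Lock()
    log = []
    threads = [
        threading.Thread(
            target=brush_worker,
            args=(canvas, lock, colour, strokes_per_brush, seed, log),
        )
        for seed, colour in enumerate(colours)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return canvas, log


if __name__ == "__main__":
    canvas, log = paint([(200, 30, 30), (30, 30, 200), (240, 200, 40)])
    print(f"{len(log)} strokes applied; first colours in order: {log[:5]}")
```

The lock keeps each stroke intact, while the order in which strokes from different brushes land, and therefore how colours overlap, is left to the thread scheduler.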

The drawing process itself, for a portrait for example, begins by marking out areas of interest: eyes, mouth, eyebrows, and so on. For each region, the program segments the image using a neighbourhood-growing method and establishes the boundaries. The Painting Fool then colors each segment. It can "draw" with pencils, pastels, watercolors, and crayons, taking lighting and environmental conditions into account.
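Segmenting by growing a region outward from a seed point can be illustrated with a standard region-growing routine: start at a pixel inside an area of interest and keep absorbing 4-connected neighbours whose intensity stays close to the seed's. This is a generic textbook version on a grayscale grid, assumed for illustration. The exact criterion The Painting Fool uses may differ, and `region_grow` and `threshold` are hypothetical names.

```python
from collections import deque


def region_grow(image, seed, threshold):
    """Return the set of (row, col) pixels connected to `seed` whose
    intensity differs from the seed pixel's by at most `threshold`."""
    rows, cols = len(image), len(image[0])
    sr, sc = seed
    base = image[sr][sc]
    region = {seed}
    queue = deque([seed])
    while queue:  # breadth-first expansion from the seed
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):  # 4-connectivity
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region:
                if abs(image[nr][nc] - base) <= threshold:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


if __name__ == "__main__":
    image = [
        [10, 12, 200, 201],
        [11, 13, 199, 202],
        [90, 91, 198, 200],
    ]
    print(sorted(region_grow(image, (0, 0), threshold=5)))
```

Starting one seed in each marked area (eye, mouth, eyebrow) yields one segment per feature, and a later stage can fill each segment with its own medium.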

The Painting Fool is just one example, a single representative of the "art computers", and their number keeps growing. One robot charmed an audience so much with a musical composition that listeners decided it had been written by a human. A short novel written by a Japanese robot almost won a literary prize.

This brings us to an important question: how art is received by its audience. Is there a difference between how we perceive a work created by a human and one "generated" by a robot? A website called Bot or Not invites visitors to guess whether a given poem was written by a bot or a person. The answer is not always obvious; this is ambiguous territory.

Bot or Not includes poems written by bots that people nevertheless attributed to human authors. We can therefore assume that these algorithms have passed a Turing test for poetry: to pass, a computer must convince 30% of people of its "humanity". But the writer Oscar Schwartz, creator of Bot or Not, notes that this game is not one-sided: we can not only mistake a bot's writing for human work but also take human creativity for the work of robots. Levels get mixed, a new understanding of texts and meanings emerges, and the line between illusion and authenticity, as we usually know it, is erased.
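The pass criterion quoted here is simple arithmetic: a poem passes if the share of readers who took it for human work reaches 30%. A minimal sketch with hypothetical vote counts; the threshold is the one stated in the text, and `human_share` and `passes_poetry_test` are illustrative names.

```python
def human_share(judged_human, total):
    """Fraction of readers who attributed the poem to a human author."""
    if total <= 0:
        raise ValueError("at least one judgement is required")
    return judged_human / total


def passes_poetry_test(judged_human, total, threshold=0.30):
    """Apply the 30% criterion: enough readers must mistake the bot for a person."""
    return human_share(judged_human, total) >= threshold


if __name__ == "__main__":
    # Hypothetical tallies, not real Bot or Not data.
    for votes in [(42, 100), (18, 100)]:
        print(votes, passes_poetry_test(*votes))
```

Run as a script, this prints `(42, 100) True` and `(18, 100) False`. Schwartz's point that the game cuts both ways corresponds to computing the same share for human-written poems that readers attributed to bots.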

Creativity as emotional impact

This raises another question, one that goes to the essence of a work of art: how does it differ from copying and reproducing past experience?

The American psychologist Colin Martindale proposed an original theory of creativity. According to his research, the creator's primary goal is to arouse emotion in the audience. This can be achieved by various means: novelty, complexity of ideas, intellectual challenge, and ambiguity of interpretations and messages. A society in which the level of arousal stops growing (or begins to fall) degrades.

Martindale identified two modes of cognition. The primary process is undirected, irrational thinking, as in dreams or daydreams. The secondary process is conscious and conceptual: it solves specific problems and uses logic. He applied the same lens to the creative process: conceptual consciousness can distinguish and think logically, but it cannot create or derive anything it did not already know (ex nihilo nihil fit, "nothing comes from nothing"). Primordial thinking can draw analogies, build chains of associations, and make comparisons, generating new combinations of mental elements. It produces the raw material that conceptual thinking then processes.

The GAN described above works on a similar principle: one neural network "distinguishes", the other "compares and finds associations". The algorithm follows this theory of creativity and produces new canvases that provoke an emotional response in people.

Neural networks helping the artist. And the musician

Art and technology have always intersected and nourished each other (just recall the Renaissance and the experiments of Leonardo and Michelangelo). New materials, approaches, and inventions often allowed artists to create masterpieces and even whole new forms of art. Likewise today, besides "producing" poems, paintings, and music on their own, neural networks help scientists conduct research in the creative field.

The modern music industry increasingly relies on classified patterns that make it possible, quite literally, to build a mathematical model of music and to "program" the effect a composition will have on the listener.

An international research team from universities in Japan and Belgium, in collaboration with Crimson Technologies, has released a machine-learning-based device that can detect the emotional state of listeners and generate fundamentally new content from the information gathered.

“As a rule, machines and programs for creating songs depend directly on automatic composition systems, and their predetermined, stored volume of ready-made musical material only lets them compose tracks that are similar to each other,” says Professor Masayuki Numao of Osaka University.

Numao and his team want to give "machines" information about a person's emotional state. In their view, this should make the musical experience more interactive. The scientists ran an experiment in which subjects listened to music through headphones fitted with brain-activity sensors. The combined EEG data was sent to the robot composer. As a result, they were able to identify greater engagement and a more intense emotional response from listeners to certain music.
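A feedback loop in which a composer adjusts its output according to how engaged listeners are can be sketched as a simple epsilon-greedy bandit. This is an assumption for illustration only, not the method used by Numao's team: the "variations" are hypothetical labels, and `reward_fn` stands in for an engagement score that, in their setup, would be derived from EEG readings.

```python
import random


def choose_variation(variations, reward_fn, rounds=200, epsilon=0.1, seed=0):
    """Epsilon-greedy selection: mostly replay the variation with the best
    average engagement so far, but explore a random one with probability epsilon."""
    if rounds < len(variations):
        raise ValueError("need at least one round per variation")
    rng = random.Random(seed)
    totals = {v: 0.0 for v in variations}
    counts = {v: 0 for v in variations}

    def average(v):
        return totals[v] / counts[v]

    for _ in range(rounds):
        untried = [v for v in variations if counts[v] == 0]
        if untried:
            choice = untried[0]  # play every variation once before comparing
        elif rng.random() < epsilon:
            choice = rng.choice(variations)  # explore
        else:
            choice = max(variations, key=average)  # exploit
        reward = reward_fn(choice)  # hypothetical engagement score for this playback
        totals[choice] += reward
        counts[choice] += 1
    return max(variations, key=average)


if __name__ == "__main__":
    engagement = {"calm": 0.3, "upbeat": 0.7, "dramatic": 0.5}
    print(choose_variation(list(engagement), engagement.get))
```

With the fixed scores in the demo, the loop settles on `upbeat`. A real engagement signal would be noisy, which is why the loop keeps occasionally trying other variations instead of committing to the first good score.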

Numao believes that emotionally connected interfaces have potential: “For example, we can use them in healthcare to motivate a person to exercise more often, or simply to encourage them and cheer them up.”

The art of seeing

John Berger, in Ways of Seeing, noted that seeing comes before words. What we know affects how we judge what we see. According to Berger, every image embodies a way of seeing, but our perception of an image also depends on our own way of seeing.

So the debate about the creativity of algorithms prompts us to think not only about how programs "create", but also about how we ourselves perceive creativity. Neural networks can write poetry, and sometimes we mistake it for human work, but it is our perception, our interpretation, that fills it with meaning. For an algorithm, words, strokes, colors, and sounds are just a set of symbols that it can arrange into a harmonious structure. This is raw material whose content, whose semantic field, the robot does not see. At least not yet.

Robots cannot give objects value, let alone give works global cultural value. “I have never written so many poems and so little poetry,” we read in Umberto Eco. AI can create a brilliant symphony or a set of rhymes correctly arranged on the page, but only human recognition lets all this acquire the status so many desire: to truly be art, and not merely seem to be.

Source: https://habr.com/ru/post/337624/
