Metacomputations and deep convolutional networks: an interview with an ITMO professor

After AlphaGo's victory in March 2016 over Lee Sedol, one of the strongest Go players in the world, deep learning methods were being discussed almost everywhere. Even Google did not miss the opportunity to call itself a machine learning and artificial intelligence company.

What is behind the term "deep learning"? What kinds of machine learning models are there, and what are they written in? We asked Alexey Potapov, a professor at the ITMO Department of Computer Photonics and Video Informatics, to answer these and many other questions about machine learning and, in particular, deep learning.

Alexey Potapov

Alexey Potapov is a professor at the Department of Computer Photonics and Video Informatics at ITMO. He has won the grant competition for young researchers and teaching staff of St. Petersburg universities, as well as the grant competition of the President of the Russian Federation for state support of young Russian scientists holding a Doctor of Science degree, in the field of "Information and telecommunication systems and technologies".


- Today we hear almost everywhere about the boom in machine learning, and especially in deep learning. How and in what situations might an ordinary IT person need it?

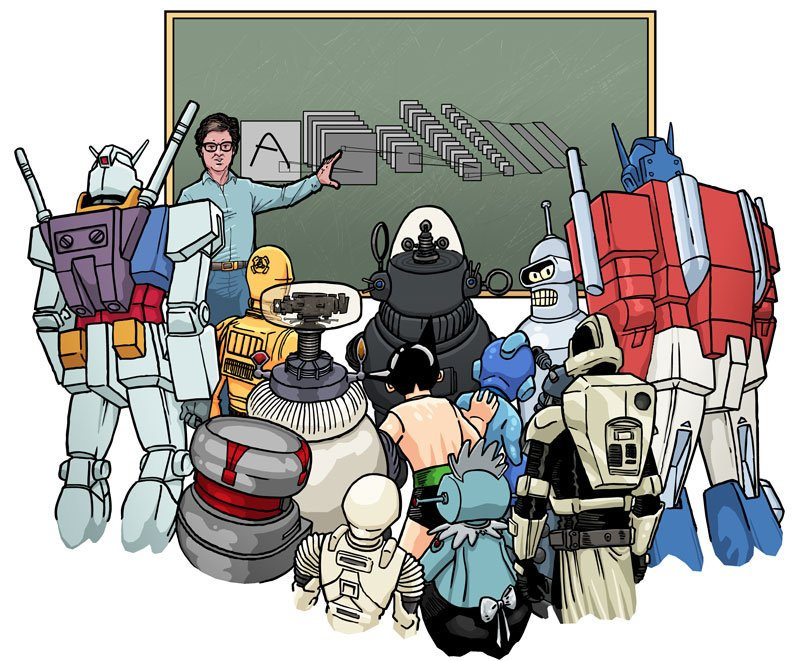

Alexey Potapov : Honestly, I don't have an image of an "ordinary IT person" in my head, so this question is not easy for me to answer. Can someone who is able to apply deep learning methods to automate their own work be considered an "ordinary IT person"? I am not sure. That would probably already be an "advanced IT person". An ordinary one, then, probably does not need to delve into anything in particular, except to keep their picture of the world up to date, and as a demotivator: sooner or later, "robots will take away the jobs" not only of McDonald's employees but of IT specialists as well.

- And if a person has a technical education and decides to immerse themselves in the topic, where should they start and where should they go from there?

Alexey Potapov : It depends very much on how deeply and for what purpose a person decides to dive into the topic, as well as on their level of training. It is one thing to use existing pre-trained networks or to train well-known models implemented with high-level libraries such as Keras. Even a student who is not the most advanced can do this without really delving into the mathematics. It is enough to study the documentation of the library in question and learn from examples how to combine different layers, activation functions and so on, treating them as "black boxes".

In general, it is better for many people to start exactly this way: to get a feel for deep learning in practice and build an intuition about it. Fortunately, this is now quite accessible without special knowledge.

If this approach does not suit a person, or they want to dig deeper, they can start with basic theory: descriptions of the main models (autoencoders, Boltzmann machines, convolutional networks, recurrent units such as tanh-RNN, LSTM, GRU, etc.) as they are. Some may have to start with logistic regression and perceptrons. All of this is easy to read about on the Internet. At this level of immersion, you can use lower-level libraries (such as TensorFlow and Theano) and implement models not from ready-made blocks but at the level of tensor operations.
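To give a feel for what "starting with logistic regression" at the level of raw operations can look like, here is a minimal sketch in plain Python rather than TensorFlow or Theano. The toy data, the learning rate and the function names are assumptions made for illustration, and the gradient is written out by hand where a library would use automatic differentiation.

```python
import math

def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic_regression(xs, ys, lr=0.5, epochs=2000):
    """Fit weight w and bias b by batch gradient descent on cross-entropy loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # derivative of the loss w.r.t. (w * x + b)
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Toy data (made up): the label is 1 when x is large.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0, 0, 0, 1, 1, 1]

w, b = train_logistic_regression(xs, ys)
predictions = [1 if sigmoid(w * x + b) > 0.5 else 0 for x in xs]
```

A single-layer perceptron differs from this mainly in the activation and the update rule, so the same loop is a reasonable starting point before moving to multi-layer models.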

In general, textbooks have now appeared that give a more systematic introduction to the field, at a more advanced level than a mere description of standard models. For example, deeplearningbook.org is often mentioned, but unfortunately I have not read it. Judging by the table of contents, this textbook is not superficial and is quite suitable for a reasonably in-depth study of the subject, although it clearly favors the topics that the authors themselves worked on, and those do not cover the whole field. I have also heard that there are good online courses, and some people start with them. Unfortunately, I cannot give specific recommendations, since I began studying these questions before these textbooks and courses appeared.

For example, here are some pretty good courses:

Introduction into Deep Learning

Neural Networks and Deep Learning

Convolutional Neural Networks for Visual Recognition

- In your talk you consider discriminative and generative models. Can you explain with a simple example how they differ and what they are for?

Alexey Potapov : Broadly speaking, people use discriminative and generative models everywhere. Say, when ancient people noticed a difference between the movement of planets and stars across the sky, they built a discriminative model: if a body shows retrograde motion, it is a planet, and if it moves uniformly across the celestial sphere, it is a star. Kepler's model, by contrast, is a generative model: it does not just pick out some distinguishing feature from the observations, but allows you to reproduce the trajectory.

The humanities have a lot of discriminative models, while the exact sciences lean toward generative ones. A discriminative model transforms the observed image into some description, often an incomplete one, and picks out features that have pragmatic meaning.

Take mushrooms. You want to separate the poisonous mushrooms from the edible ones. A discriminative model will use various visual signs that do not themselves cause toxicity but allow it to be recognized, for example the color of the cap, the color of the cut flesh or the presence of a fringe. This is very efficient and practical.

A generative model in this case would, say, take the DNA of the mushroom and model its development through cell division and gene expression. It would predict the appearance of the mushroom and the substances present in it, which can then be used to predict toxicity.

If you are looking at a mushroom, then to use the generative model you have to guess its genotype, for example by trying several candidate genotypes, simulating mushrooms from them and comparing the results with what you observe. This is terribly inefficient unless you bring in discriminative models as well. On the other hand, if you judge the edibility of an unknown mushroom with a discriminative model built on other mushrooms, you are taking a big risk, whereas with a generative model the chances of error are much lower. In addition, with a generative model you can solve not only the recognition problem but also, say, the problem of breeding a new variety of mushroom with unique taste or visual properties. Discriminative models are fundamentally incapable of this, because inference in them runs in one direction only. In general, the same is true of discriminative and generative models in machine learning.
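The contrast can be sketched on a toy one-dimensional version of the mushroom example. Everything here is an assumption made for illustration: the cap sizes are invented, the discriminative model is a bare threshold, and the generative model is one Gaussian per class with equal priors (far simpler than simulating growth from DNA). The point is only that the generative side can both classify and produce new samples, while the threshold can only classify.

```python
import math
import random
import statistics

# Made-up cap sizes (cm) for two classes; purely illustrative numbers.
edible = [2.1, 2.5, 1.9, 2.3, 2.7, 2.2]
poisonous = [4.0, 4.4, 3.8, 4.1, 4.6, 3.9]

def fit_threshold(xs, labels):
    """Discriminative: pick the cut point with the fewest training errors."""
    points = sorted(xs)
    candidates = [(a + b) / 2.0 for a, b in zip(points, points[1:])]

    def errors(t):
        return sum((x > t) != bool(y) for x, y in zip(xs, labels))

    return min(candidates, key=errors)

class GaussianClassModel:
    """Generative: a model of how one class produces its observations."""

    def __init__(self, samples):
        self.mean = statistics.mean(samples)
        self.std = statistics.stdev(samples)

    def log_density(self, x):
        z = (x - self.mean) / self.std
        return -0.5 * z * z - math.log(self.std * math.sqrt(2.0 * math.pi))

    def sample(self, rng):
        return rng.gauss(self.mean, self.std)

threshold = fit_threshold(edible + poisonous,
                          [0] * len(edible) + [1] * len(poisonous))

def discriminative_is_poisonous(x):
    return x > threshold

edible_model = GaussianClassModel(edible)
poisonous_model = GaussianClassModel(poisonous)

def generative_is_poisonous(x):
    # Equal class priors are assumed, so comparing likelihoods is enough.
    return poisonous_model.log_density(x) > edible_model.log_density(x)

# Only the generative model can run "in the other direction".
rng = random.Random(0)
invented_mushrooms = [poisonous_model.sample(rng) for _ in range(3)]
```

Classifying with the generative model means evaluating every class model against the observation, which is the extra cost mentioned above; the threshold needs a single comparison.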

- Why did deep learning and the probabilistic approach take off right now, and not 20-30 years ago?

Alexey Potapov : The simplest answer is that it obviously comes down to computing resources, especially the development of GPGPU, and to the availability of training data. Everything is, of course, a little more complicated, and this is not the only reason, but it is still the main one. It is also a matter of how researchers' views have developed.

For example, 20 years ago it was still generally accepted that training a multi-layer network is an extremely hard optimization problem, that simplified solutions would give results extremely far from optimal, and that full-fledged optimization would be hopelessly slow. This idea turned out to be wrong, but back then nobody knew that for sure. And few people take up work that looks unpromising.

Back then, support vector machines were popular, and many people worked on them successfully. By the way, the same is now true of deep learning. Everyone works on it rather than on other, hopelessly heavy approaches, which may nevertheless also take off in a decade or so. But the matter is still largely one of computational resources. LSTM, for instance, was already known 20 years ago. But if you moved its modern applications onto the computers of that time, nobody would have any use for it ...

- Let us turn to technical issues. Neural probabilistic programming in Edward: how are the two paradigms combined, and what does this make it possible to model?

Alexey Potapov : In TensorFlow, everything is built on tensors and operations on them and, most importantly, automatic differentiation is added, which is used to optimize a given loss function by gradient descent. In Edward, all the tensor operations remain, but probability distributions are added on top of or inside the tensors, along with special loss functions for distributions, say, the Kullback-Leibler divergence, and methods for optimizing them. This makes it possible to define and train generative deep networks simply and compactly, such as Generative Adversarial Networks and variational autoencoders, as well as to write your own similar models and perform Bayesian inference over any network.

In the latter case, we do not just find a point estimate for the weights of the network connections, but obtain a probability distribution over these weights as the output. This can tell us how uncertain the network is when it operates on specific input data. In addition, we can introduce any prior information, which can speed up learning and help with transfer learning.
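The idea of a distribution over weights instead of a point estimate can be illustrated on the smallest possible "network": a single weight w in y = w * x + noise. This is a sketch in plain Python, not Edward's API. It assumes a Gaussian prior on w and a known noise variance, so the posterior is available in closed form, and it uses the Kullback-Leibler divergence between two Gaussians to measure how far the data moved the posterior away from the prior. All numbers and function names are made up for illustration.

```python
import math

def posterior_over_weight(xs, ys, prior_mean=0.0, prior_var=1.0, noise_var=0.25):
    """Gaussian posterior over w in y = w * x + noise (known noise variance)."""
    precision = 1.0 / prior_var + sum(x * x for x in xs) / noise_var
    weighted = (prior_mean / prior_var
                + sum(x * y for x, y in zip(xs, ys)) / noise_var)
    return weighted / precision, 1.0 / precision  # (mean, variance)

def kl_gaussians(mean_p, var_p, mean_q, var_q):
    """KL(p || q) for two univariate Gaussians, in nats."""
    return 0.5 * (math.log(var_q / var_p)
                  + (var_p + (mean_p - mean_q) ** 2) / var_q
                  - 1.0)

# Toy observations generated by hand around y = 2 * x.
xs_few, ys_few = [1.0, 2.0], [2.1, 3.9]
xs_more, ys_more = [1.0, 2.0, 3.0, 4.0], [2.1, 3.9, 6.2, 7.8]

mean_few, var_few = posterior_over_weight(xs_few, ys_few)
mean_more, var_more = posterior_over_weight(xs_more, ys_more)

# How far the four-point posterior has moved from the N(0, 1) prior.
kl_from_prior = kl_gaussians(mean_more, var_more, 0.0, 1.0)
```

The posterior variance shrinks as observations are added, which is the uncertainty information mentioned above, and changing `prior_mean` or `prior_var` is the simplest way to inject prior knowledge. For real networks the posterior has no closed form, which is why approximate inference with divergence-based losses is needed.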

- Under what practical conditions would you definitely not recommend using deep learning?

Alexey Potapov : In fact, there are still plenty of tasks where deep learning is not the best approach. They fall into two categories, not enough computational resources and not enough data, although the two often go together. This happens even in image processing, where, it would seem, convolutional networks long ago surpassed manual feature engineering.

Take a robot vacuum cleaner that drives around the house, cleans it and builds a map of the rooms at the same time. Then the owners come home and turn on the light. The robot must keep recognizing locations successfully under the changed lighting conditions. The on-board processor is as weak as possible, so that it uses less energy and costs less. Every penny counts in the unit cost. The room is not known in advance. There is, of course, no annotated data for different lighting conditions on which invariant features could be trained. Collecting such data is expensive, and making a representative sample is difficult.

Of course, hand-designed features will not be the best possible. But good features can be developed even when data is scarce. And they can be made very fast, an order of magnitude faster than a convolutional network of comparable quality. But, of course, if you have enough data and no very hard limit on computing resources, then it is easier to spend a day training a network that will easily beat features you spent a week, or even a month, developing.

There are, of course, other cases where deep learning is difficult to apply, for example complex data, decision-making tasks, and tasks that are combinatorial in nature. But, as I understand it, the question was about conditions where deep learning seems applicable and yet has to be abandoned.

- One possible classification of learning models divides them into interpretable and non-interpretable ones. As a rule, deep learning is placed in the latter group. Is there nevertheless a way to look under the hood and see what a network has learned?

Alexey Potapov : You can build a more complex meta-network that will "understand" a simpler network, but this is computationally too expensive. The question is rather what you want to know about the network. Say, "Bayesianizing" a network will give you information about uncertainty. There are techniques for generating "bad" examples. In the case of generative networks, you can visualize the learned manifold by varying one hidden variable. That is, there are separate techniques for analysis. But, of course, they will not show you the whole picture: if you use typical deep learning networks rather than some special compact models, then you cannot describe the operation of the network simply, since such a network is inherently complex.

- Classic supervised machine learning algorithms (learning "with a teacher"), such as SVM, try to determine the class of an object, while unsupervised ones (learning "without a teacher") try to split a set of objects into classes without labels. From this point of view, autoencoders do something very strange: they try to recreate the data itself. Why might that be needed at all?

Alexey Potapov : Unsupervised learning does not necessarily come down to clustering. That is only one possible class of models. And in general, the division into supervised and unsupervised learning is somewhat arbitrary. You can treat the class label simply as an additional categorical feature, and then you get the usual unsupervised formulation. The question is rather one of the objective function, of what you want to achieve through training. In supervised learning, as a rule, you are interested in predicting the label itself and nothing else. In unsupervised learning you have no such specific goal; the goal is much more general: you want to find patterns in the data. Perhaps these are clusters into which the images group. Perhaps it is a smooth low-dimensional manifold on which these images lie. Thus, in unsupervised learning you are trying to build a model of how the data is distributed. This is usually a generative model, although not necessarily. But how do you check that such a model is correct? You have to compare the distribution it defines with the real one, that is, you have to recreate the data from the training sample. So autoencoders do nothing strange: they solve a completely typical unsupervised learning task. This is exactly what all other unsupervised methods do, although not always explicitly.

Clustering itself can be interpreted as projecting an image onto the center of a cluster. That is, each image is reconstructed as the center of the cluster closest to it, and the criterion is again the reconstruction error, the distance from the real image to this center. It is just like in autoencoders, only with a different type of pattern. What distinguishes autoencoders is only how exactly they solve this problem. Namely, they include both the generative and the discriminative components of the model.
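The reading of clustering as reconstruction can be made concrete with a small sketch. It assumes one-dimensional data, hand-picked initial centers and plain k-means; the numbers are invented for illustration. Here "encoding" a point means choosing its nearest center, "decoding" means returning that center, and training reduces the mean squared reconstruction error, which is the same kind of criterion an autoencoder minimizes.

```python
def nearest_center(x, centers):
    """'Encode' x as a cluster and 'decode' it back as that cluster's center."""
    return min(centers, key=lambda c: abs(x - c))

def reconstruction_error(xs, centers):
    """Mean squared distance between each point and its reconstruction."""
    return sum((x - nearest_center(x, centers)) ** 2 for x in xs) / len(xs)

def kmeans_1d(xs, centers, iterations=10):
    """Plain k-means on scalars: assign points, then move centers to the means."""
    centers = list(centers)
    for _ in range(iterations):
        groups = {i: [] for i in range(len(centers))}
        for x in xs:
            i = min(range(len(centers)), key=lambda k: abs(x - centers[k]))
            groups[i].append(x)
        # An empty cluster keeps its previous center.
        centers = [sum(g) / len(g) if g else centers[i]
                   for i, g in groups.items()]
    return centers

# Made-up data with two obvious groups, and deliberately poor initial centers.
data = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
initial_centers = [0.0, 6.0]

error_before = reconstruction_error(data, initial_centers)
learned_centers = kmeans_1d(data, initial_centers)
error_after = reconstruction_error(data, learned_centers)
```

An autoencoder replaces the "nearest center" code with a learned continuous code and the lookup with a learned decoder, but the training signal is still the gap between the input and its reconstruction.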

- Many researchers (for example, Demis Hassabis and Andrew Ng) note that machine learning began with fitting parameters (classical machine learning such as SVM and C4.5), moved on to learning features (e.g., CNN) and is now entering the stage of searching for and building architectures. How can such meta-learning be implemented technically, and how do the results compare with traditional methods?

Alexey Potapov : If we talk about meta-learning as a whole, then I personally find this direction very interesting. When you look at the variety of available models, it becomes obvious that none of them is fundamental; all of them contain heuristics that may not always be applicable, and so on. All these models were invented by people, which means that the process of developing the models and their learning algorithms can be automated. And just as hand-crafted features are now easily beaten by learned features, hand-crafted models and learning algorithms will, hypothetically, lose to meta-learning. There are already various individual experiments showing that this is indeed possible: in narrow settings, meta-learning (and, more broadly, AutoML) outperforms human design, although the technology is not yet mature enough for widespread use.

If the topics of Big Data and machine learning are as close to you as they are to us, we would like to draw your attention to several key talks at the upcoming SmartData 2017 conference, which will be held on October 21 in St. Petersburg:

- Deep convolutional networks for image segmentation (Sergey Nikolenko, POMI RAS)

- From click to forecast and back: Data Science pipelines in OK (Dmitry Bugaychenko, Odnoklassniki)

- Automatic search for contact information on the Internet (Alexander Sibiryakov, Scrapinghub)

- Applied machine learning in e-commerce: scenarios and architectures of pilot and production projects (Alexander Serbul, 1C-Bitrix)

- Deep Learning: Recognizing scenes and sights on images (Andrei Boyarov, Mail.ru)

Source: https://habr.com/ru/post/337392/
