Poker AI: how to teach algorithms to bluff

About how to improve artificial intelligence, you can judge by the usual games. Over the past two decades, the algorithms have surpassed the world's best players: backgammon first fell and checkers, then chess, Own Game (Jeopardy!), In 2015 - Atari video games and last year - Go.

All these successes are about games with information symmetry, where players have identical information about the current state of the game. This property of completeness of information underlies the algorithms that ensure these successes, for example, local search during the game.

But what about games with incomplete information?

The most obvious example of such a game is poker. In order to actually deal with this game and the algorithms for solving this problem, we will organize a hackathon to write game bots based on machine learning. On how to teach algorithms to bluff and try your hand at poker, without touching the card, under the cut.

1. AI in games with incomplete information

- Challenges in agent programming

- poker AI

- Practical value of decisions

2. Sberbank Holdem Challenge

- The task of the participants

- Competition program

3. Programming strategies

- An example of a simple bot

- Analysis of game replays

- Preparation of the decision for sending

4. Approaches to creating strategies

- Classic approaches

- Machine learning based approaches

5. Hackathon platform

6. References

1. AI in games with incomplete information

The world is full of tasks related to the interaction between several agents. Historically, people were the main participants in these multi-agent situations; however, with the development of AI, we had the opportunity to introduce algorithms into our daily life as equal participants and agents with whom we can interact. Right now, similar computer agents solve many problems: from simple and innocuous as automatic telephone systems, to critical ones like security management and even autonomous transport management. This allows you to significantly automate many everyday processes, transferring decision-making to algorithms and thereby reducing the burden on people.

A feature of many tasks where we use computer agents is the large number of real-world constraints affecting the complexity of their programming. And the most important thing for computer agents is access to all the information necessary for decision making. Let us examine how this affects the model problems of AI - how our agents play games.

Games with asymmetry and incompleteness of information require much more complex approaches to decision-making compared to similar-sized games with perfect information, fully accessible at any time. The optimal solution at any time depends on the knowledge of the strategy of opponents, depending on the information that is hidden to us and available only to them, which can only be assessed by their past actions. However, their previous actions also depend on the information hidden from them about our actions and how our actions revealed this information. This recursive process shows the main difficulty in building efficient decision-making algorithms.

Challenges in agent programming

By agent we shall mean any autonomous participant in the decision-making process: both a person and a computer. In a multi-agent environment, agents interact with each other and do not always know the strategies, goals, and capabilities of other agents. The optimal behavior of an agent that maximizes its outcome in a similar environment depends on the actions of other agents. To build an effective agent in a multi-agent environment, it is necessary to adapt to the actions of other agents, modeling their strategies and learning from their behavior.

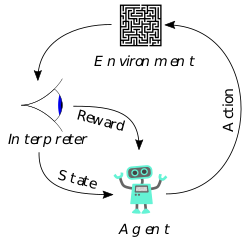

In order for agents to adapt in real time, they need to choose the optimal actions in the course of achieving their results. If you use approaches based on training with reinforcements (Reinforcement Learning), agents will accumulate a reward for their actions. Agents will also balance between following their planned exploitation behavior and exploration experimental actions, trying to learn useful information about the strategies of other players.

In order for agents to adapt in real time, they need to choose the optimal actions in the course of achieving their results. If you use approaches based on training with reinforcements (Reinforcement Learning), agents will accumulate a reward for their actions. Agents will also balance between following their planned exploitation behavior and exploration experimental actions, trying to learn useful information about the strategies of other players.

In addition to the already complex task formulation, agents will face other constraints related to working in a multi-agent environment with incomplete information. We write out the main difficulties that our agents will face:

- Limited number of observations. As a rule, only a small set of observations will be available for agents. This is especially critical in real world applications where human agents are not available for a long time.

- Stochastic observations. Both the environment of interaction between agents and the agents themselves are often subject to a large number of random factors. Selecting a useful signal for training and adaptation becomes especially difficult if the behavior of the agent is a random variable, depending on a large number of other random variables.

- Incompleteness of the observed information. This is a key feature of the environment in which agents operate. Agents have access to only a partial and limited review of it, which hides most of the information about the world from agents.

- Dynamic behavior. With the passage of time, agents can adapt to each other's behavior, leveling the progress made in the course of training and adaptation. The environment can also change with the passage of time, provoking agents to new actions, strategies and behavior.

All these characteristics impose serious difficulties on writing computer agents. Modeling the behavior of agents, even with one of these difficulties, is a complex knowledge-intensive task, not to mention real-world environments, where engineers and authors of such agents will have to face a complete list of these difficulties. We talked about the general case of complex environments — now let's get to the games.

Poker AI

An illustrative example of a complex environment with all the properties described by us is poker. In it there are also incomplete information about the cards, and strategies of the participating players, and the element of randomness associated with the distribution of cards, and the other difficulties we have arising during the game. Moreover, the number of possible game states that characterize game situations is enormous. So huge that only slightly (on a logarithmic scale) is inferior to Guo: in no-limit Hold'em there are 10,160 , while in Guo there are about 10,170 .

Despite the fact that poker is a gamble, it is recognized as an official sport, and there are national federations of sports poker in almost every country (including Russia). Today this game has millions of fans around the world, but even when poker was still far from world popularity, it was appreciated not only by players, but also by scientists. The pioneer of modern game theory, John von Neumann, was so fascinated by this bluffing and betting game that he said:

“Real life is all about bluffing, from little tricks of deception, from thinking about what the other person expects from you. That's what the game is in my theory. ” John von neumann

The history of the development of AI for the game of poker has more than 30 years, but the most outstanding achievements have occurred literally in the last 3 years.

The first programs and algorithms of the poker game appeared in the 1980s, for example, the Orac system from Mike Caro, written by him in 1984 and demonstrated at the Stratosphere tournament. In 1991, the world's first research group on the development of AI for poker was created at the University of Alberta (Canada). In 1997, this group demonstrated its Loki system, the first successful and significant implementation of AI for poker. Loki played at a level slightly worse than the average human player, but this was a significant milestone for the entire research direction. In the 2000s, there was a shift in the paradigm of writing AI for poker bots. Researchers moved away from approaches to poker, inspired by the success of Deep Blue in chess (successfully defeating Garry Kasparov in 1996), to a full-fledged methodology and setting simulation problems for poker right away.

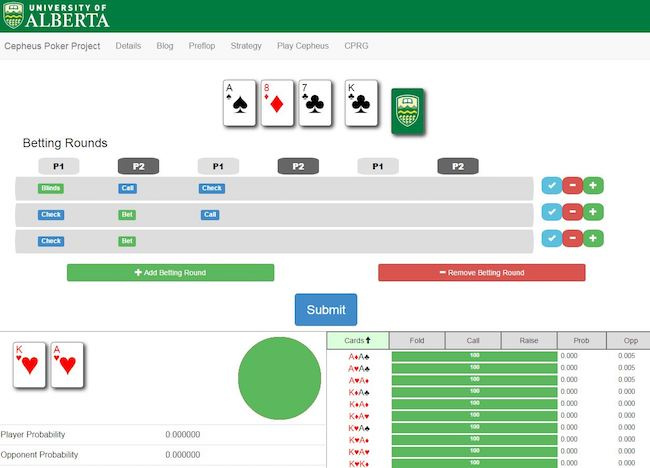

In 2015, the University of Alberta presented its Cepheus system, which literally “decided” one of the types of poker - limit heads-up poker (a simplified version, about 10 18 game situations). This is a significant milestone in the development of AI, since it is currently the only incomplete information game with a complete optimal solution. We managed to achieve this by putting Cepheus to play with myself for two months (AlphaGo, who won the world Go champion, also studied in a similar way).

In 2015, the University of Alberta presented its Cepheus system, which literally “decided” one of the types of poker - limit heads-up poker (a simplified version, about 10 18 game situations). This is a significant milestone in the development of AI, since it is currently the only incomplete information game with a complete optimal solution. We managed to achieve this by putting Cepheus to play with myself for two months (AlphaGo, who won the world Go champion, also studied in a similar way).

It is important to note that the system is not perfect in the sense that it can sometimes lose chips in some hands. However, with a sufficient number of parties, Cepheus will still be the winner. It is also important to note that the unlimited version of heads-up poker still does not have a similar full solution due to too many game states.

This year there were just two important events in the world of poker bots. The University of Alberta introduced the DeepStack algorithm for playing unlimited Heads-Up Poker. Based on deep neural networks, the algorithm successfully overcame many human rivals, including professional players and, similarly, AlphaGo could “learn” to imitate human intuition by continuously playing many games with itself.

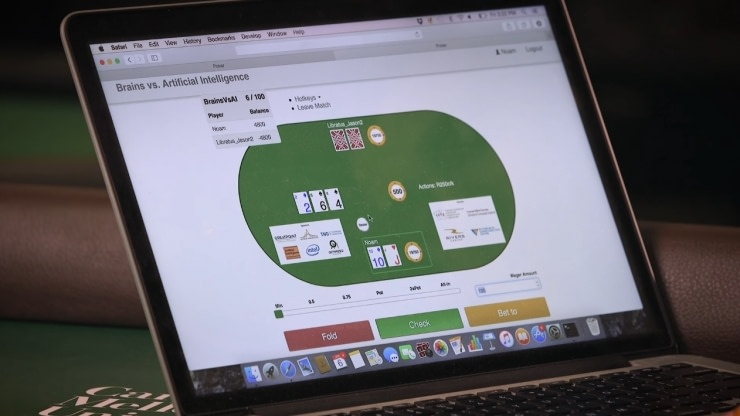

Broadcast tournament Libratus vs man

The most significant event of 2017 in the world of poker bots, and possibly the AI as a whole. The Libratus system from Carnegie Mellon University overpowered professional poker players with confidence - a team of the world's best unlimited Heads-Up poker players. In their assessment, the algorithm was so good that it seemed as if he was flirting and seeing the cards of his rivals. Matches were played in real time during the 20-day tournament, and the actions of the algorithm were considered on the Pittsburgh supercomputer.

Practical value of decisions

Despite the seeming inapplicability of poker bots to real-world problems, their development has brought many methods that can be transferred from practice to the card game. The algorithms of modern poker bots that overwhelm the best human players are universal and are generally aimed at training agents in environments with incomplete and asymmetric information. They can be transferred to a variety of applications where decision-making is required in a medium of similar complexity: from security to marketing, in which you can simulate bidding for an audience.

In the banking sector, too, there are many practical problems, where the algorithms behind the advanced Poker bots would find application. Among such business objectives of Sberbank, first of all, it is worth mentioning risk-return management and pricing in the market with many other object banks. But the list of these applications can be easily extended to tasks such as Customer Value Management or Next Best Action.

2. Sberbank Holdem Challenge

Hackathon on writing game bots based on machine learning

To spur the development of machine learning and artificial intelligence, we organize a unique hackathon, which is preceded by an online competition. We invite machine learning experts to try their hand at writing game artificial intelligence that can make optimal decisions in the face of uncertainty and simulate the behavior of other players in poker.

"Artificial intelligence today should serve not only to develop rational algorithms, but also to simulate the irrational behavior of market participants or, as is the case with our tournament, poker players." Alexander Vedyakhin, Senior Vice President, Sberbank

We hope that the achievements of the winners can be applied in the development of Sberbank artificial intelligence. Nevertheless, even if it may take years before the practical applicability of these developments, such hackathons are important for the development of science on such model problems.

The task of the participants

The game for which you need to write your poker bot is the most popular type of poker: No-limit Texas Hold'em. It is also the most complex variety of the game, to the success in which not a single research group approached: not 2, but 9 players participate in it, but the number of game combinations is huge and reaches 10,160 .

The game for which you need to write your poker bot is the most popular type of poker: No-limit Texas Hold'em. It is also the most complex variety of the game, to the success in which not a single research group approached: not 2, but 9 players participate in it, but the number of game combinations is huge and reaches 10,160 .

Participants will have to implement an agent who will play poker. A poker game is a sequential series of hands (rounds), which ends when all the poker chips remain with only one player, or until the limit of the number of rounds is released. In each game, 9 agents-bot players take part.

Participant agents will randomly form games and tournaments, which will determine the best strategies. At the time of the start of the game, each agent is given a time bank, which can be used to make decisions during the tournament. If the agent goes beyond the time limit or the agent sends a response that does not comply with the data transfer protocol, the simulator performs an automatic reset of cards in each of the hands before the end of the tournament.

Competition program

The competition takes place in 2 stages: an individual qualifying tournament and a team offline hackathon for 100 finalists. The 100 best participants who will pass the qualifying online stage will be invited to a closed offline hackathon.

- August 30, 12:00

The start of the online stage of the competition on the platform of the competition, the beginning of sending solutions. - September 18, 03:00 (term extended from September 15, 23:59)

Closing the online stage, rating the participants and selecting the finalists (top 100). - September 23, 10:00

Start of the offline hackathon stage at Sberbank Corporate University. - September 24, 16:00.

Final hakaton, summing up, award ceremony winners.

Qualifying online stage takes place individually. During the online stage, the competition team provides a test environment for offline hackathons, so that participants learn how to write their poker bots. Also during the online stage, every midnight on schedule, more than 100 random tournaments are held, each day determining the ranking of bots. During the offline stage, participants will be able to form teams among themselves, and the rating-determining tournaments will take place between all participants every hour.

The hackathon is held with the direct participation of the Academy of Technologies and Data of the Sberbank Corporate University, the hackathon final will be held on the campus of the Corporate University , where the finalists will have to refine their algorithms.

Campus of the Sberbank Corporate University

Participants of the offline stage are provided with transfer from Moscow to campus, hotel accommodation, meals, as well as other services and excellent campus facilities. There will be the final competition and a poker tournament between bots with the participation of professional commentators.

Unfortunately, only citizens of the Russian Federation can take part in this competition. Logistics to Moscow also remains on the participants themselves. In this regard, the places in the offline hackathon of those who will be in the top 100, but for some reason will not be able to come to the offline stage, will be transferred next after them in the list of the rating table.

The prize fund for the top three winning teams is 600,000 rubles.

3. Programming strategies

Let's see how you can implement your poker bot and bring it to victory. For this we need 3 things:

- A programming language with which we feel confident (there are ready examples in Python and C ++).

- A simulator of poker, within which our bot will work. The simulator uses the Open-Source PyPokerEngine library .

- The code of the bot itself, which performs game actions inside the simulator.

Let's first deal with the bot to see that it is not so difficult.

An example of a simple bot

Let's look at an example of the simplest bot in the Python language, which performs a CALL operation every time, that is, it is always self-confident and only does what equalizes the opponents bet:

from pypokerengine.players import BasePokerPlayer class FishPlayer(BasePokerPlayer): def declare_action(self, valid_actions, hole_card, round_state): call_action_info = valid_actions[1] action, amount = call_action_info["action"], call_action_info["amount"] return action, amount def receive_game_start_message(self, game_info): pass def receive_round_start_message(self, round_count, hole_card, seats): pass def receive_street_start_message(self, street, round_state): pass def receive_game_update_message(self, action, round_state): pass def receive_round_result_message(self, winners, hand_info, round_state): pass A bot is an object that implements methods of game event handlers and a method for selecting an action at the moment of the declare_action bot move. More information about the implementation of game strategies can be found in the documentation for the library .

Game strategy development is not only available in the Python language and can be implemented in any other programming language. For a description of the API and how to create bots, see the bot preparation guide .

Every day at 00:00 MSK a tournament is held between all bots sent to the system. If a participant has sent several agents, then only his last submitted decision is taken into account.

During the tournament, each bot plays a series of games with random opponents - bots of other participants. The results table is constructed by descending the average amount of the remaining chips at the bot on the basis of all the games for the tournament.

In the tournament game take part exactly 9 bots. The maximum number of rounds is 50. At the beginning of the game, each bot receives 1500 chips, the size of the small blind is 15.

This corresponds to the following parameters of the round in PyPokerEngine:

config = setup_config(max_round=50, initial_stack=1500, small_blind_amount=15) Game Replay Analysis

At the end of the tournament, participants will be available archives with a magazine of games of all bots. Thus, you will be able to analyze the strategy of your opponents, viewing their actions during the game. However, remember that other participants can also analyze your bot's playing style and arrange an ambush for your next tournament.

An example of a game replay file: example_game_replay.json

Game replays are recorded as a JSON object with fields:

rule : game options

seats : information about bots, in particular, for each bot its name is indicated - this name corresponds to the participant who sent the bot

rounds : a list of all rounds indicating the actions performed by bots

Preparation of the decision for sending

A specially prepared docker image is used as the launch environment for bots. In the testing system, you must send the bot code, packed in a ZIP-archive.

Sample archives:

→ example-python-bot.zip

→ example-cpp-bot.zip

In the root of the archive must be a file metadata.json with the following content:

{ "image": "sberbank/python", "entry_point": "python bot.py" } Here image is the name of the docker image in which the solution will be launched, entry_point is the command with which the solution should be launched. For a bot program, the current directory will be the root of the archive, except for the executable file you can put any other auxiliary ones. Archive size limit - 1GB.

For almost any programming language, there is a docker environment in which you can run your bot:

- sberbank / python - Python3 with a large set of libraries installed

- gcc - to run compiled C / C ++ solutions ( more here )

- node - to run javascript

- openjdk - for java

- mono - for C #

- Any other image available for download from DockerHub will also work.

The executable command is exchanged with the game simulator via stdin / stdout. The simulator sends one event in the stdin line, in the format event_type<\t>data , where data is a JSON object with event parameters. An example of the input that the simulator provides to stdin. Description of events and their parameters .

In response to the declare_action event declare_action bot should respond to stdout with a line in the allotted time format:

action<\t>amount Here action is one of the actions available to the player (fold, call, raise), amount is the number of chips for a raise, 0 in other cases.

In the case of using buffered I / O, remember to flush() buffer ( flush() ) after writing the action to stdout. Otherwise, the simulator may not receive a message and the bot will have a time limit.

Development of a bot in Python is most conveniently done using the PyPokerEngine library as shown in the example above. It is recommended to run it in the sberbank / python docker environment, in which Python 3 is installed, as well as a large set of libraries, including PyPokerEngine directly and the data scientist’s gentlemen’s set: numpy, scipy, pandas, sklearn, tensorflow, keras. A complete list of installed python packages can be found in this file .

The full code of the example of the poker bot in python is available below.

import sys import json from pypokerengine.players import BasePokerPlayer class MyPlayer(BasePokerPlayer): # Do not forget to make parent class as "BasePokerPlayer" # we define the logic to make an action through this method. (so this method would be the core of your AI) def declare_action(self, valid_actions, hole_card, round_state): # valid_actions format => [raise_action_info, call_action_info, fold_action_info] call_action_info = valid_actions[1] action, amount = call_action_info["action"], call_action_info["amount"] return action, amount # action returned here is sent to the poker engine def receive_game_start_message(self, game_info): pass def receive_round_start_message(self, round_count, hole_card, seats): pass def receive_street_start_message(self, street, round_state): pass def receive_game_update_message(self, action, round_state): pass def receive_round_result_message(self, winners, hand_info, round_state): pass if __name__ == '__main__': player = MyPlayer() while True: line = sys.stdin.readline().rstrip() if not line: break event_type, data = line.split('\t', 1) data = json.loads(data) if event_type == 'declare_action': action, amount = player.declare_action(data['valid_actions'], data['hole_card'], data['round_state']) sys.stdout.write('{}\t{}\n'.format(action, amount)) sys.stdout.flush() elif event_type == 'game_start': player.set_uuid(data.get('uuid')) player.receive_game_start_message(data) elif event_type == 'round_start': player.receive_round_start_message(data['round_count'], data['hole_card'], data['seats']) elif event_type == 'street_start': player.receive_street_start_message(data['street'], data['round_state']) elif event_type == 'game_update': player.receive_game_update_message(data['new_action'], data['round_state']) elif event_type == 'round_result': player.receive_round_result_message(data['winners'], data['hand_info'], data['round_state']) else: raise RuntimeError('Bad event type "{}"'.format(event_type)) { "image": "sberbank/python", "entry_point": "python bot.py" } For C / C ++, also pay attention to the instructions for launching bots in compiled languages. Full documentation on how to prepare bots for shipping is available here .

4. Approaches to creating strategies

For the more than 30-year history of poker bots, several families of poker strategy approaches have been created.

Classic approaches

One of the easiest to implement and least time consuming is the expert system. In essence, this is a set of fixed IF-THEN rules that relates the game situation to one of the predefined classes. Depending on the strength of the collected combination, the system suggests making one of the many available solutions.

Also, this problem can be solved purely by a mathematical method and at each time point to calculate the optimal solution from the point of view of Nash equilibrium. However, the solution will be optimal if the decisions of the other participants are also optimal. The search for such a solution is resource intensive, so in practice it can only be used along with a large number of restrictions in the rules. For example, in limit Texas Hold'em for two agents or when certain game situations occur.

, -. . , , , - . . , , , Monte Carlo Tree Search . .

, , , — . , . , AlphaGo, . , .

Deepstack

, , DeepStack, . , , « ». 7- , - - . DeepStack .

, . Sberbank Holdem Challenge , .

5.

- holdem.sberbank.ai . , -.

- , , . , - , .

, - , - — -100 :)

- — !

6.

')

Source: https://habr.com/ru/post/337264/

All Articles