Visual Explanation of Floating-Point Numbers

In the early 90s, writing a three-dimensional game engine meant forcing the machine to do work it was never really built for. Personal computers of that time were designed to run word processors and spreadsheets, not to perform 3D computations at 70 frames per second. A serious obstacle was that, for all its power, the CPU had no hardware unit for floating-point calculations. Programmers had only the ALU, grinding through integers.

While writing the book Game Engine Black Book: Wolfenstein 3D, I wanted to show visually how big a handicap it was to work without floating point. But my brain resisted every attempt to understand floating-point numbers through the canonical articles. I started looking for another way: something far from $(-1)^S \times 1.M \times 2^{E-127}$ and its mysterious exponents and mantissas. Perhaps a drawing, since my brain takes those in more easily.

The result is this article, which I also decided to include in the book. I won't claim this is my invention, but I have not seen floating-point numbers explained this way before. I hope the article helps those who, like me, are allergic to mathematical notation.

How floating-point numbers are usually explained

A quote from David Goldberg:

"Floating-point arithmetic is considered an esoteric subject by many people."

I totally agree with him. Still, it is important to understand how it works in order to fully appreciate how useful it is when programming a 3D engine. In C, float values are 32-bit containers that, on practically every platform, follow the IEEE 754 standard. They are designed to store approximations of real numbers and to perform operations on them. The way I have seen them explained so far is as follows. The 32 bits are divided into three parts:

- S (1 bit) for the sign

- E (8 bits) for the exponent

- M (23 bits) for the mantissa

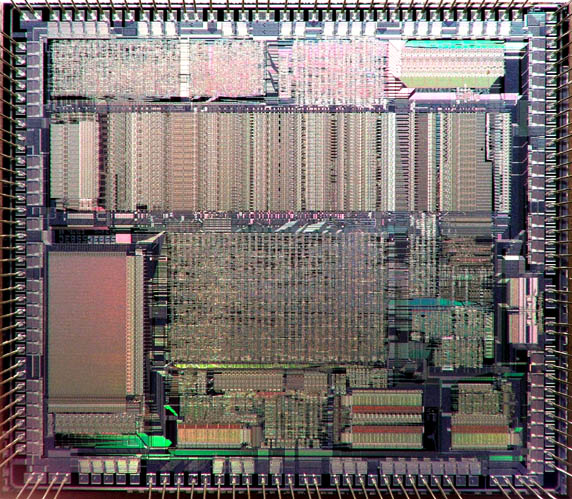

The insides of a floating-point number: its three pieces.
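To make the three fields concrete, here is a minimal C sketch that pulls S, E and M out of a float. It assumes float is the 32-bit IEEE 754 single-precision format (true on virtually every current platform, though the C standard does not require it); the struct float_fields and the function split_float are hypothetical names for illustration, not code from the book.

```c
#include <stdint.h>
#include <string.h>

/* The layout below only makes sense for 32-bit floats. */
_Static_assert(sizeof(float) == sizeof(uint32_t), "expected a 32-bit float");

/* Hypothetical helper type: the three fields of an IEEE 754 single. */
typedef struct {
    uint32_t sign;     /* S: 1 bit   */
    uint32_t exponent; /* E: 8 bits  */
    uint32_t mantissa; /* M: 23 bits */
} float_fields;

/* Split a float into S, E and M. Copying the bytes with memcpy avoids the
   undefined behavior of reading a float through a uint32_t pointer. */
static float_fields split_float(float f)
{
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits);

    float_fields out;
    out.sign     = bits >> 31;           /* top bit          */
    out.exponent = (bits >> 23) & 0xFFu; /* next 8 bits      */
    out.mantissa = bits & 0x7FFFFFu;     /* lowest 23 bits   */
    return out;
}
```

For example, split_float(1.0f) yields S = 0, E = 127 and M = 0, while split_float(-2.0f) yields S = 1, E = 128 and M = 0.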

So far, so good. Let's go further. How these bits are interpreted is usually explained with the following formula:

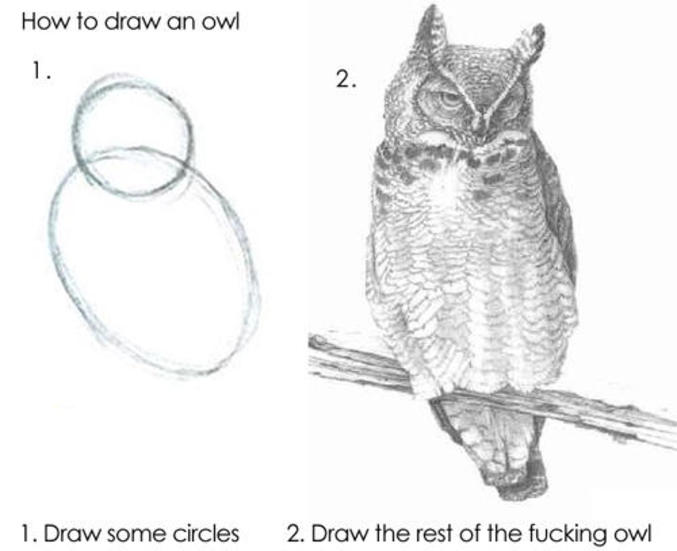

It is this explanation of floating-point numbers that everyone hates.

This is where I usually start to lose patience. Maybe I'm allergic to mathematical notation, but when I read this, nothing "clicks" in my brain. This kind of explanation reminds me of the "how to draw an owl" joke.
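For reference, the formula can at least be evaluated mechanically. The sketch below computes $(-1)^S \times 1.M \times 2^{E-127}$, where "1.M" means $1 + M/2^{23}$ (the 23 mantissa bits are the fraction after an implicit leading 1). It assumes a normal number, meaning 1 ≤ E ≤ 254; zero, subnormals, infinities and NaNs are encoded differently and are left out. The function name decode_formula is hypothetical.

```c
#include <math.h>
#include <stdint.h>

/* Evaluate (-1)^S * 1.M * 2^(E-127) for a normal single-precision number.
   Zero and subnormals (E == 0), infinities and NaNs (E == 255) are not
   handled by this sketch. */
static double decode_formula(uint32_t s, uint32_t e, uint32_t m)
{
    double sign        = s ? -1.0 : 1.0;
    double significand = 1.0 + (double)m / 8388608.0; /* 1.M, 8388608 = 2^23 */
    return sign * ldexp(significand, (int)e - 127);   /* times 2^(E-127)     */
}
```

Calling decode_formula(0, 128, 4781507) reproduces exactly the value the compiler stores for 3.14f.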

Another way to explain it

Although this formula is correct, this way of explaining floating-point numbers usually gives us no understanding at all. I blame this horrible notation for discouraging thousands of programmers, scaring them so badly that they never again tried to understand how floating-point calculations actually work. Fortunately, there is a different way to explain them. Think of the exponent as a Window: the interval between two consecutive powers of two. Think of the mantissa as an Offset within that window.

Three pieces of a floating point number.

The window tells us between which two consecutive powers of two the number lies: [0,1], [1,2], [2,4], [4,8] and so on, up to $[2^{127}, 2^{128}]$. The offset divides the window into $2^{23} = 8388608$ buckets. With the window and the offset, you can approximate the number. The window is an excellent mechanism for protecting against overflow. Once you reach the maximum of a window (for example, [2,4]), you can "float" to the right and represent the number within the next window (for example, [4,8]). The only cost is a small loss of precision, because the window becomes twice as large.
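The window and offset view maps directly onto the bits. In this minimal sketch (positive normal floats only, IEEE 754 single precision assumed; window_offset, to_window_offset and from_window_offset are hypothetical names), the window is $[2^{E-127}, 2^{E-126}]$ and the offset is simply M, counting buckets of size (end - start) / 2^23 from the start of the window.

```c
#include <math.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: a positive normal float seen as a window between
   two consecutive powers of two plus an offset inside that window. */
typedef struct {
    double   start;  /* 2^(E-127)                         */
    double   end;    /* 2^(E-126), twice the start        */
    uint32_t offset; /* the 23-bit mantissa M, 0..2^23-1  */
} window_offset;

static window_offset to_window_offset(float f)
{
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits);

    int e = (int)((bits >> 23) & 0xFFu);
    window_offset w;
    w.start  = ldexp(1.0, e - 127);
    w.end    = 2.0 * w.start;
    w.offset = bits & 0x7FFFFFu;
    return w;
}

/* Rebuild the value: start of the window plus `offset` buckets, each of size
   (end - start) / 2^23. For positive normal floats this is exact. */
static double from_window_offset(window_offset w)
{
    double bucket = (w.end - w.start) / 8388608.0;
    return w.start + bucket * (double)w.offset;
}
```

For 6.1f, to_window_offset reports the window [4, 8], and from_window_offset gives back exactly the stored value of 6.1f.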

Quiz: how much precision is lost when the window covers a larger interval? Take the window [0,1], where 8388608 offsets are spread over an interval of size 1, giving a precision of $(1-0)/8388608 \approx 0.00000011920929$. In the window [2048,4096], the same 8388608 offsets are spread over an interval of size 2048, giving a precision of $(4096-2048)/8388608 = 0.000244140625$.
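The quiz answer can be checked in C. One caveat: in actual IEEE 754 encoding, [0,1] is itself split into many smaller windows, so the sketch uses [1,2], which has the same size 1 and the same bucket. bucket_size and next_float_up are hypothetical names; the second relies on the assumption that, for a positive finite IEEE 754 float, adding 1 to the bit pattern moves to the next offset.

```c
#include <stdint.h>
#include <string.h>

/* Precision inside a window: its size spread over 2^23 offsets. */
static double bucket_size(double start, double end)
{
    return (end - start) / 8388608.0;
}

/* Next float up: for a positive finite float, adding 1 to the bit pattern
   moves to the next offset, or into the next window when M wraps around. */
static float next_float_up(float f)
{
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits);
    bits += 1u;
    memcpy(&f, &bits, sizeof f);
    return f;
}
```

Both views agree: bucket_size(2048.0, 4096.0) is 0.000244140625, which is exactly the gap between 2048.0f and next_float_up(2048.0f).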

The figure below shows how the number 6.1 is encoded. The window must start at 4 and end at the next power of two, 8. The offset lands roughly in the middle of the window.

6.1 is approximated by a floating point number.
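Encoding by hand follows the same two steps: find the window, then measure how far into it the number sits. The sketch below does this for a positive normal number given as a double. It is a simplification: it rounds half up instead of using IEEE 754's ties-to-even rule and ignores zero, negatives, subnormals and overflow, so it may disagree with the compiler's conversion in rare tie cases. hand_encoding and hand_encode are hypothetical names.

```c
#include <stdint.h>

/* Hypothetical result type for the hand encoding. */
typedef struct {
    uint32_t e; /* biased exponent: window start = 2^(e - 127) */
    uint32_t m; /* offset within the window, 0 .. 2^23 - 1     */
} hand_encoding;

/* Encode a positive normal number x the "window and offset" way. */
static hand_encoding hand_encode(double x)
{
    /* 1. Find the window [start, 2*start) containing x, starting from [1, 2). */
    int e = 127;
    double start = 1.0;
    while (x >= 2.0 * start) { start *= 2.0; e++; } /* float to the right */
    while (x < start)        { start /= 2.0; e--; } /* float to the left  */

    /* 2. How far into the window x sits; the window size equals start. */
    double position = (x - start) / start;

    /* 3. Scale to one of the 2^23 offsets, rounding half up. */
    uint32_t m = (uint32_t)(position * 8388608.0 + 0.5);
    if (m == 8388608u) { m = 0; e++; } /* rounded past the last offset */

    hand_encoding out = { (uint32_t)e, m };
    return out;
}
```

For 6.1, the window is [4, 8] (E = 129) and the position is (6.1 - 4) / 4 = 0.525, which gives M = 4404019, the same fields the compiler produces for 6.1f.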

Let's take another example and compute in detail the floating-point representation of a value we all know well: 3.14.

- The number 3.14 is positive, so S = 0.

- The number 3.14 lies between the powers of two 2 and 4, so the floating-point window must start at $2^1$, which means E = 128 (see the formula, where the window is $2^{E-127}$).

- Finally, there are $2^{23}$ offsets available to express where 3.14 falls within the interval [2,4]. It sits at $(3.14-2)/(4-2) = 0.57$ of the way through the interval, which gives an offset of $M = 2^{23} \times 0.57 = 4781506.56$, rounded to 4781507.

In binary form, this translates to the following:

- S = 0 = 0b

- E = 128 = 10000000b

- M = 4781507 = 10010001111010111000011b

Binary representation of the floating-point number 3.14.

So the value 3.14 is actually approximated as 3.1400001049041748046875.
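The binary strings above can be reproduced from the bits the compiler actually stores for 3.14f. This sketch assumes IEEE 754 single precision; to_binary and float_to_binary_fields are hypothetical helper names.

```c
#include <stdint.h>
#include <string.h>

/* Write the low `width` bits of `value` as '0'/'1' characters, most
   significant bit first, into buf (which needs width + 1 bytes). */
static void to_binary(uint32_t value, int width, char *buf)
{
    for (int i = 0; i < width; i++)
        buf[i] = ((value >> (width - 1 - i)) & 1u) ? '1' : '0';
    buf[width] = '\0';
}

/* Fill s, e and m with the binary text of a float's S, E and M fields. */
static void float_to_binary_fields(float f, char s[2], char e[9], char m[24])
{
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits);
    to_binary(bits >> 31, 1, s);
    to_binary((bits >> 23) & 0xFFu, 8, e);
    to_binary(bits & 0x7FFFFFu, 23, m);
}
```

For 3.14f this yields "0", "10000000" and "10010001111010111000011". On a C library that prints exact decimal expansions, such as glibc, printf("%.22f\n", 3.14f) shows the approximated value 3.1400001049041748046875.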

The corresponding value in the incomprehensible formula: $3.14 \approx (-1)^0 \times 1.57 \times 2^{128-127}$.

And finally, the graphical representation with window and offset:

The window and offset of the number 3.14.

An interesting fact: if floating-point units were so slow, why did the C language end up with the float and double types? After all, the machine the language was invented on (the PDP-11) had no floating-point unit! The manufacturer (DEC) had promised Dennis Ritchie and Ken Thompson that the next model would have one. Being astronomy enthusiasts, they decided to add these two types to the language.

An interesting fact: in 1991, those who really needed a floating-point unit could buy one. The only people who might need it at the time were scientists (at least, that was how Intel understood the market). These units were marketed as "math coprocessors". Their performance was average and their price was steep ($200 in 1993, about $350 in 2016 dollars). As a result, sales were mediocre.

I hope the article was useful to you!

Source: https://habr.com/ru/post/337260/
