Concurrency patterns in Rust from Java

Under the cut there are notes that describe how to implement cunning concurrency patterns in Rust, which I can easily write in Java, and what is the difference in concurrency approaches in these languages. The article will be useful to those who switch to Rust from C #, because it has a similar memory model.

A couple of words about the difference in the approaches of Java and Rust

Java is the first language with a memory model (which describes the synchronization of read and write operations to memory), Rust inherits the C ++ 11 memory model in the LLVM implementation. So in terms of terminology, there are a lot of differences. In fact, in Java there are 3 mechanisms for synchronizing shared memory: volatile variables, locks (synchronized blocks, ReentrantLock, etc.) and atomic. In Rust, only locks (Mutex, Rwlock) and atomic. Volatile is not needed because it duplicates the atomic functionality. The transmission of messages through the channels is omitted.

More differences:

- In Rust there is no garbage collector, so you have to put objects shared between threads in the atomic arc reference counter Arc. Moreover, Rust reverently treats immutable objects, so in order to change the internal state of an object without capturing the link, you will have to wrap it in a Cell or an analog (RefCell, UnsafeCell).

- Atomic operations at Rust use four types of barriers, but this does not change the rules of the game much. Use Relaxed when reading through the race is acceptable (for example, under blocking), Acquire to read + Release to write - when it is not necessary that all threads see the same order of operations, and SeqCst - when necessary. I remind you that volatile in Java guarantees SeqCst. For atomic operations that do both reading and writing at the same time (for example, swap), the AcqRel (Acquire + Release) option is provided.

- Rust has no null and cannot be used as an indicator. For this, use Option, which does not cost anything around the pointer. In Java, sometimes the use of null for an indicator is also not without its dangers - see the second example.

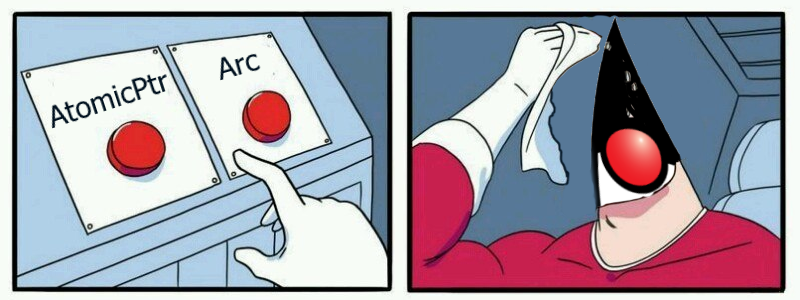

- AtomicPtr in Rust is not the same as AtomicReferenc in Java. This is not an obvious point, if you look only at semantics, but it becomes obvious when used - see the last example. It’s often easier to use a separate AtomicBool for synchronization.

- In Rust, locking (Mutex, RwLock) is inseparable from the object around which it is taken. And access to the object can not be obtained in any other way, except through the lock seizure. In Java, you also need a synchronization object for the monitor, but you can access it without capturing it.

- You must consciously inherit Sync using unsafe for the class that you want to transfer between threads.

- In contrast to the relatively simple idea of happens-before, which is good in common, the idea of barriers is much more complicated for code analysis. I think you will see it in examples.

- All the same, Rust implies that you will not often fumble changing data between threads and organize work differently .

Consider the same way that I just teach Rust myself, so the code on it should be taken skeptically. I tested it, but in the context of concurrency it does not guarantee anything.

Double-check lock

In the Java world, love double-check-lock because before Java 1.5, it could not be implemented correctly. I remind you how it looks:

public class Lazy<T> { volatile T val; final Supplier<T> supp; public Lazy(Supplier<T> supp) { this.supp = supp; } public T get() { if (val == null) { synchronized (this) { if (val == null) { val = supp.get(); } } } return val; } } People who are used to writing concurrency code are not so surprised, but if you think about it, there are a lot of dangerous operations. First, reading through the race. Secondly, we scroll a variable object between threads (the get method changes the internal state of the object). Rust does not like this. He is generally angry. Nevertheless, the closest analogue in Rust looks like this:

pub struct Lazy<T, F: Fn() -> T> { init: AtomicBool, val: UnsafeCell<Option<T>>, supp: Mutex<F> } unsafe impl <T, F: Fn() -> T> Sync for Lazy<T, F> {} impl <T, F: Fn() -> T> Lazy<T, F> { fn new(func: F) -> Self { Lazy{ init: AtomicBool::new(false), val: UnsafeCell::new(None), supp: Mutex::new(func) } } fn get<'a>(&'a self) -> &'a T { if !self.init.load(Ordering::Acquire) { let func = self.supp.lock().unwrap(); if !self.init.load(Ordering::Relaxed) { let value = unsafe { &mut *self.val.get() }; *value = Some(func()); self.init.store(true, Ordering::Release); } } unsafe { (*self.val.get()).as_ref().unwrap() } } } It looks unusual, but stackoverflow thinks so .

There is a lot more code here. Do not pay attention to Mutex around supp - it just has to be somewhere. Due to the lack of null, I had to wrap val in Option. And because of the difficulties in using AtomicPtr, I had to add an AtomicBool initialization flag.

Here note the difference in proof of correctness. For example, in Java, if you remove volatile, then you just say that there is no happens-before from the record to read, and the analysis ends here. It is not necessary to search for a specific version of the execution in which the problem is implemented.

In Rust, you run the risk of being trapped due to different options of memory barriers. Therefore it is necessary to run through all versions of execution. If you read in init true it means you see an entry in it and in val (by transitivity). Therefore, everything will work fine. Otherwise, you take a lock, then reading true there also guarantees the visibility of the record in val. In the last third case, if you are the stream that recorded the value, you will also see it because other threads do not write the value (and if you could not read your own record, it would break single-threaded programs).

Double-check lock with Supplier removal

In the last example, the variable supp is not needed after use. I met this example in Shipilev's report on JMM .

Java code:

public class LazyOpt<T> { volatile Supplier<T> supp; T val; final static Supplier EMPTY = new Supplier() { @Override public Object get() { return null; } }; public LazyOpt(Supplier<T> supp) { this.supp = supp; } public T get() { if (supp != EMPTY) { synchronized (this) { if (val == null) { val = supp.get(); supp = EMPTY; } } } return val; } } Everything is simple and familiar: it is almost double-check-lock, with the only difference that we remove the function that calculates the value. The JMM specification allows us to see supp == null before someone put something there, so EMPTY must be used (for more details, see Shipilev's report at the very end , he asked not to ask it at the interviews). Volatile to supp is needed in order to forward the happens-before between a write operation inside a synchronized and a read outside it. Volatile on val is not needed, because the one who sees the record in supp, also sees the record in val.

Immediately it should be said that this example may not make sense in Rust. The structures on the stack have a fixed size, and if supp is not a pointer, then nothing will be won if you wipe it in None. But if supp is for some reason Arc, then it is possible.

Analog in Rust:

pub struct Lazy<T, F: Fn() -> T> { init: AtomicBool, val: UnsafeCell<Option<T>>, supp: Mutex<UnsafeCell<Option<Arc<F>>>>, } unsafe impl <T, F: Fn() -> T> Sync for Lazy<T, F> {} impl <T, F: Fn() -> T> Lazy<T, F> { fn new(func: F) -> Self { Lazy{ init: AtomicBool::new(false), val: UnsafeCell::new(None), supp: Mutex::new(UnsafeCell::new(Some(Arc::new(func)))), } } fn get<'a>(&'a self) -> &'a T { if !self.init.load(Ordering::Acquire) { let supp = self.supp.lock().unwrap(); if !self.init.load(Ordering::Relaxed) { let value = unsafe { &mut *self.val.get() }; let func = unsafe { & *supp.get() }; if let Some(ref f) = *func { *value = Some(f()); } let func = unsafe { &mut *supp.get() }; *func = None; self.init.store(true, Ordering::Release); } } unsafe { (*self.val.get()).as_ref().unwrap() } } } As for me, the option on Rust looks too difficult to use in practice. However, from the point of view of the proof of correctness, nothing has changed. all operations with the function are done under blocking.

Safe race (benign races)

Another clever trick, from the same report , allows you to write lazy initialization for a small number of cases, such as counting an object hash, which does not require locks.

public class LazyHash { int hash; @Override public int hashCode() { int temp = hash; if (temp == 0) { temp = supp(); hash = temp; } return temp; } public int supp() { return 4; } } Here the main secret is that:

- We do not soar the non-transitivity of the visibility of the fields of an object if: they are not there, they are final, or if it is not a class, but a primitive (with the exception of long and double). In other cases, it hovers, because it cannot be fixed retroactively.

- If supp call multiple times, it returns the same value (not equal to the link).

- We do not soar that the objects may be different by reference, if otherwise they are the same.

- We save the object to a local copy to avoid returning null on double-reading.

- Optimization in this place is necessary (point cutting off most situations).

Although the code looks like we do not use other guarantees for operations, in addition to guarantees for single-threaded programs, this is not so. After all, Java guarantees atomicity of read and write operations on primitives (including references) except long and double, and without atomicity this code is not correct. Also with a local variable trick we add a guarantee of monotony. Rust gives exactly the same guarantees if you make a relaxed operation. .

Heshes believe in Rust is smarter than in Java, so the example below is an abstract getter:

pub struct LazyHash { hash: AtomicIsize } impl LazyHash { fn new() -> Self { LazyHash{hash: AtomicIsize::new(0)} } fn get(&self) -> isize { let mut temp = self.hash.load(Ordering::Relaxed); if temp == 0 { temp = self.supp(); self.hash.store(temp, Ordering::Relaxed); } temp } fn supp(&self) ->isize { 4 } } In Rust, you could return honest self.hash.load (Ordering :: Relaxed) instead of temp, it just doesn't make sense. Also in the nightly assembly of Rust, a bunch of atomics (even 128 bit ones), but in Java you can’t do the same trick with a 64 bit long and you’ll have to sculpt volatile.

Read-write structure for one writer

I heard about this idea in the report computer science is still alive , which described the cunning algorithms used in IDEA.

The idea is as follows: Read-write locks are too slow, with thousands of readers and exactly one writer. But you can speed up if reading threads write flags to ThreadLocal and check the writer's atomic flag. There were no other details, so my implementation will be completely different.

Honestly, the task description is similar to mvcc for the poor. I will explain. If there is no need for monotony, then you can do without locks in a situation where there is no more than one writer and many readers. The readers read from the last (at the time of the operation) copies of the data, and the writer writes in a personal copy. When the writer finishes his work, he updates the general copy atomically, and new readers will already take it. Old readers will work with the old copy. Here monotony is violated. The new fast reader can return a new value, and after it the old slow reader will return the old value. However, it is impossible to notice a violation of monotony if you receive values in the same order in which tasks were created or do not expect tasks from other threads to complete. In the implementation below it is considered acceptable.

However, at least two ways to fix the monotony come to mind:

- For the case when throughput is important to us, you can add a block: the new readers and the writer will not report the completion of their work until all the old readers do this. This is not the same as ReadWrite blocking, since tasks are physically performed at the same time and we simply synchronize visibility: after removing the blocking, all the finished results will be visible instantly. You can keep track of the latter who took the current copy for reading, and everyone who took the next copy will have to wait until he releases it before releasing his own.

- If we assume that the writer is in our priority and his latency is important for us and there is an opportunity to cancel and restart the tasks of the readers, then you can restart all the tasks that did not have time to be completed by the time the writer updated the value - probably it is more suitable for use case from the report.

The code below does not use ThreadLocal (the information in the report is not enough to understand why it is needed, if you can do without it). This code remains valid even if there are more than one writer. But it will break if readers start writing.

public class PoorMvcc<T extends PoorMvcc.Copyable<T>> { volatile T currentValue; final ReentrantLock writersSync = new ReentrantLock(); public PoorMvcc(T val) { currentValue = val; } public T getReadCopy() { return currentValue; } public T getWriteCopy() { writersSync.lock(); // Copy shoud not throw any exception. return currentValue.copy(); } public void returnReadCopy(T oldValue) { // No-op because we don't care about monotonic. } public void returnWriteCopy(T newValue) { currentValue = newValue; writersSync.unlock(); } // Because Cloneable isn't generic and method clone is protected. public static interface Copyable<T> { T copy(); } } For Rust developers, it may seem wild that you have to declare your own interface for copying. In Java, the clone method is declared protected ie. it is visible only to descendants (where you can declare it public) and even if you declare type T as the heir of Cloneable, this will not solve the problem. By the way, in C # with this, everything is also not thankful to God : the ICloneable interface is not generic and returns Object, which still needs to be cast to the desired type.

I did a benchmark on JMH. Even in the case of 1 writer and 1 reader, the implementation on PoorMvcc is much faster than the implementation on ReentrantReadWriteLock. With the increase in the number of writers, the gap is only growing. I do not know how to write a similar benchmark on Rust. It seems there is no functionality to check how the methods influence each other when executed simultaneously.

public static class LongCopy implements PoorMvcc.Copyable<LongCopy> { public long val; public LongCopy(long val) { this.val = val; } @Override public LongCopy copy() { return new LongCopy(val); } } public final PoorMvcc<LongCopy> poorMvcc = new PoorMvcc<>(new LongCopy(1)); @Benchmark @Group("g") @GroupThreads(1) public long inc() { LongCopy copy = poorMvcc.getWriteCopy(); try { copy.val++; return copy.val; } finally { poorMvcc.returnWriteCopy(copy); } } @Benchmark @Group("g") @GroupThreads(10) public long read() { LongCopy copy = poorMvcc.getReadCopy(); try { return copy.val; } finally { poorMvcc.returnReadCopy(copy); } } final LongCopy longCopy = new LongCopy(1); final ReentrantReadWriteLock lock = new ReentrantReadWriteLock(); @Benchmark @Group("g2") @GroupThreads(1) public long inc2() { ReentrantReadWriteLock.WriteLock writeLock = lock.writeLock(); writeLock.lock(); try { longCopy.val++; return longCopy.val; } finally { writeLock.unlock(); } } @Benchmark @Group("g2") @GroupThreads(10) public long read2() { ReentrantReadWriteLock.ReadLock readLock = lock.readLock(); readLock.lock(); try { return longCopy.val; } finally { readLock.unlock(); } } Result:

Benchmark Mode Cnt Score Error Units Main.g avgt 20 60,452 ± 2,956 ns/op Main.g:inc avgt 20 344,700 ± 33,870 ns/op Main.g:read avgt 20 32,027 ± 0,935 ns/op Main.g2 avgt 20 4093,235 ± 3403,373 ns/op Main.g2:inc2 avgt 20 28628,403 ± 37297,944 ns/op Main.g2:read2 avgt 20 1639,718 ± 52,243 ns/op The increment is 83 times faster, and the reading is 51. If there are 100 readers, then the increment will be 12,000 times faster, and 17 000 reading.

In Rust there is a Thread local, but we will not need it here either.

struct PoorMvcc<T: Clone> { current_value: AtomicPtr<T>, write_copy: Mutex<T> } unsafe impl <T: Clone> Sync for PoorMvcc<T> {} impl <T: Clone> PoorMvcc<T> { fn new(val: T) -> Self { PoorMvcc { write_copy: Mutex::new(val.clone()), current_value: AtomicPtr::new(Arc::into_raw(Arc::new(val)) as *mut _) } } fn get_read_copy(&self) -> Arc<T> { let val = unsafe {Arc::from_raw(self.current_value.load(Ordering::Acquire))}; let copy = Arc::clone(&val); Arc::into_raw(val); copy } fn return_read_copy(&self, val: Arc<T>) { // Eliminate a link } fn get_write_copy(&self) -> MutexGuard<T> { self.write_copy.lock().unwrap() } fn return_write_copy(&self, val: MutexGuard<T>) { let new_val = Arc::new(val.clone()); let old_val = self.current_value.swap(Arc::into_raw(new_val) as *mut _, Ordering::AcqRel); //To avoid a memory leak we have to eliminate the old value. unsafe {Arc::from_raw(old_val);} } } Let's first consider getting the value to read. Working with AtomicPtr in Rust must be done carefully so that the memory does not leak and is not released too early. I put the Arc inside because the result will be passed between the threads. Respectively get_read_copy gets the atomically current Arc link and returns its clone (this is how Arc links are passed to Rust). If there is nothing more to be done, then the pointer will get rotten in AtomicPtr, because the link read from there will free the Arc, so I’m doing into_raw to prevent this from happening. And return_read_copy takes the Arc link, destroying this copy.

Getting the value to write is more cunning. Since it is necessary to synchronize records with locking, I start a separate copy of the data wrapped in Mutex. When I call get_write_copy, I simply return the value from Mutex (it is wrapped in MutexGuard to release the lock later - this is the complete equivalent of Java code). And in return_write_copy I accept it back, then I replace the old value for reading with a new copy. There is a trick with the swap operation that needs to be done atomically, which would then release the old Arc link (other threads may have a copy).

That's all for today. Finding bugs is welcome.

')

Source: https://habr.com/ru/post/337232/

All Articles