Fast rendering of ocean waves on mobile devices

Modeling water in real-time computer graphics is still a very difficult task. This matters especially in game development, where you want to create a visually attractive picture for the player under a hard limit on computing resources. And while on desktops the programmer can still count on a powerful GPU and CPU, mobile games have to run on much weaker hardware.

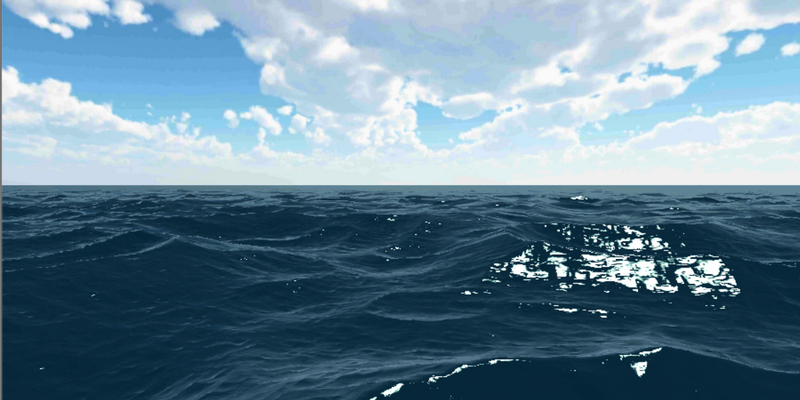

In this article we want to talk about modeling waves in the open sea and present an algorithm that allowed us to achieve quite interesting results at an acceptable 25-30 FPS on an average Chinese phone.

In general, the empirically derived Phillips spectrum is used to model the surface of the open sea: the wave field is decomposed into Fourier components, which are animated over time. However, this solution is very resource-intensive and, although the fast Fourier transform can be performed on the GPU, it is almost impossible to use on weak smartphones, both because of speed and because of limited GPU functionality (support for rendering to float textures, limited computational precision). An example of such a method can be found here and here.

A simpler method is to generate the wave distribution in advance (or directly in the shader) and then sum waves of different phases and amplitudes.

Despite its simplicity, this method can give very impressive results, but it requires fine tuning and has several limitations. Let us look at this approach in more detail and deal with the nuances that arise in terms of picture quality and speed.

Wave generation

To generate a wave, we need to know its height at a given point. It can be obtained in many ways. For example, it is easy to use a combination of sines and cosines of the point's coordinates, but the resulting height distribution looks too artificial and is not suitable for our purpose: periodicity is visible even if the wave directions are rotated relative to each other.

Running a little ahead, the image below shows the water surface obtained by adding waves of different amplitudes and phases.

The same algorithm, but from a distance there is a periodicity.

It is better to use Perlin noise; an example of generating it in a shader is given below (the code is Cg; GLSL requires only cosmetic changes):

```cg
// Pseudo-random value in [0, 1) derived from a 2D coordinate.
float rand(float2 n)
{
    return frac(sin(dot(n, float2(12.9898, 4.1414))) * 43758.5453);
}

// Smoothly interpolates the random values at the four corners
// of the grid cell that contains n.
float noise(float2 n)
{
    const float2 d = float2(0.0, 1.0);
    float2 b = floor(n);
    float2 f = smoothstep(0.0, 1.0, frac(n));
    return lerp(lerp(rand(b), rand(b + d.yx), f.x),
                lerp(rand(b + d.xy), rand(b + d.yy), f.x),
                f.y);
}
```

rand generates a pseudo-random value, and noise interpolates the four random values at the corners of a grid cell to any point inside it (strictly speaking, this is value noise, a simpler relative of Perlin noise).
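For experimenting away from the device, the same idea can be mirrored on the CPU. The following is a minimal Python sketch, not a bit-exact port: `frac`, `lerp` and `smoothstep01` are helper names introduced here to stand in for the Cg intrinsics, and floating-point differences between CPU and GPU mean the values will not match a shader exactly.

```python
import math

def frac(x):
    # Fractional part, like Cg's frac().
    return x - math.floor(x)

def lerp(a, b, t):
    # Linear interpolation, like Cg's lerp().
    return a + (b - a) * t

def smoothstep01(t):
    # smoothstep(0, 1, t) for t already in [0, 1].
    return t * t * (3.0 - 2.0 * t)

def rand(x, y):
    # Pseudo-random value derived from a 2D coordinate.
    return frac(math.sin(x * 12.9898 + y * 4.1414) * 43758.5453)

def noise(x, y):
    # Interpolate the random values at the four corners of the cell containing (x, y).
    bx, by = math.floor(x), math.floor(y)
    fx, fy = smoothstep01(x - bx), smoothstep01(y - by)
    bottom = lerp(rand(bx, by), rand(bx + 1, by), fx)
    top = lerp(rand(bx, by + 1), rand(bx + 1, by + 1), fx)
    return lerp(bottom, top, fy)
```

At integer coordinates `noise` returns the corner value itself, so neighbouring cells join without seams.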

No periodicity observed

The result is already better: the distribution of the waves has changed noticeably, but their shape still differs from natural waves. With a small amplitude this is acceptable, but large waves in nature have sharp crests.

When the Phillips spectrum is used, this is solved by displacing the water-surface grid toward the crests, which gives the desired shape. In our case, however, a simpler and much more efficient method is to apply a simple formula, which gives a satisfactory result in the form of pointed waves.

The disadvantage of the method is that it produces circles and other closed shapes that are noticeable to the eye, but with an appropriate choice of parameters this becomes insignificant.
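One common way to turn smooth noise into pointed crests is to fold it around its midpoint, often called ridged noise. The sketch below assumes that shape function purely for illustration; it is not necessarily the formula used in our shader, and `sharpen` is a hypothetical name.

```python
def sharpen(n):
    """Map a smooth noise value n in [0, 1] to a pointed profile in [0, 1].

    The crest (value 1) sits where n == 0.5, and the profile has a kink there,
    which is what makes the wave look pointed rather than rounded.
    """
    return 1.0 - abs(2.0 * n - 1.0)
```

With a fold like this, the crest line is the contour where the noise equals 0.5. Contours of a smooth function tend to form closed loops, which would be consistent with the closed figures described above.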

Phase distribution and animation

Obviously, a single phase (or octave) is not enough to get realistic water: several waves with different amplitudes and phases have to be superimposed, which gives both large waves and small ripples on the surface.

```cg
float h = 0.0;               // accumulated height
float amp = maxHeight / 2;   // amplitude of the largest wave
for (int i = 0; i < count; ++i)
{
    h += amp * phase(pos + v[i] * t);  // v[i]: velocity of the i-th phase, t: time
    pos *= sp;                         // the next phase has a higher spatial frequency
    amp /= sa;                         // and a smaller amplitude
}
```

Good results are obtained with sp and sa both equal to 2, but any values that give acceptable results can be used. Tuning these parameters produces a variety of wave types and lets you control how they change, all the way down to complete calm.

To animate the waves, it is enough to shift each phase in its own direction. Keep in mind that large waves move faster and that the waves mostly travel in the same direction.
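The summation and animation can be tried out on the CPU. This is a minimal Python sketch under stated assumptions: `phase` here is a placeholder shape in [0, 1] (any noise-based shape can be substituted), and `velocities` is a hypothetical list of per-phase 2D velocities that the caller chooses.

```python
import math

def phase(x, y):
    # Placeholder wave shape in [0, 1]; stands in for a noise-based shape.
    return 0.5 + 0.5 * math.sin(x) * math.cos(y)

def wave_height(x, y, t, velocities, max_height=1.0, sp=2.0, sa=2.0):
    """Sum one wave per entry in velocities, shifting each by its velocity * t."""
    h = 0.0
    amp = max_height / 2.0
    for vx, vy in velocities:
        h += amp * phase(x + vx * t, y + vy * t)
        x *= sp      # the next phase has a higher spatial frequency
        y *= sp
        amp /= sa    # and a smaller amplitude
    return h

# Seven phases: larger waves move faster, all roughly in the same direction.
velocities = [(1.0 / (i + 1), 0.1 * i) for i in range(7)]
height = wave_height(0.3, 0.7, 1.5, velocities)
```

With sa = 2 the amplitudes form a geometric series, so the total height stays below max_height no matter how many phases are added.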

Optimization

As experiments showed, about 7 phases are quite enough to get a “tasty” picture and, in principle, there was no need to reinvent the wheel. However, the very first tests on smartphones were a shock: the FPS inexorably tended to 0, which was upsetting. Let's see how many operations in the shader are required to display a single point:

- Perlin noise: each point requires 4 random values, i.e. 4 sin, 4 frac and other simpler operations.

- Computing 7 waves takes 28 sin.

- Calculation of surface normals: in addition to the height of the point itself, we need to know the heights of 2 adjacent points. Accordingly, the number of computations triples, up to 84 sin operations!

One could object to the reasoning above, since it would be enough to compute the surface height once per mesh vertex, which is certainly faster than computing it for every screen pixel. But in that case no realism can be expected: at most, we get a very rough approximation of the desired result. Thus, all the operations above have to be computed in the fragment shader. Optimization options:

- Try to reduce the number of phases. As mentioned earlier, though, about 7 phases are desirable for an acceptable picture, and a smaller number significantly degrades the "quality" of the waves.

- Try to replace the costly noise computation with a sample from a precomputed texture. Instead of 84 sin we get 21 texture samples (since each noise value requires 4 sin).
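The operation counts quoted above follow from simple multiplication. As a quick check, here is the arithmetic written out in Python; the constant names are introduced here only for illustration.

```python
SIN_PER_NOISE = 4     # one noise value interpolates 4 pseudo-random corners
PHASES = 7            # number of summed waves
HEIGHT_SAMPLES = 3    # the point itself plus 2 neighbours for the normal

sin_per_height = SIN_PER_NOISE * PHASES              # 28 sin for one height
sin_per_pixel = sin_per_height * HEIGHT_SAMPLES      # 84 sin including the normal
texture_samples_per_pixel = PHASES * HEIGHT_SAMPLES  # 21 samples if noise is pre-baked
```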

Elevation-normal map

The last option is viable, but the FPS is still too low: about 3-4 frames per second. In addition, when calculating the normal we run into the precision with which data is stored in the texture, which leads to “steps” on the water. Of course, you can use a floating-point texture, but that further limits the number of supported devices.

At the same time, to obtain the height of a point we have to read the height value from the texture anyway, and nothing prevents us from baking a normal map into the same texture. Thus, with one texture sample we get both the height of the point and its normal. The data can be baked into the texture in advance or directly on the GPU by rendering to texture (for example, before launching the application or when the parameters change).

When computing the texture, the normals must be stored with sufficient precision. If the normal is stored in the usual normal-map form, the precision is insufficient, which shows up as image artifacts.

Indeed, to recover the normal it suffices to store only its projection onto the horizontal plane (n_x, n_y). In general, each of these components varies in the range [-1, 1]. But in the case of water, the range actually used is substantially smaller, since the normals mostly point upwards (which is especially noticeable for small-amplitude waves). Thus, if we normalize this range by its maximum value, we can significantly increase the precision with which the normals are stored and, accordingly, the picture quality.
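The gain from normalizing the range can be illustrated with a small quantization experiment. This is a minimal Python sketch assuming an 8-bit channel and an upward-pointing unit normal; `encode`, `decode`, `unpack_normal` and `max_abs` are hypothetical names, not part of any engine API.

```python
import math

def encode(value, max_abs, bits=8):
    """Quantize a value from [-max_abs, max_abs] to an integer texel level."""
    levels = (1 << bits) - 1
    t = (value / max_abs + 1.0) * 0.5   # map to [0, 1]
    t = min(max(t, 0.0), 1.0)           # clamp out-of-range input
    return round(t * levels)

def decode(q, max_abs, bits=8):
    """Inverse of encode, up to quantization error."""
    levels = (1 << bits) - 1
    return (q / levels * 2.0 - 1.0) * max_abs

def unpack_normal(qx, qy, max_abs, bits=8):
    """Rebuild the full unit normal from the two stored horizontal components."""
    nx = decode(qx, max_abs, bits)
    ny = decode(qy, max_abs, bits)
    nz = math.sqrt(max(0.0, 1.0 - nx * nx - ny * ny))  # normal points upwards
    return nx, ny, nz
```

The quantization step is 2 * max_abs / 255, so storing components that never exceed 0.1 with max_abs = 0.1 instead of 1.0 makes the step ten times smaller.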

In this case, to build the resulting wave, the normal of each phase has to be transformed accurately, taking into account the amplitude of the wave, its phase, and the scaling factor chosen above.
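One way to reason about this transformation: if the height is a sum of terms amp_i * shape(scale_i * pos), then the slope of each term is the slope of the shape multiplied by amp_i * scale_i. The Python sketch below works under that assumption. It treats each stored normal as belonging to the unit-amplitude, unit-scale shape, and `slope_from_normal` and `combine_normals` are hypothetical names.

```python
import math

def slope_from_normal(nx, ny):
    """Convert the horizontal part of a unit normal into height slopes (dh/dx, dh/dy)."""
    nz = math.sqrt(max(1e-12, 1.0 - nx * nx - ny * ny))
    return -nx / nz, -ny / nz

def combine_normals(phase_normals, max_height=1.0, sp=2.0, sa=2.0):
    """Sum per-phase slopes weighted by amplitude * spatial scale, then rebuild a normal."""
    amp, scale = max_height / 2.0, 1.0
    sx = sy = 0.0
    for nx, ny in phase_normals:
        dx, dy = slope_from_normal(nx, ny)
        sx += amp * scale * dx
        sy += amp * scale * dy
        scale *= sp   # finer phases are steeper for the same shape
        amp /= sa     # but have a smaller amplitude
    inv_len = 1.0 / math.sqrt(sx * sx + sy * sy + 1.0)
    return -sx * inv_len, -sy * inv_len, inv_len
```

Note that with sp and sa both equal to 2 the weight amp * scale stays constant, so under this assumption every phase contributes equally to the slope even though its contribution to the height halves each time.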

Additional optimization

Despite the optimizations performed, we still have 7 texture samples, and we would like to reduce this number. As mentioned earlier, reducing the number of phases is generally undesirable.

However, we store the wave shape in a texture, so we can bake several phases into it at once instead of one.

This gives many waves with a small number of texture samples, but during animation it becomes noticeable that some of the waves move at the same speed. To reduce this effect, you can bake waves with a larger phase gap, for example 1-3-5, so that rendering yields 1-1'-3-3'-5-5'. We also used an approach in which the first two of four phases used one texture, and the last two used another with a different distribution and number of phases. This is how the image shown at the beginning of the post was obtained.

Disadvantages of the method

- The main technical problem is that obtaining the waves requires sampling a texture in the vertex shader. Formally this is part of OpenGL ES 2.0, but an implementation is allowed to expose zero vertex texture units, so not all smartphones provide this functionality.

- The need for careful selection of parameters to hide the artifacts of the method and get an attractive image.

- The resulting sea is periodic in nature and this limits the scope of application. When flying high above the surface, periodicity catches the eye.

A little more about optimization

In addition to the methods described above, we tested several other optimization options. The most interesting of them seemed to us to be the interpolation of waves in time.

The idea is to render the resulting map of heights and normals into a texture at certain moments in time, and to interpolate the heights and normals for the moments in between.

Thus, a full calculation of the surface is required only once every N frames, and the other N-1 frames can be computed by simply sampling from two textures.

The visible effect is that one wave fades while the next one appears beside it. As N decreases, the animation becomes smoother, although the method becomes less effective.

Thus, it can be used quite effectively under certain conditions, for example at low wave speeds or at some distance from the surface, where this shortcoming becomes less noticeable.
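The in-between frames amount to a linear blend of two stored maps. A minimal Python sketch, assuming the maps are small nested lists of heights and that `frame` counts frames since the previous full calculation (both assumptions are made here for illustration):

```python
def lerp(a, b, t):
    # Linear interpolation between a and b.
    return a + (b - a) * t

def interpolated_height(key_prev, key_next, frame, n):
    """Blend two keyframe height maps; frame is in [0, n), n is the keyframe interval."""
    t = frame / n
    return [[lerp(a, b, t) for a, b in zip(row_prev, row_next)]
            for row_prev, row_next in zip(key_prev, key_next)]

# One frame after a keyframe, with a full calculation every 4 frames.
blended = interpolated_height([[0.0, 1.0]], [[1.0, 3.0]], frame=1, n=4)
```

Because this is a cross-fade rather than true motion, a crest does not travel between keyframes: it sinks in the old map while rising in the new one.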

Horizon line

At this point we have quite viable and pretty water, but we have not yet discussed which mesh to use for the water surface. Obviously, to display water all the way to the horizon we would need an infinitely large mesh (or at least one stretched to the camera's far clipping plane), while the number of points in it is very limited. Simple linear scaling does not work, because near the camera the mesh becomes too sparse, while near the horizon it is too dense.

The easiest way to solve this problem is to scale the mesh in the vertex shader depending on the distance to the camera. The disadvantage is quite obvious: it is difficult to choose the right parameters, and the resulting distribution of points will still be uneven.

Another option is to use a mesh with a level of detail that depends on the distance. But this may lead to visible popping when the level of detail changes, and it also requires additional logic to control these levels.

Projected Mesh

The most convenient approach is the projected grid method, which can be described in general terms as follows:

- A uniform flat mesh is created in coordinates [-1..1], always located in camera space.

- In the vertex shader, this mesh is projected onto a horizontal plane.

- For the resulting point, all the calculations needed to display the water are performed.

As an analogy, imagine a slide with dots on it mounted on a projector (the camera). The desired point is where the shadow of each dot falls on the plane. At the same time, an observer at the camera position sees the same uniform grid.

This method has several advantages:

- The resulting mesh is uniform on screen, and its size is easy to change.

- The method is cheap: one vector multiplication and one addition in the vertex shader are enough for a correct calculation.
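The projection step is a ray-plane intersection. The Python sketch below assumes a y-up world with the water plane at y = water_level and a camera above the water; `grid_ray` and `project_to_water` are hypothetical helper names, and the camera basis vectors and field-of-view tangents are supplied by the caller.

```python
def grid_ray(u, v, forward, right, up, tan_half_fov_x, tan_half_fov_y):
    """World-space ray direction through grid point (u, v) in [-1, 1] x [-1, 1]."""
    return tuple(f + r * u * tan_half_fov_x + w * v * tan_half_fov_y
                 for f, r, w in zip(forward, right, up))

def project_to_water(cam_pos, ray_dir, water_level=0.0):
    """Intersect a ray from the camera with the plane y = water_level.

    Assumes the camera is above the water. Returns None for rays that point
    at or above the horizon, since they never reach the plane.
    """
    cx, cy, cz = cam_pos
    dx, dy, dz = ray_dir
    if dy >= 0.0:
        return None
    t = (water_level - cy) / dy
    # One multiplication and one addition of vectors: cam_pos + ray_dir * t.
    return (cx + dx * t, water_level, cz + dz * t)

# A camera 10 units above the water, looking 45 degrees down along +z.
hit = project_to_water((0.0, 10.0, 0.0), (0.0, -1.0, 1.0))
```

Grid points whose rays miss the plane have to be handled separately, for example by keeping the grid below the horizon line.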

Lighting calculation

The basis of a correct image is correct accounting for all components of the light flux: reflected light, light scattered in the water column, sun glare, and so on.

In general, this is a non-trivial task, but in our case we used a simpler approach, since there was no need to display the sea bottom, caustics and the like.

We will not describe the lighting calculation in detail, because there are many detailed articles on the subject (for example, here, here). We would just like to note the need to choose the "right" formula for the Fresnel coefficient.

In the first version of the shader, we could not achieve realistic-looking water; the result looked more like painted or plastic water. It turned out that we had used the most primitive approximation of the Fresnel coefficient. Replacing it with a better approximation significantly improved the realism of the water. Other approximations for the Fresnel coefficient can be found here.
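One widely used approximation of the Fresnel coefficient is Schlick's formula. The Python sketch below shows it as an example only and does not claim to be either of the formulas discussed above; the default `r0` of 0.02 is the approximate normal-incidence reflectance of an air-water interface.

```python
def fresnel_schlick(cos_theta, r0=0.02):
    """Schlick's approximation of the Fresnel reflection coefficient.

    cos_theta is the cosine of the angle between the view direction and the
    surface normal; r0 is the reflectance at normal incidence.
    """
    c = min(max(cos_theta, 0.0), 1.0)
    return r0 + (1.0 - r0) * (1.0 - c) ** 5
```

Looking straight down, the water reflects only about 2% of the light, while at grazing angles the coefficient approaches 1, which is why distant water near the horizon mirrors the sky.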


Results

We implemented the water simulation method described above in Unity3D. We used explicit vertex and fragment shaders to calculate the lighting and build the waveform (it could also be implemented with surface shaders, but this makes no fundamental difference). When testing on Android smartphones, we got from 25 FPS (Adreno 405 + MediaTek MT6735P) to 45 FPS (Adreno 505 + Snapdragon 430). On some smartphones, as expected, the application did not work because reading from a texture in the vertex shader is not supported. It is interesting to note that the lighting calculation turned out to be comparable in cost to the wave generation. If necessary, you can raise the FPS by using other lighting models or by turning off some elements, such as the environment map, glare, etc.

Source: https://habr.com/ru/post/336998/
