September 1 is Knowledge Day. Find out everything you need to know about neural networks.

Friends!


We congratulate all our subscribers on Knowledge Day and wish you more knowledge, an enjoyable time acquiring it, and knowledge that proves ever more useful.

To help make these wishes come true, we are sharing the video of the course “One-Day Immersion in Neural Networks”, which we ran this summer as part of the closed DevCon school. In a few hours, the course lets you dive into the topic of neural networks and learn from scratch how to use them for image recognition, speech synthesis, and other interesting tasks. Python programming skills and a basic knowledge of mathematics will help you get the most out of it. Course materials and starter files for the practical exercises are available on GitHub.


A caveat up front: these videos are a recording of an intensive course designed mainly for the audience in the room. As a result, they are somewhat less dynamic than a typical online course, and they are long and not cut into thematic fragments. Nevertheless, many viewers found them very useful, so we decided to share them with a wider audience. I hope the opportunity to learn something new brings you the same genuine joy it brings my daughter in the photo.

What you need

The materials consist of two main parts: videos (a recording of the intensive course), and lectures (with examples) and tasks in Azure Notebooks. We therefore recommend opening the video in one window and the lectures or assignments in another, so that you can try every example as it is shown.

Azure Notebooks is a technology based on Jupyter that lets you combine natural-language text and code in a supported programming language in a single document. To work with Azure Notebooks you need a Microsoft Account. If you don’t have one, create a new email account on Outlook.com.

The final example, training a neural network to tell cats from dogs, is best run on a virtual machine with a GPU; otherwise training takes too long. You can create such a machine in the Microsoft Azure cloud if you have a subscription (keep in mind that a free Azure Trial subscription does not always allow you to create a machine with a GPU).

Getting started

While watching the videos, it is very important to try the examples in parallel and, once you have finished the course, to come up with a task of your own to consolidate what you have learned. Since these materials are not an online course (we have not gotten that far yet), we cannot check your work or your knowledge. Still, we strongly recommend against watching the video passively, since that may well be wasted time. Running and trying the examples is very simple!

To get started, go to the course materials library on Azure Notebooks and click the Clone button. You will get your own copy of all the materials, so you can change the code on the fly and see what happens. Open the library in a separate window and start watching the video.

Video

Part I

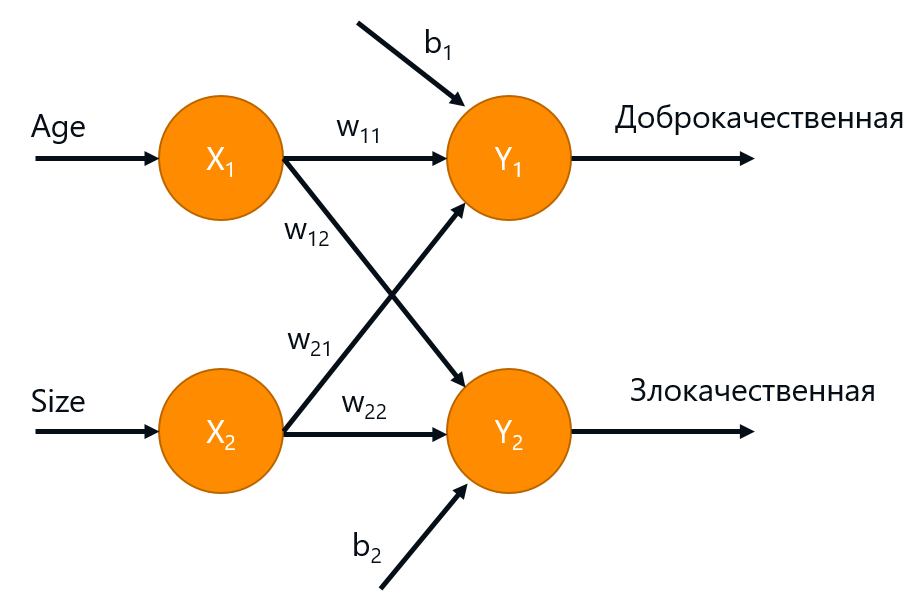

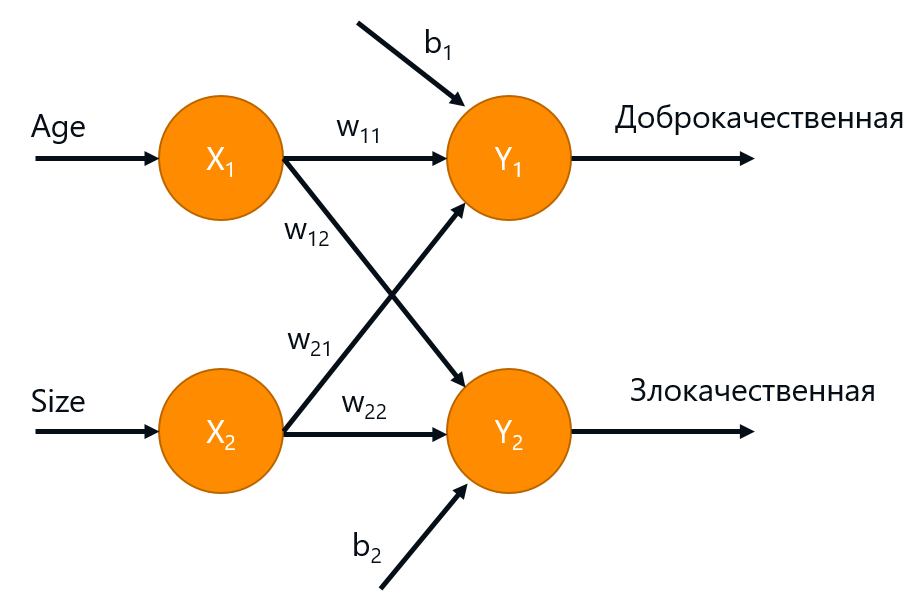

[0:00] Introduction to artificial intelligence in general: Cognitive Services, Bot Framework, Azure Machine Learning. This is not directly related to neural networks, but it is useful general background.

[0:27] An introduction to Azure Notebooks and the basic features of Python that will be important to us.

[0:48] Single-layer perceptron and implementing the simplest neural network "by hand".

[1:10] Implementing the simplest neural network with Microsoft Cognitive Toolkit (CNTK). The backpropagation algorithm.

[1:48] Lab: classifying irises.

[2:27] Lab: recognizing handwritten digits.

For those who can't wait to get straight to the point, I recommend jumping to 1:10, where we start using the Cognitive Toolkit to implement a simple classification task. The basic idea is as follows: the neural network, shown schematically in the figure, can be represented mathematically as the product of the weight matrix W and the input values x, plus the bias b: y = Wx + b.

Neural network frameworks such as CNTK let us define the network configuration as a “computation graph”, essentially by writing down this formula:

    import numpy as np
    from cntk import input_variable, parameter, times

    # input_dim and output_dim are the sizes of the input and output vectors
    features = input_variable(input_dim, np.float32)
    label = input_variable(output_dim, np.float32)
    W = parameter(shape=(input_dim, output_dim))  # weight matrix
    b = parameter(shape=(output_dim))             # bias vector
    z = times(features, W) + b                    # network output

After that, the framework lets us apply one of the learning algorithms, which adjusts the weights of the matrix W so that the neural network performs better on the training set. Given the formula, the framework takes care of all the difficulties of computing the derivatives needed for the backpropagation algorithm.
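To make concrete what the framework automates, here is a minimal sketch in plain Python (deliberately without CNTK) that trains the same kind of single-layer model, z = xW + b, with hand-derived gradients. The softmax cross-entropy loss, the learning rate, the toy data, and all function names are assumptions chosen for illustration; they are not taken from the course notebooks.

```python
import math
import random

def softmax(z):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, W, b):
    """Compute z = xW + b for one input vector x."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
            for j in range(len(b))]

def train(samples, input_dim, output_dim, lr=0.5, epochs=200, seed=0):
    """Stochastic gradient descent on the softmax cross-entropy loss."""
    rng = random.Random(seed)
    W = [[rng.uniform(-0.1, 0.1) for _ in range(output_dim)]
         for _ in range(input_dim)]
    b = [0.0] * output_dim
    for _ in range(epochs):
        for x, label in samples:
            p = softmax(forward(x, W, b))
            # For softmax + cross-entropy: dLoss/dz_j = p_j - 1[j == label].
            dz = [p[j] - (1.0 if j == label else 0.0) for j in range(output_dim)]
            # Chain rule: dLoss/dW[i][j] = x_i * dz_j and dLoss/db_j = dz_j.
            for i in range(input_dim):
                for j in range(output_dim):
                    W[i][j] -= lr * x[i] * dz[j]
            for j in range(output_dim):
                b[j] -= lr * dz[j]
    return W, b

def predict(x, W, b):
    """Return the index of the class with the highest score."""
    z = forward(x, W, b)
    return z.index(max(z))

# Hypothetical toy data: class 0 if the first feature dominates, else class 1.
toy_samples = [([1.0, 0.0], 0), ([0.9, 0.2], 0), ([0.1, 0.8], 1), ([0.0, 1.0], 1)]
W, b = train(toy_samples, input_dim=2, output_dim=2)
predictions = [predict(x, W, b) for x, _ in toy_samples]
```

The two lines marked "chain rule" are exactly the derivative bookkeeping that a framework derives automatically from the computation graph, which becomes far more valuable once the network has several layers.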

Similarly, we can define configurations of more complex networks, for example, for a two-layer network with a hidden layer:

    hidden_dim = 5
    W1 = parameter(shape=(input_dim, hidden_dim), init=cntk.uniform(1))
    b1 = parameter(shape=(hidden_dim), init=cntk.uniform(1))
    y = cntk.sigmoid(times(features, W1) + b1)
    W2 = parameter(shape=(hidden_dim, output_dim), init=cntk.uniform(1))
    b2 = parameter(shape=(output_dim), init=cntk.uniform(1))
    z = times(y, W2) + b2

Using higher-level syntax, the same can be written more compactly in the form of a composition of layers:

    from cntk.initializer import glorot_uniform
    from cntk.layers import Dense, Sequential
    from cntk.ops import sigmoid

    z = Sequential([
        Dense(hidden_dim, init=glorot_uniform(), activation=sigmoid),
        Dense(output_dim, init=glorot_uniform(), activation=None)
    ])(features)

This part also discusses the important issue of overfitting, and how to choose the network “capacity” to match the amount of training data so that overfitting is avoided.
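The layer composition shown above can be imitated in a few lines of plain Python, which also gives a rough handle on network “capacity” by counting trainable parameters. This is only a sketch: `DenseLayer` and `sequential` are hypothetical stand-ins, not the CNTK classes, the Glorot-style initialization range is an assumption, and the dimensions (4 inputs, 3 outputs) and the sample input are made up for illustration.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

class DenseLayer:
    """Hypothetical fully connected layer: y = activation(xW + b)."""

    def __init__(self, in_dim, out_dim, activation=None, rng=None):
        rng = rng or random.Random(0)
        # Glorot-style uniform range (assumed, for illustration only).
        limit = math.sqrt(6.0 / (in_dim + out_dim))
        self.W = [[rng.uniform(-limit, limit) for _ in range(out_dim)]
                  for _ in range(in_dim)]
        self.b = [0.0] * out_dim
        self.activation = activation

    def __call__(self, x):
        z = [sum(x[i] * self.W[i][j] for i in range(len(x))) + self.b[j]
             for j in range(len(self.b))]
        return [self.activation(v) for v in z] if self.activation else z

    def parameter_count(self):
        # One weight per input/output pair plus one bias per output.
        return len(self.W) * len(self.b) + len(self.b)

def sequential(layers):
    """Compose layers left to right into a single callable model."""
    def model(x):
        for layer in layers:
            x = layer(x)
        return x
    return model

input_dim, hidden_dim, output_dim = 4, 5, 3  # assumed sizes
rng = random.Random(42)
layers = [
    DenseLayer(input_dim, hidden_dim, activation=sigmoid, rng=rng),
    DenseLayer(hidden_dim, output_dim, activation=None, rng=rng),
]
model = sequential(layers)
output = model([5.1, 3.5, 1.4, 0.2])
total_parameters = sum(layer.parameter_count() for layer in layers)
```

With these sizes the network has (4·5 + 5) + (5·3 + 3) = 43 trainable parameters. Comparing that number with the number of training examples is one simple, informal way to judge whether a network is likely to be too large for the data.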

Part II

[0:00] Recognizing handwritten digits.

[0:04] Convolutional neural networks for image recognition.

[0:49] Lab: improving recognition accuracy with a convolutional network.

[1:07] Subtleties of training: batch normalization, dropout, etc. Complex network architectures for solving specific problems. Autoencoders. The use of neural networks in real projects.

The main idea of this part is to show how basic blocks (neural layers, activation functions, etc.) can be assembled into more complex neural network architectures for solving specific tasks, using image analysis as the example. Toolkits such as Cognitive Toolkit (CNTK), TensorFlow, and Caffe provide basic functional blocks that can then be combined with one another.

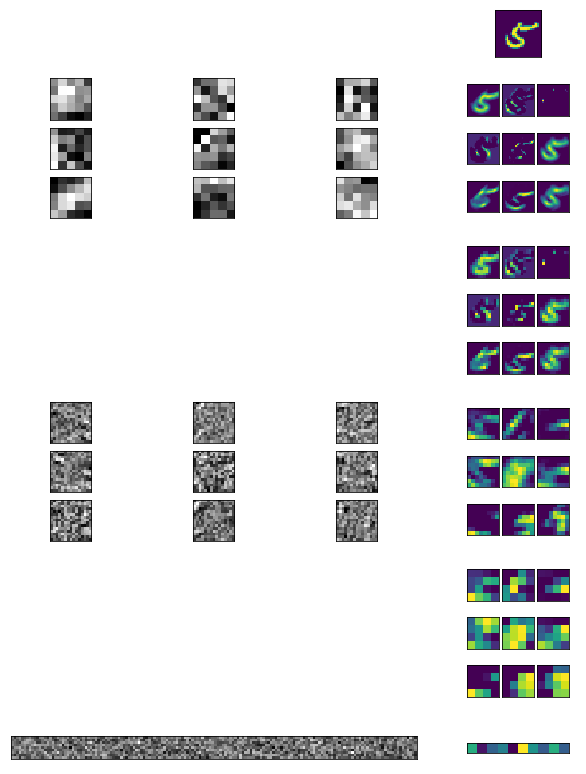

For example, convolutional neural networks (CNNs), which are used for image analysis, slide a small window (3x3 or 5x5) across the image. The contents of the window are fed to the input of a small neural network that extracts important features from that fragment of the image. These features form the “image” of the next layer, which is processed in the same way, so each subsequent layer is responsible for higher-level features. By combining many such layers (tens or hundreds), we get networks that can recognize objects in an image with an accuracy not far below that of a human. Here, for example, is how a multilayer convolutional network sees the digit 5:
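The sliding-window operation described above can be sketched in a few lines of plain Python. The 5x5 image and the hand-picked vertical-edge kernel are assumptions for illustration; in a real CNN the kernel weights are learned during training, and, as in most deep learning frameworks, the operation shown is strictly a cross-correlation (the kernel is not flipped).

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image (stride 1, no padding)."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the k x k window whose top-left corner is (r, c).
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(k) for j in range(k)))
        feature_map.append(row)
    return feature_map

# Hypothetical 5x5 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked 3x3 kernel that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = convolve2d(image, vertical_edge)
# feature_map == [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
```

The resulting 3x3 feature map is large where the window straddles the edge and zero where the window covers a uniform region, which is the sense in which a convolutional layer "extracts features" from image fragments.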

In the second half of the video, Andrei Ustyuzhanin, who leads the Yandex-CERN project and the Lambda laboratory at HSE, talks about techniques for building more complex neural network configurations for specific tasks, as well as optimization techniques for training deep networks, without which a significant increase in the number of layers and parameters would be impossible.

Part III

[0:00] Recurrent neural networks.

[1:09] Training neural networks in the Microsoft Azure cloud with Azure Batch.

[1:36] Recognizing “cat vs. dog” images and answering questions.

The most important topic in this section is recurrent neural networks, presented by Mikhail Burtsev, head of the deep learning laboratory at MIPT. This is a network architecture in which the output of the network is fed back to it as an input. This makes it possible to analyze variable-length sequences: for example, one character of a text or one word of a sentence is processed per pass, while the “meaning” is stored in the recurrent “state” that is passed along. Machine translation systems, text generators, time series forecasting systems, and so on are built on this approach.
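The recurrent idea, feeding the network's own state back in at each step, can be illustrated with a minimal untrained cell in plain Python. This is a sketch under stated assumptions: the Elman-style update `tanh(xWx + hWh + b)`, the random weights, the one-hot character encoding, and all names are hypothetical and are not the model used in the course.

```python
import math
import random

def make_rnn_cell(input_dim, state_dim, seed=0):
    """Build a hypothetical cell: new_state = tanh(x*Wx + state*Wh + b)."""
    rng = random.Random(seed)
    Wx = [[rng.uniform(-0.5, 0.5) for _ in range(state_dim)]
          for _ in range(input_dim)]
    Wh = [[rng.uniform(-0.5, 0.5) for _ in range(state_dim)]
          for _ in range(state_dim)]
    b = [0.0] * state_dim

    def step(x, state):
        return [math.tanh(sum(x[i] * Wx[i][j] for i in range(input_dim))
                          + sum(state[k] * Wh[k][j] for k in range(state_dim))
                          + b[j])
                for j in range(state_dim)]
    return step

def one_hot(char, alphabet):
    """Encode a character as a vector with a single 1.0."""
    return [1.0 if char == a else 0.0 for a in alphabet]

def encode(text, step, alphabet, state_dim):
    """Feed the text one character per pass, carrying the state forward."""
    state = [0.0] * state_dim
    for char in text:
        state = step(one_hot(char, alphabet), state)
    return state

alphabet = "abcdefghijklmnopqrstuvwxyz "
state_dim = 8
step = make_rnn_cell(len(alphabet), state_dim)

# Inputs of different lengths are reduced to states of the same size.
short_state = encode("cat", step, alphabet, state_dim)
long_state = encode("a cat sat on a mat", step, alphabet, state_dim)
```

Both calls return a vector of 8 numbers regardless of the input length, which is what allows the same network to handle sequences of any length. In a trained model the weights would be learned so that this state captures useful information about the sequence seen so far.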

Here, for example, is the kind of text that the neural network from our example generates after 3 minutes of training on texts from the English-language Wikipedia:

demaware boake associet ofte

russing turking or a to t

jectional ray store one country or sentio edaph lawe

t arrell out prodication

zer to a revereing

In place of a conclusion

I hope we have inspired you to study neural networks! After watching the course, it's time to come up with a task or project of your own: for example, build a generator of poems or pseudo-scientific articles, or detect photographs in which people have their eyes closed. The main thing is to try putting the knowledge into practice!

You can train simple networks directly in Azure Notebooks, use the Data Science Virtual Machine in the Azure cloud, or install CNTK on your own computer. More information about CNTK, including examples of training models, is available at http://cntk.ai.

I wish all readers a warm autumn and will be glad to answer all your questions in the comments!

Source: https://habr.com/ru/post/336890/
