In search of the lost gigabit, or a bit about windows in TCP

The reason for writing this article was laziness, which, as everyone knows, is the engine of progress and has witnessed the birth of many remarkably labor-saving things.

In my case, I was too lazy to explain to a client for the thousandth time why he rents a point-to-point channel, with Ethernet 1 Gbit/s written in black and white, yet when he measures it, it comes out a little less.

Where is the rest? Why the shortfall? Where did the internet leak out of the wire? Or was it never all there in the first place?

Well, let's take a look, and while we are at it, write a note that we will not be ashamed to show to the thousand-and-first client who comes up short on speed.

Important

If you are a network engineer, do not read this article. It will offend all your feelings. It is written in the simplest and most accessible language, with many omissions.

The story of the window. Windows to the world

So, to understand where the speed goes, we first have to figure out how TCP ensures reliable delivery.

As we all know, TCP has a last resort: when every other trick for delivering a frame to the recipient has failed, it simply resends the damaged or lost frame.

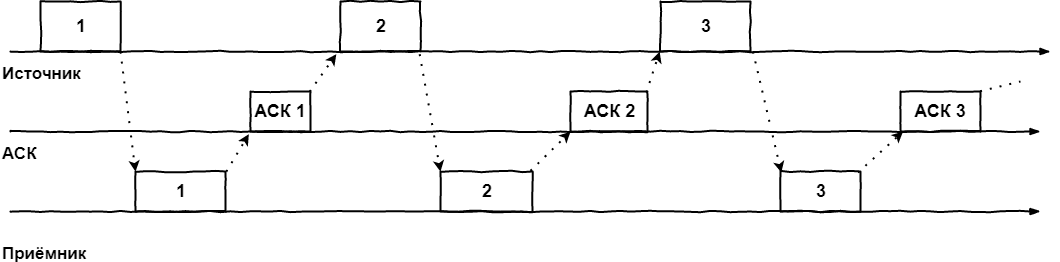

What it looks like in the simplest case:

- The transmitter sends a frame and starts a timer. Before the timer expires, the transmitter must receive either an acknowledgment (ACK) that the frame arrived successfully or an explicit indication (NACK) that the frame was corrupted or lost on the way.

- If no ACK arrives before the timer expires, the frame is sent again.

- If a NACK arrives, the source retries sending the frame.

- And if the ACK is received, the source sends the next frame.

Graphically, this algorithm is easily represented on the timeline:

This algorithm is called the idle-source method (better known as stop-and-wait), and the name immediately gives away its main disadvantage: catastrophically inefficient use of the communication channel. Technically, nothing prevents the transmitter from sending the second frame right after the first, but we force it to wait for an ACK or NACK to arrive or for the timer to expire.
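To get a feel for how wasteful this is, here is a rough back-of-the-envelope sketch. It assumes an idealized link with no losses and an ACK of negligible size; the function name and the example numbers (1500-byte frame, 1 Gbit/s, 1 ms round trip) are illustrative, not taken from any real measurement.

```python
def stop_and_wait_utilization(frame_bits: int, link_bps: float, rtt_s: float) -> float:
    """Fraction of time the link is busy when only one frame is sent per round trip."""
    transmit_s = frame_bits / link_bps  # time to put one frame on the wire
    cycle_s = transmit_s + rtt_s        # then sit idle until the ACK comes back
    return transmit_s / cycle_s


# Illustrative numbers only: 1500-byte frame, 1 Gbit/s link, 1 ms round trip.
utilization = stop_and_wait_utilization(1500 * 8, 1e9, 1e-3)
print(f"{utilization:.2%} of the link is used")
```

With these assumed numbers the link is busy only about 1% of the time; the rest is spent waiting.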

The key point is that sending frames and receiving ACKs can proceed independently of each other. The sliding window method grew out of this idea.

The first window. Sliding. Theoretical.

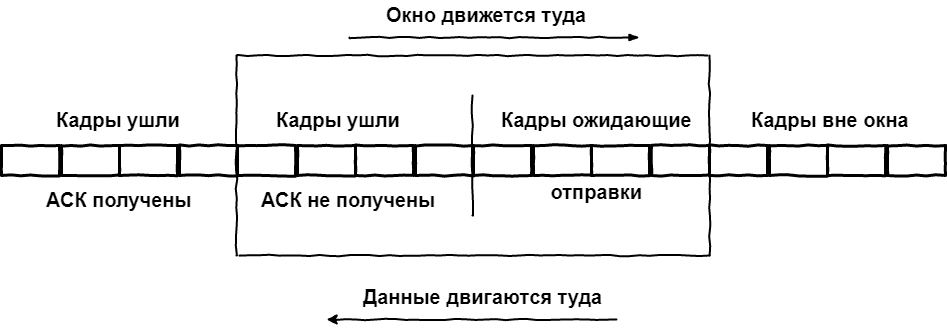

Having seen the drawbacks of the previous method, the natural idea is to let the source transmit packets at the maximum rate it can manage, without waiting for confirmation from the receiver. The number of unconfirmed packets is not unlimited, though: it is bounded by a buffer called the window, whose size is the number of frames that may be transmitted without waiting for acknowledgments.

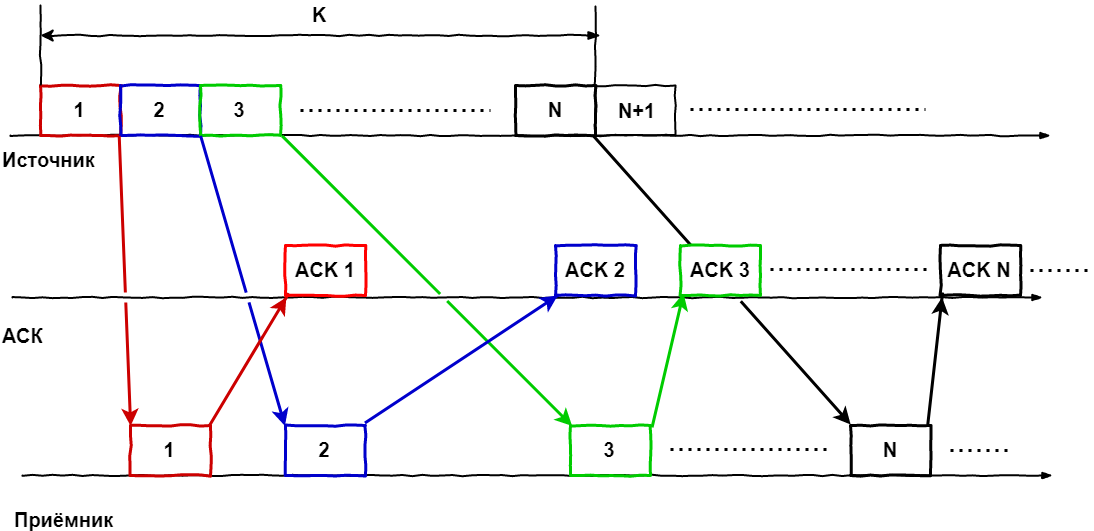

Let's go back to the pictures:

For clarity, consider a window of N frames that at some instant holds frames 1 ... N: we sent a batch of frames, each one reached the recipient as best it could, and the recipient processed them and sent an acknowledgment for each.

Now let's complicate matters by bringing time into the picture.

When the ACK for the first frame arrives, the last frame in the window has not even been sent yet, but since we know the first delivery succeeded, we can add the next frame to the window: the window shifts and now covers frames 2 ... N + 1. When the ACK for the second frame arrives, the window shifts again: 3 ... N + 2. And so on. The window "slides" along the stream of packets. Or the packets slide through it, whichever you find easier to picture.

Thus, all frames fall into three groups:

- The past. Frames that were sent and acknowledged.

- The harsh present. Frames that have been sent but not yet acknowledged, plus frames that are already in the window but still queued for sending.

- The bright future. Frames waiting in line to enter the window.

And how does this affect the speed of the connection, you ask?

As the graph shows, a necessary condition for maximum utilization of the communication channel is that acknowledgments keep arriving regularly while the window still has frames left to send. ACKs do not have to arrive in strict order; what matters is that the ACK for the first frame in the window arrives before the last frame has been sent. Otherwise the window stops moving and the source sits idle, and if the retransmission timer expires in the meantime, the frame is sent again.
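The condition above can be turned into rough arithmetic. The sketch below assumes an idealized link with fixed-size frames, no losses, and ACKs of negligible size; the function names and the example numbers are illustrative.

```python
import math


def min_window_frames(frame_bits: int, link_bps: float, rtt_s: float) -> int:
    """Smallest window (in frames) that keeps the sender busy until the first ACK."""
    transmit_s = frame_bits / link_bps
    # The ACK for the first frame is back transmit_s + rtt_s after sending starts.
    # Until then the sender may only send frames that fit in the window.
    return math.ceil((transmit_s + rtt_s) / transmit_s)


def window_utilization(window_frames: int, frame_bits: int,
                       link_bps: float, rtt_s: float) -> float:
    """Fraction of the link used with a window of the given size (capped at 1)."""
    transmit_s = frame_bits / link_bps
    return min(1.0, window_frames * transmit_s / (transmit_s + rtt_s))


# Illustrative numbers only: 1500-byte frames, 1 Gbit/s link, 1 ms round trip.
needed = min_window_frames(1500 * 8, 1e9, 1e-3)
half = window_utilization(needed // 2, 1500 * 8, 1e9, 1e-3)
print("frames needed in the window:", needed)
print(f"utilization with half of that: {half:.0%}")
```

A window of one frame reduces this to the idle-source case; anything below the computed minimum leaves the link idle for part of every round trip.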

It is also worth noting that in most implementations of the sliding window algorithm, acknowledgments are not sent for every packet but for a whole batch of received packets at once (a cumulative ACK; not to be confused with Selective ACK, which reports individual blocks that arrived out of order). This further increases effective channel utilization by reducing the amount of service traffic.

So, what is the bottom line? Which parameters significantly affect the efficiency of data transfer between two points?

There are two of them:

- The window size, taken as the smaller of two values: the window advertised by the recipient (the size of its buffer) and CWND, the congestion window that the sender determines from how the network behaves, RTT included.

- RTT (round-trip time) itself: the time it takes the data to reach the recipient plus the time it takes the acknowledgment to come back.

And we must not transmit more than the recipient is willing to accept or the network is able to carry.
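These two parameters give a simple upper bound on what one session can achieve: at most one effective window per round trip. A minimal sketch, assuming the window is counted in bytes and ignoring losses and protocol overhead; the function names and the example numbers are illustrative.

```python
def max_throughput_bps(rwnd_bytes: int, cwnd_bytes: int, rtt_s: float) -> float:
    """Upper bound on throughput: one effective window per round trip."""
    window_bytes = min(rwnd_bytes, cwnd_bytes)  # the smaller window wins
    return window_bytes * 8 / rtt_s


def window_needed_bytes(link_bps: float, rtt_s: float) -> float:
    """Window that keeps a link of the given speed full (bandwidth-delay product)."""
    return link_bps * rtt_s / 8


# Illustrative numbers only: 64 KiB receive window, generous CWND, 10 ms RTT.
limit = max_throughput_bps(64 * 1024, 10 * 1024 * 1024, 0.010)
print(f"at most {limit / 1e6:.1f} Mbit/s")
print(f"window needed for 1 Gbit/s: {window_needed_bytes(1e9, 0.010) / 1024:.0f} KiB")
```

With these assumed numbers a single session tops out at roughly 52 Mbit/s, no matter that the link itself is a gigabit.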

Let's imagine that we have a super reliable network where packets almost never get lost or corrupted. In such a network it pays to have the largest window possible, which minimizes the pauses between sending frames.

In a bad network the situation is reversed: with frequent losses and many corrupted packets, what matters is delivering each one safe and sound, so we shrink the window to lose as little traffic as possible and avoid costly retransmissions. The price we pay is speed.

And as we all know, reality is a mixture of the two extremes, so in a real network the window size is variable. Moreover, it can be changed either unilaterally by either side or by mutual agreement.

Intermediate summary. As we can see, even without tying ourselves to a specific protocol, it is technically extremely hard to utilize a channel 100%: like it or not, even in laboratory conditions there will be delays between frames, however small, and they keep us from the cherished 100% bandwidth utilization.

And what about a real network? In this second part we will look at one implementation, using TCP as the example.

The second window. TCP implementation

What is remarkable about TCP? Built on top of unreliable IP datagrams, it provides reliable message delivery.

When establishing a logical connection, the TCP modules exchange with each other the following parameters:

- The recipient's buffer size, which is the upper limit of the window size.

- The initial sequence number of the byte from which the data stream will begin in this session.

Note an important feature right away: in TCP the window is measured not in frames but in bytes. That is, the window is a set of numbered bytes from the unstructured data stream handed down by the upper-layer protocols. It sounds cumbersome, but it is simpler to keep track of.

TCP is full-duplex, which means that each side acts as both sender and receiver at any time. So each side needs a buffer for received segments and a buffer for segments that have not yet been sent. In addition, it needs a buffer for copies of segments that have been sent but not yet acknowledged.

By the way, this leads to a feature many people overlook: network conditions can differ in the two directions, which means different window sizes and different throughput.

In this situation, the recipient's capabilities call the tune. When establishing a connection, the two sides send each other their receive windows. Each side remembers the other's window size and so knows how many bytes it can send without waiting for an ACK.

Then the mechanisms described in the first part kick in: sending segments, waiting for acknowledgments, resending when necessary, and so on. An important difference from the theory is that several techniques are combined. For example, segments that arrive in order are accepted automatically, and this is interrupted only when the order of arrival is broken. Restoring the order of segments is one of the jobs of the receiver's buffer. And if a gap is detected in the stream, the TCP module can request the lost segment again.

A couple of words about the copy buffer on the sender. Every segment in this buffer has a timer. If the corresponding ACK arrives before the timer expires, the segment is deleted. If not, it is sent again. It may happen that a segment is resent on timeout just before its ACK arrives; in that case the recipient simply discards the duplicate.

TCP performance depends on this timeout. Too short, and there will be unnecessary retransmissions. Too long, and we get idle time waiting for an ACK that will never come.

In fact, TCP determines the timeout with a fairly complex adaptive algorithm that takes into account speed, reliability, line length, and many other factors. In general terms it works like this:

- For each segment sent, the time until its ACK arrives is measured.

- The measured values are averaged with weights that increase from older samples to newer ones, so that recent data has more influence on the result.

- The timeout value is then derived from the average obtained in the previous step. If the measurements vary widely, their variance is also taken into account.
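The steps above resemble the standard retransmission-timeout estimator described in RFC 6298. Below is a minimal sketch of it, assuming the usual constants (weights of 1/8 and 1/4, timeout equal to the smoothed RTT plus four times the variation) and ignoring clock granularity and the minimum-timeout floor. The class and method names are made up for illustration.

```python
class RtoEstimator:
    """Simplified retransmission-timeout estimator in the spirit of RFC 6298."""

    ALPHA = 1 / 8  # weight of the newest sample in the smoothed RTT
    BETA = 1 / 4   # weight of the newest deviation in the RTT variation

    def __init__(self) -> None:
        self.srtt = None    # smoothed round-trip time, seconds
        self.rttvar = None  # smoothed RTT variation, seconds

    def update(self, sample_s: float) -> float:
        """Feed one measured RTT and return the new timeout in seconds."""
        if self.srtt is None:
            # First measurement: no history to average with yet.
            self.srtt = sample_s
            self.rttvar = sample_s / 2
        else:
            # Exponential averaging: newer samples weigh more than older ones.
            deviation = abs(self.srtt - sample_s)
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * deviation
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * sample_s
        # The larger the variation, the more headroom is added to the average.
        return self.srtt + 4 * self.rttvar


# Illustrative samples only: a steady 100 ms RTT with one 300 ms spike.
estimator = RtoEstimator()
for sample in (0.100, 0.100, 0.300, 0.100):
    print(f"sample {sample:.3f} s, timeout {estimator.update(sample):.3f} s")
```

Note how a single slow sample raises the timeout mostly through the variation term rather than through the average itself.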

But we keep talking about the send window, although the receive window is the more interesting entity. The windows at the two ends of a connection usually differ in size. In a world where client-server technology has won, one cannot expect the client to handle a window as large as the one the server can.

In the same way, its size can change dynamically depending on the state of the network, but here a wrong choice carries a “double” penalty. If more data arrives than the TCP module can process, it is discarded, the timer fires at the source, the source resends the data, it again does not fit into the window, and so on.

On the other hand, a window that is too small means the channel is used at the rate of one segment at a time. So the TCP developers proposed a scheme in which the window is set relatively large when the connection is established and, if problems arise, is halved at each step. That looks rather odd, which is why TCP implementations ended up following the more usual logic: start with a small window and, if the network copes, begin increasing it.
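The "start small, grow while the network copes, halve on trouble" logic can be shown with a toy model. This is a deliberately simplified sketch, not real TCP congestion control: it assumes the window doubles after every trouble-free round trip and is halved after a lossy one, and it leaves out the slow-start threshold and the linear growth phase. The function names are hypothetical.

```python
def next_window(window: int, loss: bool, max_window: int) -> int:
    """Window (in segments) for the next round trip in the toy model."""
    if loss:
        return max(1, window // 2)      # trouble: halve, but never go below one segment
    return min(max_window, window * 2)  # all good: double, up to the advertised limit


def evolve(initial: int, losses: list, max_window: int) -> list:
    """Window size before each round trip, given which round trips saw a loss."""
    history = [initial]
    for loss in losses:
        history.append(next_window(history[-1], loss, max_window))
    return history


# Illustrative scenario only: four clean round trips, one loss, two clean ones.
history = evolve(1, [False, False, False, False, True, False, False], 64)
print(history)
```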

But the window size can be influenced not only by the receiving side but also by the sender. If ACKs regularly arrive after the timers have expired and segments often have to be resent, the source can set its own value for the window, and the rule of the minimum applies: the smaller value wins, no matter who chose it.

One more scenario remains to be considered: congestion of the TCP connection. In this state, packets queue up on intermediate and end nodes. The receiver then has two options:

- Reduce the size of the window.

- Refuse to receive altogether by setting the window size to zero.

The connection cannot be shut off completely this way, though. There is a special urgent pointer that forces the port to accept a data segment even if the existing buffer has to be cleared for it. And a sender that has received a zero window does not lose hope: it periodically sends probe requests to the receiver, and once the receiver is ready for new data, the reply carries a new, nonzero window size.

Summary

Captain Obvious suggests: if a single TCP session cannot, in principle, deliver 100% channel utilization, use two. If we are talking about a client who rents a point-to-point channel and has brought up a GRE tunnel over it, let him bring up a second one. So that the two do not fight over bandwidth, we route the important traffic into the first tunnel and any junk into the second, with its speed throttled hard. That is just enough to use up the leftover bandwidth that the first session cannot take for purely technical reasons.

Source: https://habr.com/ru/post/336780/