Go on devices with little memory

This is a translation of an article by a Samsara developer about using Go on a vehicle router with 170MB of RAM.

At Samsara, we develop vehicle routers that provide real-time engine telemetry via the CAN bus, data from wireless temperature sensors via Bluetooth Low Energy, and Wi-Fi connectivity. These routers have very limited resources: unlike servers with 16GB of RAM, our routers have only 170MB and a single core.

Our new CM11 camera, mounted in the vehicle cab.

Earlier this year, we released a video camera that mounts inside the cab to help our customers improve vehicle safety. The camera is essentially a peripheral of our router, and it generates a lot of data: it records 1080p H.264 video at 30 frames per second.

Our first implementation of the camera service, which ran as a separate process on the router, consumed 60MB, almost half of the available memory, but we knew we could do better. We buffered only 3 seconds of the 5Mbps video stream, yet 60MB was enough to hold as much as 90 seconds of video in memory, so we set out to find where we could reduce memory usage.

Implementing the camera service

The camera service configures the camera's recording parameters, then receives and saves the video. The saved H.264 video is later converted to MP4 and uploaded to the cloud in the background.

We decided to write the service entirely in Go so that it would integrate easily with the rest of our system components. This let us write the first implementation quickly, but it consumed half of the device's available memory, and we started seeing kernel panics caused by running out of memory. Our goals were:

- keep the process's Resident Set Size (RSS) at 15MB or less, so that other services also have memory for their tasks

- leave at least 20% of the total memory free to allow periodic peaks in memory usage
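One way to track the RSS goal is to read the kernel's own accounting. This sketch assumes a Linux-based router, where /proc/self/status reports VmRSS in kilobytes; the 15MB budget comes from the goal above, and parseVmRSS is a hypothetical helper.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strconv"
	"strings"
)

// rssBudgetKB is the 15MB RSS target, in kilobytes.
const rssBudgetKB = 15 * 1024

// parseVmRSS extracts the VmRSS value (in kB) from the contents of
// /proc/<pid>/status. It returns an error if the field is missing.
func parseVmRSS(status string) (int64, error) {
	sc := bufio.NewScanner(strings.NewReader(status))
	for sc.Scan() {
		line := sc.Text()
		if !strings.HasPrefix(line, "VmRSS:") {
			continue
		}
		// Example line: "VmRSS:	   12345 kB"
		fields := strings.Fields(strings.TrimPrefix(line, "VmRSS:"))
		if len(fields) == 0 {
			return 0, fmt.Errorf("malformed VmRSS line: %q", line)
		}
		return strconv.ParseInt(fields[0], 10, 64)
	}
	if err := sc.Err(); err != nil {
		return 0, err
	}
	return 0, fmt.Errorf("VmRSS not found")
}

func main() {
	data, err := os.ReadFile("/proc/self/status")
	if err != nil {
		fmt.Println("cannot read /proc/self/status (not Linux?):", err)
		return
	}
	rss, err := parseVmRSS(string(data))
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("RSS: %d kB (budget %d kB, within budget: %v)\n",
		rss, rssBudgetKB, rss <= rssBudgetKB)
}
```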

Tuning buffer size

Our first attempt to reduce memory usage was simply to buffer less in memory. Since we initially buffered 3 seconds, we tried not buffering at all and writing one frame at a time to disk. This did not work: the overhead of writing 20KB (the average frame size) 30 times per second lowered throughput and raised latency to the point where we could not keep up with the incoming stream.

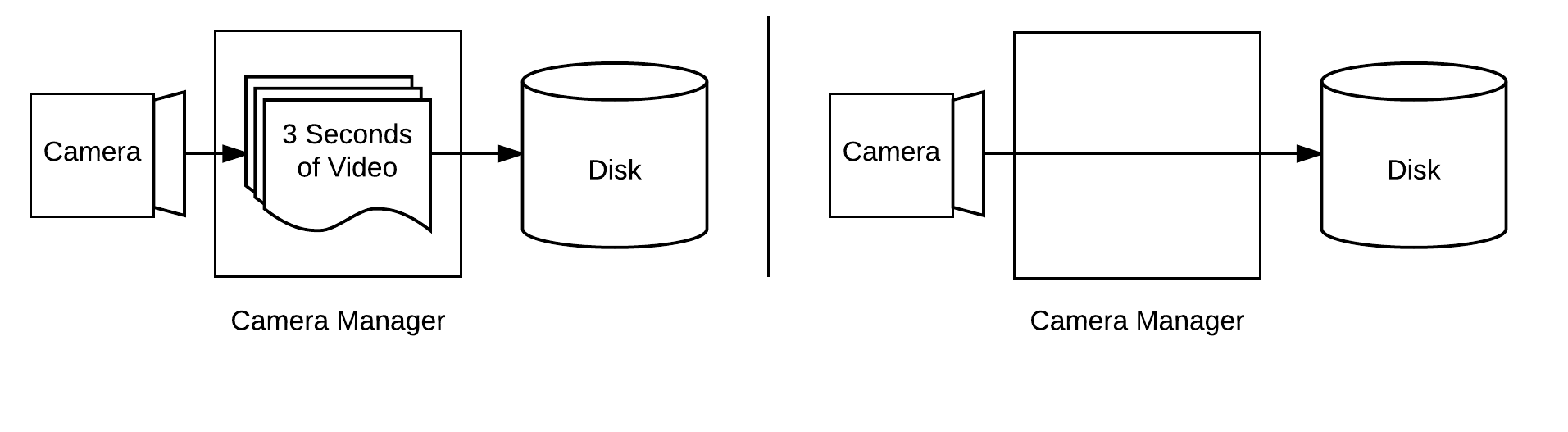

Left: the original buffering architecture, where we buffered about 90 frames of video before writing to disk. Right: the unbuffered approach, where each frame is written directly to disk.

Then we tried buffering a fixed number of bytes. We relied on the standard library's io.Writer abstraction and used bufio.Writer, which adds buffering to any io.Writer, even if the underlying writer does not buffer on its own. This made it easy to specify exactly how many bytes to buffer.
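A minimal sketch of this idea: bufio.NewWriterSize wraps any io.Writer with a buffer of a chosen size. Here countingWriter is a hypothetical stand-in for the file on disk, and 64KB is the size the team eventually chose.

```go
package main

import (
	"bufio"
	"fmt"
	"io"
)

// countingWriter stands in for the file on disk: it discards the data but
// records how many Write calls reach it and how many bytes they carried.
type countingWriter struct {
	calls int
	bytes int
}

func (c *countingWriter) Write(p []byte) (int, error) {
	c.calls++
	c.bytes += len(p)
	return len(p), nil
}

// writeBuffered sends frames to dst through a bufio.Writer holding up to
// bufSize bytes, so dst sees a few large writes instead of one per frame.
func writeBuffered(dst io.Writer, bufSize int, frames [][]byte) error {
	bw := bufio.NewWriterSize(dst, bufSize)
	for _, frame := range frames {
		if _, err := bw.Write(frame); err != nil {
			return err
		}
	}
	// Flush pushes out whatever is still held in the buffer.
	return bw.Flush()
}

func main() {
	frames := make([][]byte, 30) // one second of video at 30 fps
	for i := range frames {
		frames[i] = make([]byte, 20*1024) // ~20KB per frame
	}
	cw := &countingWriter{}
	if err := writeBuffered(cw, 64*1024, frames); err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("%d frames reached the writer in %d calls (%d bytes)\n",
		len(frames), cw.calls, cw.bytes)
}
```

With a 64KB buffer, the 30 frames reach the underlying writer in 10 calls rather than 30, at the cost of holding up to 64KB in memory.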

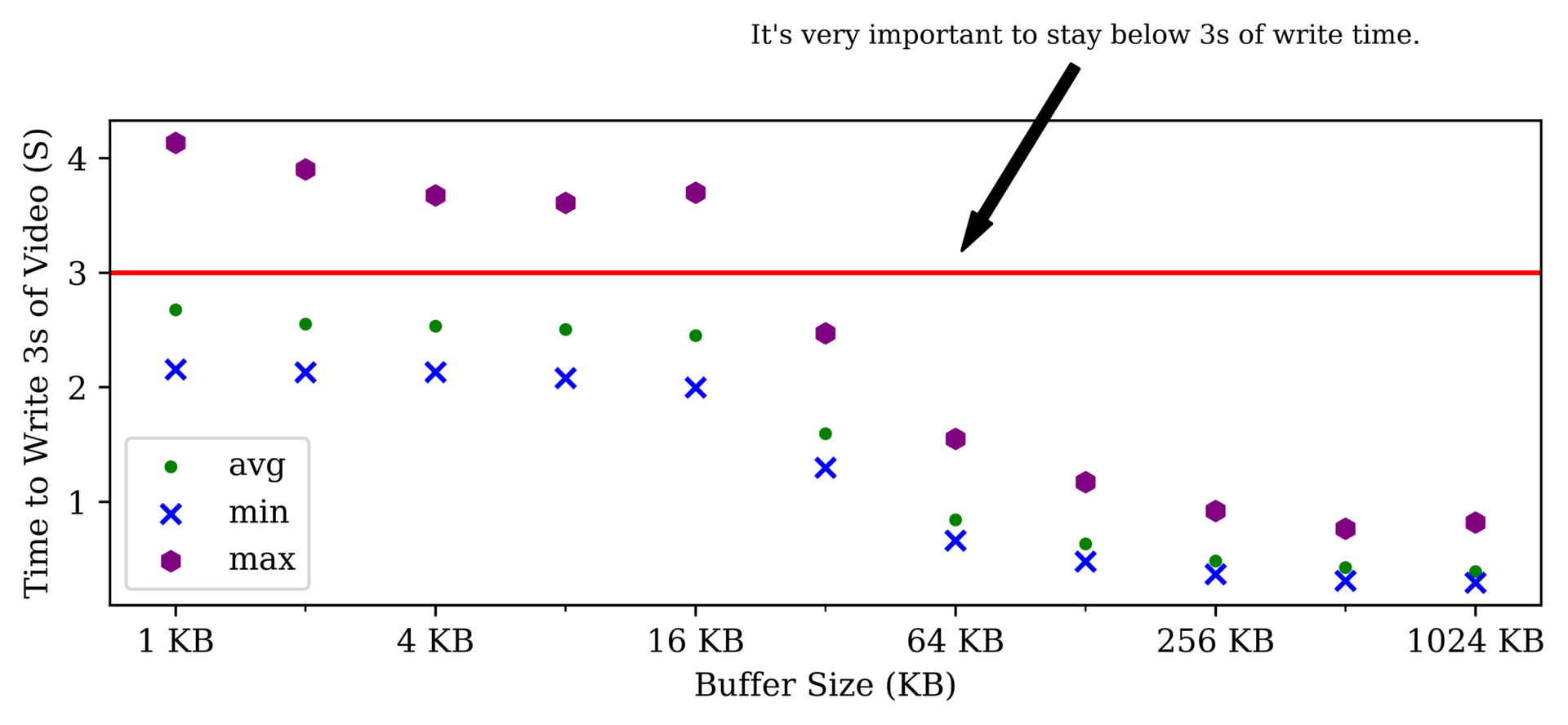

The next challenge was finding the right trade-off between buffer size and I/O latency. Too large a buffer wastes memory; too small a buffer spends too much time on reads and writes, and we fall behind the incoming video from the camera. We ran a simple benchmark that varied the buffer size from 1KB to 1MB and measured the time needed to write 3 seconds (about 1.8MB) of video to disk.
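A benchmark along these lines might look like the sketch below. The 20KB frame size, the ~1.8MB payload, and the use of f.Sync to include the storage device in the timing are assumptions, and timeOne is a hypothetical helper. On the router, the temporary file would need to live on the flash-backed filesystem rather than a RAM-backed /tmp.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"time"
)

// timeOne writes total bytes (rounded up to whole frames of frameSize) to a
// fresh temporary file in dir through a bufio.Writer of bufSize bytes and
// returns how long it took, including a final Sync.
func timeOne(dir string, total, frameSize, bufSize int) (time.Duration, error) {
	f, err := os.CreateTemp(dir, "bench-*")
	if err != nil {
		return 0, err
	}
	defer os.Remove(f.Name())
	defer f.Close()

	frame := make([]byte, frameSize)
	start := time.Now()
	bw := bufio.NewWriterSize(f, bufSize)
	for written := 0; written < total; written += frameSize {
		if _, err := bw.Write(frame); err != nil {
			return 0, err
		}
	}
	if err := bw.Flush(); err != nil {
		return 0, err
	}
	// Sync so the timing includes the storage device, not just the page cache.
	if err := f.Sync(); err != nil {
		return 0, err
	}
	return time.Since(start), nil
}

func main() {
	const total = 1_800_000     // ~3 seconds of video
	const frameSize = 20 * 1024 // average frame size
	for size := 1 << 10; size <= 1<<20; size <<= 1 {
		d, err := timeOne("", total, frameSize, size)
		if err != nil {
			fmt.Println("error:", err)
			return
		}
		fmt.Printf("%7d B buffer: %v\n", size, d)
	}
}
```

When the buffer is empty and a single frame is larger than the whole buffer, bufio.Writer passes the frame straight through to the file, so in this sketch buffers smaller than a frame behave much like the unbuffered approach.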

Buffer size vs. write time

The graph shows a clear inflection point around 64KB: a good choice that does not use much memory and is fast enough not to drop frames. (The sharp difference in timing comes from how the flash storage is implemented.) This buffering change reduced memory usage by a few megabytes, but still not below our target.

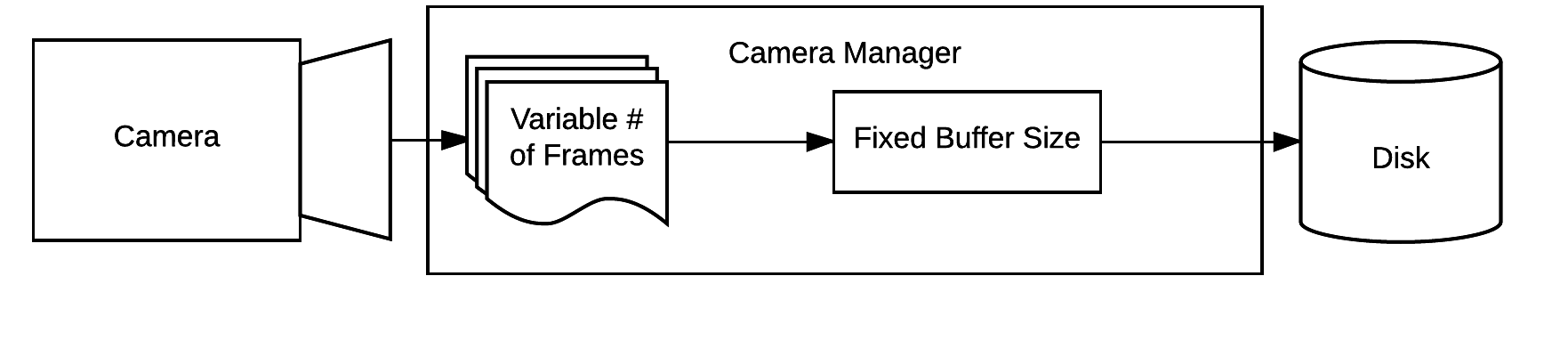

Final architecture: we always buffer 64KB before writing

The next step was profiling the service's memory usage with pprof, the profiler built into Go. We found that the process itself was actually using very little memory, but something suspicious was happening with the garbage collector.

Tuning garbage collector

Go's garbage collector is designed for low latency and simplicity. It has only one tuning parameter, GOGC: a percentage that controls how much the heap may grow, relative to the live data left after the previous collection, before the next collection is triggered. We experimented with this parameter but saw no significant effect, because memory freed by garbage collection was not returned to the operating system right away.

After digging into the Go source code, we discovered that the runtime returns unused memory pages to the operating system only once every 5 minutes. This is good for latency, because it avoids constant cycles of allocating and freeing memory when large buffers are created and discarded. But for a memory-sensitive application like ours, it was not the best option. Our use case is not very latency-sensitive, and we would rather accept higher latency in exchange for lower memory usage.

The 5-minute interval for returning memory to the operating system cannot be changed, but Go provides debug.FreeOSMemory, which forces a garbage collection and then returns as much memory as possible to the operating system. That was convenient. We changed our camera service to call this function every 5 seconds and saw RSS drop almost fivefold, to an acceptable 10-15MB! This reduction is not free, of course, but it suited our case: we had no real-time guarantees and could afford slightly higher latency from more frequent garbage collection pauses.

If you are wondering why this helped: we periodically upload videos to the cloud, which causes memory usage peaks of around 15MB. We can tolerate such peaks if they last a few seconds, but not much longer. A 30MB peak with a GOGC value of 200% means the garbage collector can allocate up to 60MB. After a peak, Go does not return the memory for 5 minutes, but by calling debug.FreeOSMemory we shortened this period to 5 seconds.

Conclusion

Adding new peripherals to our router put serious pressure on its memory limits. We experimented with different buffering strategies to reduce memory usage, but what ultimately helped was changing how Go's garbage collector returns memory to the operating system. This was a bit of a surprise for us: when developing in Go, you usually don't think about memory allocation and garbage collection, but under our constraints we had to. We cut memory consumption by a pleasant factor of five and ensured that the router always has 50MB of free RAM while uploading video to the cloud.


Source: https://habr.com/ru/post/336762/
