Deep dive into Windows Server containers and Docker - Part 2 - Implementation of Windows Server containers (translation)

Hi, Habr! Here is my translation of the article "Deep dive into Windows Server Containers and Docker - Part 2 - Underlying implementation of Windows Server Containers" by Cornell Knulst.

This article describes how Docker is implemented on Windows and how the container implementations in Windows and Linux differ.

Before that, it gives a general overview of what containers are and how they are similar to and different from virtual machines.

Introduction

With the release of Windows Server 2016 Technical Preview 3 on August 3, 2015, Microsoft brought container technology to the Windows platform. Container technology had been available on Linux since August 2008, but until then Microsoft operating systems did not support it. Because of Docker's success on Linux, Microsoft decided almost 3 years ago (the original article was published on May 6, 2017 - translator's note) to start implementing containers for Windows. Since September 2016, we have been able to work with the publicly available version of this new container technology in Windows Server 2016 and Windows 10. But what is the difference between containers and virtual machines? And how are Windows containers implemented internally? In this article, we dive into how containers are implemented on Windows.

Containers and virtual machines

Containers are often introduced with the phrase “Containers are lightweight virtual machines”. While this may help give people a basic understanding of what containers are, it is important to note that this statement is 100% wrong and can be very confusing. Containers are different from virtual machines, which is why I always describe containers as “something different from virtual machines” or even say “containers are NOT virtual machines”. But what is the difference? And why does it matter so much?

What do containers and virtual machines have in common?

Although containers are NOT virtual machines, the two do share three important characteristics:


- Isolated environment: Like virtual machines, containers guarantee isolation of the file system, environment variables, the registry, and processes between applications. This means that, like a virtual machine, each container creates an isolated environment for all applications inside it. When migrated, both containers and virtual machines carry along not only the applications inside them but also the context of those applications.

- Migration between hosts: A great advantage of working with virtual machines is that you can move virtual machine snapshots between hypervisors without having to change their contents. The same is true for containers: where virtual machines can be “moved” between different hypervisors, containers can be “moved” between different container hosts. When either type of artifact is “moved” to another host, the contents of the virtual machine or container remain exactly the same as on the previous host.

- Resource management: Another common feature is that the resources available to both containers and virtual machines (CPU, RAM, network bandwidth) can be limited to given values. In both cases, these limits can only be set from the host side, that is, by the container host or the hypervisor. Resource limits ensure that a container receives only a bounded share of resources, minimizing the risk that it affects the performance of other containers running on the same host. For example, a container can be restricted so that it cannot use more than 10% of the CPU.
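To make the "no more than 10% of the CPU" example concrete, here is a minimal sketch of a per-interval CPU budget. On Windows, job objects can express such a cap through the CpuRate field of their CPU rate control settings, in processor cycles per 10,000 (so 10% becomes 1000); the helper below mirrors that unit. The `CappedContainer` class, the `simulate` function and its 100 ms interval are illustrative assumptions, not how the Windows scheduler or Docker actually enforce limits.

```python
from dataclasses import dataclass


def percent_to_cpu_rate(percent: float) -> int:
    """Convert a CPU cap in percent to 'cycles per 10,000', the unit used
    by the CpuRate field of Windows job-object CPU rate control."""
    if not 0 < percent <= 100:
        raise ValueError("percent must be in (0, 100]")
    return round(percent * 100)


@dataclass
class CappedContainer:
    """Toy model of a container allowed `cpu_rate` cycles per 10,000."""
    name: str
    cpu_rate: int

    def budget(self, interval_ms: float) -> float:
        # CPU time the container may consume in one scheduling interval.
        return interval_ms * self.cpu_rate / 10_000


def simulate(container: CappedContainer, demand_ms: float,
             interval_ms: float = 100.0, intervals: int = 10) -> float:
    """Total CPU time granted when the container asks for `demand_ms`
    in every interval (the 100 ms interval is a hypothetical choice)."""
    granted = 0.0
    for _ in range(intervals):
        # Once the budget for this interval is used up, the container is throttled.
        granted += min(demand_ms, container.budget(interval_ms))
    return granted


c = CappedContainer("web", percent_to_cpu_rate(10))
print(c.cpu_rate)                  # 1000
print(simulate(c, demand_ms=100))  # 100.0 ms out of 1000 ms, i.e. 10%
```

A container that wants the whole CPU is held to its budget, while one that stays under its budget is unaffected, which is exactly the protection for neighboring containers described above.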

The difference between containers and virtual machines

Although there are similarities between containers and virtual machines, there are also some important differences between them.


- Virtualization level: Containers are a new level of virtualization. The history of virtualization began with concepts such as virtual memory and virtual machines, and containers are the next step in this trend. Where virtual machines provide hardware virtualization, containers provide OS virtualization. This means that while hardware virtualization lets a virtual machine believe that its hardware resources belong to it alone, OS virtualization lets a container believe that the entire OS belongs to it alone. This difference matters: containers, for example, do not have their own kernel mode. For this reason, containers do not appear as virtual machines, and the operating system does not recognize them as virtual machines either (you can check this yourself with the PowerShell Get-VM command). A good analogy for this difference is houses (virtual machines) versus apartments (containers). Houses (virtual machines) are completely self-sufficient and provide protection against uninvited guests. Each house also has its own infrastructure: plumbing, heating, electricity, and so on. Apartments (containers) also provide protection from uninvited guests, but they are built on shared infrastructure. In an apartment building (the Docker host), plumbing, heating, electricity, and so on are shared. Although the two have some characteristics in common, they are different entities.

- OS interaction: Another important difference between containers and virtual machines is the way they interact with kernel mode. While virtual machines have a full OS (with a dedicated kernel mode), containers share the “OS (more precisely, kernel mode)” with other containers and with the container host. As a result, containers must match the OS of the container host, while a virtual machine can run any OS (version and type) it wants. Where virtual machines can run Linux on a Windows hypervisor, container technology does not allow a Linux container to start on a Windows container host, and vice versa. (On Windows 10 you can run Linux containers, but they run inside a Linux virtual machine - translator's note.)

- Growth model: Containers share the resources of the container host and are created from an image that contains exactly what your application needs to run. You start with the basics and add what is needed. Virtual machines are built the other way around: most often we start with a full operating system and, depending on the application, remove the things that are not needed.
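The consequence of sharing the host kernel can be expressed as a simple compatibility rule. The sketch below is a simplified model: the `os` field mirrors the OS platform that Docker image configurations record, but the `Host`, `ContainerImage` and `VirtualMachine` types are hypothetical, and the check deliberately ignores Windows version matching and the Linux-VM route mentioned in the translator's note.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    kernel_os: str  # OS of the host kernel, e.g. "windows" or "linux"


@dataclass(frozen=True)
class ContainerImage:
    os: str  # OS the binaries in the image were built for


@dataclass(frozen=True)
class VirtualMachine:
    guest_os: str  # a VM boots its own kernel


def can_run_container(host: Host, image: ContainerImage) -> bool:
    # A container has no kernel mode of its own: its processes call into
    # the host kernel, so the image OS must match the host OS.
    return image.os == host.kernel_os


def can_run_vm(host: Host, vm: VirtualMachine) -> bool:
    # The hypervisor virtualizes hardware and the guest brings its own
    # kernel, so in this simplified model any named guest OS is accepted.
    return bool(vm.guest_os)


windows_host = Host("windows")
print(can_run_container(windows_host, ContainerImage("windows")))  # True
print(can_run_container(windows_host, ContainerImage("linux")))    # False
print(can_run_vm(windows_host, VirtualMachine("linux")))           # True
```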

Windows Server Containers

Now that we know the differences between virtual machines and containers, let's dive deeper into the architecture of Windows Server containers. To explain how containers are implemented internally in the Windows operating system, you need to be familiar with two important concepts: user mode and kernel mode. These are two different modes that the processor constantly switches between, depending on the type of code being executed.

Kernel mode

Kernel mode was created for drivers that need unrestricted access to hardware. Normal programs (user mode) have to call the operating system API to access hardware or memory. Code running in kernel mode has direct access to these resources and shares the same memory, or virtual address space, with the operating system and other drivers in the kernel. Executing code in kernel mode is therefore very risky: data belonging to the operating system or another driver may be corrupted if your kernel-mode code accidentally writes to the wrong virtual address. If a kernel-mode driver crashes, the entire operating system crashes. That is why as little code as possible should run in kernel mode, and it is exactly the reason user mode was created.

User mode

In user mode, code always runs in a separate process (user space), which has its own set of memory areas (its own virtual address space). Since each application's virtual address space is private, one application cannot change data belonging to another. Each application runs in isolation, and if it crashes, the crash is limited to that application alone. Besides being private, the virtual address space in user mode is also limited: a processor running in user mode cannot access virtual addresses reserved for the operating system. Restricting the virtual address space of a user-mode application prevents it from changing, and possibly damaging, critical operating system data.
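The two rules from the kernel-mode and user-mode descriptions, private user address spaces and a shared kernel range that user-mode code cannot touch, can be illustrated with a toy page-table model. The 2 GB user/kernel split mirrors the default layout of 32-bit Windows; the class names, the on-demand frame allocation and the `AccessViolation` exception are simplifications chosen for this sketch, not real Windows structures.

```python
PAGE_SIZE = 4096
KERNEL_BASE = 0x8000_0000  # 32-bit Windows default: upper 2 GB is kernel space


class AccessViolation(Exception):
    """Raised when user-mode code touches a kernel address."""


class PhysicalMemory:
    def __init__(self) -> None:
        self.frames: list[bytearray] = []

    def allocate(self) -> int:
        self.frames.append(bytearray(PAGE_SIZE))
        return len(self.frames) - 1


class Process:
    def __init__(self, name: str, ram: PhysicalMemory, kernel_pages: dict[int, int]) -> None:
        self.name = name
        self.ram = ram
        self.user_pages: dict[int, int] = {}  # private: virtual page -> frame
        self.kernel_pages = kernel_pages      # shared by every process

    def _frame(self, vaddr: int, kernel_mode: bool) -> tuple[int, int]:
        if vaddr >= KERNEL_BASE and not kernel_mode:
            raise AccessViolation(f"{self.name}: user mode cannot access {vaddr:#x}")
        table = self.kernel_pages if vaddr >= KERNEL_BASE else self.user_pages
        page, offset = divmod(vaddr, PAGE_SIZE)
        if page not in table:
            table[page] = self.ram.allocate()  # map a fresh frame on first use
        return table[page], offset

    def write(self, vaddr: int, value: int, kernel_mode: bool = False) -> None:
        frame, offset = self._frame(vaddr, kernel_mode)
        self.ram.frames[frame][offset] = value

    def read(self, vaddr: int, kernel_mode: bool = False) -> int:
        frame, offset = self._frame(vaddr, kernel_mode)
        return self.ram.frames[frame][offset]


ram, kernel_pages = PhysicalMemory(), {}
a = Process("a", ram, kernel_pages)
b = Process("b", ram, kernel_pages)
a.write(0x1000, 42)
b.write(0x1000, 7)                      # same virtual address, different frame
print(a.read(0x1000), b.read(0x1000))   # 42 7

try:
    a.write(KERNEL_BASE + 0x1000, 99)   # user mode may not touch kernel space
    user_fault = False
except AccessViolation:
    user_fault = True

a.write(KERNEL_BASE + 0x1000, 99, kernel_mode=True)
print(b.read(KERNEL_BASE + 0x1000, kernel_mode=True))  # 99: kernel space is shared
```

The last line also shows why kernel-mode bugs are so dangerous: a write from one context to the shared kernel range is immediately visible to every other context.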

Technical implementation of Windows containers

But what do these processor modes have to do with containers? Each container is just a processor “user mode” with a few additional features, such as namespace isolation, resource management, and the concept of a union file system. This means that Microsoft had to adapt the Windows operating system to support multiple user modes. This was very difficult to do, given the tight integration between the two modes in previous versions of Windows.

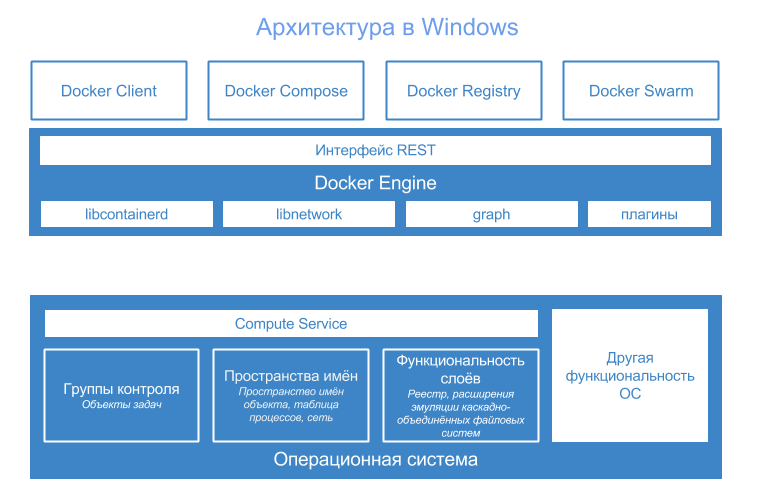

Before the release of Windows Server 2016, every Windows operating system we used consisted of a single “user mode” and a single “kernel mode”. With Windows Server 2016, it became possible to run several user modes in the same operating system. The diagram above gives an overview of this new architecture with multiple user modes.

Looking at the user modes in Windows Server 2016, you can identify two types: the host user mode and the container user modes (green blocks in the diagram). The host user mode is identical to the normal user mode familiar from previous versions of Windows. Its purpose is to host basic Windows services and processes, such as Session Manager, Event Manager, and networking. In addition, in a Windows Server Core installation, this user mode handles the user's interaction with Windows Server 2016 through the user interface.
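To picture several user modes on top of one kernel, here is a toy model in which the kernel keeps a single object namespace, the host user mode sees all of it, and each container user mode sees only its own subtree, roughly the way Windows Server 2016 scopes containers with "server silos". The `\Silos\<id>` prefix, the object name and the `Kernel`/`UserMode` classes are assumptions made for illustration, not the real object-manager layout or API.

```python
from typing import Dict, Optional


class Kernel:
    """One kernel with one global object namespace, shared by all user modes."""

    def __init__(self) -> None:
        self.objects: Dict[str, str] = {}


class UserMode:
    """A user mode's view of the kernel namespace.

    The host user mode uses the real root; a container user mode is
    rooted at a private per-container prefix (hypothetical layout).
    """

    def __init__(self, kernel: Kernel, root: str = "") -> None:
        self.kernel = kernel
        self.root = root

    def _resolve(self, name: str) -> str:
        # Every name is silently re-rooted, so a container cannot
        # reach objects outside its own subtree.
        return self.root + name

    def create(self, name: str, value: str) -> None:
        self.kernel.objects[self._resolve(name)] = value

    def open(self, name: str) -> Optional[str]:
        return self.kernel.objects.get(self._resolve(name))


kernel = Kernel()
host = UserMode(kernel)
c1 = UserMode(kernel, r"\Silos\1")
c2 = UserMode(kernel, r"\Silos\2")

name = r"\BaseNamedObjects\AppConfig"
host.create(name, "host value")
c1.create(name, "container 1 value")

print(host.open(name))                 # host value
print(c1.open(name))                   # container 1 value
print(c2.open(name))                   # None: c2 only sees its own subtree
print(host.open(r"\Silos\1" + name))   # container 1 value: the host sees everything
```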

A new feature of Windows Server 2016 is that, once you enable the Containers feature, the host user mode contains some additional container management technologies that make containers work on Windows. At the core of this container technology is the Compute Services abstraction (orange block), which exposes the low-level container capabilities provided by the kernel through a public API. These services only contain the functionality to launch Windows containers, monitor whether they are running, and manage what is needed to restart them. Other container management functions, such as keeping track of all containers and storing container images, volumes, etc., are implemented in Docker Engine. The engine communicates directly with the Compute Services container API, using a Go language binding provided by Microsoft.
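The division of labor described here, with Compute Services handling only launch, monitoring and restart while Docker Engine does the bookkeeping, can be sketched as two layers. Every class and method name below is hypothetical; the real Compute Services API is the one reached through Microsoft's Go binding (hcsshim), and none of its functions are reproduced here.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List, Set


class ComputeServices:
    """Hypothetical stand-in for the Compute Services layer: it only
    launches containers, reports whether they run, and restarts them."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self._running: Dict[int, bool] = {}
        self._layers: Dict[int, List[str]] = {}

    def launch(self, layers: List[str]) -> int:
        cid = next(self._ids)
        self._layers[cid] = list(layers)  # image layers the container starts from
        self._running[cid] = True
        return cid

    def is_running(self, cid: int) -> bool:
        return self._running.get(cid, False)

    def stop(self, cid: int) -> None:
        self._running[cid] = False

    def restart(self, cid: int) -> None:
        self._running[cid] = True


@dataclass
class Engine:
    """Hypothetical stand-in for Docker Engine: it owns the bookkeeping
    (containers, images, volumes) and delegates lifecycle calls."""

    compute: ComputeServices
    images: Dict[str, List[str]] = field(default_factory=dict)
    volumes: Set[str] = field(default_factory=set)
    containers: Dict[str, int] = field(default_factory=dict)  # name -> id

    def pull(self, image: str, layers: List[str]) -> None:
        self.images[image] = layers

    def run(self, name: str, image: str) -> int:
        cid = self.compute.launch(self.images[image])
        self.containers[name] = cid
        return cid

    def ensure_running(self, name: str) -> None:
        cid = self.containers[name]
        if not self.compute.is_running(cid):
            self.compute.restart(cid)


engine = Engine(ComputeServices())
engine.pull("nanoserver", ["base-layer"])
cid = engine.run("web", "nanoserver")
engine.compute.stop(cid)       # e.g. the container's process exits
engine.ensure_running("web")
print(cid, engine.compute.is_running(cid))  # 1 True
```

Keeping the lower layer this small is the point: the engine can evolve its image and volume handling freely, while the host-side layer only needs to know how to run a container.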

The difference between Windows and Linux containers

Same Docker Utilities and Commands in Windows and Linux Containers

Although the same Docker client tools (Docker Compose, Docker Swarm) can manage both Windows and Linux containers, there are some important differences between the container implementations on Windows and on Linux.

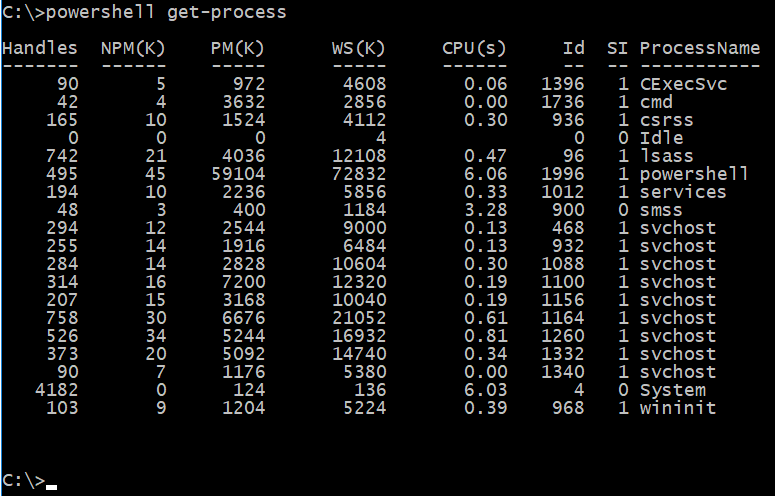

System processes

Where Linux exposes its kernel-level functionality through system calls, Microsoft decided to control access to the available kernel functionality through DLLs (which is also why Microsoft does not document its system calls). Although this way of abstracting system calls has its advantages, it has resulted in a highly integrated Windows operating system, with many interdependencies between Win32 DLLs and many DLLs that expect certain system services, which they reference explicitly or implicitly, to be running. As a result, it is not very realistic to run only the application processes specified in the Dockerfile inside a Windows container. Therefore, inside a Windows container you will see a number of additional system processes running, while Linux containers only need to run the specified application processes. To make sure that the necessary system processes and services run inside a Windows container, a process called smss (the Session Manager Subsystem) is started inside each container. Its purpose is to start the required system processes and services.
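The role of smss can be pictured as a bootstrapper that resolves what the application's DLLs depend on and starts those services first, in dependency order. The dependency graph below is entirely hypothetical (placeholder names, not the real Windows service graph); only the idea of starting dependencies before the application comes from the text. The sketch uses Python's `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical dependency graph: node -> things that must be available first.
DEPENDENCIES = {
    "app.exe": {"dll:networking", "dll:crypto"},
    "dll:networking": {"svc:dns-client", "svc:rpc"},
    "dll:crypto": {"svc:rpc"},
    "svc:dns-client": {"svc:rpc"},
    "svc:rpc": set(),
}


def reachable(entry: str, deps: dict) -> set:
    """Everything `entry` depends on, directly or transitively."""
    seen, stack = set(), [entry]
    while stack:
        for dep in deps.get(stack.pop(), ()):
            if dep not in seen:
                seen.add(dep)
                stack.append(dep)
    return seen


def smss_like_start_order(entry: str, deps: dict) -> list:
    """Services the entry point needs, dependencies first, entry point last.
    DLLs are loaded rather than started, so they are left out of the list."""
    needed = {n for n in reachable(entry, deps) if n.startswith("svc:")} | {entry}
    return [n for n in TopologicalSorter(deps).static_order() if n in needed]


def linux_like_start_order(entry: str) -> list:
    # A Linux container only needs to run the process from the Dockerfile.
    return [entry]


print(smss_like_start_order("app.exe", DEPENDENCIES))
# ['svc:rpc', 'svc:dns-client', 'app.exe']
print(linux_like_start_order("app.exe"))  # ['app.exe']
```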

(Figure: the SMSS process)

OS architecture

Not only in the way kernel functionality is exposed, but also at the architecture level, there is an important difference in how the two operating systems provide container functionality to Docker client tools. In the current container implementation in Windows Server 2016, there is an abstraction layer called Compute Services that hides the low-level container capabilities from the outside. This lets Microsoft change the low-level container API without having to change the public API called by Docker Engine and other container client tools. On top of this Compute Services API, you can write your own container management tooling using the C# or Go bindings, available at https://github.com/Microsoft/dotnet-computevirtualization and https://github.com/Microsoft/hcsshim.
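The benefit of the Compute Services layer is essentially the facade pattern: clients program against a stable interface while the implementation behind it is free to change. The sketch below shows that idea with two hypothetical low-level backends; none of these names correspond to real Windows or hcsshim APIs.

```python
from typing import Protocol


class LowLevelApi(Protocol):
    """Private kernel-facing contract; free to change between releases."""

    def create(self, name: str) -> str: ...
    def start(self, handle: str) -> None: ...


class LowLevelV1:
    """Hypothetical first version of the internal API."""

    def __init__(self) -> None:
        self.started: list = []

    def create(self, name: str) -> str:
        return f"v1:{name}"

    def start(self, handle: str) -> None:
        self.started.append(handle)


class LowLevelV2(LowLevelV1):
    """Hypothetical redesign with a different handle format."""

    def create(self, name: str) -> str:
        return f"silo/{len(self.started) + 1}/{name}"


class ComputeServicesFacade:
    """Stable public API: clients never see which backend is used."""

    def __init__(self, backend: LowLevelApi) -> None:
        self._backend = backend

    def start_container(self, name: str) -> str:
        handle = self._backend.create(name)
        self._backend.start(handle)
        return handle


def client(api: ComputeServicesFacade) -> str:
    # Stands in for Docker Engine: written once against the public API.
    return api.start_container("web")


print(client(ComputeServicesFacade(LowLevelV1())))  # v1:web
print(client(ComputeServicesFacade(LowLevelV2())))  # silo/1/web
```

Swapping the backend changes what happens underneath, but `client` does not have to change, which is the property the Compute Services layer gives Docker Engine.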

Union file system?

The third important difference between the Linux and Windows container implementations is the way the two operating systems implement Docker's copy-on-write technology. Since many Windows applications rely on NTFS semantics, it was difficult for the Microsoft team to create a full-fledged union file system implementation for Windows. Features such as USN journals and transactions, for example, would require a completely new implementation. Therefore, the current version of containers in Windows Server 2016 has no true union file system. Instead, the current implementation creates a new virtual NTFS disk for each container. To keep these virtual disks small, the file system objects on the virtual NTFS disk are in fact symbolic links to the real files in the host file system. As soon as you change files inside the container, these files are saved to the virtual block device, and when you want to commit this layer of changes, the changes are picked up from the virtual block device and stored in the container host's file system.
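The mechanism just described can be modelled with a few dictionaries. This is a deliberate simplification under stated assumptions: the "virtual disk" is a dict mapping paths either to a link into the host file system or to private file content, writes replace a link with private content, and `commit` copies only the modified files into a new host-side layer. The class names and paths are illustrative, not part of any Windows or Docker API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Union


@dataclass(frozen=True)
class Link:
    """Disk entry pointing at a real file on the host (symlink-like)."""
    target: str


@dataclass
class HostFileSystem:
    files: Dict[str, bytes] = field(default_factory=dict)
    layers: List[Dict[str, bytes]] = field(default_factory=list)


@dataclass
class ContainerDisk:
    """Toy per-container virtual NTFS disk."""
    host: HostFileSystem
    entries: Dict[str, Union[Link, bytes]] = field(default_factory=dict)

    @classmethod
    def from_image(cls, host: HostFileSystem, paths: List[str]) -> "ContainerDisk":
        # A fresh disk holds links only, which keeps it small.
        return cls(host, {p: Link(p) for p in paths})

    def read(self, path: str) -> bytes:
        entry = self.entries[path]
        if isinstance(entry, Link):
            return self.host.files[entry.target]  # follow the link to the host
        return entry

    def write(self, path: str, data: bytes) -> None:
        # Copy-on-write: private content replaces the link on the virtual
        # disk; the host file itself is never modified.
        self.entries[path] = data

    def commit(self) -> Dict[str, bytes]:
        # Pick up the changed files from the virtual disk and store them
        # as a new layer in the host file system.
        layer = {p: e for p, e in self.entries.items() if not isinstance(e, Link)}
        self.host.layers.append(layer)
        return layer


host = HostFileSystem({r"C:\Windows\win.ini": b"base", r"C:\app\config.ini": b"v1"})
disk = ContainerDisk.from_image(host, list(host.files))
disk.write(r"C:\app\config.ini", b"v2")

print(disk.read(r"C:\app\config.ini"))   # b'v2' (container's copy)
print(host.files[r"C:\app\config.ini"])  # b'v1' (host file untouched)
print(disk.commit())                     # {'C:\\app\\config.ini': b'v2'}
```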

Hyper-V Containers

The final difference between the Linux and Windows container implementations is the concept of Hyper-V containers. I will write about this interesting type of container in the next article in this series. Stay tuned…


Source: https://habr.com/ru/post/336642/
