Quick registration of image focal points using big-vote

Detection and registration of image features has many applications in robotics, video compression, etc. Quick and accurate registration is still an unattainable dream of many programmers and users. It is either quick or neat ...

I have been working for quite some time (about 17 years) on image processing, including reconstructing a 3D mesh from video, and even have my own company selling such a product. However, I decided to part of the development and put the key idea into open access without patent blocking.

The general idea of the existing relatively fast algorithms is as follows:

The proposed registration scheme is as follows.

For each image (detect):

')

To register (bind):

Result:

for FullHD on i7-6900K using single core

For approximately 10,000 dots per image

detect 29.0556 ms / per image

bind 10.46563 ms / per pair

Advantages: fast, reliable with small perspective distortions (small number of incorrectly connected points), simple code, not covered by patents (as far as I know).

Actually source code

On the basis of this scheme, now I am writing a blank for Raspberry Pi SLAM , in my spare time.

I have been working for quite some time (about 17 years) on image processing, including reconstructing a 3D mesh from video, and even have my own company selling such a product. However, I decided to part of the development and put the key idea into open access without patent blocking.

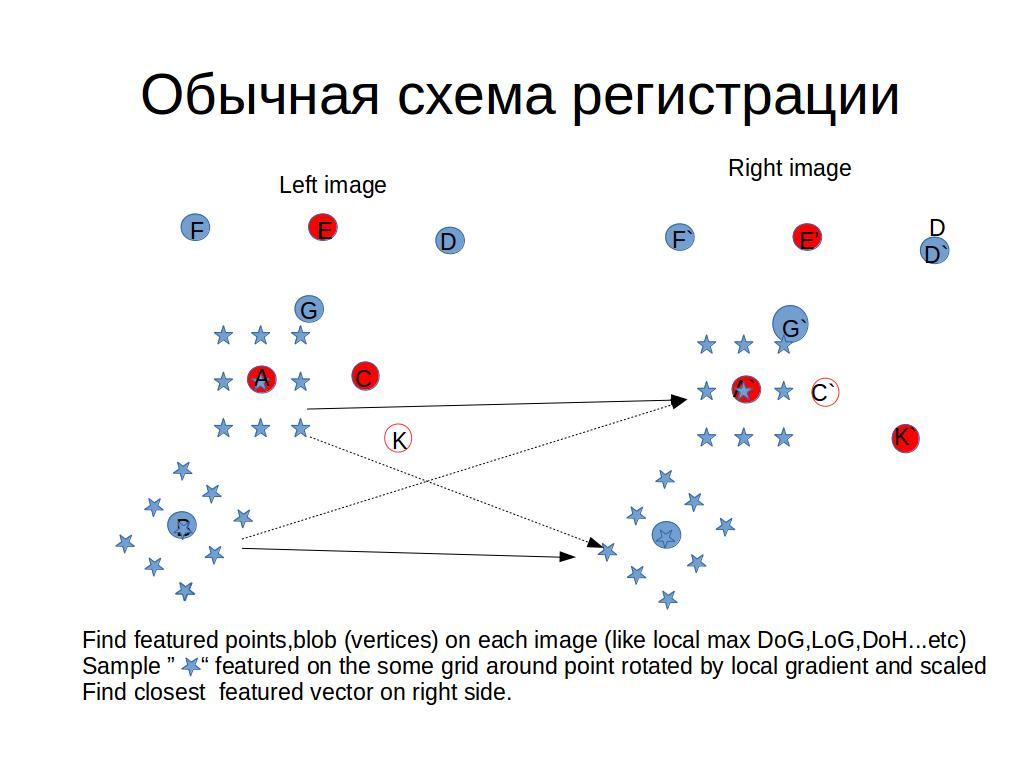

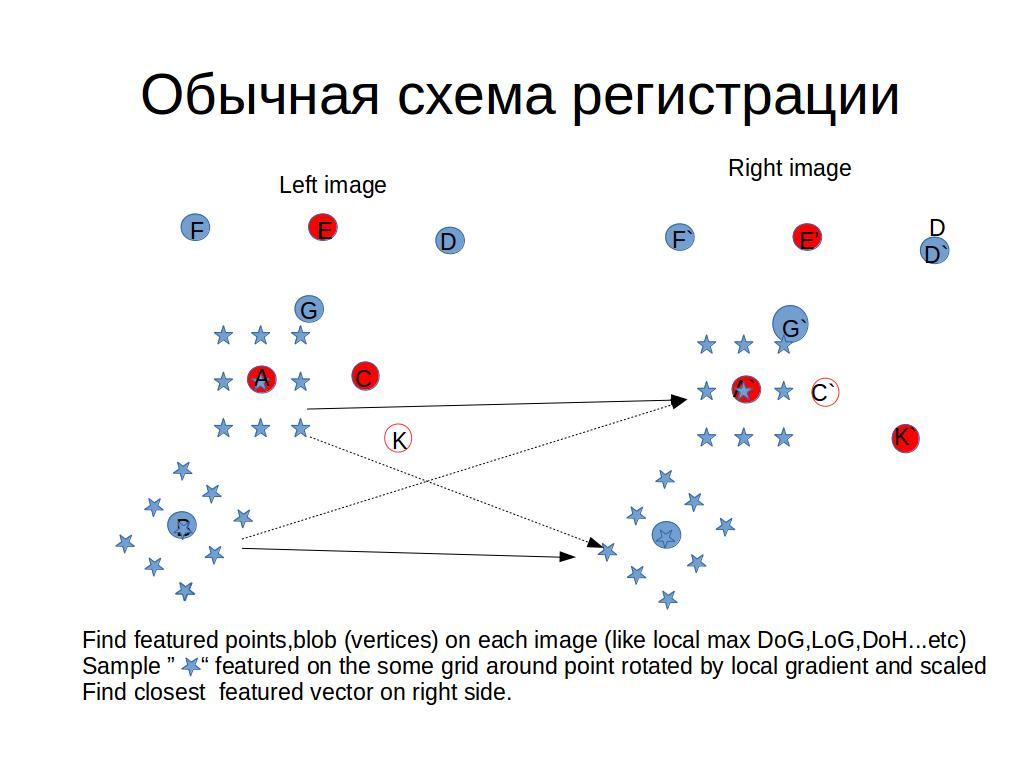

The general idea of the existing relatively fast algorithms is as follows:

- (Feature detection) Find any specific points in each picture, preferably with sub-pixel precision.

- (Feature description) Build a certain array of features for each point, fully or partially meeting the following requirements:

- Invariance to:

- (Physics) noise, exposure changes (brightness and contrast), compression artifacts

- (2D geometry) of turns, shifts, scaling

- (3D geometry) projection distortion

- Compact (less memory, faster comparison)

- Varieties (Near a selected point in a certain predefined pattern):

- Histogram of gradients, brightness, colors. (SIFT, SURF ...)

- Invariance to:

- Read the values and normalize the level. (ORB, BRIFF ...)

- For a pair of pictures, find correspondences of points using the minimum distance (the sum of absolute differences (L1) or the sum of squared differences (L2)) between the arrays of attributes, the asymptotic complexity of this step is O (N ^ 2), where N is the number of singular points.

- (Optional): Check geometric compatibility of pairs using eg RANSAC and repeat step 3

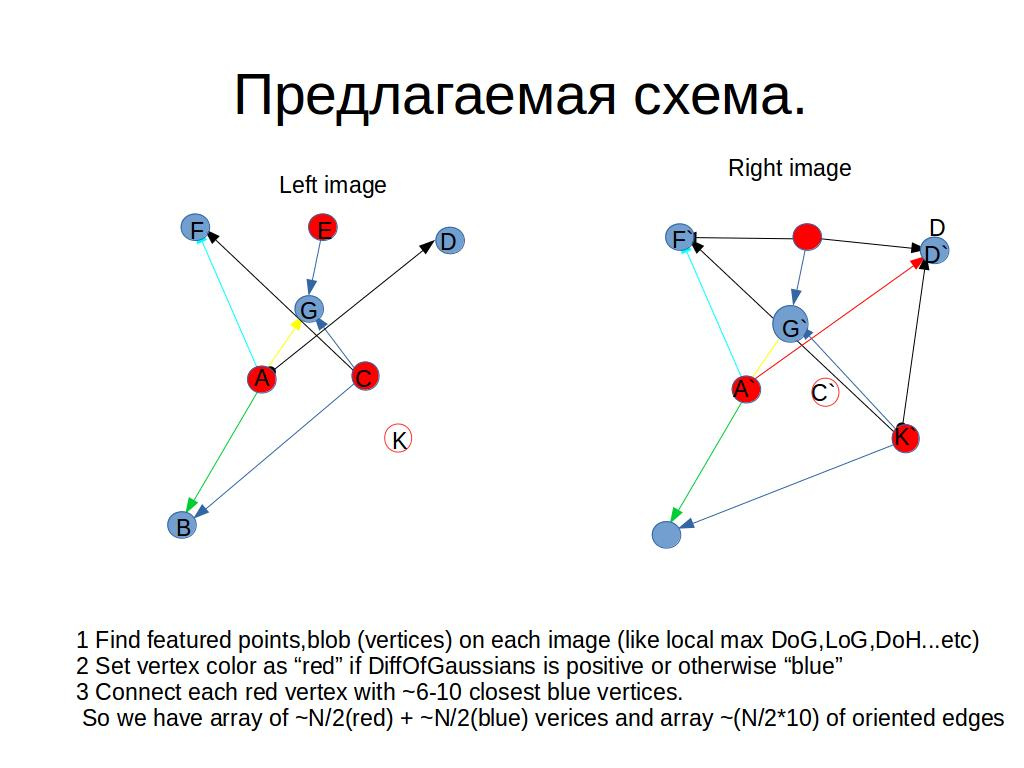

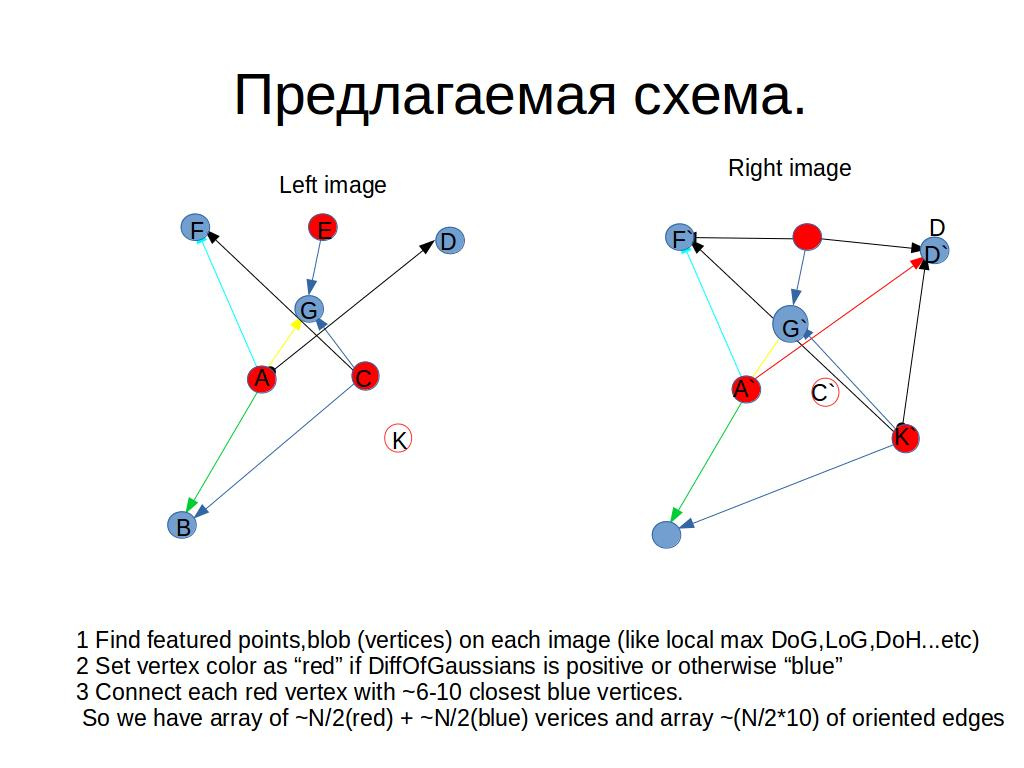

The proposed registration scheme is as follows.

For each image (detect):

')

- Find special points

- Divide the feature points into 2 groups based on the sign of the difference ( DoG ) between the value at the point and the average in the small neighborhood.

- For each point from the first group find about a dozen neighbors.

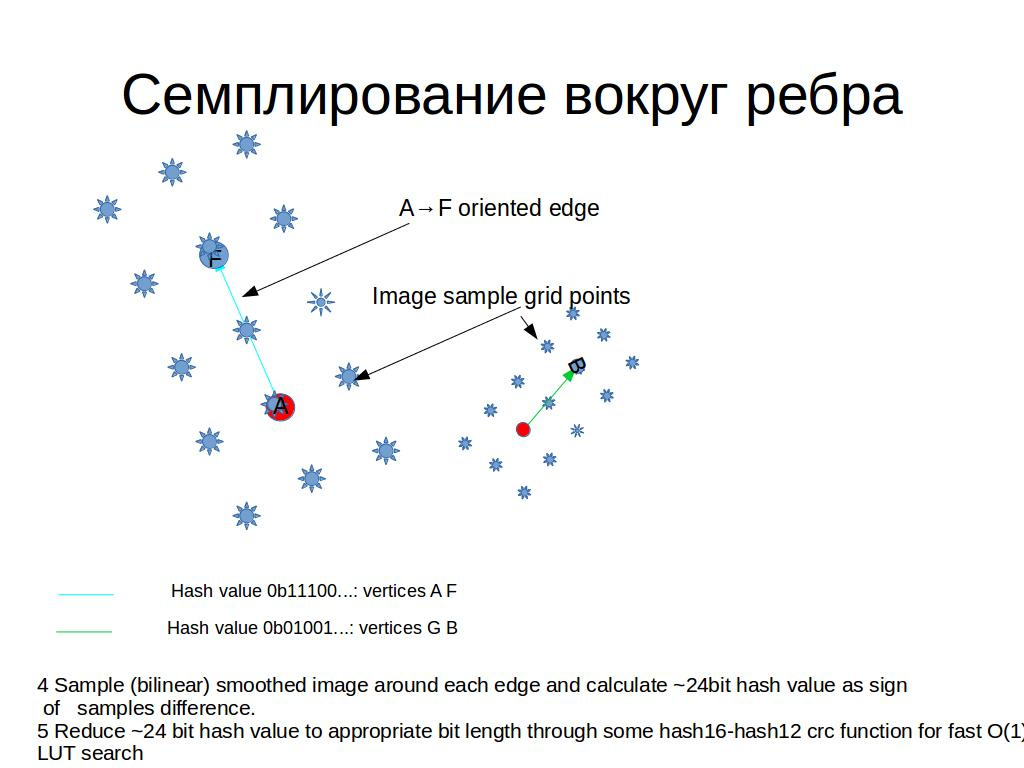

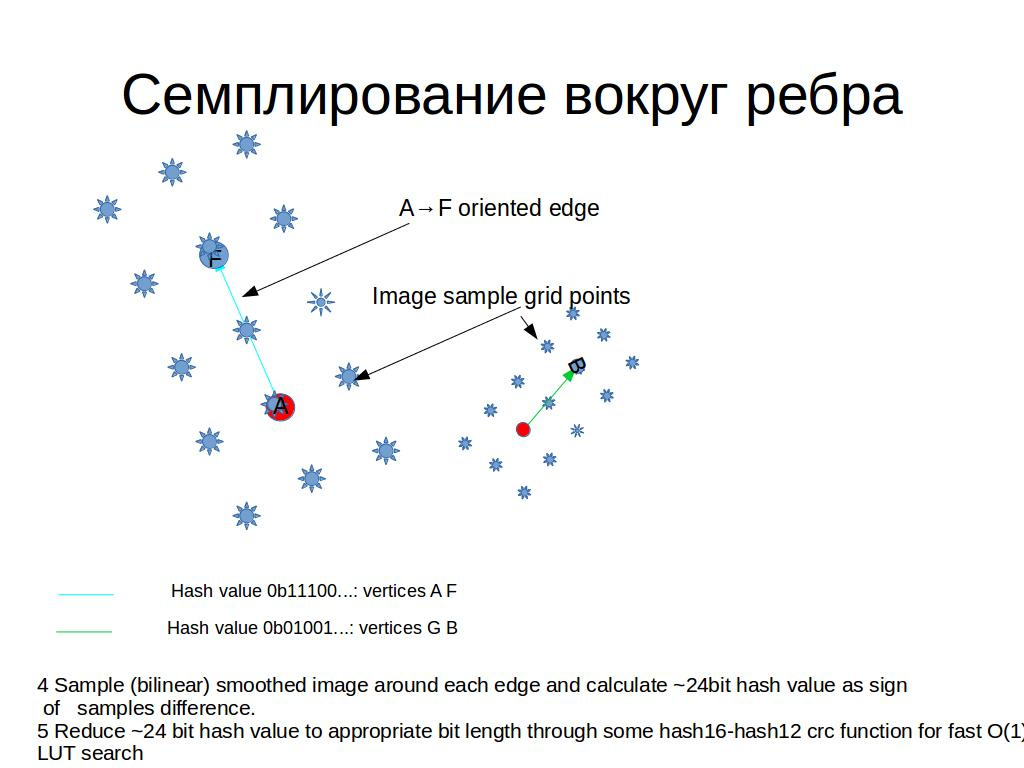

At this stage, we have a biggraph of ~ N / 2 * 10 oriented edges - For each edge we sample at the points of the pattern scaled and rotated along with the edge

- Build a bit (~ 26 bit) hash c by comparing samples

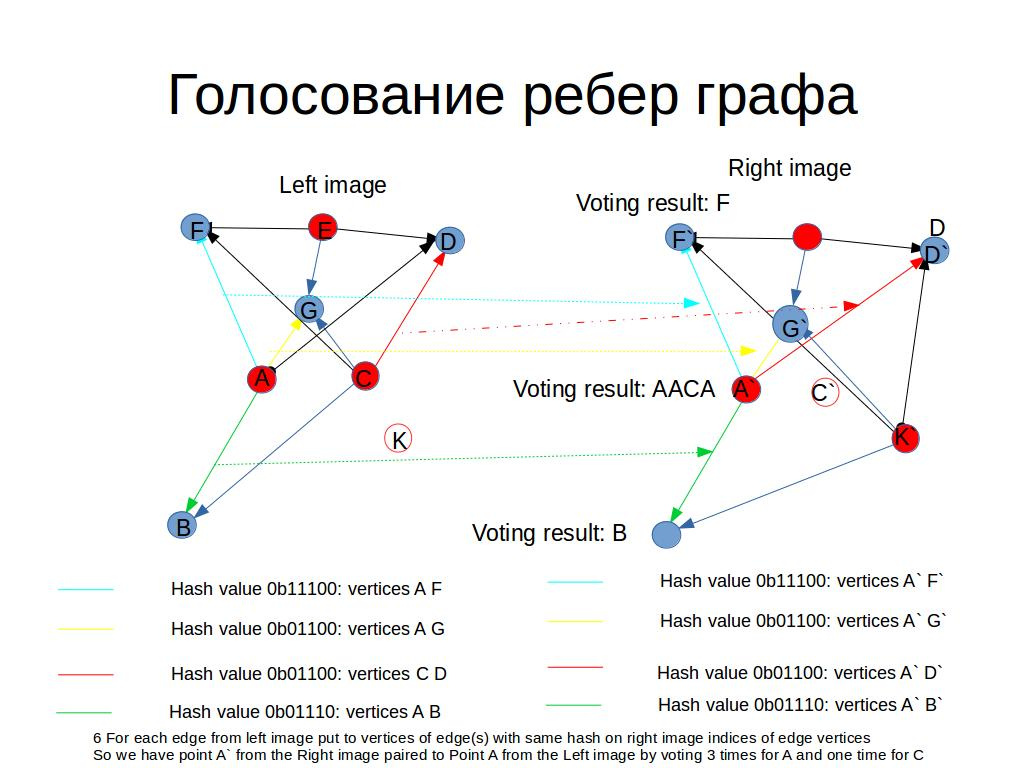

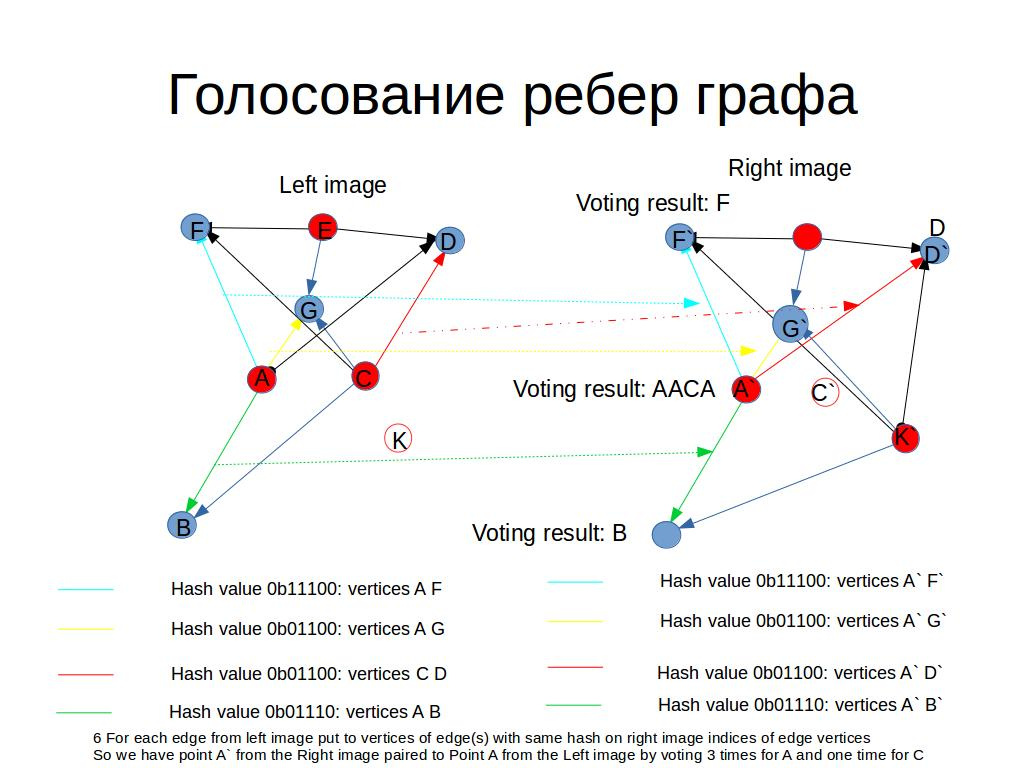

To register (bind):

- We build LUT from edges of the right image on hash value

- For each edge from the left image, look for O (1) edge (s) with the same hash in LUT

- We add 2 indexes of points from the left edge to 2 indexes of points from the right

- Pass through all points of the right picture and count the number of votes

Result:

for FullHD on i7-6900K using single core

For approximately 10,000 dots per image

detect 29.0556 ms / per image

bind 10.46563 ms / per pair

Advantages: fast, reliable with small perspective distortions (small number of incorrectly connected points), simple code, not covered by patents (as far as I know).

Actually source code

On the basis of this scheme, now I am writing a blank for Raspberry Pi SLAM , in my spare time.

Source: https://habr.com/ru/post/336494/

All Articles