Nodebackup - backing up data from (Docker) containers and everything else

This is the story of a home-grown reinvented wheel. With it I tried to fulfil the sysadmin's chief commandment: everything should work while I do nothing. :)

Some intro

IMPORTANT: the information here is somewhat outdated. The general description still holds, but some configuration details have changed, so I advise you to check GitHub right away.

For several years now (two at least), our company has been moving everything to Linux, or more precisely to containers. The software the company develops also runs on Linux, so there were no particular problems and the direction was set from the start.

Essentially, all we have left are AD, the file server and a number of test servers.

Right from the start, when the first containers appeared, the question came up of how to back them up. At first there were some scripts, but a lot had to be done by hand, and as the number of containers grew this became annoying. So I wrote a program that backs up containers and data from servers (Windows and Linux). It was my first node.js program and was "good" accordingly, but it worked. A few months later I found a bug I could not fix, and since by then I had learned a bit more node.js, I decided to rewrite everything with async/await. That is how the second version came about, and that is the version I want to write about.

Implementation

Nodebackup itself only backs up data; under the hood, duplicity does the actual work. Nodebackup merely prepares the data so that duplicity can be fed with it.

How it works

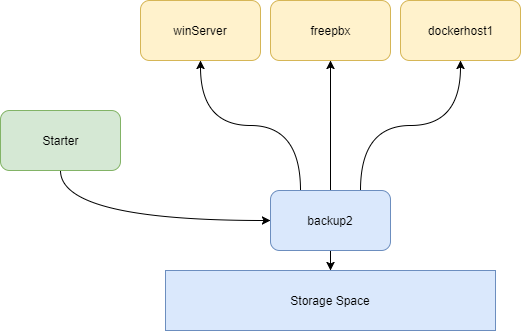

The picture above describes everything well (I hope so, I drew it myself :)), but I will repeat it in words.

There are four components: starter, exec, client and server. They can all live on the same machine or on different ones, and there can be many clients.

Starter is nodebackup itself; you can put it anywhere node.js is available. Mine runs in a container (more on that later).

Exec - the starter connects to this server and runs all commands from there. This is meant for cases where, for example, you need to back up from the Internet into the internal network: you can then put this part in the DMZ. Ours runs on the backup server.

Client - the server whose data is being backed up; it can be Linux, Windows or a Docker host.

Server is where the data is stored.

The Starter holds the configuration that says which Server backs up which Clients, and the configuration of each Client says what to do with it. If a Client is a Docker host, the labels of each container are read; they say what to do with that container.

Configuration

So, we have a server where nodebackup is installed (the starter), which only needs node.js > v6.5. Then, for example, there are three servers: a Windows file server (winserver), a Linux box (freepbx) and a Docker host (dockerhost1), and all of this is backed up to backup2.

backup2 plays the role of Exec and Server, and it needs cifs, duplicity and sshfs installed. cifs is needed to mount SMB shares, sshfs to mount data from the freepbx server, and duplicity to back it all up.
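To make the roles of cifs and sshfs concrete, here is a minimal sketch of how the two mounts on backup2 could be assembled in node.js. This is an assumption, not nodebackup's actual code. The helper names and mount points are hypothetical; only the field names (HOST, USER, PORT and the SMB block) come from the client configuration. The helpers only build argument arrays. Running them (for example with `child_process.execFile`) is left out.

```javascript
'use strict';

// Hypothetical helpers: build argv arrays for the mounts backup2 needs
// before duplicity runs. Field names follow the client entries in config.yaml.

function cifsMountArgs(client, mountPoint) {
  const smb = client.SMB;
  // //host/share, e.g. //windows.example.com/d$
  const source = `//${client.HOST}/${smb.PATH}`;
  // Note: password= is visible in the process list; a credentials= file
  // would be safer outside of a sketch. Mounted read-only (ro).
  const opts = [`username=${smb.USER}`, `password=${smb.PASS}`, `domain=${smb.DOMAIN}`, 'ro'];
  return ['mount', '-t', 'cifs', source, mountPoint, '-o', opts.join(',')];
}

function sshfsMountArgs(client, remotePath, mountPoint, keyFile) {
  // user@host:/remote/path, authenticated with the starter's SSH key, read-only
  const source = `${client.USER}@${client.HOST}:${remotePath}`;
  return ['sshfs', source, mountPoint, '-p', String(client.PORT), '-o', `IdentityFile=${keyFile},ro`];
}
```

Mounting read-only is a deliberate choice in this sketch: duplicity only needs to read the source, so the backup cannot accidentally modify client data.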

Linux clients and Docker hosts also need to accept SSH login with the key.

That is the whole setup on the servers. Now you need to create a configuration for nodebackup so that it knows what to do.

So, create a file config.yaml; it must be located in the nodebackup folder.

First, the part for nodebackup itself:

```yaml
starter:
  sshkey: id_rsa
  log:
    path: log
```

id_rsa is the SSH key; in this case it is in the same folder.

Next, the part for the backup server (in this case backup2):

```yaml
backupserver:
  backup2:
    passphrase: PASSPHRASE-FOR-DUPLICITY
    HOST: backup2.example.com
    USER: admin2
    PORT: '22'
    prerun: '["echo prerun1", "echo prerun2", "echo prerun3"]'
    #postrun: '["echo postrun1","echo postrun2","echo postrun3"]'
    backuppath: /backup
    duplicityarchiv: /backup/duplicityarchiv
    backuppartsize: 2048
    tmpdir: /backup/backuptemp
    backupfor: '["winserver", "freepbx", "dockerhost1"]'
```

passphrase - the password for the backups; it is used for all clients backed up to this server.

prerun, postrun - commands executed before and after the backup, respectively.

backuppath is where backups are stored.

duplicityarchiv - optional. duplicity keeps its own metadata, quite a lot of it; if you do not set this option, it is all stored in /home/user/.config/duplicity

backuppartsize - the backup is split into volumes of 2048 MB; you can set your own value here.

backupfor - the names of the clients to back up; their configuration is described below.
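Note that list-valued options such as `prerun` and `backupfor` are YAML strings that contain JSON arrays. The sketch below shows how a backup server entry could be expanded into jobs by looking up each `backupfor` name in the `clientserver` section. It assumes the YAML has already been parsed into a plain object. `parseList`, `resolveJobs` and the `backuppath/<client>` folder layout are hypothetical, not nodebackup's actual code.

```javascript
'use strict';

// List options are JSON inside a YAML string, e.g.
// backupfor: '["winserver", "freepbx", "dockerhost1"]'
function parseList(value) {
  if (value === undefined || value === null) return [];
  if (Array.isArray(value)) return value; // tolerate a native YAML list as well
  const parsed = JSON.parse(value);
  if (!Array.isArray(parsed)) throw new TypeError(`expected a JSON array, got: ${value}`);
  return parsed;
}

// Hypothetical: expand one backupserver entry into one job per client.
function resolveJobs(config, serverName) {
  const server = config.backupserver[serverName];
  if (!server) throw new Error(`unknown backup server: ${serverName}`);
  return parseList(server.backupfor).map((clientName) => {
    const client = config.clientserver[clientName];
    if (!client) throw new Error(`${clientName} is in backupfor but has no clientserver entry`);
    return {
      client: clientName,
      target: `${server.backuppath}/${clientName}`, // assumed folder layout
      isDockerHost: String(client.docker) === 'true', // docker: 'true' is a string in the YAML
    };
  });
}
```

A client name that appears in `backupfor` without a matching entry fails loudly here instead of being skipped silently, which is usually what you want in a nightly job.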

Now the part for the clients, starting with winserver:

```yaml
clientserver:
  backup2:
    HOST: backup2.example.com
    USER: admin2
    PORT: '22'
    sftpServer:
      sudo: true
      path: /usr/lib/sftp-server
  winserver:
    HOST: "windows.example.com"
    SMB:
      SET: "true"
      PATH: "d$"
      PASS: "userpass"
      DOMAIN: "example.com"
      USER: "backupservice"
    PORT: 22
    backup: /cygdrive/d
    include: '["usershare", "publicshare", "Geschäftsleitung"]'
    exclude: '["publicshare/Softwarepool", "**"]'
    confprefixes: '["asynchronous-upload", "allow-source-mismatch"]'
```

There is a wart here: since the program was written mainly for Linux, some configuration artifacts remain that cannot be dropped, namely PORT and backup.

SMB - the actual Samba settings. I do not know how it will work if the server is not in a domain.

include, exclude - the path from SMB/PATH is taken, each include is appended to it, and the excludes are removed accordingly.

confprefixes - here you can add duplicity options; they are all described in its documentation.
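One possible way these keys could turn into duplicity options is sketched below. The flags themselves (`--archive-dir`, `--tempdir`, `--volsize`, `--include`, `--exclude` and the `--` prefixed confprefixes) are real duplicity options. The mapping from nodebackup's keys to them, and the helper name, are assumptions. `sourceRoot` stands for wherever the SMB share was mounted. Duplicity applies file-selection options in order, and the first match wins, so the ordering matters.

```javascript
'use strict';
const path = require('path');

// Hypothetical: build duplicity options from a backupserver entry and a client entry.
function duplicityOptions(server, client, sourceRoot) {
  const list = (v) => (v ? JSON.parse(v) : []); // lists are JSON strings in config.yaml
  const args = [];

  if (server.duplicityarchiv) args.push('--archive-dir', server.duplicityarchiv);
  if (server.tmpdir) args.push('--tempdir', server.tmpdir);
  if (server.backuppartsize) args.push('--volsize', String(server.backuppartsize)); // in MB

  // First match wins: narrow excludes first, then includes, then the "**" catch-all
  // that drops everything not explicitly included.
  const excludes = list(client.exclude);
  for (const e of excludes.filter((x) => x !== '**')) args.push('--exclude', path.posix.join(sourceRoot, e));
  for (const i of list(client.include)) args.push('--include', path.posix.join(sourceRoot, i));
  if (excludes.includes('**')) args.push('--exclude', '**');

  // confprefixes hold bare duplicity option names.
  for (const opt of list(client.confprefixes)) args.push(`--${opt}`);
  return args;
}
```

With the winserver example, `publicshare/Softwarepool` is excluded even though `publicshare` is included, because its exclude is evaluated first.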

Next, add a Linux server where only one folder (or several) needs to be backed up:

```yaml
freepbx:
  HOST: "freepbx.example.com"
  USER: root
  PORT: '22'
  nextfullbackup: 1M
  noffullbackup: 2
  backup: /var/spool/asterisk/backup/taeglich
  prerun: '["mv /var/spool/asterisk/backup/taeglich/*.tgz /var/spool/asterisk/backup/taeglich/backup.tgz"]'
  postrun: '["rm -Rf /var/spool/asterisk/backup/taeglich/backup.tgz"]'
  confprefixes: '["asynchronous-upload"]'
```

nextfullbackup - how long incremental backups keep being made before a new full backup is taken (1M means one month).

noffullbackup - the number of backups to keep, counted in full backups.

prerun, postrun - commands that run before and after backup
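These two retention keys map naturally onto two real duplicity features: `--full-if-older-than` and the `remove-all-but-n-full` action. The sketch below is hypothetical and assumes `nextfullbackup` is a duplicity time interval such as `1M`. It only shows one plausible mapping. It is not necessarily what nodebackup runs.

```javascript
'use strict';

// Duplicity interval syntax: a number followed by s, m, h, D, W, M or Y (e.g. 1M, 2W).
const INTERVAL = /^\d+[smhDWMY]$/;

// Hypothetical: a backup that starts a new full chain once the last full backup
// is older than nextfullbackup, followed by a cleanup that keeps the newest
// noffullbackup full chains (--force is needed to actually delete).
function retentionCommands(client, source, targetUrl) {
  const nextFull = String(client.nextfullbackup || '');
  const keepFull = Number(client.noffullbackup);
  if (!INTERVAL.test(nextFull)) throw new Error(`bad nextfullbackup: ${nextFull}`);
  if (!Number.isInteger(keepFull) || keepFull < 1) throw new Error(`bad noffullbackup: ${client.noffullbackup}`);
  return [
    ['duplicity', '--full-if-older-than', nextFull, source, targetUrl],
    ['duplicity', 'remove-all-but-n-full', String(keepFull), '--force', targetUrl],
  ];
}
```

With the freepbx values, this gives roughly one full backup per month, and only the last two full chains are kept.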

Now the most important part: backing up the containers.

First, the Docker host entry in config.yaml:

```yaml
dockerhost1:
  HOST: dockerHostN010912m.example.local
  USER: docker
  PORT: 22
  docker: 'true'
  sftpServer:
    sudo: true
    path: /usr/lib/sftp-server
```

docker - 'true' marks this client as a Docker host.

sftpServer - if you do not have root but do have a user with passwordless sudo, you need to set this; the values above are what it looks like on Ubuntu.

Once the program sees that the client is a Docker host, it reads the container information from docker.sock (/var/run/docker.sock) and checks each container's labels. If it finds the relevant ones, the container is backed up.
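Reading the labels needs nothing beyond node.js itself, because the Docker Engine API is plain HTTP over the Unix socket. The sketch below assumes it runs on the Docker host itself. Nodebackup reaches the host over SSH, which is not shown. The selection rule "a container is picked when it has a `backup` label" is a hypothetical simplification of whatever nodebackup actually checks.

```javascript
'use strict';
const http = require('http');

// GET /containers/json on the Docker socket; by default only running
// containers are returned, each with Names and Labels.
function listContainers(socketPath = '/var/run/docker.sock') {
  return new Promise((resolve, reject) => {
    const req = http.request({ socketPath, path: '/containers/json', method: 'GET' }, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => {
        if (res.statusCode !== 200) return reject(new Error(`Docker API returned ${res.statusCode}`));
        try { resolve(JSON.parse(body)); } catch (err) { reject(err); }
      });
    });
    req.on('error', reject);
    req.end();
  });
}

// Hypothetical rule: back up containers that carry a "backup" label (the path to save).
function selectForBackup(containers) {
  return containers
    .filter((c) => c.Labels && c.Labels.backup)
    .map((c) => ({ name: c.Names[0].replace(/^\//, ''), labels: c.Labels }));
}
```

Usage on the Docker host: `listContainers().then(selectForBackup).then(console.log)`.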

Here is the part of docker-compose.yaml where the labels are set. They mirror the client configuration above:

```yaml
labels:
  prerun: '["echoc prerun1", "echo prerun2"]'
  postrun: '["echo postrun1","echo postrun2"]'
  nextfullbackup: "1M"
  noffullbackup: "2"
  backup: "/a/data/backup-new-test"
  strategy: "off"
  confprefixes: '["allow-source-mismatch"]'
  name: "backup-new-test"
  processes: '["httpd"]'
  failovercustom: "echo 'failover ####################'"
  passphrase: "+++++containerPASS#######"
```

prerun, postrun - these are executed not in the container but on the Docker host.

strategy - says whether the container is stopped for the backup or not. IMPORTANT: this also stops the linked containers.

name - optional; this becomes the folder name on the backup server. If it is not set, the container name is used.

processes - belongs to the monitoring topic and got in here by accident.

failovercustom - optional; if an error occurs during the backup, this command is executed. If the option is absent, nodebackup simply runs "docker start CONTAINER + LINKS".

passphrase - can be set per container or globally for the whole Docker host. The container value takes priority over the Docker host value.
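The fallback rules above (folder name, passphrase priority, failover command) fit into one small resolver. This is a hypothetical sketch. The label names come from the article. Falling back to the backup server's passphrase as a last resort is an assumption, based on that passphrase being "used for all clients". The `links` list is assumed to be known already.

```javascript
'use strict';

// Hypothetical resolver for per-container settings:
//   folder     -> label "name", else the container name
//   passphrase -> container label, then Docker host, then (assumed) backup server
//   failover   -> label "failovercustom", else "docker start CONTAINER LINKS..."
function resolveContainerSettings(container, host, server) {
  const labels = container.labels || {};
  const passphrase = labels.passphrase || host.passphrase || server.passphrase;
  if (!passphrase) throw new Error(`no passphrase for ${container.name}`);
  const links = container.links || [];
  return {
    folder: labels.name || container.name,
    passphrase,
    failover: labels.failovercustom || ['docker', 'start', container.name, ...links].join(' '),
  };
}
```

The resolver fails when no passphrase is found at any level. Silently backing up unencrypted would be the worse outcome.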

The complete config.yaml:

```yaml
starter:
  sshkey: id_rsa
  log:
    path: log

backupserver:
  backup2:
    passphrase: PASSPHRASE-FOR-DUPLICITY
    HOST: backup2.example.com
    USER: admin2
    PORT: '22'
    prerun: '["echo prerun1", "echo prerun2", "echo prerun3"]'
    #postrun: '["echo postrun1","echo postrun2","echo postrun3"]'
    backuppath: /backup
    duplicityarchiv: /backup/duplicityarchiv
    backuppartsize: 2048
    tmpdir: /backup/backuptemp
    backupfor: '["winserver", "freepbx", "dockerhost1"]'

clientserver:
  backup2:
    HOST: backup2.example.com
    USER: admin2
    PORT: '22'
    sftpServer:
      sudo: true
      path: /usr/lib/sftp-server
  winserver:
    HOST: "windows.example.com"
    SMB:
      SET: "true"
      PATH: "d$"
      PASS: "userpass"
      DOMAIN: "example.com"
      USER: "backupservice"
    PORT: 22
    backup: /cygdrive/d
    include: '["usershare", "publicshare", "Geschäftsleitung"]'
    exclude: '["publicshare/Softwarepool", "**"]'
    confprefixes: '["asynchronous-upload", "allow-source-mismatch"]'
  freepbx:
    HOST: "freepbx.example.com"
    USER: root
    PORT: '22'
    nextfullbackup: 1M
    noffullbackup: 2
    backup: /var/spool/asterisk/backup/taeglich
    prerun: '["mv /var/spool/asterisk/backup/taeglich/*.tgz /var/spool/asterisk/backup/taeglich/backup.tgz"]'
    postrun: '["rm -Rf /var/spool/asterisk/backup/taeglich/backup.tgz"]'
    confprefixes: '["asynchronous-upload"]'
  dockerhost1:
    HOST: dockerHostN010912m.example.local
    USER: docker
    PORT: 22
    docker: 'true'
    sftpServer:
      sudo: true
      path: /usr/lib/sftp-server
```

In principle, with the right settings you can do a lot of different things.

Another example for a container. We have a container with a large database, and I don't want to wait while the database is copied. To keep it fast, we stop the container, take a snapshot, start the container again, back up from the snapshot and delete the snapshot afterwards. Here is the configuration for that container:

```yaml
labels:
  nextfullbackup: "1M"
  noffullbackup: "2"
  backup: "/cephrbd/mariadb-intern-prod/snapshot"
  prerun: '["sudo docker stop adito4internproddb_mariadb-dev_1 adito4internproddb_mariadb_1","sudo btrfs subvolume snapshot -r /cephrbd/mariadb-intern-prod /cephrbd/mariadb-intern-prod/snapshot","sudo docker start adito4internproddb_mariadb-dev_1 adito4internproddb_mariadb_1"]'
  postrun: '["sudo btrfs subvolume delete /cephrbd/mariadb-intern-prod/snapshot"]'
  strategy: "on"
```

Of course, you may also need to back up a folder from a server that is itself a Docker host; this works too.
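The snapshot trick in the mariadb example relies on prerun and postrun running in a strict order around the backup. Below is a minimal sketch of that sequencing with hypothetical names. One design choice may differ from nodebackup: postrun runs in a `finally` block, so the snapshot is deleted even when the backup itself fails. The executor is injectable, so the ordering can be checked without touching Docker or btrfs.

```javascript
'use strict';
const { execSync } = require('child_process');

// Default executor: run one shell command locally; throws on a non-zero exit.
// Running the commands on the Docker host over SSH is left out of this sketch.
const runLocal = (cmd) => execSync(cmd, { stdio: 'inherit' });

// Hypothetical sequencing: prerun in order (a failure skips backup and postrun),
// then the backup, then postrun, which also runs if the backup throws.
function runWithHooks({ prerun = [], postrun = [] }, backup, exec = runLocal) {
  for (const cmd of prerun) exec(cmd);
  try {
    backup();
  } finally {
    for (const cmd of postrun) exec(cmd);
  }
}
```

With the labels above, this would be called as `runWithHooks({ prerun: JSON.parse(labels.prerun), postrun: JSON.parse(labels.postrun) }, doBackup)`, where `doBackup` is a hypothetical function that runs duplicity against the snapshot.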

One config.yaml can hold many backup servers and many clients. For example, we have several servers at Hetzner, and they are registered in the same config.yaml.

Start

Now that we have config.yaml, we can make a backup.

Command list

```text
/nodebackup # node BackupExecV3.js -h

  Usage: BackupExecV3 [options]

  Options:

    -h, --help                       output usage information
    -V, --version                    output the version number
    -b, --backup                     start backup
    -e, --exec [exec]                server, which start backup job
    -t, --target [target]            single server for backup
    -s, --server [server]            backup server
    -d, --debug                      debug to console
    -f, --debugfile                  debug to file
    -r, --restore                    starte restore
    -c, --pathcustom [pathcustom]    path to restore data (custom path)
    -o, --overwrite                  overwrite existing folder by recovery
    -p, --pathdefault [pathdefault]  default path from config.yml
    -m, --time [time]                backup from [time]
    -i, --verify                     verify all backups on a backup server

  Info:
    Read README.md for more info

  Backup:
    Backup:
      sudo ./BackupExecV3 -b -e backup2 -s backup2
    Single backup:
      sudo ./BackupExecV3 -b -e backup2 -s backup2 -t freepbx

  Recovery:
    Backup to original path:
      sudo ./BackupExecV3.js -r -t backup-new-test -s backup2 -p freepbx -o
    Backup to custom path:
      sudo ./BackupExecV3.js -r -t backup-new-test -s backup2 -c freepbx:/tmp/newFolder
    Backup to custom path:
      sudo ./BackupExecV3.js -r -t backup-new-test -s backup2 -c freepbx:/tmp/newFolder -o -m (2D|1W|10s|50m)
```

Example 1

Make a backup:

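```shell
node BackupExecV3.js -b -e backup2 -s backup2 -d
```

The program reads config.yaml and determines which servers should be backed up, after which the backup is made. Use `-f` instead of `-d` to write the debug output to a file rather than to the console.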

Example 2

Back up a single server or container:

```shell
node BackupExecV3.js -b -e backup2 -s backup2 -t CONTAINERNAME -d
```

As before, `-f` instead of `-d` logs to a file.

Simplifying a bit further

To avoid installing nodebackup by hand, I built a Docker image for it (here).

The container needs three files: config.yaml, crontab.tmp and the SSH key.
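With many backup servers, crontab.tmp is essentially one line per server, so it can also be generated. The sketch below is hypothetical. It reuses the schedule, paths and flags from the crontab.tmp in this article, and it assumes each backup server also acts as its own exec host, so `-s` and `-e` get the same name.

```javascript
'use strict';

// Hypothetical generator for crontab.tmp: one nodebackup run per backup server.
function renderCrontab(servers, { mailto, schedule = '0 22 * * *', script = '/nodebackup/BackupExecV3.js' }) {
  const lines = [
    `MAILTO=${mailto}`,
    'NODE_PATH=/usr/lib/nodejs:/usr/lib/node_modules:/usr/share/javascript',
    '# m h dom mon dow command',
  ];
  for (const s of servers) lines.push(`${schedule} node ${script} -b -s ${s} -e ${s} -f`);
  return lines.join('\n') + '\n'; // cron expects the last line to end with a newline
}
```

All jobs start at 22:00 here, as in the original file. Passing a different `schedule` per call is an easy way to stagger them if the backup server becomes the bottleneck.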

docker-compose.yaml

```yaml
nodebackup:
  image: adito/nodebackup
  hostname: nodebackup
  environment:
    - SSMTP_SENDER_ADDRESS=no-reply@example.com
    - SSMTP_MAIL_SERVER=mail.example.com
    - SSMTP_HOST=nodebackup.example.com
  volumes:
    - ./config/config.yml:/nodebackup/config.yml:ro
    - ./config/id_rsa:/nodebackup/id_rsa:ro
    - ./config/crontab.tmp:/crontab.tmp:ro
    - /etc/timezone:/etc/timezone:ro
    - /etc/localtime:/etc/localtime:ro
    - /a/data/nodebackup/log:/a/data/log
  restart: always
```

crontab.tmp

```text
MAILTO=admin@example.com
NODE_PATH=/usr/lib/nodejs:/usr/lib/node_modules:/usr/share/javascript
# m h dom mon dow command
0 22 * * * node /nodebackup/BackupExecV3.js -b -s backup2 -e backup2 -f
0 22 * * * node /nodebackup/BackupExecV3.js -b -s hetznerdocker -e hetznerdocker -f
0 22 * * * node /nodebackup/BackupExecV3.js -b -s hetznerdocker2 -e hetznerdocker2 -f
0 22 * * * node /nodebackup/BackupExecV3.js -b -s hetznerdocker3 -e hetznerdocker3 -f
0 22 * * * node /nodebackup/BackupExecV3.js -b -s hetznerdocker4 -e hetznerdocker4 -f
0 22 * * * node /nodebackup/BackupExecV3.js -b -s hetznerdocker5 -e hetznerdocker5 -f
```

Conclusion

I don't know how others do it. I googled a little but did not find anything good on this topic. This approach may not be entirely correct, but it made everything much simpler.

The README on GitHub has more examples for restore and verification. I did not repeat them here because everything is already there; the main thing is to write a correct configuration in config.yaml.
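For orientation, a restore ultimately comes down to a `duplicity restore` call. Below is a hypothetical sketch of how the `-m` value (intervals such as 2D, 1W, 10s or 50m, as in the help output) and the `-o` flag could translate into duplicity arguments. Both `--time` and `--force` are real duplicity options. The mapping itself is an assumption and is not taken from nodebackup.

```javascript
'use strict';

// Duplicity interval syntax: a number followed by s, m, h, D, W, M or Y.
const INTERVAL = /^\d+[smhDWMY]$/;

// Hypothetical: build a duplicity restore command from nodebackup-style options.
function restoreCommand(sourceUrl, targetDir, { time, overwrite = false } = {}) {
  const args = ['duplicity', 'restore'];
  if (time !== undefined) {
    if (!INTERVAL.test(time)) throw new Error(`unsupported time value: ${time}`);
    args.push('--time', time); // restore the state as of that long ago
  }
  if (overwrite) args.push('--force'); // allow restoring into an existing folder
  args.push(sourceUrl, targetDir);
  return args;
}
```

For example, `restoreCommand('file:///backup/backup-new-test', '/tmp/newFolder', { time: '2D', overwrite: true })` produces the argument list for restoring the two-day-old state into an existing folder.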

I wrote this article for two reasons.

- We are slowly considering a move to Kubernetes, and there, in my opinion, this approach would be the wrong one. Maybe someone will suggest the right approach in the comments.

- I spent quite a while building and writing all this, and I would like other people to use it.


Source: https://habr.com/ru/post/335880/
