How hackers prepare attacks on banks

It is widely believed that hackers use increasingly sophisticated techniques to break into financial organizations, including the latest malware, exploits from the arsenals of intelligence agencies, and well-targeted phishing. In fact, our security assessments of information systems show that a targeted attack on a bank can be prepared with free, publicly available tools and without any active probing, that is, invisibly to the target. In this article, we look at such techniques, which rely mainly on the excessive openness of network services, and give recommendations on how to protect against such attacks.

Step 1. Defining targets

In the offline world, it is not easy to figure out which services and networks belong to a particular organization. On the Internet, however, there are many specialized tools that make it easy to identify the networks controlled by the companies we are interested in, without revealing ourselves. For passive reconnaissance, while collecting statistics on the network perimeters of financial organizations, we used:


- Search engines (Google, Yandex, Shodan).

- Industry sites for the financial sector - banki.ru, rbc.ru.

- Whois services - 2ip.ru, nic.ru.

- Search services for Internet registry databases - Hurricane Electric BGP Toolkit, RIPE.

- Services that visualize data for a site's domain name - Robtex.

- The dnsdumpster service for analyzing domain zones, which contains historical data on domain zones (IP address changes) and greatly helps with data collection. There are many similar services; one of the best-known alternatives is domaintools.com.

This study did not use methods such as active scanning, fingerprinting of firewall and IPS versions, identification of antivirus and other protection tools in use, or social engineering. There are several other techniques that we did not use for ethical and other reasons, but that hackers often rely on:

- Searching projects on GitHub. It often happens that a test project, a backup, or production code sits on GitHub with access restrictions that were forgotten or set incorrectly. Studying such projects requires high qualifications, but gives an almost 100% chance of penetrating the network by exploiting errors in the application or embedded credentials.

- Online vulnerability checking services, for example for HeartBleed, Poodle, and DROWN. These services are highly likely to detect the specific vulnerabilities, if present, but the checks take a very long time.

- DNS brute force. This technique is an active intervention. It enumerates candidate DNS names of systems and identifies those that exist. This is done through DNS queries to the target DNS server; the traffic can be routed, for example, via Google DNS, so that from the point of view of the attacked organization the queries look legitimate. Kali Linux or a similar distribution is usually used for such techniques. Unfortunately, in practice nobody reviews DNS logs, or even keeps them, until something happens.

So, to begin with, we define the list of organizations that we are going to “put under observation”. To do this, you can use search engines, industry sites and other industry information aggregators. For example, to collect statistics on financial organizations, go to banki.ru and take the ready-made rankings of top banks and insurance companies. Compiling the list takes almost no time. We identified the following categories of organizations:

- banks (from 1st to 25th),

- banks (from 26th to 50th),

- banks (from 51st to 75th),

- banks (from 76th to 100th),

- microcredit organizations,

- payment systems,

- insurance companies (from 1st to 50th),

- insurance companies (from 51st to 100th).

Now we determine the networks owned by each organization. We find the organizations' websites with a search engine and use a whois web service to determine their addresses. From a site's domain name, this resource gives the IP address, as well as other data that is important for finding networks. In this study, the important data includes:

- Netname (network name, very useful when searching the RIPE database);

- Descr (the description can be used for searching, with some imagination);

- Address (search for networks registered to the same physical address);

- Contact (you can search the RIPE database for people who may have registered other networks);

- other information by which an organization can be identified.

All this information can also be obtained with the Unix whois command. Which to use is a matter of taste. In order not to compromise specific banks, we show this search using our own company as an example:

Using the collected information about the organizations, we searched for their address ranges in the RIPE database. The RIPE service offers free search across all the networks registered in it. It is also worth paying attention to the Country field: we selected only the Russian network segment.
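The whois fields listed above lend themselves to simple automated extraction. The following is a minimal sketch, assuming RIPE-style whois output in which objects are separated by blank lines and each line has the form `key: value`; the sample text, the network names and the helper names `parse_whois_objects` and `networks_in_country` are hypothetical and use documentation address ranges only.

```python
from collections import defaultdict

# Hypothetical sample in the RIPE whois style (documentation address ranges).
SAMPLE = """\
inetnum:        192.0.2.0 - 192.0.2.255
netname:        EXAMPLE-BANK-NET
descr:          Example Bank head office
country:        RU
admin-c:        EX1-RIPE

inetnum:        198.51.100.0 - 198.51.100.255
netname:        EXAMPLE-PARTNER
descr:          Leased to a partner
country:        NL
"""


def parse_whois_objects(text):
    """Split whois output into objects; each object maps key -> list of values."""
    objects = []
    current = defaultdict(list)
    for line in text.splitlines():
        if not line.strip():
            # A blank line closes the current object.
            if current:
                objects.append(dict(current))
                current = defaultdict(list)
            continue
        if line.startswith(("%", "#")) or ":" not in line:
            continue  # skip comment lines and lines without a key
        key, value = line.split(":", 1)
        current[key.strip().lower()].append(value.strip())
    if current:
        objects.append(dict(current))
    return objects


def networks_in_country(objects, country):
    """Keep only network objects whose Country field matches."""
    return [o for o in objects
            if "inetnum" in o and country in o.get("country", [])]


objects = parse_whois_objects(SAMPLE)
for obj in networks_in_country(objects, "RU"):
    print(obj["inetnum"][0], obj["netname"][0], obj["descr"][0])
```

The Netname, Descr and contact values extracted this way can then be reused as search terms for further lookups.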

This stage required a large amount of manual work, because some addresses may have been handed over to a partner, leased out, or may not belong to the organization at all. Therefore, to increase the accuracy of the results, we had to carry out additional checks and select only the relevant networks or hosts with the highest possible level of confidence. To verify the networks, we used the publicly available online service of the American telecommunications operator Hurricane Electric, which shows the network that contains a site's IP address. The Robtex service was also very useful at this stage. It shows all the relationships for a given domain name, which allowed us to find networks that we could not find by searching the RIPE database. In addition, Robtex shows other sites hosted at the same IP address, and this information can also come in handy. Search example:

As already mentioned, identifying the relevant networks is the hardest step to automate, since it requires manual selection of relevant results. However, it took us only two days to collect information about the financial sector networks. At the end of this stage, we had a list of “organization - network” pairs.
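A list of this kind can be kept in a simple structure. The sketch below is an illustration only, assuming the ranges arrive either as `first - last` text (as in whois output) or in CIDR notation; the organization names and ranges are hypothetical. It uses Python's standard `ipaddress` module to normalize ranges and merge adjacent networks.

```python
import ipaddress

# Hypothetical organizations and ranges (documentation address space).
raw = {
    "Example Bank": [
        "192.0.2.0 - 192.0.2.255",
        "198.51.100.0/25",
        "198.51.100.128/25",
    ],
    "Example Insurance": ["203.0.113.0 - 203.0.113.63"],
}


def to_networks(range_text):
    """Convert 'first - last' or CIDR text into a list of network objects."""
    if "-" in range_text:
        first, last = (ipaddress.ip_address(part.strip())
                       for part in range_text.split("-"))
        return list(ipaddress.summarize_address_range(first, last))
    return [ipaddress.ip_network(range_text.strip())]


def build_org_networks(raw_ranges):
    """Return {organization: [merged networks]}."""
    result = {}
    for org, ranges in raw_ranges.items():
        nets = [net for text in ranges for net in to_networks(text)]
        # collapse_addresses merges adjacent and overlapping networks.
        result[org] = list(ipaddress.collapse_addresses(nets))
    return result


org_networks = build_org_networks(raw)
for org, nets in org_networks.items():
    total = sum(net.num_addresses for net in nets)
    print(org, [str(net) for net in nets], total)
```

Merging adjacent ranges keeps the list short and gives a quick count of how many addresses have to be checked at the next step.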

Step 2. Identify available services

To do this, you can use one of the two best-known tools designed to make the Internet safer: Shodan or Censys. They have similar capabilities, both provide an API, and they can complement each other. For full search, both services require registration. Censys is more demanding: to lift the restrictions on search results, you have to write to the developers and convince them that the research is ethical and the data will be used responsibly. A CEH certificate or detailed information about the study can serve as an argument.

We used Shodan because it is more convenient. In addition, Shodan scans in much the same way as Nmap with the “-sV” flag, which is a plus for our study: the results come in a familiar form. Automation is probably the most interesting part of the process, but it makes no sense to describe it in detail, because everything, including Python code examples, has already been described in a very convenient form by Shodan's creator John Matherly, also known as @achillean. Moreover, there is a repository on GitHub where you can get acquainted with the official Shodan library for Python.

Detailed information about Shodan queries is available in the Shodan documentation. An example of a query via the web interface looks like this:

The example shows that UDP port 53 (the DNS service) is available at 8.8.8.8, that the address is located in the USA and belongs to Google, as well as the version of the operating system used at this IP address. Shodan queries can reveal far more specific services whose access from the Internet should have been restricted but was forgotten. Since the banners and versions of these services can be obtained, the data can be compared with various vulnerability databases.

However, it turns out that we need to run every discovered IP address through Shodan, and, mind you, we had about 100,000 of them: rather too many for a manual check... So what about the API?

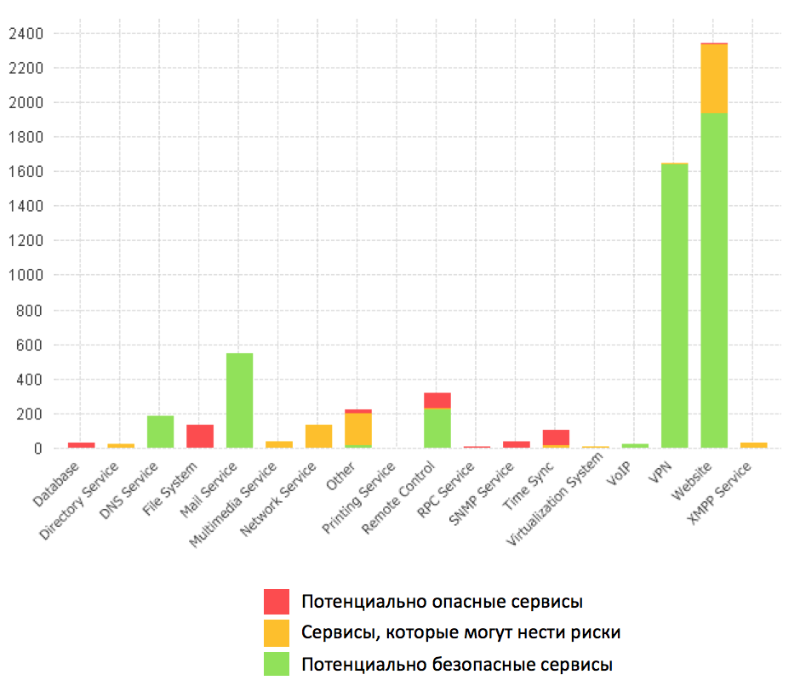

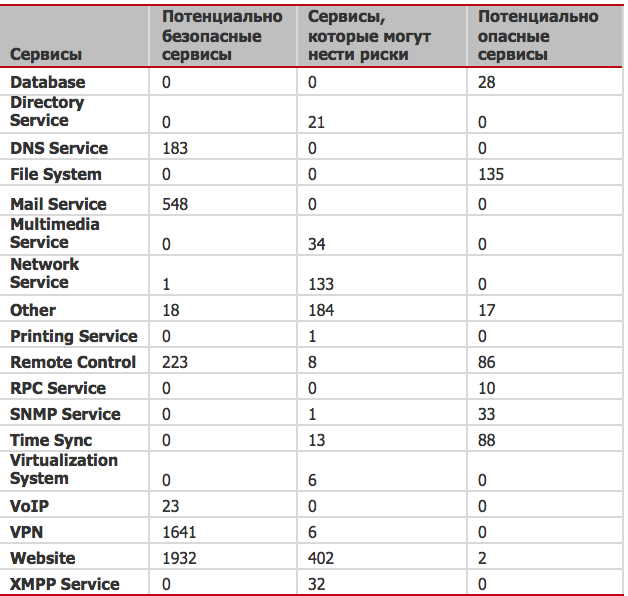
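Running every address through an API comes down to a loop with error handling and rate limiting. The following is a minimal sketch and not the collector used in the study: the `collect` function and its `lookup` parameter are hypothetical names. With the official Shodan library for Python, `lookup` could presumably be the `host` method of a `shodan.Shodan(API_KEY)` client; here a stand-in function is used so that the sketch runs offline.

```python
import ipaddress
import time


def collect(networks, lookup, delay=1.0, sleep=time.sleep):
    """Look up every host address of every network; return {ip: result}.

    `lookup` is any callable that takes an IP string and returns a dict,
    or raises an exception when the service has no data for that address.
    """
    results = {}
    for cidr in networks:
        for ip in ipaddress.ip_network(cidr).hosts():
            try:
                results[str(ip)] = lookup(str(ip))
            except Exception as exc:  # e.g. no information for this address
                results[str(ip)] = {"error": str(exc)}
            sleep(delay)  # stay within the API rate limit
    return results


def fake_lookup(ip):
    """Stand-in for a real API client (assumption, for offline use only)."""
    if ip.endswith(".1"):
        return {"ip_str": ip, "ports": [53]}
    raise LookupError("No information available for that IP.")


found = collect(["192.0.2.0/30"], fake_lookup, delay=0)
print(found)
```

Passing the lookup function as a parameter keeps the loop independent of any particular service, so the same code can be pointed at another data source.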

We wrote our own information collector. We launched it, and after a week of running, the program gave us a picture of the distribution of available services in the financial sector, without any interaction with those services at all! Tracking changes in infrastructures this way is quite realistic. Here is what we found:

Among the most alarming things found on the perimeters of financial organizations:

- DBMS (in fairness, we note that some of the banners contained the entry “is not allowed”);

- Directory services (LDAP data can be obtained from the banners);

- Services that provide access to the file system (such as SMB and FTP);

- Printers (and judging by the payload, this is not a mistake!), which can have the most dramatic vulnerabilities and are generally recognized as the least protected devices. Yes, the vulnerabilities are old. But when was the last time you updated the printers on your perimeter?

- Insecure remote administration services such as Telnet and RDP;

- RPC services;

- Virtualization systems;

- Multimedia services.

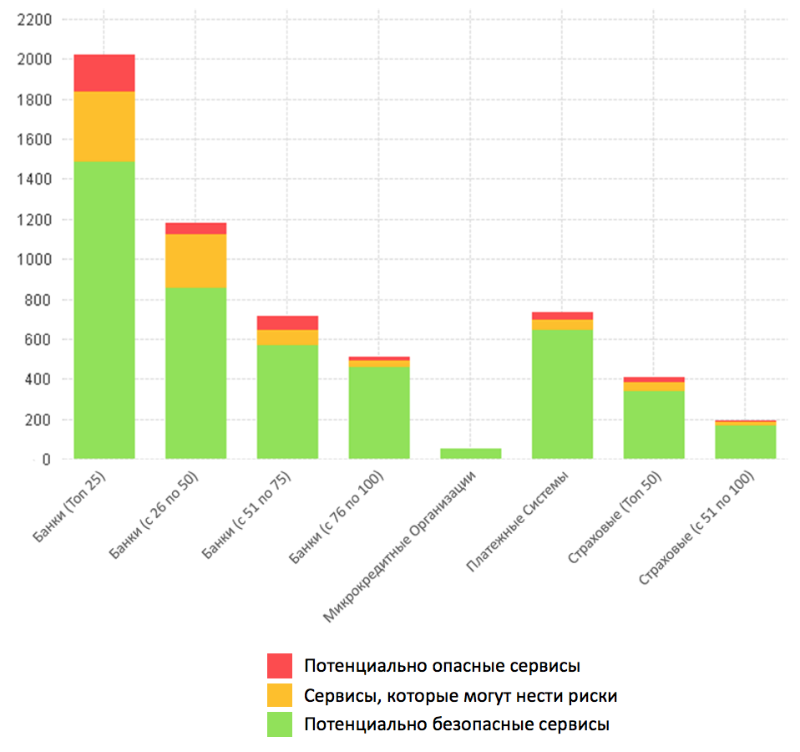

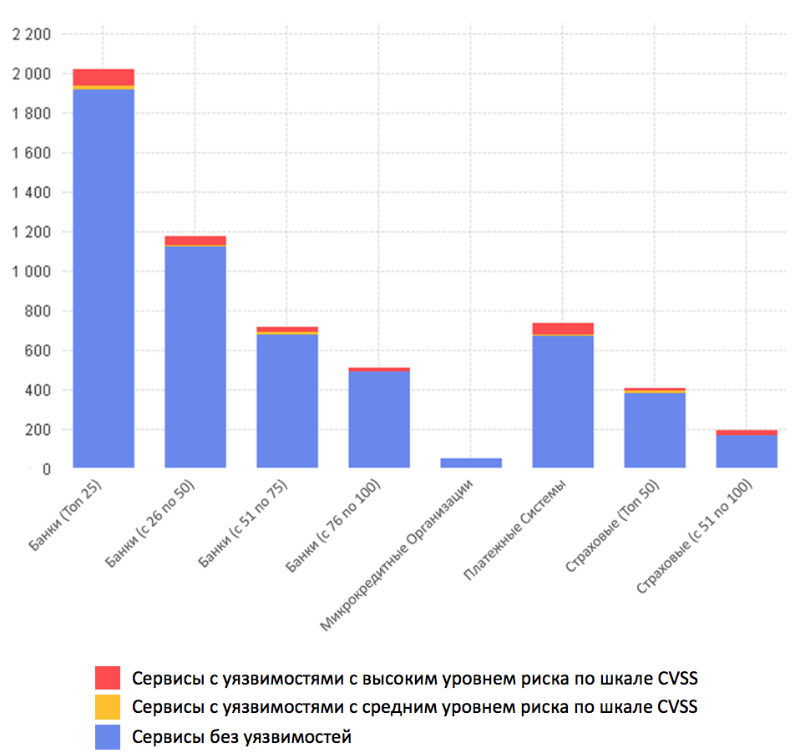

These services were distributed across organizations as follows:

The results did not surprise us: the larger the organization, the more services are placed on its network perimeter, and as the number of services grows, so does the probability of a configuration error.

Step 3. Identify vulnerable services

The data collected for each open port consists of the following fields:

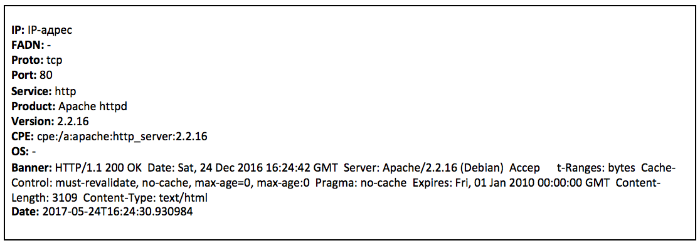

- IP - the unique network address of a node in a network built on the IP protocol;

- Port - a numeric identifier used by transport protocols (such as TCP and UDP);

- Protocol - a set of logical-level interface conventions that define how different programs exchange data;

- Hostname - a symbolic name assigned to a network device, which can be used to access the device in various ways;

- Service - the name of the particular service;

- Product - the name of the software that implements the service;

- Product_version - the version of that software;

- Banner - the greeting information returned by the service when a connection is attempted;

- CPE - Common Platform Enumeration, a standardized way of naming software applications, operating systems, and hardware platforms;

- OS - the operating system version.
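The fields above map naturally onto one flat record per open port. The sketch below assumes that a host lookup result is a dictionary with `ip_str`, `hostnames`, `os` and a `data` list of per-port banners carrying `port`, `transport`, `product`, `version`, `cpe` and `data`; this layout is an assumption about the response format, and the `ServiceRecord` and `flatten_host` names and the sample values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ServiceRecord:
    ip: str
    port: int
    protocol: str
    hostname: Optional[str]
    service: Optional[str]
    product: Optional[str]
    product_version: Optional[str]
    banner: str
    cpe: List[str]
    os: Optional[str]


def flatten_host(host):
    """Turn one host lookup result into a list of per-port records."""
    hostnames = host.get("hostnames") or []
    records = []
    for item in host.get("data", []):
        records.append(ServiceRecord(
            ip=host.get("ip_str", ""),
            port=item["port"],
            protocol=item.get("transport", "tcp"),
            hostname=hostnames[0] if hostnames else None,
            service=item.get("_shodan", {}).get("module"),
            product=item.get("product"),           # may be absent
            product_version=item.get("version"),   # may be absent
            banner=item.get("data", ""),
            cpe=list(item.get("cpe") or []),
            os=item.get("os") or host.get("os"),
        ))
    return records


# Hypothetical lookup result (assumed layout, documentation address).
sample_host = {
    "ip_str": "192.0.2.10",
    "hostnames": ["mail.example.com"],
    "os": None,
    "data": [
        {"port": 22, "transport": "tcp", "product": "OpenSSH",
         "version": "7.4", "cpe": ["cpe:/a:openbsd:openssh:7.4"],
         "data": "SSH-2.0-OpenSSH_7.4", "_shodan": {"module": "ssh"}},
        {"port": 53, "transport": "udp", "data": "",
         "_shodan": {"module": "dns-udp"}},
    ],
}

records = flatten_host(sample_host)
for record in records:
    print(record.ip, record.port, record.protocol, record.product)
```

Optional fields default to `None` or an empty list, which reflects the fact that not every port comes with a product name, version or CPE.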

Shodan cannot return the complete set of information for every open port, but when it can, the data looks like this (the example shows results already processed in our system):

From this whole attribute space, we selected the fields that can be used to find information about a host's vulnerabilities. The Product + Product_version combination or the CPE works well for this. In our case, we decided to use the Product + Product_version combination, and the search was carried out against the internal vulnerability database of Positive Technologies.
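Matching by the Product + Product_version combination can be illustrated with a dictionary lookup. This is only a sketch: the in-memory index, the product names and the vulnerability identifiers are hypothetical placeholders and say nothing about how the internal database is organized.

```python
from collections import namedtuple

Service = namedtuple("Service", "ip port product version")

# Hypothetical vulnerability index keyed by (product, version).
VULN_INDEX = {
    ("exampleftpd", "1.0"): ["VULN-0001", "VULN-0002"],
    ("examplehttpd", "2.2"): ["VULN-0003"],
}


def normalize(product, version):
    """Build a case-insensitive lookup key; missing values become ''."""
    return (product or "").strip().lower(), (version or "").strip().lower()


def match(services, index):
    """Return {service: [vulnerability ids]} for services found in the index."""
    hits = {}
    for service in services:
        vulns = index.get(normalize(service.product, service.version))
        if vulns:
            hits[service] = vulns
    return hits


services = [
    Service("192.0.2.10", 21, "ExampleFTPd", "1.0"),
    Service("192.0.2.11", 80, "ExampleHTTPd", "2.4"),
    Service("192.0.2.12", 443, None, None),   # no product information
    Service("192.0.2.13", 80, "ExampleHTTPd", "2.2"),
]

hits = match(services, VULN_INDEX)
share = 100 * len(hits) / len(services)
print(f"{len(hits)} of {len(services)} services matched ({share:.0f}%)")
```

Services without a product name or version simply produce no match, which is one reason why a banner-based approach finds fewer vulnerabilities than an active scan.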

There are quite a few publicly available sources for finding vulnerabilities; here are some of them:

- SecurityLab.ru is not only information security news and a forum, but also a vulnerability database. Example of information output:

- BDU FSTEK - a database of information security threats that differs from similar resources in that it also covers vulnerabilities in domestically produced software;

- nvd.nist.gov is the National Vulnerability Database of the US National Institute of Standards and Technology, which integrates publicly available US resources for finding and analyzing vulnerabilities;

- vulners.com - a large, regularly updated database of information security content that lets you search for vulnerabilities, exploits, patches, and bug bounty results;

- cvedetails.com is a convenient web interface for viewing vulnerability data. You can browse vendors, products, versions, and the related CVE vulnerabilities;

- securityfocus.com is one of the leading publicly available sources, especially in terms of exploit data.

All of the above resources let you quickly search for vulnerabilities by various attributes, including the CPE. They also allow the search process to be automated in one way or another. As a result, you can find a lot of useful information: detailed descriptions of vulnerabilities, information about the existence of a PoC or recorded cases of exploitation, and sometimes links to exploits:

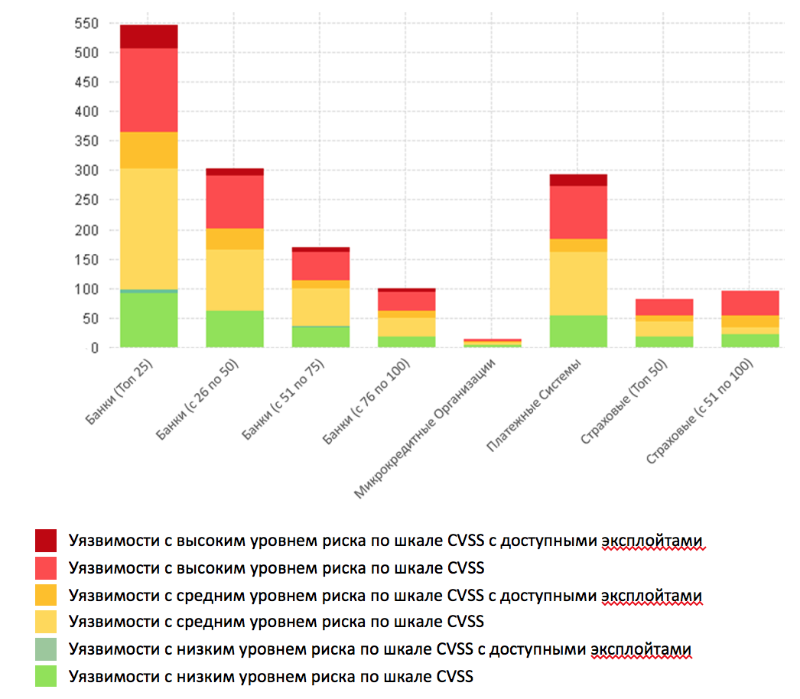
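Searching by CPE usually starts with splitting the identifier into its components. The sketch below parses the CPE 2.2 URI form (`cpe:/part:vendor:product:version:...`), where the part is `a` for applications, `o` for operating systems and `h` for hardware. The function name `parse_cpe22` is hypothetical, and percent-encoded characters are deliberately left undecoded.

```python
CPE22_FIELDS = ["part", "vendor", "product", "version",
                "update", "edition", "language"]


def parse_cpe22(uri):
    """Parse a CPE 2.2 URI such as 'cpe:/a:vendor:product:version'."""
    prefix = "cpe:/"
    if not uri.startswith(prefix):
        raise ValueError(f"not a CPE 2.2 URI: {uri!r}")
    values = uri[len(prefix):].split(":")
    parsed = dict.fromkeys(CPE22_FIELDS)  # missing components stay None
    for name, value in zip(CPE22_FIELDS, values):
        parsed[name] = value or None      # empty components become None
    return parsed


app = parse_cpe22("cpe:/a:openbsd:openssh:7.4")
print(app["vendor"], app["product"], app["version"])

system = parse_cpe22("cpe:/o:microsoft:windows_server_2003")
print(system["part"], system["product"], system["version"])
```

A parsed identifier makes it easy to tell whether a vulnerability record is tied to an application or to an operating system as a whole.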

We already have a set of services and their banners; all that remains is to run this information through the vulnerability database. Of course, we did not want to do this manually, and it would have taken a lot of time. So we wrote a simple script, quickly processed all the collected services, compared them with our vulnerability database (the same is easily done through the services named above), and obtained the following distribution of vulnerabilities by service:

The results were as follows: vulnerabilities were found in 5% of the services found by Shodan. This figure is small: for comparison, our own automated perimeter scans typically find vulnerabilities in 20–50% of services. But in theory, the vulnerability detection rate can be increased. Let's see how.

For example, for ROSSSH (in the screenshot, line 4 from the bottom), we can assume the presence of the ROSSSH Remote Preauth Heap Corruption vulnerability. Although the vulnerability is not new, the probability of encountering it in this service is well above zero. Recall our previous research, in which we said that about 30% of systems accessible from the Internet contain vulnerabilities more than 5 years old. Cisco cites similar figures in its research, according to which the average lifetime of known vulnerabilities is more than five and a half years. These results are comparable to ours, and the slight difference is due to different samples and research methods.

Using the example above, we can also assume the presence of the RDP service vulnerabilities CVE-2015-0079, CVE-2015-2373, CVE-2015-2472, and CVE-2016-0019. This is an incomplete list of possible vulnerabilities; in all open sources, these vulnerabilities are tied by CPE to the OS version, with no link to RDP. The most striking examples are the high-profile exploitable vulnerabilities, to which we will return later. Similar assumptions about the possible presence of vulnerabilities can be made for many other services.

Step 4. Search for exploits

The next step is to search for exploits for specific vulnerabilities. The search engines described above turn up only a small number of exploits, but nothing prevents anyone from using specialized utilities for this, because Pandora's box is already open. For example, there is the freeware utility PTEE, which is described in great detail in another article. And there is Metasploit, which does not collect anything itself, but...

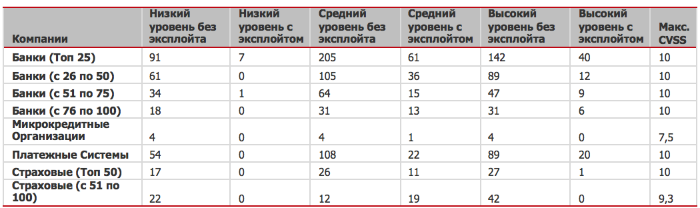

Since our company has its own knowledge base in which vulnerabilities are already linked to exploits, no additional actions were required from us at this step. Processing the data gave the following results:

- 88 of the 559 vulnerabilities with a high CVSS risk level have available exploits;

- 178 of the 733 medium-risk vulnerabilities have available exploits;

- 8 of the 309 low-risk vulnerabilities have available exploits.
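Expressed as shares, the figures above are easier to compare. The short calculation below uses only the numbers from the list; the variable names are illustrative.

```python
# (vulnerabilities with available exploits, total vulnerabilities) per risk level
counts = {
    "high": (88, 559),
    "medium": (178, 733),
    "low": (8, 309),
}


def share(exploitable, total):
    """Percentage of vulnerabilities with an available exploit, one decimal."""
    return round(100 * exploitable / total, 1)


shares = {level: share(*pair) for level, pair in counts.items()}
total_exploitable = sum(e for e, _ in counts.values())
total_vulns = sum(t for _, t in counts.values())
overall = share(total_exploitable, total_vulns)

for level, value in shares.items():
    print(f"{level}: {value}%")
print(f"overall: {total_exploitable} of {total_vulns} ({overall}%)")
```

This gives roughly 15.7% for high-risk, 24.3% for medium-risk and 2.6% for low-risk vulnerabilities, or about 17.1% overall (274 of 1601).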

One of the old maxims of information security says that a system is only as secure as its weakest link. Indeed, practice shows that when planning an attack, a potential attacker will choose the least protected system. A careful look at the results shows that the probability of finding such systems is high for all categories of organizations:

There is a simple explanation for these results: as infrastructure grows, it becomes increasingly difficult to keep track of. More hosts mean more outdated software and more vulnerabilities, including exploitable ones. In large companies, the perimeter is extremely dynamic: within a single week, up to several dozen new hosts may appear at the edge of the network and as many may disappear, as we have shown in previous studies. If these changes are merely the result of an error, the probability of an “open door” on one of these hosts is very high. For this reason, monitoring the perimeter periodically, in a mode as close to real time as possible, is very important for ensuring security.
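Periodic monitoring of this kind reduces to comparing two snapshots of the perimeter. A minimal sketch, assuming each snapshot is a collection of `(ip, port)` pairs; the function name `diff_perimeter` and the sample data are hypothetical.

```python
def diff_perimeter(previous, current):
    """Compare two snapshots of (ip, port) pairs."""
    prev, curr = set(previous), set(current)
    return {
        "appeared": sorted(curr - prev),      # new exposure to review
        "disappeared": sorted(prev - curr),   # services no longer visible
    }


# Hypothetical snapshots taken a week apart (documentation addresses).
last_week = [("192.0.2.10", 443), ("192.0.2.10", 25), ("192.0.2.20", 80)]
this_week = [("192.0.2.10", 443), ("192.0.2.20", 80), ("192.0.2.30", 3389)]

changes = diff_perimeter(last_week, this_week)
print("appeared:", changes["appeared"])
print("disappeared:", changes["disappeared"])
```

Every entry in `appeared` is a candidate for review: either a planned change or exactly the kind of error that leaves an “open door”.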

How long does it take to find vulnerable services once vulnerability information has just appeared on the Internet? In our system, searching for a given vulnerability takes less than a second. Analyzing the results takes longer, but is also quite fast: in this study, the analysis of one vulnerability took no more than 15 minutes.

Step 5. The attack itself

So, the attacker collects data on the target infrastructure and identifies vulnerable services; looks for information about vulnerabilities and selects the exploitable ones; then, by comparing knowledge of the target infrastructure with the vulnerability data, makes assumptions about the presence of these vulnerabilities in the system. In the last step, the attacker attacks the vulnerable systems using the available tools.

In our study, of course, there were no real attacks. But we can assess the capabilities of hackers at the last stage. Consider, for example, the latest part of the well-known exploit pack leaked by The Shadow Brokers group. This pack contained many interesting exploits, for example the SMB exploitation kit that became widely known after the WannaCry outbreak. In our sample, this exploit was applicable to 36 systems (the perimeter data was collected before the archive with the exploits was published). At that time, the exploits in the pack were applicable to all versions of Windows. Consequently, these systems were very likely to be compromised, which is exactly what WannaCry showed. And this is just the tip of the iceberg; there were other interesting exploits in the pack:

- Esteemaudit (an exploit for RDP). We consider exposing RDP services on the perimeter without an ACL (access control list) to be an error. In our sample, 44 systems had this service available. According to its description, the exploit is applicable only to old versions of Windows (Windows Server 2003). For this reason, we excluded 10 addresses whose banners showed newer versions of Windows; this left 3 systems to which the exploit could be applicable, and 31 without confirmation.

- A set of exploits for web servers. For 37 systems, we assumed that exploits targeting web servers were applicable.

- A set of exploits for mail servers. On 13 systems, we found mail servers whose versions were suitable for exploitation.
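The triage described for the RDP exploit (44 systems, of which 10 were excluded, 3 were potentially applicable and 31 remained unconfirmed) can be sketched as a simple classification by OS banner. The banner strings and the `triage` function below are hypothetical; real banners vary and would need more careful matching.

```python
from collections import Counter


def triage(os_banner):
    """Classify a host by its OS banner with respect to an exploit that is
    assumed to apply only to Windows Server 2003."""
    text = (os_banner or "").lower()
    if "windows server 2003" in text:
        return "applicable"
    if "windows" in text:
        return "excluded"      # banner shows a different Windows version
    return "unconfirmed"       # no usable OS information in the banner


# Hypothetical banners for hosts with RDP exposed.
banners = [
    "Windows Server 2003",
    "Windows Server 2012 R2",
    None,
    "Windows 7",
    "",
]

summary = Counter(triage(banner) for banner in banners)
print(dict(summary))
```

Hosts in the unconfirmed group cannot be ruled out without active checks, which is why they are counted separately rather than discarded.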

As a result, out of all 3764 available addresses, 111 were identified as having potentially vulnerable services. With high probability, they could have been compromised using this exploit pack.

At the beginning of the study, the level of danger seemed lower to us, but then WannaCry came along and disagreed. The reason for the high level of danger was insufficient control of the external perimeter in organizations. However, even after warnings and expert recommendations were published, there was no significant increase in the level of security. This was clearly shown by the outbreak of the next piece of ransomware, Petya / NotPetya, which used the same vulnerability (although its propagation vector is not related to the network perimeter). A single vulnerable system is enough to infect an infrastructure, and an attacker needs only one vulnerable service to get past the perimeter.

Conclusions and recommendations for protection

Let's sum up. If you use a conventional network scanner that looks for vulnerabilities, the organization's employees may suspect that someone is “watching” them. Such scans are easily detected by an IDS and blocked. But who will track the work of a mass search engine? In this article, we have demonstrated that:

- preparing a targeted attack on the financial sector does not require significant financial costs;

- the preparation may be invisible both to the attacked organizations and to those who protect them;

- carrying out an attack does not require an NSA exploit pack, although its components do end up publicly available.

Perimeter security is one of the basic lines of defense. But defending without knowing exactly what you are defending is a difficult and, frankly, pointless exercise. If you do not know the boundaries of the perimeter you are protecting, you can use the network analysis methods described in this article. And if there are many external subnets (for example, an infrastructure distributed across the country with multiple Internet connections) and the perimeter is difficult to inventory, you can seek help from experts, for example the experts of Positive Technologies (abc@ptsecurity.com). All that is needed is a list of the networks allocated by the provider and consent to scanning.

Once you have an idea of what the perimeter consists of, it is time to deal with its protection. Achieving the most secure configuration of information systems is a difficult task, because people are responsible for the software, its configuration and maintenance, and in places concessions have to be made to suit the business. Information security is always a balance between the functionality of a system and its security. Configuration errors are present on network perimeters too: as our research shows, many unnecessary services, including vulnerable ones, are exposed to the Internet, which makes it easier for an attacker to get into the organization's network. A recommended perimeter protection plan might look like this:

- Identify the assets that are reachable from the Internet.

- Remove from the perimeter any services whose exposure is not justified.

- Document and implement a procedure for placing new systems on the external perimeter.

- Create ACLs and restrict access to administrative interfaces, remote access services, databases and other important services to the smallest possible list of persons.

- Introduce an update installation procedure and define metrics of its success.

- Carry out security analysis, such as scanning with specialized tools in audit mode (from the internal network) and vulnerability scanning in pentest mode (from an external host, to understand how your infrastructure looks to a potential intruder), at least once a month.

- Identify the persons responsible for the assets (on both the business and the IT side). This will reduce labor costs and reaction time when systems need to be updated urgently.

- Determine the value of assets in order to prioritize vulnerability remediation.

- Develop a response plan for the detection of critical threats in systems located on the perimeter. The plan must cover how to act if critical vulnerabilities are detected; what actions system administrators and information security professionals should take; and whether these actions have been agreed with the business owners of the systems.
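The first steps of this plan can be partly automated by comparing what is actually exposed with a register of justified services. The sketch below is an illustration under stated assumptions: the register format, the `review` function and the sample addresses are hypothetical, and the port list covers only the common default ports of the service types named in this article (FTP, Telnet, LDAP, SMB, RDP and several DBMS).

```python
# Default ports of services that should normally sit behind an ACL.
SENSITIVE_PORTS = {
    21: "FTP", 23: "Telnet", 389: "LDAP", 445: "SMB",
    1433: "MS SQL", 3306: "MySQL", 3389: "RDP", 5432: "PostgreSQL",
}


def review(exposed, justified):
    """Return findings for exposed (ip, port) pairs.

    `justified` maps (ip, port) to the business reason for the exposure.
    """
    findings = []
    for ip, port in sorted(exposed):
        if (ip, port) not in justified:
            findings.append((ip, port, "no justification: remove from perimeter"))
        elif port in SENSITIVE_PORTS:
            findings.append((ip, port, f"{SENSITIVE_PORTS[port]}: restrict with ACL"))
    return findings


# Hypothetical inventory and register (documentation addresses).
exposed = {("192.0.2.10", 443), ("192.0.2.10", 3389), ("192.0.2.20", 23)}
justified = {
    ("192.0.2.10", 443): "public website",
    ("192.0.2.10", 3389): "remote administration",
}

findings = review(exposed, justified)
for ip, port, note in findings:
    print(ip, port, note)
```

The register of justified services doubles as documentation for the procedure of placing new systems on the perimeter.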

Of course, it should be remembered that countering threats requires an integrated approach to information security, and a good place to start is the “network boundaries”.

Authors: Positive Technologies experts Vladimir Lapshin, Maxim Fedotov, Andrey Kulikov

Source: https://habr.com/ru/post/335826/
